Abstract

Robots frequently need to perceive object attributes, such as red, heavy, and empty, using multimodal exploratory behaviors, such as look, lift, and shake. One way for a robot to do so is to learn a classifier for each perceivable attribute given an exploratory behavior. Once learned, the attribute classifiers can be used to select actions and identify attributes of new objects, answering questions such as “Is this object red and empty?” In this article, we introduce a robot interactive perception problem, called Multimodal Embodied Attribute Learning (MEAL), and explore solutions to it. Under different assumptions, there are two classes of MEAL problems. Offline-MEAL problems are defined in this article as learning attribute classifiers from pre-collected data, and then sequencing actions towards attribute identification under the challenging trade-off between information gain and exploration action cost. For this purpose, we introduce Mixed Observability Robot Control (MORC), an algorithm for offline-MEAL problems that dynamically constructs both the fully and partially observable components of the state for multimodal attribute identification of objects. We further investigate a more challenging class of MEAL problems, called online-MEAL, where the robot assumes no pre-collected data and works on attribute classification and attribute identification at the same time. Based on MORC, we develop an algorithm called Information-Theoretic Reward Shaping (MORC-ITRS) that actively addresses the trade-off between exploration and exploitation in online-MEAL problems. MORC and MORC-ITRS are evaluated against competitive MEAL baselines, and the results demonstrate the superiority of our methods in learning efficiency and identification accuracy.
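To make the trade-off between information gain and action cost concrete, the following is a minimal illustrative sketch, not the MORC algorithm described in this article. It assumes a single binary attribute, one classifier per behavior with a symmetric accuracy, and a greedy one-step choice instead of a planned action sequence. The accuracies, costs, `cost_weight`, and all function names are hypothetical values and names chosen only for illustration.

```python
import math

# Hypothetical (classifier accuracy, action cost) per exploratory behavior.
ACTIONS = {"look": (0.60, 0.5), "lift": (0.75, 11.0), "shake": (0.90, 9.0)}


def entropy(p: float) -> float:
    """Binary entropy in bits; taken to be 0 at p = 0 or p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def posterior(p: float, acc: float, obs_positive: bool) -> float:
    """Bayes update of P(attribute holds) after one classifier output."""
    like_true = acc if obs_positive else 1 - acc
    like_false = 1 - acc if obs_positive else acc
    evidence = like_true * p + like_false * (1 - p)
    if evidence == 0.0:  # observation impossible under the current belief
        return p
    return like_true * p / evidence


def expected_info_gain(p: float, acc: float) -> float:
    """Expected entropy reduction from one observation with accuracy acc."""
    p_pos = acc * p + (1 - acc) * (1 - p)  # chance the classifier says "yes"
    expected_h = (p_pos * entropy(posterior(p, acc, True))
                  + (1 - p_pos) * entropy(posterior(p, acc, False)))
    return entropy(p) - expected_h


def pick_action(p: float, cost_weight: float = 0.01) -> str:
    """Greedy choice: maximize expected gain minus weighted action cost."""
    def score(name: str) -> float:
        acc, cost = ACTIONS[name]
        return expected_info_gain(p, acc) - cost_weight * cost
    return max(ACTIONS, key=score)


if __name__ == "__main__":
    belief = 0.5  # uniform prior that the attribute holds
    action = pick_action(belief)
    print(action, posterior(belief, ACTIONS[action][0], True))
```

With these assumed numbers, a small `cost_weight` favors the accurate but costly shake, while a large one favors the cheap but weakly informative look. A planner that reasons over whole action sequences can prefer different actions than this one-step heuristic.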

Notes

The terms “behavior” and “action” are widely used in the developmental robotics and sequential decision-making communities, respectively. In this article, the two terms are used interchangeably.

Project webpage: https://sites.google.com/view/attribute-learning-robotics/

We use attribute classification to refer to the problem of learning the attribute classifiers, which is a supervised machine learning problem. We use attribute identification to refer to the task of identifying whether an object has a set of attributes or not, which corresponds to a sequential decision-making problem.

Source code: https://github.com/keke-220/Predicate_Learning

The ask action was used only in the ISPY32 experiments, because the other exploratory behaviors are less effective there than in ROC36 and CY101.

References

Puterman, M. L. (2014). Markov decision processes: Discrete stochastic dynamic programming. John Wiley & Sons.

Kaelbling, L. P., Littman, M. L., & Cassandra, A. R. (1998). Planning and acting in partially observable stochastic domains. Artificial Intelligence, 101(1–2), 99–134.

Ong, S. C., Png, S. W., Hsu, D., & Lee, W. S. (2010). Planning under uncertainty for robotic tasks with mixed observability. The International Journal of Robotics Research, 29(8), 1053–1068.

Thomason, J., Sinapov, J., Svetlik, M., Stone, P., & Mooney, R. J. (2016). Learning multi-modal grounded linguistic semantics by playing “I Spy”. In: IJCAI (pp. 3477–3483).

Sinapov, J., Schenck, C., & Stoytchev, A. (2014). Learning relational object categories using behavioral exploration and multimodal perception. In: 2014 IEEE international conference on robotics and automation (ICRA) (pp. 5691–5698). IEEE.

Tatiya, G., & Sinapov, J. (2019). Deep multi-sensory object category recognition using interactive behavioral exploration. In: 2019 international conference on robotics and automation (ICRA) (pp. 7872–7878). IEEE.

Thomason, J., Padmakumar, A., Sinapov, J., Hart, J., Stone, P., & Mooney, R.J. (2017). Opportunistic active learning for grounding natural language descriptions. In: Conference on robot learning (pp. 67–76). PMLR.

Thomason, J., Sinapov, J., Mooney, R., & Stone, P. (2018). Guiding exploratory behaviors for multi-modal grounding of linguistic descriptions. In: Proceedings of the AAAI conference on artificial intelligence (vol. 32).

Sinapov, J., & Stoytchev, A. (2013). Grounded object individuation by a humanoid robot. In: 2013 IEEE international conference on robotics and automation (pp. 4981–4988). IEEE.

Sinapov, J., Schenck, C., Staley, K., Sukhoy, V., & Stoytchev, A. (2014). Grounding semantic categories in behavioral interactions: Experiments with 100 objects. Robotics and Autonomous Systems, 62(5), 632–645.

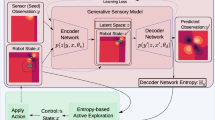

Chen, X., Hosseini, R., Panetta, K., & Sinapov, J. (2021). A framework for multisensory foresight for embodied agents. In: 2021 IEEE international conference on robotics and automation. IEEE.

Amiri, S., Wei, S., Zhang, S., Sinapov, J., Thomason, J., & Stone, P. (2018). Multi-modal predicate identification using dynamically learned robot controllers. In: Proceedings of the 27th international joint conference on artificial intelligence (IJCAI-18).

Zhang, X., Sinapov, J., & Zhang, S. (2021). Planning multimodal exploratory actions for online robot attribute learning. In: Robotics: Science and Systems (RSS).

Russakovsky, O., & Fei-Fei, L. (2010). Attribute learning in large-scale datasets. In: European conference on computer vision (pp. 1–14). Springer.

Chen, S., & Grauman, K. (2018). Compare and contrast: Learning prominent visual differences. In: Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1267–1276).

Ferrari, V., & Zisserman, A. (2007). Learning visual attributes. Advances in Neural Information Processing Systems, 20, 433–440.

Farhadi, A., Endres, I., Hoiem, D., & Forsyth, D. (2009). Describing objects by their attributes. In: 2009 IEEE conference on computer vision and pattern recognition (pp. 1778–1785). IEEE.

Lampert, C. H., Nickisch, H., & Harmeling, S. (2009). Learning to detect unseen object classes by between-class attribute transfer. In: 2009 IEEE conference on computer vision and pattern recognition (pp. 951–958). IEEE.

Jayaraman, D., & Grauman, K. (2014). Zero shot recognition with unreliable attributes. In: Advances in neural information processing systems.

Al-Halah, Z., Tapaswi, M., & Stiefelhagen, R. (2016). Recovering the missing link: Predicting class-attribute associations for unsupervised zero-shot learning. In: Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5975–5984).

Ren, M., Triantafillou, E., Wang, K. -C., Lucas, J., Snell, J., Pitkow, X., Tolias, A. S., & Zemel, R. (2020). Flexible few-shot learning with contextual similarity. In: 4th Workshop on Meta-Learning at NeurIPS.

Parikh, D., & Grauman, K. (2011). Relative attributes. In: 2011 International conference on computer vision (pp. 503–510). IEEE.

Patterson, G., & Hays, J. (2016). Coco attributes: Attributes for people, animals, and objects. In: European conference on computer vision (pp. 85–100). Springer.

Krishna, R., Zhu, Y., Groth, O., Johnson, J., Hata, K., Kravitz, J., Chen, S., Kalantidis, Y., Li, L.-J., Shamma, D. A., et al. (2017). Visual genome: Connecting language and vision using crowdsourced dense image annotations. International Journal of Computer Vision, 123(1), 32–73.

Pham, K., Kafle, K., Lin, Z., Ding, Z., Cohen, S., Tran, Q., & Shrivastava, A. (2021). Learning to predict visual attributes in the wild. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 13018–13028).

Harnad, S. (1990). The symbol grounding problem. Physica D: Nonlinear Phenomena, 42(1–3), 335–346.

Kalashnikov, D., Irpan, A., Pastor, P., Ibarz, J., Herzog, A., Jang, E., Quillen, D., Holly, E., Kalakrishnan, M., & Vanhoucke, V., et al. (2018). Qt-opt: Scalable deep reinforcement learning for vision-based robotic manipulation. In: Conference on Robot Learning.

Zhu, Y., Mottaghi, R., Kolve, E., Lim, J. J., Gupta, A., Fei-Fei, L., & Farhadi, A. (2017). Target-driven visual navigation in indoor scenes using deep reinforcement learning. In: 2017 IEEE international conference on robotics and automation (ICRA) (pp. 3357–3364). IEEE.

Tellex, S., Gopalan, N., Kress-Gazit, H., & Matuszek, C. (2020). Robots that use language. Annual Review of Control, Robotics, and Autonomous Systems, 3, 25–55.

Dahiya, R. S., Metta, G., Valle, M., & Sandini, G. (2009). Tactile sensing-from humans to humanoids. IEEE Transactions on Robotics, 26(1), 1–20.

Li, Q., Kroemer, O., Su, Z., Veiga, F. F., Kaboli, M., & Ritter, H. J. (2020). A review of tactile information: Perception and action through touch. IEEE Transactions on Robotics, 36(6), 1619–1634.

Monroy, J., Ruiz-Sarmiento, J.-R., Moreno, F.-A., Melendez-Fernandez, F., Galindo, C., & Gonzalez-Jimenez, J. (2018). A semantic-based gas source localization with a mobile robot combining vision and chemical sensing. Sensors, 18(12), 4174.

Ciui, B., Martin, A., Mishra, R. K., Nakagawa, T., Dawkins, T. J., Lyu, M., Cristea, C., Sandulescu, R., & Wang, J. (2018). Chemical sensing at the robot fingertips: Toward automated taste discrimination in food samples. ACS sensors, 3(11), 2375–2384.

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems, 25, 1097–1105.

Redmon, J., Divvala, S., Girshick, R., & Farhadi, A. (2016). You only look once: Unified, real-time object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 779–788).

Devlin, J., Chang, M. -W., Lee, K., & Toutanova, K. (2018). Bert: Pre-training of deep bidirectional transformers for language understanding. In: The 17th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., & Askell, A., et al. (2020). Language models are few-shot learners. In: Advances in Neural Information Processing Systems.

Gibson, E. J. (1988). Exploratory behavior in the development of perceiving, acting, and the acquiring of knowledge. Annual review of psychology, 39(1), 1–42.

Radford, A., Kim, J. W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., Askell, A., Mishkin, P., & Clark, J., et al. (2021). Learning transferable visual models from natural language supervision. In: International conference on machine learning.

Lynott, D., & Connell, L. (2009). Modality exclusivity norms for 423 object properties. Behavior Research Methods, 41(2), 558–564.

Bohg, J., Hausman, K., Sankaran, B., Brock, O., Kragic, D., Schaal, S., & Sukhatme, G. S. (2017). Interactive perception: Leveraging action in perception and perception in action. IEEE Transactions on Robotics, 33(6), 1273–1291.

Gao, Y., Hendricks, L. A., Kuchenbecker, K. J., & Darrell, T. (2016). Deep learning for tactile understanding from visual and haptic data. In: 2016 IEEE international conference on robotics and automation (ICRA) (pp. 536–543). IEEE

Kerzel, M., Strahl, E., Gaede, C., Gasanov, E., & Wermter, S. (2019). Neuro-robotic haptic object classification by active exploration on a novel dataset. In: 2019 International joint conference on neural networks (IJCNN) (pp. 1–8). IEEE.

Gandhi, D., Gupta, A., & Pinto, L. (2020). Swoosh! rattle! thump!–actions that sound. In: Robotics: Science and Systems (RSS).

Braud, R., Giagkos, A., Shaw, P., Lee, M., & Shen, Q. (2020). Robot multi-modal object perception and recognition: synthetic maturation of sensorimotor learning in embodied systems. IEEE Transactions on Cognitive and Developmental Systems, 13(2), 416–428.

Arkin, J., Park, D., Roy, S., Walter, M. R., Roy, N., Howard, T. M., & Paul, R. (2020). Multimodal estimation and communication of latent semantic knowledge for robust execution of robot instructions. The International Journal of Robotics Research, 39(10–11), 1279–1304.

Lee, M. A., Zhu, Y., Srinivasan, K., Shah, P., Savarese, S., Fei-Fei, L., Garg, A., & Bohg, J. (2019). Making sense of vision and touch: Self-supervised learning of multimodal representations for contact-rich tasks. In: 2019 International conference on robotics and automation (ICRA) (pp. 8943–8950). IEEE.

Wang, C., Wang, S., Romero, B., Veiga, F., & Adelson, E. (2020). Swingbot: Learning physical features from in-hand tactile exploration for dynamic swing-up manipulation. In: IEEE/RSJ International conference on intelligent robots and systems (pp. 5633–5640).

Fishel, J. A., & Loeb, G. E. (2012). Bayesian exploration for intelligent identification of textures. Frontiers in neurorobotics, 6, 4.

Sutton, R. S., & Barto, A. G. (2018). Reinforcement learning: An introduction. MIT press.

Platt Jr, R., Tedrake, R., Kaelbling, L., & Lozano-Perez, T. (2010). Belief space planning assuming maximum likelihood observations.

Ross, S., Pineau, J., Chaib-draa, B., & Kreitmann, P. (2011). A Bayesian approach for learning and planning in partially observable Markov decision processes. Journal of Machine Learning Research 12(5).

Sridharan, M., Wyatt, J., & Dearden, R. (2010). Planning to see: A hierarchical approach to planning visual actions on a robot using POMDPs. Artificial Intelligence, 174(11), 704–725.

Eidenberger, R., & Scharinger, J. (2010). Active perception and scene modeling by planning with probabilistic 6d object poses. In: 2010 IEEE/RSJ international conference on intelligent robots and systems (pp. 1036–1043). IEEE.

Zheng, K., Sung, Y., Konidaris, G., & Tellex, S. (2021). Multi-resolution pomdp planning for multi-object search in 3d. In: IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).

Zhang, S., Sridharan, M., & Washington, C. (2013). Active visual planning for mobile robot teams using hierarchical POMDPs. IEEE Transactions on Robotics, 29(4), 975–985.

Konidaris, G., Kaelbling, L. P., & Lozano-Perez, T. (2018). From skills to symbols: Learning symbolic representations for abstract high-level planning. Journal of Artificial Intelligence Research, 61, 215–289.

Sinapov, J., Khante, P., Svetlik, M., & Stone, P. (2016). Learning to order objects using haptic and proprioceptive exploratory behaviors. In: IJCAI (pp. 3462–3468).

Aldoma, A., Tombari, F., & Vincze, M. (2012). Supervised learning of hidden and non-hidden 0-order affordances and detection in real scenes. In: 2012 IEEE international conference on robotics and automation (pp. 1732–1739). IEEE.

Katehakis, M. N., & Veinott, A. F., Jr. (1987). The multi-armed bandit problem: decomposition and computation. Mathematics of Operations Research, 12(2), 262–268.

Zhang, S., Khandelwal, P., & Stone, P. (2017). Dynamically constructed (po) MDPs for adaptive robot planning. In: Proceedings of the AAAI conference on artificial intelligence (vol. 31).

Zhang, S., & Stone, P. (2020). icorpp: Interleaved commonsense reasoning and probabilistic planning on robots. arXiv preprint arXiv:2004.08672.

Kurniawati, H., Hsu, D., & Lee, W. S. (2008). Sarsop: Efficient point-based pomdp planning by approximating optimally reachable belief spaces. In: Robotics: science and systems (vol. 2008). Citeseer.

Khandelwal, P., Zhang, S., Sinapov, J., Leonetti, M., Thomason, J., Yang, F., Gori, I., Svetlik, M., Khante, P., Lifschitz, V., et al. (2017). Bwibots: A platform for bridging the gap between ai and human-robot interaction research. The International Journal of Robotics Research, 36(5–7), 635–659.

Tatiya, G., Shukla, Y., Edegware, M., & Sinapov, J. (2020). Haptic knowledge transfer between heterogeneous robots using kernel manifold alignment. In: 2020 IEEE/RSJ international conference on intelligent robots and systems.

Tatiya, G., Hosseini, R., Hughes, M. C., & Sinapov, J. (2020). A framework for sensorimotor cross-perception and cross-behavior knowledge transfer for object categorization. Frontiers in Robotics and AI, 7, 137.

Ross, S., Chaib-draa, B., & Pineau, J. (2007). Bayes-adaptive pomdps. Advances in neural information processing systems 20.

Ding, Y., Zhang, X., Zhan, Xingyu., Zhang, S. (2022). Learning to ground objects for robot task and motion planning. IEEE Robotics and Automation Letters. 7(2),5536–5543.

Tatiya, G., Francis, J., Sinapov, J. (2023). Transferring Implicit Knowledge of Non-Visual Object Properties Across Heterogeneous Robot Morphologies. IEEE International Conference on Robotics and Automation (ICRA).

Funding

AIR research is supported in part by the National Science Foundation (NRI-1925044), Ford Motor Company, OPPO, and SUNY Research Foundation. MuLIP lab research is supported in part by the National Science Foundation (IIS-2132887, IIS-2119174), DARPA (W911NF-20-2-0006), the Air Force Research Laboratory (FA8750-22-C-0501), Amazon Robotics, and the Verizon Foundation. GLAMOR research is supported in part by the Laboratory for Analytic Sciences (LAS), the Army Research Laboratory (ARL, W911NF-17-S-0003), and the Amazon AWS Public Sector Cloud Credit for Research Program. LARG research is supported in part by the National Science Foundation (CPS-1739964, IIS-1724157, FAIN-2019844), the Office of Naval Research (N00014-18-2243), Army Research Office (W911NF-19-2-0333), DARPA, General Motors, Bosch, and Good Systems, a research grand challenge at the University of Texas at Austin.

Ethics declarations

Conflict of Interest

This work has taken place in the Autonomous Intelligent Robotics (AIR) group at The State University of New York at Binghamton, the Multimodal Learning, Interaction, and Perception (MuLIP) laboratory at Tufts University, the Grounding Language in Actions, Multimodal Observations, and Robots (GLAMOR) lab at The University of Southern California, and the Learning Agents Research Group (LARG) at the Artificial Intelligence Laboratory, The University of Texas at Austin. Peter Stone serves as the Executive Director of Sony AI America and receives financial compensation for this work. The terms of this arrangement have been reviewed and approved by the University of Texas at Austin in accordance with its policy on objectivity in research. The views and conclusions contained in this document are those of the authors alone.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, X., Amiri, S., Sinapov, J. et al. Multimodal embodied attribute learning by robots for object-centric action policies. Auton Robot 47, 505–528 (2023). https://doi.org/10.1007/s10514-023-10098-5

DOI: https://doi.org/10.1007/s10514-023-10098-5