Abstract

During the past decade, a small but rapidly growing number of Law&Tech scholars have been applying algorithmic methods in their legal research. This Article does so too, in an effort to rescue disclosure regulation from failure: a normative strategy that has long been considered dead by legal scholars, yet is conspicuously overused by rule-makers. Existing proposals to revive disclosure duties, however, focus either on industry policies (e.g. seeking to reduce consumers’ costs of reading) or on rulemaking (e.g. by simplifying linguistic intricacies). But failure may well depend on both. Therefore, this Article develops a ‘comprehensive approach’, proposing the use of computational tools to cope with linguistic and behavioral failures at both the enactment and the implementation phases of disclosure duties, thus filling a void in the Law&Tech scholarship. Specifically, it outlines how algorithmic tools can be used holistically to address the many failures of disclosures, from rulemaking in parliament to consumer screens. It suggests a multi-layered design in which lawmakers deploy three tools to produce optimal disclosure rules: machine learning, natural language processing, and behavioral experimentation through regulatory sandboxes. To clarify how and why these tasks should be performed, disclosures in the contexts of online contract terms and online privacy are taken as examples. Because algorithmic rulemaking is frequently met with well-justified skepticism, problems of its compatibility with legitimacy, efficacy and proportionality are also discussed.


1 Introduction

Ms Schwarz had just finished editing her article, and was about to email it to a journal, when her laptop froze: she was asked to choose among three different layouts for her browser’s tab (an ‘Inspirational’, an ‘Informational’ and a ‘Focused’ appearance), so that the next time she opened the app, the tab would look exactly like her preferred option.

[Figure: the three browser-tab layouts (‘Inspirational’, ‘Informational’ and ‘Focused’)]

The browser tab is an example of algorithmically ‘targeted disclosure’, through which a companyFootnote 1 uses Information TechnologyFootnote 2 to convey information to consumers in a way that suits their preferences. It also provides a convenient way to visualize the goal of this article: how can natural language processing (NLP) and machine learning (ML) algorithmsFootnote 3 be used to target disclosure rules at clusters (not individuals) of consumers so as to reduce the rules’ failures? How could this be done so that disclosure rules are implemented automatically by the industry, thus significantly decreasing compliance costs? How could disclosure be differentiated to match the different informational preferences of consumers? Could that be done by rulemakers? Taking the economic grounds for producing disclosure rules as a given, how should rulemakers proceed?

We take online contract terms and online privacy disclosures as examples to illustrate our proposal. Both are mass-produced by website providers and are usually not negotiated face-to-face with consumers, but rather accepted on a take-it-or-leave-it basis. Far from reducing information asymmetry and increasing the bargaining power of consumers, the information conveyed through such duties not only increases obfuscation (Bar-Gill 2014), but is often ‘weaponized’ by the industry (Luguri and Strahilevitz 2021; Stigler Center 2019) to steer individuals towards behaviors that maximize the industry’s profits (Thaler 2018). For instance, through lengthy and obscure contract terms, companies may increase the acceptance rate of online offers, or induce individuals to buy tied insurance services. Similarly, when the option to accept lenient cookie policies is framed more prominently, users are induced to provide more personal data than their rational preferences would dictate.

That disclosure regulation online is prone to failure is nothing new (Ben-Shahar and Schneider 2014; Bakos et al. 2014; Marotta-Wurgler 2015). Its detractors are as numerous as the attempts to revitalize it. Among the latter, the most promising come from Law&Tech scholars (Ashley and Kevin 2017; Livermore and Rockmore 2019), some of whom suggest using NLP and ML tools to personalize, simplify and summarize disclosures. Some authors (Ayres and Schwartz 2014) propose automatically detecting unexpected or unfavorable terms in privacy disclosure policies and presenting them in a separate warning box. Others suggest framing contract terms differently to increase the readability of online privacy policies, based on behavioral evidence (Plaut and Bartlett 2012); still others propose basing the decision on which parts consumers should pay special attention to on the requirements imposed by privacy law, and consequently focusing on choice provisions (Mysore Sathyendra et al. 2017). Others again recommend employing bots to highlight the content of platforms’ privacy disclaimers or to help educate consumers (Harkous et al. 2018). Finally, an important literature is emerging that identifies “probabilistic disclosures” as superior to discrete yes/no disclosures (Levmore 2021).Footnote 4

Although the proposed solutions are certainly promising, and come with the great benefit of interfering only marginally with consumers’ autonomy, they present some critical shortcomings.

First, they mostly intervene at the implementation phase, namely to correct flaws in firm-level disclosure policies, assuming that the sole reason disclosure regulations fail is the prohibitive cost of reading (Bartlett et al. 2019). For instance, much work has been done using algorithms to show that online privacy policies are often incomplete (Contissa et al. 2018a, b; Liepina et al. 2019) or that some of their clauses are linguistically imprecise (Liu et al. 2016). With regard to online contracts, an algorithm has been developed to automatically detect clauses that are potentially unfair under EU law (Lippi et al. 2018).

However, the source of failure may well also lie in how disclosure rules are formulated in the first place. For example, goals may be self-defeating: Article 12 GDPR requires data controllers to provide individuals with the information on their rights (stipulated in Articles 13 and 14) ‘in a concise, transparent, intelligible and easily accessible form, using clear and plain language’. Yet conciseness and intelligibility can be conflicting goals.

Second, the proposed solutions make assumptions about what consumers need to be warned of based on one-off surveys. In this sense, they are static, instead of being continuously revised and dynamically updated with the support of live data, which would be necessary to react to shifts in both consumer preferences and business behavior. Basing legal solutions on evidence gathered through ad hoc experiments may be limiting, as new evidence may emerge showing opposite results. For instance, in the US, the recently proposed ‘Algorithmic Justice and Online Platform Transparency Act’Footnote 5 establishes new disclosure duties for online platformsFootnote 6 with regard to the algorithmic processes they use to ‘promote content to a user’ (i.e. personalized products/services). Although these data are made available to the regulator,Footnote 7 the FTC would only access past (not real-time) information. In other words, this solution may not capture in due time whether consumers become responsive to some pieces of information and not to others, or whether companies adapt their disclosures to behavioral changes of consumers (as is the case with dark patterns)Footnote 8 or to counter changes in the law. Therefore, repeated experiments using real-time data would be preferable to support regulatory intervention. Moreover, no evidence is available on the number of consumers actually using bots like CLAUDETTE (Lippi et al., at 136–137) and darkpatterns.com (Calo 2014; Stigler Center, at 28), or on their efficacy.

Against this background, this Article innovates in a relevant regard: it argues that algorithmic tools can and should be used to consider solutions ‘comprehensively’, addressing the drafting stage jointly with the implementation phase of disclosure regulation. This ‘comprehensive approach’ conceptualizes disclosure regulation as a process composed of rulemaking and implementation, and therefore suggests using algorithms to tackle the flaws affecting each step individually and all of them together (part one). As a unique contribution, this article elucidates how to implement this ‘comprehensive approach’ in practice. From a technical point of view, lawmakers should deploy three tools in order to produce optimal disclosure rules: machine learning (ML), natural language processing (NLP), and behavioral experimentation through regulatory sandboxes (part two).

Lawmakers should begin (Phase One) by creating a dataset composed of disclosure rules, firm-level disclosure policies, and the case law pertaining to both (Sect. 3.1.1). Next, they should deploy NLP and other techniques to map out the causes of failure, rank the disclosures accordingly and find the best matches of law and implementation on the basis of such ranking (Sect. 3.1.2). The goal would be to develop a dynamically updatable ontology of self-implementable rules that produces good outcomes in terms of readability, informativeness, and coherence. This ontology is called HOD, or Hypothetically Optimal Disclosure (Sect. 3.1.3), and would only include the disclosure rules that fail the least (according to our library of measurable failure indexes) and therefore give rise to the fewest disputed issues (according to the relevant case law). HOD nonetheless raises questions of efficacy, legitimacy and proportionality that need to be addressed (Sect. 3.1.4).

Phase Two explores the potential of regulatory experimentation in sandboxes as a viable solution: we propose testing HOD with experimental regulatory methods. Regulatory sandboxes are thus presented as a means to pre-test different layouts of HOD disclosures with stakeholders in a collaborative (co-regulatory) fashion; to ensure transparency and participation; to target disclosures so as to increase efficacy; and to cluster individuals (Sect. 3.2.1).

Section 3.2.2 explains how the sandbox is organized, from both a governance and a technical perspective. The final outcome, once behavioral data from the sandbox are integrated, is the Best Ever Disclosures, or BED: an algorithm producing legal notices that are targeted at clusters of consumers, continuously updated with rules, case law and behavioral data, and automatically implementable.

Section 3.2.3 explains how BED can be implemented automatically on a large scale, both at its first launch on the market and subsequently, when amendments are needed.

Lastly, a discussion of possible drawbacks and wider effects of BED algorithmic disclosures on stakeholders is presented (Sect. 3.2.4) before concluding.

2 Part one: The case for a ‘comprehensive approach’

2.1 Disclosure regulation in online markets: a failing strategy in need of a cure

Traditionally, disclosure regulation serves the function of reducing the information asymmetries that plague consumers (Akerlof 1970) and their unequal bargaining power (Coffee 1984; Grossman and Stiglitz 1980). In online consumer transactions we are literally flooded with transparency and disclosure duties and policies. For instance, the EU Consumer Rights Directive (CRD)Footnote 9 contains several rules mandating the provision of information, to the point of limiting the freedom to design e-commerce websites.Footnote 10 The CRD also relies on pre-contractual information requirements to protect consumers: online marketplaces must inform consumers about the characteristics of a third party offering goods, services, or digital content in the online marketplaceFootnote 11; state whether the provider is a trader or not (if not, other and less protective laws apply) (Di Porto and Zuppetta 2020)Footnote 12; or break down key information involving costs.Footnote 13 Similarly, in the online privacy field, disclosure duties have flourished: ‘cookie banners’ (or, more precisely, ‘consent management platforms’, CMPs) appear whenever a website is first accessed, requesting user consent for personal data processing, based on legal requirements in both the EUFootnote 14 and the US.Footnote 15

However, the ability of such duties to provide effective protection to consumers is notoriously poor. Online contract terms and online privacy policies are unilaterally designed by the platform and mass-marketed online, essentially on a take-it-or-leave-it basis (Bar-Gill 2014). In this scenario, the platform can determine the ‘choice architecture’ in which consumers act, thus deliberately exploiting irrational consumer behavior to increase its profits (sludging) (Thaler 2018). For instance, a consumer may agree to provide more personal data than she would deem reasonable according to her preferences, or agree to buy larger quantities of a given service.

Personalization has boosted the manipulative power of platforms (Zuboff 2019), and digital firms have become skilled at developing ‘dark patterns’ (Brignull 2013), through which the most vulnerable consumers are especially targeted (Stigler Center 2019).

Disclosure duties can do little to intercept or counter these practices or to educate consumers. This is because of the disproportionate informational disadvantage that regulators suffer vis-à-vis the industry. Regulators do not possess granular, real-time data about users’ behavior, nor can they observe the changes the industry makes to privacy policies in response. They can certainly run experiments and collect data, but they lack the resources to do so on a regular basis, as digital firms do (e.g. by running A/B testing). In addition, educational campaigns for consumers do not seem a viable solution, not only because they suffer from a collective action problem (Bar-Gill 2014), but also because they are costly for the industry.

Despite all this evidence, rule-makers continue to rely massively on disclosure regulation in both the online contract terms and the privacy contexts. To cite a few examples: traders on online marketplaces like Amazon shall inform consumers if their prices are personalized;Footnote 16 to ensure that reviews originate from real customers or are not manipulated, platforms must provide ‘clear and comprehensible information’ about the ‘main parameters determining the ranking’ in search queriesFootnote 17; online general search engines (such as Google or Bing) must provide a ‘description of the main ranking parameters and of the possibilities to influence such rankings against remuneration’.Footnote 18

In the US, as seen, the information a consumer needs must be provided ‘in conspicuous, accessible, and plain language that is not misleading’.Footnote 19

None of these new requirements innovates in terms of disclosure strategy, which remains based on lengthy duties to provide information to an impersonal, undifferentiated addressee (e.g. the average consumer). Rather, they rest on traditional and disproved assumptions: that individuals will read disclosures simply because they are written in plain and intelligible language, or because the information is placed in a given location on the platform’s website. For general terms and conditions this is required by Articles 3 and 5 of EU Regulation 2019/1150, and for ranking parameters by Article 6 CRD.

Based on extensive previous evidence, however, we should expect that this avalanche of new information duties will not escape failure. This paper therefore argues that computer science solutions should be used ‘comprehensively’, that is, to tackle failures at the rulemaking phase jointly with the implementation phase. Before illustrating how to implement this ‘comprehensive approach’ in practice (Part two), in the following we set out our methodological approach.

2.2 Tackling failures at rulemaking and implementation stages

The idea behind the ‘comprehensive approach’ is to address failures at all levels through a two-step methodology. First we use text analysis to tackle failures of rules and policies; then we employ behavioral testing.

There is a reason for separating the analysis into two phases. Given the current state of the art, algorithmic tools exist that allow intervening on texts and help measure failures pertaining specifically to the drafting of disclosure rules, as well as to firm-level disclosure policies, in terms of readability, informativeness, and coherence (Phase One).

On the other hand, text analysis is not (yet) a good tool to identify and measure consumer behavior, or the industry’s reaction to it. However, behavioral data are needed to assess how consumers and firms interact with the respective documents, and to assess the effectiveness of disclosures with a view to overcoming these types of failures. Hence, we address the question of how behavioral data can be generated and used in an inclusive and efficient way in a different part of the Article, taking inspiration from the ‘regulatory sandbox’ model (Phase Two).

For completeness, one should mention a strand of literature that seems to consider both textual and behavioral elements of disclosure, by addressing changes in privacy disclosures based on rules from the GDPR as well as consumer expectations (a behavioral element). More specifically, Shvartzshnaider et al. (2018) claim that major privacy failures (like the Cambridge Analytica scandal) stem from the ‘mis-alignment’ between privacy notices and consumers’ expectations (a behavioral element) regarding those notices, and contend that such failures could be avoided if (i) a ‘coherent flow’ of information was identifiable between rules (at the level of principles) and disclosure policies, and (ii) the (average) consumer was not ‘overwhelmed by the legal[istic] language.’ Another noteworthy example is the work of Gluck et al. (2016), who link textual failures of overly lengthy privacy policies with behavioral elements (like the negative framing of disclosures offered to consumers).

In both cases, however, what is lacking is a full picture, capable of capturing and measuring all failures of disclosures (not just length or legalistic language) at all stages: the drafting of disclosure rules, their firm-level implementation, and behavioral failures when consumers are exposed to them (as well as the interactions among these stages).

3 Part two: Implementing the ‘comprehensive approach’

3.1 Phase one: Getting to hypothetically optimal disclosures (HOD)

3.1.1 Mapping texts

Lawmakers should begin by creating three datasets, composed respectively of disclosure rules (item 1 below), firm-level policies (item 2), and the case law pertaining to both (item 3). Again, the domains of online privacy and online contract terms are taken as examples.

1. Disclosure rules as dataset: the De Iure disclosures

For the sake of simplicity, we term De Iure disclosures all rules that set disclosure duties. In the privacy context, requirements that platforms disclose to individuals information regarding their rights and how their data are collected and processed would fit this category. To cite just two examples: Sec. 1798.100(a) CCPA stipulates the duty of ‘a business that collects a consumer’s personal information [to] disclose to that consumer the categories and specific pieces of personal information the business has collected’, and GDPR Art. 12 requires data controllers to provide similar information to data subjects.

Technically speaking, these rules can be understood as datasets (Livermore and Rockmore) that can be retrieved and analyzed through NLP techniques (Boella et al. 2013, 2015), easily searched (e.g. via the Eur-lex repository), modelled (e.g. using LegalRuleML) (Governatori et al. 2016; Palmirani and Governatori 2018), and classified and annotated (e.g. through the ELI annotation tool).Footnote 20 For instance, the PrOnto ontology has been developed specifically to retrieve normative content from the GDPR (Palmirani et al. 2018).

While rules may be clear in stating the goals of the required disclosure, convoluted sentences or implied meanings may well make the stated goal far from clear. Also, the same rule may sometimes prescribe conduct in considerable detail (if X, then Y), yet include provisions requiring, for instance, that information about privacy be given by platforms in ‘conspicuous, accessible, and plain language’.Footnote 21 Even if governmental regulation is adopted specifying what these terms mean, they would not escape interpretation (Waddington 2020), and thus possibly conflicting views by the courts.Footnote 22

To help attenuate these problems, proposals have been made to use NLP tools to extract legal concepts and link them to one another, e.g. through the combination of a legislation database and a legal ontology (or knowledge graph). Boella et al. (2015) suggest using the unsupervised TULE parser and a supervised SVM to automate the collection and classification of rules and the extraction of legal concepts (in accordance with the Eurovoc Thesaurus). This way, the meaning of legal texts becomes easier to understand, making complex regulations and the relationships between rules simpler to grasp, even as they change over time. Similarly, LegalRuleML may be used to specify in different ways how legal documents evolve, to keep track of these evolutions and to connect them to each other.
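To make the idea of concept extraction and linking more tangible, the following minimal sketch links rules that mention a shared legal concept. It is deliberately much simpler than the TULE/SVM pipeline of Boella et al.: concepts are found by plain phrase lookup in a tiny, hypothetical thesaurus (not the real Eurovoc), and any two rules sharing a concept are linked. The rule texts in the usage example are abridged paraphrases, not official wording.

```python
from itertools import combinations

# Hypothetical mini-thesaurus mapping surface phrases to concept labels.
# A real system would use Eurovoc descriptors and a trained extractor.
THESAURUS = {
    "personal data": "PERSONAL_DATA",
    "personal information": "PERSONAL_DATA",
    "data subject": "DATA_SUBJECT",
    "plain language": "PLAIN_LANGUAGE",
}


def extract_concepts(text):
    """Return the set of concept labels whose surface phrases occur in the text."""
    lowered = text.lower()
    return {concept for phrase, concept in THESAURUS.items() if phrase in lowered}


def link_rules(rules):
    """Link every pair of rules that share at least one concept.

    rules: dict mapping a rule identifier to its text.
    Returns a dict mapping (id_a, id_b) to the set of shared concepts.
    """
    concepts = {rid: extract_concepts(text) for rid, text in rules.items()}
    links = {}
    for a, b in combinations(sorted(rules), 2):
        shared = concepts[a] & concepts[b]
        if shared:
            links[(a, b)] = shared
    return links


if __name__ == "__main__":
    rules = {
        "GDPR Art. 12 (paraphrased)": "Provide information on the processing of "
        "personal data to the data subject using clear and plain language.",
        "CCPA 1798.100(a) (abridged)": "A business that collects a consumer's "
        "personal information shall disclose the categories collected.",
    }
    print(link_rules(rules))
```

Because "personal data" and "personal information" map to the same concept, the EU and US provisions end up linked even though they use different wording, which is the kind of cross-instrument connection a knowledge graph is meant to surface.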

2. Firm-level disclosure policies as dataset: the De Facto disclosures

The second dataset is that of firm-level disclosure policies, which we term De Facto disclosures. These include, but are not limited to, the notices elaborated by the industry to implement laws or regulations; think of the all-too-familiar online Terms of Service (commonly found online and seldom read). With regard to privacy policies, pioneering work in assembling and annotating them was undertaken by Wilson et al. (2016), resulting in the frequently used ‘OPP-115’ corpus. Indeed, ML is now a standard method to annotate and analyze industry privacy policies (Sarne et al. 2019; Harkous et al. 2018).
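As an illustration of what ML-based annotation of De Facto disclosures involves, the sketch below trains a multinomial naive Bayes classifier that assigns a policy segment to a data-practice category. This is an assumed, textbook technique chosen for brevity, not the method of Wilson et al. or Harkous et al.; the category names merely borrow the style of OPP-115 labels, and the six training segments are invented for illustration.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lower-case alphabetic tokens; a real pipeline would use a proper tokenizer."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesAnnotator:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, segments, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(segments, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denominator = sum(counts.values()) + len(self.vocab)
            # Log prior plus smoothed log likelihood of every token.
            score = math.log(n_docs / total_docs)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / denominator)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented training segments; labels imitate OPP-115-style category names.
TRAIN = [
    ("We collect your email address and location when you register.", "First Party Collection/Use"),
    ("We use the information we collect to provide the service.", "First Party Collection/Use"),
    ("We share your information with advertising partners.", "Third Party Sharing/Collection"),
    ("Third parties may receive your data for marketing.", "Third Party Sharing/Collection"),
    ("You can opt out of marketing emails in your settings.", "User Choice/Control"),
    ("You may choose to disable cookies in your browser.", "User Choice/Control"),
]

annotator = NaiveBayesAnnotator().fit([t for t, _ in TRAIN], [label for _, label in TRAIN])
```

In practice, annotators would label thousands of segments and a stronger model would be used; the point here is only that, once trained, the annotator turns unstructured policies into category-tagged data that the failure indexes discussed below can be run on.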

3. The linking role of case law

Case law plays an important role, serving as the missing link between legal provisions and their implementation. Courts’ decisions help detect controversial text and clarify the exact meaning to be given to both De Iure and De Facto disclosures. It follows that case outcomes and rule interpretations should be used to update the libraries with terms that turn out to be disputed, and with others that become settled and undisputed.Footnote 23

A good way to link case law with rules is the one proposed by Boella et al. (2019), who present a ‘database of prescriptions (duties and prohibitions), annotated with explanations in natural language, indexed according to the roles involved in the norm, and connected with relevant parts of legislation and case law’.
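The quoted description maps naturally onto a small data structure. The sketch below is a hypothetical schema, not Boella et al.'s actual database: each prescription carries its kind, the roles it involves, a natural-language gloss and links to legislation and case law, and an index retrieves prescriptions by role. The identifiers in the usage note are placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Prescription:
    """A duty or prohibition with links to its sources (hypothetical schema)."""
    pid: str
    kind: str          # "duty" or "prohibition"
    bearer: str        # role bound by the norm, e.g. "controller"
    beneficiary: str   # role protected by the norm, e.g. "data subject"
    explanation: str   # natural-language gloss of the norm
    legislation: list = field(default_factory=list)  # provision identifiers
    case_law: list = field(default_factory=list)     # case identifiers


class PrescriptionIndex:
    """Index prescriptions by every role they involve."""

    def __init__(self):
        self._by_role = defaultdict(list)

    def add(self, prescription):
        self._by_role[prescription.bearer].append(prescription)
        if prescription.beneficiary != prescription.bearer:
            self._by_role[prescription.beneficiary].append(prescription)

    def for_role(self, role):
        # Return a copy so callers cannot mutate the index by accident.
        return list(self._by_role.get(role, []))
```

For example, `index.add(Prescription("P1", "duty", "controller", "data subject", "Give information in clear and plain language", ["GDPR Art. 12(1)"]))` makes the same duty retrievable both from the controller's and from the data subject's perspective; appending case identifiers to `case_law` later is how interpretive decisions would be attached to the rule.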

In the EU legal system, the question might arise whether only interpretative decisions by the European Courts, or also those of national jurisdictions, should be included in the text analysis: the former provide uniform elucidation that binds all national courts (having the force of precedent), but most case law on disclosures originates from national controversies and does not reach the EU courts. We know, for instance, that litigation before the EU courts accounts for only a minor part of the costs global platforms incur in disputes with consumers; the bulk is borne in litigation before national jurisdictions,Footnote 24 where there is no binding precedent and the same clause can be qualified differently.

Moreover, unlike in the US,Footnote 25 in Europe only the decisions of the EU Courts are fully machine-readable and coded (Panagis et al. 2017),Footnote 26 while the process of making national courts’ decisions machine-readable as well (through the European Case Law Identifier, ECLI)Footnote 27 is still under way, although at a very advanced stage. Nonetheless, analytical tools are already available that allow linking EU and national courts’ cases. For instance, Agnoloni et al. (2017) introduced the BO-ECLI Parser Engine, a Java-based system that extracts and links case law from different European countries. By offering pluggable national extensions, the system produces standard identifier (ECLI or CELEX) annotations to link case law from different countries. Furthermore, the EU itself is increasingly conscious of the need to link European and national case law, resulting, for instance, in the EUCases project, which developed a unique pan-European law and case law Linking Platform.Footnote 28
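To show what identifier-based linking looks like at its simplest, the sketch below extracts ECLI citations from a decision's text and keeps those pointing to another jurisdiction. It assumes the five-part ECLI structure as we understand it (`ECLI:<country>:<court>:<year>:<ordinal>`, with `EU` used for the EU courts); it is not the BO-ECLI Parser Engine, and the identifiers in the tests are illustrative only.

```python
import re
from collections import namedtuple

ECLI = namedtuple("ECLI", "country court year ordinal")

# Assumed structure: ECLI:<2-letter country>:<court code>:<year>:<ordinal number>.
ECLI_PATTERN = re.compile(r"\bECLI:([A-Z]{2}):([A-Z0-9]{1,7}):(\d{4}):([A-Z0-9.]{1,25})")


def find_eclis(text):
    """Return every ECLI cited in the text, in order of appearance."""
    found = []
    for country, court, year, ordinal in ECLI_PATTERN.findall(text):
        # A sentence-final full stop is not part of the ordinal number.
        found.append(ECLI(country, court, int(year), ordinal.rstrip(".")))
    return found


def links_to_other_jurisdictions(citing_ecli, text):
    """Return (citing, cited) pairs where the cited decision comes from another jurisdiction."""
    citing = find_eclis(citing_ecli)[0]
    return [(citing, cited) for cited in find_eclis(text) if cited.country != citing.country]
```

A national decision citing an EU judgment would thus yield an edge between the national and the EU layer, which is the raw material for the citation network discussed next. National parsers would still be needed for the many decisions that cite older, non-ECLI references.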

As shown by Panagis et al. (2017), algorithmic tools, and citation network analysis in particular, can be extremely useful in addressing not only the question of which law is valid, but also which preceding cases are relevant and how to deal with conflicting interpretations by different courts. The latter is especially relevant in systems where there is no binding precedent (i.e. most national EU legal systems) and where, consequently, differing interpretations of certain ambiguous terms might arise. By combining network analysis and NLP to distinguish between different kinds of references, it might be possible to assess which opinions are endorsed by the majority of courts and could thus be considered the ‘majority opinion’. While other methods of analyzing citations in case law might establish the overall relevance of certain cases in general, only the more granular methodology suggested by Panagis et al. seems well suited to assess which interpretations of certain ambiguous terms are “the truly important reference points in a court’s repository”. In this way, case law can be used to link the general de iure disclosures and the specific de facto disclosures while duly taking into account different interpretations of the former by different courts.
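The ‘majority opinion’ idea can be sketched as a weighted vote over a citation network. In this simplified illustration (our own proxy, not Panagis et al.'s methodology), each decision supports the interpretation it adopts with weight one plus the number of later decisions that follow it; citations that merely distinguish a case are ignored. The "follows"/"distinguishes" labels are assumed to come from an upstream NLP classifier, and the case identifiers are hypothetical.

```python
from collections import Counter


def majority_interpretation(decisions, citations):
    """Estimate the majority reading of an ambiguous term.

    decisions: dict mapping a case id to the interpretation it adopts.
    citations: (citing id, cited id, kind) triples, where kind is assumed to
        be "follows" or "distinguishes" as labelled by an NLP classifier.
    Each decision votes with weight 1 + the number of times it is followed.
    """
    followed = Counter(
        cited for _, cited, kind in citations
        if kind == "follows" and cited in decisions
    )
    support = Counter()
    for case_id, reading in decisions.items():
        support[reading] += 1 + followed[case_id]
    winner, _ = support.most_common(1)[0]
    return winner, dict(support)


decisions = {"A": "broad", "B": "narrow", "C": "narrow", "D": "broad", "E": "narrow"}
citations = [
    ("B", "A", "distinguishes"),
    ("C", "A", "distinguishes"),
    ("D", "A", "follows"),
    ("C", "B", "follows"),
    ("E", "B", "follows"),
]
```

Here case A is cited three times, but two of those citations distinguish it, so only one counts as endorsement; the narrow reading ends up with 5 points against 3. Distinguishing reference types is precisely where NLP adds value over raw citation counts.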

3.1.2 Mapping the causes of failure

Measuring the causes of failure of both de iure and de facto disclosures is not an easy task (Costante et al. 2012). Nevertheless, quantitative indices are indispensable for the analysis that follows, as they make the information they store easily accessible and readable for machines and algorithms. Such indices also guarantee the repeatability and objectivity required for scientific validity.

In line with our ‘comprehensive approach’, failures must be identified and mapped for each stage, and linked with the failures at the other stages, since they are intimately related.

Therefore, we propose defining a standard made of three top-level categories of failure that can be used for both de iure and de facto disclosures:

(i) Readability. Excessive length of text can lead to information overload.

(ii) Informativeness. Lack of clarity and simplicity can lead to information overload, while a lack of information can result in asymmetry.

(iii) Consistency. The lack of a shared lexicon and of cross-references within the same document or across documents may lead to incoherence.

Based on these three framework categories, we establish gold-standard thresholds and rank clauses as optimal (O) or sub-optimal (S–O) (Contissa et al. 2018a). In this way, we would, for instance, rank a privacy policy clause as S–O under the ‘length of text’ index if it fails to meet the threshold established for the goal of ‘clarity’ stated in Article 12 GDPR. At the same time, however, Article 12 or some of its provisions, as seen, may score S–O under other failure indexes, such as lack of clarity (vagueness). The case law might help clarify whether this is the case.
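The O/S–O ranking can be sketched as a threshold check per failure index, followed by an ordering of clauses from the fewest to the most failed indexes (the ordering HOD would draw on). In this minimal illustration every index is oriented so that a higher score means more failure, and the threshold is the maximum acceptable score. The index names, thresholds and clause scores are all made up for the example; "S-O" is written with a plain hyphen in code.

```python
def label_clause(scores, thresholds):
    """Label each failure index 'O' (within threshold) or 'S-O' (above it).

    scores, thresholds: dicts keyed by index name; higher score = worse.
    """
    return {
        name: ("O" if scores[name] <= limit else "S-O")
        for name, limit in thresholds.items()
    }


def count_failures(scores, thresholds):
    return sum(1 for label in label_clause(scores, thresholds).values() if label == "S-O")


def rank_clauses(clauses, thresholds):
    """Order clause ids from fewest to most failed indexes (ties broken by id)."""
    ranked = sorted(clauses, key=lambda cid: (count_failures(clauses[cid], thresholds), cid))
    return [(cid, count_failures(clauses[cid], thresholds)) for cid in ranked]


# Hypothetical gold-standard thresholds, one per top-level category.
THRESHOLDS = {
    "readability": 12.0,     # e.g. a SMOG-style grade
    "informativeness": 2,    # e.g. number of vague expressions
    "consistency": 0,        # e.g. number of inconsistent term uses
}

# Invented scores for one rule and one firm-level clause.
CLAUSES = {
    "gdpr_art12": {"readability": 14.1, "informativeness": 3, "consistency": 0},
    "policy_c1": {"readability": 10.2, "informativeness": 1, "consistency": 0},
}
```

With these invented numbers, `rank_clauses(CLAUSES, THRESHOLDS)` places the firm-level clause first (no failed index) and the rule second (two failed indexes), mirroring the observation that a de iure provision can itself score S–O.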

In the following, we elaborate the methodology for designing a detailed system of indexes to capture the main causes of failure. Furthermore, we provide ideas on how to translate each indicator into quantitative, machine-readable indices. Table 1 summarizes our findings.

1. Readability. Information overload: length of text

The first quantitative index is readability. It is mainly understood as non-readership due to information overload, and measured in terms of ‘length of text’.

There is a large variety of readability scores based on text length (Shedlosky-Shoemaker et al. 2009), which are frequently highly correlated, thus ‘easing future choice making processes and comparisons’ between different readability measures (Fabian et al. 2017).

Among the many, we adopt the approach of Bartlett et al. (2019), who propose an updated version of the 1969 SMOG formula. Accordingly, annotators establish a threshold of polysyllables (words with more than 3 syllables) that a sentence may contain before the machine tags it as unreadable,Footnote 29 and hence S–O. The authors suggest ‘a domain specific validation to verify the validity of the SMOG Grade’.

This is especially relevant to make our proposal workable. Not all domains are the same, and firm-level privacy policies would clearly need to be assessed sector by sector. For instance, the type of personal data collected by a provider of health-related services would be treated differently from that collected by a manufacturer or retailer dealing with non-sensitive data.

Under the Readability-Length of text index, sub-optimal disclosure clauses use more polysyllables than those established in the golden standard, set and measured using the revised version of SMOG proposed by Bartlett et al. (2019).
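A minimal sketch of the length-of-text index follows. It implements the classic 1969 SMOG formula (grade = 1.0430 × √(polysyllables × 30 / sentences) + 3.1291), not the revised version of Bartlett et al., whose details we do not reproduce, plus a per-sentence O/S–O tag against an annotator-chosen polysyllable threshold. Classic SMOG counts words of three or more syllables; the `min_syllables` parameter lets that cut-off be adjusted. Syllables are estimated with a rough vowel-group heuristic, which is an assumption; a production system would use a pronunciation dictionary.

```python
import math
import re


def count_syllables(word):
    """Rough heuristic: count groups of consecutive vowels (minimum 1)."""
    lowered = word.lower()
    n = len(re.findall(r"[aeiouy]+", lowered))
    if lowered.endswith("e") and n > 1 and not lowered.endswith("le"):
        n -= 1  # silent final 'e', as in "share"
    return max(n, 1)


def split_sentences(text):
    return [s for s in re.split(r"[.!?]+", text) if s.strip()]


def polysyllables(sentence, min_syllables=3):
    words = re.findall(r"[A-Za-z]+", sentence)
    return sum(1 for w in words if count_syllables(w) >= min_syllables)


def smog_grade(text):
    """Classic SMOG grade over all sentences of the text."""
    sentences = split_sentences(text)
    poly = sum(polysyllables(s) for s in sentences)
    return 1.0430 * math.sqrt(poly * 30 / len(sentences)) + 3.1291


def flag_sentences(text, max_poly):
    """Tag each sentence 'S-O' if it exceeds max_poly polysyllables, else 'O'."""
    return [
        ("S-O" if polysyllables(s) > max_poly else "O", s.strip())
        for s in split_sentences(text)
    ]
```

With a hypothetical threshold of two polysyllables per sentence, "We keep your data." is tagged O while "The controller processes personal information for legitimate purposes." (six polysyllables under this heuristic) is tagged S–O. The domain-specific validation mentioned above would amount to calibrating `max_poly` per sector.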

2. Informativeness. Information overload: complexity of text

Lack of readability of disclosures may also depend on the complexity of text. Scholars have suggested measuring it from both a semantic and a syntactic point of view.

(a) Syntactic complexity

While most analyses of readability use the number of words in a specified unit (e.g. a sentence, paragraph, etc.) as a proxy for complexity, only a few authors analyze the syntactic complexity of a text separately (Botel and Granowsky 1972). Although some scholars search for certain conditional or relational operators, they usually do so with the aim of detecting sentences that are semantically vague or difficult to understand (see e.g. Liepina et al. (2019), discussed below).

Going back to Botel and Granowsky, they propose a count system which designates a certain amount of ‘points’ to certain grammatical structures, based on their complexity (the more complex, the more points). For instance, conjunctive adverbs (‘however’, ‘thus’, ‘nevertheless’, etc.), dependent clauses, noun modifiers, modal verbs (‘should’, ‘could’, etc.) and passives will be assigned one or two points respectively, whereas, for instance, simple subject-verb structures (e.g. ‘she speaks’) receive no points.Footnote 30 The final complexity score of a text is then calculated as the arithmetic average of the complexity counts of all sentences.Footnote 31
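The point-count logic can be sketched as follows. Real Botel-Granowsky scoring requires a syntactic parse; this sketch only approximates it with surface cues (word lists and a crude "to be + -ed" passive detector), and the cue lists and weights are illustrative assumptions, not the original scoring table. As in the text, the text-level score is the arithmetic average of the sentence counts.

```python
import re

# Illustrative cue lists and weights (hypothetical, not the original table).
CUES = {
    "conjunctive_adverb": ({"however", "thus", "nevertheless", "therefore", "moreover"}, 1),
    "modal": ({"should", "could", "may", "might", "must", "would", "shall"}, 1),
    "subordinator": ({"because", "although", "unless", "if", "when", "where", "which"}, 2),
}
BE_FORMS = {"is", "are", "was", "were", "be", "been", "being"}
PASSIVE_POINTS = 2


def sentence_points(sentence):
    """Assign complexity points to one sentence; simple S-V sentences score 0."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    points = 0
    for vocabulary, weight in CUES.values():
        points += weight * sum(1 for t in tokens if t in vocabulary)
    # Crude passive detector: a form of "to be" followed by a word ending in -ed.
    passives = sum(1 for a, b in zip(tokens, tokens[1:]) if a in BE_FORMS and b.endswith("ed"))
    return points + PASSIVE_POINTS * passives


def complexity_score(text):
    """Arithmetic average of the sentence-level counts."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return sum(sentence_points(s) for s in sentences) / len(sentences)
```

For instance, "She speaks." scores 0, whereas "However, data may be shared." collects one point for the conjunctive adverb, one for the modal and two for the passive, so the two-sentence text averages 2.0.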

An alternative approach is that of Szmrecsanyi (2004), who proposes an ‘Index of Syntactic Complexity’, which relies on the notion that ‘syntactic complexity in language is related to the number, type, and depth of embedding in a text’, meaning that the more nodes a sentence contains (e.g. subject, object, pronouns), the higher the complexity of the text.Footnote 32 The proposed index thus combines counts of linguistic tokens like subordinating conjunctions (e.g. ‘because’, ‘since’, ‘when’, etc.), WH-pronouns (e.g. ‘who’, ‘whose’, ‘which’, etc.), verb forms (finite and non-finite) and noun phrases.Footnote 33

Although this might be ‘conceptually certainly the most direct and intuitively the most appropriate way to assess syntactic complexity’, it is pointed out that this method usually requires manual coding.Footnote 34
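If part-of-speech tags and noun-phrase counts are produced automatically, the manual-coding burden shrinks. The sketch below computes the index from a list of (token, Penn Treebank tag) pairs and a noun-phrase count supplied by a chunker. We assume the weighting as we read Szmrecsanyi: subordinating conjunctions and WH-pronouns count double, verb forms and noun phrases count once. The subordinator word list is illustrative, and counting WH-pronouns via the tags WP, WP$ and WDT is an approximation.

```python
# Illustrative subordinator list; ambiguous words such as "that" are left out.
SUBORDINATORS = {"because", "since", "when", "although", "if", "unless", "while", "whereas"}
WH_TAGS = {"WP", "WP$", "WDT"}


def index_of_syntactic_complexity(tagged, noun_phrases):
    """tagged: list of (token, Penn Treebank tag) pairs for one text unit.
    noun_phrases: number of NPs found by a chunker (assumed to be available).
    Assumed weighting: 2*subordinators + 2*WH-pronouns + verb forms + NPs.
    """
    subordinators = sum(1 for tok, _ in tagged if tok.lower() in SUBORDINATORS)
    wh_pronouns = sum(1 for _, tag in tagged if tag in WH_TAGS)
    verb_forms = sum(1 for _, tag in tagged if tag.startswith("VB"))
    return 2 * subordinators + 2 * wh_pronouns + verb_forms + noun_phrases
```

For the clause "We delete data when you ask", tagged as PRP VBP NNS WRB PRP VBP with three noun phrases (we, data, you), the index is 2×1 + 0 + 2 + 3 = 7.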

Since at least the last two measures appear to be highly correlated,Footnote 35 the choice between them may ultimately come down to the computational effort required to calculate each score.
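Once the token counts are available, an index of this kind is cheap to compute. In the sketch below the weights (subordinating conjunctions and WH-pronouns counted twice, verb forms and noun phrases once) reflect our reading of Szmrecsanyi (2004) and are exposed as parameters so they can be checked against the original; the counts themselves are assumed to come from an upstream POS tagger and noun-phrase chunker, which are not shown.

```python
from dataclasses import dataclass


@dataclass
class SyntacticCounts:
    subordinating_conjunctions: int  # e.g. 'because', 'since', 'when'
    wh_pronouns: int                 # e.g. 'who', 'whose', 'which'
    verb_forms: int                  # finite and non-finite
    noun_phrases: int


def index_of_syntactic_complexity(c: SyntacticCounts,
                                  w_sub: int = 2, w_wh: int = 2,
                                  w_verb: int = 1, w_np: int = 1) -> int:
    """Weighted sum of embedding-related tokens; the weights are configurable assumptions."""
    return (w_sub * c.subordinating_conjunctions
            + w_wh * c.wh_pronouns
            + w_verb * c.verb_forms
            + w_np * c.noun_phrases)


# Hypothetical counts for one disclosure clause
clause = SyntacticCounts(subordinating_conjunctions=2, wh_pronouns=1,
                         verb_forms=4, noun_phrases=6)
print(index_of_syntactic_complexity(clause))  # 2*2 + 2*1 + 4 + 6 = 16
```

Because raw counts grow with text length, comparing clauses of different lengths would require some normalization (e.g. per sentence or per 100 words); which one to use is left open here.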

-

(b)

Semantic complexity

Semantic complexity (i.e. the use of complex, difficult, technical or unusual terms, called ‘outliers’) is analyzed by Bartlett et al. (2019), who use the Local Outlier Factor (LOF) algorithm (based on the density of a term’s nearest neighbors) to detect such terms.
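To illustrate the intuition behind LOF, the sketch below implements the standard Local Outlier Factor from scratch on toy two-dimensional term vectors. How Bartlett et al. (2019) actually represent terms is not reproduced here: the terms and coordinates are hypothetical stand-ins for, e.g., word-embedding features. A score well above 1 means that a term lies in a much sparser region than its neighbors.

```python
import math


def lof_scores(points: list[tuple[float, float]], k: int) -> list[float]:
    """Local Outlier Factor per point (assumes distinct points and k < len(points))."""
    neighbors, k_distance = [], []
    for i, p in enumerate(points):
        # Other points sorted by distance; ties are broken by index for simplicity.
        ranked = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        nearest = ranked[:k]
        neighbors.append([j for _, j in nearest])
        k_distance.append(nearest[-1][0])  # distance to the k-th nearest neighbor

    # Local reachability density: inverse of the mean reachability distance.
    lrd = []
    for i, p in enumerate(points):
        reach = [max(k_distance[j], math.dist(p, points[j])) for j in neighbors[i]]
        lrd.append(len(reach) / sum(reach))

    # LOF: average density of the neighbors relative to the point's own density.
    return [sum(lrd[j] for j in neighbors[i]) / (k * lrd[i]) for i in range(len(points))]


terms = ["data", "user", "service", "account", "cookie", "pseudonymisation"]
vectors = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5), (5, 5)]  # hypothetical features
scores = lof_scores(vectors, k=2)
outliers = [t for t, s in zip(terms, scores) if s > 1.5]
print(outliers)  # ['pseudonymisation']
```

In practice, a tested implementation such as scikit-learn's `LocalOutlierFactor` (in `sklearn.neighbors`) would be preferable to this hand-rolled version; the threshold of 1.5 is likewise an arbitrary illustrative choice.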

Approaching the issue of semantic complexity from a slightly different angle, Liepina et al. (2019) evaluate the complexity of a text based on four criteria: (1) indeterminate conditioners (e.g. ‘as necessary’, ‘from time to time’, etc.), (2) expression generalizations (e.g. ‘generally’, ‘normally’, ‘largely’, etc.), (3) modality (‘adverbs and non-specific adjectives, which create uncertainty with respect to the possibility of certain actions and events’) and (4) non-specific numeric qualifiers (e.g. ‘numerous’, ‘some’, etc.). These indicators are then used to tag problematic sentences as ‘vague’.
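A deliberately simple, lexicon-based sketch of this tagging step follows. The four indicator lists are seeded with the examples quoted above plus a few obvious additions; they are hypothetical, and Liepina et al. (2019) may well rely on richer annotation than plain phrase matching.

```python
import re

# Hypothetical indicator lexicons, seeded with the examples cited above.
INDICATORS = {
    "indeterminate_conditioner": ["as necessary", "from time to time"],
    "expression_generalization": ["generally", "normally", "largely"],
    "modality": ["possibly", "potentially", "reasonable", "appropriate"],
    "non_specific_numeric": ["numerous", "some", "several"],
}


def vagueness_tags(sentence: str) -> set[str]:
    """Return the indicator categories whose phrases occur as whole words."""
    text = sentence.lower()
    return {
        category
        for category, phrases in INDICATORS.items()
        if any(re.search(rf"\b{re.escape(p)}\b", text) for p in phrases)
    }


def is_vague(sentence: str) -> bool:
    """A sentence is tagged 'vague' if at least one indicator is present."""
    return bool(vagueness_tags(sentence))


s1 = "Where appropriate, we share some data from time to time."
s2 = "We delete your data 30 days after account closure."
print(sorted(vagueness_tags(s1)))  # ['indeterminate_conditioner', 'modality', 'non_specific_numeric']
print(is_vague(s2))                # False
```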

In a similar vein, Shvartzshnaider et al. (2019) also base their assessment of complexity/clarity on tags, but in a different manner. They analyze the phenomenon of ‘parameter bloating’: building on the idea of ‘Contextual Integrity’ (CI) and information flows,Footnote 36 the description of an information flow is deemed (too) complex, or bloated, when it ‘contains two or more semantically different CI parameters (senders, recipients, subjects of information, information types, condition of transference or collection) of the same type (e.g., two senders or four attributes) without a clear indication of how these parameter instances are related to each other’.Footnote 37 The reader must then infer the exact relationship between the different actors and types of information, which significantly increases the complexity of the disclosure (at 164). The number of possible information flows might therefore be used as a quantitative index of the semantic complexity of a clause.
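Assuming each clause has already been annotated with its CI parameters (the annotation itself is the hard, partly manual step and is not shown), bloating and a flow count can be computed as below. Counting possible flows as the product of the number of instances of each parameter type is our own simplifying assumption, and the check ignores whether the clause explains how the instances relate, which the definition above also requires.

```python
from math import prod

CI_PARAMETERS = ("sender", "recipient", "subject", "information_type", "condition")


def bloated_parameters(annotation: dict[str, set[str]]) -> list[str]:
    """Parameter types with two or more distinct instances in one clause."""
    return [p for p in CI_PARAMETERS if len(annotation.get(p, set())) >= 2]


def possible_flows(annotation: dict[str, set[str]]) -> int:
    """Candidate information flows: product of instance counts (missing types count as 1)."""
    return prod(max(1, len(annotation.get(p, set()))) for p in CI_PARAMETERS)


# Hypothetical annotation of a privacy-policy clause
clause = {
    "sender": {"we", "our affiliates"},
    "recipient": {"advertisers", "analytics providers"},
    "subject": {"user"},
    "information_type": {"email", "location", "device id"},
    "condition": {"with consent"},
}
print(bloated_parameters(clause))  # ['sender', 'recipient', 'information_type']
print(possible_flows(clause))      # 2 * 2 * 1 * 3 * 1 = 12
```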

Under the Informativeness-Semantic complexity Index, an S–O disclosure clause contains more outliers, or more semantically different CI parameters in information flows, than the number set in the gold standard, defined and measured using Bartlett et al. (2019) or Shvartzshnaider et al. (2019), respectively.

-

3.

Informativeness. Information asymmetry: lack of information

Another failure index of information asymmetry is the completeness of the information provided in a disclosure. Completeness has been investigated mainly with regard to firm-level disclosure policies rather than rulemaking (Costante et al. 2012). Note that the requirement of completeness does not necessarily conflict with readability. Evaluating the completeness of a disclosure clause is concerned only with whether all essential information required by law is provided, whereas readability problems mostly arise from the way the industry presents this information to the consumer. A complete disclosure is therefore not per se unreadable (just as an unreadable disclosure is not necessarily complete), and the two concepts need to be kept separate.

Several authors suggest tools to measure completeness, especially in the context of privacy disclosures. However, nothing prevents the approaches presented in this section from being transferred to other disclosures, such as the terms and conditions of online contracts.

For instance, building on the theory of CI outlined above, Shvartzshnaider et al. (2019) define the completeness of privacy policies as the specification of all five CI parameters (senders, recipients, subjects of information, information types, conditions of transference or collection). Similarly, Liepina et al. (2019) consider a clause complete if it contains information on 23 pre-defined categories (e.g. ‘<id> identity of the data controller, <cat> categories of personal data concerned, and <ret> the period for which the personal data will be stored’). If information considered ‘crucial’ is missing, the clause is tagged as incomplete. Manually setting a threshold would then help determine whether a clause scores as optimal.
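A minimal sketch of such a completeness check, again assuming the clauses have been annotated beforehand. It lists the CI parameters a clause leaves unspecified and marks the clause incomplete as soon as a category designated ‘crucial’ is missing. Which categories count as crucial (`CRUCIAL`) is a hypothetical choice standing in for the manually set threshold mentioned above.

```python
CI_PARAMETERS = ("sender", "recipient", "subject", "information_type", "condition")
CRUCIAL = {"recipient", "information_type"}  # hypothetical, set manually


def missing_parameters(annotation: dict[str, set[str]]) -> list[str]:
    """CI parameters for which the clause specifies nothing."""
    return [p for p in CI_PARAMETERS if not annotation.get(p)]


def is_complete(annotation: dict[str, set[str]], crucial: set[str] = CRUCIAL) -> bool:
    """Incomplete as soon as one crucial parameter is unspecified."""
    return not (set(missing_parameters(annotation)) & crucial)


clause = {"sender": {"we"}, "information_type": {"email"}, "condition": {"on sign-up"}}
print(missing_parameters(clause))  # ['recipient', 'subject']
print(is_complete(clause))         # False: 'recipient' is crucial and missing
```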

A similar but slightly refined approach is presented by Costante et al. (2012, at 3): while they also define a number of ‘privacy categories’ (e.g. advertising, cookies, location, retention), their proposed completeness score is calculated as the weighted and normalized sum of the categories covered in a paragraph.
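A weighted, normalized sum of this kind can be read as the total weight of the categories a paragraph covers divided by the total weight of all categories, so that the score lies between 0 and 1. The category names below come from the examples above; the weights are hypothetical, and detecting which categories a paragraph covers is assumed to have been done upstream.

```python
# Hypothetical category weights (higher = more important to cover).
WEIGHTS = {"advertising": 1.0, "cookies": 2.0, "location": 1.5, "retention": 2.5}


def completeness_score(covered: set[str], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted share of privacy categories covered, normalized to [0, 1]."""
    total = sum(weights.values())
    return sum(w for category, w in weights.items() if category in covered) / total


score = completeness_score({"cookies", "retention"})
print(round(score, 3))  # (2.0 + 2.5) / 7.0 -> 0.643
```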

For our purposes, a privacy or online contract disclosure clause could be ranked using the methodology suggested by Costante et al. (2012) and Liepina et al. (2019) or, alternatively, by Contissa et al. (2018a, b). Either way, the corpus would need to be tagged.

Under the Informativeness-Lack of information Index, in sub-optimal disclosure clauses (of a given length) the number of omitted elements is higher than the pre-defined minimum necessary standard, defined and measured using Liepina et al. (2019) and Costante et al. (2012) or Contissa et al. (2018a, b).

-

4.

Consistency of documents

One of the two root causes of failure identified above is the misalignment between the regulatory goals behind the duty to disclose certain information (as stated in the de iure disclosures) and their actual implementation in the de facto disclosures. The general criterion that can be derived from this is consistency, which can be broken down into two sub-criteria: internal and external consistency. Since both are measured and computed in the same way, they are treated together.

Internal consistency denotes the recurrence of the same lexicon in different clauses of the same document, as well as the verification of cross-references between clauses within that document. External coherence concerns cross-references to clauses contained in different legal documents; it too can be understood both as the recurrence of the same lexicon across the referenced documents and as the verification of the respective cross-references. For instance, one rule in the GDPR might refer to others either explicitly (e.g. Article 12 recalling Article 5) or implicitly (like the Guidelines on Transparency provided for by the European Data Protection Board),Footnote 38 or a privacy policy might refer to a rule without expressly citing its article or the relevant paragraph (alinea).

Unfortunately, there is no common, explicit operationalization of internal and external coherence in the literature.

A first attempt to analyze cross-references in legal documents is made by Sannier et al. (2017), who develop an NLP-based algorithmic tool to automatically detect and resolve complex cross-references within legal texts. Testing their tool on Luxembourgian legislation and on regional Canadian legislation, they conclude that NLP can be used to accurately detect and verify cross-references (at 236). Their tool would also allow one to construct a simple count of unresolved cross-references, which might serve as a basis for operationalizing internal and external coherence, both in terms of the lexicon used (see above, 2nd cause of failure: complexity of text) and in terms of the correct referencing of different clauses (see above, 3rd cause of failure: lack of information). Nevertheless, this is far from the straightforward, comprehensive solution one might wish for.
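The following is a much simpler stand-in than Sannier et al.'s tool, included only to make the ‘count of unresolved cross-references’ concrete. A regular expression picks up explicit references of the form ‘Article N’ or ‘Art. N’ and checks them against the set of article numbers that actually exist in the target instrument. The clause texts and article set are hypothetical. The pattern misses implicit, plural (‘Arts. 6 and 8’) and external references, which is precisely where NLP-based resolution is needed.

```python
import re

# Matches explicit references such as 'Article 12' or 'Art. 5'.
ARTICLE_REF = re.compile(r"\b(?:Article|Art\.)\s+(\d+)")


def unresolved_references(clauses: dict[str, str], existing: set[str]) -> list[tuple[str, str]]:
    """(clause id, article number) pairs whose target article does not exist."""
    return [
        (clause_id, number)
        for clause_id, text in clauses.items()
        for number in ARTICLE_REF.findall(text)
        if number not in existing
    ]


policy = {
    "c1": "We process data in line with the principles of Article 5.",
    "c2": "Your rights are described in Art. 12 and Article 99.",
}
unresolved = unresolved_references(policy, existing={"5", "12", "13"})
print(unresolved)       # [('c2', '99')]
print(len(unresolved))  # the simple count measure: 1
```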

A solution could be to rely on more complex NLP tools such as ‘citation networks’, as proposed by Panagis et al. (2017) or ‘text similarity models’, as suggested by Nanda et al. (2019).

The citation network analysis tool by Panagis et al. (2017) seems particularly straightforward, since it uses the Tversky index to measure text similarity. A tool such as theirs would therefore cover both the verification of cross-reference links and an evaluation of the textual similarity of the cited text.
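For reference, the Tversky index between two feature sets X and Y is |X ∩ Y| / (|X ∩ Y| + α|X − Y| + β|Y − X|). The sketch applies it to the word sets of a provision and a policy clause; the texts, the tokenizer and the α, β values are illustrative assumptions, not the settings chosen by Panagis et al. (2017). With α = β = 1 the index equals the Jaccard coefficient, and with α = β = 0.5 it equals the Dice coefficient.

```python
import re


def tokens(text: str) -> set[str]:
    """Lower-cased alphabetic word set (a deliberately crude tokenizer)."""
    return set(re.findall(r"[a-z]+", text.lower()))


def tversky(x: set[str], y: set[str], alpha: float = 0.5, beta: float = 0.5) -> float:
    """Tversky index; alpha weights features only in x, beta those only in y."""
    common = len(x & y)
    denom = common + alpha * len(x - y) + beta * len(y - x)
    return common / denom if denom else 1.0


rule = tokens("information about the right of withdrawal")
clause = tokens("you have the right of withdrawal within 14 days")
print(round(tversky(rule, clause), 3))        # 0.571 (alpha = beta = 0.5, Dice)
print(round(tversky(rule, clause, 1, 1), 3))  # 0.4 (alpha = beta = 1, Jaccard)
```

The asymmetric setting α = 1, β = 0 measures how much of the rule's vocabulary the clause reproduces (here 4/6), which may suit the question of whether a policy faithfully implements a provision.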

Another promising option for capturing text similarity is the model proposed by Nanda et al. (2019), who use word and paragraph vector models to measure semantic similarity across combined corpora.Footnote 39 After the documents (rule provisions and the respective policy disclosures) have been mapped manually, the corpora are automatically annotated, which helps establish the gold standard for coherence. Provisions and terms in the disclosure documents are then represented as vectors in a common vector space model (VSM) and processed to measure the degree of similarity between texts.
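Nanda et al. (2019) learn word and paragraph vectors; training such embeddings is beyond a short example, so the sketch below swaps in plain TF-IDF vectors, a simpler vector space model, to show only the final step: representing a provision and policy clauses in one vector space and comparing them by cosine similarity. The three texts are invented for illustration.

```python
import math
import re
from collections import Counter


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """TF-IDF weights per document; the IDF is smoothed so shared terms keep some weight."""
    tokenized = [re.findall(r"[a-z]+", d.lower()) for d in docs]
    df = Counter(term for toks in tokenized for term in set(toks))
    n = len(docs)
    vectors = []
    for toks in tokenized:
        tf = Counter(toks)
        vectors.append({t: c * (math.log((1 + n) / (1 + df[t])) + 1) for t, c in tf.items()})
    return vectors


def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine of the angle between two sparse vectors (0 = no shared terms)."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


docs = [
    "The controller shall inform the data subject of the retention period.",  # provision
    "We inform you of the period for which we retain your data.",              # policy A
    "Our app lets you share photos with friends.",                             # policy B
]
rule, policy_a, policy_b = tfidf_vectors(docs)
print(cosine(rule, policy_a) > cosine(rule, policy_b))  # True
```

A paragraph-vector implementation (for instance gensim's `Doc2Vec`) would replace `tfidf_vectors` while leaving the cosine comparison unchanged.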

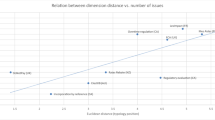

These last two models have the particular advantage of capturing how far industry policies depart from the rule-based disclosures they implement. They therefore seem very promising for measuring both lexical distance and the presence of cross-references within the same disclosure rule or policy (and for defining the gold standard).

3.1.3 Getting to hypothetically optimal disclosures (HOD) through ontology

Preparing the texts in the de iure and de facto datasets means processing the disclosures in each domain in order to rank them and collect those that score optimal on each failure index. More specifically, for each clause or text partition of the disclosures in each (de iure and de facto) dataset, processing under the five analyzed indexes will yield a score, allowing a set of optimal disclosure texts to be identified (see Table 2). For instance, we should be able to select the optimal disclosure provision in the GDPR as far as its ‘readability’ index is concerned. The same should hold for the clause of a privacy policy implementing that provision in a given sector (e.g. short-term online home rental): imagine this is ‘Clause X’ of AirBnB's disclosure policy. The two would form the ‘optimal pair’, under readability, of de iure and de facto privacy disclosures in the short-term online home rental sector. The same should be done for all clauses and for each failure index.
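The selection of an ‘optimal pair’ per failure index can be sketched as follows, assuming every text already carries a score for each index, normalized so that higher means it fails less (for complexity indexes, this means inverting the raw measure). The provision is chosen first, and the clause is then chosen only among the policy clauses implementing it. All identifiers and scores are hypothetical.

```python
# Hypothetical normalized scores (higher = fails less) per text and failure index.
PROVISIONS = {
    "GDPR Art. 12": {"readability": 0.62, "completeness": 0.81},
    "GDPR Art. 13": {"readability": 0.55, "completeness": 0.90},
}
# Policy clauses grouped by the provision they implement.
IMPLEMENTATIONS = {
    "GDPR Art. 12": {"Clause X": {"readability": 0.78, "completeness": 0.72},
                     "Clause Y": {"readability": 0.71, "completeness": 0.69}},
    "GDPR Art. 13": {"Clause Z": {"readability": 0.60, "completeness": 0.88}},
}


def best(texts: dict[str, dict[str, float]], index: str) -> str:
    """Identifier of the text scoring highest on one failure index."""
    return max(texts, key=lambda name: texts[name][index])


def optimal_pair(index: str) -> tuple[str, str]:
    """Best provision, then the best clause among those implementing it."""
    provision = best(PROVISIONS, index)
    return provision, best(IMPLEMENTATIONS[provision], index)


for index in ("readability", "completeness"):
    print(index, optimal_pair(index))
# readability ('GDPR Art. 12', 'Clause X')
# completeness ('GDPR Art. 13', 'Clause Z')
```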

The kind of coding (manual or automatic) and training to employ clearly depends on the methodology chosen for each of the failure indexes sketched above. In any event, labelling the disclosures might require some manual work by legal experts in the specific sector considered.

The next step is to link each selected ‘optimal pair’ of de iure and de facto disclosures across the datasets, to obtain a single dataset of what we term Hypothetically Optimal Disclosures, or HOD.

While, in theory, a simple, manually organized, static database could be used for this purpose, the Law & Tech literature suggests a significantly more effective and flexible solution: the use of an ontology/knowledge graph (Shrader 2020; Sartor et al. 2011; Benjamins 2005) (Table 3).

As discussed above (I.A.1), legal ontologies are especially suited to this purpose, because they allow the extraction and linking of legal concepts to be automated and kept up to date even if the concepts change over time (Boella et al. 2015). Another reason is that some ontologies make it possible to link legal norms with their implementation practices, a feature that is relevant to us.

A good model for linking texts through an ontology is provided by the Lynx projectFootnote 40 (Montiel-Ponsoda and Rodríguez-Doncel 2018). Lynx has developed a ‘Legal Knowledge Graph Ontology’, i.e. an algorithmic technology that links and integrates heterogeneous legal data sources such as legislation, case law, standards, industry norms and best practices.Footnote 41 Lynx is especially interesting because it accommodates several ontologies, providing the flexibility required to add new nodes whenever rules or policies change.

To adapt the Lynx ontology to our needs, manual annotation establishing structural and semantic links between the de iure and de facto disclosure datasets would nonetheless be needed. This should be done taking into account the results of the ranking process on which the optimal disclosure pairs are selected (Table 2, above). Manual annotation in the ontology would thus consist of functionally linking only these texts, based on the semantic relations between their contents.

In our model, nodes will be represented by the failure criteria sketched above. These nodes are already weighted as Optimal/Sub-Optimal and thus given a specific relevance, which allows the ontology to be refined in an analytically targeted and granular way.
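A toy version of such a graph, kept as plain Python triples, may help fix ideas; a production system would more plausibly rely on ontology tooling (e.g. RDF triples queried with SPARQL). Text nodes (provisions and policy clauses) are linked by an `implemented_by` edge, and ‘O’ or ‘S-O’ edges attach each text to the failure-criterion nodes. All identifiers and labels are hypothetical. The query returns the links whose two ends are Optimal on every criterion, i.e. candidate HOD.

```python
# Hypothetical triples (subject, predicate, object). "O" / "S-O" edges attach a
# text node to a failure-criterion node; "implemented_by" links rule and policy.
TRIPLES = [
    ("GDPR Art. 12", "implemented_by", "Clause X"),
    ("GDPR Art. 12", "implemented_by", "Clause Y"),
    ("GDPR Art. 12", "O", "readability"),
    ("GDPR Art. 12", "O", "completeness"),
    ("Clause X", "O", "readability"),
    ("Clause X", "O", "completeness"),
    ("Clause Y", "O", "readability"),
    ("Clause Y", "S-O", "completeness"),
]
CRITERIA = {"readability", "completeness"}


def optimal_on(node: str, triples: list[tuple[str, str, str]]) -> set[str]:
    """Failure criteria on which a text node is labelled Optimal."""
    return {o for s, p, o in triples if s == node and p == "O"}


def optimal_pairs(triples: list[tuple[str, str, str]], criteria: set[str]) -> list[tuple[str, str]]:
    """(provision, clause) links where both ends are Optimal on every criterion."""
    return [
        (s, o)
        for s, p, o in triples
        if p == "implemented_by"
        and criteria <= optimal_on(s, triples)
        and criteria <= optimal_on(o, triples)
    ]


print(optimal_pairs(TRIPLES, CRITERIA))  # [('GDPR Art. 12', 'Clause X')]
```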

A further step consists in assessing the overall ‘coherence’ of the HOD ontology. Coherence in this context is understood as a further failure index, consisting of the lack of cross-reference between the Optimal principles-level rule and the corresponding Optimal implementing-level policy (Table 3).

After manual annotation, cross-validating clauses across datasets would help further verify whether there is cross-reference between the optimal pairs, that is, between the principles level of the de iure disclosure and the application level of the de facto disclosures, given that the latter might come from policies drafted by different firms.

A solution could be to rely on ‘citation networks’, as proposed by Alschner and Skougarevskiy (2015). Focusing on the lexical component of coherence, citation network would help to calculate the linguistic ‘closeness’ between different, cross-referenced documentsFootnote 42 and to assess their coherence.Footnote 43

In this way, we will be able to identify the overall optimal linked disclosures (i.e. the pairs with the highest scores in each sector domain) and hence to validate the overall coherence of HOD for a given domain.
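One simple way to perform this aggregation, assuming the per-index scores of each linked pair are normalized to [0, 1] and weighted equally (both assumptions are ours), is to average them and keep the highest-scoring pair per sector domain. Domains, pairs and scores are hypothetical.

```python
from statistics import mean

# Hypothetical per-index scores (normalized, higher = fails less) of linked
# (provision, clause) pairs, grouped by sector domain.
PAIRS = {
    "short-term rental": {
        ("CRD Art. 5", "Clause X"): [0.8, 0.7, 0.9],
        ("CRD Art. 6", "Clause W"): [0.6, 0.9, 0.7],
    },
    "ride hailing": {
        ("CRD Art. 5", "Clause Q"): [0.5, 0.6, 0.8],
    },
}


def best_pair_per_domain(pairs: dict[str, dict[tuple[str, str], list[float]]]) -> dict[str, tuple[str, str]]:
    """Pair with the highest mean score in each domain (equal index weights assumed)."""
    return {
        domain: max(candidates, key=lambda pair: mean(candidates[pair]))
        for domain, candidates in pairs.items()
    }


print(best_pair_per_domain(PAIRS))
# {'short-term rental': ('CRD Art. 5', 'Clause X'), 'ride hailing': ('CRD Art. 5', 'Clause Q')}
```

Unequal weights, or a rule that a pair must be Optimal on every index before it is ranked at all, would be equally defensible design choices.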

In conclusion, from the linked-data HOD ontology we should be able to select the texts that fail the least under a comprehensive approach. These are linked texts, made of the optimal rules (disclosure duties) linked to their optimal implementations (policies), whose terms are clarified through the case law and which score optimal on each and every failure index.

HOD are self-executing algorithmic disclosures whose specifications can be used by the industry to implement their content directly. This, however, raises a plethora of legal and economic questions regarding their efficacy, legitimacy and proportionality.

3.1.4 Limitations of HOD: legitimacy and efficacy

HOD are selected as the optimal available algorithmic disclosures, but they remain prone to failure. We do not know how effective they might be in bringing about behavioral change, or how well they could inform real consumers and enable them to make sensible choices (for a skeptical take: Zamir and Teichman 2018), given their diverse preferences (Fung et al. 2007). We have no evidence of whether the optimal disclosure text for a given clause will perform well. For instance, imagine we are ranking disclosures in the short-term online rental sector, and that the HOD regarding information provision on the service ranking indicates that the optimal pair is “CRD Art. 5” – “AirBnB Terms, Clause X”: what do we know about its efficacy? The HOD cannot tell.

Moreover, since the comprehensiveness of the proposed approach implies that HOD might complement or even partially substitute for tasks that would normally be performed, or at least supervised, by democratically elected representatives, legitimacy concerns arise. In our example, once the optimal pair has been identified through the HOD, the idea is that “CRD Art. 5” – “AirBnB Terms, Clause X” would be automatically implementable. However, that would be problematic in terms of legitimacy.

Lastly, HOD may lack proportionality, since they are addressed to undiversified, homogeneous consumers (the average ones), on the assumption of homogeneous reading, understanding, evaluation, and acting capabilities (Di Porto and Maggiolino 2019; Casey and Niblett 2019). However, the same disclosure may well be excessively burdensome for less cultivated consumers while being effective for well-informed, highly literate ones.

In the following, we explore these three issues separately.

-

1.

Untested efficacy of HOD

Although they are hypothetically optimal inter-linked texts, constantly updated with new rules, industry policies, and case-law, easily accessible and simplified, not so costly to read and understand, the overall efficacy of HOD remains untested.

On one side stand the enthusiasts, like Bartlett et al., who claim that algorithmic text-analysis tools which summarize contract terms and display them in graphic charts ‘greatly economize[s] on [consumers’] ability to parse contracts’ (Bartlett et al. 2019). However, they do not provide proof that this is really so (beyond the empirical evidence underlying their own paper). Paradoxically, the same holds for those who dispute the validity of simplification strategies and behavioral information nudging, like Ben-Shahar (2016), who finds that ‘simplification techniques…have little or no effect on respondents’ comprehension of the disclosure.’ But again, this conclusion refers only to the ‘best-practice they surveyed’.

-

2.

Legitimacy deficit of HOD

HOD suffer from a deficit of legitimacy. Because an algorithm is not democratically elected, nor is it a representative of the people, it cannot sic et simpliciter be delegated rulemaking power (Citron 2008, at 1297).

While in the not-so-distant future disclosure rules may well become fully algorithmic (produced through our HOD machine), a completely different question is whether the disclosures we have selected as hypothetically optimal might also become ‘self-applicable’, in other words, whether their adoption can become a single step, without any need for implementation. This is certainly one of the objectives of HOD: by selecting the optimal rules together with their optimal implementations and linking them in an ontology, we aim to obtain self-implementing disclosure duties.

Hence, it is necessary to rethink implementation as a technical process, strictly linked (not merged) with the disclosure enactment phase. Above all, we need to ensure some degree of transparency in how the HOD algorithm functions, and the participation of the parties involved in producing algorithmic disclosures.

Self-implementation of algorithmic rules is one of the least studied but probably most relevant issues for the future. A lot has been written on the need to ensure accountability of AI-led decisions and due process in algorithmic rulemaking and adjudication (Crawford and Schultz 2014; Citron 2008; Casey and Niblett 2019; Coglianese and Lehr 2016). However, while some literature exists on the transparency and explicability of automated decision-making and profiling for the sake of compliance with privacy rules (Koene et al. 2019), the question of due process in algorithmic disclosure rulemaking has been substantially neglected.

One problem, however, is that the potential addressees of self-applicable algorithmic disclosure rules may not receive sufficient notice of the intended action. That might reduce their ability to become aware of the reasons for the action (Crawford and Schultz at 23), to respond, and hence to defend their own rights.Footnote 44 Comments and hearings, too, are hardly compatible with the algorithmic production of disclosures, even though they would be especially relevant: they would allow all the conflicting interests at stake to emerge and would leave a record for judicial review. The same goes for expert opinions, which are often an essential part of hearings: technicians may discuss the code, how it works, which algorithm is best to design, how to avoid errors, and suggest improvements.

In the US system, it is believed that hearings would hardly be granted in the wake of automated decisions, because they would involve direct access to ‘a program’s access code’ or ‘the logic of a computer program’s decision’, something that would be found far too expensive under the so-called Mathews balancing test (Crawford and Schultz at 123; Citron at 1284).

In Europe too, firms would most probably refuse to collaborate in notice-and-comment rulemaking if they were the sole owners of the algorithm used to produce disclosures, since doing so might require them to disclose their source code, which qualifies as a trade secret (and is thus exempt from disclosure).

Moreover, as (pessimistically) noted by Devins et al., the chances for an algorithm to produce rules are nullified, because ‘Without human intervention, Big Data cannot update its “frame” to account for novelty, and thus cannot account for the creatively evolving nature of law.’ (at 388).

Clearly, all the obstacles described and the few proposals advanced thus far signal that finding a way to make due process compatible with the algorithmic production of disclosure rules is urgent.

-

3.

Lack of proportionality of HOD

Although it is undeniable that general undiversified disclosures may accommodate heterogeneous preferences of consumers (Sibony and Helleringer 2015), in practice, they may put too heavy a burden on the most vulnerable or less cultivated ones, while not generating outweighing benefits for other recipients or the society. In this sense, they may become disproportionate (Di Porto and Maggiolino 2019).

On the other hand, targeting disclosure rules at the individual level (i.e. personalizing them) (Casey and Niblett), as suggested by Busch (2019), may be equally disproportionate (Devins et al. 2017), as it can generate costs for individuals and society. For instance, if messages are personalized, individuals would not be able to compare information and therefore make meaningful choices on the market (Di Porto and Maggiolino 2019). That, in turn, would endanger policies aimed at fostering competition among products, which rely on consumers’ ability to compare information about their qualities.Footnote 45 Moreover, individual-level targeting necessarily requires obtaining individual consent to process personal data (in order to produce personalized messages) and to show each person their ‘own’ fittest disclosure.Footnote 46

3.2 Phase Two: Integrating behavioral data into HOD: getting to the best ever disclosures (BED)

3.2.1 Experimental sandboxes to pre-test HOD

One possible way to address the three concerns identified above (legitimacy, understood as transparency and participation; proportionality; and efficacy of HOD disclosures) would be to integrate real-time behavioral data into the HOD algorithm and have it produce targeted, yet dynamic (i.e. fed by real-time data), self-implementable disclosures.

To achieve this, we suggest exploiting the potential of ‘regulatory sandboxes’. In the following, we explain how this tool could be used to pre-test HOD algorithmic disclosures. Such experiments serve a triple function: ensuring the legitimacy of algorithmic rulemaking by allowing participation and transparency; producing targeted disclosures whose efficacy can be tested; and ensuring proportionality through clustering.

-

1.

Regulatory sandboxes

Regulatory sandboxes are not new (Tsang 2019; Mattli 2018; Picht 2018). They are used in the Fintech industry, where new rules are tried out in controlled environments (thanks to simulations run over big data) before being implemented at large scale.Footnote 47 For instance, the UK’s Financial Conduct Authority adopted a regulatory sandbox approach allowing firms ‘to test innovative propositions in the market, with real consumers’.Footnote 48 Regulatory sandboxes can be conceptualized as venues for experimenting with co-regulation, in the sense that they foster collaboration between the regulator (which takes the lead) and stakeholders to explore new avenues for rule production (Yang and Li 2018). Given their increasing relevance, they are being regulated by the forthcoming EU Regulation on Artificial Intelligence (Article 45 ff.).

We argue for a regulatory sandbox model where, under the auspices of the regulator, stakeholders come together to pre-test the HOD algorithm to develop self-implementable targeted disclosure rules for consumers.

-

2.

Pre-testing HOD to meet legitimacy claims

The main takeaway from the above discussion on whether an algorithm can legitimately produce disclosure rules is that human involvement cannot be dispensed with. It follows that simply suppressing transparency and participation guarantees (for humans) in algorithmic rulemaking is not admissible.

Using regulatory sandboxes might remedy legitimacy concerns, as stakeholders would actually participate in, and contribute to, the regulatory process. Data on this participation (i.e. reactions, comments, etc.) are tracked and fed into the algorithm. In the sandbox, the regulator sets up an agile group (of consumers, digital firms, legal experts, data scientists) for the ex-ante testing of HOD algorithmic disclosures in the course of a co-regulatory process. As real individuals interact with each other in the sandbox and their true responses to legal notices are recorded and fed into the algorithm, this process may constitute a good substitute for notice and comment.

-

3.

Pre-testing HOD for targeting and gathering evidence of efficacy

Another reason why HOD disclosures need to be tested with real people in the sandbox is to check whether they actually change the behavior of addressees in the real world, e.g. whether optimal acceptance of cookies by those adversely affected increases.

However, as noted, to overcome what we consider the main limitation of current scholarship on disclosure, we believe that experiments should not be occasional but conducted on a ‘real-time basis’ and repeated. The sandbox mode is a proxy for real-time evidence of recipients’ actual reactions to the disclosures; the latter will be gathered later, when algorithmic disclosures are implemented on the market (see below, Sect. 3.2.3). Nonetheless, the sandbox mode would still greatly increase our understanding of what does not work and, most importantly, would provide behavioral data that can be reused in the HOD algorithm to target messages.

Elsewhere we have argued that ‘targeted disclosure’ increases effectiveness, as it allows different groups of consumers showing homogeneous understanding capabilities and preferences to be addressed with different messages. For instance, we might expect consumers participating in the sandbox testing to react differently to the HOD-produced privacy disclosure and to show different click-through attitudes. This might depend on their literacy, time availability, framing, and other biases. Exposing them to differentiated layouts instead of just one might increase their ability to overcome click-through.

But this needs to be tested, and consumers’ reactions need to be traced by the algorithm. Put differently: only to the extent that the targeting of privacy disclosure layouts also becomes optimal, meaning that it helps most consumers in a cluster overcome click-through, can HOD become truly optimal, or ‘Best’.

-

4.

Clustering to meet proportionality claims

Thus, targeting disclosures at ‘clusters’ is preferable. However, clustering is not an easy task, since clusters should consist of individuals with similar preferences (e.g. all those who prefer detailed, long boilerplate fine-print terms vs those who prefer concise warning messages). And humans are nuanced. A criterion for forming clusters should be set, which can be either descriptive (what consumers in that group typically want to know) or normative (what they ought to know). Either way, it should reflect sufficiently homogeneous cognitive capabilities and preferences to reduce information overload and increase disclosure utility (Ben-Shahar and Porat 2021).

If data gathered in the sandbox show that a large group of consumers is especially exposed to the risk of late payment, that group could constitute a cluster (and a disclosure rule highlighting the consequences of payment delay, instead of a standardized, all-inclusive warning list, could be tested).

Only if testing sessions are repeated can enough evidence of individual reactions be gathered to allow for clustering. As is well known, the more data are gathered on the reactions and interactions of individuals, the easier it is for the algorithm to identify clusters, based on its predictive capabilities.
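To make the clustering step concrete, the sketch below runs plain k-means (one common choice; the text above does not commit to a specific algorithm) on two hypothetical behavioral features per sandbox participant: seconds spent on a disclosure and the share of its text scrolled. In practice the features would be standardized first (here the seconds dominate the distance) and the number of clusters chosen by a validation criterion; both steps are omitted for brevity.

```python
import math
import random


def kmeans(points: list[tuple[float, ...]], k: int, iterations: int = 100, seed: int = 0):
    """Plain k-means; returns (cluster label per point, final centroids)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initial centroids: k randomly drawn participants

    def nearest(p):
        return min(range(k), key=lambda c: math.dist(p, centroids[c]))

    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p)].append(p)
        # New centroid = coordinate-wise mean; keep the old one if a group is empty.
        updated = [
            tuple(sum(coords) / len(g) for coords in zip(*g)) if g else centroids[c]
            for c, g in enumerate(groups)
        ]
        if updated == centroids:  # assignments can no longer change
            break
        centroids = updated
    return [nearest(p) for p in points], centroids


# Hypothetical sandbox data per participant: (seconds spent on the notice, share of text scrolled)
participants = [(5, 0.10), (8, 0.15), (6, 0.05), (120, 0.90), (150, 0.95), (100, 0.85)]
labels, centres = kmeans(participants, k=2)
print(labels)  # quick readers share one label, thorough readers the other
```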

Diversifying rules by cluster allows rulemakers to strike a balance between exploiting the predictive capabilities of algorithms and designing disclosure regulation that complies with the proportionality principle. In Ben-Shahar and Porat’s words, a mandated disclosure regime that grants different people different warnings to account for the different risks they face gives all people better protection against uninformed and misguided choices than uniform disclosures do (at 156).

Clustering also allows targeted disclosure to respect privacy and data protection rights while preserving innovation and market dynamics (Di Porto and Maggiolino at 21). And even where personalized rules are permissible under a particular jurisdiction's privacy law, the state may economize by identifying clusters of people who share sufficiently similar characteristics and drafting one disclosure rule for them instead of a separate rule for each of them.

3.2.2 Getting to best ever disclosures (BED) through regulatory sandboxes

-

1.

Governance Design Issues

It is up to the rulemaker to set up a regulatory sandbox, bringing together experimental groups that would include final consumers, individuals representing digital firms (including platforms and SMEs,Footnote 49 which will of course vary depending on the topic of the algorithmic disclosures), and technical experts. Special attention should be paid to the equal representation of stakeholders in each sector-specific sandbox.Footnote 50 As noted, the group's goal is to train the selected algorithm (the HOD) to design different layouts of the best disclosures (Di Porto 2018).

Repeated sessions of testing and feedback would lead, with the agreement of all participants, to the final sets of targeted disclosures. These would then become, very emphatically, the Best Ever Disclosures, or BEDs, to be deployed at large scale (see below, Sect. 3.2.3).

Indeed, insofar as algorithmic HOD are fed with behavioral data on the reactions of real people (in anonymized and clustered form), they could become differentiated, targeted, and timely, thus meeting the different informational needs of recipients (Di Porto 2018 at 509; Busch 2019 at 312). For instance, to tackle the problem of online click-through contracts (people do not read standard form contracts before agreeing to them), one could provide different layouts to different groups of consumers, depending on their reading preferences. These layouts would target clusters of similar consumers and would be derived only from behavioral data generated in the sandbox as a separate, isolated environment, not from every individual's data.

More concretely, each disclosure in the HOD algorithm would target a group of individuals showing similar characteristics. For instance, depending on their capabilities, three groups may be identified:

i. the modest (to whom a super-simplified format may be preferable),

ii. the sophisticated (to be targeted through extensive disclosures), and

iii. the intermediate (a mix of the previous ones).

Testing may prove successful if exposure to the three layouts results in increased reading, understanding and, especially, meaningful choice (e.g. participants start refusing third-party tracking cookies).
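Whether exposure to a targeted layout leads to more meaningful choice could be checked per cluster by comparing, for example, the share of participants who refuse third-party tracking cookies under the targeted layout and under a uniform baseline. The sketch uses a standard two-proportion z-test; the choice of test, the 5% threshold and the counts are all assumptions for illustration.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """z statistic and two-sided p-value for H0: both groups share the same rate."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # all or none refused in both groups: no evidence either way
        return 0.0, 1.0
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical 'modest' cluster: cookie refusals out of participants per layout
z, p = two_proportion_z_test(successes_a=60, n_a=200,   # super-simplified layout
                             successes_b=20, n_b=200)   # uniform baseline layout
print(round(z, 2), p < 0.05)  # 5.0 True
```

Repeating the comparison for each cluster and layout raises multiple-testing issues, so a correction (or a Bayesian alternative) would be advisable in a real sandbox.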

Every choice participants make will be tracked during the test, and these data will then feed the algorithm (on the technicalities of such feeding, see below), providing it with information on how to produce the best disclosures, meaning those least likely to fail to be read, understood, and acted upon. At each session, new data will be recorded on how groups of individuals (firms, the regulator, and consumers) react to the information provided. Choices made by firms regarding disclosure clauses should also be tracked and fed into the algorithm.

For instance, under the new Arts. 6 and 8 of the Consumer Rights Directive, pre-contractual information regarding the right of withdrawal from distance contracts must be provided to consumers.Footnote 51 In particular, Art. 8 deals with the information provided on ‘mobile devices’, stating that notice of the right of withdrawal should be:

‘provided by the trader to the consumer in an appropriate way’.

In a regulatory sandbox, various messages conveying such information would be tested with consumers and digital firms, both of whom would respond to the different layouts. All such reactions would be coded and the feedback recorded.

Testing is also useful for rapidly amending the algorithmic disclosures, both their texts and their graphic layouts (i.e. where the information is located on a mobile phone screen, or when it is displayed on a mobile device), should the expected consumer reactions not occur.Footnote 52

To further this, the regulator should enjoy real-time monitoring powers. Indeed, the pre-testing phase also makes it possible to detect with some precision the informational needs and comprehension capabilities of users. In this sense, algorithmic disclosures would produce useful information by dynamically adapting their content and format to what the clustered recipients need, when they need it.
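Real-time monitoring that triggers a rapid amendment could rely on a simple sliding-window rule. The following is a minimal sketch, assuming (hypothetically) that each reaction to a given layout is reduced to whether a meaningful choice occurred, and that the regulator fixes a window size, a minimum-evidence count, and a threshold; the class name and the default values are illustrative, not proposed figures.

```python
from collections import deque


class LayoutMonitor:
    """Hypothetical real-time monitor for one disclosure layout.

    Keeps the last `window` reactions and flags the layout for amendment
    when the share of meaningful choices falls below `threshold`."""

    def __init__(self, window=100, threshold=0.2, min_observations=20):
        self.recent = deque(maxlen=window)  # oldest reactions drop out automatically
        self.threshold = threshold
        self.min_observations = min_observations

    def record(self, meaningful_choice: bool) -> None:
        self.recent.append(meaningful_choice)

    def rate(self) -> float:
        """Share of meaningful choices in the current window (0.0 if empty)."""
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def needs_amendment(self) -> bool:
        # do not flag a layout on too little evidence
        if len(self.recent) < self.min_observations:
            return False
        return self.rate() < self.threshold
```

The sliding window lets the assessment reflect current behavior rather than the whole history, which matches the idea of adapting disclosures to what recipients need when they need it; the minimum-evidence guard avoids amending a layout after only a handful of reactions.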

2. Technical issues: using a knowledge graph and an ontology

Diverse computational techniques could be used to develop algorithmic disclosures.

As with the HOD, to arrive at the BED we suggest using a knowledge graph: in this way, the textual libraries from the HOD can be enriched with behavioral data coming from the sandbox (hence, we start the process with three libraries).Footnote 53 In the knowledge graph, textual and behavioral data will be integrated by means of user experience. By way of parallel, this operation resembles (though it differs from) the way the Google search engine operates (through domains and supra-domains). When Google users are shown a picture and asked to ‘confirm’ that what they see is X and not Y, by clicking ‘I confirm’ they reinforce a node of the graph. Similarly, human stakeholders in the sandbox provide behavioral data that confirm the layout and text of a proposed clause, thus reinforcing nodes and gradually strengthening the links in our BED knowledge graph.

For instance, for each group of consumers (modest, intermediate, sophisticate), the stakeholders will have to confirm the layout of a privacy disclosure clause. Each group's confirmation data will feed the knowledge graph. If participants are shown different layouts of cookie banners, their confirmations will reveal which one performed best at helping them avoid click-through (Table 4).

Behavioral data from the regulatory sandbox also serve to confirm or contradict the links, and the reality, described by the graph. As noted, human presence is essential to monitor whether errors occur in building the knowledge graph: technicians supervising the sandbox may intervene to deactivate any error that could affect the algorithm (Yang and Li 2018, at 3267). This explains why technicians, besides regulators, firms, and consumers, need to participate in the sandbox.
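The reinforcement-and-supervision mechanism described in the last three paragraphs can be sketched with a toy weighted structure. This is an illustration under assumptions, not a full knowledge-graph implementation: each link connects a consumer group, a clause, and a layout; every stakeholder confirmation increments that link's weight; supervising technicians can deactivate a link judged erroneous; and the preferred layout for a group is the most-confirmed active one. The class and method names are hypothetical.

```python
from collections import defaultdict


class ConfirmationGraph:
    """Toy weighted links (group, clause, layout) reinforced by confirmations."""

    def __init__(self):
        # (group, clause, layout) -> number of stakeholder confirmations
        self.weights = defaultdict(int)
        # links switched off by supervising technicians
        self.deactivated = set()

    def confirm(self, group, clause, layout):
        """A stakeholder 'confirms' a layout: reinforce that link."""
        self.weights[(group, clause, layout)] += 1

    def deactivate(self, group, clause, layout):
        """A technician disables a link found to be erroneous."""
        self.deactivated.add((group, clause, layout))

    def best_layout(self, group, clause):
        """Most-confirmed active layout for this group and clause, or None.

        Ties are broken by layout name (an arbitrary choice)."""
        candidates = [
            (weight, layout)
            for (g, c, layout), weight in self.weights.items()
            if g == group and c == clause and (g, c, layout) not in self.deactivated
        ]
        return max(candidates)[1] if candidates else None
```

For the cookie-banner example, two confirmations of a hypothetical "layered" banner by the modest group and one of a "single" banner would make "layered" the preferred layout for that group; if a technician then deactivated that link, "single" would take its place. Counting confirmations is the simplest possible reinforcement rule; weighted or decaying scores are equally plausible choices.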

Technically speaking, for the knowledge graph to be implemented, we need to connect all the data: the linked texts of the HOD and the behavioral data coming from the sandbox. All of these data shall be stored in the knowledge graph.

To that end, we should use an ontology. The ontology links all the pieces to the concepts of the domain, supra-domain, and vertical domain. For instance, imagine we aim to link the term ‘fintech’ (domain) to a normative goal (supra-domain) and then to a sector-specific term, such as ‘transparency in financial fintech’ (vertical domain).
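The three-level linkage in the ‘fintech’ example can be pictured as a handful of subject–predicate–object triples. The sketch below is a minimal illustration under assumptions: the text does not name the normative goal, so "transparency" is assumed here (it is suggested by the vertical-domain term), and the predicate names `pursues_goal`, `specialised_as`, and `level` are hypothetical. A production system would more likely use a standard representation such as RDF/OWL; plain Python tuples keep the example self-contained.

```python
# Hypothetical ontology triples (subject, predicate, object).
# 'transparency' as the normative goal is an assumption for illustration.
TRIPLES = {
    ("fintech", "pursues_goal", "transparency"),                              # domain -> supra-domain
    ("transparency", "specialised_as", "transparency in financial fintech"),  # supra-domain -> vertical domain
    ("fintech", "level", "domain"),
    ("transparency", "level", "supra-domain"),
    ("transparency in financial fintech", "level", "vertical domain"),
}


def objects(subject, predicate, triples=TRIPLES):
    """All objects linked to `subject` through `predicate`, sorted."""
    return sorted(o for s, p, o in triples if s == subject and p == predicate)


def vertical_terms(domain_term, triples=TRIPLES):
    """Follow domain -> normative goal -> sector-specific (vertical) term."""
    return [
        vertical
        for goal in objects(domain_term, "pursues_goal", triples)
        for vertical in objects(goal, "specialised_as", triples)
    ]
```

Here `vertical_terms("fintech")` returns `["transparency in financial fintech"]`, while a term with no recorded goal, such as "privacy", returns an empty list until the corresponding triples are added.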

Because rules mostly do not speak in such detail, we need a meta-level to provide further instructions (Benjamins 2005, at 39). For instance, rules in the financial domain very often do not require retailers of financial products to disclose the full, detailed composition of their products, but instead require general transparency. We would therefore need a meta-level through which to instruct the algorithm as follows: ‘When using the word “rules”, link it to the concept “transparency”, then link it to “disclaimer”.’
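The meta-level instruction quoted above can be sketched as a small table of "link this term to that concept" directives that the algorithm follows in sequence. This is a hypothetical illustration: the table `META_LINKS`, the helper names, and the naive word-splitting are assumptions. The cycle guard prevents circular instructions from producing an endless chain.

```python
# Hypothetical meta-level: each entry says which concept a term links to next,
# encoding "link 'rules' to 'transparency', then link it to 'disclaimer'".
META_LINKS = {
    "rules": "transparency",
    "transparency": "disclaimer",
}


def concept_chain(term, meta_links=META_LINKS, max_depth=10):
    """Expand a term into the chain of concepts prescribed by the meta-level."""
    chain = [term]
    seen = {term}
    while chain[-1] in meta_links and len(chain) <= max_depth:
        nxt = meta_links[chain[-1]]
        if nxt in seen:  # guard against circular instructions
            break
        chain.append(nxt)
        seen.add(nxt)
    return chain


def annotate(text, meta_links=META_LINKS):
    """Attach concept chains to each word of `text` covered by the meta-level."""
    words = [w.strip(".,;:").lower() for w in text.split()]
    return {w: concept_chain(w, meta_links)[1:] for w in words if w in meta_links}
```

With this table, `concept_chain("rules")` yields `["rules", "transparency", "disclaimer"]`, and annotating a sentence such as "These rules apply." attaches the concepts "transparency" and "disclaimer" to the word "rules".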

To sum up, the knowledge graph technology is used to refine the BED by performing the following tasks:

(i) storing the linked texts prepared in the HOD;

(ii) annotating them (through an ontology); and

(iii) building a grid of theoretical-legal concepts specific to a subject, a goal, and a sector, such as privacy.

In conceptualizing the sandbox, we should elaborate on the concepts typical of a specific sector (such as privacy or online consumer contracts). To do so, we need to express the relationships among these concepts in natural language within the sandbox, so that human participants can either confirm or reject them. On this basis, the relationships will be presented to final consumers and firms (i.e. the stakeholders), who, by stating that they are ‘satisfied’ (or by ‘confirming’ the clauses/layouts), will feed data into the sandbox.

This process should be repeated in several formats and over several sessions, until all participants are mostly satisfied and least dissatisfied. It should be repeated for the clauses of each disclosure and for each of the three (or n) layouts targeting the consumer clusters. In this way, we arrive at the BED that can be implemented at large scale.
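The repeated-session procedure can be expressed as a loop with an explicit stopping rule. The sketch below rests on several assumptions not found in the text: participants rate each candidate layout as satisfied, neutral, or dissatisfied; "mostly satisfied and least dissatisfied" is operationalized as a satisfaction share of at least 70% and a dissatisfaction share of at most 10% (illustrative thresholds); and after an unsuccessful session the least satisfying layout is dropped. The `collect_votes` callback stands in for a real sandbox session and is hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# layout -> (satisfied, neutral, dissatisfied) counts for one session
Votes = Dict[str, Tuple[int, int, int]]


def run_sessions(
    layouts: List[str],
    collect_votes: Callable[[int, List[str]], Votes],
    min_satisfied: float = 0.7,
    max_dissatisfied: float = 0.1,
    max_sessions: int = 10,
) -> Optional[Tuple[str, int]]:
    """Repeat sessions until one layout meets both thresholds.

    Returns (layout, session_number), or None if no layout qualifies
    within `max_sessions`."""
    candidates = list(layouts)
    for session in range(1, max_sessions + 1):
        votes = collect_votes(session, candidates)
        shares = {}
        for name in candidates:
            sat, neutral, dis = votes[name]
            total = sat + neutral + dis
            shares[name] = (sat / total, dis / total) if total else (0.0, 1.0)
        # best: highest satisfaction, then lowest dissatisfaction
        best = max(candidates, key=lambda n: (shares[n][0], -shares[n][1]))
        if shares[best][0] >= min_satisfied and shares[best][1] <= max_dissatisfied:
            return best, session
        # simplifying assumption: drop the least satisfying layout and retry
        if len(candidates) > 1:
            candidates.remove(min(candidates, key=lambda n: shares[n][0]))
    return None
```

In practice, layouts would more likely be revised between sessions than simply dropped; elimination is used here only to keep the loop short and deterministic.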

3.2.3 Implementing BED at large scale

1. Automatic implementation of BED at large scale

After sandbox testing, disclosures should be available that are targeted at different groups and self-implementable at large scale: these are the BED. The expected outputs are that the BED algorithm can produce different rules conveying different messages to each group of consumers (a); on the industry side, BED's specifications will be used for implementation (b); and firms' trade secrets will thus remain safe (c).

(a) Allocation of consumers to the various clusters

Once the BED algorithm producing automatic disclosure rules is launched on the market (i.e. implemented at large scale), users are first allocated to the default, intermediate group. However, they remain free to switch from one group to another by choosing their preferred disclosure option.
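The allocation rule (default intermediate group, free switching) is simple enough to sketch directly. In this hypothetical example, the class name, the per-group disclosure texts passed to `disclosure`, and the switch log format are all illustrative assumptions; the log records exactly the kind of layout switches that the next paragraph suggests could feed back into the BED algorithm after pseudonymization.

```python
from typing import Dict, List, Tuple

GROUPS = ("modest", "intermediate", "sophisticate")
DEFAULT_GROUP = "intermediate"


class GroupAllocator:
    """Hypothetical sketch: new users start in the default intermediate group
    and may switch freely; every switch is logged."""

    def __init__(self):
        self.group_of: Dict[str, str] = {}
        self.switch_log: List[Tuple[str, str, str]] = []  # (user, from_group, to_group)

    def group(self, user: str) -> str:
        """Current group of `user`, allocating the default on first contact."""
        return self.group_of.setdefault(user, DEFAULT_GROUP)

    def switch(self, user: str, new_group: str) -> None:
        """Move `user` to the disclosure option they prefer."""
        if new_group not in GROUPS:
            raise ValueError(f"unknown group: {new_group}")
        old = self.group(user)
        if old != new_group:
            self.group_of[user] = new_group
            self.switch_log.append((user, old, new_group))

    def disclosure(self, user: str, texts: Dict[str, str]) -> str:
        """Pick the disclosure text targeted at the user's current group."""
        return texts[self.group(user)]
```

For example, a user who has never switched receives the intermediate text; after `switch("u1", "modest")`, the same user receives the modest text and the log contains one entry recording the move from "intermediate" to "modest".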

Interactions with the algorithm will produce more data, which will be tracked and will help refine it further. Consumers' choices among the three (or n) rule layouts, and their switches among them, may, after due pseudonymization, feed back into the BED algorithm and improve it. By contrast, the individual choices consumers make as a result of the BED (hence, its effects at large scale) could not be recorded or further analyzed, owing to privacy constraints,Footnote 54 unless a law expressly authorizes it.Footnote 55
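The "due pseudonymization" step could, for instance, replace user identifiers with a keyed hash before switch records are fed back. The sketch below uses HMAC-SHA256 from Python's standard library. That this is the technique to be used is an assumption, as are the record layout `(user, from_group, to_group)` and the idea that the key is held by the regulator rather than the algorithm provider. Note that under the GDPR pseudonymized data remain personal data, so this step does not by itself lift the privacy constraints mentioned above.

```python
import hashlib
import hmac
from typing import Iterable, List, Tuple


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Keyed hash (HMAC-SHA256) of a user id, as 64 hex characters.

    Without the key, the mapping cannot be recomputed from the id."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_feedback(
    switches: Iterable[Tuple[str, str, str]], secret_key: bytes
) -> List[Tuple[str, str, str]]:
    """Replace user ids in (user, from_group, to_group) records before they
    are fed back into the (hypothetical) BED training pipeline."""
    return [(pseudonymize(user, secret_key), old, new) for user, old, new in switches]
```

A keyed hash, unlike a plain hash, prevents anyone without the key from re-identifying users simply by hashing candidate identifiers, while the same user still maps to the same pseudonym across sessions, so repeated switches can be analyzed together.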

(b) BED's specifications in lieu of industry-led implementation

BED algorithmic disclosures are automatically implementable. However, for BED disclosures to be launched on the market, the industry must make the technical effort to implement their specifications, which are made publicly available. Since these specifications are sector-specific and have been thoroughly discussed among stakeholders in the sandbox, the industry will save considerable time and cost in producing disclosures.

Making the specifications open to individuals and firms is also a means of allowing the regulator to monitor the efficacy of algorithmic disclosure. Furthermore, it ensures accountability for both the disclosed information and the algorithmic decision.

(c) Without disclosing any trade secrets

Despite a broad consensus on the increased need for transparency when algorithmic decisions are involved, ‘it is far from obvious what form such transparency should take’ (Yang and Li 2018, at 3266). While the most straightforward response to this heightened transparency requirement would probably be the disclosure of the source code used by firms, this approach is not feasible, as such code is unintelligible to most lay persons and closely guarded.

Under a ‘regulatory sandbox’ solution, however, platforms would have no need to disclose any of their own algorithms (which could easily remain secret) to other stakeholders participating in the trials.Footnote 56 This is because the kinds of algorithms used to arrive at the BED are publicly available.Footnote 57 Consumers, platforms, and SMEs contribute their behavioral data to feed the BED algorithm: for instance, in the case of disclosures of standard form contracts, the experimental sandbox phase would consist of stakeholders testing different formats of ToCs. They would thus be able to improve their disclosures without having to publicize any of their algorithms or similarly sensitive information.

2. Post-implementation modification of the BED

Amendments to algorithmic disclosures could be made in the regulatory sandbox and then implemented at large scale in an automated way. This is yet another step, distinct from the creation of the HOD algorithm, from its testing with behavioral data to become the BED, and from the latter's implementation at large scale. Suppose we already have a BED algorithm on the market that produces targeted disclosures for short-term rental service terms. Imagine that a new EU Regulation is adopted (e.g. Art. 12(1) of the Digital Services Act)Footnote 58 amending the CRD and requiring digital providers to inform users about potential “restrictions”Footnote 59 on their services contained in the terms and conditions, in an “easily accessible format” and in “clear and unambiguous” language. Such a new piece of legislation would require refining the BED, which we suggest doing in the sandbox rather than restarting the whole process from scratch.

In this way, all modifications to BED algorithmic disclosures that participants in the sandbox accept (and the regulator certifies) could become directly implementable by digital firms at large scale, given that they have been ‘pre-tested’ in the sandbox. This would comply with the best practice identified in the aforementioned WP29 Guidelines, and would allow such modifications to feed back into the BED algorithm to improve it and, consequently, the disclosures.

Technically speaking, BED algorithmic disclosures are updated continuously depending on three factors: (i) changes in the law, regulation, or case law (in which case the text libraries and the nodes in the HOD ontology are updated); (ii) changes from the sandbox (i.e. updates to the behavioral library and, consequently, to the links to the texts through validation/confirmation in the BED); and (iii) changes from real-world behavior after large-scale implementation.
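The three update triggers can be sketched as a small dispatch table mapping each source of change to the BED components it touches. This is a hypothetical illustration: the component names are invented labels for the libraries and links mentioned in the text, and the text does not say which component real-world behavior updates, so routing it to the behavioral library is an assumption. Each update returns a new, versioned state rather than modifying the old one, so earlier versions remain available for audit.

```python
from enum import Enum, auto


class ChangeSource(Enum):
    LAW = auto()         # new legislation, regulation or case law
    SANDBOX = auto()     # new validated behavioral evidence from the sandbox
    REAL_WORLD = auto()  # behavior observed after large-scale implementation


# Hypothetical component names; REAL_WORLD -> behavioral library is an assumption.
AFFECTED = {
    ChangeSource.LAW: ("text_library", "hod_ontology_nodes"),
    ChangeSource.SANDBOX: ("behavioral_library", "text_behavior_links"),
    ChangeSource.REAL_WORLD: ("behavioral_library",),
}


def apply_change(state, source, payload):
    """Return a new state with `payload` appended to every component that
    `source` affects and the version counter incremented.

    The input state is left unchanged (lists are shallow-copied)."""
    new_state = {k: list(v) if isinstance(v, list) else v for k, v in state.items()}
    for component in AFFECTED[source]:
        new_state.setdefault(component, []).append(payload)
    new_state["version"] = state.get("version", 0) + 1
    return new_state
```

For the Digital Services Act example above, `apply_change({"version": 0}, ChangeSource.LAW, "DSA Art. 12(1)")` would add the new provision to the text library and the ontology nodes and produce version 1, leaving the behavioral components untouched until sandbox sessions supply new evidence.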

Going a step further, one could also envisage using sandboxes to “suggest” and “approve” rule modifications. This would be another innovative avenue: all proposed modifications could be discussed, tested, and approved directly in the regulatory sandbox. That would clearly substitute for the usual democratic process. For instance, the regulator might propose, and stakeholders in the sandbox agree, to change the wording of a rule: they might agree to modify Art. 6a(1)(a) CRD so that information on the ranking parameters of search queries is made available

‘by means of n icons on the x-side of the presented offer’,

instead of

‘in a specific section of the online interface that is directly and easily accessible from the page where the offers are presented.’

as it currently is.

Once the change is validated in the sandbox, the BED library is modified accordingly and can thus be directly implemented.

From a legal perspective, post-implementation modifications would not only be self-implementable but could also be given special effect: for instance, because they have been pre-tested and validated in the sandbox, they could produce direct effect (or be enforceable) among the parties, or in some instances provide safe harbors. For example, an amendment to the disclosure of a certain service's Terms of Contract in a given sector, agreed upon in the sandbox and implemented in the algorithmic disclosure, could become immediately effective, and some of its clauses might be shielded from liability.

3.2.4 Discussion of BED

1. Choice of algorithm provider

One possible limitation of our BED solution concerns the selection of the algorithm provider. It seems problematic to have private parties provide the algorithm for rulemaking purposes since, as argued by Casey and Niblett, they would inevitably reflect their own interests in defining the objectives to be pursued (Casey and Niblett, at 357). Moreover, private parties may have strong economic incentives not to disclose information about how their rulemaking algorithm was created or why certain results were generated. For instance, they might want to ‘heighten barriers to competition, or favor one side because of repeat-player issues’.Footnote 60

One way to prevent rent-seeking and rule-rigging by firm stakeholders could be for the state to open the provision of the BED to competition, similar to the auctions held for the provision of public goods (Levmore and Fagan 2021). Alternatively, the state could consider subjecting providers to some type of approval process, similar to safety certification, although this might prove costly.

2. Liability of digital operators

Why would digital firms want to participate in the BED instead of producing their own disclosures? After all, their superior technical skills, knowledge, and data about consumers would allow them to stay away from the BED.

In addition, anything that happens in the sandbox implies some disclosure of trade strategies to the regulator, competitors, and consumers. Information is an asset, and even within the narrow margins left by disclosure duties, firms might not want to share how they convey it to their clients.