Abstract

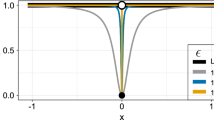

Variable selection problems are typically addressed under the regularization framework. In this paper, we propose an exponential-type penalty, called the EXP penalty, that very closely resembles the \(L_0\) penalty. The EXP penalized least squares estimator is shown to select the correct model consistently and to be asymptotically normal, provided the number of variables grows more slowly than the number of observations. EXP is efficiently implemented using a coordinate descent algorithm. Furthermore, we propose a modified BIC tuning parameter selection method for EXP and show that it consistently identifies the correct model while allowing the number of variables to diverge. Simulation results and a data example show that the EXP procedure performs very well in a variety of settings.
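To make the idea of an exponential-type approximation to the \(L_0\) penalty concrete, the following minimal sketch may help. The exact parametrization of the EXP penalty is not given in this section, so the form \(\lambda (1-e^{-|\beta |/a})\) used here, the function names, and the default values of `lam` and `a` are all assumptions for illustration. The sketch also includes a brute-force grid search for the one-dimensional problem that a coordinate descent step has to solve under standardized predictors; this is a stand-in, not the update rule used by the authors.

```python
import math


def exp_penalty(beta, lam=1.0, a=0.05):
    """Assumed exponential-type penalty: lam * (1 - exp(-|beta| / a)).

    It equals 0 at beta = 0 and approaches lam quickly as |beta| grows,
    so for small `a` it mimics the L0 penalty while staying continuous.
    """
    return lam * (1.0 - math.exp(-abs(beta) / a))


def l0_penalty(beta, lam=1.0):
    """L0 penalty: lam if beta is nonzero, 0 otherwise."""
    return lam if beta != 0 else 0.0


def univariate_update(z, lam=1.0, a=0.05, n_grid=3000):
    """Grid-search minimizer of 0.5 * (z - b)**2 + exp_penalty(b).

    Hypothetical helper: `z` plays the role of the unpenalized
    one-dimensional least squares solution. Because the penalty is
    increasing in |b|, the minimizer lies between 0 and z, so the grid
    only covers that interval (grid[0] is exactly 0).
    """
    grid = [z * k / n_grid for k in range(n_grid + 1)]
    return min(grid, key=lambda b: 0.5 * (z - b) ** 2 + exp_penalty(b, lam, a))


if __name__ == "__main__":
    # Compare the assumed penalty with the L0 penalty at a few points.
    for b in (0.0, 0.01, 0.1, 1.0):
        print(b, exp_penalty(b), l0_penalty(b))
    # A small input is set to zero; a large input is left almost unshrunk.
    print(univariate_update(0.5), univariate_update(3.0))
```

With these assumed settings the one-dimensional update behaves much like hard thresholding: small inputs are set exactly to zero, and large inputs are returned almost unchanged.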

Additional information

This work was supported by the K. C. Wong Education Foundation, Hong Kong, the Program of the National Natural Science Foundation of China (No. 61179039), and the Project of Education of Zhejiang Province (No. Y201533324). The authors gratefully acknowledge the support of the K. C. Wong Education Foundation, Hong Kong. The authors are also grateful to the editor, the associate editor, and the anonymous referees for their constructive and helpful comments.

Appendix

Proof of Theorem 1 Let \(\alpha _n=\sqrt{p\sigma ^2/n}\) and fix \(r \in (0,1)\). To prove the Theorem, it suffices to show that if \(C > 0\) is large enough, then

\[ Q_n(\beta ^*) < \inf _{\Vert \mu \Vert =C} Q_n(\beta ^*+\alpha _n\mu ) \]

holds for all n sufficiently large, with probability at least \(1- r\). Define \(D_n(\mu )= Q_n(\beta ^*+\alpha _n\mu )-Q_n(\beta ^*)\) and note that

where \(K(\mu )=\{j; p_{\lambda ,a}(|\beta ^*_j+\alpha _n\mu _j|)-p_{\lambda ,a}(|\beta ^*_j|)<0\}\). The fact that \(p_{\lambda ,a}\) is concave on \([0,\infty )\) implies that

when n is sufficiently large.

Condition (B) implies that

Thus, for n big enough,

By (D),

On the other hand (D) implies,

Furthermore, (C) and (B) imply

From (15)–(18), we conclude that if \(C > 0\) is large enough, then \(\inf _{\Vert \mu \Vert =C}D_n(\mu )>0\) holds for all n sufficiently large, with probability at least \(1-r\). This proves Theorem 1. \(\square \)
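The reason a strict inequality on the sphere \(\Vert \mu \Vert =C\) is enough is the usual compactness argument. It is sketched here under the assumption that \(Q_n\) is continuous, which holds whenever the penalty is continuous; the symbol \(\mathcal {B}_C\) is introduced only for this sketch.

```latex
% On the event \inf_{\|\mu\|=C} D_n(\mu) > 0:
\begin{align*}
  &\mathcal{B}_C=\{\beta^*+\alpha_n\mu : \|\mu\|\le C\}
     \text{ is compact, so } Q_n \text{ attains its minimum over }
     \mathcal{B}_C \text{ at some } \hat\beta ;\\
  &Q_n(\hat\beta)\le Q_n(\beta^*)
     < \inf_{\|\mu\|=C} Q_n(\beta^*+\alpha_n\mu)
     \;\Longrightarrow\;
     \hat\beta \text{ lies in the interior of } \mathcal{B}_C ;\\
  &\text{hence } \hat\beta \text{ is a local minimizer of } Q_n
     \text{ with } \|\hat\beta-\beta^*\| < C\alpha_n
     = C\sqrt{p\sigma^2/n}.
\end{align*}
```

Since \(r \in (0,1)\) is arbitrary, this gives \(\Vert {\hat{\beta }}-\beta ^*\Vert =O_P(\sqrt{{p\sigma ^2}/n})\).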

To prove Theorem 2, we first show that the EXP penalized estimator possesses the sparsity property, using the following lemma.

Lemma 1

Assume that (A)–(D) hold, and fix \(C > 0\). Then

where \(A^c = \{1, \ldots , p\} {\setminus } A\) is the complement of A in \(\{1, \ldots , p\}\).

Proof

Suppose that \(\beta \in R^p\) and that \(\Vert \beta -\beta ^*\Vert \le C\sqrt{{p\sigma ^2}/n}\). Define \(\tilde{\beta }\in R^p\) by \(\tilde{\beta }_{A^c}=0\) and \(\tilde{\beta }_{A}=\beta _{A}\). Similar to the proof of Theorem 1, let

\[ D_n(\beta ,\tilde{\beta })=Q_n(\beta )-Q_n(\tilde{\beta }), \]

where \(Q_n(\beta )\) is defined in (7). Then

On the other hand, since the EXP penalty is concave on \([0,\infty )\),

Thus,

By (C), it is clear that

and \(\lambda \sqrt{n/{(p\sigma ^2)}}\rightarrow \infty \). Combining these observations with (19) and (20) gives \(D_n(\beta ,\tilde{\beta })>0\) with probability tending to 1, as \(n\rightarrow \infty \). The result follows. \(\square \)

Proof of Theorem 2 Taken together, Theorem 1 and Lemma 1 imply that there exists a sequence of local minima \({\hat{\beta }}\) of (7) such that \(\Vert {\hat{\beta }}-\beta ^*\Vert =O_P(\sqrt{{p\sigma ^2}/n})\) and \({\hat{\beta }}_{A^c}=0\). Part (i) of the theorem follows immediately.

To prove part (ii), observe that on the event \(\{j; {\hat{\beta }}_j\ne 0\}=A\), we must have

where \(p'_A={(p'_{\lambda ,a}({\hat{\beta }}_j))}_{j\in A}\). It follows that

whenever \(\{j; {\hat{\beta }}_j\ne 0\}=A\). Now note that conditions (A)–(D) imply

Thus,

To complete the proof of (ii), we use the Lindeberg–Feller central limit theorem to show that

in distribution. Observe that

where \(\omega _{i,n}= B_n{(\sigma ^2 \mathbf{X }_A^\mathrm{T}\mathbf{X }_A)}^{-1/2}x_{i,A}\varepsilon _i\).

Fix \(\delta _0 > 0\) and let \(\eta _{i,n}=x_{i,A}^\mathrm{T}{(\mathbf{X }_A^\mathrm{T}\mathbf{X }_A)}^{-1/2}B_n^\mathrm{T}B_n{( \mathbf{X }_A^\mathrm{T}\mathbf{X }_A)}^{-1/2}x_{i,A}\). Then

Since \(\sum _{i=1}^n\eta _{i,n}=tr(B_n^\mathrm{T}B_n)\rightarrow tr(G)< \infty \) and since (E) implies

we must have

Thus, the Lindeberg condition is satisfied and (21) holds. \(\square \)
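For reference, the condition verified in this proof can be written in the notation above as follows. This is the standard multivariate Lindeberg–Feller condition, together with two identities that follow directly from the definitions of \(\omega _{i,n}\) and \(\eta _{i,n}\). It assumes that \(x_{i,A}^\mathrm{T}\) is the i-th row of \(\mathbf{X }_A\) and that the matrix square root is symmetric. It is a sketch and not necessarily the exact display used by the authors.

```latex
\begin{align*}
  \|\omega_{i,n}\|^2
    &= \sigma^{-2}\,\varepsilon_i^2\,
       x_{i,A}^{\mathrm T}(\mathbf X_A^{\mathrm T}\mathbf X_A)^{-1/2}
       B_n^{\mathrm T}B_n
       (\mathbf X_A^{\mathrm T}\mathbf X_A)^{-1/2}x_{i,A}
     = \frac{\eta_{i,n}\,\varepsilon_i^2}{\sigma^2},\\
  \sum_{i=1}^n \eta_{i,n}
    &= \operatorname{tr}\!\Big(
         B_n(\mathbf X_A^{\mathrm T}\mathbf X_A)^{-1/2}
         \Big(\sum_{i=1}^n x_{i,A}x_{i,A}^{\mathrm T}\Big)
         (\mathbf X_A^{\mathrm T}\mathbf X_A)^{-1/2}B_n^{\mathrm T}
       \Big)
     = \operatorname{tr}(B_n^{\mathrm T}B_n),\\
  \text{Lindeberg condition:}\quad
    &\sum_{i=1}^n E\big[\|\omega_{i,n}\|^2\,
       \mathbf 1\{\|\omega_{i,n}\|>\delta_0\}\big]\longrightarrow 0
     \quad\text{for every fixed } \delta_0>0 .
\end{align*}
```

The first identity shows why controlling \(\max _i \eta _{i,n}\) is enough to control the truncated second moments of \(\omega _{i,n}\).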

Proof of Theorem 3 Suppose we are on the event \(\{j; {\hat{\beta }}^*_j\ne 0\}=A\). The first order optimality conditions for (7) imply that

where \(p'_A(\beta )={(p'_{\lambda ,a}(\beta _j))}_{j\in A}\). Thus,

Now let \({\hat{\beta }}={\hat{\beta }}(\lambda ,a)\) be a local minimizer of (7) with \((\lambda ,a)\in \Omega \) and let \({\hat{A}}=\{j; {\hat{\beta }}_j\ne 0\}\). Note that

Thus, if \(A{\setminus }{\hat{A}}\ne \emptyset \), then

where \(0< r < \lambda _\mathrm{min}(n^{-1}\mathbf{X }^\mathrm{T}\mathbf{X })\) is a positive constant. Furthermore, whenever \({\Vert \mathbf y -\mathbf{X }{{\hat{\beta }}}\Vert }^2-{\Vert \mathbf y -\mathbf{X }{{\hat{\beta }}}^*\Vert }^2>0\), we have

where \(\hat{p}_0 = |{\hat{A}}|\). By Condition (B’), it follows that

It remains to consider \({\hat{\beta }}\) for which A is a proper subset of \({\hat{A}}\). Suppose that \(A \subsetneq {\hat{A}}\). Then

and

Since

it follows that

Thus,

We conclude that

Combining this with (22) proves the theorem. \(\square \)

About this article

Cite this article

Wang, Y., Fan, Q. & Zhu, L. Variable selection and estimation using a continuous approximation to the \(L_0\) penalty. Ann Inst Stat Math 70, 191–214 (2018). https://doi.org/10.1007/s10463-016-0588-3

DOI: https://doi.org/10.1007/s10463-016-0588-3