Abstract

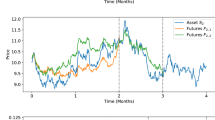

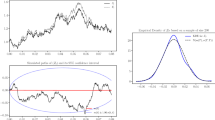

We study the problem of dynamically trading multiple futures contracts with different underlying assets. To capture the joint dynamics of stochastic bases for all traded futures, we propose a new model involving a multi-dimensional scaled Brownian bridge that is stopped before price convergence. This leads to the analysis of the corresponding Hamilton–Jacobi–Bellman equations, whose solutions are derived in semi-explicit form. The resulting optimal trading strategy is a long-short policy that accounts for whether the futures are in contango or backwardation. Our model also allows us to quantify and compare the values of trading in the futures markets when the underlying assets are traded or not. Numerical examples are provided to illustrate the optimal strategies and the effects of model parameters.

Notes

See CFTC Press Release 5542-08: https://www.cftc.gov/PressRoom/PressReleases/pr5542-08.

According to the CME Group report: https://www.cmegroup.com/daily_bulletin/monthly_volume/Web_ADV_Report_CMEG.pdf.

See Cootner (1960).

A commodity is said to be contangoed if its forward curve (which is the plot of its futures prices against time-to-delivery) is increasing. The commodity is backwardated if its forward curve is decreasing.

That is, the number of futures contracts held multiplied by the futures price.

A notable exception is “basis trading”; see Angoshtari and Leung (2019) for further discussion.
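The contango/backwardation definition given in the notes above can be read as a simple monotonicity check on the forward curve. The following is a minimal sketch, assuming the futures prices are listed in order of increasing time-to-delivery; the function name, the "mixed" label for non-monotone curves, and the example prices are illustrative and not taken from the article.

```python
def classify_forward_curve(prices):
    """Classify a forward curve given futures prices ordered by
    increasing time-to-delivery (hypothetical helper)."""
    if len(prices) < 2:
        raise ValueError("need at least two futures prices")
    # Differences between consecutive maturities along the curve.
    diffs = [later - earlier for earlier, later in zip(prices, prices[1:])]
    if all(d > 0 for d in diffs):
        return "contango"        # increasing forward curve
    if all(d < 0 for d in diffs):
        return "backwardation"   # decreasing forward curve
    return "mixed"               # neither increasing nor decreasing


# Made-up prices for three consecutive delivery months.
label = classify_forward_curve([50.0, 51.2, 52.0])
```

A curve that is flat or changes direction falls outside both definitions, which is why the sketch returns a third label instead of forcing a choice.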

References

Angoshtari, B., Leung, T.: Optimal dynamic basis trading. Ann Finance 15(3), 307–335 (2019)

Brennan, M.J.: The supply of storage. Am Econ Rev 48(1), 50–72 (1958)

Brennan, M.J., Schwartz, E.S.: Optimal arbitrage strategies under basis variability. In: Sarnat, M. (ed.) Essays in Financial Economics. Amsterdam: North Holland (1988)

Brennan, M.J., Schwartz, E.S.: Arbitrage in stock index futures. J Bus 63(1), S7–S31 (1990)

Carmona, R., Ludkovski, M.: Spot convenience yield models for the energy markets. Contemp Math 351, 65–80 (2004)

Cootner, P.H.: Returns to speculators: Telser versus Keynes. J Polit Econ 68(4), 396–404 (1960)

Dai, M., Zhong, Y., Kwok, Y.K.: Optimal arbitrage strategies on stock index futures under position limits. J Futures Mark 31(4), 394–406 (2011)

Fleming, W.H., Soner, H.M.: Controlled Markov Processes and Viscosity Solutions, vol. 25. New York: Springer (2006)

Gibson, R., Schwartz, E.S.: Stochastic convenience yield and the pricing of oil contingent claims. J Finance 45(3), 959–976 (1990)

Hilliard, J.E., Reis, J.: Valuation of commodity futures and options under stochastic convenience yields, interest rates, and jump diffusions in the spot. J Financ Quant Anal 33(1), 61–86 (1998)

Kaldor, N.: Speculation and economic stability. Rev Econ Stud 7(1), 1–27 (1939)

Karatzas, I., Shreve, S.: Brownian Motion and Stochastic Calculus. Berlin: Springer (1991)

Leung, T., Li, X.: Optimal Mean Reversion Trading: Mathematical Analysis and Practical Applications. Singapore: World Scientific (2016)

Leung, T., Yan, R.: Optimal dynamic pairs trading of futures under a two-factor mean-reverting model. Int J Financ Eng 5(3), 1850027 (2018)

Leung, T., Yan, R.: A stochastic control approach to managed futures portfolios. Int J Financ Eng 6(1), 1950005 (2019)

Leung, T., Li, J., Li, X., Wang, Z.: Speculative futures trading under mean reversion. Asia-Pac Financ Mark 23(4), 281–304 (2016)

Liu, J., Longstaff, F.A.: Losing money on arbitrage: optimal dynamic portfolio choice in markets with arbitrage opportunities. Rev Financ Stud 17(3), 611–641 (2004)

Miffre, J.: Long-short commodity investing: a review of the literature. J Commod Mark 1(1), 3–13 (2016)

Reid, T.W.: Riccati Differential Equations, Mathematics in Science and Engineering, vol. 86. New York: Academic Press (1972)

Schwartz, E.S.: The stochastic behavior of commodity prices: implications for valuation and hedging. J Finance 52(3), 923–973 (1997)

Working, H.: The theory of price of storage. Am Econ Rev 39(6), 1254–1262 (1949)

Appendices

Proof of Theorem 1

The proof relies on the following well-known comparison result for Riccati differential equations, which we include for readers’ convenience. Let \(A\ge 0\) (resp. \(A>0\)) denote that A is positive semi-definite (resp. positive definite) and \(A\ge B\) (resp. \(A>B\)) denote that \(A-B\ge 0\) (resp. \(A-B>0\)).
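As a concrete reading of this ordering, the sketch below tests positive definiteness of a symmetric matrix by attempting a Cholesky factorization and then uses that test to check \(A>B\). The function names and example matrices are illustrative assumptions, not part of the article; inputs are assumed symmetric, and only the strict order is covered.

```python
import math


def cholesky(A):
    """Return lower-triangular L with A = L L^T.
    Raises ValueError if the symmetric matrix A is not positive definite."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                L[i][j] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return L


def is_positive_definite(A):
    """True if the Cholesky factorization of A succeeds."""
    try:
        cholesky(A)
        return True
    except ValueError:
        return False


def strictly_greater(A, B):
    """A > B in the matrix ordering: A - B is positive definite."""
    n = len(A)
    diff = [[A[i][j] - B[i][j] for j in range(n)] for i in range(n)]
    return is_positive_definite(diff)


A = [[2.0, 0.0], [0.0, 2.0]]
B = [[1.0, 0.5], [0.5, 1.0]]
C = [[2.0, 0.0], [0.0, 0.5]]
I2 = [[1.0, 0.0], [0.0, 1.0]]
```

Note that this is only a partial order: for the matrices `C` and `I2` above, neither `strictly_greater(C, I2)` nor `strictly_greater(I2, C)` holds.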

Lemma 2

(Reid (1972), Theorem 4.3, p. 122) Let \(A(t)\), \(B(t)\), \(C(t)\), and \(\widetilde{C}(t)\) be continuous \(N\times N\) matrix functions on an interval \([a,b]\subset \mathbb {R}\), and let \(H_0\) and \(\widetilde{H}_0\) be two symmetric \(N\times N\) matrices. Furthermore, assume that for all \(t\in [a,b]\), \(B(t)\ge 0\), and \(C(t)\) and \(\widetilde{C}(t)\) are symmetric with \(C(t)\ge \widetilde{C}(t)\). Consider the Riccati matrix differential equations

and

If \(H_0>\widetilde{H}_0\) (resp. \(H_0\ge \widetilde{H}_0\)) and (31) has a symmetric solution \(\widetilde{H}(t)\), then (30) also has a symmetric solution H(t) such that \(H(t)>\widetilde{H}(t)\) (resp. \(H(t)\ge \widetilde{H}(t)\)) for all \(t\in [a,b]\).\(\square \)
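The comparison principle can be illustrated numerically in the scalar case. The sketch below is not the lemma's equations (30) and (31), which are not reproduced here; it assumes a Riccati equation of the form \(h'(t)=c+2ah(t)-b\,h(t)^2\) with \(b\ge 0\), integrates it with a hand-written fourth-order Runge–Kutta scheme, and compares two solutions that differ in the constant term and the initial value. All parameter values are made up for illustration.

```python
def solve_scalar_riccati(a, b, c, h0, t_end=1.0, steps=1000):
    """Integrate h' = c + 2*a*h - b*h**2, h(0) = h0, with classical RK4.
    Returns the values of h on a uniform grid of steps + 1 points."""
    def rhs(h):
        return c + 2.0 * a * h - b * h * h

    dt = t_end / steps
    h = h0
    path = [h]
    for _ in range(steps):
        k1 = rhs(h)
        k2 = rhs(h + 0.5 * dt * k1)
        k3 = rhs(h + 0.5 * dt * k2)
        k4 = rhs(h + dt * k3)
        h += dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
        path.append(h)
    return path


# Reference equation: zero constant term and zero initial value,
# so the solution is identically zero (the "trivial solution").
lower = solve_scalar_riccati(a=-0.5, b=1.0, c=0.0, h0=0.0)

# Larger constant term and strictly larger initial value.
upper = solve_scalar_riccati(a=-0.5, b=1.0, c=1.0, h0=0.2)

# Strict ordering at every grid point, as the comparison principle suggests.
strictly_ordered = all(u > l for u, l in zip(upper, lower))
```

Comparing against the identically zero solution mirrors the way the lemma is used in the proof of statement (i) that follows.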

Proof of (i): Consider the matrix Riccati differential equation

which has the trivial solution \(\widetilde{H}_0\equiv 0_{N\times N}\). Assume, for now, that \(\gamma \,C^\top \Sigma \,C + (1-\gamma )A >0\). By Lemma 2, it follows that (20) has a positive semi-definite solution \(H(\tau )\) on [0, T]. That \(H(\tau )\) is positive definite on (0, T] follows from the fact that \(H'(0) = \frac{\gamma -1}{\gamma ^2} {\varvec{\eta }}_F(T-\tau )^\top \Sigma _{\mathbf {F}}^{-1}{\varvec{\eta }}_F(T-\tau )>0\) and Lemma 2. The uniqueness of the solution follows from the uniqueness theorem for first-order differential equations.

It only remains to show that \(\gamma \,C^\top \Sigma \,C + (1-\gamma )A >0\). By (4), (18), and (19), we have

Finally, using the assumption \(\gamma >1\) together with the last two results yields

as we set out to prove.

Proof of (ii) and (iii): As we argue later, \(V_F(t,x,\mathbf {z})\) is the solution of the Hamilton–Jacobi–Bellman (HJB) equation

for \((t,x,\mathbf {z})\in [0,T]\times \mathbb {R}_+\times \mathbb {R}^N\), in which the differential operator \(\mathscr {J}_{\varvec{\theta }}\) is given by

for any \({\varvec{\theta }}\in \mathbb {R}^N\) and any \(\varphi (t,x,\mathbf {z}):[0,T]\times \mathbb {R}_+\times \mathbb {R}^N\rightarrow \mathbb {R}\) that is continuously twice differentiable in \((x,\mathbf {z})\). Here, \({\text {tr}}(A)\) is the trace of the matrix A and we have used the shorthand notations \(\varphi _\mathbf {z}\) and \(\varphi _{\mathbf {z}\mathbf {z}}\) to denote the gradient vector and the Hessian matrix of \(\varphi (t,x,\mathbf {z})\) with respect to \(\mathbf {z}\), that is,

By assuming that \(v_{xx}<0\) [which is verified by the form of the solution, i.e. (36)], the maximizer of the left side of the differential equation in (32) is

Substituting \(\sup _{{\varvec{\theta }}\in \mathbb {R}^N} \mathscr {J}_{\varvec{\theta }}v=\mathscr {J}_{{\varvec{\theta }}^*}v\) in (32) yields

for \((t,x,\mathbf {z})\in [0,T]\times \mathbb {R}_+\times \mathbb {R}^N\), subject to the terminal condition

To solve (34), we consider the ansatz

for \((t,x,\mathbf {z})\in [0,T]\times \mathbb {R}_+\times \mathbb {R}^N\), in which f(t), \(\mathbf {g}(t)=\big (g_1(t),\ldots ,g_N(t)\big )^\top \), and

are unknown functions to be determined. Without loss of generality, we further assume that H(t) is symmetric such that \(h_{ij}(t)=h_{ji}(t)\) for \(0\le t\le T\). Substituting this ansatz into (34) yields

for all \((t,\mathbf {z})\in [0,T]\times \mathbb {R}^N\), where we have omitted the t arguments to simplify the terms. Taking the terminal condition (35) into account, it then follows that H, \(\mathbf {g}\), and f must satisfy (20), (22), and (23), respectively.

By statement (i) of the theorem, (20) has a unique solution that is positive definite on (0, T]. Using the classical existence theorem of systems of ordinary differential equations, we then deduce that (22) also has a unique bounded solution on [0, T]. Finally, f given by (23) is continuously differentiable since the integrand on the right side is continuous. Thus, \(v(t,x,\mathbf {z})\) given by (21) is a solution of the HJB equation (32).

It only remains to show that the solution of the HJB equation is the value function, that is \(v(t,x,\mathbf {z})=V(t,x,\mathbf {z})\) for all \((t,x,\mathbf {z})\in [0,T]\times \mathbb {R}_+\times \mathbb {R}^N\). Note that, for \(0\le t <T\), we have

in which \(\widetilde{H}(T-t)\) is the Cholesky factor of the positive definite matrix \(H(T-t)\). It then follows that \(v(t,x,\mathbf {z})\) in (36) is bounded in \(\mathbf {z}\) and has polynomial growth in x. A standard verification result such as Theorem 3.8.1 on page 135 of Fleming and Soner (2006) then yields that \(v(t,x,\mathbf {z})=V(t,x,\mathbf {z})\) for all \((t,x,\mathbf {z})\in [0,T]\times \mathbb {R}_+\times \mathbb {R}^N\).
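The boundedness in \(\mathbf {z}\) can be seen by completing the square. The identity below is a sketch for a generic exponent of the form \(\mathbf {z}^\top \mathbf {g}-\tfrac{1}{2}\mathbf {z}^\top H\mathbf {z}\), where \(H=LL^\top \) is positive definite and \(L\) denotes its lower-triangular Cholesky factor. The symbol \(L\) and this particular form of the exponent are assumptions made for illustration; the exact exponent in (36), including its sign and scaling conventions, is not reproduced here.

```latex
\mathbf{z}^\top \mathbf{g} - \tfrac{1}{2}\,\mathbf{z}^\top H\,\mathbf{z}
= \tfrac{1}{2}\,\mathbf{g}^\top H^{-1}\mathbf{g}
  - \tfrac{1}{2}\,\bigl\Vert L^\top \mathbf{z} - L^{-1}\mathbf{g}\bigr\Vert^{2}
\;\le\; \tfrac{1}{2}\,\mathbf{g}^\top H^{-1}\mathbf{g},
\qquad H = L L^\top .
```

Since the right-hand bound does not depend on \(\mathbf {z}\), the exponential of such an expression is bounded in \(\mathbf {z}\) for each fixed \(t\), which is the kind of growth control a verification theorem requires.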

The verification result also states that the optimal control in feedback form is \({\varvec{\theta }}^*\) given by (33). Using (36), one obtains \({\varvec{\theta }}^*(t,x,\mathbf {z})\) in terms of H and \(\mathbf {g}\) as in (24).
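For intuition about how a feedback rule of this kind arises, note that the pointwise maximization in an HJB equation of this type reduces to maximizing a concave quadratic in \({\varvec{\theta }}\). The sketch below uses placeholder symbols that are not from the article: \(\mathbf {b}\) collects the terms linear in \({\varvec{\theta }}\), \(a<0\) is the scalar multiplying the quadratic term (proportional to \(v_{xx}\)), and \(\Sigma \) is a positive definite matrix.

```latex
\begin{aligned}
Q({\varvec{\theta}}) &= {\varvec{\theta}}^\top \mathbf{b}
  + \tfrac{1}{2}\,a\,{\varvec{\theta}}^\top \Sigma\,{\varvec{\theta}},
  \qquad a<0,\ \Sigma>0,\\
\nabla Q({\varvec{\theta}}^*) &= \mathbf{b} + a\,\Sigma\,{\varvec{\theta}}^* = 0
  \quad\Longrightarrow\quad
  {\varvec{\theta}}^* = -\frac{1}{a}\,\Sigma^{-1}\mathbf{b},\\
Q({\varvec{\theta}}^*) &= -\frac{1}{2a}\,\mathbf{b}^\top \Sigma^{-1}\mathbf{b} \;\ge\; 0 .
\end{aligned}
```

Because \(a<0\) makes \(Q\) strictly concave, the stationary point is the unique maximizer, which is why the sign condition \(v_{xx}<0\) is needed before the supremum can be evaluated.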

Proof of Theorem 2

The proof is similar to the proof of Theorem 1 and, thus, is presented in less detail.

(i): Similar to the proof of statement (i) of Theorem 1, the proof here involves comparing (26) with the homogeneous equation

using Lemma 2.

(ii) and (iii): As we later verify, \(V(t,x,\mathbf {z})\) solves the HJB equation

for \((t,x,\mathbf {z})\in [0,T]\times \mathbb {R}_+\times \mathbb {R}^N\), in which the differential operator \(\mathscr {L}_{\varvec{\Theta }}\) is given by

for any \({\varvec{\Theta }}\in \mathbb {R}^{2N}\) and any \(\varphi (t,x,\mathbf {z}):[0,T]\times \mathbb {R}_+\times \mathbb {R}^N\rightarrow \mathbb {R}\) that is continuously twice differentiable in \((x,\mathbf {z})\). Assuming that \(v_{xx}<0\), which is readily verified by the form of the solution in (27), we obtain that the maximizer \({\varvec{\Theta }}^*\) in (37) is given by

Substituting \({\varvec{\Theta }}^*\) into (37) yields

for \((t,x,\mathbf {z})\in [0,T]\times \mathbb {R}_+\times \mathbb {R}^N\), subject to the terminal condition

This partial differential equation is similar to (34) and can be solved using the same ansatz. Indeed, applying (36) yields that f(t), \(\mathbf {g}(t)\), and H(t) satisfy

for all \((t,\mathbf {z})\in [0,T]\times \mathbb {R}^N\), in which we have omitted the t arguments to simplify the notation. Taking the terminal condition (38) into account, it then follows that H, \(\mathbf {g}\), and f must satisfy (26), (28), and (29), respectively.

The verification result and the optimal trading strategy are obtained in the same way as in the proof of Theorem 1.

Cite this article

Angoshtari, B., Leung, T. Optimal trading of a basket of futures contracts. Ann Finance 16, 253–280 (2020). https://doi.org/10.1007/s10436-019-00357-w

DOI: https://doi.org/10.1007/s10436-019-00357-w