Abstract

Purpose

An excessive amount of total hospitalization time is caused by patients waiting to be placed in a rehabilitation facility or skilled nursing facility (RF/SNF). An accurate preoperative prediction of which patients will need an RF/SNF placement after surgery could reduce costs and allow more efficient organizational planning. We aimed to develop a machine learning algorithm that predicts non-home discharge after elective surgery for lumbar spinal stenosis.

Methods

We used the American College of Surgeons National Surgical Quality Improvement Program database to select patients who underwent elective surgery for lumbar spinal stenosis between 2009 and 2016. The primary outcome measure was non-home discharge. Four machine learning algorithms were developed to predict this outcome. Performance of the algorithms was measured with discrimination, calibration, and an overall performance score.

Results

We included 28,600 patients with a median age of 67 years (interquartile range 58–74). The non-home discharge rate was 18.2%. The final model consisted of the following variables: age, sex, body mass index, diabetes, functional status, ASA class, number of levels, fusion, preoperative hematocrit, and preoperative serum creatinine. The neural network was the best model based on discrimination (c-statistic = 0.751), calibration (slope = 0.933; intercept = 0.037), and overall performance (Brier score = 0.131).

Conclusions

A machine learning algorithm is able to predict discharge placement after surgery for lumbar spinal stenosis with both good discrimination and calibration. Implementing this type of algorithm in clinical practice could avert risks associated with delayed discharge and lower costs.

Graphical abstract

These slides can be retrieved under Electronic Supplementary Material.

Introduction

In recent years, there has been a trend toward earlier discharge after orthopedic surgery, which does not seem to affect patients’ outcomes inordinately [1, 2]. However, an excessive amount of total hospitalization time is caused by patients waiting to be placed in a rehabilitation facility or skilled nursing facility (RF/SNF) [3,4,5,6,7,8]. Not only do these delays incur unnecessary costs and hamper efficient delivery of care, but, more importantly, delayed discharges are detrimental to the patient [9]. Increased length of stay has been associated with hospital-acquired infections and adverse drug events [7, 9,10,11]. Although increasing the number of facilities seems the obvious solution, a study by Gaughan et al. [12] found that this would have only a small effect on delayed discharges and would actually cost more.

Previous studies have identified risk factors for non-home discharge, and some have developed scoring systems based on these risk factors to predict which patients are unlikely to be discharged home after spine surgery [13,14,15,16]. However, no studies have employed machine learning (ML) algorithms for this purpose. The growing availability of data, combined with increased computing power, is driving a rapid expansion of ML in medicine. ML is a form of artificial intelligence in which algorithms learn and improve from data without being explicitly programmed by a data scientist. The capacity of these algorithms to handle large datasets and to incorporate nonlinear relationships and interactions can allow more accurate and personalized prediction than conventional statistical methods.

An accurate, individualized preoperative prediction of which patients will need an RF/SNF placement would allow a place to be reserved in advance and insurance precertification to be obtained earlier. This could reduce costs and avoid the risks of unnecessarily prolonged hospitalization.

Lumbar spinal stenosis is a relatively common degenerative spine condition for which the SPORT trial has indicated surgical treatment to be superior to non-surgical treatment. Currently, it is one of the most common indications for spine surgery [17, 18].

In this study, we aimed to develop a prediction tool using ML algorithms to predict discharge to an RF/SNF after elective surgery for lumbar spinal stenosis in patients living at home preoperatively. Second, we aimed to select the best-performing algorithm and incorporate it into an application that enables healthcare providers to arrange an RF/SNF placement well in advance.

Methods

Data source

We used the American College of Surgeons National Surgical Quality Improvement Program (ACS-NSQIP) as our main data source. The ACS-NSQIP is a large clinical database containing data from more than 680 US hospitals and has often been used in the spine literature.

We included patients based on the following criteria: (1) International Classification of Disease—Ninth Revision (ICD-9) code 724.02 or 724.03 for lumbar spinal stenosis; (2) year of surgery between 2009 and 2016; (3) Current Procedural Terminology (CPT) codes for decompression, fusion, or fixation. We included 28,600 patients in our dataset to train and test the algorithms.
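The three inclusion criteria amount to a simple filter. The sketch below is a minimal illustration under stated assumptions: `CaseRecord` is a hypothetical, simplified record type (real ACS-NSQIP files use their own field names), and the eligible CPT codes are passed in by the caller because the exact code list is not reproduced in the text.

```python
from dataclasses import dataclass

# ICD-9 diagnosis codes for lumbar spinal stenosis named in the inclusion criteria
STENOSIS_ICD9 = {"724.02", "724.03"}


@dataclass
class CaseRecord:
    """Hypothetical, simplified case record; real ACS-NSQIP fields are named differently."""
    icd9: str
    year: int
    cpt: str


def select_cohort(records, eligible_cpt, first_year=2009, last_year=2016):
    """Keep records that satisfy all three inclusion criteria.

    eligible_cpt: caller-supplied set of CPT codes for decompression, fusion,
    or fixation (the study's exact code list is not reproduced here).
    """
    return [
        r
        for r in records
        if r.icd9 in STENOSIS_ICD9
        and first_year <= r.year <= last_year
        and r.cpt in eligible_cpt
    ]
```

Keeping the CPT list as a parameter makes the selection auditable: the same filter can be rerun with a revised code list without changing its logic.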

Data analysis

Our primary outcome measure was non-home discharge, defined as any discharge to a destination other than home. This variable was created by grouping together discharges to rehabilitation facilities, skilled nursing facilities, and unskilled nursing facilities. Variables for the algorithm were selected by entering all available variables into a random forest regression, which ranks variables according to their predictive power for the outcome variable [19].
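A minimal sketch of the outcome definition and the random forest ranking, assuming scikit-learn and NumPy are available. The destination strings are illustrative labels, not ACS-NSQIP field values. The ranking uses scikit-learn's impurity-based `feature_importances_`; the text does not state which importance measure was used, so permutation importance would be an equally reasonable choice.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Illustrative destination labels grouped into the non-home outcome
NON_HOME_DESTINATIONS = {
    "rehabilitation facility",
    "skilled nursing facility",
    "unskilled nursing facility",
}


def non_home_discharge(destination):
    """Return 1 if the destination is one of the grouped non-home categories, else 0."""
    return int(destination in NON_HOME_DESTINATIONS)


def rank_variables(X, y, names, n_trees=200, seed=0):
    """Fit a random forest regression to the 0/1 outcome and rank the variables
    by impurity-based importance, highest first."""
    forest = RandomForestRegressor(n_estimators=n_trees, random_state=seed)
    forest.fit(np.asarray(X), np.asarray(y))
    importances = forest.feature_importances_
    order = np.argsort(importances)[::-1]
    return [(names[i], float(importances[i])) for i in order]
```

A regression forest fitted to a 0/1 outcome estimates the probability of that outcome, which is why it can rank variables for a binary endpoint just as a classification forest would.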

We performed a stratified 80:20 split of the dataset into a training set and a testing set. The training set was used for algorithm training and for assessment of performance by tenfold cross-validation. In cross-validation, the data are divided into a chosen number of groups, called folds. Each fold is withheld once and treated as the test set, while the remaining folds together are treated as the training set. The results are then averaged across all folds [20].
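The splitting scheme can be sketched in plain Python. This is an illustration, not the study's code: stratification is done by shuffling each outcome class separately so that both parts keep roughly the same non-home discharge rate, and `fit` and `score` are placeholder callables standing in for any training routine and performance metric.

```python
import random
from collections import defaultdict


def _group_by_class(indices, labels):
    # Collect the indices belonging to each outcome class
    groups = defaultdict(list)
    for i in indices:
        groups[labels[i]].append(i)
    return groups


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx), keeping the outcome rate similar in both parts."""
    rng = random.Random(seed)
    train, test = [], []
    for idx in _group_by_class(range(len(labels)), labels).values():
        rng.shuffle(idx)
        n_test = round(len(idx) * test_fraction)
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return sorted(train), sorted(test)


def stratified_folds(indices, labels, k=10, seed=0):
    """Deal each outcome class round-robin over k folds."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for idx in _group_by_class(indices, labels).values():
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)
    return folds


def cross_validate(indices, labels, fit, score, k=10, seed=0):
    """Withhold each fold once, train on the others, and average the k scores."""
    scores = []
    for held_out in stratified_folds(indices, labels, k, seed):
        held = set(held_out)
        model = fit([i for i in indices if i not in held])
        scores.append(score(model, held_out))
    return sum(scores) / len(scores)
```

With 1,000 patients and an 18.2% outcome rate, `stratified_split` puts 200 patients in the testing set, 36 of whom have the outcome; cross-validation then runs only on the remaining 800.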

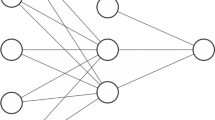

Four different algorithms (neural network, support vector machine, Bayes point machine, boosted decision tree) were trained with these variables to predict non-home discharge. We chose these four because each has different merits for prediction (Appendix 1). Senders et al. [20] provide an accessible overview of the most commonly used algorithms. The model with the best performance was subsequently applied to the testing set to predict discharge placement. These predictions were then compared with the actual outcomes in the testing set to assess the performance of the algorithm outside of the training set.
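One way to reproduce this workflow with scikit-learn is sketched below, under several assumptions. scikit-learn has no Bayes point machine, so a linear logistic regression is substituted for it. The hyperparameters are arbitrary. For brevity, the best model is chosen on cross-validated AUC alone, whereas the study also weighed calibration and the Brier score.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def candidate_models(seed=0):
    """Four candidate classifiers; hyperparameters are illustrative, not tuned."""
    return {
        "neural network": make_pipeline(
            StandardScaler(),
            MLPClassifier(hidden_layer_sizes=(16,), max_iter=2000, random_state=seed),
        ),
        "support vector machine": make_pipeline(
            StandardScaler(), SVC(probability=True, random_state=seed)
        ),
        # Substitution: scikit-learn has no Bayes point machine, so a linear
        # logistic regression stands in as another linear probabilistic classifier.
        "logistic regression (stand-in for Bayes point machine)": make_pipeline(
            StandardScaler(), LogisticRegression(max_iter=1000)
        ),
        "boosted decision tree": GradientBoostingClassifier(random_state=seed),
    }


def select_and_test(X, y, seed=0):
    X, y = np.asarray(X), np.asarray(y)
    # Stratified 80:20 split into training and testing sets
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, test_size=0.2, stratify=y, random_state=seed
    )
    # Tenfold stratified cross-validation on the training set only
    cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed)
    cv_auc = {
        name: cross_val_score(model, X_tr, y_tr, cv=cv, scoring="roc_auc").mean()
        for name, model in candidate_models(seed).items()
    }
    best = max(cv_auc, key=cv_auc.get)
    # Refit the chosen model on the full training set, then score it once on the test set
    final = candidate_models(seed)[best].fit(X_tr, y_tr)
    test_auc = roc_auc_score(y_te, final.predict_proba(X_te)[:, 1])
    return cv_auc, best, test_auc
```

Because the testing set is used only once, after the model has been chosen, its AUC remains an honest estimate of performance outside the training data.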

Model assessment

Performance of the algorithms was measured with discrimination, calibration, and an overall performance score [21, 22].

Discrimination is the algorithm’s ability to distinguish patients who were discharged home from patients who were not. We assessed discrimination with receiver operating characteristic (ROC) curves and c-statistics; the c-statistic equals the area under the ROC curve (AUC). A c-statistic of 1.0 indicates perfect discrimination, while a c-statistic of 0.5 indicates discrimination no better than chance [23].
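The c-statistic has a direct interpretation that a short function makes concrete: it is the proportion of all (outcome, no-outcome) patient pairs in which the patient with the outcome received the higher predicted probability, with ties counted as one half. The quadratic pair loop below is written for clarity, not for datasets the size of ACS-NSQIP, where a rank-based implementation such as scikit-learn's `roc_auc_score` is preferable.

```python
def c_statistic(y_true, y_prob):
    """Share of (outcome, no-outcome) pairs ranked correctly; ties count 1/2."""
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    if not pos or not neg:
        raise ValueError("both outcome classes are needed")
    concordant = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                concordant += 1.0   # patient with the outcome ranked higher
            elif p == q:
                concordant += 0.5   # tie
    return concordant / (len(pos) * len(neg))


# Three of the four pairs are concordant
print(c_statistic([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```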

Calibration describes how closely the predicted probabilities of the algorithm agree with the observed event rates. The calibration intercept indicates whether the algorithm systematically overestimates or underestimates the probabilities; the calibration slope indicates whether the predictor effects are similar in the training and the testing set, with a slope below 1.0 suggesting predictions that are too extreme, as is typical of overfitting. A perfectly calibrated model has an intercept of 0.0 and a slope of 1.0.

Overall model performance was assessed with the Brier score, which is the mean squared difference between the observed outcomes in the testing set and the probabilities predicted by the algorithm. A perfect algorithm would have a score of 0. The Brier score combines discrimination and calibration characteristics but must always be interpreted in the context of the prevalence of the predicted outcome, in our study non-home discharge [22]. The lower the prevalence of the outcome (below 50%), the lower the score that even an uninformative algorithm can achieve. Therefore, the Brier score must be compared with the null Brier score, which is calculated by assigning each patient a probability equal to the prevalence of non-home discharge. Steyerberg et al. [22] offer a detailed framework of all performance metrics.
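The Brier score and its null reference are each a line of arithmetic. Assigning every patient the prevalence π gives a null Brier score of π(1 − π). The scaled Brier score (1 − Brier/null Brier) included below is an optional addition for interpretation, not a metric reported in this study.

```python
def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and observed outcome (0/1)."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


def null_brier_score(y_true):
    """Brier score when every patient is assigned the outcome prevalence.
    Algebraically this equals prevalence * (1 - prevalence)."""
    prevalence = sum(y_true) / len(y_true)
    return brier_score(y_true, [prevalence] * len(y_true))


def scaled_brier(y_true, y_prob):
    """1 - Brier / null Brier: 0 means no better than the prevalence, 1 is perfect."""
    return 1.0 - brier_score(y_true, y_prob) / null_brier_score(y_true)
```

Because the null score depends only on the prevalence, a model's Brier score is informative only relative to it: the model adds value only to the extent that its score falls below π(1 − π).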

Web-based application

The algorithm with the best performance based on discrimination, calibration, and overall performance was subsequently incorporated into a Web-based application. The application passes the variable values entered by a healthcare provider to the algorithm, calculates the predicted probability, and returns the result to the provider in real time.

Microsoft Azure, STATA 13 (StataCorp LP, College Station, TX, USA), RStudio version 1.0.153, and Python version 3.6 (Python Software Foundation) (Anaconda distribution) were used for data analysis, model creation, and application development.

Results

Of the 28,600 patients, 18.2% were not discharged to home. Baseline characteristics are given in Table 1. The following variables were included after variable selection: age (years), sex (male/female), body mass index (BMI), American Society of Anesthesiologists (ASA) class (I/II/III/IV), functional status (independent/dependent), number of levels included in surgery (1 or 2 levels/3 or more levels), fusion (yes/no), diabetes (no/oral medication/insulin dependent), preoperative hematocrit (vol.%), and preoperative serum creatinine (mg/dL).
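Before the ten selected variables can be passed to a model, they must be encoded as numbers. The scheme below is hypothetical, since the encoding is not described: binary variables become 0/1 indicators, ASA class is entered as an ordinal number, the number of levels is dichotomized at three as in the variable list, and diabetes is split into two indicators with "no diabetes" as the reference. The example patient is the one used to illustrate the Web application in the Results.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Patient:
    age: float          # years
    male: bool          # sex
    bmi: float          # body mass index, kg/m^2
    asa_class: int      # ASA class I-IV entered as 1-4
    dependent: bool     # functional status: True if dependent
    levels: int         # number of levels included in surgery
    fusion: bool
    diabetes: str       # "no", "oral", or "insulin"
    hematocrit: float   # preoperative hematocrit, vol.%
    creatinine: float   # preoperative serum creatinine, mg/dL


# Hypothetical feature order; any fixed order works if training and prediction agree
FEATURE_NAMES = [
    "age", "male", "bmi", "asa_class", "dependent", "three_or_more_levels",
    "fusion", "diabetes_oral", "diabetes_insulin", "hematocrit", "creatinine",
]


def encode(p: Patient) -> List[float]:
    """Turn one patient into a numeric feature vector (hypothetical encoding)."""
    if p.asa_class not in (1, 2, 3, 4):
        raise ValueError("ASA class must be 1-4")
    if p.diabetes not in ("no", "oral", "insulin"):
        raise ValueError("diabetes must be 'no', 'oral', or 'insulin'")
    if p.levels < 1:
        raise ValueError("at least one level must be operated on")
    return [
        float(p.age), float(p.male), float(p.bmi), float(p.asa_class),
        float(p.dependent), float(p.levels >= 3), float(p.fusion),
        float(p.diabetes == "oral"), float(p.diabetes == "insulin"),
        float(p.hematocrit), float(p.creatinine),
    ]


example = Patient(age=75, male=True, bmi=34, asa_class=2, dependent=False,
                  levels=2, fusion=True, diabetes="no", hematocrit=34, creatinine=2.9)
print(encode(example))  # [75.0, 1.0, 34.0, 2.0, 0.0, 0.0, 1.0, 0.0, 0.0, 34.0, 2.9]
```

This only prepares the input; it does not reproduce the reported 24.4% estimate, which depends on the trained neural network.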

Table 2 lists the AUC, calibration slope and intercept, and Brier score of the four algorithms. The null Brier score was 0.150. Based on numerical and graphical assessment of these metrics, the neural network algorithm was chosen as the final model, with a c-statistic of 0.751, a calibration slope of 0.933, a calibration intercept of 0.037, and a Brier score of 0.130 (Fig. 1).

On the testing set, the neural network algorithm achieved a c-statistic of 0.744, a calibration slope of 0.915, a calibration intercept of −0.131, and a Brier score of 0.131 (Figs. 2, 3).

The Web application based on the neural network can be accessed at https://sorg-apps.shinyapps.io/stenosisdisposition/. As an example, consider a 75-year-old male who is scheduled for two-level surgery with fusion. He has a BMI of 34 and is classified as ASA II; he lives independently at home and does not have diabetes. His preoperative creatinine level is 2.9 mg/dL and his preoperative hematocrit level is 34%. After these values are entered into the application, the predicted probability of non-home discharge for this patient is 24.4%.

Discussion

We aimed to develop an ML algorithm that can predict discharge to an RF/SNF after elective surgery for lumbar stenosis. Our algorithm included age, sex, BMI, functional status, ASA class, number of levels, fusion, diabetes, preoperative hematocrit, and preoperative serum creatinine. The neural network was selected as the best algorithm based on discrimination (c-statistic = 0.752), calibration (intercept = −1.27 × 10⁻⁵; slope = 0.996), and overall performance (Brier score = 0.1257) in the training set and subsequent performance on internal validation.

Our study has several limitations. First, studies using large clinical databases are always affected by miscoding and other inaccuracies. Although the NSQIP database is widely used, few studies have assessed its actual accuracy. Rolston et al. [24] found many internal inconsistencies between procedure CPT codes and postoperative ICD-9 codes in neurosurgery. However, the codes for lumbar stenosis and lumbar surgery are more straightforward, so we expect that potential miscoding will not severely affect our algorithm. Second, certain variables of interest are not always available in the ACS-NSQIP. Because preoperative patient-reported outcomes are known predictors of discharge placement after spine surgery [25], we consider their absence a major limitation of our work. While the current AUC of 0.751 is fair, the algorithm could potentially be improved by adding these and other relevant variables. Third, although the ACS-NSQIP database contains data from 680 US hospitals, the results may not apply to all patients for whom the algorithm is intended because of differences in demographic or clinical characteristics. Fourth, the differences between the algorithms are small, which makes the choice of a neural network somewhat arbitrary. However, settling on an algorithm based on numerical and graphical assessment is the most reproducible method. Finally, it must be emphasized that this study focuses on accurate prediction of a rather simple, prespecified outcome (here ‘non-home discharge’), in contrast to the explanation of this outcome, which is the focus of the vast majority of medical research. The variables in our model therefore cannot simply be interpreted as independent explanatory variables.

Age, sex, diabetes, functional status, fusion, and preoperative hematocrit have previously been identified in other (explanatory) studies of discharge placement after spine surgery [26,27,28]. Most variables were likely included in our model because they are independent risk factors for major complications after surgery for lumbar stenosis: age, diabetes, BMI, functional status, ASA class, preoperative hematocrit, and preoperative creatinine have all been shown to be associated with major complications [29,30,31,32]. The number of levels and fusion are likely surrogates for longer procedural time, which is also implicated in postoperative morbidity [30, 31].

The importance of eliminating delayed discharges lies in averting the risks associated with longer hospitalization and in the advantages of starting rehabilitation earlier. Umarji et al. [11] found that 58% of patients with a hip fracture acquired nosocomial infections when discharge was delayed beyond 8 days. Hauck et al. [33] found that each additional night in hospital increases the risk of adverse drug events by 0.5% and the risk of infections by 1.6%. With regard to rehabilitation, other studies have found worse post-rehabilitation scores in patients whose discharge was delayed [34, 35]. While those studies did not specifically focus on elective spine patients, other spine centers have acknowledged the problem and constructed risk scores for predicting discharge placement. McGirt et al. [15] created the Carolina-Semmes Grading Scale for all degenerative lumbar spine surgery based on logistic regression. They included age, ASA class, fusion, Oswestry disability index score, ambulation, and non-private insurance and achieved an area under the curve (AUC) of 0.731. Kanaan et al. [14] used age, prior level of function, and gait distance to create a model for discharge placement after lumbar laminectomy and achieved an AUC of 0.80. Slover et al. [13] stratified spine patients into low-, medium-, and high-risk groups based on points for age, sex, walking distance, gait aid, community support, and availability of a caregiver at home. They did not report an AUC. None of the above-mentioned studies assessed calibration.

Although often overlooked, assessment of calibration is an essential part of studies that create prediction models. In our study, the neural network and the Bayes point machine had highly similar performance metrics. However, on graphical assessment, the calibration of the Bayes point machine was slightly inaccurate for predicted probabilities between 0.15 and 0.50, a range that contains a substantial part of the study population (Fig. 3). This deviation means that the algorithm slightly underestimates the chance of discharge to an RF/SNF, so for some patients no placement would be arranged before surgery, as is currently the case. Assessing calibration over the full range of predictions is crucial to ensuring that the model is useful [23]. Future studies that create prediction models should always include both a numerical and a graphical assessment of calibration. As depicted in the calibration subplot in Fig. 3, the vast majority of patients have a 10–40% chance of discharge to an RF/SNF, as can be expected for an elective spine procedure. The algorithm is meant to identify higher-risk patients so that their potential discharge delay can be avoided.
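The graphical calibration assessment rests on a grouping step that can be sketched as follows: sort patients by predicted risk, cut them into groups of roughly equal size, and compare the mean predicted risk with the observed event rate in each group. Plotting these pairs against the diagonal gives a calibration plot. Ten groups is an arbitrary, commonly used choice and not necessarily the one behind Fig. 3.

```python
def calibration_table(y_true, y_prob, n_groups=10):
    """Group patients by predicted risk and compare predicted with observed risk.

    Returns one (mean predicted probability, observed event rate, group size)
    tuple per group, ordered from lowest to highest predicted risk.
    """
    pairs = sorted(zip(y_prob, y_true))
    n = len(pairs)
    table = []
    for g in range(n_groups):
        # Integer cut points give groups whose sizes differ by at most one
        chunk = pairs[g * n // n_groups:(g + 1) * n // n_groups]
        if not chunk:
            continue
        mean_pred = sum(p for p, _ in chunk) / len(chunk)
        observed = sum(y for _, y in chunk) / len(chunk)
        table.append((mean_pred, observed, len(chunk)))
    return table
```

A group whose observed rate lies above its mean predicted risk is one in which the model underestimates the chance of non-home discharge, the pattern described above for the Bayes point machine.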

Where hospitals set their threshold for arranging an RF/SNF placement in advance will differ between health systems. There are major differences in the availability of RF/SNF beds, insurance regulations, and discharge practices between countries [36,37,38]. Length of stay for deforming dorsopathies ranges from 4.6 to 27 days within Europe. American patients with a hip fracture are three times more likely to be discharged to an RF/SNF than Canadian patients [39]. While these complex differences exist, delayed discharges are a problem for patients and hospitals around the world [9]. Mirroring the differences between countries, a wide variety of policies have been implemented internationally to reduce the number and duration of delayed discharges [40, 41]. In Great Britain, imposing fines reduced the number of delayed discharges, but simultaneously rising readmission rates raised questions about the quality of discharges [42]. Sweden made local municipalities financially responsible for the care of the elderly [43]. Others focused on developing allocation decision tools or on the effect of increasing nursing home supply [12, 44].

At the core of all these policies, regardless of health system, lies the inability to assess accurately who will need an RF/SNF placement early enough to set things in motion. An ML algorithm can give an individualized prediction. However, thorough external validation and an assessment of where to place the decision threshold are needed before such algorithms can be implemented, especially if an algorithm is to be used outside the USA.
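The consequences of a candidate threshold can be tabulated directly. The sketch below, using an arbitrary threshold and made-up example data, reports how many patients would be flagged for advance planning, the sensitivity (the share of eventual non-home discharges that were flagged), and the positive predictive value (the share of flagged patients who actually needed a placement). A health system could compare these figures across several thresholds against its own bed availability.

```python
def threshold_summary(y_true, y_prob, threshold):
    """Flag every patient whose predicted risk is at or above `threshold` and
    summarize what that would mean for advance RF/SNF planning."""
    flagged = [p >= threshold for p in y_prob]
    tp = sum(1 for f, y in zip(flagged, y_true) if f and y == 1)
    fp = sum(1 for f, y in zip(flagged, y_true) if f and y == 0)
    fn = sum(1 for f, y in zip(flagged, y_true) if not f and y == 1)
    n_flagged = tp + fp
    return {
        "flagged": n_flagged,
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "ppv": tp / n_flagged if n_flagged else float("nan"),
    }


# Made-up example: 6 patients, threshold of 0.3
print(threshold_summary([0, 0, 1, 1, 0, 1], [0.1, 0.3, 0.35, 0.6, 0.5, 0.2], 0.3))
```

Lowering the threshold flags more patients and catches more eventual non-home discharges, at the cost of reserving places that turn out not to be needed.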

Nevertheless, considering the risks for patients and the unnecessary costs involved with longer hospitalization due to delayed discharges, the use of predictive algorithms could be worth the initial effort.

Conclusion

A prediction tool based on an ML algorithm is able to predict discharge placement after surgery for lumbar spinal stenosis with both good discrimination and calibration. This methodology can be implemented for a variety of other diseases and elective treatments, which could avoid risks associated with delayed discharge and lower costs.

References

Regenbogen SE, Cain-Nielsen AH, Norton EC et al (2017) Costs and consequences of early hospital discharge after major inpatient surgery in older adults. JAMA Surg 152:e170123. https://doi.org/10.1001/jamasurg.2017.0123

Basques BA, Tetreault MW, Della Valle CJ (2017) Same-day discharge compared with inpatient hospitalization following hip and knee arthroplasty. J Bone Joint Surg Am 99:1969–1977. https://doi.org/10.2106/JBJS.16.00739

Hwabejire JO, Kaafarani HMA, Imam AM et al (2013) Excessively long hospital stays after trauma are not related to the severity of illness: let’s aim to the right target! JAMA Surg 148:956–961. https://doi.org/10.1001/jamasurg.2013.2148

Watkins JR, Soto JR, Bankhead-Kendall B et al (2014) What’s the hold up? Factors contributing to delays in discharge of trauma patients after medical clearance. Am J Surg 208:969–973. https://doi.org/10.1016/j.amjsurg.2014.07.002

Costa AP, Poss JW, Peirce T, Hirdes JP (2012) Acute care inpatients with long-term delayed discharge: evidence from a Canadian health region. BMC Health Serv Res 12:6–11. https://doi.org/10.1186/1472-6963-12-172

Smith AL, Kulhari A, Wolfram JA, Furlan A (2017) Impact of insurance precertification on discharge of stroke patients to acute rehabilitation or skilled nursing facility. J Stroke Cerebrovasc Dis 26:711–716. https://doi.org/10.1016/j.jstrokecerebrovasdis.2015.12.037

Rosman M, Rachminov O, Segal O, Segal G (2015) Prolonged patients’ In-Hospital Waiting Period after discharge eligibility is associated with increased risk of infection, morbidity and mortality: a retrospective cohort analysis. BMC Health Serv Res 15:1–5. https://doi.org/10.1186/s12913-015-0929-6

New PW, Andrianopoulos N, Cameron PA et al (2013) Reducing the length of stay for acute hospital patients needing admission into inpatient rehabilitation: a multicentre study of process barriers. Intern Med J 43:1005–1011. https://doi.org/10.1111/imj.12227

Rojas-García A, Turner S, Pizzo E et al (2018) Impact and experiences of delayed discharge: a mixed-studies systematic review. Health Expect 21:41–56. https://doi.org/10.1111/hex.12619

Härkänen M, Kervinen M, Ahonen J et al (2015) Patient-specific risk factors of adverse drug events in adult inpatients—evidence detected using the Global Trigger Tool method. J Clin Nurs 24:582–591. https://doi.org/10.1111/jocn.12714

Umarji SIM, Lankester BJA, Prothero D, Bannister GC (2006) Recovery after hip fracture. Injury 37:712–717. https://doi.org/10.1016/j.injury.2005.12.035

Gaughan J, Gravelle H, Siciliani L (2015) Testing the bed-blocking hypothesis: does nursing and care home supply reduce delayed hospital discharges? Health Econ 24:32–44. https://doi.org/10.1002/hec

Slover J, Mullaly K, Karia R et al (2017) The use of the Risk Assessment and Prediction Tool in surgical patients in a bundled payment program. Int J Surg 38:119–122. https://doi.org/10.1016/j.ijsu.2016.12.038

Kanaan SF, Yeh H-W, Waitman RL et al (2014) Predicting discharge placement and health care needs after lumbar spine laminectomy. J Allied Health 43:88–97

McGirt MJ, Parker SL, Chotai S et al (2017) Predictors of extended length of stay, discharge to inpatient rehab, and hospital readmission following elective lumbar spine surgery: introduction of the Carolina-Semmes Grading Scale. J Neurosurg Spine 27:382–390. https://doi.org/10.3171/2016.12.SPINE16928

Niedermeier S, Przybylowicz R, Virk SS et al (2017) Predictors of discharge to an inpatient rehabilitation facility after a single-level posterior spinal fusion procedure. Eur Spine J 26:771–776. https://doi.org/10.1007/s00586-016-4605-2

Weinstein JN, Tosteson TD, Lurie JD et al (2008) Surgical versus nonsurgical therapy for lumbar spinal stenosis. N Engl J Med 358:794–810. https://doi.org/10.1056/NEJMoa0707136

Weinstein JN, Tosteson TD, Lurie JD et al (2010) Surgical versus nonoperative treatment for lumbar spinal stenosis: four-year results of the Spine Patient Outcomes Research Trial. Spine 35:1329–1338. https://doi.org/10.1097/BRS.0b013e3181e0f04d

Degenhardt F, Seifert S, Szymczak S (2017) Evaluation of variable selection methods for random forests and omics data sets. Brief Bioinform. https://doi.org/10.1093/bib/bbx124

Senders JT, Staples PC, Karhade AV et al (2018) Machine learning and neurosurgical outcome prediction: a systematic review. World Neurosurg 109:476.e1–486.e1. https://doi.org/10.1016/j.wneu.2017.09.149

Alba AC, Agoritsas T, Walsh M et al (2017) Discrimination and calibration of clinical prediction models. JAMA 318:1377. https://doi.org/10.1001/jama.2017.12126

Steyerberg EW, Vickers AJ, Cook NR et al (2010) Assessing the performance of prediction models: a framework for some traditional and novel measures. Epidemiology 21:128–138. https://doi.org/10.1097/EDE.0b013e3181c30fb2

Cook NR (2007) Use and misuse of the receiver operating characteristic curve in risk prediction. Circulation 115:928–935. https://doi.org/10.1161/CIRCULATIONAHA.106.672402

Rolston JD, Han SJ, Chang EF (2017) Systemic inaccuracies in the National Surgical Quality Improvement Program database: implications for accuracy and validity for neurosurgery outcomes research. J Clin Neurosci 37:44–47. https://doi.org/10.1016/j.jocn.2016.10.045

Mancuso CA, Duculan R, Craig CM, Girardi FP (2018) Psychosocial variables contribute to length of stay and discharge destination after lumbar surgery independent of demographic and clinical variables. Spine 43:281–286. https://doi.org/10.1097/BRS.0000000000002312

Best MJ, Buller LT, Falakassa J, Vecchione D (2015) Risk factors for nonroutine discharge in patients undergoing spinal fusion for intervertebral disc disorders. Iowa Orthop J 35:147–155

Abt NB, McCutcheon BA, Kerezoudis P et al (2017) Discharge to a rehabilitation facility is associated with decreased 30-day readmission in elective spinal surgery. J Clin Neurosci 36:37–42. https://doi.org/10.1016/j.jocn.2016.10.029

Murphy ME, Gilder H, Maloney PR et al (2017) Lumbar decompression in the elderly: increased age as a risk factor for complications and nonhome discharge. J Neurosurg Spine 26:353–362. https://doi.org/10.3171/2016.8.SPINE16616

Deyo RA, Hickam D, Duckart JP, Piedra M (2013) Complications after surgery for lumbar stenosis in a veteran population. Spine 38:1695–1702. https://doi.org/10.1097/BRS.0b013e31829f65c1

Schoenfeld AJ, Carey PA, Cleveland AW et al (2013) Patient factors, comorbidities, and surgical characteristics that increase mortality and complication risk after spinal arthrodesis: a prognostic study based on 5,887 patients. Spine J 13:1171–1179. https://doi.org/10.1016/j.spinee.2013.02.071

Veeravagu A, Patil CG, Lad SP, Boakye M (2009) Risk factors for postoperative spinal wound infections after spinal decompression and fusion surgeries. Spine 34:1869–1872. https://doi.org/10.1097/BRS.0b013e3181adc989

Lakomkin N, Goz V, Cheng JS et al (2018) The utility of preoperative laboratories in predicting postoperative complications following posterolateral lumbar fusion. Spine J 18:993–997. https://doi.org/10.1016/j.spinee.2017.10.010

Hauck K, Zhao X (2011) How dangerous is a day in hospital? Med Care 49:1068–1075. https://doi.org/10.1097/MLR.0b013e31822efb09

Young J, Green J (2010) Effects of delays in transfer on independence outcomes for older people requiring postacute care in community hospitals in England. J Clin Gerontol Geriatr 1:48–52. https://doi.org/10.1016/j.jcgg.2010.10.009

Sirois MJ, Lavoie A, Dionne CE (2004) Impact of transfer delays to rehabilitation in patients with severe trauma. Arch Phys Med Rehabil 85:184–191. https://doi.org/10.1016/j.apmr.2003.06.009

Kondo A, Zierler BK, Isokawa Y et al (2010) Comparison of lengths of hospital stay after surgery and mortality in elderly hip fracture patients between Japan and the United States—the relationship between the lengths of hospital stay after surgery and mortality. Disabil Rehabil 32:826–835. https://doi.org/10.3109/09638280903314051

Nikkel LE, Kates SL, Schreck M et al (2015) Length of hospital stay after hip fracture and risk of early mortality after discharge in New York state: retrospective cohort study. BMJ 351:1–10. https://doi.org/10.1136/bmj.h6246

Ribbe MW, Ljunggren G, Steel K et al (1997) Nursing homes in 10 nations: a comparison between countries and settings. Age Ageing 26:3–12. https://doi.org/10.1093/ageing/26.1.3

Beaupre LA, Wai EK, Hoover DR et al (2018) A comparison of outcomes between Canada and the United States in patients recovering from hip fracture repair: secondary analysis of the FOCUS trial. Int J Qual Health Care 30:97–103. https://doi.org/10.1093/intqhc/mzx199

Bryan K (2010) Policies for reducing delayed discharge from hospital. Br Med Bull 95:33–46. https://doi.org/10.1093/bmb/ldq020

Ou L, Chen J, Young L et al (2011) Effective discharge planning—timely assignment of an estimated date of discharge. Aust Health Rev 35:357. https://doi.org/10.1071/AH09843

McCoy D, Godden S, Pollock AM, Bianchessi C (2007) Carrot and sticks? The Community Care Act (2003) and the effect of financial incentives on delays in discharge from hospitals in England. J Public Health 29:281–287. https://doi.org/10.1093/pubmed/fdm026

Styrborn K, Thorslund M (1993) “Bed-blockers”: delayed discharge of hospital patients in a nationwide perspective in Sweden. Health Policy 26:155–170. https://doi.org/10.1016/0168-8510(93)90116-7

Zychlinski N (2017) Time-varying fluid networks with blocking: models supporting patient flow analysis in hospitals. Doctoral dissertation, Israel Institute of Technology

Acknowledgements

The American College of Surgeons National Surgical Quality Improvement Program and the hospitals participating in the ACS-NSQIP are the source of the data used herein; they have not verified and are not responsible for the statistical validity of the data analysis or the conclusions derived by the authors.

Ethics declarations

Conflict of interest

The authors have nothing to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Appendix 1

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ogink, P.T., Karhade, A.V., Thio, Q.C.B.S. et al. Predicting discharge placement after elective surgery for lumbar spinal stenosis using machine learning methods. Eur Spine J 28, 1433–1440 (2019). https://doi.org/10.1007/s00586-019-05928-z

DOI: https://doi.org/10.1007/s00586-019-05928-z