Abstract

In this contribution, we extend the principle of integer bootstrapping (IB) to a vectorial form (VIB). The mathematical definition of the class of VIB-estimators is introduced together with their pull-in regions and other properties such as probability bounds and success rate approximations. The vectorial formulation allows sequential block-by-block processing of the ambiguities based on a user-chosen partitioning. In this way, flexibility is created, where for specific choices of partitioning, tailored VIB-estimators can be designed. This wide range of possibilities is discussed, supported by numerical simulations and analytical examples. Further guidelines are provided, as well as the possible extension to other classes of estimators.


1 Introduction

Global navigation satellite system (GNSS) ambiguity resolution is the process of resolving the unknown integer ambiguities of the observed carrier phases. Once they are successfully resolved, advantage can be taken of the millimeter-level precision of the carrier-phase measurements, thereby de facto turning them into ultra-precise pseudo-ranges. The practical importance of this becomes clear when considering the great variety of current and future GNSS models to which integer ambiguity resolution applies. A comprehensive overview of these GNSS models, together with their applications in surveying, navigation, geodesy and geophysics, can be found in textbooks such as (Strang and Borre 1997; Teunissen and Kleusberg 1998; Kaplan and Hegarty 2006; Misra and Enge 2006; Hofmann-Wellenhof et al. 2008; Borre and Strang 2012; Leick et al. 2015; Teunissen and Montenbruck 2017; Morton et al. 2021).

In the current theory of integer ambiguity resolution, one can discriminate between three different classes of estimators: the class of integer (\(\mathrm{I}\)) estimators (Teunissen 1999), the class of integer-aperture (\(\mathrm{IA}\)) estimators (Teunissen 2003a) and the class of integer-equivariant (\(\mathrm{IE}\)) estimators (Teunissen 2003b). The classes are subsets of one another, with the \(\mathrm{I}\)-class being the smallest and the \(\mathrm{IE}\)-class the largest: \(\mathrm{I} \subset \mathrm{IA} \subset \mathrm{IE}\). Members from all three classes have found their application in a wide range of different GNSS models, see, e.g., (Brack et al. 2014; Hou et al. 2020; Odolinski and Teunissen 2020; Psychas and Verhagen 2020; Verhagen 2005; Wang et al. 2018; Zaminpardaz et al. 2018). In this contribution, we restrict attention to the \(\mathrm{I}\)-class.

Popular estimators in the \(\mathrm{I}\)-class are integer rounding (\(\mathrm{IR}\)), integer bootstrapping (\(\mathrm{IB}\)) and integer least-squares (\(\mathrm{ILS}\)). Integer rounding is the simplest, but has the poorest success rate performance, while integer least-squares has the best performance, but is computationally the most complex (Teunissen 1998, 1999). In terms of their success rate performance, the three integer estimators can thus be ordered as follows

$$\begin{aligned} {\mathsf {P}}(\check{a}_{\mathrm{IR}}=a) \le {\mathsf {P}}(\check{a}_{\mathrm{IB}}=a) \le {\mathsf {P}}(\check{a}_{\mathrm{ILS}}=a) \end{aligned}$$(1)

where the equality holds if the ambiguities are perfectly decorrelated.

The \(\mathrm{IB}\)-estimator has the attractive property that it is simple to compute and that it can have a close to optimal success rate performance when used with a properly chosen ambiguity parametrization. The estimator is characterized by two operations that are alternately applied: a sequential conditional least-squares estimation and an integer mapping. With the \(\mathrm{IB}\) these are both applied at the scalar level. This is, however, not a necessity for the two principles to be applicable. In this contribution, we will generalize the scalar concept of integer bootstrapping to a vectorial form in which both the sequential conditional estimation and the integer mapping are vectorial. We will develop the associated theory and show that with the concept of vectorial integer bootstrapping (VIB) great flexibility is created for designing one’s integer estimators when balancing computational simplicity against success rate performance.

This contribution is organized as follows. In Sect. 2, we briefly review integer ambiguity resolution with a special emphasis on integer bootstrapping and its various properties. The concept of vectorial integer bootstrapping (VIB) is introduced in Sect. 3. Two different descriptions of the class of VIB-estimators are given, one that follows naturally from the definition of scalar integer bootstrapping and another that is more suitable to characterize its pull-in regions. In Sect. 4, we develop probabilistic properties of the VIB-estimators, with a special emphasis on their probability of correct integer estimation, the ambiguity success rate, together with its easy-to-compute lower bounds and upper bounds. In this section we also provide a generalized version of the performance I-ordering defined in (1). It includes newly defined VIB-estimators and it shows the great flexibility one has in working with vectorial integer bootstrapping, which we also demonstrate through numerical examples. In Sect. 5, we provide further considerations for VIB-usage, including the choice of ambiguity parametrization, efficiency enhancing options when solving the normal equations and the different ways in which the VIB concept can be extended to other classes of estimators. Finally a summary with concluding remarks is provided in Sect. 6.

The following notation is used throughout: \({\mathsf {E}}(\cdot )\) denotes the expectation operator, \({\mathsf {D}}(\cdot )\) the dispersion operator, \({\mathsf {P}}( \cdot )\) probability of an event, \(||\cdot ||_{Q}^{2}=(\cdot )^{T}Q^{-1}(\cdot )\) the square-weighted-norm in the metric of Q, and \({\mathcal {I}}: {\mathbb {R}}^{n} \mapsto {\mathbb {Z}}^{n}\) an admissible integer map.

2 Mixed-integer model estimation

In this section, a brief review is given of mixed-integer model estimation with an emphasis on the method of integer bootstrapping.

2.1 Mixed-integer model and ambiguity resolution

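The basis of GNSS integer ambiguity resolution is formed by the mixed-integer model, given as

$$\begin{aligned} {\mathsf {E}}(y)=Aa+Bb, \quad {\mathsf {D}}(y)=Q_{yy}, \quad a \in {\mathbb {Z}}^{n}, \; b \in {\mathbb {R}}^{p} \end{aligned}$$(2)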

with \(y \in {\mathbb {R}}^{m}\) the vector of observables containing the pseudo-ranges and carrier-phases, \((A,B) \in {\mathbb {R}}^{m \times (n+p)}\) the full-rank design matrix, \(a \in {\mathbb {Z}}^{n}\) the vector of unknown integer ambiguities, \(b \in {\mathbb {R}}^{p}\) the vector of real-valued unknown parameters (e.g. baseline components and atmospheric delays), and \(Q_{yy}\) the positive-definite variance-covariance (vc) matrix of the observables.

In the following, we will refer to b simply as the baseline vector. The observables of the mixed-integer model are assumed to be multivariate normally distributed, as is common in many GNSS applications (Leick et al. 2015; Teunissen and Montenbruck 2017; Morton et al. 2021). The solution to (2) is obtained through the following three steps:

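Step 1: the model is solved by means of least-squares (LS) estimation whereby the integerness of the ambiguities is discarded. This gives the so-called float solution, together with its vc-matrix, expressed by

$$\begin{aligned} \begin{bmatrix} {\hat{a}} \\ {\hat{b}} \end{bmatrix} \sim {\mathcal {N}}\left( \begin{bmatrix} a \\ b \end{bmatrix}, \begin{bmatrix} Q_{{\hat{a}}{\hat{a}}} &amp; Q_{{\hat{a}}{\hat{b}}} \\ Q_{{\hat{b}}{\hat{a}}} &amp; Q_{{\hat{b}}{\hat{b}}} \end{bmatrix} \right) \end{aligned}$$(3)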

Step 2: an admissible integer map \({\mathcal {I}}: {\mathbb {R}}^{n} \mapsto {\mathbb {Z}}^{n}\) is chosen to compute the integer ambiguity vector as

$$\begin{aligned} \check{a}={\mathcal {I}}({\hat{a}}) \end{aligned}$$(4)

The integer map is admissible when its pull-in regions \({\mathcal {P}}_{z} = \{ x \in {\mathbb {R}}^{n}|\; {\mathcal {I}}(x)=z \}\), \(z \in {\mathbb {Z}}^{n}\), cover \({\mathbb {R}}^{n}\), while being disjoint and integer translational invariant. It follows that those regions leave no gaps, have no overlaps, and obey an integer remove-restore principle (Teunissen 1999).

Some popular choices for \({\mathcal {I}}\) are integer rounding (IR), integer bootstrapping (IB), or integer least-squares (ILS). To enhance the probabilistic and/or numerical performance of ambiguity resolution, the integer map is often preceded by the decorrelating Z-transformation \({\hat{z}}=Z^{T}{\hat{a}}\) of the LAMBDA method (Teunissen 1995), in which case the integer estimate of a is computed as

$$\begin{aligned} \check{a}=Z^{-T}{\mathcal {I}}(Z^{T}{\hat{a}}) \end{aligned}$$(5)

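Step 3: after \(\check{a}\in {\mathbb {Z}}^{n}\) has been validated, the ambiguity-resolved or fixed baseline solution can be given as

$$\begin{aligned} \check{b}={\hat{b}}-Q_{{\hat{b}}{\hat{a}}}Q_{{\hat{a}}{\hat{a}}}^{-1}({\hat{a}}-\check{a}) \end{aligned}$$(6)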

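Its vc-matrix, in case the uncertainty of \(\check{a}\) may be neglected, is given as

$$\begin{aligned} Q_{\check{b}\check{b}}=Q_{{\hat{b}}{\hat{b}}}-Q_{{\hat{b}}{\hat{a}}}Q_{{\hat{a}}{\hat{a}}}^{-1}Q_{{\hat{a}}{\hat{b}}} \end{aligned}$$(7)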

which shows by how much the precision of \({\hat{b}}\) will be improved as a consequence of imposing the integer ambiguity constraint \(a \in {\mathbb {Z}}^{n}\).

2.2 Integer bootstrapping

The IB-estimator is one of the most popular integer ambiguity estimators. Its popularity stems from the ease with which it can be computed and from its close to optimal performance once the ambiguities are sufficiently decorrelated. Following (Teunissen 1998), the IB-estimator is defined as follows.

Definition 1

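(Scalar integer bootstrapping). Let \({\hat{a}}=({\hat{a}}_{1}, \ldots , {\hat{a}}_{n})^{T} \in {\mathbb {R}}^{n}\) be the float solution and let \(\check{a}_{\mathrm{IB}}=(\check{a}_{1}, \ldots , \check{a}_{n})^{T} \in {\mathbb {Z}}^{n}\) denote the corresponding integer bootstrapped (IB) solution. Then

$$\begin{aligned} \check{a}_{i}=\lceil {\hat{a}}_{i \mid I} \rfloor = \Big \lceil {\hat{a}}_{i}-\sum _{j=1}^{i-1} \sigma _{i,j \mid J}\, \sigma _{j \mid J}^{-2} ({\hat{a}}_{j \mid J}-\check{a}_{j}) \Big \rfloor , \quad i=1, \ldots , n \end{aligned}$$(8)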

where \(\lceil \cdot \rfloor \) denotes integer rounding and \({\hat{a}}_{i|I}\) is the least-squares estimator of \(a_{i}\) conditioned on the values of the previous \(I=\{1, \ldots , (i-1) \}\) sequentially rounded components, \(\sigma _{i, j \mid J}\) is the covariance between \({\hat{a}}_{i}\) and \({\hat{a}}_{j \mid J}\), and \(\sigma _{j \mid J}^{2}\) is the variance of \({\hat{a}}_{j \mid J}\). For \(i=1\), \({\hat{a}}_{i \mid I}={\hat{a}}_{1}\). \(\square \)

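The previous definition shows that the IB-estimator is driven by products of ambiguity conditional covariances and inverse conditional variances, \(\sigma _{i,j\mid J}\cdot \sigma _{j\mid J}^{-2}\). As shown in (Teunissen 2007), these are readily available when one works with the triangular decomposition of the ambiguity vc-matrix, \(Q_{{\hat{a}}{\hat{a}}}=LDL^{T}\), thus making the computation of the IB-estimator particularly easy. The matrix L is lower unitriangular, so its entries are defined as

$$\begin{aligned} (L)_{ij}=\left\{ \begin{array}{ll} 0 &amp; \text {for } 1 \le i&lt; j \le n \\ 1 &amp; \text {for } i=j \\ \sigma _{i,j \mid J}\, \sigma _{j \mid J}^{-2} &amp; \text {for } 1 \le j &lt; i \le n \end{array} \right. \end{aligned}$$(9)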

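while we have

$$\begin{aligned} D=\mathrm{diag}(\sigma _{1}^{2}, \sigma _{2 \mid 1}^{2}, \ldots , \sigma _{n \mid N}^{2}), \quad N=\{1, \ldots , n-1\} \end{aligned}$$(10)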

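Another attractive feature of the IB-estimator is that its probability of correct integer estimation, or success rate, can be easily computed. Its analytical expression is given by Teunissen (1998) as follows

$$\begin{aligned} {\mathsf {P}}(\check{a}_{\mathrm{IB}}=a)=\prod _{i=1}^{n} \left[ 2 \varPhi \left( \frac{1}{2 \sigma _{i \mid I}} \right) -1 \right] \end{aligned}$$(11)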

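with \(\varPhi (\cdot )\), the cumulative distribution function of the standard normal distribution, being

$$\begin{aligned} \varPhi (x)=\int _{-\infty }^{x} \frac{1}{\sqrt{2 \pi }} \exp \left( -\tfrac{1}{2} v^{2} \right) \mathrm{d}v \end{aligned}$$(12)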

Note that while the entries of L in \(Q_{{\hat{a}}{\hat{a}}}=LDL^{T}\) drive the IB-estimator, the entries of D are the ones that determine its success rate. Moreover, the IB-estimator is not the best estimator within the class of I-estimators. Teunissen (1999) proved that of all admissible integer estimators, the ILS-estimator

$$\begin{aligned} \check{a}_{\mathrm{ILS}}=\arg \min \limits _{z \in {\mathbb {Z}}^{n}}||{\hat{a}}-z||_{Q_{{\hat{a}}{\hat{a}}}}^{2} \end{aligned}$$(13)

is best in the sense that it has the largest possible success rate. The price one pays for this optimality is that, in contrast to the easy-to-compute IB-estimator, the computation of (13) is based on a more elaborate integer search (Teunissen 1995).

Although IB is not best in the class of integer estimators, it is best in a smaller class, namely the class of sequential integer estimators. This class was introduced in (Teunissen 2007) as any \({\mathcal {I}}: {\mathbb {R}}^{n} \mapsto {\mathbb {Z}}^{n}\) for which

$$\begin{aligned} {\mathcal {I}}(x)=[\, x+(R-I_{n})(x-{\mathcal {I}}(x)) \,] \end{aligned}$$(14)

where \([\cdot ]\) denotes component-wise integer rounding of its vectorial entry and R is an arbitrary unit lower triangular matrix. Note that both IR and IB belong to this class. IR is obtained with the choice \(R=I_{n}\) and IB with the choice \(R=L^{-1}\). In (Teunissen 2007) it is shown that of all sequential integer estimators, IB has the largest success rate. Hence, for the success rate of the three popular integer estimators, we have \({\mathsf {P}}(\check{a}_{\mathrm{IR}}=a) \le {\mathsf {P}}(\check{a}_{\mathrm{IB}}=a) \le {\mathsf {P}}(\check{a}_{\mathrm{ILS}}=a)\). Thus integer rounding has the poorest performance and integer least-squares the best. In the following sections we will extend this ordering by including the success rate performance of a vectorial formulation of integer bootstrapping.
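The computations reviewed in this section can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `ldl`, `bootstrap`, `phi` and `ib_success_rate`, the 2x2 vc-matrix and the float vector are all hypothetical choices made for the example. The sketch factors \(Q_{{\hat{a}}{\hat{a}}}=LDL^{T}\), applies the sequential conditional rounding of Definition 1, and evaluates the product formula for the IB success rate.

```python
import math

def ldl(Q):
    """Factor symmetric positive-definite Q as L diag(d) L^T, L unit lower triangular."""
    n = len(Q)
    L = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    d = [0.0] * n
    for j in range(n):
        # d[j] is the variance of ambiguity j conditioned on ambiguities 0..j-1
        d[j] = Q[j][j] - sum(L[j][k] ** 2 * d[k] for k in range(j))
        for i in range(j + 1, n):
            s = sum(L[i][k] * L[j][k] * d[k] for k in range(j))
            L[i][j] = (Q[i][j] - s) / d[j]
    return L, d

def bootstrap(a_hat, L):
    """Scalar integer bootstrapping: round each conditional estimate in turn."""
    n = len(a_hat)
    a_fix = [0] * n
    resid = [0.0] * n  # conditional float estimate minus its rounded value
    for i in range(n):
        cond = a_hat[i] - sum(L[i][j] * resid[j] for j in range(i))
        a_fix[i] = round(cond)  # note: Python rounds exact .5 ties to even
        resid[i] = cond - a_fix[i]
    return a_fix

def phi(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def ib_success_rate(d):
    """Product of 2*Phi(1/(2*sigma)) - 1 over the conditional standard deviations."""
    p = 1.0
    for var in d:
        p *= 2.0 * phi(0.5 / math.sqrt(var)) - 1.0
    return p

# Hypothetical 2x2 vc-matrix (cycles^2) and float vector, for illustration only.
Q = [[0.09, 0.03], [0.03, 0.05]]
L, d = ldl(Q)
a_fixed = bootstrap([1.4, -0.4], L)
p_ib = ib_success_rate(d)
```

For this hypothetical input, plain rounding of the float vector would give (1, 0), whereas bootstrapping first corrects the second ambiguity for the rounding residual of the first and therefore arrives at (1, -1).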

3 Vectorial integer bootstrapping

In this section, we introduce the concept of vectorial integer bootstrapping (VIB) together with a description of its pull-in regions that are illustrated by means of a few three-dimensional examples.

3.1 The VIB-estimator

The IB-estimator is characterized by two elements that are alternately applied, the sequential conditional estimation and the integer mapping. With the IB-estimator they are both applied at the scalar level. This is however not a necessity for the two applied principles. Hence, we may generalize the scalar integer bootstrapping to a form in which both the sequential conditional estimation and the integer mapping are vectorial. As a result we have the following definition of vectorial integer bootstrapping.

Definition 2

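(Vectorial integer bootstrapping) Let \({\hat{a}}=({\hat{a}}_{1}^{T}, \ldots , {\hat{a}}_{v}^{T})^{T} \in {\mathbb {R}}^{n}\) be the float ambiguity solution, with \({\hat{a}}_{i} \in {\mathbb {R}}^{n_{i}}\), \(i=1, \ldots , v\) and \(n=\sum _{i=1}^{v} n_{i}\), and let \(\check{a}_{\mathrm{VIB}}=(\check{a}_{1}^{T}, \ldots , \check{a}_{v}^{T})^{T} \in {\mathbb {Z}}^{n}\) denote the corresponding vectorial integer bootstrapped solution. Then

$$\begin{aligned} \check{a}_{i}=\lceil {\hat{a}}_{i \mid I} \rfloor _{i} = \Big \lceil {\hat{a}}_{i}-\sum _{j=1}^{i-1} Q_{ij \mid J}\, Q_{jj \mid J}^{-1} ({\hat{a}}_{j \mid J}-\check{a}_{j}) \Big \rfloor _{i}, \quad i=1, \ldots , v \end{aligned}$$(15)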

where \(\lceil \cdot \rfloor _{i} : {\mathbb {R}}^{n_{i}}\mapsto {\mathbb {Z}}^{n_{i}}\) is a still to be chosen admissible integer mapping, and \({\hat{a}}_{i|I}\) is the least-squares estimator of \(a_{i}\) conditioned on the values of the previous \(I=\{1, \ldots , (i-1) \}\) sequentially integer estimated vectors, \(Q_{ij \mid J}\) is the covariance matrix of \({\hat{a}}_{i}\) and \({\hat{a}}_{j \mid J}\), and \(Q_{jj \mid J}\) is the variance matrix of \({\hat{a}}_{j \mid J}\). For \(i=1\), \({\hat{a}}_{i \mid I}={\hat{a}}_{1}\). \(\square \)

Note that each of the v integer maps, \(\lceil . \rfloor _{i}: {\mathbb {R}}^{n_{i}}\mapsto {\mathbb {Z}}^{n_{i}}\), in (15), can still be chosen freely. As an example consider the case where \({\hat{a}}\) is partitioned in two parts, \({\hat{a}}=({\hat{a}}_{1}^{T}, {\hat{a}}_{2}^{T})^{T}\). Then \(v=2\) and the conditional estimator of \(a_{2}\), when conditioned on \(a_{1}\), is given as

$$\begin{aligned} {\hat{a}}_{2 \mid 1}={\hat{a}}_{2}-Q_{21}Q_{11}^{-1}({\hat{a}}_{1}-a_{1}) \end{aligned}$$(16)

having as vc-matrix

$$\begin{aligned} Q_{22 \mid 1}=Q_{22}-Q_{21}Q_{11}^{-1}Q_{12} \end{aligned}$$(17)

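If we now choose both \(\lceil \cdot \rfloor _{1}\) and \(\lceil \cdot \rfloor _{2}\) as ILS-maps, the corresponding \(\mathrm{VIB}_{\mathrm{ILS}}\) solution follows as

$$\begin{aligned} \check{a}_{1}&amp;=\arg \min \limits _{z_{1} \in {\mathbb {Z}}^{n_{1}}}||{\hat{a}}_{1}-z_{1}||_{Q_{11}}^{2} \\ \check{a}_{2}&amp;=\arg \min \limits _{z_{2} \in {\mathbb {Z}}^{n_{2}}}||{\hat{a}}_{2 \mid 1}(\check{a}_{1})-z_{2}||_{Q_{22 \mid 1}}^{2} \end{aligned}$$(18)

with \({\hat{a}}_{2 \mid 1}(\check{a}_{1})\) the estimator of \(a_{2}\) conditioned on \(a_{1}=\check{a}_{1}\).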

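Thus now two ILS-problems need to be solved, but both at a lower dimension than that of the original full ILS-problem, which was defined as

$$\begin{aligned} \check{a}_{\mathrm{ILS}}=\arg \min \limits _{z \in {\mathbb {Z}}^{n}}||{\hat{a}}-z||_{Q_{{\hat{a}}{\hat{a}}}}^{2} \end{aligned}$$(19)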

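The two solutions, (18) and (19), can be compared if we make use of the following orthogonal decomposition (Teunissen 1995),

$$\begin{aligned} ||{\hat{a}}-z||_{Q_{{\hat{a}}{\hat{a}}}}^{2}=||{\hat{a}}_{1}-z_{1}||_{Q_{11}}^{2}+||{\hat{a}}_{2 \mid 1}(z_{1})-z_{2}||_{Q_{22 \mid 1}}^{2} \end{aligned}$$(20)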

This shows that instead of minimizing the sum of quadratic forms, as done with (19), the solution in (18) is obtained by minimizing the two quadratic forms separately. The integer-valued vector \(z_1\) in (20) is obtained during the first minimization.

In general, the integer mapping in each block can be chosen as IR, IB or ILS, and the choice may differ from block to block. However, choosing IB for a certain \(n_i\)-dimensional block is equivalent to a further partitioning of that block into \(n_i\) scalar blocks. It follows that adopting IB in each block, i.e., applying the \(\mathrm{VIB}_{\mathrm{IB}}\) estimator, leads to exactly the same formulation as (scalar) IB, and hence this case is not analyzed separately.
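The block-by-block processing of Definition 2 can be sketched as follows. This is a hedged illustration, not the authors' code: the names `vib`, `forward_solve_unit` and `block_map`, the matrix `L_demo` and the float vector are hypothetical. The sketch relies on the fact that, for \(Q_{{\hat{a}}{\hat{a}}}=LDL^{T}\) partitioned conformally with the blocks, \(Q_{ij \mid J}Q_{jj \mid J}^{-1}=L_{ij}L_{jj}^{-1}\), so the conditional update only needs a forward substitution with the diagonal block of L. The default block map is component-wise rounding, which gives \(\mathrm{VIB}_{\mathrm{IR}}\); any other admissible map (e.g. an ILS search) could be passed in instead.

```python
def forward_solve_unit(Lb, r):
    """Solve Lb u = r for a unit lower-triangular block Lb."""
    u = []
    for i in range(len(r)):
        u.append(r[i] - sum(Lb[i][k] * u[k] for k in range(i)))
    return u

def vib(a_hat, L, sizes, block_map=lambda x: [round(v) for v in x]):
    """Vectorial integer bootstrapping for block sizes `sizes` (summing to n).

    L is the unit lower-triangular factor of the float vc-matrix Q = L D L^T.
    block_map maps a float block to an integer block (default: rounding).
    """
    x = list(a_hat)  # holds the conditional float estimates as blocks get fixed
    n = len(x)
    fixed = []
    start = 0
    for size in sizes:
        end = start + size
        z = block_map(x[start:end])                   # integer map for this block
        fixed.extend(z)
        r = [x[start + k] - z[k] for k in range(size)]
        Lii = [row[start:end] for row in L[start:end]]
        u = forward_solve_unit(Lii, r)                # u = L_ii^{-1} (x_i - z_i)
        for row in range(end, n):                     # condition remaining blocks
            x[row] -= sum(L[row][start + k] * u[k] for k in range(size))
        start = end
    return fixed

# Hypothetical unit lower-triangular factor and float vector, for illustration.
L_demo = [[1.0, 0.0, 0.0],
          [0.5, 1.0, 0.0],
          [0.25, 0.4, 1.0]]
a_demo = [1.4, -0.4, 2.3]

fix_one_block = vib(a_demo, L_demo, [3])        # v = 1: plain rounding
fix_scalar = vib(a_demo, L_demo, [1, 1, 1])     # v = n: scalar bootstrapping
fix_two_blocks = vib(a_demo, L_demo, [2, 1])    # (a1, a2) -> (a3) with rounding
```

With a single block the sketch reduces to integer rounding, and with n scalar blocks it reproduces scalar integer bootstrapping, in line with the remark above that adopting IB in each block brings nothing new.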

3.2 The VIB pull-in regions

In our VIB-definition given in (15) we have described the components of the VIB-estimator using the analogy with its scalar variant in (8). For the purpose of describing the pull-in regions of the VIB-estimator, we now provide its vectorial form thereby drawing on the analogy with (14).

Lemma 1

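(VIB-estimator) Let \({\mathcal {I}}_{\mathrm{VIB}}: {\mathbb {R}}^{n} \mapsto {\mathbb {Z}}^{n}\) be the VIB-defining admissible integer map. Then we have \(\check{a}_{\mathrm{VIB}} = {\mathcal {I}}_{\mathrm{VIB}}({\hat{a}})\), with

$$\begin{aligned} {\mathcal {I}}_{\mathrm{VIB}}(x)={\mathcal {I}}\big ( x+({\mathcal {L}}^{-1}-I_{n})(x-{\mathcal {I}}_{\mathrm{VIB}}(x)) \big ) \end{aligned}$$(21)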

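where \({\mathcal {I}}: {\mathbb {R}}^{n} \mapsto {\mathbb {Z}}^{n}\) is given as \({\mathcal {I}}(x)=(\lceil x_{1}\rfloor _{1}^{T}, \ldots , \lceil x_{v}\rfloor _{v}^{T})^{T}\) for \(x=(x_{1}^{T}, \ldots , x_{v}^{T})^{T}\). The matrix \({\mathcal {L}}\in {\mathbb {R}}^{n\times n}\) is lower block-triangular, with blocks

$$\begin{aligned} {\mathcal {L}}_{ij}=\left\{ \begin{array}{ll} 0 &amp; \text {for } 1 \le i&lt; j \le v \\ I_{n_{i}} &amp; \text {for } i=j \\ Q_{ij \mid J}\, Q_{jj \mid J}^{-1} &amp; \text {for } 1 \le j &lt; i \le v \end{array} \right. \end{aligned}$$(22)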

Proof

The proof follows directly by substitution thereby using the lower block-triangularity of \({\mathcal {L}}\) in (21). \(\square \)

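The pull-in region \({\mathcal {P}}_{z}\) of an integer estimator is defined as the region in which all float solutions are mapped to the same integer \(z \in {\mathbb {Z}}^{n}\) by the integer estimator. Hence, for the VIB-estimator, it is defined as

$$\begin{aligned} {\mathcal {P}}_{z}=\{ x \in {\mathbb {R}}^{n}\; | \; {\mathcal {I}}_{\mathrm{VIB}}(x)=z \}, \quad z \in {\mathbb {Z}}^{n} \end{aligned}$$(23)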

The following Lemma shows how the pull-in regions are indeed driven by the VIB characterizing integer maps \(\lceil . \rfloor _{i}: {\mathbb {R}}^{n_{i}} \mapsto {\mathbb {Z}}^{n_{i}}\) and sequential conditional estimation.

Lemma 2

(VIB pull-in region) The pull-in regions of \(\check{a}_{\mathrm{VIB}}={\mathcal {I}}_{\mathrm{VIB}}({\hat{a}})\) are given as

where \({\mathcal {I}}(x)=(\lceil x_{1}\rfloor _{1}^{T}, \ldots , \lceil x_{v}\rfloor _{v}^{T})^{T}\) and \({\mathcal {L}}\) is the lower block-triangular matrix given in (22).

Proof

Starting with (21), we have

from which, using (23), the result follows. \(\square \)

To gain further insights into the geometries of the VIB pull-in regions, in particular, under different choices for the integer maps \(\lceil . \rfloor _{i}: {\mathbb {R}}^{n_{i}} \mapsto {\mathbb {Z}}^{n_{i}}\), we provide a few graphical representations.

3.3 Graphics of VIB pull-in regions

We consider a three-dimensional float ambiguity vector \({\hat{a}}=({\hat{a}}_1,{\hat{a}}_2,{\hat{a}}_3)^{T}\in {\mathbb {R}}^{3}\), having as vc-matrix

Its lower unitriangular matrix L and the diagonal matrix D from \(Q_{{\hat{a}}{\hat{a}}}=LDL^T\) are given, respectively, as

The pull-in regions of the traditional integer estimators IR, IB and ILS are first considered. They are shown in Fig. 1 as a cube, parallelepiped, and parallelohedron, respectively. Their projections on the three mutually orthogonal coordinate planes are also shown. Note that generally it is wise to start with the most precise ambiguities in the IB, in this case \({\hat{a}}_{1}\).

For the three-dimensional VIB-estimators, two different ambiguity-partitionings can be considered, depending on whether \(\lceil \cdot \rfloor _{1}\) is a 1-dimensional or a 2-dimensional map. In Fig. 2, \(\lceil \cdot \rfloor _{1}\) is 2-dimensional, for which either IR (Fig. 2, left) or ILS (Fig. 2, right) is chosen. The third ambiguity is then conditionally updated and rounded to its nearest integer. For the alternative case that \(\lceil \cdot \rfloor _{1}\) is 1-dimensional, one starts with ordinary rounding, but then has different choices for the second step. In Fig. 3, the second step, where conditioning has taken place on the first ambiguity, is based on IR (Fig. 3, left) and ILS (Fig. 3, right).

As visible from these examples, different VIB-estimators have different geometries for their pull-in regions. Hence, their pull-in regions will have different fits to the float-ambiguity’s confidence region and therefore also different success rates.

Fig. 2 The 3D pull-in regions are given for the \(\mathrm{VIB}_{\mathrm{IR}}\) \([(a_1,a_2)\rightarrow (a_3)]\) estimator (left) and for the \(\mathrm{VIB}_{\mathrm{ILS}}\) \([(a_1,a_2)\rightarrow (a_3)]\) estimator (right). The arrow refers to a conditioning of the last component on the first two ambiguities

Fig. 3 The 3D pull-in regions are given for the \(\mathrm{VIB}_{\mathrm{IR}}\) \([(a_1)\rightarrow (a_2,a_3)]\) estimator (left) and for the \(\mathrm{VIB}_{\mathrm{ILS}}\) \([(a_1)\rightarrow (a_2,a_3)]\) estimator (right). The arrow refers to a conditioning of the last two components on the first ambiguity

4 VIB probability of correct integer estimation

In this section, we study the success rate of VIB-estimators, along with bounds and possible approximations.

4.1 The VIB success rate

As the success rate of an admissible integer estimator of \(a \in {\mathbb {Z}}^{n}\) is given by the amount of probability mass its a-centered pull-in region covers of the probability density function (PDF) of \({\hat{a}}\), \(f_{{\hat{a}}}(x)\), the VIB success rate is given by the integral

$$\begin{aligned} {\mathsf {P}}(\check{a}_{\mathrm{VIB}}=a)=\int _{{\mathcal {P}}_{a}} f_{{\hat{a}}}(x)\, \mathrm{d}x \end{aligned}$$(29)

The following lemma shows how this success rate can be computed from a product of probabilities.

Lemma 3

(VIB success rate) Let \({\hat{a}} \sim {\mathcal {N}}(a, Q_{{\hat{a}}{\hat{a}}})\) and let \(\check{a}_{\mathrm{VIB}}\) be the VIB-estimator of \(a\in {\mathbb {Z}}^{n}\), as expressed in (15). Then

with

and \({\mathcal {P}}_{i} = \{ x \in {\mathbb {R}}^{n_{i}}\;| \; \lceil x\rfloor _{i}=0 \}\), \(i=1, \ldots , v\).

Proof

The proof is given in Appendix. \(\square \)

This general result has two familiar special cases. For \(v=n\), the above VIB success rate reduces to that of (scalar) integer bootstrapping given in (11). For \(v=1\), it depends on the chosen integer mapping \(\lceil \cdot \rfloor : {\mathbb {R}}^{n} \mapsto {\mathbb {Z}}^{n}\), for which IR, IB and ILS are the popular contenders.

4.2 Bounds and approximation of VIB success rate

We now discuss how existing bounds and approximations to the success rates of IR, IB, and ILS can be used in order to obtain overall bounds and approximations for the VIB success rate as well.

Lemma 4

(VIB success rate bounds) Let \({\mathcal {P}}_{i}= \{ x \in {\mathbb {R}}^{n_{i}}\;| \; \lceil x\rfloor _{i}=0 \}\) be the origin-centered pull-in region of the integer map \(\lceil . \rfloor _{i}: {\mathbb {R}}^{n_{i}} \mapsto {\mathbb {Z}}^{n_{i}}\) and let the bounds \(\mathrm{LB}_{i} \le {\mathsf {P}}({\hat{a}}_{i|I} \in a + {\mathcal {P}}_{i}) \le \mathrm{UB}_{i}\) be given. Then

$$\begin{aligned} \prod _{i=1}^{v} \mathrm{LB}_{i} \le {\mathsf {P}}(\check{a}_{\mathrm{VIB}}=a) \le \prod _{i=1}^{v} \mathrm{UB}_{i} \end{aligned}$$(32)

Proof

This follows from using the individual bounds \(\mathrm{LB}_{i} \) and \(\mathrm{UB}_{i}\) in (30). \(\square \)

By making use of known bounds for IR and ILS, respectively, (32) can be easily applied to obtain the following two success rate bounds.

Lemma 5

(Success rate bounds for \(\mathrm{VIB}_{\mathrm{IR}}\) and \(\mathrm{VIB}_{\mathrm{ILS}}\)) Let \(\sigma _{j|I}^{2}\), for \(j=q_{i-1}+1,\ldots ,q_{i}\) (\(q_{0}=0, q_{i}=\sum _{j=1}^{i} n_{j}\)), and \(i=1,\ldots ,v\), be the variance of the jth ambiguity conditioned on \(a_{I}\), with \(I=\{1,\ldots ,{i-1}\}\). Then with the Ambiguity Dilution of Precision (ADOP) of the ith subset \({\hat{a}}_{i|I}\in {\mathbb {R}}^{n_i}\),

$$\begin{aligned} \mathrm{ADOP}_{i}=|Q_{ii \mid I}|^{\frac{1}{2n_{i}}} \end{aligned}$$(33)

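we have

$$\begin{aligned} {\mathsf {P}}(\check{a}_{\mathrm{VIB_{IR}}}=a)&amp;\ge \prod _{i=1}^{v} \prod _{j=q_{i-1}+1}^{q_{i}} \left[ 2 \varPhi \left( \frac{1}{2 \sigma _{j|I}} \right) -1 \right] \\ {\mathsf {P}}(\check{a}_{\mathrm{VIB_{ILS}}}=a)&amp;\le \prod _{i=1}^{v} {\mathsf {P}}\left( \chi ^{2}(n_{i},0) \le \frac{c_{n_{i}}}{\mathrm{ADOP}_{i}^{2}} \right) \end{aligned}$$(34)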

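where \(\chi ^{2}(n_{i},0)\) denotes a central Chi-squared distributed random variable with \(n_{i}\) degrees of freedom, while

$$\begin{aligned} c_{n_{i}}=\frac{\left( \frac{n_{i}}{2} \varGamma \left( \frac{n_{i}}{2} \right) \right) ^{2/n_{i}}}{\pi } \end{aligned}$$(35)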

with \(\varGamma \) being the gamma-function.

Proof

For the proof see Appendix. \(\square \)

These bounds can be used in the following sense:

- \(\mathrm{VIB}_{\mathrm{IR}}\) can be considered good enough for ambiguity resolution if its lower bound is large enough.
- \(\mathrm{VIB}_{\mathrm{ILS}}\) can be considered too poor if its upper bound is too small.

4.3 Performance ordering of VIB-estimators

We now determine a performance ordering for the different integer estimators discussed. Such ordering can also be adopted to determine additional bounds on the success rates of well-known integer estimators.

We start with a generalization of (1) for a fixed partitioning \(a=[a_{1}^{T}, \ldots , a_{v}^{T}]^{T} \in {\mathbb {Z}}^{n}\). In this case, the success rate in (30) is the smallest when all \(\lceil . \rfloor _{i}\) correspond to IR, and the largest when they all correspond to ILS, i.e., when \(\lceil x_{i} \rfloor _{i}=\arg \min \limits _{z_{i} \in {\mathbb {Z}}^{n_{i}}}||x_{i}-z_{i}||_{Q_{ii|I}}^{2}\). It follows that the bounds of (34) are a lower and an upper bound for all VIB-estimators given that same partitioning. Hence, for a fixed v, we have \(\mathrm{VIB}_{\mathrm{IR}} \le \mathrm{VIB} \le \mathrm{VIB}_{\mathrm{ILS}}\), whereas for \(v=1\) the relation simply reduces to (1).

Next, we allow v to vary, provided that it satisfies \(n= \sum _{i=1}^{v} n_{i}\), so that all ambiguity components are processed. It follows that for \(v=1\), the entire n-dimensional set is considered and the relation in (1) holds, while for \(v=n\), each block is a scalar, so both \(\mathrm{VIB}_{\mathrm{IR}}\) and \(\mathrm{VIB}_{\mathrm{ILS}}\) are ultimately equivalent to IB. For the case \(v\in (1,n)\), the I-estimators adopted in each block determine the overall VIB performance. Starting with \(\mathrm{IR}\), which is poorer than \(\mathrm{IB}\), we thus have \(\mathrm{VIB_{IR}} \le \mathrm{IB}\), but also \(\mathrm{VIB_{IR}} \ge \mathrm{IR}\), since conditioning improves precision and an improved precision also improves the success rate of rounding (Teunissen 2007). Similarly, since ILS is better than or equal to IB, we have \(\mathrm{VIB_{ILS}} \ge \mathrm{IB}\), but always \(\mathrm{VIB_{ILS}} \le \mathrm{ILS}\) since ILS has the largest possible success rate in the class of I-estimators.

We summarize the above performance ordering results in the following Lemma.

Lemma 6

(VIB-performance ordering)

1. For fixed v, i.e., a fixed partitioning \(a=[a_{1}^{T}, \ldots , a_{v}^{T}]^{T}\), with \(a_{i} \in {\mathbb {Z}}^{n_{i}}\), we have

$$\begin{aligned} \mathrm{VIB}_{\mathrm{IR}} \le \mathrm{VIB} \le \mathrm{VIB}_{\mathrm{ILS}} \end{aligned}$$(36)

2. For any \(v>0\), satisfying \(n= \sum _{i=1}^{v} n_{i}\), we have

$$\begin{aligned} \mathrm{IR} \le \mathrm{VIB_{IR}} \le \mathrm{IB} \le \mathrm{VIB_{ILS}} \le \mathrm{ILS} \end{aligned}$$(37)

\(\square \)

This result shows that an easy way to improve on IR is to define blocks of ambiguities and include some conditioning between them. Similarly, the performance of IB can still be improved while avoiding the computational effort required for a full ILS solution. The previous lemma is summarized graphically in Fig. 4, where the different estimators are ordered in terms of their success rate.

Fig. 4 Graphical illustration of the success rate ordering for well-known integer estimators, i.e., IR, IB and ILS. In addition, the \(\mathrm{VIB}_{\mathrm{IR}}\) and \(\mathrm{VIB}_{\mathrm{ILS}}\) are shown, when adopting only IR or ILS in each block, whose size is arbitrary. Lastly, with the cyan square a generic VIB is illustrated for a fixed block-size v, using arbitrarily selected I-estimators

In (34), we have already made use of the \(\mathrm{ADOP}\) quantity in order to formulate an upper bound. Given that the ADOP is a geometric average of the sequential conditional standard deviations of the ambiguities (Teunissen 1997a), it gives an approximation to the average precision of the ambiguities, and therefore it can be used for obtaining an approximation to the ILS success rate as

$$\begin{aligned} {\mathsf {P}}(\check{a}_{\mathrm{ILS}}=a) \approx \left[ 2 \varPhi \left( \frac{1}{2\, \mathrm{ADOP}} \right) -1 \right] ^{n} \end{aligned}$$(38)

with \(\mathrm{ADOP}= | Q_{{\hat{a}}{\hat{a}}}|^{\frac{1}{2n}}\), similar to (33), but here referring to the full set of ambiguities. In a similar way, it can be used to provide an approximation to the success rate of the \(\mathrm{VIB}_{\mathrm{ILS}}\) estimator.

Lemma 7

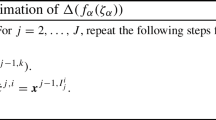

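(\(\mathrm{VIB}_{\mathrm{ILS}}\) success rate approximation) Let \(\check{a}_{i}\in {\mathbb {Z}}^{n_i}\) be the ith integer vector of the VIB-estimator defined as

$$\begin{aligned} \check{a}_{i}=\arg \min \limits _{z_{i} \in {\mathbb {Z}}^{n_{i}}}||{\hat{a}}_{i \mid I}-z_{i}||_{Q_{ii|I}}^{2}, \quad i=1, \ldots , v \end{aligned}$$(39)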

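Then

$$\begin{aligned} {\mathsf {P}}(\check{a}_{\mathrm{VIB_{ILS}}}=a) \approx \prod _{i=1}^{v} \left[ 2 \varPhi \left( \frac{1}{2\, \mathrm{ADOP}_{i}} \right) -1 \right] ^{n_{i}} \end{aligned}$$(40)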

with \(\mathrm{ADOP}_{i}\) given by (33). \(\square \)

This approximation becomes better the more decorrelated the ambiguities are. The error of approximation vanishes in case of a full decorrelation. Note that the approximation in (40) becomes equal to the success rate of IB when \(n_{i}=1\),\(\forall i\), and thus when \(v=n\).
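The ADOP-based approximation can be sketched numerically as follows. The snippet is a minimal illustration under stated assumptions: the function names `adop` and `adop_success_approx` and the conditional variances in `d_demo` are hypothetical. It uses the fact that, for \(Q_{{\hat{a}}{\hat{a}}}=LDL^{T}\) with L unit triangular, the determinant of each conditional block \(Q_{ii|I}\) equals the product of the corresponding diagonal entries of D, and it takes the per-block form of the approximation described in the text (each block contributing \([2\varPhi (1/(2\,\mathrm{ADOP}_{i}))-1]^{n_{i}}\)).

```python
import math

def phi(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def adop(d_block):
    """ADOP of a block: geometric mean of its conditional standard deviations."""
    n = len(d_block)
    log_det = sum(math.log(v) for v in d_block)  # log-determinant of the block
    return math.exp(log_det / (2.0 * n))

def adop_success_approx(d, sizes):
    """ADOP-based success rate approximation for block sizes `sizes`."""
    p = 1.0
    start = 0
    for size in sizes:
        a = adop(d[start:start + size])
        p *= (2.0 * phi(0.5 / a) - 1.0) ** size
        start += size
    return p

# Hypothetical conditional variances (diagonal of D, cycles^2), for illustration.
d_demo = [0.09, 0.04, 0.01]

approx_full = adop_success_approx(d_demo, [3])         # v = 1: full-set approximation
approx_blocks = adop_success_approx(d_demo, [2, 1])    # v = 2 partitioning
approx_scalar = adop_success_approx(d_demo, [1, 1, 1]) # v = n: equals IB success rate
```

For scalar blocks the ADOP of each block is simply its conditional standard deviation, so `approx_scalar` coincides with the exact IB success rate, as noted above.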

Finally, we mention that if the bounds and approximations are not considered sharp enough, one can still resort to Monte Carlo simulation of the required VIB-probabilities using the approaches described in (Verhagen et al. 2013).
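A Monte Carlo estimate of a success rate can be sketched along the following lines. This is only an illustrative sketch and not the procedure of the cited reference: the names `simulate_success_rate`, `round_all` and `bootstrap`, the sample size, the seed and the 2x2 factors `L_demo` and `d_demo` are assumptions. Float samples are drawn with zero mean (so the correct integer vector is the zero vector) and vc-matrix \(LDL^{T}\), and the success rate is the fraction of samples that the estimator maps to zero.

```python
import math
import random

def round_all(a_hat):
    """Integer rounding (IR) of every component."""
    return [round(v) for v in a_hat]

def bootstrap(a_hat, L):
    """Scalar integer bootstrapping (IB) with unit lower-triangular L."""
    n = len(a_hat)
    a_fix = [0] * n
    resid = [0.0] * n
    for i in range(n):
        cond = a_hat[i] - sum(L[i][j] * resid[j] for j in range(i))
        a_fix[i] = round(cond)
        resid[i] = cond - a_fix[i]
    return a_fix

def simulate_success_rate(L, d, estimator, n_samples=20000, seed=1):
    """Fraction of zero-mean float samples with vc-matrix L D L^T fixed to zero."""
    rng = random.Random(seed)
    n = len(d)
    hits = 0
    for _ in range(n_samples):
        # e has independent components with variances d; a_hat = L e
        e = [math.sqrt(d[k]) * rng.gauss(0.0, 1.0) for k in range(n)]
        a_hat = [sum(L[i][k] * e[k] for k in range(i + 1)) for i in range(n)]
        if all(z == 0 for z in estimator(a_hat)):
            hits += 1
    return hits / n_samples

# Hypothetical factors of a 2x2 float vc-matrix, for illustration only.
L_demo = [[1.0, 0.0], [1.0 / 3.0, 1.0]]
d_demo = [0.09, 0.04]

rate_ir = simulate_success_rate(L_demo, d_demo, round_all)
rate_ib = simulate_success_rate(L_demo, d_demo, lambda x: bootstrap(x, L_demo))
```

Because both runs use the same seed, the two estimators are evaluated on the same samples, which makes the comparison between them less noisy than two independent runs would be. Any other estimator that maps a float vector to an integer vector can be passed in the same way.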

4.4 Numerical illustration

We now present two numerical examples, one low- and one high-dimensional, to illustrate and exemplify the performance orderings of Lemma 6.

Example 1

(Three-dimensional ambiguity space) We consider the performance of different I-estimators when the triangular decomposition of the float ambiguity vc-matrix is given in (27) and (28). The success rate is numerically computed using \(10^8\) samples, where the float ambiguity vectors are synthetically generated from a normal distribution with zero mean and vc-matrix given in (26). Table 1 shows results for \(\mathrm{VIB_{IR}}\) and \(\mathrm{VIB_{ILS}}\), along with results for the well-known IR, IB and ILS estimators. Here the VIB-estimators have their third ambiguity conditioned on the other two, which are fixed by IR or ILS. The exact result for IB, together with lower bounds for IR and \(\mathrm{VIB_{IR}}\), and an ADOP approximation for ILS and \(\mathrm{VIB_{ILS}}\), are also given, together with a reference to their defining equation.

The success rate values are in agreement with the VIB performance ordering of Lemma 6 (cf. 37). The \(\mathrm{VIB_{ILS}}\) indeed shows a quasi-optimal performance, i.e., close to that of ILS, without the need of an integer search over the entire domain. Moreover, the \(\mathrm{VIB_{IR}}\) estimator is better than IR, while being suboptimal to the IB method, whose success rate is available analytically. \(\diamond \)

Example 2

(VIB in regional network) A 9-station, dual-frequency GPS network for PPP-RTK processing with known station coordinates is considered (see Fig. 5). The data are processed with a Kalman filter every 30 s using precise orbits for DOY 293 in 2020, along with the mathematical models and the software platform described in (Odijk et al. 2017).

For the purpose of illustrating the performance orderings, we focus attention on full-ambiguity resolution (FAR) and on a moment in time when the best FAR success rate is extremely low, which in the present example is the case at 7:15 (UTC), marked by epoch number 870, when a new satellite, PRN03, rises and is being tracked by all stations of the network. The dimension of the ambiguity space is \(n=146\). Figure 6 shows the success rates of the different integer estimators employed, with all ambiguities decorrelated using the LAMBDA transformation (Teunissen 1995). For both \(\mathrm{VIB_{ILS}}\) (top plot) and \(\mathrm{VIB_{IR}}\) (bottom plot), we consider two different partitionings: \(v=2\) and \(v=20\). The \(\mathrm{VIB_{ILS}}\) behaves optimally for \(v=2\), while smaller improvements are found for \(v=20\), i.e., when small subsets of 7–8 components are sequentially processed. Note that by decorrelating the ambiguities, the IB solution becomes quasi-optimal and thus provides a good lower bound to the ILS success rate. The same holds for the \(\mathrm{VIB_{ILS}}\).

In the bottom plot, the IR solution has a smaller success rate than IB, but is extremely efficient. The \(\mathrm{VIB_{IR}}\) solution for \(v=20\) approaches the IB success rate, with a large improvement over the IR solution. This second result is relevant since it emphasizes how some conditioning operations can substantially improve the robustness of straightforward integer rounding. Its success rate will never exceed that of IB, but once the precision increases, this difference becomes negligible and a quasi-optimal integer solution can be obtained almost instantaneously.\(\diamond \)

5 Further VIB considerations

5.1 Ambiguity parametrization

In order to enhance the success rate performance of VIB-estimation, the chosen ambiguity parametrization and the ordering of the ambiguities are important. The general guideline is to first form blocks from the most precise ambiguities, followed by blocks that gain the most precision from the conditioning, and to continue in this way until the success rate drops below the required threshold. Although this can be achieved through the construction of the full-dimensional decorrelating Z-transformation (de Jonge and Tiberius 1996), the process can in many GNSS applications be significantly aided by the a priori construction of proper ambiguity re-parametrizations. Here we show three such examples, with the first working on frequencies, the second on satellites and the third on antennas.

Example 3

(Widelane-Narrowlane) Widelaning is a popular multi-frequency technique of taking differences between ambiguities of different frequencies so as to obtain transformed ambiguities with a better precision (Hatch 1989; Forsell et al. 1997; Teunissen 1997c). When placed in the framework of VIB-estimation, the following steps and flexibility in the widelaning procedure can be recognized (here given for the dual-frequency case, but easily generalized to the multi-frequency case):

1. Apply the widelane transformation to get the float widelane and narrowlane ambiguity vectors, \({\hat{a}}_{w}\) and \({\hat{a}}_{n}\), respectively;

2. Integer estimate the widelane ambiguities as \(\check{a}_{w}=\lceil {\hat{a}}_{w}\rfloor _{1}\), with \(\lceil \cdot \rfloor _{1}\) being the integer map of IR or IB;

3. Float estimate the narrowlane ambiguities conditioned on the fixed widelane ambiguities as \({\hat{a}}_{n|w} = {\hat{a}}_{n} - Q_{{\hat{a}}_n{\hat{a}}_w}Q_{{\hat{a}}_w{\hat{a}}_w}^{-1} ({\hat{a}}_{w}-\check{a}_{w})\);

4. Z-transform the narrowlane ambiguities to decorrelate, giving \({\hat{z}}_{n|w}=Z^{T}{\hat{a}}_{n|w}\) and its vc-matrix \(Q_{{\hat{z}}_{n|w}{\hat{z}}_{n|w}}\);

5. Integer estimate the transformed narrowlane ambiguities as \(\check{z}_{n}=\lceil {\hat{z}}_{n|w}\rfloor _{2}\), with \(\lceil \cdot \rfloor _{2}\) being the integer map of IB or ILS.

The goal of the first step is to obtain ambiguities that are sufficiently precise so that simple estimators, like IR or IB, can achieve high-enough success rates in the second step. The goal of the third and fourth step is to benefit from the conditioning and decorrelation, before IB or ILS are applied. In case of ILS, the fourth step is aimed at improving the numerical efficiency of the ILS-computations, whereas for IB, it is aimed at improving the success rate. \(\diamond \)
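The five steps of Example 3 can be sketched in a few lines of NumPy. This is only a minimal illustration under stated assumptions, not the implementation used for the results in this contribution: the function names `integer_bootstrap` and `widelane_vib` are hypothetical, the second block simply keeps the second-frequency ambiguities as a stand-in for the narrowlane block (so that the transformation stays integer-invertible), IR is used in step 2, scalar IB in step 5, and the decorrelating Z-transformation of step 4 defaults to the identity.

```python
import numpy as np

def integer_bootstrap(x_hat, Q):
    """Scalar IB: round one component, condition the remaining ones, repeat."""
    x = np.array(x_hat, dtype=float)
    Q = np.array(Q, dtype=float)
    n = x.size
    fixed = np.zeros(n)
    for i in range(n):
        fixed[i] = np.round(x[i])
        if i + 1 < n:
            gain = Q[i + 1:, i] / Q[i, i]
            x[i + 1:] -= gain * (x[i] - fixed[i])               # conditional float values
            Q[i + 1:, i + 1:] -= np.outer(gain, Q[i, i + 1:])   # conditional vc-matrix
    return fixed

def widelane_vib(a_hat, Q, m, Z=None):
    """a_hat = (a_1, a_2): float ambiguities on two frequencies, m per frequency."""
    I, O = np.eye(m), np.zeros((m, m))
    # Step 1 (assumed transformation): a_w = a_1 - a_2, second block keeps a_2.
    T = np.block([[I, -I], [O, I]])
    t_hat, Qt = T @ a_hat, T @ Q @ T.T
    w_hat, n_hat = t_hat[:m], t_hat[m:]
    Qww, Qnw, Qnn = Qt[:m, :m], Qt[m:, :m], Qt[m:, m:]
    # Step 2: integer rounding (IR) of the widelane block.
    w_fix = np.round(w_hat)
    # Step 3: condition the second block on the fixed widelanes.
    K = Qnw @ np.linalg.inv(Qww)
    n_cond = n_hat - K @ (w_hat - w_fix)
    Qn_cond = Qnn - K @ Qnw.T
    # Step 4: decorrelating Z-transformation (identity placeholder by default).
    Z = np.eye(m) if Z is None else Z
    # Step 5: scalar IB on the transformed, conditioned block.
    z_fix = integer_bootstrap(Z.T @ n_cond, Z.T @ Qn_cond @ Z)
    n_fix = np.linalg.solve(Z.T, z_fix)
    # Back-transform to the original dual-frequency ambiguities.
    return np.linalg.solve(T, np.concatenate([w_fix, n_fix]))
```

Replacing `np.round` in step 2 by `integer_bootstrap`, or the IB call in step 5 by an ILS search, gives the other combinations of integer maps mentioned in the example.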

Example 4

(Multivariate geometry-free model) Consider the dual-frequency, geometry-free model when tracking \(v+1\) satellites (Teunissen 1997b). Due to its special structure, the \(2v \times 2v\) vc-matrix of its DD float ambiguities is given as

\[ Q_{{\hat{a}}{\hat{a}}} = D_{v}^{T}D_{v} \otimes Q \qquad (41) \]

in which \(D_{v} \in {\mathbb {R}}^{(v+1) \times v}\) represents the between-satellite differencing operator, \(\otimes \) the Kronecker product and Q the vc-matrix of the single-differenced, dual-frequency ambiguities. It was shown in (Teunissen 1997b) that, by using the analytical \(LDL^{T}\)-decomposition of (41), the multivariate quadratic form of the ambiguities can be written as a weighted sum of 2-dimensional quadratic forms,

\[ ||{\hat{a}}-a||_{Q_{{\hat{a}}{\hat{a}}}}^{2} = \sum _{i=1}^{v} \frac{i}{i+1}\, ||{\hat{a}}_{i}(a_{I})-a_{i}||_{Q}^{2} \qquad (42) \]

in which the \(a_{i} \in {\mathbb {Z}}^{2}\), \(i= 1, \ldots , v\), are, with respect to the reference satellite, the dual-frequency DD ambiguities of satellite i, \(a_{I}=(a_{1}^{T}, \ldots , a_{i-1}^{T})^{T}\), and \({\hat{a}}_{i}(a_{I})\) is the conditional estimate

\[ {\hat{a}}_{i}(a_{I}) = {\hat{a}}_{i} - \frac{1}{i}\sum _{j=1}^{i-1}({\hat{a}}_{j}-a_{j}) \qquad (43) \]

with \({\hat{a}}_{i}(a_{I})={\hat{a}}_{1}\) when \(i=1\). Instead of solving a high-dimensional ILS-problem by minimizing (42) over the 2v-dimensional space of integers, the v two-dimensional quadratic forms of (42) are minimized in a sequential fashion. Hence, in this case the main VIB-estimation steps, for \(i=1, \ldots , v\), are:

1. Compute the conditional estimate \({\hat{a}}_{i|I} = {\hat{a}}_{i}(\check{a}_{I})\);

2. ILS-estimate \(\check{a}_{i} = \arg \min \limits _{z \in {\mathbb {Z}}^{2}}||{\hat{a}}_{i|I}-z||_{Q}^2\);

3. Update integer ambiguity vector: \(\check{a}_{I+1} =(\check{a}_{I}^{T}, \check{a}_{i}^{T})^{T}\).

Note that each 2-dimensional ILS problem may still involve highly correlated ambiguities. A suitable \(2 \times 2\) decorrelating Z-transform can therefore be constructed, which only has to be applied once, namely to Q, since all v problems share this same matrix. \(\diamond \)
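The sequential procedure of Example 4 can be illustrated with the following sketch. It rests on several assumptions that are not taken from the text: the differencing operator is taken as \(D_{v}=(e_{v}, -I_{v})^{T}\), so that \(D_{v}^{T}D_{v}=I_{v}+e_{v}e_{v}^{T}\), which gives the conditional estimate \({\hat{a}}_{i}(a_{I})={\hat{a}}_{i}-\frac{1}{i}\sum _{j<i}({\hat{a}}_{j}-a_{j})\) and the weights \(i/(i+1)\); the 2-dimensional ILS problem is solved by a brute-force search over a small integer box, which is adequate only for illustration; and all function names are hypothetical.

```python
import itertools
import numpy as np

def ils_2d(x, Q_inv, radius=2):
    """Brute-force 2-D ILS over a small integer box around round(x) (illustration only)."""
    base = np.round(x)
    offsets = itertools.product(range(-radius, radius + 1), repeat=2)
    candidates = (base + np.array(d) for d in offsets)
    return min(candidates, key=lambda z: (x - z) @ Q_inv @ (x - z))

def conditional_estimate(a_hat, a_prev, i):
    """a_hat_i(a_I) for satellite i (1-based), given the integers of satellites 1..i-1."""
    if i == 1:
        return a_hat[0]
    return a_hat[i - 1] - (a_hat[: i - 1] - a_prev).sum(axis=0) / i

def vib_geometry_free(a_hat, Q):
    """a_hat: (v, 2) array, row i-1 holds the dual-frequency DD float ambiguities of satellite i."""
    v = a_hat.shape[0]
    Q_inv = np.linalg.inv(Q)
    fixed = np.zeros_like(a_hat, dtype=float)
    for i in range(1, v + 1):
        cond = conditional_estimate(a_hat, fixed[: i - 1], i)   # step 1
        fixed[i - 1] = ils_2d(cond, Q_inv)                      # steps 2 and 3
    return fixed

def weighted_sum(a_hat, a, Q):
    """Weighted sum of 2-D quadratic forms, with weights i/(i+1)."""
    Q_inv = np.linalg.inv(Q)
    total = 0.0
    for i in range(1, a.shape[0] + 1):
        d = conditional_estimate(a_hat, a[: i - 1], i) - a[i - 1]
        total += (i / (i + 1)) * (d @ Q_inv @ d)
    return total
```

For any integer candidate, `weighted_sum` can be compared numerically with the full quadratic form built from `np.kron(D.T @ D, Q)`, which is a convenient check on the assumed weights and conditional estimate.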

Example 5

(Network array) This example is taken from the concept of array-aided PPP introduced in (Teunissen 2012). We assume to have an array with \(r+1\) antennas, tracking \(s+1\) GNSS satellites, on f frequencies. With \(z_{i} \in {\mathbb {Z}}^{fs \times 1}\) being the integer vector of DD ambiguities of the ith baseline, the integer network ambiguity matrix \({\mathcal {Z}}=(z_{1}, \ldots , z_{r}) \in {\mathbb {Z}}^{fs \times r}\) can be formed, of which the \(fsr \times fsr\) vc-matrix of the float solution \({\hat{\zeta }}=\mathrm{vec}(\hat{{\mathcal {Z}}})\) can be shown to read

\[ Q_{{\hat{\zeta }}{\hat{\zeta }}} = D_{r}^{T}Q_{r}D_{r} \otimes N^{-1} \qquad (44) \]

in which \(D_{r} \in {\mathbb {R}}^{(r+1) \times r}\) represents the between-antenna differencing matrix, \(Q_{r}\) is a cofactor matrix by which the relative quality of the array-antennas can be modelled (i.e. \(Q_{r}=I_{r+1}\) when all antennas have the same quality), and N is the \(fs \times fs\) reduced normal matrix of the single-baseline ambiguities. Note that, although (44) resembles the structure of (41), it is a Kronecker product of two different types of matrices. As the receiver-antenna dependency is made explicit in matrix \(D_{r}^{T}Q_{r}D_{r}\), differences in antenna-quality can be exploited in the VIB-conditioning. If we consider the case of 3 antennas, with \(D_{r}=(e_{2}, -I_{2})^{T}\), \(e_{2}=(1,1)^{T}\), and \(Q_{r}=\mathrm{diag}(\sigma _{1}^{2}, \sigma _{2}^{2}, \sigma _{3}^{2})\), then \(\zeta =(z_{1}^{T}, z_{2}^{T})^{T} \in {\mathbb {Z}}^{2fs}\) and (44) can be written as

\[ Q_{{\hat{\zeta }}{\hat{\zeta }}} = \begin{bmatrix} \sigma _{1}^{2}+\sigma _{2}^{2} & \sigma _{1}^{2} \\ \sigma _{1}^{2} & \sigma _{1}^{2}+\sigma _{3}^{2} \end{bmatrix} \otimes N^{-1} \qquad (45) \]

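If we now condition the ambiguities of the second baseline, \({\hat{z}}_{2}\), on those of the first baseline, \({\hat{z}}_{1}\), the resulting vc-matrix is obtained with the help of (45) as

\[ Q_{{\hat{z}}_{2|1}{\hat{z}}_{2|1}} = \sigma _{1}^{2}\left( 1+\gamma _{3}-\frac{1}{1+\gamma _{2}}\right) N^{-1} \qquad (46) \]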

where \(\gamma _i=\sigma _i^2/\sigma _1^2\) is the variance-ratio between one of the two auxiliary antennas and the master. For antennas with the same precision (\(\gamma _2=\gamma _3=1\)), the vectorial conditioning reduces the variances of the second-baseline ambiguities to 3/4 of their unconditional values. At the same time, the VIB improvement in the precision of the conditioned ambiguities \({\hat{z}}_{2|1}\) will be negligible if the second antenna (involved in the first baseline) has a very poor precision, a situation that should thus be avoided. \(\diamond \)
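The effect of the antenna quality on the conditioning in Example 5 can be checked numerically. The sketch below assumes that the vc-matrix has the Kronecker structure \(D_{r}^{T}Q_{r}D_{r} \otimes N^{-1}\) with \(D_{r}=(e_{2}, -I_{2})^{T}\), and it uses an arbitrary \(2 \times 2\) matrix in place of \(N^{-1}\). The function names are hypothetical.

```python
import numpy as np

def array_cofactor(sigma2):
    """D_r^T Q_r D_r for three antennas; sigma2 = (s1, s2, s3), antenna 1 is the master."""
    D = np.vstack([np.ones((1, 2)), -np.eye(2)])   # D_r = (e_2, -I_2)^T, size 3 x 2
    return D.T @ np.diag(sigma2) @ D

def conditional_vc(sigma2, N_inv):
    """vc-matrix of the second-baseline ambiguities conditioned on the first baseline."""
    k = N_inv.shape[0]
    Q = np.kron(array_cofactor(sigma2), N_inv)
    Q11, Q12, Q22 = Q[:k, :k], Q[:k, k:], Q[k:, k:]
    return Q22 - Q12.T @ np.linalg.solve(Q11, Q12)  # Schur complement

# Arbitrary stand-in for N^{-1} (assumption, for illustration only).
N_inv = np.array([[2.0, 0.5], [0.5, 1.0]])
equal = conditional_vc((1.0, 1.0, 1.0), N_inv)      # same precision for all antennas
poor = conditional_vc((1.0, 1.0e6, 1.0), N_inv)     # very poor second antenna
```

With equal antenna precision the unconditional vc-matrix of the second baseline is `2 * N_inv` and `equal` evaluates to `1.5 * N_inv`, i.e. the factor 3/4. With a very poor second antenna, `poor` stays essentially at `2 * N_inv`, so that the conditioning brings hardly any gain.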

Next to frequencies, satellites and receivers, also other elements of the GNSS functional and stochastic model can in particular applications be exploited for the construction of a VIB-suitable ambiguity parametrization. Such can e.g. be driven by constellation, by satellite-elevation or by atmospheric impact. In a network, for instance, with very different baseline lengths, the ambiguities of the shorter baselines will generally be more precise and therefore candidates to be treated first (Blewitt 1989). A similar consideration holds for ambiguities of high-elevation satellites, which are usually more precise than those of lower-elevation satellites.

5.2 Practical considerations

As VIB-estimation is also aimed at reducing the computational complexities of integer estimation, it is important to recognize that in several of its computational steps a good use can be made of the, often readily available, Cholesky-decomposition of the system of normal equations. For instance, although in many of the expressions for ambiguity resolution the vc-matrix \(Q_{{\hat{a}}{\hat{a}}}\) and/or its inverse \(Q_{{\hat{a}}{\hat{a}}}^{-1}\) are needed, their explicit computation can often be avoided. Similarly, although the expressions of estimation often show the float ambiguity vector \({\hat{a}}\) explicitly, the computation of this full vector is not always needed, and this is particularly so in case of VIB-estimation.

To demonstrate this, let the partitioned system of normal equations, with the Cholesky decomposition for the normal matrix, be given as

Then the reduced system of normal equations for the ambiguities is given as \(C_{aa}C_{aa}^{T}{\hat{a}}={\bar{r}}_{a}\), from which it follows that the lower-triangular sub-matrix \(C_{aa}\in {\mathbb {R}}^{n\times n}\) is directly related to the vc-matrix of the float ambiguity vector: \(Q_{{\hat{a}}{\hat{a}}}^{-1}=C_{aa}C_{aa}^T\), and so \(Q_{{\hat{a}}{\hat{a}}}=C_{aa}^{-T} C_{aa}^{-1}\). The matrix \(C_{aa}^{-1}\) (also lower triangular) can then be directly used in the decorrelation process prior to the actual integer estimation, thereby saving several matrix operations. We refer to de Jonge et al. (1996) for a more comprehensive description of these computational aspects, in particular for further advantages relative to the computation of the decorrelating Z-transformation. Here we also point to the VIB-flexibility as far as the Z-decorrelation is concerned. One can apply such decorrelation to all ambiguities, or one can restrict the Z-decorrelation to those blocks for which the mappings \(\lceil \cdot \rfloor _{i}: {\mathbb {R}}^{n_{i}}\mapsto {\mathbb {Z}}^{n_{i}}\) need to be computed.
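The relation between the Cholesky factor and the ambiguity vc-matrix can be verified with a small numerical sketch. It assumes that the parameters are ordered with the non-ambiguity parameters first (here called `b`, with `p` entries, a name not used in the text) and the ambiguities last, that the vc-matrix of the observations is the identity, and that `np.linalg.solve` stands in for dedicated triangular solvers. The function names are hypothetical.

```python
import numpy as np

def reduce_to_ambiguities(N, r, p):
    """N, r: normal matrix and right-hand side, parameters ordered as (b, a), p entries in b."""
    C = np.linalg.cholesky(N)        # N = C C^T, with C lower triangular
    C_aa = C[p:, p:]                 # ambiguity block of the Cholesky factor
    w = np.linalg.solve(C, r)        # forward substitution C w = r
    r_bar_a = C_aa @ w[p:]           # reduced right-hand side of the ambiguities
    return C_aa, r_bar_a

def float_ambiguities(C_aa, r_bar_a):
    """Solve C_aa C_aa^T a_hat = r_bar_a by two triangular systems."""
    u = np.linalg.solve(C_aa, r_bar_a)
    return np.linalg.solve(C_aa.T, u)

# Small synthetic example (random design matrix, assumption for illustration).
rng = np.random.default_rng(1)
A = rng.standard_normal((12, 7))
y = rng.standard_normal(12)
N, r, p = A.T @ A, A.T @ y, 3
C_aa, r_bar_a = reduce_to_ambiguities(N, r, p)
Q_aa = np.linalg.inv(N)[p:, p:]      # reference: ambiguity block of the full inverse
```

In this example `C_aa @ C_aa.T` reproduces the inverse of `Q_aa`, and `float_ambiguities` returns the same values as the ambiguity part of the full least-squares solution, without the full normal matrix ever being inverted.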

In case of VIB, one can take another advantage of the triangular structure of \(C_{aa}\) by avoiding, through a proper ordering of the ambiguities, the explicit computation of their float values. With the triangular matrix \(C_{aa}\) partitioned as

it directly follows from the reduced normal equations \(C_{aa}C_{aa}^{T}{\hat{a}}={\bar{r}}_{a}\) that the conditional least-squares float solution of \(a_{1}\), when conditioned on \(a_{2}\), is given as \({\hat{a}}_{1|2} = C_{11}^{-T}[C_{11}^{-1}{\bar{r}}_{1}-C_{12}^{T}a_{2}]\). This shows that the computation of the conditional float solution \({\hat{a}}_{1|2}\) does not require the explicit computation of the float solution \({\hat{a}}_{1}\) and that it can be done efficiently by solving triangular systems of equations. Hence, when in case of VIB, the v-block partitioning is known, the ambiguities can be ordered accordingly to take advantage of this numerical gain.
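A minimal sketch of this computation is given below. It assumes that the block written as \(C_{12}\) in the expression for \({\hat{a}}_{1|2}\) is the lower-left, \(n_{2} \times n_{1}\), block of the lower-triangular \(C_{aa}\), which is the only reading under which the dimensions match. The function name `conditional_float` is hypothetical, and `np.linalg.solve` is used where a dedicated triangular solver would be preferred in practice.

```python
import numpy as np

def conditional_float(C_aa, r_bar, a2, n1):
    """Conditional float solution of a_1 given a_2, using only blocks of C_aa."""
    C11 = C_aa[:n1, :n1]             # lower-triangular block of the first n1 ambiguities
    C_low = C_aa[n1:, :n1]           # lower-left block (assumed to be C_12 of the text)
    u = np.linalg.solve(C11, r_bar[:n1])             # forward substitution
    return np.linalg.solve(C11.T, u - C_low.T @ a2)  # backward substitution

def conditional_float_reference(N, r_bar, a2, n1):
    """Reference: explicit float solution and vc-matrix, then standard conditioning."""
    a_hat = np.linalg.solve(N, r_bar)
    Q = np.linalg.inv(N)
    gain = Q[:n1, n1:] @ np.linalg.inv(Q[n1:, n1:])
    return a_hat[:n1] - gain @ (a_hat[n1:] - a2)
```

Both functions return the same vector. The first one, however, needs neither the float solution \({\hat{a}}_{1}\) nor the vc-matrix, which is the numerical gain referred to above.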

As discussed in previous sections, a vectorial formulation also enables ad hoc parametrizations of the ambiguity components, through which the structure of a certain problem can be fully exploited. Given that the mathematical relations presented in this contribution hold for any parametrization, it is indeed possible to also make use of different decorrelation approaches (Jazaeri et al. 2014), which could further enhance the efficiency of VIB-based strategies. The large variety of applications for such a vectorial formulation makes a comprehensive discussion of performance impractical within the scope of this work, and it is therefore left as a subject of future research. However, to further emphasize the available flexibility, we briefly highlight the possible extensions to other estimators.

5.3 Extensions to other classes of estimators

The flexibility of the VIB-formulation does not restrict it to the class of integer estimators only. It could include estimators from the IA-class (Teunissen 2003a) or IE-class (Teunissen 2002) as well. For each block, for instance, one can include IA-estimators having aperture pull-in regions that are particularly accommodated to the integer estimator \(\lceil \cdot \rfloor _{i}: {\mathbb {R}}^{n_{i}} \mapsto {\mathbb {Z}}^{n_{i}}\) of that block. Such can then be used to include ambiguity-validation for each block, thereby providing flexibility and options to skip blocks when block validation fails. Also IE-estimators can be given a place in the VIB framework, for instance when it turns out that the success rate drops below the required threshold when an additional block would be fixed. Instead of outputting the conditional float solution of the remaining ambiguities, one could then still apply, using their conditional vc-matrix, best integer equivariant estimation to these ambiguities to improve upon their mean squared errors. As such, and with the various options available, the concept of partial ambiguity resolution, introduced in (Teunissen et al. 1999), is generalized to the VIB-domain.

6 Summary and concluding remarks

In this contribution we introduced the concept of vectorial integer bootstrapping (VIB) as a generalization of the popular, but scalar, integer bootstrapping. As with integer bootstrapping, VIB-estimation is characterized by two alternating operations that are sequentially applied, conditioning and integer mapping. It is due to the vectorial formulation of these two principles, that the VIB-concept creates such flexibility in designing one’s integer estimators. Many new integer estimators can be formulated, in particular when balancing computational simplicity against success rate performance.

We presented the probabilistic properties of the VIB-estimators, with a special emphasis on their probability of correct integer estimation and the formulation of easy-to-compute lower bounds and upper bounds of their success rates. We provided a new ordering in the success rate performance of various different VIB-estimators, together with corresponding numerical illustrations.

In order to enhance the success rate performance of VIB-estimation, considerations about the chosen ambiguity parametrization and the ordering of the ambiguities are important. The general guideline is to aim at forming blocks having the most precise ambiguities first, followed by blocks that have the most precision gain from the conditioning. Although this can be achieved through the construction of the full-dimensional decorrelating Z-transformation, it was shown by means of analytical examples that in many GNSS applications it can be significantly aided through the a priori construction of proper ambiguity re-parametrizations.

We also discussed further considerations when implementing VIB. As it is aimed at reducing the computational complexities of integer estimation, we described how at several of its computational steps a good use can be made of the, often readily available, Cholesky-decomposition of the system of normal equations. This not only concerns the computation of the ambiguity vc-matrix, but also of the float solution itself. Finally, we indicated that the flexible VIB-concept lends itself to further extensions, in particular in combination with estimators from the IA- and IE-class.

Data Availability

Data are included in this published article, while further data are available from the corresponding author on reasonable request.

References

Blewitt G (1989) Carrier phase ambiguity resolution for the global positioning system applied to geodetic baselines up to 2000 km. J Geophys Res Solid Earth 94(B8):10187–10203

Borre K, Strang G (2012) Algorithms for global positioning. Wellesley-Cambridge Press, Wellesley

Brack A, Henkel P, Gunther C (2014) Sequential best integer equivariant estimation for GNSS. Navigation 61(2):149–158

Forsell B, Martin Neira M, Harris R (1997) Carrier phase ambiguity resolution in GNSS-2. Proc ION GPS 1997:1727–1736

Hatch R (1989) Ambiguity resolution in the fast lane. In: Proceedings of the ION GPS89, pp 45–50

Hofmann-Wellenhof B, Lichtenegger H, Wasle E (2008) GNSS-global navigation satellite systems: GPS, GLONASS, Galileo, and more. Springer-Verlag, Wien

Hou P, Zhang B, Liu T (2020) Integer-estimable GLONASS FDMA model as applied to Kalman-filter-based short- to long-baseline RTK positioning. GPS Solut 24:93

Jazaeri S, Amiri-Simkooei A, Sharifi MA (2014) On lattice reduction algorithms for solving weighted integer least squares problems: comparative study. GPS Solut 18(1):105–114. https://doi.org/10.1007/s10291-013-0314-z

de Jonge P, Tiberius CCJM (1996) The LAMBDA method for integer ambiguity estimation: implementation aspects. LGR-Series Publications of the Delft Geodetic Computing Centre 12

de Jonge P, Tiberius CCJM, Teunissen PJG (1996) Computational aspects of the LAMBDA method for GPS ambiguity resolution. In: Proceedings of the 9th international technical meeting of the satellite division of the institute of navigation (ION GPS 1996), Institute of Navigation, vol 9, pp 935–944

Kaplan ED, Hegarty C (2006) Understanding GPS, principles and applications, 2nd edn. Artech House, Massachusetts

Leick A, Rapoport L, Tatarnikov D (2015) GPS satellite surveying, 4th edn. Wiley, New Jersey

Misra P, Enge P (2006) Global positioning system: signals, measurements, and performance, 2nd edn. Ganga-Jamuna

Morton J, van Diggelen F, Spilker JJ, Parkinson BP, Lo S, Gao G (2021) Position, navigation, and timing technologies in the 21st century: integrated satellite navigation, sensor systems, and civil applications. Wiley IEEE Press, New Jersey

Odijk D, Khodabandeh A, Nadarajah N, Choudhury M, Zhang B, Li W, Teunissen PJG (2017) PPP-RTK by means of S-system theory: Australian network and user demonstration. J Spat Sci 62(1):3–27

Odolinski R, Teunissen PJG (2020) Best integer equivariant estimation: performance analysis using real data collected by low-cost, single- and dual-frequency, multi-GNSS receivers for short- to long-baseline RTK positioning. J Geodesy 94:91

Psychas D, Verhagen S (2020) Real-time PPP-RTK performance analysis using ionospheric corrections from multi-scale network configurations. Sensors 20(11):3012

Strang G, Borre K (1997) Linear algebra, geodesy, and GPS. Wellesley-Cambridge Press, Massachusetts

Teunissen PJG (1995) The least-squares ambiguity decorrelation adjustment: a method for fast GPS integer ambiguity estimation. J Geodesy 70:65–82

Teunissen PJG (1997a) A canonical theory for short GPS baselines. Part III: the geometry of the ambiguity search space. J Geodesy 71(8):486–501

Teunissen PJG (1997b) GPS double difference statistics: with and without using satellite geometry. J Geodesy 71(3):137–148. https://doi.org/10.1007/s001900050126

Teunissen PJG (1997c) On the GPS widelane and its decorrelating property. J Geodesy 71(9):577–587. https://doi.org/10.1007/s001900050126

Teunissen PJG (1998) Success probability of integer GPS ambiguity rounding and bootstrapping. J Geodesy 72(10):606–612

Teunissen PJG (1999) An optimality property of the integer least-squares estimator. J Geodesy 73(11):587–593

Teunissen PJG (2000) ADOP based upper bounds for the bootstrapped and the least squares ambiguity success. Artif Satell 35(4):171–179

Teunissen PJG (2002) A new class of GNSS ambiguity estimators. Artif Satell 37(4):111–120

Teunissen PJG (2003a) Integer aperture GNSS ambiguity resolution. Artif Satell 38(3):79–88

Teunissen PJG (2003b) Theory of integer equivariant estimation with application to GNSS. J Geodesy 77:402–410

Teunissen PJG (2007) Influence of ambiguity precision on the success rate of GNSS integer ambiguity bootstrapping. J Geodesy 81(5):351–358

Teunissen PJG (2012) A-PPP: array-aided precise point positioning with global navigation satellite systems. IEEE Trans Signal Process 60(6):2870–2881

Teunissen PJG, Kleusberg A (1998) GPS for geodesy, 2nd edn. Springer, Berlin, Heidelberg

Teunissen PJG, Montenbruck O (2017) Handbook of global navigation satellite systems. Springer Verlag, Berlin

Teunissen PJG, Joosten P, Tiberius CCJM (1999) Geometry-free ambiguity success rates in case of partial fixing. Proc ION NTM 1999, San Diego pp 201–207

Verhagen S (2005) The GNSS integer ambiguities: Estimation and validation. PhD thesis, Delft University of Technology

Verhagen S, Li B, Teunissen PJG (2013) Ps-LAMBDA: ambiguity success rate evaluation software for interferometric applications. Comput Geosci 54:361–376

Wang K, Khodabandeh A, Teunissen PJG (2018) Five-frequency Galileo long-baseline ambiguity resolution with multipath mitigation. GPS Solut 22(3):1–14

Zaminpardaz S, Wang K, Teunissen PJG (2018) Australia-first high-precision positioning results with new Japanese QZSS regional satellite system. GPS Solut 22(4):101

Author information

Contributions

PT developed the theory and wrote the paper together with LM. The numerical and graphical results are by LM. SV, LM and PT validated the paper.

Ethics declarations

Conflict of interest

These authors declare that they have no conflict of interest.

Appendix

Proof of Lemma 3

(VIB success rate) We make use of the transformation of integral formula

with \(|\partial _{x}T(x)|\) being the determinant (in absolute value) of the Jacobian matrix of partial derivatives. Moreover, in our case we have \({\mathcal {R}}={\mathcal {P}}_{a, \mathrm{VIB}}\) and \(f:{\mathbb {R}}^{n}\rightarrow {\mathbb {R}}\) as

As transformation \(y=T(x)\) we choose

which leads for the transformed pull-in region to

with \({\mathcal {I}}(x)=(\lceil x_{1}\rfloor _{1}^{T}, \ldots , \lceil x_{v}\rfloor _{v}^{T})^{T}\), and for the transformed integrand we can write

since \(|\partial _{x}T(x)|=1\). Substitution of (52) and (53) into (49) proves the result. \(\square \)

Proof of Lemma 5

(Success rate bounds for \(\mathrm{VIB}_{\mathrm{IR}}\) and \(\mathrm{VIB}_{\mathrm{ILS}}\)) The lower bound for \(\mathrm{VIB}_{\mathrm{IR}}\) follows from the IR lower bound given in (Teunissen 1998), such that

Note that this lower bound is evaluated for the unconditional ambiguity standard deviations. The upper bound for \(\mathrm{VIB}_{\mathrm{ILS}}\) follows from the respective upper bound for an ILS estimator, also given as

with \(\mathrm{ADOP}=|Q_{{\hat{a}}{\hat{a}}}|^{\frac{1}{2n}}\), and \(c_n\) given in (35). For a proof of this upper bound, see (Teunissen 2000). \(\square \)
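As a numerical companion to these bounds, the sketch below evaluates the IR lower bound of (Teunissen 1998) in its commonly quoted form, \(\prod _{i=1}^{n}[2\Phi (1/(2\sigma _{{\hat{a}}_{i}}))-1]\), using the unconditional standard deviations, together with the ADOP as defined above. For comparison it also evaluates the scalar IB success rate, which has the same product form but uses the conditional standard deviations. The function names are hypothetical, and the chi-square based upper bound itself is not evaluated here, since it requires \(c_{n}\) of (35) and a chi-square distribution function.

```python
import math
import numpy as np

def phi(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def ir_lower_bound(Q):
    """Product bound based on the unconditional standard deviations."""
    sig = np.sqrt(np.diag(Q))
    return float(np.prod([2.0 * phi(1.0 / (2.0 * s)) - 1.0 for s in sig]))

def ib_success_rate(Q):
    """Scalar IB success rate, based on the sequential conditional standard deviations."""
    # The diagonal of the lower Cholesky factor of Q holds the standard deviation
    # of ambiguity i conditioned on ambiguities 1, ..., i-1.
    cond_sig = np.diag(np.linalg.cholesky(Q))
    return float(np.prod([2.0 * phi(1.0 / (2.0 * s)) - 1.0 for s in cond_sig]))

def adop(Q):
    """ADOP = |Q|^(1/(2n)), computed through the log-determinant for stability."""
    n = Q.shape[0]
    return math.exp(np.linalg.slogdet(Q)[1] / (2.0 * n))
```

Since the conditional standard deviations never exceed the unconditional ones, `ir_lower_bound(Q)` never exceeds `ib_success_rate(Q)`, and the two coincide for a diagonal vc-matrix.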

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Teunissen, P.J.G., Massarweh, L. & Verhagen, S. Vectorial integer bootstrapping: flexible integer estimation with application to GNSS. J Geod 95, 99 (2021). https://doi.org/10.1007/s00190-021-01552-2

DOI: https://doi.org/10.1007/s00190-021-01552-2