Abstract

Metamodels are often used to replace expensive simulations of engineering problems. When a training set is given, a series of metamodels can be constructed, and then there are two strategies to deal with these metamodels: (1) picking out the best one with the highest accuracy as an approximation of the computationally intensive simulation; and (2) combining all of them into an ensemble model. However, since the choice of approximate model depends on design of experiments (DOEs), employing of the first strategy thus increases the risk of adopting an inappropriate model. Nevertheless, the second strategy also seems not to be a good choice, since adding redundant metamodels may lead to loss of accuracy. Therefore, it is a necessary step to eliminate the redundant metamodels from the set of the candidates before constructing the final ensemble. Illuminated by the method of variable selection widely used in polynomial regression, a metamodel selection method based on stepwise regression is proposed. In our method, just a subset of n ones (n ≤ p, where p is the number of all of the candidate metamodels) is used. In addition, a new ensemble technique is proposed from the view of polynomial regression in this work. This new ensemble technique, combined with metamodel selection method, has been evaluated using six benchmark problems. The results show that eliminating the redundant metamodels before constructing the ensemble can provide more ideal prediction accuracy than directly constructing the ensemble by utilizing all of the candidates.

Similar content being viewed by others

References

Acar E, Rais-Rohani M (2009) Ensemble of metamodels with optimized weight factors. Struct Multidiscip Optim 37:279–294

Box GEP, Draper NR (1987) Expirical model building and response surface. Wiley, New York

Copeland KAF, Nelson PR (1996) Dual response optimization via direct function minimization. J Qual Technol 28:331–336

Cressie N (1988) Spatial prediction and ordinary kriging. Math Geol 20(4):405–421

De Boor C, Ron A (1990) On multivariate polynomial interpolation. Constr Approx 6:287–302

Del Castillo E, Montgomery DC (1993) A nonlinear programming solution to the dual response problem. J Qual Technol 25:199–204

Fang KT, Li R, Sudjianto A (2006) Design and modeling for computer experiments. CRC Press, New York

Friedman JH (1991) Multivariate adaptive regressive splines. Ann Stat 19(1):1–67

Goel T, Haftka RT, Shyy W, Queipo NV (2007) Ensemble of surrogates. Struct Multidiscip Optim 33(3):199–216

Jin R, Chen W, Simpson TW (2001) Comparative studies of metamodeling techniques under multiple modeling criteria. Struct Multidiscip Optim 23(1):1–13

Kksoy O, Yalcinoz T (2005) A hopfield neural network approach to the dual response problem. Qual Reliab Eng Int 21:595–603

Kohavi R, et al. (1995) A study of cross-validation and bootstrap for accuracy estimation and model selection. In: Ijcai, vol 14, pp 1137–1145

Langley P, Simon HA (1995) Applications of machine learning and rule induction. Commun ACM 38 (11):55–64

Lin DKJ, Tu W (1995) Dual response surface optimization. J Qual Technol 27:34–39

Lophaven SN, Nielsen HB, Sndergaard J (2002) Dace-a matlab kriging toolbox. Technical report IMM-TR-2002-12, Technical University of Denmark

McDonald DB, Grantham WJ, Tabor WL, Murphy M (2000) Response surface model development for global/local optimization using radial basis functions. In: Proceedings of the eighth AIAA/USAF/NASA/ISSMO symposium on multidisciplinary analysis and optimization, Long Beach, CA

Meckesheimer M, Barton RR, Simpson TW, Limayemn F, Yannou B (2001) Metamodeling of combined discrete/continuous responses. AIAA J 39(10):1950–1959

Meckesheimer M, Barton RR, Simpson TW, Booker AJ (2002) Computationally inexpensive metamodel assessment strategies. AIAA J 40(10):2053–2060

Picard RR, Cook RD (1984) Cross-validation of regression models. J Am Stat Assoc 79(387):575–583

Powell MJ (1987) Radial basis functions for multivariable interpolation: a review. In: Algorithms for approximation. Clarendon Press, pp 143–167

Queipo NV, Haftka RT, Shyy W, Goel T, Vaidyanathan R, Tucker PK (2005) Surrogate-based analysis and optimization. Prog Aerosp Sci 41:1–28

Sacks J, Welch WJ, Mitchell TJ, Wynn HP (1989) Design and analysis of computer experiments. Stat Sci 4(4):409–423

Shaibu AB, Cho BR (2009) Another view of dual response surface modeling and optimization in robust parameter design. Int J Adv Manuf Technol 41:631–641

Smola AJ, Schlkopf B (2004) A tutorial on support vector regression. Stat Comput 14(3):199–222

Viana FA, Gogu C, Haftka RT (2010) Making the most out of surrogate models: tricks of the trade. In: ASME 2010 international design engineering technical conferences and computers and information in engineering conference, American Society of Mechanical Engineers, pp 587–598

Viana FAC, Haftka RT, Steffen V (2009) Multiple surrogate: how cross-validation errors can help us to obtain the best predictor. Struct Multidiscip Optim 39:439–457

Vining GG, Bohn L (1998) Response surfaces for the mean and variance using a nonparametric approach. J Qual Technol 30:282– 291

Vining GG, Myers RH (1990) Combining taguchi and response surface philosophies: a dual response approach. J Qual Technol 22:38– 45

Zerpa L, Queipo NV, Pintos S, Salager J (2005) An optimization methodology of alkaline-surfactant-polymer flooding processes using field scale numerical simulation and multiple surrogates. J Pet Sci Eng 47:197–208

Zhou XJ, Ma YZ, Li XF (2011) Ensemble of surrogates with recursive arithmetic average. Struct Multidiscip Optim 44(5):651–671

Zhou XJ, Ma YZ, Tu YL, Feng Y (2012) Ensemble of surrogates for dual response surface modeling in robust parameter design. Qual Reliab Eng Int 29(2):173–197

Acknowledgments

The funding provided for this study by National Science Foundation for Young Scientists of China under Grant NO.71401080 and NO.201508320059, the University Social Science Foundation of Jiangsu under Grant NO.2013SJB6300072 & NO.TJS211021, the University Science Foundation of Jiangsu under Grant NO.12KJB630002, and the Talent Introduction Foundation of Nanjing University of Posts and Telecommunications under Grant NO.NYS212008 & NO.D/2013/01/104 are gratefully acknowledged.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix A: Several metamodeling techniques

Here, there are four metamodeling techniques (PRS, RBF, Kriging, SVR) are considered.

1.1 A.1 PRS

For PRS, the highest order is allowed to be 4 in this paper, but the used order in a specific problem is determined by the selected sample set. When the highest order of a polynomial model is 4, it can be expressed as:

where \(\widetilde {F}\) is the response surface approximation of the actual response function, N is the number of variables in the input vector x, and a,b,c,d,e are the unknown coefficients to be determined by the least squares technique.

Notice that 3rd and 4th order models in polynomial model do not have any mixed polynomial terms (interactions) of order 3 and 4. Only pure cubic and quadratic terms are included to reduce the amount of data required for model construction. A lower order model (Linear and Quadratic) includes only lower order polynomial terms (only linear and quadratic terms correspondingly).

1.2 A.2 RBF

The general form of the RBF approximation can be expressed as:

Powell (1987) consider several forms for the basis function φ(⋅):

-

1.

\(\varphi (r)=e^{\left (\left .-r^{2} \right /c^{2}\right )}\) Gaussian

-

2.

\(\varphi (r)=(r^{2}+c^{2})^{\frac {1}{2}}\) Multiquadrics

-

3.

\(\varphi (r)=(r^{2}+c^{2})^{-\frac {1}{2}}\) Reciprocal Multi-quadrics

-

4.

φ(r)=(r/c 2) log(r/c) Thin-Plate Spline

-

5.

\(\varphi (r)=\frac {1}{1+e^{r\left / c\right .}}\) Logistic

where c ≥ 0. Particularly, the multi-quadratic RBF form has been applied by Meckesheimer et al. (2002, 2001) to construct an approximation based on the Euclidean distance of the form:

where ∥⋅∥ represents the Euclidean norm. Replacing φ(x) with the vector of response observations, y yields a linear system of n equations and n variables, which is used to solve β. As described above, this technique can be viewed as an interpolating process. RBF metamodels have produced good fits to arbitrary contours of both deterministic and stochastic responses (Powell 1987). Different RBF forms were compared by McDonald et al. (2000) on a hydro code simulation, and the author found that the Gaussian and the multi-quadratic RBF forms performed best generally.

1.3 A.3 Kriging

For computer experiments, Kriging is viewed from a Bayesian perspective where the response is regarded as a realization of a stationary random process. The general form of this model is expressed as:

which is comprised of the linear model component of k specified function f i (x) (i.e., the expression of the function is given, which is defined below) with unknown coefficients β i (i = 1,...,k), and Z(⋅) is a stochastic process, commonly assumed to be Gaussian, with mean zero and covariance

where σ 2 is the process variance; parameter 𝜃, which is somewhat like the parameter c in Gaussian basis function of RBF, is estimated using maximum likelihood.

For the set S = {s 1, ⋯,s n }, we have the corresponding outputs y s ={y(s 1),⋯,y(s n )}T. Considering the linear predictor

with c = c(x)∈R n. Note that the members of the weight vector c are not constants (whereas β in formula (17) are) but decrease with the distance between the input x to be predicted and the sampled points S = {s 1, ⋯,s n }; this S = {s 1, ⋯,s n } determines the simulation output vector y s ={y(s 1),⋯,y(s n )}T. Here, we replace y s with the random vector Y s ={Y(s 1),⋯,Y(s n )}T. In order to keep the predictor unbiased, we demand

and under this condition minimize

And then, we have

where

Under the constraint (4), we get

where

and

Then the MSE-optimal predictor (i.e., the best linear unbiased predictor (BLUP)) is

where \(\widehat {\boldsymbol {\beta }} = {({\textbf {{F}}^{T}}{\textbf {{V}}^{- 1}}\textbf {{F}})^{- 1}}{\textbf {{F}}^{T}}{\textbf {{V}}^{- 1}}{\textbf {{y}}_{\textbf {s}}}\) and \(\widehat {\boldsymbol {\gamma }} = \textbf {{v}}_{\textbf {x}}^{T}{\textbf {{V}}^{- 1}}({\textbf {{y}}_{\textbf {s}}} - \textbf {{F}}\widehat {\boldsymbol {\beta }})\). Function f i (x) in (1) is usually defined with polynomials of orders 0, 1, and 2. More specific, with x j denoting the jth component of x,Constant, p = 1:

Linear, p = n + 1:

Quadratic, \(p=\frac {1}{2}(n+1)(n+2)\):

The r e g p o l y0 and r e g p o l y1 corresponds to (29) and (30) respectively in MATLAB toolbox developed by Lophaven et al. (2002).

1.4 A.4 ε-SVR

Given the data set {(x 1, y 1),......,(x l , y l )}(where l denotes the number of samples) and the kernel matrix K i j = K(x i ,x j ), and if the loss function in SVR is ε-insensitive loss function

then the ε-SVR is written as:

The Lagrange dual model of the above model is expressed as:

where K(⋅,⋅) is kernel function. After being worked out the parameter α (∗), the regression function f(x) can be gotten.

The kernel function should be a Mercer kernel which has to be continuous, symmetric, and positive definite. Commonly adopted choices for K(⋅,⋅) (Smola and Schlkopf 2004) are

-

1.

k(x i , x j )=(x i ⋅x j ) (linear)

-

2.

k(x i , x j )=(x i ⋅x j )m (m degree homogeneous polynomial)

-

3.

k(x i , x j )=(x i ⋅x j + c)m (m degree inhomogeneous polynomial)

-

4.

\(k(\mathbf {x}_{i},\mathbf {x}_{j}) = \exp (-\frac {{{{\left \| {{{\mathbf {x}}_{i}} - {{\mathbf {x}}_{j}}} \right \|}^{2}}}}{{{2\sigma ^{2}}}})\) (Gaussian)

-

5.

\(k(\mathbf {x}_{i},\mathbf {x}_{j}) = \exp (- \sum \limits _{k = 1}^{l} {\theta {{\left \| {{\mathbf {x}}_{_{i}}^{k} - {\mathbf {x}}_{_{j}}^{k}} \right \|}^{{p_{k}}}}} ))\) (Kriging)

No matter which form of k(⋅,⋅) is chosen, the technique of finding the support vector remains the same. In all of the kernel functions mentioned above, Gaussian kernel is the most popular kernel function, which is used in this paper.

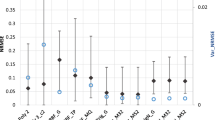

Appendix B: Box plots

In a box plot, the box is composed of lower quartile (25 %), median (50 %), and upper quartile (75 %) values. Besides the box, there are two lines extended from each end of the box, whose upper limit and lower limit are defined as follows:

where Q1 is the value of the line at lower quartile, Q3 is the value of the line at upper quartile, IQR = Q3−Q 1, X m i n i m u m and X m a x i m u m are the minimum and maximum value of the data. Outliers are data with values beyond the ends of the lines by placing a “ + ” sign for each point.

Rights and permissions

About this article

Cite this article

Zhou, X., Jiang, T. Metamodel selection based on stepwise regression. Struct Multidisc Optim 54, 641–657 (2016). https://doi.org/10.1007/s00158-016-1442-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-016-1442-1