Abstract

We describe a Transformer model for a retrosynthetic reaction prediction task. The model is trained on 45 033 experimental reaction examples extracted from US patents. It correctly predicts the reactant set for 42.7% of cases in the external test set. During training we applied different learning rate schedules and snapshot learning. These techniques can prevent overfitting and can therefore remove the need for an internal validation dataset, which is advantageous for deep models with millions of parameters. We thoroughly investigated different approaches to training Transformer models and found that snapshot learning with weight averaging at the learning rate minima works best. When decoding the model output probabilities, the temperature has a strong influence: at \(\text {T}=1.3\) it improves the accuracy of the models by 1–2%.

1 Introduction

New chemical compounds drive technological advances in material, agricultural, environmental, and medical sciences, thus embracing all fields of scientific activity that have brought social and economic benefits throughout human history. The design of chemicals with predefined properties is the arena of QSAR/QSPR (Quantitative Structure Activity/Property Relationships) approaches, which aim at finding correlations between molecular structures and their desired outcomes and then applying these models to optimise the activity/property of compounds.

The advent of deep learning [3, 5] gave a new impulse to virtual modeling and also opened the way for a promising set of generative methods based on Recurrent Neural Networks [10], Variational Autoencoders [13], and Generative Adversarial Networks trained with reinforcement learning [14, 23]. These techniques are changing the course of QSAR studies from observation to invention: from virtual screening of available compounds to direct synthesis of new candidates. Generative models can produce large sets of promising molecules and, paired with SMILES-based QSAR methods [18], provide a strong foundation for creating highly optimized focussed libraries. However, estimating the synthetic accessibility of these compounds remains an open question, although several approaches based on fragmentation [11] and machine learning [7] have been developed. To synthesize a molecule, one needs a plan of a multi-step synthesis and a set of available reactants. Finding an optimal combination of reactants, reactions, and conditions to obtain the compound in good yield and with sufficient quality and quantity is not a trivial task even for experts in organic chemistry. Recent advances in computer-aided synthesis planning are reviewed in [2, 6, 9].

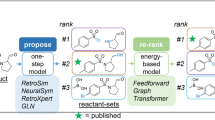

The retrosynthetic analysis worked out by Corey [8] tries to account for all factors while deriving the synthetic route. It iteratively decomposes the molecule into simpler blocks until all of them become available either by purchase or by a synthesis described in the literature. At each step (Fig. 1), all possible disconnections (rules) with known reactions simplify the target molecule, yielding less complex compounds. Some of them may already be available, while the others undergo the next step of retrosynthetic decomposition. Due to the recursive nature of the procedure, it can involve thousands of putative compounds, so computational retrosynthetic approaches can greatly help chemists in finding the best routes. Managing the database of such rules is complicated and, more critically, models based on it cannot accommodate new reactions and will always be outdated. Unfortunately, more than 60 years of developing rule-based systems have ended with no remarkable success in synthesis planning programs [28]. Another approach to the problem is to use so-called template-free methods inspired by the success of machine translation. They don’t require a database of templates and rules because they can derive this information during training directly from a database of organic reactions with clearly designated roles of reactants, products, reagents, and conditions.

The analogy between machine translation and retrosynthesis is evident: each target molecule has predecessors from which it can be synthesized, just as every meaningful sentence can be translated from a source language to a target one. If all parts of a reaction are written in SMILES notation, then our source and target sentences are composed of valid SMILES tokens as words. The main goal of this work is to build a model that can correctly predict the set of reactants for a given target molecule, in our example (Note 1) COC(=O)c1cccc(-c2nc3cccnc3[nH]2)c1 in Fig. 1. Namely, it should predict Nc1cccnc1N.COC(=O)c1cccc(C(=O)O)c1 in this case.

Fig. 1. An example of a retrosynthetic reaction: the target molecule is depicted on the left side of the arrow, and one possible set of reactants that can lead to the target is shown on the right side, both as a common chemical scheme and in SMILES notation. Here two successive amidation reactions result in cyclisation and aromatization.

The neural sequence-to-sequence (seq2seq) approach has recently been applied to the direct reaction prediction task [26, 27] with outstanding statistical parameters of the final models: 90.4% accuracy on the test set. Seq2seq modeling has also been tested on the retrosynthesis task [21], but due to the complex nature of retrosynthesis itself and the difficulty of judging whether predicted reactants are correct (Note 2), the accuracy on the test set was a moderate 37.4%, still comparable to the 35.4% of rule-based systems. We asked whether models for one-step retrosynthesis could be improved by utilizing modern neural network architectures and training techniques. Applying the Transformer model [29] together with a cyclical learning rate schedule [24] resulted in a model with an accuracy of 42.7%, which is more than 5 percentage points higher than the baseline model [21].

Our main contributions are:

- We show that the Transformer can be used efficiently for a retrosynthesis prediction task.
- We show that for this particular task there is no advantage in using a validation dataset for early stopping or other parameter optimization. We trained all the parameters directly on the training dataset.
- Applying weight averaging and snapshot learning helped to train the most precise model for one-step retrosynthesis prediction. We averaged the weights over 5 successive cycles of the learning rate schedule.
- Increasing the temperature while performing the beam-search procedure improves the accuracy by up to 2%.

2 Approach

2.1 Dataset

In this study we used the same dataset of reactions as in [21]. This dataset was filtered from the USPTO database [22], originally derived from US patents, and contains 50 000 reactions classified into 10 reaction types [25]. The authors of [21] further preprocessed the database by splitting multi-product reactions into several single-product reactions. The resulting dataset contains 40 029, 5 004, and 5 004 reactions for training, validation, and testing, respectively. Information about the reaction type was discarded, as we aimed at building a general model using only the SMILES of products and reactants.

2.2 Model Input

The seq2seq models were developed to support machine translation, where the input is a sentence in one language and the output is a sentence with approximately the same meaning in another language. The string nature of the data implies that a tokenization procedure, similar to those used with word2vec, has to be applied to preprocess the input. Most works in cheminformatics dealing with SMILES tokenize the input with a regexp equal or similar to the one used in [26].

Though such tokenization is closer to the way chemists think, it also has drawbacks that confuse the network by introducing poorly represented molecular parts. For example, after applying this regexp to the database one can see some infrequent moieties such as [C@@], [C@@H], [S@@], [C@], [C@H], [N@@+], [se], [C-], [Cl+3]. According to the SMILES specification, the content of brackets can be quite complex, gathering not only the element’s name itself but also its isotopic value, stereochemistry configuration, formal charge, and number of hydrogens (Note 3). Strictly speaking, to do the tokenization right one should also parse the content of the brackets, which only increases the number of possible words in the vocabulary and eventually leads to the simplest tokenization, with single characters only. We tried different tokenization schemes in this work but did not see any improvement over the simple character-based method.
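The difference between the two schemes can be illustrated with a short sketch. The pattern below is an atom-level regexp of the kind used in the SMILES seq2seq literature; it is an assumption chosen for illustration and is not claimed to be the exact expression of [26]. The function names are hypothetical.

```python
import re

# Illustrative atom-level pattern (an assumption, not necessarily the regexp of [26]).
# A bracket expression such as [nH] or [C@@H] becomes one token.
ATOM_PATTERN = re.compile(
    r"(\[[^\]]+\]|Br|Cl|[BCNOSPFI]|[bcnosp]|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)


def atom_tokens(smiles):
    """Split a SMILES string into atom-level tokens."""
    tokens = ATOM_PATTERN.findall(smiles)
    # Guard against characters that the pattern silently skipped.
    if "".join(tokens) != smiles:
        raise ValueError("unrecognised characters in " + smiles)
    return tokens


def char_tokens(smiles):
    """Character-based tokenization: every symbol is its own token."""
    return list(smiles)


target = "COC(=O)c1cccc(-c2nc3cccnc3[nH]2)c1"
print(atom_tokens(target))
print(char_tokens(target))
```

With the atom-level pattern, `[nH]` is a single vocabulary entry, whereas the character-based scheme spells it out as `[`, `n`, `H`, `]` and therefore never needs a dedicated token for a rare bracket expression.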

Our final vocabulary has a length of 66 symbols (Note 4).

To convert a token to a dense vector we used a trainable embedding (Note 5) of size 64. It is well known that training neural networks in batches is more stable, faster, and leads to more accurate models. To facilitate batch training we also used masks of the input strings of shape (batch_size, max_length), with elements equal to 1 at positions that hold valid SMILES symbols and 0 everywhere else.
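A minimal NumPy sketch of this batch preparation is given below. The toy vocabulary, the reserved padding index 0, and the helper name `encode_batch` are assumptions made for the example and are not taken from the published code.

```python
import numpy as np


def encode_batch(smiles_list, vocab, max_length):
    """Return token ids and a 0/1 mask, both of shape (batch_size, max_length)."""
    ids = np.zeros((len(smiles_list), max_length), dtype=np.int32)
    mask = np.zeros((len(smiles_list), max_length), dtype=np.float32)
    for row, smiles in enumerate(smiles_list):
        if len(smiles) > max_length:
            raise ValueError("string longer than max_length")
        for col, symbol in enumerate(smiles):
            ids[row, col] = vocab[symbol]
        # 1 where a valid SMILES symbol is present, 0 for padding.
        mask[row, :len(smiles)] = 1.0
    return ids, mask


batch = ["Nc1cccnc1N", "CCO"]
# Hypothetical toy vocabulary; index 0 is reserved for padding.
vocab = {ch: i + 1 for i, ch in enumerate(sorted(set("".join(batch))))}
ids, mask = encode_batch(batch, vocab, max_length=12)
print(mask)
```

The mask is typically multiplied into the loss and used to block attention to padded positions, so that padding does not contribute to the gradients.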

2.3 Transformer Model

For this study we used the Transformer model [29], a new generation of the encoder-decoder family of neural networks. The architecture is suited to exploring the internal representation of data by deriving questions (Q) that the data could be asked, keys (K) for its indexed knowledge, and answers written as values (V) corresponding to the queries and keys. Technically, these three entities are simply matrices learned during network training. Multiplying them with the input (X) gives the keys (k), questions (q), and values (v) relevant to the current batch. Equipped with these calculated parameters of the input, the self-attention layer transforms it, pointing to particular parts of the encoded (decoded) sequence according to the attention vector.
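The computation described above can be sketched for a single attention head as follows. This is a simplified illustration in NumPy following the scaled dot-product form of [29], without masking or multiple heads; the random inputs and the head size of 8 are assumptions made for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention.

    X has shape (length, d_model); Wq, Wk, Wv are the learned matrices.
    """
    q, k, v = X @ Wq, X @ Wk, X @ Wv           # queries, keys, values
    scores = q @ k.T / np.sqrt(k.shape[-1])    # similarity of every position pair
    weights = softmax(scores, axis=-1)         # attention vector for each position
    return weights @ v, weights


rng = np.random.default_rng(0)
X = rng.normal(size=(5, 64))                   # 5 symbols, embedding size 64
Wq, Wk, Wv = (rng.normal(size=(64, 8)) * 0.1 for _ in range(3))
out, weights = self_attention(X, Wq, Wk, Wv)
print(out.shape, weights.sum(axis=1))
```

Each row of `weights` sums to one and states how strongly the corresponding position attends to every other position of the string.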

The Transformer has entirely dispensed with recurrent and convolutional operations. To account for distances between elements of a string, a positional encoding matrix was proposed whose elements are values of trigonometric functions that depend on the position in the string and on the position along the embedding dimension. Summed with the learned embeddings, the positional encodings link distant parts of the input together. The output of the self-attention layers is then mixed with the original data, layer-normalized, and passed position-wise through a couple of ordinary dense layers, to go further either to the next level of self-attention layers or to the decoder as an information-rich vector representing the input. The decoder part of the Transformer resembles the encoder but has an additional attention layer that attends to the encoder’s output.
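As an illustration, the sinusoidal encoding proposed in [29] can be written as below. It is assumed here, not confirmed by the text, that the model in this study uses exactly this form; `d_model` must be even in this sketch.

```python
import numpy as np


def positional_encoding(max_length, d_model):
    """Sinusoidal positional encoding of shape (max_length, d_model), as in [29]."""
    pos = np.arange(max_length)[:, None]        # position in the string
    i = np.arange(0, d_model, 2)[None, :]       # even indices along the embedding
    angles = pos / np.power(10000.0, i / d_model)
    pe = np.zeros((max_length, d_model))
    pe[:, 0::2] = np.sin(angles)                # even embedding indices
    pe[:, 1::2] = np.cos(angles)                # odd embedding indices
    return pe


pe = positional_encoding(max_length=100, d_model=64)
print(pe.shape)
```

The matrix is added element-wise to the embedded input, so every position carries a distinct pattern of values.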

The Transformer model shows state-of-the-art results in machine translation and in reaction outcome prediction [27]. The latter work showed that training the Transformer on large and noisy datasets results in a model that can outperform not only other machine learning models but also well-qualified and experienced organic chemists.

2.4 Model Inference

The model estimates the probability of the next symbol over the model’s vocabulary given all previous symbols in the string. Technically, the Transformer model first calculates logits, \(z_i\), from the input string \(x_1, \ldots , x_L\) and the already decoded symbols \(y_1, \ldots , y_{i-1}\), and then transforms them to probabilities.

Here \(x_i\) is the input of the model at position i; L is the length of the input string; \(y_i\) is the decoded output of the model up to position \((i-1)\); and \(z_i\) are the logits that are converted to probabilities:

\(q_i = \frac{\exp (z_i/T)}{\sum _{j=1}^{V} \exp (z_j/T)}\)

where V is the size of the vocabulary (66 in this work) and T stands for the temperature (Note 6), usually set to 1.0 in standard softmax layers. With higher T the landscape of the probability distribution becomes smoother. During the training the model adapts its weights to better predict \(q_i\), so \(y_i = q_i\).
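The effect of the temperature can be seen in a minimal NumPy sketch of a temperature-scaled softmax. The logits are made-up values for a vocabulary of four symbols and the function name is hypothetical.

```python
import numpy as np


def softmax_with_temperature(logits, T=1.0):
    """Convert logits to probabilities; T > 1 flattens the distribution."""
    z = np.asarray(logits, dtype=np.float64) / T
    z = z - z.max()                      # numerical stability
    e = np.exp(z)
    return e / e.sum()


logits = np.array([4.0, 2.0, 1.0, 0.5])  # made-up values
p_10 = softmax_with_temperature(logits, T=1.0)
p_13 = softmax_with_temperature(logits, T=1.3)
print(p_10.round(3), p_13.round(3))
```

At the higher temperature the most probable symbol loses some probability mass to the alternatives, while the ranking of the symbols stays the same.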

During inference, however, there are several ways to convert \(q_i\) into \(y_i\), namely greedy and beam search. The first picks the symbol with the maximum probability, whereas the second holds the \(top-K\) (K = beam size) suggestions of the model at each step and sums up the overall likelihood for each of the K final decodings. Beam search allows better inference and exploration of the probability landscape than greedy search, because at a particular decoding step it may choose a symbol with less than the maximum probability while the total likelihood of the result can still be higher due to larger probabilities at the following steps.
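The difference between the two decoding strategies can be illustrated with a toy example. The sketch below is a generic beam search over summed log-probabilities, not the implementation used in this study; `toy_model` and its probabilities are invented so that the greedy choice is not the most likely complete string.

```python
import math


def beam_search(next_log_probs, beam_size, max_len, eos="$"):
    """Return finished strings with their summed log-probabilities, best first."""
    beams = [("", 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = []
        for prefix, score in beams:
            for token, logp in next_log_probs(prefix).items():
                candidates.append((prefix + token, score + logp))
        # Keep only the beam_size most likely continuations.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_size]:
            (finished if seq.endswith(eos) else beams).append((seq, score))
        if not beams:
            break
    return sorted(finished, key=lambda c: c[1], reverse=True)


def toy_model(prefix):
    """Hypothetical next-symbol distribution; '$' ends a string."""
    table = {
        "": {"A": 0.6, "B": 0.4},
        "A": {"C": 0.55, "D": 0.45},
        "B": {"C": 0.9, "D": 0.1},
    }
    probs = table.get(prefix, {"$": 1.0})
    return {token: math.log(p) for token, p in probs.items()}


greedy = beam_search(toy_model, beam_size=1, max_len=3)
beam = beam_search(toy_model, beam_size=2, max_len=3)
print(greedy[0][0], math.exp(greedy[0][1]))
print(beam[0][0], math.exp(beam[0][1]))
```

Greedy search (a beam of size 1) commits to `A` at the first step and ends with a total probability of 0.33, whereas a beam of size 2 keeps `B` alive and finds `BC$` with probability 0.36.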

2.5 Training Heuristics

Training a Transformer model is a challenge, and several heuristics have been proposed [24], some of which were used in this study:

Using as big a batch size as possible. Due to our hardware limitations we could not set the batch size to more than 64 (Note 7).

Increasing the learning rate at the beginning of training up to warmup steps (Note 8). The authors of the original Transformer paper [29] used 4 000 steps for warming up. The Transformer model for the reaction prediction task from [27] used 8 000 steps. We analysed different values for warmup and eventually found that 16 000 works well with our model.

Applying cyclic learning rate schedules. This tip can generally improve any model [17] through better exploration of the loss landscape with bigger learning rates after the optimiser has fallen into some local minimum. For this study we used the following scheme for learning rate calculation depending on the step:

where cycle stands for the number of steps during which the learning rate decreases before rising to the maximum again.

where \({ factor}\) is just a constant. Big values of \({ factor}\) introduce numerical instability during training, so after several trials we set \({ factor} = 20.0\). The curve for learning rate in this study is shown in Fig. 2, plot (4, f).

Averaging weights during the last steps (usually 10–20) of training, or at the minima of the learning rate in the case of snapshot learning [16]. With cyclic learning rate schedules it is also possible to average the weights of those models that have a minimum in loss just before the rate is increased. Such an approach leads to more stable and flatter regions of the loss landscape [17].
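The warm-up and cyclic schedules can be sketched as follows. The functional form is an assumption: a linear warm-up followed by a decay inversely proportional to the step number, chosen because with \({ factor} = 20.0\), 16 000 warmup steps, and 704 batches per epoch (Note 8) it reproduces the learning rate of about \(2.8*10^{-5}\) quoted in Sect. 3.1 for 1 000 epochs. The restart rule in `cyclic_lr` and the cycle length of 7 040 steps are hypothetical.

```python
def warmup_lr(step, warmup=16000, factor=20.0):
    """Linear warm-up followed by a 1/step decay (assumed form)."""
    step = max(step, 1)
    return factor * min(1.0, step / warmup) / max(step, warmup)


def cyclic_lr(step, cycle, warmup=16000, factor=20.0):
    """Hypothetical cyclic variant: after warm-up the decay restarts every `cycle` steps."""
    if step > warmup:
        step = warmup + (step - warmup) % cycle
    return warmup_lr(step, warmup, factor)


# One step is one batch: 40 029 + 5 004 reactions with a batch size of 64.
steps_per_epoch = -(-(40029 + 5004) // 64)      # ceiling division

print(steps_per_epoch)
print(warmup_lr(16000))                         # maximum, reached at the end of warm-up
print(warmup_lr(1000 * steps_per_epoch))        # after 1 000 epochs without cycles
print(cyclic_lr(16000 + 7040, cycle=7040))      # back at the maximum after one cycle
```

Under these assumptions the cyclic schedule returns to its maximum every `cycle` steps, and the weights saved just before each restart are the snapshots taken at the learning rate minima.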

3 Results

3.1 Learning Details and Evaluation of Models for Retrosynthesis Prediction

For this study, we implemented the Transformer model with the TensorFlow [1] library to support its integration into our in-house set of programs (https://github.com/bigchem/retrosynthesis). All values reported are averages over three repeated runs. Preliminary modeling, in which we tried combinations of 2, 3, 4, 5 layers with 6, 8, 10, 12 heads, showed that the architecture with 3 layers and 8 attention heads works well for the datasets, so all calculations were performed with these values fixed. The number of learnable parameters of the model is 1 882 176; the embedding layer, common to products and reactants, has size 64.

Fig. 2. Summary of learning curves for the Transformer model: (a) original learning rate schedule with warmup; (b) cyclic learning rate with warmup; (c) cross-entropy loss for training and (d) validation; (e) cross-entropy loss and (f) character-based accuracy for training a model with the cyclic learning rate schedule.

Following the standard machine learning protocol, we trained our first models (T1) using three datasets for training, validation, and external testing (8:1:1), as was done in [21]. Learning curves for T1 are depicted in Fig. 2, (c) and (d), for training and validation loss, respectively; (a) shows the original learning rate schedule developed by the authors of the Transformer, but with 16 000 warmup steps. On reaching a cross-entropy loss of about 0.1, the validation loss stagnates without noticeable fluctuations while the training loss steadily decreases. After the warm-up phase the learning rate begins to fade, and after 1 000 epochs its value reaches \(2.8*10^{-5}\), which inevitably stops the training because the updates become too small.

During the decoding procedure, we explored the influence of the temperature parameter on the final quality of prediction and found that inferring at higher temperatures gives better results than at \(\text {T}=1\). This observation held for all our models. Figure 3 shows the influence of this parameter on reactant prediction for a part of the training set. Clearly, at \(\text {T}=1.3\) the model reaches the maximum of chemistry-based accuracy. One can explain this by the fact that at higher temperatures the landscape of the output probabilities of the model is smoother, which lets the beam-search procedure find more suitable paths during decoding. Of course, the temperature influences only the relative distances between peaks and not their order, so it does not affect the greedy search method.

With the early stopping technique, training would be stopped at around epoch 200 (Note 9). Such a model, marked \(\text {T}1_1\) in Table 1, reached a TOP-1 accuracy of 37.9% on the test set. If we instead chose the last model, obtained at epoch 1 000, then this model, \(\text {T}1_2\), gave a better value of 39.8%. In this case we did not see any need for the validation dataset and, keeping in mind that our model has almost 2 million parameters, we decided to combine the training and validation sets and train our next models on both, i.e., without validation. The model T2 was trained on all data with the same learning rate schedule as T1. The results obtained when applying T2 to the test set are better than for the T1 model, namely 41.8% vs. 39.8%, respectively.

Then we trained our model with the cyclic learning rate schedule, Eq. 3, Fig. 2 (b), for better exploration of the loss landscape. During training, we also saved the character-based accuracy of the model, Fig. 2 (f). This snapshot training regime [16] produces a different set of weights at each minimum of the learning rate. Averaging them is to some extent equivalent to a consensus of models, but within one model [17]. We tried different averaging regimes for T3 and found that averaging over the last five cycles gives the best results.
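Weight averaging over snapshots amounts to an element-wise mean of the saved parameters. A minimal sketch is given below; the dictionary layout of a snapshot and the toy values are assumptions for illustration and do not reflect the checkpoint format of the actual implementation.

```python
import numpy as np


def average_snapshots(snapshots):
    """Element-wise average of a list of {name: array} weight dictionaries."""
    names = snapshots[0].keys()
    return {name: np.mean([s[name] for s in snapshots], axis=0) for name in names}


# Made-up snapshots, one per learning rate minimum.
snapshots = [
    {"w": np.full((2, 2), float(k)), "b": np.array([k, -k], dtype=float)}
    for k in range(1, 8)
]
averaged = average_snapshots(snapshots[-5:])    # the last five cycles
print(averaged["w"], averaged["b"])
```

The averaged dictionary has the same shapes as a single snapshot, so it can be loaded into one model and costs nothing extra at inference time, unlike an explicit ensemble.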

Our final T3 model outperforms [21] by 5.3% with beam search and, more importantly, it is also effective with greedy search (40.6%). The latter is much faster and consequently more suitable for virtual screening campaigns.

It is worth noting that the TOP-5 accuracy reaches almost 70%. This means that the model can correctly predict the reactants, but the scoring is sometimes wrong, so the TOP-1 accuracy is much lower. We tried to improve the TOP-1 scoring with an internal confidence estimation.
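The TOP-k metric can be sketched as below. The text does not state how a prediction is matched to the ground truth, so this example assumes an exact, order-independent comparison of the dot-separated reactant strings without any canonicalization (which in practice would require a cheminformatics toolkit). The second reaction and all predictions are invented.

```python
def reactant_set(smiles):
    """Split a dot-separated SMILES string into an order-independent set."""
    return frozenset(smiles.split("."))


def top_k_accuracy(predictions, references, k):
    """Fraction of cases where the reference set is among the first k candidates."""
    hits = 0
    for candidates, reference in zip(predictions, references):
        truth = reactant_set(reference)
        if any(reactant_set(c) == truth for c in candidates[:k]):
            hits += 1
    return hits / len(references)


references = ["Nc1cccnc1N.COC(=O)c1cccc(C(=O)O)c1", "CCO.CC(=O)O"]
predictions = [
    ["COC(=O)c1cccc(C(=O)O)c1.Nc1cccnc1N", "CCN"],   # correct at rank 1
    ["CCO", "CC(=O)O.CCO"],                          # correct only at rank 2
]
print(top_k_accuracy(predictions, references, k=1))
print(top_k_accuracy(predictions, references, k=2))
```

A gap between the two numbers, as in this toy case, indicates that the correct answer is generated but ranked too low, which is the situation described above.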

3.2 Internal Scoring

The beam search calculates the sum of the negative logarithms of the probabilities of selecting a token at each step, and thus this value can serve as a measure of internal confidence. To check this hypothesis, we selected the T3-2 model and estimated how well its internal score distinguishes between correct and invalid predictions. The parameters of the classifier were: \(\text {AUC} = 0.77\), \(\text {optimal threshold} = 0.00678\). Then we validated the model with an additional condition: if the score is less than the optimal threshold we selected the answer, otherwise we went to the next candidate among the possible reactant sets returned by the beam search. The results were even worse than without thresholds, 28.45% vs. 42.42%. A possible explanation is that the estimation has nothing to do with organic chemistry. The model derives a character-based score relying only on the tokens in a string, and increasing this value does not improve the quality of the prediction. We saw the same effect during training, where the character accuracy is 98% whereas the chemistry-based metric is much lower.
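The selection rule with a threshold can be written as a short sketch. The candidate scores are invented, lower scores are taken to mean higher confidence, and returning `None` when no candidate passes is an assumption, since the text does not say what was done in that case.

```python
def pick_with_threshold(candidates, threshold):
    """Return the first beam-search candidate whose score is below the threshold.

    candidates: list of (reactants, score) in the order returned by beam search.
    """
    for reactants, score in candidates:
        if score < threshold:
            return reactants
    return None  # assumption: no answer if every candidate is rejected


# Made-up candidates for one target molecule.
candidates = [("A.B", 0.02), ("C.D", 0.004), ("E", 0.001)]
print(pick_with_threshold(candidates, threshold=0.00678))
```

In this toy case the top-ranked candidate is skipped in favour of the second one, which shows how the rule can replace a correct TOP-1 answer whenever the internal score is a weak indicator of correctness.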

Estimating optimal thresholds on training sets is almost always a bad idea because a model is biased towards its source data. The correct way is to use a validation dataset instead. We built the classifier for T1-2 with the characteristics \(\text {AUC} = 0.65\), optimal threshold 0.00396, and applied it when testing the model. The results were again worse, 14.1% vs. 40.85%. There are no significant differences in accuracy between unnormalized scores and scores normalized by the length of the reactants string. Figure 4 shows ROC curves for the T1-2 and T3-2 models derived at \(\text {T}=1.3\). Evidently, one cannot use this estimation to improve the TOP-1 scoring.

4 Discussion

Much attention has been paid in the scientific literature to rule-based approaches [4, 28]. Since the authors of [20] described an algorithm for automatic rule extraction from a mapped reaction database, several implementations of the procedure have appeared and have been widely accepted by researchers. However, it should be noted that, first, there is no algorithm to perform atom-mapping [2] if it is absent (the typical situation with electronic laboratory notebooks (ELN), for example). Second, the available information on synthesis usually contains only positive reactions, so all binary classification accuracies are inevitably overestimated because of the artificial negative sets used in the studies. Finally, the absence of a commonly accepted dataset for testing makes the results of different groups practically incomparable and biased towards the problems the authors tried to solve. The authors of [4] selected 40 molecules from the DrugBank database to test their multiscale models, whereas [21] used a database specially prepared for classification [25].

Our model can correctly predict the reactant set within the TOP-5 with an accuracy of 69.8%. Internal confidence estimation cannot guarantee a correct ordering of reactant sets, so different scoring methods should be developed. One promising way is to use a forward reaction prediction model to estimate whether it is possible to assemble the target molecule from the proposed reactants. The scoring model should have excellent characteristics, and it is probably possible to apply the same cyclic learning rate and snapshot averaging to build it.

The first work on applying reinforcement learning to the whole retrosynthetic path [28] showed superior performance compared to the rule-based methods developed before. More importantly, it can deal with several steps of synthesis. However, the policy learned during training again used extracted rules, which limits the method. Thus, the development of models for direct estimation of reactants is still of prime importance. During the encoding process, the Transformer finds an internal representation of a reaction which can be useful for multicomponent QSAR [19], for predicting rate constants [12] and yields of reactions. Embedding such systems in policy networks within the reinforcement learning paradigm can bring forward an entirely data-driven approach to solving challenging organic synthesis problems.

5 Conclusions

We have described a Transformer model for the one-step retrosynthesis prediction task. Our final model was trained with a cyclic learning rate schedule, and its weights were averaged over the last five learning rate minima. The model outperforms the previously published retrosynthetic character-based model by 5.3%. It also does not require the extraction of specific rules, atom mappings, or reaction types in the reaction dataset. We believe it is possible to improve the model further, for example by applying the knowledge distillation method [15]. The current model can be used as a building block for reinforcement learning aimed at solving complex organic synthesis problems.

All source code and the trained models are available online via GitHub.

Notes

1. This reaction is in the test set and it was correctly predicted by our model.

2. A target molecule can usually be synthesized with different reactions starting from different sets of reactants. The predictions of the model may be correct from an organic chemist’s point of view but differ from the reactant set in the ground truth. This may lead to an underestimation of the effectiveness of models.

3. We do not want to exclude stereochemistry information, charges, or explicit hydrogens from our model, since that would reduce the dataset. Moreover, work on generative models has shown the excellent ability of models to close cycles, for example, c1cc(COC)cccc1. If the model can capture such a long-distance relation, why should it fail on much simpler substrings enclosed in brackets?

4. This vocabulary is derived from the complete USPTO set and is a little wider than needed for this study. But for future extension of the models it is better to fix the input shape to the biggest possible value.

5. The encoder and the decoder share embeddings in this study.

6. Similar to the formula of the Boltzmann (Gibbs) distribution used in statistical mechanics.

7. Our first implementation of the model required a lot of memory to deal with the masks of reactants and products. Although we later improved the code, we kept this batch size for consistency of the results.

8. In our implementation 1 step is equivalent to 1 batch. The number of reactions for training is 40 029 + 5 004, so one epoch is equal to 704 batches.

9. Though we trained our models for 1 000 epochs, we also saved their weights after each epoch and, to imitate the early stopping technique, selected the weights that correspond to the minimum of the validation loss function.

References

1. Abadi, M., et al.: TensorFlow: large-scale machine learning on heterogeneous systems (2015). https://www.tensorflow.org/
2. Baskin, I.I., Madzhidov, T.I., Antipin, I.S., Varnek, A.A.: Artificial intelligence in synthetic chemistry: achievements and prospects. Russ. Chem. Rev. 86(11), 1127–1156 (2017). https://doi.org/10.1070/RCR4746
3. Baskin, I.I., Winkler, D., Tetko, I.V.: A renaissance of neural networks in drug discovery. Expert Opin. Drug Discov. 11(8), 785–795 (2016). https://doi.org/10.1080/17460441.2016.1201262
4. Baylon, J.L., Cilfone, N.A., Gulcher, J.R., Chittenden, T.W.: Enhancing retrosynthetic reaction prediction with deep learning using multiscale reaction classification. J. Chem. Inf. Model. 59(2), 673–688 (2019). https://doi.org/10.1021/acs.jcim.8b00801
5. Chen, H., Engkvist, O., Wang, Y., Olivecrona, M., Blaschke, T.: The rise of deep learning in drug discovery. Drug Discov. Today 23(6), 1241–1250 (2018). https://doi.org/10.1016/j.drudis.2018.01.039
6. Coley, C.W., Green, W.H., Jensen, K.F.: Machine learning in computer-aided synthesis planning. Acc. Chem. Res. 51(5), 1281–1289 (2018). https://doi.org/10.1021/acs.accounts.8b00087
7. Coley, C.W., Rogers, L., Green, W.H., Jensen, K.F.: SCScore: synthetic complexity learned from a reaction corpus. J. Chem. Inf. Model. 58(2), 252–261 (2018). https://doi.org/10.1021/acs.jcim.7b00622
8. Corey, E.J., Cheng, X.M.: The Logic of Chemical Synthesis. Wiley, Hoboken (1995)
9. Engkvist, O., et al.: Computational prediction of chemical reactions: current status and outlook. Drug Discov. Today 23(6), 1203–1218 (2018). https://doi.org/10.1016/j.drudis.2018.02.014
10. Ertl, P., Lewis, R., Martin, E., Polyakov, V.: In silico generation of novel, drug-like chemical matter using the LSTM neural network. arXiv (2017). arXiv:1712.07449
11. Ertl, P., Schuffenhauer, A.: Estimation of synthetic accessibility score of drug-like molecules based on molecular complexity and fragment contributions. J. Cheminform. 1(1), 8 (2009). https://doi.org/10.1186/1758-2946-1-8
12. Gimadiev, T., et al.: Bimolecular nucleophilic substitution reactions: predictive models for rate constants and molecular reaction pairs analysis. Mol. Inform. 37, 1800104 (2018). https://doi.org/10.1002/minf.201800104
13. Gómez-Bombarelli, R., et al.: Automatic chemical design using a data-driven continuous representation of molecules. ACS Cent. Sci. 4(2), 268–276 (2018). https://doi.org/10.1021/acscentsci.7b00572
14. Guimaraes, G.L., Sanchez-Lengeling, B., Outeiral, C., Farias, P.L.C., Aspuru-Guzik, A.: Objective-reinforced generative adversarial networks (ORGAN) for sequence generation models. arXiv (2017). arXiv:1705.10843
15. Hinton, G., Vinyals, O., Dean, J.: Distilling the knowledge in a neural network. arXiv (2015). arXiv:1503.02531
16. Huang, G., Li, Y., Pleiss, G., Liu, Z., Hopcroft, J.E., Weinberger, K.Q.: Snapshot ensembles: train 1, get M for free. arXiv (2017). arXiv:1704.00109
17. Izmailov, P., Podoprikhin, D., Garipov, T., Vetrov, D., Wilson, A.G.: Averaging weights leads to wider optima and better generalization. arXiv (2018). arXiv:1803.05407
18. Kimber, T.B., Engelke, S., Tetko, I.V., Bruno, E., Godin, G.: Synergy effect between convolutional neural networks and the multiplicity of SMILES for improvement of molecular prediction. arXiv (2018). arXiv:1812.04439
19. Kravtsov, A.A., Karpov, P.V., Baskin, I.I., Palyulin, V.A., Zefirov, N.S.: Prediction of rate constants of SN2 reactions by the multicomponent QSPR method. Dokl. Chem. 440(2), 299–301 (2011). https://doi.org/10.1134/S0012500811100107
20. Law, J., et al.: Route designer: a retrosynthetic analysis tool utilizing automated retrosynthetic rule generation. J. Chem. Inf. Model. 49(3), 593–602 (2009). https://doi.org/10.1021/ci800228y
21. Liu, B., et al.: Retrosynthetic reaction prediction using neural sequence-to-sequence models. ACS Cent. Sci. 3(10), 1103–1113 (2017). https://doi.org/10.1021/acscentsci.7b00303
22. Lowe, D.M.: Extraction of chemical structures and reactions from the literature. Ph.D. thesis, Pembroke College (2012). https://www.repository.cam.ac.uk/handle/1810/244727
23. Olivecrona, M., Blaschke, T., Engkvist, O., Chen, H.: Molecular de-novo design through deep reinforcement learning. J. Cheminform. 9(1), 48 (2017). https://doi.org/10.1186/s13321-017-0235-x
24. Popel, M., Bojar, O.: Training tips for the transformer model. arXiv (2018). https://doi.org/10.2478/pralin-2018-0002
25. Schneider, N., Stiefl, N., Landrum, G.A.: What’s what: the (nearly) definitive guide to reaction role assignment. J. Chem. Inf. Model. 56(12), 2336–2346 (2016). https://doi.org/10.1021/acs.jcim.6b00564
26. Schwaller, P., Gaudin, T., Lanyi, D., Bekas, C., Laino, T.: Found in translation: predicting outcomes of complex organic chemistry reactions using neural sequence-to-sequence models. arXiv (2018). arXiv:1711.04810
27. Schwaller, P., Laino, T., Gaudin, T., Bolgar, P., Bekas, C., Lee, A.A.: Molecular transformer for chemical reaction prediction and uncertainty estimation. arXiv (2018). arXiv:1811.02633
28. Segler, M.H., Preuss, M., Waller, M.P.: Planning chemical synthesis with deep neural networks and symbolic AI. Nature 555, 604–610 (2018). https://doi.org/10.1038/nature25978
29. Vaswani, A., et al.: Attention is all you need. arXiv (2017). arXiv:1706.03762

Acknowledgements

This study has been partially supported by ERA-CVD (https://era-cvd.eu) “Cardio-Oncology” project, BMBF 01KL1710 and by European Union’s Horizon 2020 research and innovation program under the Marie Skłodowska-Curie grant agreement No. 676434, “Big Data in Chemistry” (“BIGCHEM”, http://bigchem.eu). The authors thank NVIDIA Corporation for donating Quadro P6000 and Titan Xp and V graphics cards for this research.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this paper

Cite this paper

Karpov, P., Godin, G., Tetko, I.V. (2019). A Transformer Model for Retrosynthesis. In: Tetko, I., Kůrková, V., Karpov, P., Theis, F. (eds) Artificial Neural Networks and Machine Learning – ICANN 2019: Workshop and Special Sessions. ICANN 2019. Lecture Notes in Computer Science(), vol 11731. Springer, Cham. https://doi.org/10.1007/978-3-030-30493-5_78

DOI: https://doi.org/10.1007/978-3-030-30493-5_78

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30492-8

Online ISBN: 978-3-030-30493-5

eBook Packages: Computer Science (R0)