Abstract

Background

Initial training and ongoing post-training consultation (i.e., ongoing support following training, provided by an expert) are among the most common implementation strategies used to change clinician practice. However, extant research has not experimentally investigated the optimal dosages of consultation necessary to produce desired outcomes. Moreover, the degree to which training and consultation engage theoretical implementation mechanisms—such as provider knowledge, skills, and attitudes—is not well understood. This study examined the effects of a brief online training and varying dosages of post-training consultation (BOLT+PTC) on implementation mechanisms and outcomes for measurement-based care (MBC) practices delivered in the context of education sector mental health services.

Methods

A national sample of 75 clinicians who provide mental health interventions to children and adolescents in schools were randomly assigned to BOLT+PTC or control (services as usual). Those in BOLT+PTC were further randomized to 2-, 4-, or 8-week consultation conditions. Self-reported MBC knowledge, skills, attitudes, and use (including standardized assessment, individualized assessment, and assessment-informed treatment modification) were collected for 32 weeks. Multilevel models were used to examine main effects of BOLT+PTC versus control on MBC use at the end of consultation and over time, as well as comparisons among PTC dosage conditions and theorized mechanisms (skills, attitudes, knowledge).

Results

There was a significant linear effect of BOLT+PTC over time on standardized assessment use (b = .02, p < .01), and a significant quadratic effect of BOLT+PTC over time on individualized assessment use (b = .04, p < .001), but no significant effect on treatment modification. BOLT + any level of PTC resulted in higher MBC knowledge and larger growth in MBC skill over the intervention period as compared to control. PTC dosage levels were inconsistently predictive of outcomes, providing no clear evidence for added benefit of higher PTC dosage.

Conclusions

Online training and consultation in MBC had effects on standardized and individualized assessment use among clinicians as compared to services as usual with no consistent benefit detected for increased consultation dosage. Continued research investigating optimal dosages and mechanisms of these established implementation strategies is needed to ensure training and consultation resources are deployed efficiently to impact clinician practices.

Trial registration

ClinicalTrials.gov NCT05041517. Retrospectively registered on 10 September 2021.

Implementation strategies: training and consultation

Over the past two decades, studies have repeatedly explored the extent to which initial training must be supplemented with additional implementation supports to effect meaningful changes in professional practice. Many reviews indicate that post-training consultation (i.e., ongoing support following training, provided by an expert with the goal of improving implementation and practice of an evidence-based practice [EBP]) [1] or other supports are necessary, but the strength of the evidence remains mixed [2,3,4,5]. Recent literature points to the limitations of “workshop only” trainings and suggests that they are likely to have a greater impact on the adherence and competence of practitioners when augmented with practice-specific implementation supports and/or ongoing consultation to promote workplace-specific adaptations and skill application [6,7,8]. As such, initial training and post-training consultation are two complementary cornerstone strategies for implementing EBPs [9, 10]. This is especially true in mental health care, where most evidence-based practices are complex psychosocial processes that require significant professional development support to implement effectively [11].

Among the numerous implementation strategies that have been identified to potentially promote adoption, delivery, and sustainment of interventions [12, 13], initial training and post-training consultation have often proven effective for evidence-based mental health practice implementation [4, 9, 10]. Initial training generally refers to a combination of didactic content covering intervention materials or protocols and active or experiential learning, such as in vivo demonstration or role plays, to apply skills and receive feedback [2]. Ongoing post-training consultation refers to practice-specific coaching by one or more experts in the intervention strategy [14]. In the context of mental health service delivery, consultation differs from supervision in that the consultant may be internal or external to the organization and does not have direct authority over the implementer [15]. Moreover, supervision can be administrative in nature [16], whereas consultation specifically refers to case-specific skill application and refinement of an evidence-based clinical practice [17, 18]. Together, initial training and post-training consultation are complementary and foundational implementation strategies.

Training is the starting point for many professional behavior change efforts and is associated with initial, post-training improvements in provider attitudes, knowledge, and skill acquisition [5]. Increasingly, training for mental health clinicians is provided online, something that has only intensified following the emergence of the COVID-19 pandemic [19]. Fortunately, online training is at least as effective as in-person approaches [20,21,22,23], eliminates training-related travel time, reduces costs, and allows for self-paced administration of content, thus improving accessibility and feasibility [21, 24].

Importantly, initial training likely necessitates the addition of post-training consultation or support to effect changes in professional practice [2,3,4,5, 25, 26]. However, the optimal duration or frequency of ongoing supports following initial training is not well known. In fact, the most recent review on this topic found that post-training consultation was variably effective in improving uptake [5]. Inconsistencies in these findings suggest that it is important to better understand the processes through which consultation improves implementation outcomes.

Consultation dose

Although post-training consultation is a promising implementation strategy, very little is known about the optimal frequency and duration (i.e., dose) of post-training consultation to ensure its impact. In fact, a recent review of randomized controlled trials concluded that variations in consultation dose across studies precluded their ability to conclusively identify the effect of consultation practices [5]. Efficiency is important given that post-training consultation can easily increase the cost of training by 50% or more [27]. An uncontrolled study found that higher consultation doses were associated with greater changes in knowledge and attitudes surrounding an evidence-based intervention protocol for youth anxiety [28]. Although the moderating effects of consultation dose are typically examined observationally or quasi-experimentally [24], the current study explores consultation dose by manipulating it experimentally.

Implementation mechanisms: provider knowledge, skill, and attitudes

Despite increasingly precise identification of implementation strategies [13], there is little information available about how implementation strategies exert their influence. To ensure implementation strategies accurately match barriers and promote parsimonious and efficient change efforts, current research has focused on the identification of implementation mechanisms [29]. Implementation mechanisms are the processes or events through which an implementation strategy operates to affect desired implementation outcomes [30]. Unfortunately, systematic reviews indicate few studies have experimentally evaluated implementation mechanisms of change [30, 31]. Whether in schools or other healthcare delivery sectors, there is growing recognition that implementation strategy optimization will be greatly facilitated by clear identification and testing of the mechanisms through which implementation outcomes are improved [29, 32, 33].

Despite the ubiquity of training and consultation in the implementation of evidence-based mental health services, few studies have articulated or evaluated their mechanisms. Theorized mechanisms for training and consultation include provider changes in (1) knowledge, (2) attitudes, and (3) acquisition of skills. Knowledge and attitudes surrounding new practices are among the most frequently identified candidate mechanisms for training efforts [34]. Indeed, previous studies have shown that training often improves EBP knowledge, and, in turn, more knowledgeable and competent clinicians have been found to exhibit superior training and consultation outcomes [34,35,36]. Positive attitudes are often predictive of provider adoption, engagement in training and consultation, adherence, and skill [37,38,39,40]. Further, training has been found to enhance initial skill acquisition [5, 41] and post-training consultation subsequently reinforces these gains and helps to maintain clinician skills [42]. In one study of community mental health clinicians’ acquisition of evidence-based assessment practices following training in a flexible psychotherapy protocol, attitudes improved following training alone, but skill continued to improve over the course of consultation [43]. Other studies have found similar evidence for the value of consultation for maintaining and promoting these changes [25].

Measurement-based care in education sector mental health

The current study examines how training and consultation strategies for measurement-based care (MBC) affect implementation mechanisms and outcomes within mental health services delivered in the education sector. MBC is an evidence-based practice that involves the ongoing use of progress and outcome data to inform decision making and adjust treatment [44, 45]. It can be used to enhance any underlying treatment and has received extensive empirical support for its ability to improve outcomes in adult services [45, 46], with evidence rapidly accruing for children [47,48,49,50]. MBC involves both standardized (e.g., symptom rating scales with clinical norms/cutoffs) and individualized assessment measures (e.g., client-specific goals tracked quantitatively over time, such as days of school attendance) [51,52,53], as well as a focus on reviewing progress data with the patient to inform collaborative decisions about individualized treatment adjustments [54, 55].

MBC is particularly suited for treatment delivered in schools, where clinicians and students perceive it to be feasible and acceptable and to facilitate progress toward treatment goals [56,57,58]. Research over the past 25 years has consistently found that schools are the most common service setting for the delivery of child and adolescent mental healthcare [59, 60]. Yet, use of evidence-based mental health treatment in school settings, including MBC, has lagged behind services in other sectors [61, 62].

The implementation of MBC in the education sector is consistent with calls to enhance the quality of services among settings, clinicians, and service recipients who would not otherwise have access to evidence-based care [63, 64]. However, while pragmatic implementation approaches are greatly needed to support MBC across service sectors, few established implementation strategies exist [54], and even fewer studies have evaluated strategies for improving MBC use among school clinicians. Existing training and consultation strategies used to implement MBC practices in schools have resulted in improvements in knowledge, attitudes, and use of practices, both initially and over time [43, 65], pointing to knowledge and attitudes as potential mechanisms of MBC implementation strategy effects.

Current study

The current study was designed to better understand the impact of a brief online training (BOLT) and post-training consultation (PTC) on putative implementation mechanisms as well as MBC penetration, an indicator of the number of service recipients receiving MBC out of the total number possible [66]. This study focused on increasing providers’ use of standardized assessments, individualized assessments, and assessment-informed treatment modifications (all components of MBC practice) [45]. Moreover, the experimental design allowed us to examine the effects of PTC dosage levels on outcomes. Accordingly, the current study addressed three specific aims by testing (1) the impact of BOLT+PTC supports on MBC practices; (2) differential effects of a 2-, 4-, or 8-week dosage of consultation on MBC practices; and (3) the impact on implementation mechanisms such as MBC knowledge, skill, and attitudes (i.e., perceived benefit and perceived harm) that are hypothesized to activate favorable MBC implementation outcomes.

Methods

Participants

Participants included a national sample of N = 75 clinicians who provide mental health interventions to students on their school campus. Participants were Master’s level or above; primarily female (n = 68, 91%) and White (n = 55, 73%); and working in elementary (students approximately 5–12 years old), middle (12–14 years), and high schools (14–19 years). The most common role was mental health counselor, followed by school social worker, school psychologist, school counselor, and other professional roles. Demographic and professional characteristics for participating clinicians are presented in Table 1.

Procedure

Recruitment

Participants were recruited via numerous professional networks, listservs, and social media (e.g., statewide organizations of school-based mental health practitioners in Illinois and North and South Carolina; a national newsletter devoted to school mental health; Twitter). Inclusion criteria were kept minimal to enhance generalizability: participants were required only to (1) routinely provide individual-level mental health interventions or therapy and (2) spend ≥50% of their time providing services in schools. This was done to help ensure that the sample was representative of the clinicians who would ultimately access the training and consultation supports when later disseminated at a large scale. The study team conducted informed consent meetings by phone with prospective participants, during which they were provided with a description of the study, including the conditions to which they could potentially be randomized, and its benefits. Participants provided verbal consent consistent with procedures approved by the University of Washington institutional review board. Recruitment lasted approximately 6 weeks to achieve the desired sample size.

Randomization

All participants were randomly assigned to either a BOLT+PTC condition or a services-as-usual control condition (see below) using the list randomizer function available at Random.org. For clinicians in the BOLT+PTC condition (n = 37), we conducted a scheduling survey to identify preferred times for consultation calls and then randomized the resulting consultation time blocks to the different consultation duration conditions. Using this approach, clinicians were placed into 2 (n = 14), 4 (n = 10), or 8 (n = 13) weeks of consultation. Clinicians in the control condition only completed study measures while continuing to provide services as usual. Blinding was not possible because clinicians were aware of their training and consultation obligations. See Additional File 1 for the study CONSORT diagram.
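
The two-stage procedure above (individual randomization to arms, then randomization of consultation time blocks to dose conditions) can be sketched in Python as follows. This is a minimal illustration, not the study's procedure: Python's `random.shuffle` stands in for the Random.org list randomizer, and the rule that the first half of the shuffled list goes to BOLT+PTC, the rotating assignment of blocks to doses, and all function and block names are hypothetical.

```python
import random


def randomize_two_arms(participant_ids, seed=None):
    """Shuffle participant IDs and split the list into two arms.

    Hypothetical rule: the first half of the shuffled list (rounded down)
    goes to BOLT+PTC and the remainder to services as usual.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"BOLT+PTC": shuffled[:half], "control": shuffled[half:]}


def assign_blocks_to_doses(time_blocks, doses=(2, 4, 8), seed=None):
    """Randomize consultation time blocks (not individuals) to PTC doses.

    Blocks are shuffled, then dealt to the doses in rotation so that each
    dose receives a near-equal number of blocks.
    """
    rng = random.Random(seed)
    shuffled = list(time_blocks)
    rng.shuffle(shuffled)
    return {block: doses[i % len(doses)] for i, block in enumerate(shuffled)}


def dose_for_clinician(preferred_block, block_doses):
    """A clinician inherits the dose of the time block they were scheduled into."""
    return block_doses[preferred_block]
```

Splitting a shuffled list of 75 IDs at its midpoint yields arms of 37 and 38, consistent with the BOLT+PTC group size reported above. Randomizing time blocks rather than individuals keeps each consultation group schedulable, at the cost that clinicians sharing a block also share a dose.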

Data collection

All data were collected via online surveys and self-reported by participants. After enrolling in the study, all participants completed pre-training measures of their demographic, professional, and caseload characteristics and MBC knowledge, skill, attitudes, and use. Measures were then repeated over the 32 weeks following study enrollment: MBC use was collected weekly, and MBC knowledge, skill, and attitudes were collected at the intervals described under Measures. Participants received incentives for data collection activities of $300 in the services-as-usual condition and $500 in the online training and consultation conditions (the amounts differed because data collection burden differed across conditions).

Training and consultation

The online training and post-training consultation strategies were developed via an iterative human-centered design process intended to ensure their efficiency and contextual fit [67, 68].

Online training

After completing pre-training measures, participants assigned to any BOLT+PTC condition were asked to complete the online training within 2 weeks. Training included a series of interactive modules addressing the following content: (1) utility of MBC in school mental health; (2) administration and interpretation of measures; (3) delivery of collaborative feedback; (4) treatment goal-setting and prioritization of monitoring targets; (5) selecting and using standardized assessments; (6) selecting and using individualized assessments; (7) assessment-driven clinical decision-making; and (8) strategies to support continued use. The interactive online training modules are accompanied by a variety of support materials (e.g., tools to help integrate MBC into clinicians’ workflow; job-aids for introducing assessments and providing feedback on assessment results; a commonly used youth measures reference guide) via an online learning management system.

Consultation

The consultation model consisted of (1) 1-h small group (3–5 clinicians) live consultation sessions led by an expert MBC consultant, which occurred every other week during the consultation period (e.g., clinicians in the 2-week condition had a single consultation call), and (2) asynchronous message board discussions (hosted on the learning management system and moderated by the same consultant). Clinicians were asked to post a specified homework assignment on the message board (a) prior to their first call and (b) following each call during their assigned consultation period. Regardless of the consultation dosage, live consultation calls followed a standard sequence: (a) introduction and orientation to the session; (b) brief case presentations, including MBC strategies used; (c) group discussion of appropriate next MBC steps for the case, including discussion of alternative therapeutic approaches/strategies if MBC indicates that a change in treatment target or intervention strategy is needed; (d) expert consultant recommendations (as appropriate); and (e) wrap up, homework assignments, and additional resources. The asynchronous message board provided a central location where clinicians reported on their experiences completing homework. Across the 2-, 4-, and 8-week groups, participating clinicians posted an average of 1.6 (range 0–3), 3.9 (range 0–6), and 6.2 (range 1–16) times, respectively.
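
Because live calls occurred every other week, each dose condition implies a fixed number of calls and of requested homework posts. The arithmetic can be sketched as below, assuming that calls begin in the first week of consultation and that a post is requested before the first call and after every call, including the last; the function name is hypothetical.

```python
def scheduled_contacts(dose_weeks):
    """Return (live_calls, homework_posts) implied by a consultation dose.

    Assumes calls every other week starting in week 1 of consultation, so a
    dose of w weeks yields w // 2 calls, and one homework post requested
    before the first call plus one after each call.
    """
    if dose_weeks < 2:
        raise ValueError("consultation doses in this study were 2, 4, or 8 weeks")
    calls = dose_weeks // 2
    posts = calls + 1
    return calls, posts


for dose in (2, 4, 8):
    calls, posts = scheduled_contacts(dose)
    print(f"{dose}-week PTC: {calls} call(s), {posts} requested post(s)")
```

Under these assumptions, the 2-, 4-, and 8-week conditions imply 1, 2, and 4 calls and 2, 3, and 5 requested posts, which can be set against the observed averages of 1.6, 3.9, and 6.2 posts.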

Services as usual

Typical education sector mental health services tend to include a diverse array of assessment strategies that may include some inconsistent use of formal assessment and monitoring measures [56, 62]. Clinicians in this condition only completed study assessments.

Measures

Clinician demographic, professional, and caseload characteristics

Clinician demographic, professional, and caseload characteristics were collected using a self-reported questionnaire developed by the study team, informed by those used in prior school-based implementation research (e.g., [69]). Participants completed this questionnaire upon study enrollment.

MBC Knowledge Questionnaire (MBCKQ)

Modeled on the Knowledge of Evidence-Based Services Questionnaire [70], the MBCKQ was designed to assess factual and procedural knowledge about MBC. The 28-item, multiple-choice MBCKQ was iteratively developed based on the key content and learning objectives of the MBC training modules and administered at baseline and 2, 4, 6, 8, 10, 16, 20, 24, 28, and 32 weeks.

MBC skill

Clinicians responded to 10 Likert-style items assessing MBC skills including selection and administration of measures, progress monitoring, treatment integration/modification based on the results, and feedback to clients. Responses range from 1 (“Minimal”) to 5 (“Advanced”). This scale has previously demonstrated good internal consistency (α = .85) when used with school-based clinicians [56]. In the current sample, α = .90. It was also administered at baseline and subsequently at 2, 4, 6, 8, 10, 16, 20, 24, 28, and 32 weeks.
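
The α values reported for these scales are conventionally Cronbach's alpha, α = k/(k − 1) × (1 − Σσᵢ²/σₓ²), where k is the number of items, σᵢ² is the variance of item i, and σₓ² is the variance of the summed scale score. A minimal NumPy sketch follows; it assumes complete responses (no missing items) and sample variances, and the software the authors used to compute alpha is not stated here.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) matrix of item scores.

    Assumes complete data (no missing responses) and uses sample variances
    (ddof=1) for both the items and the summed scale score.
    """
    x = np.asarray(items, dtype=float)
    if x.ndim != 2 or x.shape[1] < 2:
        raise ValueError("expected a 2-D array with at least two item columns")
    k = x.shape[1]
    item_variances = x.var(axis=0, ddof=1)      # variance of each item across respondents
    total_variance = x.sum(axis=1).var(ddof=1)  # variance of respondents' total scores
    if total_variance == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return (k / (k - 1)) * (1 - item_variances.sum() / total_variance)
```

For the 10-item skill scale, `items` would be a clinicians-by-10 array of 1–5 ratings from a single administration.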

MBC attitudes

The Monitoring and Feedback Attitudes Scale (MFA) [71] was used to assess clinician attitudes toward ongoing assessment of mental health problems and the provision of client feedback (e.g., “negative feedback to clients would decrease motivation/engagement in treatment”). Responses range from 1 (“Strongly Disagree”) to 5 (“Strongly Agree”). The MFA has two subscales: (1) Benefit (i.e., facilitating collaboration with clients) and (2) Harm (i.e., harmful for therapeutic alliance, misuse by administrators). In the current sample, the MFA subscales demonstrated strong internal consistency (α = .91 and .88, respectively). The MFA was administered at baseline and 2, 4, 6, 8, 10, 16, 20, 24, 28, and 32 weeks.

MBC practices

Clinician self-reported use of MBC practices was measured with the Current Assessment Practice Evaluation – Revised (CAPER) [52], a measure of MBC penetration. The CAPER is a 7-item instrument on which clinicians report their use of assessments in their clinical practice during the previous month and previous week. Clinicians indicate the percentage of their caseload with whom they have engaged in each of the seven assessment activities. Response options for each activity are on a 4-point scale (1 = “None [0%],” 2 = “Some [1–39%],” 3 = “Half [40–60%],” 4 = “Most [61–100%]”). The CAPER has three subscales: (1) Standardized assessments (e.g., % of caseload administered a standardized assessment during the last week); (2) Individualized assessments (e.g., % of caseload whose individualized outcomes were systematically tracked last week); and (3) Treatment modification (e.g., % of clients whose overall treatment plan was altered based on assessment data during the last week). Previous versions have been found to be sensitive to training [43]. The CAPER demonstrated good internal consistency across its three subscales in the current study (α = .85, .94, .87). Clinicians completed the CAPER every week for 32 weeks, including baseline; total CAPER scores for each subscale were used as implementation outcomes.
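
The CAPER response bands can be expressed as a small lookup that converts the percentage of a caseload receiving an activity into the 1–4 item score. This is a sketch: how fractional percentages falling between bands (e.g., 39.5%) are handled is not stated, so the boundary rule below is an assumption, as is the hypothetical helper that sums item scores into a subscale total (which items belong to which subscale is not listed here).

```python
CAPER_LABELS = {1: "None", 2: "Some", 3: "Half", 4: "Most"}


def caper_response(percent_of_caseload):
    """Map % of caseload receiving an activity to the 1-4 CAPER item score.

    Bands: 0% -> 1 (None), 1-39% -> 2 (Some), 40-60% -> 3 (Half),
    61-100% -> 4 (Most). Assumption: fractional values are assigned by the
    thresholds p < 40 and p <= 60, so 39.5 scores 2 and 60.5 scores 4.
    """
    p = percent_of_caseload
    if not 0 <= p <= 100:
        raise ValueError("percentage must be between 0 and 100")
    if p == 0:
        return 1
    if p < 40:
        return 2
    if p <= 60:
        return 3
    return 4


def subscale_total(item_scores):
    """Hypothetical helper: sum 1-4 item scores into a subscale total."""
    if any(score not in CAPER_LABELS for score in item_scores):
        raise ValueError("item scores must be integers from 1 to 4")
    return sum(item_scores)
```

For example, a clinician who gave standardized measures to 8 of 20 students in the last week (40%) would score 3 (“Half”) on that item.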

Analyses

The main goal of the current study was to test whether the BOLT+PTC strategies led to improvements in MBC implementation mechanisms and outcomes relative to a no-training, services-as-usual condition. The specific MBC practices measured with the CAPER were standardized assessment use, individualized assessment use, and treatment modification informed by the assessment data collected. Our second goal was to test whether the dose of PTC was differentially related to implementation outcomes (i.e., MBC practices). Finally, we tested the main effects of training and consultation dose on implementation mechanisms (i.e., MBC knowledge, attitudes, and skill).

We used R [72] for all analyses and tested our main hypotheses using multilevel models (MLMs) with the ‘nlme’ package [73]. MLMs allow for the analysis of clustered data, such as repeated observations of clinicians over time, and accommodate missing data at the observation level. MLMs allowed us to estimate all key hypothesis tests for each outcome simultaneously in a single model: intervention effects on the level of the outcome following training, intervention effects on the rate of change in the outcome over the 32 weeks during and following training, and the extent to which PTC dose mattered. These models are more appropriate than traditional ANCOVA models because they integrate multiple time points into the analyses under more flexible assumptions (e.g., modeling both how clinicians changed over time in implementation outcomes and differences across groups at specific time points).

We centered time such that the intercept reflected the end of consultation, regardless of consultation dosage. For instance, for those with 2 weeks of PTC, the intercept was centered at week 4 and for those with 4 weeks of PTC, the intercept was centered at week 6. This means that the main effects of intervention reflect differences across groups in levels of the outcomes at the end of consultation, or after the first 2 weeks of observations for the no-consultation control group.
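
The centering rule can be written as a small recoding of the week variable. The sketch assumes that consultation began after a 2-week training window, which reproduces the examples given (week 4 for 2 weeks of PTC, week 6 for 4 weeks); the resulting week 10 for the 8-week condition and week 2 for the control group (coded here as a dose of 0) follow from that assumption rather than from a reported value. The names are hypothetical.

```python
TRAINING_WEEKS = 2  # assumed length of the online training window before PTC


def end_of_consultation_week(dose_weeks):
    """Week at which time is centered; dose_weeks=0 codes the control group."""
    return TRAINING_WEEKS + dose_weeks


def centered_time(week, dose_weeks):
    """Weeks relative to the end of consultation (0 = model intercept)."""
    return week - end_of_consultation_week(dose_weeks)


# Weekly CAPER occasions 0..32 for a clinician in the 4-week condition.
times_4wk = [centered_time(w, 4) for w in range(33)]
```

With time coded this way, the condition coefficients estimate group differences at time 0 (the end of consultation), and the time-by-condition coefficients estimate group differences in the weekly rate of change around that point.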

To properly estimate the shape of change over time, we compared polynomial models (quadratic and cubic effects) to a piecewise model, which estimated separate slopes for change during the consultation period and change in the post-consultation period. Models were chosen based on fit; to avoid capitalizing on chance, we selected a model as better fitting only when all indices (e.g., BIC, AIC, and the −2LL test) agreed. These model fit indices allow comparison of the relative goodness of fit of different model specifications, balancing fit against parsimony [74]. Because we had a relatively small sample of clinicians, we estimated only random intercepts and linear slopes; estimating random quadratic slopes (individual differences in quadratic change over time) produced model convergence problems.

We used 3 orthogonal contrast codes to compare the effects of intervention across conditions. The first compared the effects of BOLT+PTC to control. The second compared the effects of receiving PTC for 4 or 8 weeks to receiving PTC for 2 weeks, and the final contrast code compared receiving 4 vs. 8 weeks of PTC.
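
One set of weights that implements these three comparisons is shown below, with groups ordered as control, 2-, 4-, and 8-week PTC. The specific weights (and the sign of the 4 vs. 8 week contrast) are an assumption for illustration, since the codes themselves are not reported here. Each row sums to zero, and the rows are mutually orthogonal, so their Gram matrix is diagonal.

```python
import numpy as np

GROUPS = ["control", "ptc_2wk", "ptc_4wk", "ptc_8wk"]

# Hypothetical weights: one row per contrast, one column per group.
CONTRASTS = np.array([
    [-3, 1, 1, 1],   # C1: BOLT+PTC (any dose) vs. control
    [0, -2, 1, 1],   # C2: 4 or 8 weeks vs. 2 weeks of PTC
    [0, 0, -1, 1],   # C3: 8 vs. 4 weeks of PTC
])

# Orthogonality check: off-diagonal products of the code vectors are zero.
GRAM = CONTRASTS @ CONTRASTS.T


def contrast_columns(group):
    """Return the (C1, C2, C3) code values for one clinician's group."""
    return CONTRASTS[:, GROUPS.index(group)]
```

Orthogonality here refers to the code vectors themselves. Because the groups are unequal in size (14, 10, and 13 clinicians in the PTC conditions), the coded columns in the clinician-level data are not exactly uncorrelated, so the three contrast estimates are not exactly independent.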

Power

The sample size balanced feasibility (e.g., the number of clinicians we expected to be able to recruit during the project period) against the sensitivity of the data analytic models to detect the expected effects. We used Monte Carlo simulations in Mplus 6.0 to provide power estimates for the main effects models used in the current study. In a Monte Carlo simulation, data are simulated for a population based on estimated parameter values, multiple samples are drawn from that population, and a model is estimated for each one. Parameter values and standard errors are averaged over the samples, and power is derived from the proportion of replications in which the null hypothesis is correctly rejected. We estimated power across 1000 replications for n = 75 participants measured at 32 time points. In general, our power analyses suggested that the current study had sufficient power (1 − β = .80, α = .05) to detect small (b = .12) time-varying (Level 1) training and consultation effects across all models. Power to detect between-group differences, especially among the different consultation groups, was more limited. For example, we were powered (1 − β = .80, α = .05) only to detect large (d = .98) differences between different consultation groups and moderate (d = .58) differences between PTC and training only groups.
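
The replicate-and-count logic of a Monte Carlo power analysis can be sketched with a much simpler model than the one used in the study. The example below swaps in a two-sample t-test on normally distributed data (via SciPy's `ttest_ind`) instead of the Mplus multilevel models, and the function name is hypothetical. Because it ignores the 32 repeated measurements and the clustering of observations within clinicians, it will not reproduce the reported estimates; it only shows how power is obtained as the share of simulated samples in which the null hypothesis is rejected.

```python
import numpy as np
from scipy import stats


def simulated_power(d, n_per_group, reps=1000, alpha=0.05, seed=0):
    """Monte Carlo power of a two-sample t-test for a standardized effect d.

    Each replication draws two normal samples (SD = 1) whose means differ by d,
    runs an independent-samples t-test, and records whether p < alpha.
    Power is the proportion of replications that reject the null.
    """
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(reps):
        group_a = rng.normal(loc=0.0, scale=1.0, size=n_per_group)
        group_b = rng.normal(loc=d, scale=1.0, size=n_per_group)
        if stats.ttest_ind(group_a, group_b).pvalue < alpha:
            rejections += 1
    return rejections / reps
```

For instance, `simulated_power(0.98, 13)` approximates power to detect a large difference between two consultation arms of about 13 clinicians each under this simplified, single-occasion design.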

Results

Descriptive statistics

Table 1 presents overall descriptive statistics for the main sample for the current study. Table 2 presents means and SDs for implementation outcomes and mechanisms across time.

Main effects on implementation outcomes

First, we examined whether receiving BOLT+PTC produced changes in MBC practices during the post-consultation period (weeks 8–32) relative to the control group, without accounting for change over time. Mean differences were small to moderate for use of standardized assessment (Cohen’s d = .37, p = .11) and individualized assessment (Cohen’s d = .49, p = .04), but close to zero for treatment modification (Cohen’s d = .05, p = .84). However, only the individualized assessment difference reached statistical significance, and only narrowly; the other two main effect estimates were non-significant.
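
A standardized mean difference of the kind reported above can be computed as the difference in group means divided by the pooled standard deviation. The sketch below assumes the pooled-SD form of Cohen's d applied to one value per clinician (e.g., a post-consultation mean score); the exact variant the authors used is not stated here.

```python
import math
from statistics import mean, variance


def cohens_d(group1, group2):
    """Cohen's d: mean difference divided by the pooled sample SD."""
    n1, n2 = len(group1), len(group2)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two values")
    pooled_var = ((n1 - 1) * variance(group1)
                  + (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    return (mean(group1) - mean(group2)) / math.sqrt(pooled_var)
```

By Cohen's conventions, d ≈ .2, .5, and .8 are described as small, medium, and large, which places the standardized and individualized assessment differences between small and medium.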

Change over time in outcomes

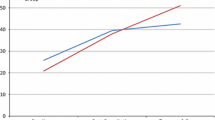

Next, we modeled change over time in the main outcomes, MBC practices. For standardized assessment, a model with a quadratic term fit the data best, indicating a curvilinear pattern of change (see Fig. 1), whereas a linear-only model best fit the data for individualized assessment and treatment modification.

Main effects over time

We tested whether receiving BOLT+PTC led to post-training changes in MBC practices over time; Table 3 presents these findings, and Figure 1 illustrates them. The pattern was relatively similar across outcomes: clinicians who received training and any consultation exhibited better outcomes over time, while those in the control group exhibited declines in MBC practices, with those declines generally slowing over time. Specifically, for both standardized assessment (b = .02, p < .01) and individualized assessment (b = .04, p < .001), there was a significant intervention effect on change over time, such that participants in the BOLT+PTC condition exhibited less decline than those in the control condition and continued to report higher levels of assessment use. In contrast, we observed no effects of BOLT+PTC on treatment modification.

Effects of PTC dose on main outcomes over time

We next explored whether PTC dose influenced outcomes by replicating the analyses above with additional contrast codes for the different PTC doses (see Table 4). Across models, there was little evidence of a consistent pattern of findings. Although some effects reached the traditional significance level, there was no consistent or strong evidence that any one PTC dose had a stronger impact on outcomes than any other. Because of the small sample sizes of the individual consultation dose groups and the inconsistency of the effects, we refrain from interpreting them as evidence of a clear dose effect of PTC.

Effects on implementation mechanisms

Next, we tested whether BOLT+PTC was associated with change over time in the theorized implementation mechanisms (MBC knowledge, attitudes, and skill). We used an approach similar to the analyses above, with multilevel models and time centered at the end of consultation for each group. At the end of consultation, participants in the BOLT+PTC conditions reported higher MBC knowledge than control group participants (b = .06, p = .019) but did not differ significantly in MBC skill (b = .13, p = .33) or in MBC attitudes, whether perceived benefit (b = .16, p = .06) or perceived harm (b = −.12, p = .34). Participants in the BOLT+PTC condition exhibited larger increases in MBC skills across the consultation period (b = .03, p < .001), although these increases slowed over time (b = −0.00091, p < .001), and there were ultimately no differences at the end of consultation. We observed no other effects of BOLT+PTC on rates of change over time.

We also explored whether PTC dose was associated with differential outcomes for theorized mechanisms (skill, attitudes, and knowledge), by replicating the analyses above with additional contrast codes for different PTC doses. Across all outcomes, there was no evidence that PTC dose was associated with differences in mechanisms.

Discussion

This study tested the impact of an efficient, brief online training and post-training consultation program for MBC with school-based mental health clinicians. In addition to examining the effects of BOLT+PTC, it was the first study to experimentally manipulate PTC dose to evaluate the amount of support that may be needed to promote effective implementation. Consistent with current implementation research design guidelines to advance the precision of implementation science results, we examined the impact of BOLT+PTC at various consultation dosages on implementation outcomes (e.g., MBC practices) as well as implementation mechanisms (i.e., MBC knowledge, attitudes, and skill).

Beyond examinations of dose, the precision of implementation science is increased by better specification of implementation outcomes. In the current project, BOLT+PTC effects emerged over time for both standardized and individualized assessment use, but not for treatment modification, even though all three practices were an explicit focus of the training and consultation. Consistent with this, the developers of the CAPER have reported the lowest mean ratings for its treatment modification subscale [52]. Prior studies have also found less change (and less sustainment of change) in treatment modification following training and consultation in MBC practices [43]. It may be that changing one’s practice to collect standardized or individualized measures is more straightforward than using those measures to inform treatment modifications. Indeed, determining changes to treatment plans is often more complicated than administering an instrument. Studies have pointed to the importance of ensuring clinical decision support during MBC implementation [75], which some measurement feedback systems and ongoing clinical consultation can provide. Although MBC has been associated with improved outcomes across a wide variety of presenting concerns and treatment modalities [44, 45], using MBC for treatment planning may be more ambiguous when it is implemented without a specific evidence-based intervention. The clinicians in the current sample delivered MBC-enhanced “usual care” mental health services in schools. Treatment modification may be easier if MBC is implemented alongside a broader practice change initiative, such as one that includes transdiagnostic or common elements of evidence-based practices [76,77,78], or even clear and consistent specification of usual care treatment elements [79].

Notably, these clinicians also delivered usual care services in the education sector, where there is limited research on evidence-based practice implementation [61]. Given the importance of better understanding setting-specific rates of change, deterioration, outcomes, and treatment interventions that may influence MBC implementation and effectiveness [80], additional research examining how MBC practices can more effectively inform treatment modifications in school mental health treatment—and beyond—is greatly needed.

Regarding implementation mechanisms, one of the three theorized mechanisms differed for the BOLT+PTC group relative to controls: MBC knowledge was higher immediately following consultation. MBC skill increased more quickly during consultation but did not differ significantly at its end, and MBC attitudes did not differ. EBP attitudes have been inconsistently associated with implementation outcomes in prior research across service sectors and interventions [81, 82]. However, given that some implementation strategies have successfully shifted practitioner attitudes in the education sector (e.g., Beliefs and Attitudes for Successful Implementation in Schools [BASIS] [83, 84]), there may be value in focusing more explicitly on that mechanism to enhance the BOLT approach.

Research is increasingly investigating “how low can you go” with regard to implementation processes and pursuing pragmatic and cost-effective implementation supports [37, 85, 86]. Relatedly, our findings regarding consultation dose were not as anticipated. Specifically, we observed very small differences among the PTC groups (2, 4, or 8 weeks), suggesting that although post-training consultation is widely considered critical, higher doses may not have improved its impact in this sample. However, many questions remain surrounding optimal consultation dosage, and replication with larger samples of clinicians is needed. Aside from the aforementioned limits on statistical power for between-group comparisons, there are several possible explanations for our findings. First, MBC practices may be simpler than evidence-based treatment protocols, which often rely on training and PTC to support clinicians’ adoption and ongoing implementation with fidelity. If so, less consultation may be adequate for MBC than for manualized, evidence-based interventions. Indeed, it has been suggested that less complex interventions may need fewer implementation supports to be successfully adopted [87]. On the other hand, our finding that standardized and individualized assessment administration changed more over time than treatment modification suggests that treatment modification may be a more complex or challenging practice to change than simply collecting new measures. Additionally, treatment modifications may be indicated less often than other MBC practices, because they depend on the results of the assessment (e.g., in cases of nonresponse or deterioration). It is also possible that none of the consultation conditions were sufficient to effect this change or to support clinicians in determining what other intervention changes were indicated when measurement suggests a lack of progress.
Such a “ceiling effect” for our specific set of training and PTC strategies could necessitate the incorporation of additional techniques, such as the BASIS strategy noted earlier. In BOLT+PTC, treatment modifications in response to assessment data were discussed in general terms to enhance usual care, rather than with reference to a particular set of intervention practices or an expectation to deliver a manualized intervention. Future work should explore whether the brief model could be augmented to focus more explicitly on when and how to adjust care. In this and other ways, MBC (and MBC-facilitated treatment more generally) may continue to be a practice that is “simple, but not easy.”

Limitations

Findings of the current study must be interpreted within the context of several limitations. First, we were unable to include observational measures of MBC practices in this initial study. Although self-reported clinical practices are typical in implementation research because of practical and resource constraints, particularly in pilot trials, observational measures would have been more robust and are a recommended direction for future work. A review of clinical records was similarly infeasible due to the remote nature of this study and the diverse systems in which participants worked. Second, the frequency with which MBC practices were assessed may have influenced clinicians’ MBC behaviors, although this assessment approach was consistent across conditions. Third, it is not entirely clear why some deterioration of MBC practices was observed over time in our sample. It may be that baseline ratings of MBC practices were inflated and that repeated assessments produced ratings that more accurately reflected clinicians’ behaviors. The pattern of results observed from weeks 8 to 32 (Table 2) provides some support for this possibility. Fourth, based on previously described research documenting the limitations of workshop training alone, we opted not to include a training-only condition to examine the differential effects of training compared to training plus consultation. Fifth, collection of student clinical outcomes was outside the scope of this study due to its pilot nature and our explicit focus on the most proximal implementation mechanisms and outcomes linked to the implementation supports provided. Sixth, although we examined main effects on hypothesized implementation mechanisms, we did not conduct formal tests of mediation, as these were beyond the scope of the current paper.
Finally, as indicated above, the sample sizes of each PTC dosage condition limited the conclusions that could be drawn about the differential effects of a greater number of weeks of consultation.

Conclusion and future directions

Results from this study indicate that efficient training and consultation supports can produce meaningful practice change in the administration of assessments. However, the optimal dosage of consultation when paired with high-quality, active training requires further investigation, particularly for MBC delivered in schools. This is in line with recent evidence that even a condition without any post-training consultation, but with other potentially low-cost (yet high-quality) supports such as handouts, video tutorials, and access to online supports, yielded improvements in some aspects of fidelity, including those that may be most important for patient outcomes [8]. This points to the need to continue identifying and testing the mechanisms engaged by various implementation strategies, especially training and consultation processes, to determine the most parsimonious and efficient approaches. Certainly, the incremental impact of consultation relative to alternative or adjunctive post-training support strategies, such as measurement feedback systems [88, 89], should be further explored as research on successful MBC implementation strategies unfolds.

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available but are available from the corresponding author on reasonable request.

Abbreviations

- BOLT: Brief online training
- CAPER: Current Assessment Practice Evaluation – Revised
- MBC: Measurement-based care
- MBCKQ: Measurement-Based Care Knowledge Questionnaire
- MFA: Monitoring and Feedback Attitudes Scale
- MLM: Multilevel model
- PTC: Post-training consultation

References

Beidas RS, Edmunds JM, Cannuscio CC, Gallagher M, Downey MM, Kendall PC. Therapists perspectives on the effective elements of consultation following training. Adm Policy Ment Health Ment Health Serv Res. 2013;40(6):507–17. https://doi.org/10.1007/s10488-013-0475-7.

Beidas RS, Kendall PC. Training therapists in evidence-based practice: a critical review of studies from a systems-contextual perspective. Clin Psychol Sci Pract. 2010;17(1):1–30. https://doi.org/10.1111/j.1468-2850.2009.01187.x.

Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: a review and critique with recommendations. Clin Psychol Rev. 2010;30(4):448–66. https://doi.org/10.1016/j.cpr.2010.02.005.

Lyon AR, Stirman SW, Kerns SEU, Bruns EJ. Developing the mental health workforce: review and application of training approaches from multiple disciplines. Adm Policy Ment Health Ment Health Serv Res. 2011;38(4):238–53. https://doi.org/10.1007/s10488-010-0331-y.

Valenstein-Mah H, Greer N, McKenzie L, Hansen L, Strom TQ, Wiltsey Stirman S, et al. Effectiveness of training methods for delivery of evidence-based psychotherapies: a systematic review. Implement Sci. 2020;15(1):40. https://doi.org/10.1186/s13012-020-00998-w.

Apramian T, Cristancho S, Watling C, Ott M, Lingard L. “They have to adapt to learn”: surgeons’ perspectives on the role of procedural variation in surgical education. J Surg Educ. 2016;73(2):339–47. https://doi.org/10.1016/j.jsurg.2015.10.016.

Frank HE, Becker-Haimes EM, Kendall PC. Therapist training in evidence-based interventions for mental health: a systematic review of training approaches and outcomes. Clin Psychol Sci Pract. 2020;27(3):e12330. https://doi.org/10.1111/cpsp.12330.

Weisz JR, Thomassin K, Hersh J, Santucci LC, MacPherson HA, Rodriguez GM, et al. Clinician training, then what? Randomized clinical trial of child STEPs psychotherapy using lower-cost implementation supports with versus without expert consultation. J Consult Clin Psychol. 2020;88(12):1065–78. https://doi.org/10.1037/ccp0000536.

Edmunds JM, Beidas RS, Kendall PC. Dissemination and implementation of evidence–based practices: training and consultation as implementation strategies. Clin Psychol Sci Pract. 2013;20(2):152–65. https://doi.org/10.1111/cpsp.12031.

Lyon AR, Pullmann MD, Walker SC, D’Angelo G. Community-sourced intervention programs: review of submissions in response to a statewide call for “promising practices”. Adm Policy Ment Health Ment Health Serv Res. 2017;44(1):16–28. https://doi.org/10.1007/s10488-015-0650-0.

Institute of Medicine, Board on Health Sciences Policy, Committee on Developing Evidence-Based Standards for Psychosocial Interventions for Mental Disorders. Psychosocial interventions for mental and substance use disorders: a framework for establishing evidence-based standards [Internet]. Washington, D.C.: The National Academies Press; 2015. Available from: https://doi.org/10.17226/19013. Cited 2022 Apr 27.

Cook CR, Lyon AR, Locke J, Waltz T, Powell BJ. Adapting a compilation of implementation strategies to advance school-based implementation research and practice. Prev Sci. 2019;20(6):914–35. https://doi.org/10.1007/s11121-019-01017-1.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):21. https://doi.org/10.1186/s13012-015-0209-1.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8(1):139. https://doi.org/10.1186/1748-5908-8-139.

Lyon A, Cook C, Locke J, Powell B, Waltz T. Importance and feasibility of an adapted set of strategies for implementing evidence-based behavioral health practices in the education sector. J Sch Psychol. 2019;76:66–77. https://doi.org/10.1016/j.jsp.2019.07.014.

Awad M, Connors E. Measurement Based Care as a function of ethical practice: the role of supervisors in supporting MBC values. 2021. Manuscript in preparation.

Chafouleas SM, Clonan SM, Vanauken TL. A national survey of current supervision and evaluation practices of school psychologists. Psychol Sch. 2002;39(3):317–25. https://doi.org/10.1002/pits.10021.

Nadeem E, Gleacher A, Beidas RS. Consultation as an implementation strategy for evidence-based practices across multiple contexts: unpacking the black box. Adm Policy Ment Health Ment Health Serv Res. 2013;40(6):439–50. https://doi.org/10.1007/s10488-013-0502-8.

Olson JR, Lucy M, Kellogg MA, Schmitz K, Berntson T, Stuber J, et al. What happens when training goes virtual? Adapting training and technical assistance for the school mental health workforce in response to covid-19. Sch Ment Heal. 2021;13(1):160–73. https://doi.org/10.1007/s12310-020-09401-x.

Weingardt KR, Cucciare MA, Bellotti C, Lai WP. A randomized trial comparing two models of web-based training in cognitive–behavioral therapy for substance abuse counselors. J Subst Abus Treat. 2009;37(3):219–27. https://doi.org/10.1016/j.jsat.2009.01.002.

Dimeff LA, Koerner K, Woodcock EA, Beadnell B, Brown MZ, Skutch JM, et al. Which training method works best? A randomized controlled trial comparing three methods of training clinicians in dialectical behavior therapy skills. Behav Res Ther. 2009;47(11):921–30. https://doi.org/10.1016/j.brat.2009.07.011.

Hubley S, Woodcock EA, Dimeff LA, Dimidjian S. Disseminating behavioural activation for depression via online training: preliminary steps. Behav Cogn Psychother. 2015;43(2):224–38. https://doi.org/10.1017/S1352465813000842.

Dimeff LA, Harned MS, Woodcock EA, Skutch JM, Koerner K, Linehan MM. Investigating bang for your training buck: a randomized controlled trial comparing three methods of training clinicians in two core strategies of dialectical behavior therapy. Behav Ther. 2015;46(3):283–95. https://doi.org/10.1016/j.beth.2015.01.001.

Beidas RS, Koerner K, Weingardt KR, Kendall PC. Training research: Practical recommendations for maximum impact. Adm Policy Ment Health Ment Health Serv Res. 2011;38(4):223–37. https://doi.org/10.1007/s10488-011-0338-z.

Beidas RS, Edmunds JM, Marcus SC, Kendall PC. Training and consultation to promote implementation of an empirically supported treatment: a randomized trial. Psychiatr Serv. 2012;63(7):660–5. https://doi.org/10.1176/appi.ps.201100401.

Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: a synthesis of the literature. 2005.

Olmstead T, Carroll KM, Canning-Ball M, Martino S. Cost and cost-effectiveness of three strategies for training clinicians in motivational interviewing. Drug Alcohol Depend. 2011;116(1):195–202. https://doi.org/10.1016/j.drugalcdep.2010.12.015.

Edmunds JM, Read KL, Ringle VA, Brodman DM, Kendall PC, Beidas RS. Sustaining clinician penetration, attitudes and knowledge in cognitive-behavioral therapy for youth anxiety. Implement Sci. 2014;9(1):89. https://doi.org/10.1186/s13012-014-0089-9.

Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018;6:136. https://doi.org/10.3389/fpubh.2018.00136.

Lewis CC, Boyd MR, Walsh-Bailey C, Lyon AR, Beidas R, Mittman B, et al. A systematic review of empirical studies examining mechanisms of implementation in health. Implement Sci. 2020;15(1):21. https://doi.org/10.1186/s13012-020-00983-3.

Williams NJ. Multilevel mechanisms of implementation strategies in mental health: Integrating theory, research, and practice. Adm Policy Ment Health Ment Health Serv Res. 2016;43(5):783–98. https://doi.org/10.1007/s10488-015-0693-2.

Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, et al. Enhancing the impact of implementation strategies in healthcare: a research agenda. Front Public Health. 2019;7:3. https://doi.org/10.3389/fpubh.2019.00003.

Williams NJ, Beidas RS. Annual Research Review: the state of implementation science in child psychology and psychiatry: a review and suggestions to advance the field. J Child Psychol Psychiatry. 2019;60(4):430–50. https://doi.org/10.1111/jcpp.12960.

Wong SC, McEvoy MP, Wiles LK, Lewis LK. Magnitude of change in outcomes following entry-level evidence-based practice training: a systematic review. Int J Med Educ. 2013;4:107–14. https://doi.org/10.5116/ijme.51a0.fd25.

Higa CK, Chorpita BF. Evidence-based therapies: translating research into practice. In: Steele RG, Elkin TD, Roberts MC, editors. Handbook of evidence-based therapies for children and adolescents: bridging science and practice [Internet]. Boston: Springer US; 2008. p. 45–61. (Issues in Clinical Child Psychology). Cited 2022 Apr 26. https://doi.org/10.1007/978-0-387-73691-4_4.

Siqueland L, Crits-Christoph P, Barber JP, Butler SF, Thase M, Najavits L, et al. The role of therapist characteristics in training effects in cognitive, supportive-expressive, and drug counseling therapies for cocaine dependence. J Psychother Pract Res. 2000;9(3):123–30. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3330597/.

Beidas RS, Cross W, Dorsey S. Show me, don’t tell me: behavioral rehearsal as a training and analogue fidelity tool. Cogn Behav Pract. 2014;21(1):1–11. https://doi.org/10.1016/j.cbpra.2013.04.002.

Nelson TD, Steele RG. Predictors of practitioner self-reported use of evidence-based practices: Practitioner training, clinical setting, and attitudes toward research. Adm Policy Ment Health Ment Health Serv Res. 2007;34(4):319–30. https://doi.org/10.1007/s10488-006-0111-x.

Nelson MM, Shanley JR, Funderburk BW, Bard E. Therapists’ attitudes toward evidence-based practices and implementation of parent–child interaction therapy. Child Maltreat. 2012;17(1):47–55. https://doi.org/10.1177/1077559512436674.

Pemberton JR, Conners-Burrow NA, Sigel BA, Sievers CM, Stokes LD, Kramer TL. Factors associated with clinician participation in TF-CBT post-workshop training components. Adm Policy Ment Health Ment Health Serv Res. 2017;44(4):524–33. https://doi.org/10.1007/s10488-015-0677-2.

Joyce BR, Showers B. Student achievement through staff development. Alexandria, VA: Association for Supervision and Curriculum Development; 2002. Available from: https://www.unrwa.org/sites/default/files/joyce_and_showers_coaching_as_cpd.pdf.

Mannix KA, Blackburn IM, Garland A, Gracie J, Moorey S, Reid B, et al. Effectiveness of brief training in cognitive behaviour therapy techniques for palliative care practitioners. Palliat Med. 2006;20(6):579–84. https://doi.org/10.1177/0269216306071058.

Lyon AR, Dorsey S, Pullmann M, Silbaugh-Cowdin J, Berliner L. Clinician use of standardized assessments following a common elements psychotherapy training and consultation program. Adm Policy Ment Health Ment Health Serv Res. 2015;42(1):47–60. https://doi.org/10.1007/s10488-014-0543-7.

Fortney JC, Unützer J, Wrenn G, Pyne JM, Smith GR, Schoenbaum M, et al. A tipping point for measurement-based care. FOCUS. 2018;16(3):341–50. https://doi.org/10.1176/appi.focus.16303.

Scott K, Lewis CC. Using measurement-based care to enhance any treatment. Cogn Behav Pract. 2015;22(1):49–59. https://doi.org/10.1016/j.cbpra.2014.01.010.

Fortney JC, Unützer J, Wrenn G, Pyne JM, Smith GR, Schoenbaum M, et al. A tipping point for measurement-based care. Psychiatr Serv. 2017;68(2):179–88. https://doi.org/10.1176/appi.ps.201500439.

Tam HE, Ronan K. The application of a feedback-informed approach in psychological service with youth: systematic review and meta-analysis. Clin Psychol Rev. 2017;55:41–55. https://doi.org/10.1016/j.cpr.2017.04.005.

Parikh A, Fristad MA, Axelson D, Krishna R. Evidence base for measurement-based care in child and adolescent psychiatry. Child Adolesc Psychiatr Clin N Am. 2020;29(4):587–99. https://doi.org/10.1016/j.chc.2020.06.001.

Bickman L, Kelley SD, Breda C, de Andrade AR, Riemer M. Effects of routine feedback to clinicians on mental health outcomes of youths: results of a randomized trial. Psychiatr Serv. 2011;62(12):1423–9. https://doi.org/10.1176/appi.ps.002052011.

Cooper M, Duncan B, Golden S, Toth K. Systematic client feedback in therapy for children with psychological difficulties: pilot cluster randomised controlled trial. Couns Psychol Q. 2021;34(1):21–36. https://doi.org/10.1080/09515070.2019.1647142.

Connors EH, Douglas S, Jensen-Doss A, Landes SJ, Lewis CC, McLeod BD, et al. What gets measured gets done: how mental health agencies can leverage measurement-based care for better patient care, clinician supports, and organizational goals. Adm Policy Ment Health Ment Health Serv Res. 2021;48(2):250–65. https://doi.org/10.1007/s10488-020-01063-w.

Lyon AR, Pullmann MD, Dorsey S, Martin P, Grigore AA, Becker EM, et al. Reliability, validity, and factor structure of the Current Assessment Practice Evaluation-Revised (CAPER) in a national sample. J Behav Health Serv Res. 2019;46(1):43–63. https://doi.org/10.1007/s11414-018-9621-z.

Jensen-Doss A, Smith AM, Becker-Haimes EM, Mora Ringle V, Walsh LM, Nanda M, et al. Individualized progress measures are more acceptable to clinicians than standardized measures: results of a national survey. Adm Policy Ment Health Ment Health Serv Res. 2018;45(3):392–403. https://doi.org/10.1007/s10488-017-0833-y.

Lewis CC, Boyd M, Puspitasari A, Navarro E, Howard J, Kassab H, et al. Implementing measurement-based care in behavioral health: a review. JAMA Psychiatry. 2019;76(3):324–35. https://doi.org/10.1001/jamapsychiatry.2018.3329.

Resnick SG, Oehlert ME, Hoff RA, Kearney LK. Measurement-based care and psychological assessment: using measurement to enhance psychological treatment. Psychol Serv. 2020;17(3):233–7. https://doi.org/10.1037/ser0000491.

Lyon AR, Ludwig K, Wasse JK, Bergstrom A, Hendrix E, McCauley E. Determinants and functions of standardized assessment use among school mental health clinicians: a mixed methods evaluation. Adm Policy Ment Health Ment Health Serv Res. 2016;43(1):122–34. https://doi.org/10.1007/s10488-015-0626-0.

Lyon AR, Ludwig K, Romano E, Leonard S, Stoep AV, McCauley E. “If it’s worth my time, I will make the time”: school-based providers’ decision-making about participating in an evidence-based psychotherapy consultation program. Adm Policy Ment Health Ment Health Serv Res. 2013;40(6):467–81. https://doi.org/10.1007/s10488-013-0494-4.

Duong MT, Lyon AR, Ludwig K, Wasse JK, McCauley E. Student perceptions of the acceptability and utility of standardized and idiographic assessment in school mental health. Int J Ment Health Promot. 2016;18(1):49–63. https://doi.org/10.1080/14623730.2015.1079429.

Duong MT, Bruns EJ, Lee K, Cox S, Coifman J, Mayworm A, et al. Rates of mental health service utilization by children and adolescents in schools and other common service settings: a systematic review and meta-analysis. Adm Policy Ment Health Ment Health Serv Res. 2021;48(3):420–39. https://doi.org/10.1007/s10488-020-01080-9.

Burns C, Dishman E, Johnson B, Verplank B. Informance: min(d)ing future contexts for scenario-based interaction design. 1995.

Hagermoser Sanetti LM, Collier-Meek MA. Increasing implementation science literacy to address the research-to-practice gap in school psychology. J Sch Psychol. 2019;76:33–47. https://doi.org/10.1016/j.jsp.2019.07.008.

Connors EH, Arora P, Curtis L, Stephan SH. Evidence-based assessment in school mental health. Cogn Behav Pract. 2015;22(1):60–73. https://doi.org/10.1016/j.cbpra.2014.03.008.

Kazdin AE. Addressing the treatment gap: a key challenge for extending evidence-based psychosocial interventions. Behav Res Ther. 2017;88:7–18. https://doi.org/10.1016/j.brat.2016.06.004.

Lancet Global Mental Health Group. Scale up services for mental disorders: a call for action. Lancet. 2007;370(9594):1241–52. https://doi.org/10.1016/S0140-6736(07)61242-2.

Connors E, Lawson G, Wheatley-Rowe D, Hoover S. Exploration, preparation, and implementation of standardized assessment in a multi-agency school behavioral health network. Adm Policy Ment Health Ment Health Serv Res. 2021;48(3):464–81. https://doi.org/10.1007/s10488-020-01082-7.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health Ment Health Serv Res. 2011;38(2):65–76. https://doi.org/10.1007/s10488-010-0319-7.

Lyon AR, Coifman J, Cook H, McRee E, Liu FF, Ludwig K, et al. The Cognitive Walkthrough for Implementation Strategies (CWIS): a pragmatic method for assessing implementation strategy usability. Implement Sci Commun. 2021;2(1):78. https://doi.org/10.1186/s43058-021-00183-0.

Lyon A, Liu FF, Coifman JI, Cook H, King K, Ludwig K, et al. Randomized trial to optimize a brief online training and consultation strategy for measurement-based care in school mental health. In: Proceedings of the Fifth Biennial Conference of the Society for Implementation Research Collaboration (SIRC) 2019. BMC Campus, 4 Crinan St, London N1 9XW, England; 2020.

Bruns EJ, Pullmann MD, Nicodimos S, Lyon AR, Ludwig K, Namkung N, et al. Pilot test of an engagement, triage, and brief intervention strategy for school mental health. Sch Ment Heal. 2019;11(1):148–62. https://doi.org/10.1007/s12310-018-9277-0.

Stumpf RE, Higa-McMillan CK, Chorpita BF. Implementation of evidence-based services for youth: assessing provider knowledge. Behav Modif. 2009;33(1):48–65. https://doi.org/10.1177/0145445508322625.

Jensen-Doss A, Haimes EMB, Smith AM, Lyon AR, Lewis CC, Stanick CF, et al. Monitoring treatment progress and providing feedback is viewed favorably but rarely used in practice. Adm Policy Ment Health Ment Health Serv Res. 2018;45(1):48–61. https://doi.org/10.1007/s10488-016-0763-0.

R Core Team. R: a language and environment for statistical computing. Vienna: R Foundation for Statistical Computing; 2018. Available from: http://www.r-project.org/.

Pinheiro J, Bates D, DebRoy S, Sarkar D, R Core Team. nlme: linear and nonlinear mixed effects models. R package version 3.1-137. Vienna; 2018.

Hamaker EL, van Hattum P, Kuiper RM. Model selection based on information criteria in multilevel modeling. In: Handbook of advanced multilevel analysis. Routledge; 2011. p. 231–55.

Lambert MJ, Whipple JL, Hawkins EJ, Vermeersch DA, Nielsen SL, Smart DW. Is it time for clinicians to routinely track patient outcome? A meta-analysis. Clin Psychol Sci Pract. 2003;10(3):288–301. https://doi.org/10.1093/clipsy.bpg025.

Chorpita BF, Weisz JR, Daleiden EL, Schoenwald SK, Palinkas LA, Miranda J, et al. Long-term outcomes for the Child STEPs randomized effectiveness trial: a comparison of modular and standard treatment designs with usual care. J Consult Clin Psychol. 2013;81(6):999–1009. https://doi.org/10.1037/a0034200.

Weisz JR, Chorpita BF, Palinkas LA, Schoenwald SK, Miranda J, Bearman SK, et al. Testing standard and modular designs for psychotherapy treating depression, anxiety, and conduct problems in youth: a randomized effectiveness trial. Arch Gen Psychiatry. 2012;69(3):274–82. https://doi.org/10.1001/archgenpsychiatry.2011.147.

Dorsey S, Berliner L, Lyon AR, Pullmann MD, Murray LK. A statewide common elements initiative for children’s mental health. J Behav Health Serv Res. 2016;43(2):246–61. https://doi.org/10.1007/s11414-014-9430-y.

Lyon AR, Stanick C, Pullmann MD. Toward high-fidelity treatment as usual: evidence-based intervention structures to improve usual care psychotherapy. Clin Psychol Sci Pract. 2018;25(4):e12265. https://doi.org/10.1111/cpsp.12265.

Warren JS, Nelson PL, Mondragon SA, Baldwin SA, Burlingame GM. Youth psychotherapy change trajectories and outcomes in usual care: community mental health versus managed care settings. J Consult Clin Psychol. 2010;78(2):144–55. https://doi.org/10.1037/a0018544.

Nelson TD, Steele RG. Influences on practitioner treatment selection: best research evidence and other considerations. J Behav Health Serv Res. 2008;35(2):170–8. https://doi.org/10.1007/s11414-007-9089-8.

Upton D, Stephens D, Williams B, Scurlock-Evans L. Occupational therapists’ attitudes, knowledge, and implementation of evidence-based practice: a systematic review of published research. Br J Occup Ther. 2014;77(1):24–38. https://doi.org/10.4276/030802214X13887685335544.

Larson M, Cook CR, Brewer SK, Pullmann MD, Hamlin C, Merle JL, et al. Examining the effects of a brief, group-based motivational implementation strategy on mechanisms of teacher behavior change. Prev Sci. 2021;22(6):722–36. https://doi.org/10.1007/s11121-020-01191-7.

Lyon AR, Cook CR, Duong MT, Nicodimos S, Pullmann MD, Brewer SK, et al. The influence of a blended, theoretically-informed pre-implementation strategy on school-based clinician implementation of an evidence-based trauma intervention. Implement Sci. 2019;14(1):54. https://doi.org/10.1186/s13012-019-0905-3.

Adrian M, Lyon AR, Nicodimos S, Pullmann MD, McCauley E. Enhanced “train and hope” for scalable, cost-effective professional development in youth suicide prevention. Crisis. 2018;39(4):235–46. https://doi.org/10.1027/0227-5910/a000489.

Glasgow RE, Fisher L, Strycker LA, Hessler D, Toobert DJ, King DK, et al. Minimal intervention needed for change: definition, use, and value for improving health and health research. Transl Behav Med. 2014;4(1):26–33. https://doi.org/10.1007/s13142-013-0232-1.

von Thiele Schwarz U, Aarons GA, Hasson H. The value equation: three complementary propositions for reconciling fidelity and adaptation in evidence-based practice implementation. BMC Health Serv Res. 2019;19(1):868. https://doi.org/10.1186/s12913-019-4668-y.

Bickman L. A Measurement Feedback System (MFS) is necessary to improve mental health outcomes. J Am Acad Child Adolesc Psychiatry. 2008;47(10):1114–9. https://doi.org/10.1097/CHI.0b013e3181825af8.

Lyon AR, Lewis CC. Designing health information technologies for uptake: development and implementation of measurement feedback systems in mental health service delivery. Adm Policy Ment Health Ment Health Serv Res. 2016;43(3):344–9. https://doi.org/10.1007/s10488-015-0704-3.

Funding

This publication was funded by the National Institute of Mental Health (R34MH109605, K08MH116119, and P50MH115837). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Author information

Contributions

ARL and EMcC developed the overarching scientific aims and design of the project. ARL, EMcC, FFL, and KL developed the clinical training and consultation content with support from SD. AL, JC, HC, ARL, EMcC, FFL, and KL iteratively developed the online training. FFL and KL conducted the post-training consultation. JC, HC, and EMcR conducted all data collection and cleaning and supported analyses. KMK developed and executed the data analysis plan, interpreted implementation outcome and mechanism data, and drafted the results. EHC supported writing all paper sections. All authors contributed to the development, drafting, or review of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

All study procedures were reviewed and approved by the University of Washington institutional review board. The study team conducted informed consent meetings with prospective participants by phone and obtained written or verbal consent consistent with IRB-approved procedures.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Supplementary Information

Additional file 1. CONSORT Flow Diagram.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Lyon, A.R., Liu, F.F., Connors, E.H. et al. How low can you go? Examining the effects of brief online training and post-training consultation dose on implementation mechanisms and outcomes for measurement-based care. Implement Sci Commun 3, 79 (2022). https://doi.org/10.1186/s43058-022-00325-y

DOI: https://doi.org/10.1186/s43058-022-00325-y