Abstract

Digital technologies have gained much popularity in education thanks to the cost efficiency they gain through scalability. While the acceptance of some of these new digital technologies has been investigated, there are few direct comparisons. The most established model for measuring acceptance is the technology acceptance model, which can predict the behavioral intention to use the technology. We compared four digital learning technologies (e-lectures, classroom response system, classroom chat, and mobile virtual reality) in terms of their technology acceptance. The classroom response system had the highest level of acceptance. It was closely followed by e-lectures, then the classroom chat and then mobile virtual reality. The students evaluated all tools favorably before and after usage, except for mobile virtual reality, which saw a substantial drop in perceived usefulness and behavioral intention after 3 months’ usage.

Introduction

An advantage of digital technologies is that they are highly scalable. This also applies to the education sector, where large classes remain a popular method of instruction around the world because of their cost efficiency (Yardi, 2008). Technology is increasingly being used in classrooms to assist lecturers in achieving various pedagogical goals and scalable technologies can serve their objectives (Becker, Brown, Dahlstrom, Davis, DePaul, Diaz, & Pomerantz, 2018). Four such technologies are the following: classroom chat (CC), classroom response system (CRS), e-lectures, and mobile virtual reality (VR). User acceptance is a prerequisite for technology effectiveness. This can be assessed with the technology acceptance model (TAM) (Davis, 1989). By measuring the perceived usefulness (PU) and perceived ease of use (PEOU) after using the tools for several months, the model can predict the behavioral intention (BI) and actual usage. While the acceptance of some of these new digital technologies has been investigated elsewhere, there are no direct comparisons. Our aim was to compare the technology acceptance of the four tools in relation to each other after 3 months of usage.

To make these comparisons, 94 students used the digital tools for three months and then we measured the PU, PEOU and BI with a questionnaire.

In the remainder of the introduction, we first discuss the four digital learning technologies before we summarize the research on the technology acceptance model. We then briefly discuss alignment theory and the expected results.

Digital learning technologies

Classroom response system

CRSs, which are also known as student response systems, personal response systems, immediate response systems, electronic response systems, clickers, and audience response systems, have been highly accepted among educators (Hunsu, Adesope, & Bayly, 2016). A CRS allows lecturers to pose multiple-choice questions before, during, and after their lecture, which students can answer on their own electronic devices. The answers are aggregated in real time to display the results to individual students or the whole class. This allows lecturers to monitor the students’ understanding of topics (Caldwell, 2007) and, if necessary, elaborate on points that the students did not understand. Due to its anonymity, a CRS helps to activate shy and hesitant students who would otherwise not ask questions in class (Graham, Tripp, Seawright, & Joeckel, 2007). Moreover, because students’ attention span lasts approximately 20 min (Burns, 1985), lecturers can use a CRS to break up long presentations, directly activate students and let them actively process the content they just heard. Meta-analyses have established small effects on cognitive learning outcomes and medium effects on noncognitive outcomes (Cain, Black, & Rohr, 2009; Castillo-Manzano, Castro-Nuno, Lopez-Valpuesta, Sanz-Diaz, & Yniguez, 2016).

We combined the CRS with course revision tasks because repetition is crucial for long term retention (Ebbinghaus, 2013; Pechenkina, Laurence, Oates, Eldridge, & Hunter, 2017). We posed the questions immediately after each lecture and then discussed the results at the beginning of the following lecture. This approach was motivated by several goals: (a) to encourage students to revisit the course content between lectures, (b) to activate students during lectures, (c) to give students a preview of typical exam questions, and (d) to provide feedback to students and the lecturer on students’ learning progress.

Classroom chat (CC)

While lecturers talk at the front of a class, students can also communicate with each other. These two simultaneous types of communication are called the frontchannel and backchannel (Aagard, Bowen, & Olesova, 2010) with the frontchannel referring to the lecturer communicating to the class and the backchannel referring to the communication the students have among each other. Lecturers have used chat tools to leverage backchannel communication with the goal of enabling student discussion of each other’s questions regarding the lecture. One disadvantage of this type of backchannel communication is its potential to distract students from following the lecture (Yardi, 2008). To avoid this problem, we adopted a new approach whereby the students can submit questions anonymously to the lecturer who in turn can respond during the lecture or at the beginning of the following lecture. In their meta-analysis, Schneider and Preckel (2017) found that questions can lead to higher levels of achievement. Moreover, using electronic applications that allow students to remain anonymous has been shown to encourage students who tend to be rather anxious and shy, especially when the topics are controversial (Stowell, Oldham, & Bennett, 2010).

E-lectures

Recording lectures has become popular at many universities (Brockfeld, Muller, & de Laffolie, 2018; Liu & Kender, 2004). Providing students with recordings allows individual reviewing of content and learning at their own pace (Demetriadis & Pombortsis, 2007). Moreover, e-lectures are useful when students miss a lecture due to illness or other reasons. This might explain why e-lectures are generally popular with students and lecturers (Gormley, Collins, Boohan, Bickle, & Stevenson, 2009). The impact of e-lectures on learning outcomes is currently under debate with conflicting evidence on its impacts (Demetriadis & Pombortsis, 2007; Jadin, Gruber, & Batinic, 2009; Spickard, Alrajeh, Cordray, & Gigante, 2002).

Mobile virtual reality

VR allows the creation of a virtual environment using a computer and a headset. Using visual—sometimes in addition to audio and tactile—input to achieve immersion into this virtual reality is a common goal in training and entertainment scenarios (Hawkins, 1995). Owing to the significant improvement in affordability and processing power, VR is being used in various educational settings (Cochrane, 2016; Merchant, Goetz, Cifuentes, Keeney-Kennicutt, & Davis, 2014). Building on the pedagogical model of constructivism, VR allows one to provide multiple representations of reality, knowledge construction, reflective practice, and context dependent knowledge by creating simulations, virtual worlds, and games (Merchant et al., 2014; Mikropoulos & Natsis, 2011). VR in education is increasingly used in science, technology, mathematics, and medicine (Radianti, Majchrzak, Fromm, & Wohlgenannt, 2020) with effect sizes ranging from 0.3 to 0.7 (Merchant et al., 2014). The use of VR in large psychology classes is, however, extremely rare (Mikropoulos & Natsis, 2011). VR has been shown to increase learning outcomes, particularly in individual gameplay tasks (Freina & Ott, 2015; Merchant et al., 2014), although some authors criticize the lack of quality and the number of studies focusing on learning outcomes (Jensen & Konradsen, 2018; Richards & Taylor, 2015).

Mobile VR is a specific subset of VR where, instead of a desktop computer and a specialized headset, the processor and screen of a smartphone are used together with a cardboard headset to create the immersive experience. The main advantage of mobile VR is the significantly lower cost of hardware and software. In tertiary education settings in developed countries, most students own a mobile VR capable smartphone, which translates to near complete availability. Despite its low cost, mobile VR remains less popular in educational settings (used only 20% of the time) than the higher quality and higher cost PC-based VR systems (Radianti et al., 2020). The learning outcomes for mobile VR and desktop setups are similar (Moro, Stromberga, & Stirling, 2017).

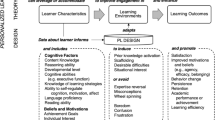

Technology acceptance model

Based on the theory of reasoned action from Ajzen and Fishbein (1977), Davis (1989) developed the TAM in an effort to predict the use of a technology. The TAM predicts BI with PU and PEOU, with BI considered a very good predictor of future actual usage (Sumak, Hericko, & Pusnik, 2011). The TAM is the most widely employed and best-known model for measuring acceptance of various technologies (Estriegana, Medina-Merodio, & Barchino, 2019). It has been successfully applied to a multitude of technologies such as social media (Abrahim, Mir, Suhara, Mohamed, & Sato, 2019; Dumpit & Fernandez, 2017), virtual learning environments (Kurt & Tingöy, 2017), mobile and digital libraries (Hamaad Rafique, Shamim, & Anwar, 2019), learning analytics visualization (Papamitsiou & Economides, 2015), and gamification (Rahman, Ahmad, & Hashim, 2018), and across many cultures (Cheung & Vogel, 2013; H. Rafique et al., 2018). PU has a substantial effect on BI, but PEOU has often been found to have indirect effects mediated by PU, with its direct effect on BI ranging from nonexistent to high (Gefen & Straub, 2000; King & He, 2006; Hamaad Rafique et al., 2019). Many extensions and adjustments to the TAM have been proposed, including by the original author (Abdullah & Ward, 2016; Cheung & Vogel, 2013; Estriegana et al., 2019; Venkatesh & Bala, 2008; Venkatesh & Davis, 2000). Notable among these adjustments is the Unified Theory of Acceptance and Use of Technology (UTAUT) (Venkatesh, Morris, Davis, & Davis, 2003), where the authors analyzed the TAM and its competing prediction models and proposed that performance expectancy, effort expectancy, social influence, and facilitating conditions (moderated by gender, age, experience, and voluntariness of use) influence BI and use behavior. UTAUT was then further extended to UTAUT 2, where Venkatesh, Thong and Xu (2012) added hedonic motivation, price value and habit as influencing factors.
While UTAUT 2 has garnered in excess of 3000 citations in Google Scholar (Tamilmani, Rana, Prakasam, & Dwivedi, 2019), its original application lies in predicting use of consumer technologies. As such, the factor price value is not suited to this study because the digital tools were made available to the students for free. Furthermore, with seven factors affecting BI and three moderators, UTAUT 2 takes many more variables into account but does not provide much more explanatory power than the TAM. The TAM’s high level of explanatory power and parsimony played a role in the TAM remaining a highly influential method of measuring technology acceptance (Granić & Marangunić, 2019; Scherer, Siddiq, & Tondeur, 2019). Considering that one factor in UTAUT 2 does not apply to our setting and that providing information for so many factors for four digital tools twice would likely have affected participation in the study negatively while not providing much additional explanatory power, we decided to use the TAM.

Alignment theory

In course design, alignment refers to how well the expectations regarding the course material and the assessment match (FitzPatrick, Hawboldt, Doyle, & Genge, 2015; Webb, 1997). When attending a course, students evaluate which information will be assessed and steer their efforts towards that material. Building on this idea, Biggs (2003) proposed in his constructive alignment model that lecturers should first define the intended learning outcomes and then determine an appropriate assessment regime. For example, introductory courses to general psychology usually include a lecture about theories of cognitive processes and their applications. The intended learning outcomes of such lectures could be remembering, understanding, and applying the theories, which correspond to the first three levels of Bloom’s taxonomy (Bloom, 1956). End of semester course examinations often entail multiple-choice tests using items designed to assess those learning outcomes (Tozoglu, Tozoglu, Gurses, & Dogar, 2004). As we will explain in the next section, alignment theory is highly relevant for making predictions on technology acceptance.

Expected results

King and He (2006) found in their comprehensive meta-analysis of the TAM that the average path loadings were 0.186 for PEOU → BI, 0.505 for PU → BI and 0.469 for PEOU → PU. Since all tools are straightforward to use (they offer core functionalities without additional customization options), we expected them all to rate highly on PEOU. We expected that CRS and e-lectures would both score high on PU and BI since both technologies provide additional help for course assessment preparation and thus align well with student goals (Biggs, 2003). Additionally, CRS gives students a preview of what exam questions might look like and provides direct feedback on how well exam-like questions were answered (Cain et al., 2009). E-lectures support students by allowing them to revisit lectures in order to prepare for the exam (Demetriadis & Pombortsis, 2007). Previous research indicates that both CRS and e-lectures are very popular with students (Gormley et al., 2009; Hunsu et al., 2016).
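The average path loadings quoted from King and He (2006) can be combined to show how much of the influence of PEOU on BI would run through PU. The following is a minimal sketch, assuming the standard product-of-coefficients rule for standardized paths in a linear path model. Applying it to meta-analytic averages is an illustration only and is not an analysis reported in this study; the function names are hypothetical.

```python
# Average TAM path loadings from King and He (2006), as quoted in the text.
PEOU_TO_BI = 0.186  # direct path: perceived ease of use -> behavioral intention
PU_TO_BI = 0.505    # direct path: perceived usefulness -> behavioral intention
PEOU_TO_PU = 0.469  # path: perceived ease of use -> perceived usefulness


def indirect_effect(first_path: float, second_path: float) -> float:
    """Effect routed through a mediator: product of the two path loadings."""
    return first_path * second_path


def total_effect(direct: float, indirect: float) -> float:
    """Total effect: direct path plus the mediated (indirect) path."""
    return direct + indirect


peou_indirect = indirect_effect(PEOU_TO_PU, PU_TO_BI)
peou_total = total_effect(PEOU_TO_BI, peou_indirect)

print(f"indirect PEOU -> PU -> BI: {peou_indirect:.3f}")
print(f"total PEOU -> BI:          {peou_total:.3f}")
```

Under these assumptions, the mediated share of the PEOU effect is somewhat larger than its direct share, which is consistent with the observation that PEOU often acts on BI mainly through PU.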

We expected CC and VR to score in the medium range on PU and BI. Previous research indicates that mainly shy students profit from CC (Stowell et al., 2010), so many students will only experience a small benefit, if any. VR might be exciting and visceral but it does not provide exam-relevant input and thus the alignment with student goals is low (Biggs, 2003; Merchant et al., 2014).

Method

Participants

The participants in this study consisted of first term students in the course “General Psychology 1” at the University of Applied Sciences and Arts Northwestern Switzerland. Participation in the study was voluntary. A total of 94 students of the 129 registered students participated (72 females and 22 males). The students completed the survey during class to increase completion rates by providing class time and to reduce potentially distracting activities while filling out the questionnaire (Stieger & Reips, 2010).

Study design

The dependent variables (DV) were PU, PEOU and BI, which are the three core factors of the TAM (Davis, 1989). The independent variable (IV) was the four tools (CC, CRS, e-lectures and mobile VR). We used a within-subjects design allowing each student to evaluate each tool.

Materials

TAM questionnaire

For our research, we used a two-part questionnaire: one part collecting demographic information and one part measuring our constructs. To assure comparability, we used items for PU and PEOU that were based on the original TAM items (Davis, 1989) but were slightly modified to better match the digital technologies used in our study. For BI we used items from previous studies measuring the TAM and BI (Davis & Venkatesh, 2004; Venkatesh & Bala, 2008; Venkatesh & Davis, 2000). The items were all translated by a bilingual (English/German) psychologist and then translated back into English by a native German-speaking psychologist. The translations were compared and minor adjustments were mutually agreed on in accordance with the backtranslation method (Behr, Braun, & Dorer, 2015). Items that did not translate well or that could not be distinguished sufficiently from one another were excluded until we had five items for each factor. As at least three items are recommended to form a factor (Byrne, 2010), we opted to use five items per factor in case we had to exclude any due to poor factor loadings. The items employed in this study are listed in Appendix A. We employed a 7-point Likert scale (Likert, 1932) ranging from 1 (completely disagree) to 7 (completely agree), as is common for TAM measurements (Cheung & Vogel, 2013; Davis & Venkatesh, 2004).

Digital learning technologies

Classroom chat

We used a custom-made CC developed by Waimanoo Ltd. During each lecture, students could ask questions anonymously through a web interface using their smartphones or laptop computer (Fig. 1a). The lecturer reviewed the questions during the breaks and presented them to the students after the breaks (Fig. 1b). Approximately 90% of the questions were answered directly by the lecturer, and approximately 10% of the questions were used for classroom discussion using the buzz group method (Berquist & Phillips, 1975).

Fig. 1 (a) Students’ view of the CC for asking questions anonymously during lectures. The task (to ask questions) is displayed at the top of the screen and the students can write their questions in the box at the bottom of the screen. (b) Lecturer view for reviewing questions during breaks and showing these to the students after breaks.

CRS

To implement our CRS, we used a free web-based service called Klicker UZH, which students could access on their laptops or mobile phones. At the end of each lecture, the lecturer provided three multiple choice questions (one for each of the first three levels according to Bloom (1956)) to the students. At the beginning of the following lecture, the students answered these questions using a web interface on their smartphone or laptop computer (Fig. 2a). The aggregated results and the correct answers were then shown to the students (Fig. 2b), which was followed by discussion and explanation when more than approximately 20% of the students answered incorrectly or when students requested this.
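The aggregation step and the discussion rule described above can be sketched in a few lines. This is an illustrative sketch only, not the Klicker UZH implementation: the function name, the data layout, and the strict 0.20 cut-off are assumptions, since the text describes the threshold as approximate and also allows discussion on student request.

```python
from collections import Counter


def summarize_question(answers, correct_option, discuss_threshold=0.20):
    """Aggregate answers to one multiple-choice question.

    answers: list of chosen options, one entry per student (hypothetical layout).
    Returns the counts per option, the share of incorrect answers, and a flag
    indicating whether the share exceeds the discussion threshold.
    """
    total = len(answers)
    if total == 0:
        return {"counts": {}, "share_incorrect": None, "discuss": False}
    counts = Counter(answers)
    share_incorrect = 1 - counts[correct_option] / total
    return {
        "counts": dict(counts),
        "share_incorrect": share_incorrect,
        "discuss": share_incorrect > discuss_threshold,
    }


# Hypothetical class of 20 students; option "A" is the correct answer.
example_answers = ["A"] * 14 + ["B"] * 4 + ["C"] * 2
summary = summarize_question(example_answers, correct_option="A")
print(summary["counts"], f"{summary['share_incorrect']:.0%}", summary["discuss"])
```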

E-lectures

We used the free open source software Open Broadcaster Software (OBS) Studio (Jim, 2018) to record the lectures, i.e., both the slides and the lecturer input. The e-lectures were uploaded within a few days to the course website, where the students were able to view them (Fig. 3).

Mobile VR

In the course of the semester, we used four mobile VR modules that the students could experience during the second month of lectures using their smartphones and a cardboard headset provided by the school. Each module required some simple interaction: students selected an option by turning their head until the purple circle marking the center of vision rested on the preferred option. The first scenario allowed students to experience the additivity in color perception when mixing light of different wavelengths.

The second and third scenario were about depth and distance perception (Fig. 4). The fourth module was a 360-degree video showing a job interview.

Procedure

Towards the end of the semester, after 3 months of using all tools, the students were asked to fill out the TAM questionnaire online.

Analyses

We conducted analyses of variance (ANOVAs) and post hoc tests using Jamovi version 1.2.22 (the jamovi project, 2020). Alpha was set at 0.05 and we applied the Holm-Bonferroni method to account for multiple comparisons (Holm, 1979). We report the ANOVA effect sizes using partial eta-squared (ηp²), where values of 0.01, 0.06, and 0.14 correspond to small, medium, and large effects, respectively (Cohen, 1988, p. 368). The Huynh–Feldt correction was applied to the p values of the ANOVAs with more than two within-factor levels to address any violation of the sphericity assumption. We used partial least squares (PLS) modeling to calculate the influence of PU and PEOU on the BI at the end of the semester to ensure that the TAM was applicable to our setting and technologies. We used Smart PLS version 3.2.8, which can test the structural models while minimizing the residual variance of the entire model’s dependent variables (Hair Jr, Hult, Ringle, & Sarstedt, 2016).
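The Holm-Bonferroni step-down procedure mentioned above can be illustrated as follows. This is a minimal sketch of the general method (Holm, 1979) and not the Jamovi implementation; the p values in the example are hypothetical and are not results from this study.

```python
def holm_bonferroni(p_values, alpha=0.05):
    """Return one boolean per test: True where the null hypothesis is rejected.

    The p values are visited from smallest to largest. The k-th smallest
    (k = 0, 1, ...) is compared with alpha / (m - k); testing stops at the
    first p value that exceeds its threshold.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break  # all remaining (larger) p values are retained as well
    return reject


# Hypothetical p values for the six pairwise comparisons among four tools.
example_p = [0.001, 0.049, 0.020, 0.0004, 0.30, 0.012]
decisions = holm_bonferroni(example_p)
print(decisions)
```

In this example, the comparison with p = 0.020 is not rejected even though it is below 0.05, because at that step the threshold has only relaxed to 0.05 / 3.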

Results

Analyses of variance (ANOVA)

Figure 5 shows the PU for the CC, CRS, e-lectures, and VR at the beginning of the semester (t1) and after three months of use (t2). All digital learning technologies were rated above 4 at both measurement times, except VR, which received lower ratings at the second measurement time.

We conducted an ANOVA with the tools (CC, CRS, e-lectures, VR) as the within-subjects factor and perceived usefulness as the dependent variable. This demonstrated a main effect of the tool with F(2.89, 271.73) = 113, p < 0.001, and ηp² = 0.545. Post hoc pairwise comparisons showed significant differences between all digital tools (ps < 0.001).

Figure 6 shows the PEOU for the CC, CRS, e-lectures, and VR at the beginning of the semester and after three months of use.

An ANOVA with the tools (CC, CRS, e-lectures, VR) as within factor measuring PEOU revealed a main effect with F(2.43, 228.48) = 39.6, p < 0.001, and ηp² = 0.296. Post hoc comparisons at t1 showed that VR was rated significantly worse than the other tools (all ps < 0.001) and e-lectures were rated significantly worse than CRS (p = 0.049), while the remaining tool differences were not significant.

Figure 7 shows the BI for the CC, CRS, e-lectures, and VR after three months of usage.

An ANOVA with the tools (CC, CRS, e-lectures, and VR) as within factor measuring BI revealed a main effect with F(2.68, 251.63) = 81.7, p < 0.001 and ηp² = 0.465 and the tool with F(3, 282) = 78.67, p < 0.001, and ηp² = 0.456. These main effects were moderated. Post hoc pairwise comparisons showed that all tools were rated significantly differently (all ps < 0.01).
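The reported effect sizes can be cross-checked against the F statistics, because partial eta-squared follows directly from F and its degrees of freedom: ηp² = (F · df_effect) / (F · df_effect + df_error). The sketch below applies this identity to the PEOU and BI statistics reported above; the function name is an assumption introduced for illustration.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta-squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error) as reported in the text.
reported = {
    "PEOU, tool": (39.6, 2.43, 228.48),
    "BI, first effect": (81.7, 2.68, 251.63),
    "BI, tool": (78.67, 3, 282),
}

for label, (f_value, df_effect, df_error) in reported.items():
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: partial eta-squared = {eta:.3f}")
```

The recomputed values agree with the reported 0.296, 0.465, and 0.456 to three decimals, all well above the 0.14 benchmark for a large effect.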

Partial least squares analyses (PLSA)

We modeled the TAM for each tool to ensure that the TAM was a good fit for our technologies and setting. The PLS-SEM modeling for CRS is shown in Fig. 8.

Measurement models. The outer standardized loadings of the measurement models for each tool’s technology acceptance are shown in Table 1. Loadings (λ) lower than 0.7 are shown in italics, and we did not consider them in further analysis, leaving at least four manifest variables to form each latent factor.

We calculated the internal consistency of the measurement scales using Cronbach's alpha, all values of which were above 0.80 and considered good (Nunnally, 1978) (Table 2), and Dillon-Goldstein rho (rho_A), which were all above 0.90, indicating very good internal consistency (Chin, 1998). The composite reliability values were also very good, all above 0.90 (Brunner & Suss, 2005) (Table 2). The average variance extracted (AVE) was above 0.60, indicating acceptable levels of validity (Chin, 1998) (Table 2).
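For readers unfamiliar with these indices, the sketch below shows the textbook formulas for Cronbach's alpha (from item scores) and for composite reliability and average variance extracted (from standardized loadings). It is a sketch under stated assumptions, not the Smart PLS implementation, and the loadings used in the example are hypothetical rather than values from Table 1.

```python
from statistics import variance


def cronbach_alpha(rows):
    """rows: one list of item scores per respondent, items in the same order."""
    k = len(rows[0])
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def composite_reliability(loadings):
    """(sum of loadings)^2 divided by itself plus the summed error variances."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - loading ** 2 for loading in loadings)
    return squared_sum / (squared_sum + error_variance)


def average_variance_extracted(loadings):
    """Mean of the squared standardized loadings."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)


# Hypothetical standardized loadings for one five-item factor.
example_loadings = [0.85, 0.80, 0.90, 0.75, 0.88]
cr = composite_reliability(example_loadings)
ave = average_variance_extracted(example_loadings)
print(f"composite reliability = {cr:.3f}, AVE = {ave:.3f}")
```

With loadings of this size, both indices clear the thresholds cited in the text (0.90 for composite reliability and 0.60 for the AVE).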

To determine the discriminant validity, we applied the heterotrait-monotrait (HTMT) approach (Hair, Hult, Ringle, & Sarstedt, 2016). The results are shown in Table 3. All HTMT values were below 0.90, which supports the discriminant validity between the two reflective constructs, except for VR at the end of the semester between PU and BI, where the value was 0.912.
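The HTMT ratio compares the average correlation between items of different constructs with the average correlation among items of the same construct. The following is a minimal sketch of that ratio computed from an item correlation matrix; the matrix and the item grouping are hypothetical, and software implementations may differ in details such as the use of absolute correlations.

```python
from itertools import combinations
from math import sqrt


def _mean(values):
    return sum(values) / len(values)


def htmt(corr, items_a, items_b):
    """Heterotrait-monotrait ratio for two constructs.

    corr: symmetric item correlation matrix as a list of lists.
    items_a, items_b: row/column indices of the items of each construct
    (each construct needs at least two items).
    """
    hetero = _mean([corr[i][j] for i in items_a for j in items_b])
    mono_a = _mean([corr[i][j] for i, j in combinations(items_a, 2)])
    mono_b = _mean([corr[i][j] for i, j in combinations(items_b, 2)])
    return hetero / sqrt(mono_a * mono_b)


# Hypothetical correlations: items 0-1 form one construct, items 2-3 another.
example_corr = [
    [1.0, 0.8, 0.6, 0.6],
    [0.8, 1.0, 0.6, 0.6],
    [0.6, 0.6, 1.0, 0.7],
    [0.6, 0.6, 0.7, 1.0],
]
ratio = htmt(example_corr, items_a=[0, 1], items_b=[2, 3])
print(f"HTMT = {ratio:.3f}, below 0.90: {ratio < 0.90}")
```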

Structural Models. Using the bootstrap method, the PLS is able to generate T-statistics for the significance testing of the inner and outer models (Chin, 1998). The bootstrap method takes many subsamples (in our case, 10,000) with replacement to compute the standard errors, which enable the PLS to estimate the T-values for significance testing. Table 4 shows the inner path loadings for all tools.
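The bootstrap logic described above can be sketched independently of PLS. The example below resamples respondents with replacement, recomputes a simple statistic (the Pearson correlation between two composite scores, standing in for a standardized path loading), and divides the original estimate by the standard deviation of the bootstrap replicates. The data are hypothetical, and the use of a correlation instead of a full PLS model is a simplifying assumption.

```python
import random
from statistics import mean, stdev


def pearson_r(xs, ys):
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def bootstrap_t(xs, ys, n_resamples=10_000, seed=1):
    """Return (estimate, bootstrap standard error, t value)."""
    rng = random.Random(seed)
    n = len(xs)
    estimate = pearson_r(xs, ys)
    replicates = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        bx = [xs[i] for i in idx]
        by = [ys[i] for i in idx]
        if len(set(bx)) < 2 or len(set(by)) < 2:
            continue  # skip degenerate resamples that have zero variance
        replicates.append(pearson_r(bx, by))
    standard_error = stdev(replicates)
    return estimate, standard_error, estimate / standard_error


# Hypothetical composite scores (7-point scale) for twelve respondents.
pu_scores = [5.2, 6.0, 4.4, 6.6, 3.8, 5.8, 6.2, 4.0, 5.0, 6.8, 4.6, 5.4]
bi_scores = [5.0, 6.2, 4.0, 6.4, 4.2, 5.4, 6.6, 3.6, 5.2, 6.6, 4.8, 5.0]
estimate, standard_error, t_value = bootstrap_t(pu_scores, bi_scores)
print(f"estimate = {estimate:.3f}, SE = {standard_error:.3f}, t = {t_value:.2f}")
```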

The structural model results for the R² of PU and BI are shown in Table 5. The R² should be larger than 0.10 with t > 1.64 for the model to have good explanatory power (Falk & Miller, 1992).

Discussion

We found that the CRS was rated the best, closely followed by e-lectures, then the CC and then mobile VR. The students evaluated all tools favorably after usage, except for mobile VR. These results are in line with our expectations based on the theory of course alignment with examinations (FitzPatrick et al., 2015). The CRS and e-lectures are well aligned, and students can use them to better prepare for end-of-semester exams. The popularity of both confirms previous findings (Gormley et al., 2009; Hunsu et al., 2016). CC and mobile VR do not have such a clear link to the exam, which could explain the comparatively lower acceptance of CC and mobile VR.

The comparatively poor performance of mobile VR in PU and BI after three months of usage was surprising and ran contrary to our expected results. Feedback from students indicated that the setup of the mobile VR sequences took too much time (technical issues for a few students delayed the entire class), which could have negatively impacted their technology acceptance. A further issue might have been that, in comparison to commercially available VR products, our mobile VR modules were simple, which might have been underwhelming and affected the students’ ratings. This is consistent with other findings that high expectations can negatively influence the learning experience of VR (Rupp et al., 2016).

As is the case for most studies, our study has some limitations that could be addressed in future research. First, as mentioned above, the TAM is usually measured after three to twelve months of using the technology. We conducted our measurement after three months of use because the semester end was imminent. While this period is within the acceptable range, further research could investigate whether different results are obtained if technology acceptance is measured after longer usage, e.g., after 12 months. Second, PLS-SEM has become a common tool to calculate structural models because it poses less stringent requirements on the number of manifest variables and the data distribution than covariance-based structural equation modeling (CB-SEM) approaches (Hair et al., 2016). Further research should try to replicate these findings using covariance-based modeling. Third, further research could add further factors to the TAM to understand which specific factors drive the high and low levels of PU and PEOU and their effect on BI.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- ANOVA: Analysis of variance
- BI: Behavioral intention
- CC: Classroom chat
- CRS: Classroom response system
- PEOU: Perceived ease of use
- PLS: Partial least squares
- PU: Perceived usefulness
- R²: Explained variance
- TAM: Technology acceptance model
- UTAUT: Unified Theory of Acceptance and Use of Technology
- VR: Virtual reality

References

Aagard, H., Bowen, K., & Olesova, L. (2010). Hotseat: Opening the backchannel in large lectures. Educause Quarterly, 33(3), 2.

Abdullah, F., & Ward, R. (2016). Developing a General Extended Technology Acceptance Model for E-Learning (GETAMEL) by analysing commonly used external factors. Computers in Human Behavior, 56, 238–256. https://doi.org/10.1016/j.chb.2015.11.036.

Abrahim, S., Mir, B. A., Suhara, H., Mohamed, F. A., & Sato, M. (2019). Structural equation modeling and confirmatory factor analysis of social media use and education. International Journal of Educational Technology in Higher Education, 16(1), 32.

Becker, S. A., Brown, M., Dahlstrom, E., Davis, A., DePaul, K., Diaz, V., & Pomerantz, J. (2018). NMC Horizon Report: 2018 Higher Education Edition. CO: EDUCAUSE. https://library.educause.edu/~/media/files/library/2018/8/2018horizonreport.pdf.

Behr, D., Braun, M., & Dorer, B. (2015). Messinstrumente in internationalen Studien. Retrieved from https://doi.org/10.15465/gesis-sg_006

Berquist, W., & Phillips, S. R. (1975). Classroom structures which encourage student participation. In: Gary H Quel (General Editor). A handbook for faculty development, pp. 118–121.

Biggs, J. (2003). Aligning teaching and assessing to course objectives. Teaching and Learning in Higher Education: New Trends and Innovations, 2(April), 13–17.

Bloom, B. S., Hastings, J. T., & Madaus, G. (Eds.). (1971). Handbook on formative and summative evaluation of student learning. New York: McGraw-Hill.

Brockfeld, T., Muller, B., & de Laffolie, J. (2018). Video versus live lecture courses: a comparative evaluation of lecture types and results. Medical Education Online, 23(1), 1555434. https://doi.org/10.1080/10872981.2018.1555434.

Brunner, M., & Suss, H. M. (2005). Analyzing the reliability of multidimensional measures: An example from intelligence research. Educational and Psychological Measurement, 65(2), 227–240. https://doi.org/10.1177/0013164404268669.

Burns, R. A. (1985, May 22-25). Information impact and factors affecting recall. Annual National Conference on Teaching Excellence and Conference of Administrators, Austin.

Byrne, B. M. (2010). Structural equation modeling with AMOS: basic concepts, applications, and programming (multivariate applications series) (pp. 396–7384). New York: Taylor & Francis Group.

Cain, J., Black, E. P., & Rohr, J. (2009). An audience response system strategy to improve student motivation, attention, and feedback. American Journal of Pharmaceutical Education, 73(2), 21. https://doi.org/10.5688/aj730221.

Caldwell, J. E. (2007). Clickers in the large classroom: current research and best-practice tips. CBE Life Science Education, 6(1), 9–20. https://doi.org/10.1187/cbe.06-12-0205.

Castillo-Manzano, J. I., Castro-Nuno, M., Lopez-Valpuesta, L., Sanz-Diaz, M. T., & Yniguez, R. (2016). Measuring the effect of ARS on academic performance: A global meta-analysis. Computers & Education, 96, 109–121. https://doi.org/10.1016/j.compedu.2016.02.007.

Cheung, R., & Vogel, D. (2013). Predicting user acceptance of collaborative technologies: An extension of the technology acceptance model for e-learning. Computers & Education, 63, 160–175. https://doi.org/10.1016/j.compedu.2012.12.003.

Chin, W. (1998). The partial least squares approach to structural equation modeling. Modern Methods for Business Research, 295(2), 295–336.

Cochrane, T. (2016). Mobile VR in education: From the fringe to the mainstream. International Journal of Mobile and Blended Learning, 8(4), 44–60. https://doi.org/10.4018/Ijmbl.2016100104.

Cohen, J. (1992). A power primer. Psychological Bulletin, 112(1), 155.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Hillsdale: Lawrence Erlbaum Associates.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340. https://doi.org/10.2307/249008.

Davis, F. D., & Venkatesh, V. (2004). Toward preprototype user acceptance testing of new information systems: Implications for software project management. IEEE Transactions on Engineering Management, 51(1), 31–46. https://doi.org/10.1109/Tem.2003.822468.

Demetriadis, S., & Pombortsis, A. (2007). e-Lectures for flexible learning: a study on their learning efficiency. Educational Technology & Society, 10(2), 147–157.

Dumpit, D. Z., & Fernandez, C. J. (2017). Analysis of the use of social media in Higher Education Institutions (HEIs) using the Technology Acceptance Model. International Journal of Educational Technology in Higher Education, 14(1), 5.

Ebbinghaus, H. (2013). Memory: a contribution to experimental psychology. Annals of Neuroscience, 20(4), 155–156. https://doi.org/10.5214/ans.0972.7531.200408.

Estriegana, R., Medina-Merodio, J. A., & Barchino, R. (2019). Student acceptance of virtual laboratory and practical work: An extension of the technology acceptance model. Computers & Education, 135, 1–14. https://doi.org/10.1016/j.compedu.2019.02.010.

Falk, R. F., & Miller, N. B. (1992). A primer for soft modeling. Akron: University of Akron Press.

Fishbein, M. & Ajzen, I. (1975). Belief, Attitude, Intention and Behavior: An Introduction to Theory and Research. Reading. Addison-Wesley Publishing Co.

FitzPatrick, B., Hawboldt, J., Doyle, D., & Genge, T. (2015). Alignment of learning objectives and assessments in therapeutics courses to foster higher-order thinking. American Journal of Pharmaceutical Education, 79(1), 10. https://doi.org/10.5688/ajpe79110.

Freina, L., & Ott, M. (2015). A literature review on immersive virtual reality in education: state of the art and perspectives. Paper presented at the International Scientific Conference eLearning and Software for Education.

Gefen, D., & Straub, D. W. (2000). The relative importance of perceived ease of use in IS adoption: A study of e-commerce adoption. Journal of the Association for Information Systems, 1(1), 8.

Gormley, G. J., Collins, K., Boohan, M., Bickle, I. C., & Stevenson, M. (2009). Is there a place for e-learning in clinical skills? A survey of undergraduate medical students’ experiences and attitudes. Medical Teacher, 31(1), e6–e12.

Graham, C. R., Tripp, T. R., Seawright, L., & Joeckel, G. (2007). Empowering or compelling reluctant participators using audience response systems. Active Learning in Higher Education, 8(3), 233–258.

Granić, A., & Marangunić, N. (2019). Technology acceptance model in educational context: A systematic literature review. British Journal of Educational Technology, 50(5), 2572–2593.

Hair, J. F., Hult, G. T. M., Ringle, C., & Sarstedt, M. (2016). A primer on partial least squares structural equation modeling (PLS-SEM). Thousand Oaks: Sage Publications.

Hawkins, D. G. (1995). Virtual reality and passive simulators: the future of fun. In F. Biocca, & M. R. Levy (Eds.), Communication in the age of virtual reality (pp. 159–189). Lawrence Erlbaum.

Hunsu, N. J., Adesope, O., & Bayly, D. J. (2016). A meta-analysis of the effects of audience response systems (clicker-based technologies) on cognition and affect. Computers & Education, 94, 102–119. https://doi.org/10.1016/j.compedu.2015.11.013.

Jadin, T., Gruber, A., & Batinic, B. (2009). Learning with e-lectures: The meaning of learning strategies. Educational Technology & Society, 12(3), 282–288.

Jensen, L., & Konradsen, F. (2018). A review of the use of virtual reality head-mounted displays in education and training. Education and Information Technologies, 23(4), 1515–1529. https://doi.org/10.1007/s10639-017-9676-0.

Jim. (2018). Open Broadcaster Software Studio. Retrieved from https://obsproject.com/

King, W. R., & He, J. (2006). A meta-analysis of the technology acceptance model. Information & Management, 43(6), 740–755. https://doi.org/10.1016/j.im.2006.05.003.

Kurt, Ö. E., & Tingöy, Ö. (2017). The acceptance and use of a virtual learning environment in higher education: an empirical study in Turkey, and the UK. International Journal of Educational Technology in Higher Education, 14(1), 26.

Likert, R. (1932). A technique for the measurement of attitudes. Archives of Psychology, 22(140).

Liu, T., & Kender, J. R. (2004). Lecture videos for e-learning: Current research and challenges. Paper presented at the IEEE Sixth International Symposium on Multimedia Software Engineering.

Merchant, Z., Goetz, E. T., Cifuentes, L., Keeney-Kennicutt, W., & Davis, T. J. (2014). Effectiveness of virtual reality-based instruction on students’ learning outcomes in K-12 and higher education: A meta-analysis. Computers & Education, 70, 29–40. https://doi.org/10.1016/j.compedu.2013.07.033.

Mikropoulos, T. A., & Natsis, A. (2011). Educational virtual environments: A ten-year review of empirical research (1999–2009). Computers & Education, 56(3), 769–780.

Moro, C., Stromberga, Z., & Stirling, A. (2017). Virtualisation devices for student learning: Comparison between desktop-based (Oculus Rift) and mobile-based (Gear VR) virtual reality in medical and health science education. Australasian Journal of Educational Technology, 33(6), 1–10. https://doi.org/10.14742/ajet.3840.

Nunnally, J. (1978). Psychometric theory. New York: McGraw-Hill.

Papamitsiou, Z., & Economides, A. A. (2015). Temporal learning analytics visualizations for increasing awareness during assessment. International Journal of Educational Technology in Higher Education, 12(3), 129–147.

Pechenkina, E., Laurence, D., Oates, G., Eldridge, D., & Hunter, D. (2017). Using a gamified mobile app to increase student engagement, retention and academic achievement. International Journal of Educational Technology in Higher Education, 14(1), 1–12.

The jamovi project. (2020). jamovi. https://www.jamovi.org

Radianti, J., Majchrzak, T. A., Fromm, J., & Wohlgenannt, I. (2020). A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Computers & Education, 147, 103778.

Rafique, H., Anwer, F., Shamim, A., Minaei-Bidgoli, B., Qureshi, M. A., & Shamshirband, S. (2018). Factors affecting acceptance of mobile library applications: structural equation model. Libri, 68(2), 99–112. https://doi.org/10.1515/libri-2017-0041.

Rafique, H., Almagrabi, A. O., Shamim, A., Anwar, F., & Bashir, A. K. (2019). Investigating the acceptance of mobile library applications with an extended technology acceptance model (TAM). Computers & Education, 145, 103732. https://doi.org/10.1016/j.compedu.2019.103732.

Rahman, R. A., Ahmad, S., & Hashim, U. R. (2018). The effectiveness of gamification technique for higher education students engagement in polytechnic Muadzam Shah Pahang, Malaysia. International Journal of Educational Technology in Higher Education, 15(1), 41.

Richards, D., & Taylor, M. (2015). A Comparison of learning gains when using a 2D simulation tool versus a 3D virtual world: An experiment to find the right representation involving the Marginal Value Theorem. Computers & Education, 86, 157–171. https://doi.org/10.1016/j.compedu.2015.03.009.

Rupp, M. A., Kozachuk, J., Michaelis, J. R., Odette, K. L., Smither, J. A., & McConnell, D. S. (2016). The effects of immersiveness and future VR expectations on subjective-experiences during an educational 360 video. Paper presented at the Proceedings of the Human Factors and Ergonomics Society Annual Meeting.

Saadé, R., & Bahli, B. (2005). The impact of cognitive absorption on perceived usefulness and perceived ease of use in on-line learning: an extension of the technology acceptance model. Information & Management, 42(2), 317–327.

Scherer, R., Siddiq, F., & Tondeur, J. (2019). The technology acceptance model (TAM): A meta-analytic structural equation modeling approach to explaining teachers’ adoption of digital technology in education. Computers & Education, 128, 13–35.

Schneider, M., & Preckel, F. (2017). Variables associated with achievement in higher education: A systematic review of meta-analyses. Psychological Bulletin, 143(6), 565–600. https://doi.org/10.1037/bul0000098.

Spickard, A., III, Alrajeh, N., Cordray, D., & Gigante, J. (2002). Learning about screening using an online or live lecture: does it matter? Journal of General Internal Medicine, 17(7), 540–545. https://doi.org/10.1046/j.1525-1497.2002.10731.x.

Stieger, S., & Reips, U.-D. (2010). What are participants doing while filling in an online questionnaire: A paradata collection tool and an empirical study. Computers in Human Behavior, 26(6), 1488–1495.

Stowell, J. R., Oldham, T., & Bennett, D. (2010). Using student response systems (“clickers”) to combat conformity and shyness. Teaching of Psychology, 37(2), 135–140.

Sumak, B., Hericko, M., & Pusnik, M. (2011). A meta-analysis of e-learning technology acceptance: The role of user types and e-learning technology types. Computers in Human Behavior, 27(6), 2067–2077. https://doi.org/10.1016/j.chb.2011.08.005.

Tamilmani, K., Rana, N. P., Prakasam, N., & Dwivedi, Y. K. (2019). The battle of Brain vs. Heart: A literature review and meta-analysis of “hedonic motivation” use in UTAUT2. International Journal of Information Management, 46, 222–235.

Terzis, V., & Economides, A. A. (2011). The acceptance and use of computer based assessment. Computers & Education, 56(4), 1032–1044.

Tozogul, D., Tozogul, M. D., Gurses, A., & Dogar, C. (2004). The students’ perceptions: Essay versus multiple-choice type exams. Journal of Baltic Science Education, 2(6), 52–59.

Venkatesh, V., & Bala, H. (2008). Technology acceptance model 3 and a research agenda on interventions. Decision Sciences, 39(2), 273–315. https://doi.org/10.1111/j.1540-5915.2008.00192.x.

Venkatesh, V., & Davis, F. D. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2), 186–204. https://doi.org/10.1287/mnsc.46.2.186.11926.

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27, 425–478.

Venkatesh, V., Thong, J. Y., & Xu, X. (2012). Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Quarterly, 36, 157–178.

Webb, N. L. (1997). Criteria for alignment of expectations and assessments in mathematics and science education. Council of Chief State School Officers and National Institute for Science Education Research Monograph No. 6. Madison, WI: University of Wisconsin–Madison, Wisconsin Center for Education Research.

Yardi, S. (2008). Whispers in the classroom. In T. McPherson (Ed.), Digital Youth, Innovation, and the Unexpected (pp. 143–164). The MIT Press.

Acknowledgements

We thank Dorothee Tautz, Stefan Loosli, Nina Wyssenbach, Thomas Wyssenbach, and Melina Zeballos for their help with the data collection.

Funding

This research was fully sponsored by the University of Applied Sciences and Arts Northwestern Switzerland.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

The items for PU, PEOU, and BI employed in this study. [Name of Tools] stands for each of the four digital learning technologies (CRS, CC, e-lectures, and VR). The English version of each item is given in parentheses.

| Factor | Item |
|---|---|
| PU | Der Einsatz von [Name of Tools] führt zu einer Verbesserung meiner Lernleistungen. (Using [name of tool] would help me learn.) |
| PU | Ich halte [Name of Tools] für nützlich. (Using [name of tool] would be useful.) |
| PU | [Name of Tools] erleichtern das Lernen und Verstehen der Modulinhalte. ([Name of tool] helps me learn and understand the course content.) |
| PU | Mit dem Einsatz von [Name of Tools] würde ich die Modulinhalte schneller lernen. (Using [name of tool] would help me learn the course contents faster.) |
| PU | Ich finde [Name of Tools] nützlich für mein Studium. (I find [name of tool] useful for my studies.) |
| PEOU | Die Bedienung von [Name of Tools] zu erlernen ist einfach für mich. (Learning to use [name of tool] would be easy for me.) |
| PEOU | [Name of Tools] sind einfach zu benutzen. ([Name of tool] is easy to use.) |
| PEOU | Mit [Name of Tools] zu interagieren braucht nicht viel mentale Anstrengung. (Interacting with [name of tool] does not require much mental effort.) |
| PEOU | Insgesamt denke ich, dass es einfach ist [Name of Tools] zu benutzen. (All in all, I think it is easy to use [name of tool].) |
| PEOU | Mit [Name of Tools] zu interagieren ist klar und verständlich. (Interacting with [name of tool] is clear and understandable.) |
| BI | Wenn sie verfügbar sind, plane ich [Name of Tools] häufig für mein Studium einzusetzen. (Assuming I had access to [name of tool], I would use it often for my studies.) |
| BI | Wenn [Name of Tools] verfügbar sind, beabsichtige ich sie während dem Semester häufig zu benutzen. (Assuming I had access to [name of tool], I would intend to use it often during the semester.) |
| BI | Wenn [Name of Tools] verfügbar sind, werde ich sie versuchen häufig einzusetzen. (Assuming I had access to [name of tool], I would try to use it often.) |
| BI | Angenommen ich hätte Zugang zu [Name of Tools], würde ich sie benutzen. (Given I had access to [name of tool], I would intend to use it.) |
| BI | Wenn ich Zugang zu [Name of Tools] hätte, gehe ich davon aus, dass ich es benutzen würde. (Given that I had access to [name of tool], I predict that I would use it.) |

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sprenger, D.A., Schwaninger, A. Technology acceptance of four digital learning technologies (classroom response system, classroom chat, e-lectures, and mobile virtual reality) after three months’ usage. Int J Educ Technol High Educ 18, 8 (2021). https://doi.org/10.1186/s41239-021-00243-4

DOI: https://doi.org/10.1186/s41239-021-00243-4