Abstract

The study of digital competence remains an issue of interest for both the scientific community and the supranational political agenda. This study uses the Delphi method to validate the design of a questionnaire to determine the perceived importance of digital competence in higher education. The questionnaire was constructed from different framework documents in digital competence standards (NETS, ACRL, UNESCO). The triangulation of non-parametric techniques made it possible to consolidate the results obtained through the Delphi panel, the suitability of which was highlighted through the expert competence index (K). The resulting questionnaire emerges as a good tool for undertaking future national and international studies on digital competence in higher education.

Introduction

Information and communication technologies (ICTs) have brought about major changes in the way learning is approached. This in turn has led digital competence to be considered as a means of achieving a degree of literacy suited to present-day society’s needs. Thus, such digital competence has come to form part of the political agenda (European Commission, 2010; UN, 2010) and is currently the focus of attention in numerous and important general studies (Greene, Yu, & Copeland, 2014; Ilomäki, Paavola, Lakkala, & Kantosalo, 2014; Liyanagunawardena, Adams, Rassool, & Williams, 2014; Mohammadyari & Singh, 2015; Ng, 2012; Pangrazio, 2014). The literature on digital competences in higher education is not extensive, though. The task carried out by ISTE (International Society for Technology in Education) through the NETS (National Education Technology Standards) program in the general context deserves to be highlighted. Furthermore, research initiatives have been undertaken for the purpose of analyzing the implementation of digital competences as well as their implications for teachers (Niederhauser, Lindstrom, & Strobel, 2007) or for the training of future teachers in such ICT standards (Klein & Weaver, 2010). Likewise, Jeffs and Banister (2006) examined how the NETS program influenced the professional development of undergraduate lecturers, and Ching (2009) studied the consequences of putting into practice NETS-based ICT competences in the educational context of the Philippines.

Voithofer (2005) analyzed the consequences that ‘service-learning’ had in relation to the short-term and long-term effects caused by ICT integration into classrooms characterized by their cultural diversity on the acquisition of technical skills and reflexive knowledge.

Along similar lines, NETS standards have inspired other studies (Kadijevich & Haapasalo, 2008; Masood, 2010; Naci & Ferhan, 2009; Rong & Ling, 2008) and numerous proposals for standards and indicators of digital competence in an array of dimensions, including ILCS (Information Literacy Competency Standards for Higher Education; ACRL-Association of College and Research Libraries, 2000); SAILS (Standardized Assessments of Information Literacy Skills, SAILS, 2011); iSkills developed by the company Educational Testing Service; TRAILS, developed by the Kent State University Libraries; Information Skill Survey developed by the Council of Australian University Librarians (CAUL); the Australian and New Zealand Institute for Information Literacy (ANZIIL) standards; the French B2i framework of reference; the NETS-based INSA Colombian curriculum indications; or the European Commission’s DIGCOMP project (Ferrari, 2012), which involved 90 experts from universities and research centers. Finally, the following stand out in the specific Spanish context: the proposal for information literacy or ALFIN (Area, 2008); computer and information competences in degree studies (CRUE-TIC & REBIUN, 2009); and the IL-HUMASS project (Pinto, 2010).

In short, broad references exist to the involvement of different organizations (UN, 2010; Organisation for Economic Co-operation and Development, 2005; UNESCO, 2008) and professional associations (ISTE, ALA, ACRL, AASL) aimed at raising awareness of the fact that citizens increasingly need to acquire digital competences. This acquisition of cross-disciplinary competences is closely linked to the university education stage (Hernández, 2010) and gives graduates a greater chance of success in the scientific and professional fields where they will develop their professional activity (Area, 2010).

In the light of all the above, the aim of this Delphi study is to design and validate a questionnaire constructed on the basis of various digital competence standards through which the perceived importance of the acquisition of digital competences in higher education can be specifically assessed by a panel of experts.

Method

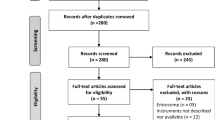

The study was performed through a two-round Delphi iterative consultation process with experts (Keeney, Hasson, & McKenna, 2006). This technique is widely utilized in the research context (Peeraer & Van Petegem, 2015; Yeh & Cheng, 2015) and its validity for questionnaire development has already been described (Blasco, López, & Mengual, 2010).

Participants

The panel was made up of 27 researchers from 15 Spanish universities. Our selection criterion was that they had to be senior university lecturers or professors with a recognized academic career – in the areas of both teaching and research – relating to the study topic. Intentional sampling (Landeta, 2002) was used for those panelists who complied with the aforementioned criteria, who were contacted by e-mail. The procedure specified in detail below began after their confirmation of participation had arrived. Furthermore, an analysis was carried out regarding their pertinence as experts through the calculation of the expert competence index (K) (López, 2008).

Procedure

The construction of the CDES (Cuestionario de Competencias Digitales en Educación Superior [Questionnaire about Digital Competences in Higher Education]; see Notes) took place in three distinct stages (Bravo & Arrieta, 2005) (Fig. 1).

A focus group formed by the members of the University of Alicante EDUTIC-ADEI research group was created during the first stage. The work undertaken in several sessions led to the first version of the questionnaire that would subsequently be submitted to the panelists. The LimeSurvey application was used both to contact panelists and to collect data. In successive rounds, our panelists received the complete questionnaire together with a scale on which they had to assess the degree of adequacy of the items and dimensions proposed (1 = not adequate; 2 = hardly adequate; 3 = adequate; 4 = quite adequate; 5 = totally adequate). The first round additionally included a scale that allowed the K coefficient to be determined. An analysis of answers was performed during the exploratory and final stages with the aim of modifying or adding items contained in this questionnaire.

Questionnaire

The CDES (Mengual, 2011) consists of 5 dimensions: (1) Technological Literacy [15 items]; (2) Information Access and Use [8 items]; (3) Communication and Collaboration [8 items]; (4) Digital Citizenship [8 items]; and (5) Creativity and Innovation [13 items].

The dimensions and items were carefully examined from various proposals for digital competence standards. Mengual (2011) justifies these dimensions and items in his paper for their connection with the different framework documents utilized to construct the CDES (NETS, AASL, CI2, ACRL, ILCS, SAILS and OCT-CTS).

Expert competence level analysis

The experts’ competence was analyzed by means of the K coefficient, an index recently used in numerous works (García & Fernández, 2008; López, Stuart, & Granado, 2011; Llorente, 2013) and calculated in accordance with the actual experts’ opinion of their respective levels of knowledge of the problem being solved.

These K analyses revealed that our panelists had proven competence and were suitable to form part of the panel. The value of K exceeded the average competence values in every case; 77 % (n = 21) of them obtained a high coefficient value, whereas 22 % (n = 6) showed a medium competence coefficient value.
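The paper does not spell out how K is computed. As a minimal sketch, the form commonly cited in the expert-method literature averages a knowledge coefficient Kc (a 0–10 self-rating scaled to 0–1) and an argumentation coefficient Ka (a 0–1 score derived from the sources that ground the expert's judgment). The formula, the cut-off values (0.8 and 0.5) and the function names below are assumptions for illustration, not details taken from this study.

```python
def competence_index(self_rating: int, ka: float) -> float:
    """Expert competence index K = (Kc + Ka) / 2 (assumed formulation).

    self_rating: the expert's self-assessed knowledge on a 0-10 scale.
    ka: argumentation coefficient, already expressed on a 0-1 scale.
    """
    if not 0 <= self_rating <= 10:
        raise ValueError("self_rating must lie between 0 and 10")
    if not 0.0 <= ka <= 1.0:
        raise ValueError("ka must lie between 0 and 1")
    kc = self_rating / 10  # knowledge coefficient on a 0-1 scale
    return (kc + ka) / 2


def competence_level(k: float) -> str:
    """Classify K using assumed cut-offs: high >= 0.8, medium >= 0.5."""
    if k >= 0.8:
        return "high"
    if k >= 0.5:
        return "medium"
    return "low"


if __name__ == "__main__":
    # Hypothetical panelist: self-rating of 9 and Ka of 0.9.
    k = competence_index(9, 0.9)
    print(round(k, 2), competence_level(k))
```

Under these assumed cut-offs, a panel in which every expert is classified as high or medium, as reported above, would contain no K value below 0.5.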

Consensus definition

This paper followed the methodology previously adopted in several studies (Lee, Altschuld, & Hung, 2009; Williams, 2003; Zawacki-Richter, 2009). Data collection during the first consultation round and the determination of the K coefficient led to the establishment of consensus criteria among panelists (See Table 1).

Ancillary techniques

Our research work implemented strategies contained in Delphi studies through which it was possible to triangulate the data obtained from the panel of experts: a) Central tendency and dispersion measures; b) Analysis of consensus level between rounds (IQR, RIR, VCV); c) Reliability analysis (Cronbach’s alpha); and d) Analysis of group stability in answers (median test and U Test).

Data analysis

Data analysis during R1 revealed that there was broad agreement on the five suggested dimensions. Seven items were proposed for a second round and two were discarded (Table 2).

After the second round (R2), a specification was made of the definitive item selection criteria through the interpretation of a contingency table with the consensus results (Table 2). The highly significant items responded to a unanimous consensus criterion in both rounds; the significant ones to the criteria R1 = N/A and R2 = N/A; and the non-significant items were discarded in each of the rounds.

Factor 1 included 11 of the 15 initially proposed items; the structure of the remaining factors was unaltered.

Analysis of consensus level and group stability in answers

The degree of proximity and stability in the answers obtained during the rounds was assessed through the interquartile range (IQR) and the variation in the coefficient of variation (VCV). The analysis of the consensus reached between panelists’ opinions revealed an acceptable degree of proximity and stability (IQR < .5) (Ortega, 2008).

Group stability is understood to exist if the variation in the relative interquartile range (VRIR) between rounds is lower than .30. Similarly, consensus is understood to exist if the variation in the coefficient of variation (VCV) is below 40 % in most items. Figure 2 shows that the VRIR between rounds is lower than .30 in each and every item, thus describing group stability in answers. The consensus between panelists is in turn illustrated by the VCV data (Fig. 3).
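The following sketch shows one way these between-round indicators could be computed for a single item. It assumes that the relative interquartile range is the IQR divided by the median, that the coefficient of variation is the sample standard deviation divided by the mean, and that "variation" means the absolute difference between rounds; the paper does not state these definitions, and the scores are invented for illustration.

```python
from statistics import mean, median, quantiles, stdev


def iqr(scores):
    """Interquartile range (Q3 - Q1) using inclusive quartiles."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    return q3 - q1


def relative_iqr(scores):
    """Relative interquartile range: IQR divided by the median (assumed)."""
    return iqr(scores) / median(scores)


def coefficient_of_variation(scores):
    """Sample standard deviation divided by the mean."""
    return stdev(scores) / mean(scores)


def between_round_variation(round1, round2):
    """Absolute change in RIR and CV between two rounds for one item."""
    return {
        "vrir": abs(relative_iqr(round2) - relative_iqr(round1)),
        "vcv": abs(coefficient_of_variation(round2)
                   - coefficient_of_variation(round1)),
    }


if __name__ == "__main__":
    # Hypothetical 1-5 adequacy ratings from eight panelists for one item.
    r1 = [4, 4, 5, 5, 5, 3, 4, 5]
    r2 = [4, 5, 5, 5, 5, 4, 4, 5]
    result = between_round_variation(r1, r2)
    # Thresholds quoted in the text: VRIR < .30 and VCV < 40 %.
    print(result, result["vrir"] < 0.30, result["vcv"] < 0.40)
```

Different quartile conventions give slightly different IQR values on small panels, so the `method="inclusive"` choice is itself an assumption worth checking against the software used in the original analysis.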

The Delphi process is considered complete when consensus and stability levels have been defined, since the application of another round would not provide significant variations in results.

Multivariate analysis

The previous analyses were supported by non-parametric means difference tests. The results obtained with Mann-Whitney’s U test did not reveal statistically significant differences in the scores corresponding to CDES factors between consultation rounds at a p ≤ .05 level [Factor 1 (z = −.438, p = .661), Factor 2 (z = −.052, p = .959), Factor 3 (z = −.181, p = .856), Factor 4 (z = −.206, p = .837), Factor 5 (z = −.489, p = .625)]. Nor were significant differences described in the item scores at a p ≤ .05 level.
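As an illustration of how a z statistic and two-sided p value of this kind can be obtained, the sketch below implements the Mann-Whitney U test with the normal approximation and a tie correction, using only the Python standard library. It omits the continuity correction, which is an assumption about the convention behind the reported values, and the example scores are invented rather than taken from the study.

```python
from collections import Counter
from math import erfc, sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, z, two-sided p) using the normal approximation."""
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)  # smaller U, so z is never positive
    n = n1 + n2
    tie_term = sum(t ** 3 - t for t in Counter(combined).values())
    sigma = sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    if sigma == 0:  # every observation identical: no evidence of a shift
        return u, 0.0, 1.0
    z = (u - n1 * n2 / 2) / sigma
    return u, z, erfc(abs(z) / sqrt(2))


if __name__ == "__main__":
    # Hypothetical factor scores from the same panel in two rounds.
    round1 = [4.2, 4.5, 3.9, 4.8, 4.1, 4.4]
    round2 = [4.3, 4.6, 4.0, 4.7, 4.2, 4.5]
    u, z, p = mann_whitney_u(round1, round2)
    print(u, round(z, 3), round(p, 3))
```

A p value above .05 from such a comparison would be read, as in the text, as no statistically significant difference between rounds.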

In parallel, the median test complemented the preceding results. The analysis of scores did not confirm the existence of differences between rounds, either in dimension scores [Factor 1 (χ² = 3.8000, p = .695), Factor 2 (χ² = 4.1250, p = 1.000), Factor 3 (χ² = 4.2500, p = .406), Factor 4 (χ² = 4.1250, p = 1.000), Factor 5 (χ² = 4.2308, p = .695)] or in item scores; p ≥ .05 values were obtained in all cases.

Median test values confirm those yielded by the U test, thus allowing us to state that panelists’ scores present stability. These analyses highlight the suitability of the technique used for item discrimination and of the statistics utilized for the consensus definition.

Questionnaire validity and reliability analysis

The resulting questionnaire was finally administered to a random sample of students enrolled in the Faculty of Education at the University of Alicante (n = 100) in the course of a pilot test, observing all ethical issues during the process (Mesía Maraví, 2007). Cronbach’s alpha test results were .962. The individual scores for each of the dimensions were above .8 [F1 = .86; F2 = .89; F3 = .89; F4 = .87; F5 = .92], resulting in high and suitable reliability indices.
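For readers who want to reproduce a reliability coefficient of this kind, the sketch below computes Cronbach's alpha from a respondents-by-items score matrix using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The data are invented for illustration and are not the pilot sample.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("at least two items are required")
    if any(len(row) != k for row in rows):
        raise ValueError("every respondent must answer every item")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*rows)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


if __name__ == "__main__":
    # Hypothetical answers from five students to four 1-5 Likert items.
    answers = [
        [4, 5, 4, 4],
        [3, 3, 2, 3],
        [5, 5, 5, 4],
        [2, 3, 2, 2],
        [4, 4, 5, 4],
    ]
    print(round(cronbach_alpha(answers), 3))
```

Applying the same function to the columns of a single dimension gives the per-dimension coefficients, and recomputing it with one column removed corresponds to the "alpha if item deleted" check.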

As for the item-total correlation analysis, its results described an optimum inter-item correlation range, and the deletion of several items did not result in an improvement in the reliability coefficient of either the dimensions or the questionnaire.

Discussion and conclusions

The CDES that we designed is based on a series of referents and models specified in the introduction to this paper. This does not mean that its importance should be minimized, since it constitutes one of the few existing models for the determination of generic digital competences within the higher education study framework. In this respect, our study complements other proposals made from different perspectives. By way of example, it is worth mentioning models that determine the importance of digital competences in higher education lecturers (Jeffs & Banister, 2006; Masood, 2010; Naci & Ferhan, 2009; Rong & Ling, 2008), to which it is necessary to add those studies describing the importance of standards related to digital competences in the training of higher education students (Alan & Pitt, 2010; Hong & Jung, 2010; Kadijevich & Haapasalo, 2008; Kaminski, Switzer, & Gloeckner, 2009; Li-Ping & Jill, 2009; Niederhauser, Lindstrom, & Strobel, 2007; Voithofer, 2005).

In relation to our model, and concerning the taxonomy of dimensions and indicators proposed, it can be said that emphasis is not placed on the importance of the teacher or student role, but rather on those aspects from which the teaching-learning model should be approached. That is why the questionnaire represents a good framework document that can serve as a referent to undertake future research initiatives, as well as to analyze the study of digital competence from this point of view, to examine its usage in other contexts, to establish connections between different groups or to subject the questionnaire to a review, adaptation or change process.

Criteria should be set to recognize the specific competences that teachers and students possess so that the teaching-learning models can be adjusted accordingly. Our approach in this regard coincides with that of Rong and Ling (2008), who state that the proposals for ICT competence and literacy models and elements based on standards and derived from consultation processes with experts or Delphi techniques provide the dimensions and competences that need to be included therein. The utilization of the Delphi technique during this process “has great impacts on improving information literacy competence and the willingness of applying information technology on teaching” (Rong & Ling, 2008, p.796).

The results of this study must be interpreted in accordance with the limitations that, in our opinion, it has faced. In this sense, it is worth saying that, even though the Delphi technique is very popular and widely accepted within the scientific community, it would be particularly interesting to undertake the validation of this work using other multivariate analysis modalities. This approach would make it possible to give validity to the questionnaire and to describe its suitability for use in diverse contexts. It is for this reason that our recommendations for future research works include the collection of a significant university student sample (both national and international) for the purpose of suggesting an evaluation of the questionnaire through a content validity index (CVI) and a factor analysis.

Our stance coincides with that of Tello and Aguaded (2009), according to whom it is necessary to utilize reliable and valid instruments in research processes. Our intention was thus to suggest a measurement instrument of proven reliability and validity on the perceived importance associated with the inclusion of digital competence within the university environment. This becomes a must when shaping the 21st-century citizen, and even more so in the light of the importance that technology is gradually acquiring within this context.

Notes

Versions of the CDES in Spanish and Italian available at http://www.edutic.ua.es/cdes.

References

ACRL-Association of College and Research Libraries (2000). Information Literacy Competency Standards for Higher Education. Retrieved from http://www.ala.org/ala/mgrps/divs/acrl/standards/standards.pdf

Alan, C., & Pitt, E. (2010). Students’ approaches to study, conceptions of learning and judgements about the value of networked technologies. Active Learning in Higher Education, 11(1), 55–65. doi:10.1177/1469787409355875

Area, M. (2008). Innovación pedagógica con TIC y el desarrollo de las competencias informacionales y digitales. Investigación en la Escuela, 64, 5–18.

Area, M. (2010). Why offer information and digital competency training in higher education? RUSC. Universities and Knowledge Society Journal, 7(2), 2–5. doi:10.7238/rusc.v7i2.976

Blasco, J. E., López, A., & Mengual, S. (2010). Validación mediante método Delphi de un cuestionario para conocer las experiencias e interés hacia las actividades acuáticas. AGORA, 12(1), 75–9.

Bravo, M. L., & Arrieta, J. J. (2005). El Método Delphi. Su implementación en una estrategia didáctica para la enseñanza de las demostraciones geométricas. Revista Iberoamericana de Educación, 35(3), 1–10.

Ching, G. (2009). Implications of an experimental information technology curriculum for elementary students. Computers and Education, 53(2), 419–428. doi:10.1016/j.compedu.2009.02.019

CRUE-TIC & REBIUN (2009). Competencias informáticas e informacionales en los estudios de grado. Retrieved from http://www.uv.es/websbd/formacio/ci2.pdf

European Commission. (2010). A Digital Agenda for Europe. Retrieved from http://eur-lex.europa.eu/LexUriServ/LexUriServ.do?uri=COM:2010:0245:FIN:EN:PDF

Ferrari, A. (2012). Digital Competence in Practice: An Analysis of Frameworks. Luxembourg: JRC Technical Reports. Publications Office of the European Union.

García, L., & Fernández, S. (2008). Procedimiento de aplicación del trabajo creativo en grupo de expertos. Energética, 29(2), 46–50.

Greene, J. A., Yu, S. B., & Copeland, D. Z. (2014). Measuring critical components of digital literacy and their relationships with learning. Computers and Education, 76, 55–69. doi:10.1016/j.compedu.2014.03.008

Hernández, C. J. (2010). A Plan for Information Competency Training via Virtual Classrooms: Analysis of an Experience Involving University Students. RUSC. Universities and Knowledge Society Journal (RUSC), 7(2), 50–62. doi:10.7238/rusc.v7i2.981

Hong, S., & Jung, I. (2010). The distance learner competencies: a three-phased empirical approach. Educational Technology Research and Development, 59(1), 21–42. doi:10.1007/s11423-010-9164-3

Ilomäki, L., Paavola, S., Lakkala, M., & Kantosalo, A. (2014). Digital competence – an emergent boundary concept for policy and educational research. Education and Information Technologies. Advance online publication. doi:10.1007/s10639-014-9346-4

Jeffs, T., & Banister, S. (2006). Enhancing Collaboration and Skill Acquisition Through the Use of Technology. Journal of Technology and Teacher Education, 14(2), 407–433.

Kadijevich, D., & Haapasalo, L. (2008). Factors that influence student teachers’ interest to achieve educational technology standards. Computers and Education, 50(1), 262–270. doi:10.1016/j.compedu.2006.05.005

Kaminski, K., Switzer, J., & Gloeckner, G. (2009). Workforce readiness: A study of university students’ fluency with information technology. Computers and Education, 53(2), 228–233. doi:10.1016/j.compedu.2009.01.017

Keeney, S., Hasson, F., & McKenna, H. (2006). Consulting the oracle: Ten lessons from using the Delphi technique in nursing research. Journal of Advanced Nursing, 53(2), 205–12. doi:10.1111/j.1365-2648.2006.03716.x

Klein, B. S., & Weaver, S. (2010). Using podcasting to address nature-deficit disorder. The Inclusion of Environmental Education in Science Teacher Education (pp. 311–321). doi:10.1007/978-90-481-9222-9_21

Landeta, J. (2002). El método Delphi: una técnica de previsión del futuro. Barcelona, Spain: Ariel Social.

Lee, Y.-F., Altschuld, J. W., & Hung, H.-L. (2009). Practices and challenges in educational program evaluation in the Asia-Pacific Region: Results of a Delphi study. Evaluation and Program Planning, 31(4), 368–375. doi:10.1016/j.evalprogplan.2008.08.003

Li-Ping, T., & Jill, M. (2009). Students’ perceptions of teaching technologies, application of technologies, and academic performance. Computers and Education, 53, 1241–1255. doi:10.1016/j.compedu.2009.06.007

Liyanagunawardena, T., Adams, A., Rassool, N., & Williams, S. (2014). Developing government policies for distance education: Lessons learnt from two Sri Lankan case studies. International Review of Education, 60(6), 821–839. doi:10.1007/s11159-014-9442-0

Llorente, M. (2013). Assessing Personal Learning Environments (PLEs). An expert evaluation. Journal of New Approaches in Educational Research, 2(1), 39–44. doi:10.7821/naer.2.1.39-44

López, A. (2008). La modelación de la habilidad diagnóstico patológico desde el enfoque histórico cultural para la asignatura Patología Veterinaria. Revista Pedagogía Universitaria, 13(5), 51–71.

López, C., Stuart, A., & Granado, A. (2011). Establecimiento de conceptos básicos para una Educación Física saludable a través del Método Experto. Revista Electrónica de Investigación Educativa, 13(2), 22–40.

Masood, M. (2010). An initial comparison of educational technology courses for training teachers at Malaysian universities: A comparative study. Turkish Online Journal of Educational Technology, 9(1), 23–27.

Mengual, S. (2011). La importancia percibida por el profesorado y el alumnado sobre la inclusión de la competencia digital en educación Superior (Doctoral Thesis). Alicante, Spain: Universitat d’Alacant.

Mesía Maraví, R. (2007). Contexto ético de la investigación social. Investigación Educativa, 11(19), 137–151. Retrieved from http://goo.gl/ayOGPU

Mohammadyari, S., & Singh, H. (2015). Understanding the effect of e-learning on individual performance: The role of digital literacy. Computers and Education, 82, 11–25. doi:10.1016/j.compedu.2014.10.025

Naci, A., & Ferhan, H. (2009). Educational Technology Standards Scale (ETSS): A study of reliability and validity for Turkish preservice teachers. Journal of Computing in Teacher Education, 25(4), 135–142.

Ng, W. (2012). Can we teach digital natives digital literacy? Computers and Education, 59(3), 1065–1078. doi:10.1016/j.compedu.2012.04.016

Niederhauser, D., Lindstrom, D., & Strobel, J. (2007). Evidence of the NETS*S in K-12 Classrooms: Implications for Teacher Education. Journal of Technology and Teacher Education, 15(4), 483–512.

Organisation for Economic Co-operation and Development (2005). The definition and selection of key competencies. Executive summary. The DeSeCo Project. Retrieved from http://www.oecd.org/dataoecd/47/61/35070367.pdf

Ortega, F. (2008). El método Delphi, prospectiva en Ciencias Sociales. Revista EAN, 64, 31–54.

Pangrazio, L. (2014). Reconceptualising critical digital literacy. Discourse: Studies in the Cultural Politics of Education. Advance online publication. doi:10.1080/01596306.2014.942836

Peeraer, J., & Van Petegem, P. (2015). Integration or transformation? looking in the future of information and communication technology in education in Vietnam. Evaluation and Program Planning, 48, 47–56. doi:10.1016/j.evalprogplan.2014.09.005

Pinto, M. (2010). Design of the IL-HUMASS survey on information literacy in higher education: a self-assessment approach. Journal of Information Science, 36(1), 86–103. doi:10.1177/0165551509351198

Rong, J., & Ling, W. (2008). Exploring the information literacy competence standards for elementary and high school teachers. Computers and Education, 50(3), 787–806. doi:10.1016/j.compedu.2006.08.011

Tello, J., & Aguaded, I. (2009). Desarrollo profesional docente ante los nuevos retos de las tecnologías de la información y la comunicación en los centros educativos. Pixel Bit, 34, 31–47. Retrieved from http://www.sav.us.es/pixelbit/pixelbit/articulos/n34/3.html

UN. (2010). 2010 Joint progress report of the council and the commission on the implementation of the education and training 2010 work programme. Official Journal of the European Union, C, 117. Retrieved from http://aei.pitt.edu/42901/

UNESCO (2008). Estándares en competencia en TIC para Docentes. Retrieved from http://cst.unesco-ci.org/sites/projects/cst/default.aspx

Voithofer, R. (2005). Integrating service-learning into technology training in teacher preparation: A study of an educational technology course for preservice teachers. Journal of Computing in Teacher Education, 21(3), 103–108.

Williams, P. E. (2003). Roles and competencies for distance education programs in higher education institutions. American Journal of Distance Education, 17(1), 45–57. doi:10.1207/S15389286AJDE1701_4

Yeh, D. Y., & Cheng, C. H. (2015). Recommendation system for popular tourist attractions in Taiwan using Delphi panel and repertory grid techniques. Tourism Management, 46, 164–176. doi:10.1016/j.tourman.2014.07.002

Zawacki-Richter, O. (2009). Research areas in distance education: a Delphi study. Int Rev Res Open Distance Learning, 10(3). Retrieved from http://www.irrodl.org/index.php/irrodl/article/view/674/1260

About the authors

Santiago Mengual-Andrés PhD and BA in Psychopedagogy. Lecturer in the Department of Comparative Education and History of Education, Faculty of Letters, at the University of Valencia. Executive editor of the Journal of New Approaches in Educational Research. His research interests focus on the analysis of national as well as international policies centered on ICT inclusion, digital competence and new technology-mediated education scenarios. He has participated and continues to participate as a lecturer in various master’s courses and postgraduate programs both in Spain and abroad.

ORCID ID: http://orcid.org/0000-0002-1588-9741

Rosabel Roig Vila holds a PhD in Pedagogy and is a senior lecturer in the Department of General and Specific Didactics at the University of Alicante. She is also head of the EDUTIC-ADEI research group and director of the master’s degree program in Education and ICTs at the University of Alicante. She is the editor of the Journal of New Approaches in Educational Research (http://www.naerjournal.ua.es), and her research revolves around ICTs and teaching-learning processes. She was the dean of the Faculty of Education at the University of Alicante.

ORCID ID: http://orcid.org/0000-0002-9731-430X

Josefa Blasco Mira PhD in Pedagogy. Senior lecturer in the Department of General and Specific Didactics at the University of Alicante. Member of the EDUTIC-ADEI research group. She was vice-dean of the Faculty of Education at the University of Alicante. Her main lines of research are qualitative analysis in educational research, educational research in physical activity and sports sciences, as well as motivation and sports practice.

ORCID ID: http://orcid.org/0000-0003-3671-055X

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Mengual-Andrés, S., Roig-Vila, R. & Mira, J.B. Delphi study for the design and validation of a questionnaire about digital competences in higher education. Int J Educ Technol High Educ 13, 12 (2016). https://doi.org/10.1186/s41239-016-0009-y

DOI: https://doi.org/10.1186/s41239-016-0009-y