Abstract

Background

In Italy, there is no framework of procedural skills that all medical students should be able to perform autonomously at graduation. This study aimed to identify (1) a set of essential procedural skills and (2) which of these skills could potentially be taught with simulation. A desirability score was calculated for each procedure to determine the most effective way to proceed with simulation curriculum development.

Methods

A web-based survey was conducted at the School of Medicine in Novara to assess the expected and self-perceived levels of competency for common medical procedures. Three groups were enrolled: (1) faculty, (2) junior doctors in their first years of practice, and (3) recently graduated medical students. We measured the importance of each procedural skill for independent practice as rated by teachers, the level of mastery self-perceived by learners (students and junior doctors), and the perceived suitability of simulation training for each technical skill. A desirability function was used to set priorities for future learning.

Results

The overall mean expected level of competency for the procedural skills was 7.9/9. The mean level of self-reported competency was 4.7/9 for junior doctors and 4.4/9 for recently graduated students. The highest priority skills according to the desirability function were urinary catheter placement, nasogastric tube insertion, and incision and drainage of superficial abscesses.

Conclusions

This study identifies the technical competencies that faculty consider important and assesses the self-perceived confidence of junior doctors and recent graduates in performing these skills. It also identifies the perceived utility of teaching these skills by simulation. The study prioritizes the skills that show a gap between expected and self-perceived competency and are also thought to be amenable to teaching by simulation. This allows immediate priorities to be set so that simulation curriculum development can proceed in the most effective manner. This methodology may be useful to researchers in other centers to prioritize simulation training.


Introduction

Theoretical knowledge and clinical skills are two equally important parts of medical education [1]. While many authorities agree on the importance of acquiring technical skills, few guidelines detail the particular competencies medical students ought to acquire prior to graduation. Some studies suggest that competencies among graduates may be highly variable, and that medical schools are not always effective in teaching important procedural skills [2, 3]. In Italy, there is no published list of competencies for basic technical skills. In the 32 points of the “Qualifying Educational Goals” chart published by the Ministry of Education, University and Research (MIUR) in 2007, non-technical skills are widely discussed and recommended, but technical skills are only vaguely addressed [4]. To the authors’ knowledge, only Vettore et al. have attempted to identify the technical skills that should be included in the medical school core curriculum [5].

The effectiveness and utility of simulation-based medical education (SBME) have been well documented [2]. Simulation training offers learners the opportunity to practice both technical and non-technical skills in a low-risk setting. Learners can learn from both successes and errors without exposing patients to risk [6,7,8,9,10]. As simulation technology and experience advance, the range of skills that can be simulated continues to widen. Simulation offers a previously unavailable opportunity for safe practice of psychomotor and technical skills at any level of expertise.

Although simulation may be a valuable tool for acquiring technical skills, medical schools may find it difficult to decide how to proceed in developing a simulation curriculum. Simulation can be costly in terms of staff, resources, simulation equipment, and the time learners spend away from their clinical duties. Most medical schools are unlikely to be able to provide simulation training for all clinical skills immediately. With no clear published guidelines on how to prioritize simulation training, it may be difficult to build an effective curriculum.

The objectives of this study were to (1) list the technical skill competencies that medical students should possess prior to graduation, (2) determine the perceptions of faculty and learners about the suitability of simulation training for technical skills, and (3) provide a prioritized list of these competencies for further curriculum development. We hope that curriculum designers will find these tools useful.

Methods

Study design and population

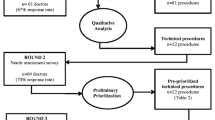

This was a prospective observational study using a web-based survey tool. Three distinct groups participated in the study:

1) Faculty at the School of Medicine at the Università del Piemonte Orientale;

2) Junior doctors who graduated from our medical school and had 1–2 years of clinical experience at the time of the study;

3) Medical students who had successfully graduated but had not yet started working.

The study included all faculty members involved in teaching skills in the 2014–15 academic year, all medical students graduating in the same academic year, and all junior doctors with 1 or 2 years of clinical experience. An e-mail invitation including a brief presentation of the study, a consent form, and a link to the online questionnaire was sent to each participant. Up to three reminder e-mails were sent to non-responders between September 2015 and January 2016. Each participant could answer the survey only once.

Participation was voluntary. Respondents were assured that their responses would remain confidential and received no financial incentives. As the study results were presented in aggregate with no identifiers, the study was deemed exempt from formal institutional approval by the local ethics committee (Comitato Etico Interaziendale, Novara, Italy; study number Prot. 639/CE).

Survey tool

The initial list of technical procedures was created through a brief review of the current national and international literature. The list was intended to be comprehensive and inclusive. The survey was administered using SurveyMonkey (SurveyMonkey, Palo Alto, California, USA). The faculty survey consisted of two main sections: the first rated the importance of each skill for independent practice, and the second rated the suitability of each skill for teaching by simulation. The survey for junior doctors and students also consisted of two sections: the first rated their self-perceived mastery of each skill, and the second rated the suitability of the skill for teaching by simulation. In all cases, participants used a semantic differential scale for importance from 1 to 9 (1 being the least important and 9 being the most important). All participants were also given the opportunity to add further skills that they felt should be included in the list. A detailed description of the survey instrument is provided in Table 1.

Definitions

The following terms are used and measured in the manuscript:

“Essentialness” estimates the level of faculty consensus on the importance of students being able to perform certain abilities autonomously and automatically at the end of medical school.

“Autonomy” expresses the current level of mastery of these technical skills immediately after graduation (medical students) and after at least 1 year of clinical experience (junior doctors).

“Utility” refers to the perceived suitability of simulation training for the given technical skills.

Data analysis

Data from SurveyMonkey were transcribed to a spreadsheet using Microsoft Excel (Version 2003, Microsoft Corporation, Redmond, WA, USA). Data analysis was performed using R: A Language and Environment for Statistical Computing (R Foundation for Statistical Computing, Vienna, Austria). Data are presented as mean and interquartile range (IQR).
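As a minimal illustration of the summary statistics described above, the Python sketch below computes the mean and IQR of a set of 1–9 ratings for one skill. The original analysis was performed in R; here we assume R's default quantile definition (type 7), which corresponds to `method="inclusive"` in Python's `statistics.quantiles`. The function name `summarize` and the example ratings are hypothetical, not study data.

```python
from statistics import mean, quantiles


def summarize(ratings: list[float]) -> dict[str, float]:
    """Return the mean, first/third quartiles and IQR of 1-9 ratings.

    method="inclusive" interpolates linearly between order statistics,
    which matches R's default quantile() definition (type 7).
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings to compute quartiles")
    q1, _median, q3 = quantiles(ratings, n=4, method="inclusive")
    return {"mean": mean(ratings), "q1": q1, "q3": q3, "iqr": q3 - q1}


# Hypothetical faculty ratings for a single skill (not study data)
example = summarize([6, 7, 7, 8, 8, 9, 9, 9])
print(example)
```

Other quartile definitions (for example `method="exclusive"`, Python's default) can give slightly different IQRs on small samples, so the method should match whatever the analysis software used.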

Desirability functions are well documented and frequently used in science and engineering when several responses must be optimized simultaneously [11]. These functions transform a multiple-response problem into a single-response problem [11]. In this study, the desirability function was designed to assign the highest priority to those abilities that (a) were considered very important by faculty (high score for essentialness), (b) were skills in which recent graduates and junior doctors felt they lacked confidence (low score for autonomy), and (c) were believed to be teachable by simulation by all three groups (high score for utility). Desirability functions are usually developed by standardizing the value of each response on a scale of 0 to 1 and then taking the geometric mean of the responses [11]. The final desirability function was the following:

For the faculty group:

For both the junior doctor group and the student group:

Finally, the overall desirability was calculated by combining the desirability scores from each of the three groups:

This function gives results ranging from 0 to 1, where 0 is the lowest priority and 1 is the highest priority.
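As a hedged illustration of how a desirability function of this kind can be computed, the Python sketch below implements one plausible form consistent with the description above: each 1–9 rating is linearly rescaled to [0, 1] (higher is better for essentialness and utility, lower is better for self-rated autonomy), each group's desirability is the geometric mean of its two components, and the overall desirability is the unweighted geometric mean of the three group scores. The linear (x − 1)/8 rescaling, the function names, and the example ratings are assumptions for illustration, not the authors' published formulas.

```python
from math import prod

SCALE_MIN, SCALE_MAX = 1.0, 9.0  # assumed bounds of the 1-9 rating scale


def higher_is_better(rating: float) -> float:
    """Rescale a rating to [0, 1]; 9 maps to 1 (essentialness, utility)."""
    return (rating - SCALE_MIN) / (SCALE_MAX - SCALE_MIN)


def lower_is_better(rating: float) -> float:
    """Rescale a rating to [0, 1]; 1 maps to 1 (self-rated autonomy)."""
    return (SCALE_MAX - rating) / (SCALE_MAX - SCALE_MIN)


def geometric_mean(values: list[float]) -> float:
    """Unweighted geometric mean; any zero component makes the result zero."""
    return prod(values) ** (1.0 / len(values))


def faculty_desirability(essentialness: float, utility: float) -> float:
    return geometric_mean([higher_is_better(essentialness), higher_is_better(utility)])


def learner_desirability(autonomy: float, utility: float) -> float:
    # Assumed to have the same form for junior doctors and for recent graduates
    return geometric_mean([lower_is_better(autonomy), higher_is_better(utility)])


def overall_desirability(d_faculty: float, d_junior: float, d_student: float) -> float:
    return geometric_mean([d_faculty, d_junior, d_student])


# Hypothetical mean ratings for one skill (illustrative, not study data)
d_f = faculty_desirability(essentialness=8.5, utility=8.0)
d_j = learner_desirability(autonomy=3.0, utility=7.5)
d_s = learner_desirability(autonomy=2.5, utility=7.5)
print(round(overall_desirability(d_f, d_j, d_s), 2))
```

Under these assumptions, a skill that every learner group already rates 9/9 for autonomy receives an overall desirability of 0, because the geometric mean is zero whenever any component is zero.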

Results

In total, 198 participants were invited to complete the survey: 43 faculty, 102 junior doctors, and 53 students. Overall, 132 agreed to participate (response rate 66.7%). The overall mean level of importance of each skill by group is presented in Table 2. Among the faculty, the skills rated most important (essentialness) were personal protective equipment (PPE) usage (9/9), venous puncture (8.9/9), intramuscular injection (9.9/9), and subcutaneous injection (8.9/9). Among junior doctors, ratings for self-perceived competency (autonomy) ranged from a high of 7.9/9 for PPE usage to a low of 1.1/9 for cricothyrotomy. Recent graduates rated the same skills highest (7.6/9 for PPE usage) and lowest (1.3/9 for cricothyrotomy) for autonomy. The overall mean autonomy rating across all competencies was 4.6/9 for junior doctors and 4.4/9 for recently graduated students.

In all three groups, most skills were rated high in utility (suitability for teaching by simulation). The overall mean utility rating across procedures was 7.4/9 for faculty, 7.1/9 for junior doctors, and 7.3/9 for students. Figure 1 gives an overview of the results and their trend; all data points lie in the right-hand quadrants, reflecting the high level of perceived utility. The lowest utility rating was 5.9/9, for spirometry, and the highest was 8.6/9, for cardiopulmonary resuscitation.

The highest calculated desirability overall was for urinary catheter placement (0.77), nasogastric tube insertion (0.75), and pleural tap (0.70).

Forty respondents (30%) listed additional procedures in the open-ended part of the survey (8 faculty, 16 students, and 16 junior doctors). A total of 76 suggestions were collected. After the exclusion of non-technical skills (23), duplications (11), and replications (10), 22 new additional procedural skills were suggested (Table 3). Among these, the most frequently recommended were first-line ultrasound use (five times), ear examination (five times), and arterial blood pressure measurement (four times).

Discussion

To the authors’ knowledge, this is the first study to take a holistic approach to competency development using simulation, collecting input from faculty, recent graduates, and junior doctors.

Often, one of the barriers to developing a school-wide program is reaching consensus among faculty. In this study, faculty showed strong general consensus about the importance of the selected procedural skills. Teachers expressed a strong desire that recent graduates be highly proficient in performing all the selected abilities; no ability was rated below 5.3/9. In 2007, the Association of American Medical Colleges (AAMC) convened a task force on clinical skills teaching to provide a coherent and broadly applicable model for clinical skills curricula and performance standards [12]. The procedural skills curriculum it identified is similar to our results. Sullivan et al. used a Delphi process to reach consensus among educational leaders at their institution on which skills to include in a simulation-based curriculum for all students [13]. Similar attempts have been reported in the literature [3, 14].

The students’ self-perceived levels of autonomy in performing clinical skills clearly demonstrate the need for a structured approach to teaching procedural skills at our institution. Students and junior doctors showed low self-perceived confidence in many procedural skills. Although self-perceived scores have limitations, self-reported proficiency or confidence can serve as an indicator of competence [14, 15]. Confidence in carrying out procedures has also been shown to affect performance [16]. Previous studies have identified similar problems with skill acquisition among medical students [3, 17,18,19,20] and junior doctors [21,22,23,24]. Competency in technical skills is indispensable, as deficiencies in essential clinical skills could jeopardize patient safety [25].

The majority of respondents supported the utility of simulation training for technical skills. Previous studies have demonstrated that the preclinical period is a critical time for giving students a strong foundation in core clinical skills [26]. Bandali et al. have shown that a simulation-enhanced curriculum “successfully bridges the common gap between didactic education and clinical practice” [6]. In addition, learners who have had the opportunity for deliberate practice of skills in a simulation setting have been shown to have higher confidence and lower anxiety when performing skills in the clinical setting [27]. At our institution, students typically learn technical skills by performing supervised procedures on actual patients during clinical rotations. Remmen et al. found that clinical clerkships did not automatically provide an effective learning environment for medical students; clinical rotations are often not adequately focused on technical skills, and students are often passive learners [28].

The desirability function provides an objective metric for prioritizing future learning by simulation. By combining the expected level of mastery rated by faculty, the current self-reported competency of junior doctors and students, and the utility ratings of all groups into a single metric, future simulation activities can be prioritized. For instance, at our institution, urinary catheter placement is the highest priority skill for simulation training: faculty consider the skill necessary, the clinical groups did not feel highly competent in it, and all groups feel that it is highly trainable by simulation. In contrast, personal protective equipment (PPE) usage is low on the priority list; faculty consider it important, but junior doctors and medical students are confident in their abilities. Likewise, for cricothyrotomy, although junior doctors and medical students felt that they lacked the skill, faculty gave low importance to its acquisition, placing it sixth on the priority list. In general, desirability functions can be very useful for finding an optimal solution when improvement in one area may come at the expense of another. The function can support decision-making by lending some objectivity to such decisions. In this case, the desirability function was designed and implemented to help the institution identify the highest-priority uses of simulation while working toward a fully developed simulation program.
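To make this trade-off concrete, the sketch below scores two hypothetical skill profiles: one resembling the urinary catheter case (important, low learner confidence, high utility) and one resembling the PPE case (important but already well mastered). For brevity it collapses the three groups into a single essentialness, autonomy, and utility rating per skill and compares the geometric mean with a simple arithmetic mean; the profiles, function names, and linear rescaling are assumptions for illustration only. Because a geometric mean is pulled toward its smallest component, a skill that learners already feel confident in drops sharply in priority, whereas an arithmetic mean would still rank it moderately high.

```python
from math import prod


def rescale(rating: float, higher_is_better: bool) -> float:
    """Linearly map an assumed 1-9 rating onto [0, 1]."""
    d = (rating - 1.0) / 8.0
    return d if higher_is_better else 1.0 - d


def components(essentialness: float, autonomy: float, utility: float) -> list[float]:
    # Low self-rated autonomy signals a training need, so it is inverted
    return [
        rescale(essentialness, True),
        rescale(autonomy, False),
        rescale(utility, True),
    ]


def geometric(values: list[float]) -> float:
    return prod(values) ** (1.0 / len(values))


def arithmetic(values: list[float]) -> float:
    return sum(values) / len(values)


# Hypothetical (essentialness, autonomy, utility) ratings, not study data
profiles = {
    "important, low confidence": (8.5, 3.0, 8.0),
    "important, already mastered": (9.0, 8.6, 8.0),
}

for name, ratings in profiles.items():
    d = components(*ratings)
    print(f"{name}: geometric={geometric(d):.2f}, arithmetic={arithmetic(d):.2f}")
```

With these made-up numbers the two profiles score almost identically under the arithmetic mean's logic for the first skill, but the "already mastered" profile falls well below it only when the geometric mean is used.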

The AAMC suggested in 2003 that medical schools be more explicit in the teaching of technical skills [12]. Nonetheless, most educational guidelines provide only broad recommendations and do not stipulate the exact technical skills required for graduation. As many educational programs move from time-based to competency-based education, identifying these skills becomes paramount. Although simulation is undoubtedly important for acquiring these skills, it is only gradually being implemented in most programs. Simulation can be costly, both in technical equipment and in teaching resources, and most institutions are unlikely to be able to implement a simulation training program covering all necessary skills immediately. The survey methodology and the desirability function may serve as useful tools for prioritizing simulation-based technical training. At our own institution, the findings of this study have led to the introduction of a mandatory skills training program in core clinical procedures. We hope that this study will prompt similar research in medical schools at the national and international level.

Limitations

Although this study describes a methodology that appears promising, the study design has several limitations. Although the survey response rate was high, the study was conducted at a single institution. Priorities will vary from site to site, and other institutions may need to apply the same methodology to identify their own site-specific priorities. In addition, study participants suggested several procedures that were not part of the initial list; further studies should also include these procedures. The study also relies heavily on self-perceived confidence, which, although many studies have shown its utility, is always subject to some bias. A further study using objective measures of competence rather than self-perceived confidence would be useful to validate the present findings. Finally, the desirability function has some limitations. Although desirability functions have been used in industry for decades to compress a multidimensional problem into a single metric, their use always carries a risk of oversimplification. In addition, although the three components of the overall desirability function were weighted equally in this study, desirability functions can be modified to weight the individual components differently [29].
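One common way to express differential weighting is a weighted geometric mean, in which each component desirability d_i is raised to a positive weight w_i and the product is taken to the power 1/Σw_i; with equal weights it reduces to the unweighted form used in this study. The sketch below assumes that formulation; the function name `weighted_desirability` and the example scores and weights are hypothetical.

```python
from math import exp, log


def weighted_desirability(scores: list[float], weights: list[float]) -> float:
    """Weighted geometric mean: (prod d_i ** w_i) ** (1 / sum(w_i)).

    scores  -- individual desirabilities, each in [0, 1]
    weights -- positive importance weights (equal weights give the plain
               geometric mean)
    """
    if not scores or len(scores) != len(weights):
        raise ValueError("scores and weights must be non-empty and equal in length")
    if any(w <= 0 for w in weights):
        raise ValueError("weights must be positive")
    if any(not 0.0 <= d <= 1.0 for d in scores):
        raise ValueError("desirabilities must lie in [0, 1]")
    if any(d == 0.0 for d in scores):
        return 0.0  # a completely undesirable component vetoes the whole
    # Work in log space: sum(w_i * log d_i) / sum(w_i), then exponentiate
    total = sum(weights)
    return exp(sum(w * log(d) for d, w in zip(scores, weights)) / total)


# Hypothetical faculty, junior-doctor and student desirabilities
groups = [0.9, 0.4, 0.8]
print(weighted_desirability(groups, [1, 1, 1]))  # equal weighting
print(weighted_desirability(groups, [2, 1, 1]))  # faculty counted twice
```

Raising one weight pulls the overall score toward that component, so doubling the faculty weight in this made-up example increases the result because the faculty score is the highest of the three.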

Conclusion

Through the use of survey tools, this study identified the technical competencies that faculty consider important and assessed junior doctors’ and recent graduates’ self-perceived confidence in performing these skills. In addition, the study identified how the three groups perceive the utility of teaching these skills by simulation. Finally, the study prioritized the skills that show a gap between expected and self-perceived competency and are also thought to be amenable to teaching by simulation, so that simulation curriculum development can proceed in the most effective order.

Although the results of this study come from a single institution, we hope that the methodology of combining survey tools with an overall desirability function may be useful to researchers and curriculum designers in other centers.

Abbreviations

- AAMC: Association of American Medical Colleges
- IQR: Interquartile range
- MIUR: Ministry of Education, University and Research
- SBME: Simulation-based medical education

References

Sičaja M, Romić D, Prka Ž. Medical students’ clinical skills do not match their teachers’ expectations: survey at Zagreb University School of Medicine, Croatia. Croat Med J. 2006;47:169–75.

McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad Med. 2011;86:706–11.

Boots RJ, Egerton W, McKeering H, Winter H. They just don’t get enough! Variable intern experience in bedside procedural skills. Intern Med J. 2009;39:222–7.

Ministry of Education, University and Research. Tabella classi di laurea magistrale. In: Decreto Ministeriale del 16 marzo 2007. http://attiministeriali.miur.it/media/155598/dmcdl_magistrale.pdf. Accessed Jan 2015.

Vettore L, Tenore A. Presentazione del core curriculum per le abilità pratiche. Medicina e Chirurgia. 2004;24:955–61.

Bandali K, Parker K, Mummery M, Preece M. Skills integration in a simulated and interprofessional environment: an innovative undergraduate applied health curriculum. J Interprof Care. 2008;22(2):179–89.

Berkenstadt H, Erez D, Munz Y, Simon D, Ziv A. Training and assessment of trauma management: the role of simulation-based medical education. Anesthesiol Clin. 2007;25(1):65–74.

Lewis CB, Vealé BL. Patient simulation as an active learning tool in medical education. JMIRS. 2010;41(4):196–200.

McGaghie WC, Issenberg SB, Petrusa ER, Scalese RJ. A critical review of simulation-based medical education research: 2003–2009. Med Educ. 2010;44(1):50–63.

Ziv A, Ben-David S, Ziv M. Simulation based medical education: an opportunity to learn from errors. Med Teach. 2005;27(3):193–9.

Del Castillo E, Montgomery DC, McCarville DR. Modified desirability functions for multiple response optimization. J Qual Technol. 1996;28:337–45.

Association of American Medical Colleges. Task Force on the Clinical Skills Education of Medical Students. Recommendations for preclerkship clinical skills education for undergraduate medical education. Washington: Association of American Medical Colleges; 2008. Available at: https://www.aamc.org/download/130608/data/clinicalskills_oct09.qxd.pdf.pdf. Accessed Jan 2015.

Sullivan M, et al. The development of a comprehensive school-wide simulation-based procedural skills curriculum for medical students. J Surg Educ. 2010;67(5):309–15.

Fitzgerald JT, White CB, Gruppen LD. A longitudinal study of self-assessment accuracy. Med Educ. 2003;37(7):645–9.

Morgan PJ, Cleave-Hogg D. Comparison between medical students’ experience, confidence and competence. Med Educ. 2002;36(6):534–9.

Byrne AJ, Blagrove MT, McDougall SJ. Dynamic confidence during simulated clinical tasks. Postgrad Med J. 2005;81(962):785–8.

Olajide T, Seyi-Olajide J, Ugburo A, Oridota E. Self-assessment of final year medical students’ proficiency at basic procedures. Maced J Med Sci. 2014;7(3):532–5.

Engum SA. Do you know your students’ basic clinical skills exposure? Am J Surg. 2003;186:175–81.

Ringsted C, Schroeder T, Henriksen J, et al. Medical students’ experience in practical skills is far from stakeholders’ expectations. Med Teach. 2001;4:412–6.

Dehmer JJ, Amos KD, Farrell TM, Meyer AA, Newton WP, Meyers MO. Competence and confidence with basic procedural skills: the experience and opinions of fourth-year medical students at a single institution. Acad Med. 2013;88:682–7.

Burch VC, Nash RC, Babow T, et al. A structured assessment of newly qualified medical graduates. Med Educ. 2005;39:723–31.

Remmen R, Scherpbier A, Derese A, et al. Unsatisfactory basic skills performance by students in traditional medical curricula. Med Teach. 1998;20:579–82.

Sachdeva AK, Loicano LA, Amiel GE, et al. Variability in the clinical skills of residents entering training programs in surgery. Surgery. 1989;118:300–9.

Sharp LK, Wang R, Lipsky MS. Perceptions of competency to perform procedures and future practice intent: a national survey of family practice residents. Acad Med. 2003;78:926–32.

Tekian A. Have newly graduated physicians mastered essential clinical skills? Med Educ. 2002;36(5):406–7.

Omori DM, Wong RY, Antonelli MA, Hemmer PA. Introduction to clinical medicine: a time for consensus and integration. Am J Med. 2006;118:189–94.

Stewart RA, Hauge LS, Stewart RD, et al. A CRASH course in procedural skills improves medical students’ self assessment of proficiency, confidence and anxiety. Am J Surg. 2007;193:771–3.

Remmen R, Denekens J, Scherpbier A, Hermann I, van der Vleuten C, Royen PV, et al. An evaluation study of the didactic quality of clerkships. Med Educ. 2000;34:460–4.

Derringer GC. A balancing act: optimizing a product’s properties. Qual Prog. 1994;27:51–8.

Acknowledgement

The authors would like to thank all faculty members and students of the Medical School of the Università del Piemonte Orientale.

Funding

None.

Availability of data and materials

Data will not be shared publicly to prevent possible identification of individual respondents.

This aligns with ethical consent requirements for this project.

Author information


Contributions

PLI, LGB, and JMF designed the study. PLI and LGB were responsible for data acquisition. LGB and JMF analyzed the data. All authors contributed to the interpretation of the data and were involved in drafting the manuscript or revising it critically for important intellectual content. All authors gave final approval of the version to be published.


Ethics declarations

Ethics approval and consent to participate

Participation in the study was voluntary, and participants could withdraw at any time. The authors ensured the confidentiality of information, and no financial incentive to participate in the study was offered. Since all data were de-identified and reported in aggregate, the study was deemed exempt from institutional review approval by the local Ethics Committee (Comitato Etico Interaziendale, Novara, Italy; study number Prot. 639/CE).

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Ingrassia, P.L., Barozza, L.G. & Franc, J.M. Prioritization in medical school simulation curriculum development using survey tools and desirability function: a pilot experiment. Adv Simul 3, 4 (2018). https://doi.org/10.1186/s41077-018-0061-x


DOI: https://doi.org/10.1186/s41077-018-0061-x