Abstract

Background

There is no agreed way to measure the effects of social accountability interventions. Studies to examine whether and how social accountability and collective action processes contribute to better health and healthcare services are underway in different areas of health, and health effects are captured using a range of different research designs.

Objectives

The objective of our review is to help inform evaluation efforts by identifying, summarizing, and critically appraising study designs used to assess and measure social accountability interventions' effects on health, including data collection methods and outcome measures. Specifically, we consider the designs used to assess social accountability interventions for reproductive, maternal, newborn, child, and adolescent health (RMNCAH).

Data sources

Data were obtained from the Cochrane Library, EMBASE, MEDLINE, SCOPUS, and Social Policy & Practice databases.

Eligibility criteria

We included papers published on or after 1 January 2009 that described an evaluation of the effects of a social accountability intervention on RMNCAH.

Results

Twenty-two papers met our inclusion criteria. Methods for assessing or reporting health effects of social accountability interventions varied widely and included longitudinal, ethnographic, and experimental designs. Surprisingly, given the topic area, there were no studies that took an explicit systems-orientated approach. Data collection methods ranged from quantitative scorecard data through to in-depth interviews and observations. Analysis of how interventions achieved their effects relied on qualitative data, whereas quantitative data often raised rather than answered questions, and/or seemed likely to be poor quality. Few studies reported on negative effects or harms; studies did not always draw on any particular theoretical framework. None of the studies where there appeared to be financial dependencies between the evaluators and the intervention implementation teams reflected on whether or how these dependencies might have affected the evaluation. The interventions evaluated in the included studies fell into the following categories: aid chain partnership, social audit, community-based monitoring, community-linked maternal death review, community mobilization for improved health, community reporting hotline, evidence for action, report cards, scorecards, and strengthening health communities.

Conclusions

A wide range of methods are currently being used to attempt to evaluate effects of social accountability interventions. The wider context of interventions including the historical or social context is important, as shown in the few studies to consider these dimensions. While many studies collect useful qualitative data that help illuminate how and whether interventions work, the data and analysis are often limited in scope with little attention to the wider context. Future studies taking into account broader sociopolitical dimensions are likely to help illuminate processes of accountability and inform questions of transferability of interventions. The review protocol was registered with PROSPERO (registration # CRD42018108252).

Background

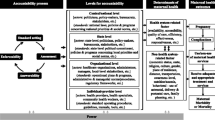

Accountability is increasingly seen as central to improving equitable access to health services [1, 2]. Although social accountability mechanisms are “multiplying in the broader global context of the booming transparency and accountability field” [3, p. 346], whether and how these interventions work to improve health is often not adequately described. Measuring the effects of social accountability interventions on health is difficult, and there is no consensus on how social accountability should best be defined, developed, implemented, and measured.

The term accountability encompasses the processes by which government actors are responsible and answerable for the provision of high-quality and non-discriminatory goods and services (including regulation of private providers) and the enforcement of sanctions and remedies for failures to meet these obligations [4]. The Global Strategy for Women’s, Children’s and Adolescents’ Health, 2016–2030 defines accountability as one of nine key action areas to “end preventable mortality and enable women, children and adolescents to enjoy good health while playing a full role in contributing to transformative change and sustainable development” [2, p. 39]. The Global Strategy’s enhanced Accountability Framework further aims to “establish a clear structure and system to strengthen accountability at the country, regional, and global levels and between different sectors” [2].

Social accountability, as a subset of accountability more broadly, comprises “…citizens’ efforts at ongoing meaningful collective engagement with public institutions for accountability in the provision of public goods” [5, p. 161]. It has transformative potential for development and democracy [1, 6–9]. Successful efforts depend on effective citizen engagement and on the responsiveness of states and other duty bearers [3, 10]. Social accountability and collective action processes may contribute to better health and healthcare services by supporting, for example, better delivery of services (e.g., via citizen report cards, community monitoring of services, social audits, public expenditure tracking surveys, and community-based freedom of information strategies); better budget utilization (e.g., via public expenditure tracking surveys, complaint mechanisms, participatory budgeting, budget monitoring, budget advocacy, and aid transparency initiatives); improved governance outcomes (e.g., via community scorecards, freedom of information, World Bank Inspection Panels, and Extractives Industries Transparency Initiatives); and more effective community involvement and empowerment (e.g., via right to information campaigns/initiatives, and aid accountability mechanisms that emphasize accountability to beneficiaries) [10–12].

An early attempt to evaluate a social accountability intervention using an experimental study design was a 2009 paper by Bjorkman and Svensson evaluating community-based monitoring of public primary health care providers in Uganda [13]. The authors conclude that “…experimentation and evaluation of new tools to enhance accountability should be an integral part of the research agenda on improving the outcomes of social services” [13, p. 26]. Since then, various study designs have been used to assess social accountability initiatives. These include randomized trials, quantitative surveys, qualitative studies, participatory approaches, indices and rankings, and outcome mapping [10].

In common with other fields, social accountability interventions are increasingly popular in the area of reproductive, maternal, neonatal, child, and adolescent health (RMNCAH). Also in common with the broader area of social accountability, measuring the effects of these interventions on RMNCAH is challenging.

In this paper, we review and critically analyze methods used to evaluate the health outcomes of social accountability interventions in the area of RMNCAH, to inform evaluation designs for these types of interventions.

Methods

Eligibility criteria

We searched for original, empirical studies published in peer-reviewed journals between 1 January 2009 and 26 March 2019 in any language. We included papers which described an evaluation of the health effects of interventions aiming to increase social accountability of the healthcare system or specific parts of the healthcare system, within a clearly defined population. We included papers that reported at least one RMNCAH outcome. Because many papers did not include direct health outcome measures or commentary, we also included studies that reported on health service outcomes such as improvements in quality, on the grounds that these were likely to have some effect on health. Because we were interested in methods for measuring effects of social accountability interventions on health, we excluded papers that did not report at least one health (RMNCAH) outcome. For instance, we excluded papers which only discussed how the intervention had been set up or how it was received and did not mention any health-related consequences of the intervention.

We excluded papers that described only top-down community health promotion type initiatives (e.g., improving community response to obesity); interventions aiming to improve accountability of communities themselves (e.g., community responsibilities toward women during childbirth); clinician training interventions (e.g., to reduce abuse of women during childbirth); quality improvement interventions for clinical care (e.g., patient participation in service quality improvement relating to their own care and treatment and not addressing collective accountability); intervention development (e.g., testing out report cards as there was no evaluation of the effects of using these); natural settings where people held others to account (i.e., there was no specific intervention designed to catalyze this); or papers that exclusively discussed litigation and legal redress.

Information sources

We searched the following databases via Ovid: MEDLINE, EMBASE, and Social Policy & Practice. Both SCOPUS and The Cochrane Library were searched using their native search engines. All database searches were carried out on 28 August 2018 and updated on 26 March 2019. We reviewed reference lists and consulted subject experts to identify additional relevant papers.

Search

We developed search terms based, in part, on specific methods for achieving social accountability as defined in Gaventa and McGee 2013 [10]. The search combined three domains relating to accountability, RMNCAH, and health. The complete search strategy used for all five databases is included in Table 1.

Study selection

Papers were screened on title and abstract by CM and CRM, and disagreements were resolved by VB. Full-text papers were screened by CM and VB.

Data collection and data items

Data were extracted by CM and CRM. Data items included intervention, study aims, population, study design, data collection methods, outcome measures, social accountability evidence reported/claimed, cost, relationship between evaluator and intervention/funder, which theoretical framework (if any) was used to inform the evaluation, and, where a framework was used, whether the evaluation reported against it.

Social interventions are complex and can have unexpected consequences. Because these may not always be positive, we were interested to explore how this issue had been addressed in the included studies. We extracted from the studies any discussion of how such negative effects were measured, whether they were measured, and whether any such effects were reported on. We defined harms and negative effects very broadly and included any consideration at all of negative impacts or harms, even if they were mentioned only in passing.

Because we were examining accounts of interventions that increase accountability in various ways, we were interested in the extent to which the authors included information that would promote their own accountability to their readers. We examined whether the studies contained information about the funding source for the intervention and for the evaluation, or any other information about possible conflicts of interest.

Risk of bias

For this review, we wished to describe the study designs used to evaluate social accountability interventions to improve RMNCAH. Papers reporting on interventions that aimed to affect comprehensive health services where the studies did not explicitly reference RMNCAH components (or which have not been indexed in MEDLINE using related keywords and/or MeSH terms) were not included. Interventions in general areas of health are likely to employ similar methods to evaluate social accountability interventions as those in RMNCAH-specific areas. However, if not, these additional methods would not have appeared in our search and will be omitted from the discussion below.

Synthesis of results

We present a critical, configurative review (i.e., the synthesis involves organizing data from included studies) [14] of the methodologies used in the included evaluations. We extracted data describing the social accountability intervention and the evaluation of it (i.e., evaluation aims, population, theoretical framework/theory of change, data collection methods, outcome measures, harms reported, social accountability evidence reported, cost/sustainability, and relationship between the funder of the intervention and the evaluation team). We presented the findings from this review at the WHO Community of Practice on Social Accountability meeting in November 2018, and updated the search afterwards to include more recent studies.

Registration

The review protocol is registered in the PROSPERO prospective register of systematic reviews (registration # CRD42018108252). This review is reported against PRISMA guidelines [15].

Results

The search yielded 5266 papers and we found an additional six papers through other sources. One hundred and seventy-six full text papers were assessed for eligibility and of these, 22 met the inclusion criteria (Fig. 1).

Interventions measured

We took an inclusive approach to what we considered to be relevant interventions, as reflected in our search terms. Our final included papers referred to a range of social accountability interventions for improving RMNCAH. Eight types of interventions were examined in the included papers (Table 2).

Study aims

To be included in this review, all studies had to report on health effects of the interventions and be explicitly orientated around improving social accountability. The studies had somewhat different aims, with some more exploratory and implementation-focused, and some more effectiveness-orientated. Exploratory studies were conducted for maternal death reviews [16], social accountability interventions for family planning and reproductive health [17], civil society action around maternal mortality [18], community mobilization of sex workers [19], community participation for improved health service accountability in resource-poor settings [20], and a community voice and action intervention within the health sector [21]. These aimed to describe contextual factors affecting the intervention, often focusing more on implementation than outcomes. Others explicitly aimed to examine how the interventions could affect specific outcomes. This was the case for studies of an HIV/AIDS programme for military families [22]; the effects of community-based monitoring on service delivery [13]; the effectiveness of engaging various stakeholders to improve maternal and newborn health services [23]; the acceptability and effectiveness of a telephone hotline to monitor demands for informal payments [24]; the effectiveness of CARE’s community score cards in improving reproductive health outcomes [25]; the effects of a quality management intervention on the uptake of services [26]; structural change in the Connect 2 Protect partnership [27]; efforts to improve “intercultural maternal health care” [28]; and whether and how scale-up of HIV services influenced accountability and hence service quality [29]. In some papers the original aims were not clearly stated, but the studies appeared to document both implementation and effectiveness; examples are the papers reporting on scorecards used in Evidence4Action (E4A) [30, 31].

Study designs used

Study designs varied from quantitative surveys to ethnographic approaches and included either quantitative or qualitative data collection and analysis or a mix of both (see Table 3). Direct evidence that the intervention had affected social accountability was almost always qualitative, with quantitative data from the intervention itself used to show changes, e.g., in health facility scores. The possibility that those conducting the intervention may have had an interest in showing an improvement which might have biased the scoring was not discussed.

Qualitative data were essential to provide information about accountability mechanisms, and to support causal claims that were sometimes only weakly supported by the quantitative data alone. For example, this was the case in the many studies where the quantitative data were before-and-after type data that could have been biased by secular trends, i.e., where it would be difficult to make credible causal claims based only on those data. Qualitative data were primarily generated via interviews, focus group discussions, and ethnographic methods including observations.

Additionally, some papers contained broader structural analysis contextualizing interventions in relation to relevant, longstanding processes of marginalization. For instance, Dasgupta 2011 notes that “in addition to the health system issues discussed [earlier in the paper], the duty bearers appear to hold a world view that precludes seeing Dalit and other disadvantaged women as human beings of equivalent worth: you can in fact die even after reaching a well-resourced institution if you are likely to be turned away or harassed for money and denied care” [18, p. 9].

There were very few outcome measures reported in the studies which directly related to social accountability. Instead, they usually related to the intervention (e.g., number of meetings, number of action points recorded). Outcome measures included quantitative process measures such as total participants attending meetings (e.g., [16]), how many calls were made to a hotline (e.g., [24]), numbers of services provided, and outcome measures such as measures of satisfaction (e.g., [25, 32, 33]). Qualitative studies examined how changes had been achieved (for instance by exploring involvement of civil society organisations in promotion and advocacy), or perceptions of programme improvement (e.g., [20, 22, 34]). Many of the health outcomes were reported using proxy measures (e.g., home visits from a community health worker, care-seeking) [32, 35].

There were various attempts to capture the impact of the intervention on decision-making and policy change. For example, “process tracing” was used, “to assess whether and how scorecard process contributed to changes in policies or changes in attitudes or practices among key stakeholders” [23 p. 374], and “outcome mapping” (defined as, “emphasis on capturing changes in the behavior, relationships, activities, or actions of the people, groups, and organizations with whom an entity such as a coalition works”) [27, p. 6] was used to assess effects of the intervention on systems and staff.

Theoretical frameworks

In 10 of the 22 papers, we found an explicit theoretical framework that guided the evaluation of the intervention. In some additional cases, there appeared to be an implicit theoretical approach, or there was reference to a “theory of change”, but these were not spelled out clearly.

Harms or negative effects reported

Studies which emphasised quantitative data either alone or as a part of a mixed methods data collection strategy did not report harms or intent to measure any. The only studies reporting negative aspects of the intervention—either its implementation or its effects—emphasised qualitative data in their reporting. Not all qualitative studies reported negative aspects of the intervention, but it was notable that the more detailed qualitative work considered a wider range of possible outcomes including unintended or undesirable outcomes.

Studies reporting any type of negative effect varied in the harms or other negative aspects of interventions they described, although complex relationships with donors were mentioned more than once. For instance, Aveling et al. note:

…relations of dependence encourage accountability toward donors, rather than to the communities which interventions aim to serve […] far more time is spent clarifying reporting procedures and discussing strategies to meet high quantitative targets than is spent discussing how to develop peer facilitators’ skills or strategies to facilitate participatory peer education. [22, p. 1594–5]

Some authors did not report on negative effects as such, but did acknowledge the limitations of the interventions they examined, for instance that encouraging communities to speak out about problems will not necessarily be enough to promote improvement [16]. Similarly, Dasgupta reported how “[t]he unrelenting media coverage of corruption in hospitals, maternal and infant deaths and the dysfunctional aspects of the health system over the last six years, occasionally spurred the health department to take some action, though usually against the lowest cadre of staff” [18, p. 7] and “[w]hen civil society organizations, speaking on behalf of the poor initially mediated the rights-claiming to address powerful policy actors such as the Chief Minister, it did not stimulate any accountability mechanism within the state to address the issue” [18, p. 7]. In their 2015 study, Dasgupta et al. address the potential harms that could have been caused by the intervention (a hotline for individuals to report demands for informal payments) and explain how the intervention was designed to avoid these [24].

Costs and sustainability

Only four studies contained even passing reference to the cost or sustainability of the interventions. One study indicated that reproductive health services had been secured for soldiers and their wives [22]; one mentioned that, although direct assistance had ceased, activities continued with technical support provided on a volunteer basis [28]; one (a protocol) set out how costs would be calculated in the final study [26]; and one mentioned in passing that a district had not allocated funds to cover costs associated with additional stakeholders [20].

Challenges to sustainability were noted in several studies [16, 20–25, 32, 33].

Accountability of the authors to the reader

Very few studies specified the relationship between the evaluation team and the implementation team and in many cases, they appear to be the same team, or have team members in common. In most cases, there was no clear statement explaining any relationships that might be considered to constitute a conflict of interest, or how these were handled.

Information about evaluation funding was more often provided, although again it was not clear whether the funder had also funded the intervention, or if they had, to what extent the evaluation was conducted independently from the funders.

Discussion

Most studies reported a mix of qualitative and quantitative data, with most analyses based on the qualitative data. Two studies used a trial design to test the intervention—one examined the effects of implementing CARE community score cards [32] and the other tested the effects of a community mobilization intervention [36]. This relative lack of trials is notable given the number of trials related to social accountability in other sectors [3, 9]. The more exploratory studies which attempted to capture aspects of the interventions—such as how they were taken up—used predominantly qualitative data collection methods.

The studies we identified show the clear benefits of including qualitative data collection to assess social accountability processes and outcomes, with indicative quantitative data to assess specific health or service improvement outcomes. High-quality collection and analysis of qualitative data should be considered as at least a part of subsequent studies in this complex area. The “pure” qualitative studies were the only ones where any less-positive findings about the interventions were reported, perhaps because of the emphasis on reflexivity in analysis of qualitative data, which might encourage transparency. We were curious about whether there was any relationship between harms being reported and independence of studies from the funded intervention, but we found no particular evidence from our included studies to indicate any association. One study mentioned that lack of in-country participation in the design process led to lack of interest in using the findings to help plan country strategy [31].

It was notable that studies often did not specify their evaluation methods clearly. In these cases, methods sections of the papers were devoted to discussing methods for the intervention rather than its evaluation.

When trying to measure interventions intended to influence complex systems (as social accountability interventions attempt to do), it is important to understand what the intervention intends to change and why in order to assess whether its effects are as expected, and understand how any effects have been achieved. There was a notable lack of any such specification in many of the included studies. For example, there were few theoretical frameworks cited to support choices made about evaluation methods and, related to this, there were few references to relevant literature that might have informed both the interventions and the evaluation methodologies. The literature on public and patient involvement, for instance, was not mentioned despite this literature containing relevant experiences of trying to evaluate these types of complex, participatory processes in health. It is possible that some of the studies were guided by hypotheses and theoretical frameworks that were not described in the papers we retrieved.

Sustainability of the interventions and their effects after the funded period of the intervention was rarely discussed or examined. A small, enduring change for the better that also creates positive ripple effects over time may be preferable to larger, temporary effects that end with the end of the intervention funding. It would also be useful to discuss with funders and communities in advance what type of outcome would indicate success and over what period of time, to ensure that measures take into account what is considered important to the people who will use them. Sustainability and effectiveness are known to diminish after the funded period of the intervention [37]. Longer term follow-up may be hindered because of the way funding is generally allocated over short periods. It would be interesting to see a greater number of longer-term follow up studies examining what happened “after” the intervention had finished in order to inform policymakers about what the truly “cost-effective” programmes are likely to be. For example, some studies have traced unfolding outcomes after the intervention has finished; these may be important to take into account in any effectiveness considerations.

There was little transparency about funding and any conflicts of interest—which seemed surprising in studies of social accountability interventions. We strongly recommend that these details be provided in future work and be required by journals before publication.

A limitation of this study was that our searches yielded studies where accountability of health workers to communities or to donors appeared to be the main area of interest. A broader understanding of accountability might yield further useful insights. For instance, it seems likely that an intersectional perspective might put different forms of social accountability in the spotlight (e.g., retribution or justice connected with sexual violence or war crime, examining the differentiated effects on sexual and reproductive health, rather than solely accountability in a more bounded sense) [38]. By limiting our view of what “accountability” interventions can address within health, we may unintentionally imply broader questions of accountability are not relevant—e.g., effects of accountability in policing practices on health, effects of accountability in education policy on health, and so on.

With only a few notable exceptions, we lack broader sociohistorical accounts of the ways in which these interventions are influenced by the political, historical, and geographical context in which they appear, and how dynamic social change and “tipping point” events might interrelate with the official “intervention” activities—pushing the intervention on, or holding it back, co-opting it for political ends, or losing control of it completely during civil unrest. While the studies we identified did use more qualitative approaches to assessing what had happened during interventions, the scope of the studies was often far narrower than this—for instance lacking information on broader political issues that affected the intervention at different points in time. In future, studies examining health effects of social accountability interventions should consider taking a more theoretical approach—setting out in more detail what social processes are happening in what historical/geographical/social context so that studies develop a deeper understanding, including using and further developing theories of social change to improve the transferability of the findings. For instance, lessons on conducting and evaluating patient involvement interventions in the UK may well have a bearing on improving social accountability and its measurement in India and vice versa. Related to this, we note that although there is clear guidance from the evaluation literature that it is important to take a systems approach to understanding complex interventions, none of our included studies explicitly took a systems approach—applying these types of approaches more systematically to social accountability interventions is a fertile area for future investigation. 
Without such studies, we risk implying that frontline workers are the only site of “accountability” and, by omission, fail to examine the role of more powerful actors and social structures which may act to limit the options of frontline workers, as well as failing to explore and address the ways in which existing structural inequalities might hamper equitable provision and uptake of health services.

Terminology may be hampering transfer of theoretically relevant material into and out of the “social accountability” field. The term “social accountability” may imply an adversarial relationship where certain individuals are acting in bad faith. One of the studies in our review used different terminology—“collaborative synergy”—referring to the work of coalitions in the Connect2Protect intervention [27]. We speculate that lack of agreed, common terminology may hinder learning from other areas of research—the phrase “social accountability” is not commonly used in the patient and public involvement (PPI) literature, possibly because of the greater emphasis in high income settings on co-production and sustainability compared with more of a “policing” emphasis in the literature reporting on LMIC settings. Yet one of the purposes of PPI interventions is to improve services and this may well include healthcare providers being held accountable for the services they provide. Litigation was outside the scope of this article, but legally enshrined rights to better healthcare are crucial and litigation is a key route to ensuring these rights are achieved in practice. A more nuanced account of these types of interventions in context would be valuable in understanding “what works where and why,” to inform future policy and programmes.

Dasgupta et al. comment on how hard it is to attribute change to any particular aspect of a social accountability intervention because successful efforts are led by individuals in many different roles whose relationships with one another are constantly changing and adapting. Attributing success is difficult because these changing relationships shape how and whether any individual can have an impact through their actions.

Evaluation tools, particularly those used within and for a specific time frame, have a limited capacity to capture the iterative nature of social accountability campaigns, as well as to measure important impacts like empowerment, changes in the structures that give rise to rights violations, and changes in relationships between the government and citizens. [24, p. 140]

Conclusions

Designing adequate evaluation strategies for social accountability interventions is challenging. It can be difficult to define the boundaries of the intervention (e.g., to what extent does it make conceptual sense to report on the intervention without detailing the very specific social context?), or the boundaries of what should be evaluated (e.g., political change or only changes in specific health outcomes). What is clear is that quantitative measures are generally too limited on their own to provide useful data on attribution, and the majority of evaluations appear to acknowledge this by including qualitative data as part of the evidence. The goals and processes of the interventions are inherently social. By examining social dimensions in detail, studies can start to provide useful information about what could work elsewhere, or provide ideas that others can adapt to their settings. More lessons should be drawn from existing evaluation and accountability work in high-income country (HIC) settings. The apparent lack of cross-learning or collaborative working between HIC and LMIC settings is a wasted opportunity, particularly when so much good practice exists in both. The literature tends to be siloed along country-income lines, which suggests that ample opportunities to learn from one another are often not taken up. Finally, more transparency about funding and histories of these interventions is essential.

Availability of data and materials

Not applicable.

References

Kruk ME, Gage AD, Arsenault C, Jordan K, Leslie HH, Roder-DeWan S, et al. High-quality health systems in the Sustainable Development Goals era: time for a revolution. Lancet Glob Health. 2018;6(11):e1196–e252.

World Health Organization. The global strategy for women’s, children’s and adolescents’ health (2016–2030). Geneva: WHO; 2015.

Fox JA. Social accountability: what does the evidence really say? World Dev. 2015;72:346–61.

Boydell V, Schaaf M, George A, Brinkerhoff DW, Van Belle S, Khosla R. Building a transformative agenda for accountability in SRHR: lessons learned from SRHR and accountability literatures. Sex Reprod Health Matters. 2019;27(2):1622357.

Joshi A. Legal empowerment and social accountability: complementary strategies toward rights-based development in health? World Dev. 2017;99:160–72.

Brinkerhoff DW, Jacobstein D, Kanthor J, Rajan D, Shepard K. Accountability, health governance, and health systems: uncovering the linkages. Washington, DC: USAID; 2017.

Hilber AM, Squires F. Mapping social accountability in health: background document for the symposium on social accountability for improving the health and nutrition of women, children and adolescents. Washington, DC: The Partnership for Maternal, Newborn, and Child Health; IPPF; Save the Children; The White Ribbon Alliance; World Vision; 2018.

Holland J, Schatz F, Befani B, Hughes C. Macro evaluation of DFID’s policy frame for empowerment and accountability: empowerment and accountability annual technical report 2016: what works for social accountability. Oxford: e-Pact; 2016.

Ringold D, Holla A, Koziol M, Srinivasan S. Citizens and service delivery: assessing the use of social accountability approaches in the human development sectors. Washington, DC: The World Bank; 2012.

Gaventa J, McGee R. The impact of transparency and accountability initiatives. Dev Policy Rev. 2013;31(s1):s3–s28.

Ringold D, Holla A, Koziol M, Srinivasan S. Citizens and service delivery: assessing the use of social accountability approaches in the human development sectors. Washington, DC: The World Bank; 2012.

Brinkerhoff DW, Jacobstein D, Kanthor J, Rajan D, Shepard K, for the Health Finance and Governance Project. Accountability, health governance, and health systems: uncovering the linkages. Washington, DC: USAID, WHO, HFG; 2017.

Björkman M, Svensson J. Power to the people: evidence from a randomized field experiment on community-based monitoring in Uganda. Q J Econ. 2009;124(2):735–69.

Gough D, Oliver S, Thomas J. An introduction to systematic reviews. London: SAGE Publications Ltd; 2012.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JPA, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700.

Bayley O, Chapota H, Kainja E, Phiri T, Gondwe C, King C, et al. Community-linked maternal death review (CLMDR) to measure and prevent maternal mortality: a pilot study in rural Malawi. BMJ Open. 2015;5(4):e007753.

Boydell V, Neema S, Wright K, Hardee K. Closing the gap between people and programs: lessons from implementation of social accountability for family planning and reproductive health in Uganda. Afr J Reprod Health. 2018;22(1):73–84.

Dasgupta J. Ten years of negotiating rights around maternal health in Uttar Pradesh, India. BMC Int Health Hum Rights. 2011;11(Suppl 3):S4.

de Souza R. Creating “communicative spaces”: a case of NGO community organizing for HIV/AIDS prevention. Health Commun. 2009;24(8):692–702.

Kamuzora P, Maluka S, Ndawi B, Byskov J, Hurtig AK. Promoting community participation in priority setting in district health systems: experiences from Mbarali district, Tanzania. Glob Health Action. 2013;6:22669.

Schaaf M, Topp SM, Ngulube M. From favours to entitlements: community voice and action and health service quality in Zambia. Health Policy Plan. 2017;32(6):847–59.

Aveling EL. The impact of aid chains: relations of dependence or supportive partnerships for community-led responses to HIV/AIDS? AIDS Care. 2010;22(Suppl 2):1588–97.

Blake C, Annorbah-Sarpei NA, Bailey C, Ismaila Y, Deganus S, Bosomprah S, et al. Scorecards and social accountability for improved maternal and newborn health services: a pilot in the Ashanti and Volta regions of Ghana. Int J Gynecol Obstet. 2016;135(3):372–9.

Dasgupta J, Sandhya YK, Lobis S, Verma P, Schaaf M. Using technology to claim rights to free maternal health care: lessons about impact from the my health, my voice pilot project in India. Health Hum Rights. 2015;17(2):135–47.

Sebert Kuhlmann AK, Gullo S, Galavotti C, Grant C, Cavatore M, Posnock S. Women’s and health workers’ voices in open, inclusive communities and effective spaces (VOICES): measuring governance outcomes in reproductive and maternal health programmes. Dev Policy Rev. 2017;35(2):289–311.

Hanson C, Waiswa P, Marchant T, Marx M, Manzi F, Mbaruku G, et al. Expanded quality management using information power (EQUIP): protocol for a quasi-experimental study to improve maternal and newborn health in Tanzania and Uganda. Implement Sci. 2014;9(1):41.

Miller RL, Reed SJ, Chiaramonte D, Strzyzykowski T, Spring H, Acevedo-Polakovich ID, et al. Structural and community change outcomes of the connect-to-protect coalitions: trials and triumphs securing adolescent access to HIV prevention, testing, and medical care. Am J Community Psychol. 2017;60(1-2):199–214.

Samuel J. The role of civil society in strengthening intercultural maternal health care in local health facilities: Puno, Peru. Glob Health Action. 2016;9(1):33355.

Topp SM, Black J, Morrow M, Chipukuma JM, Van Damme W. The impact of human immunodeficiency virus (HIV) service scale-up on mechanisms of accountability in Zambian primary health centres: a case-based health systems analysis. BMC Health Serv Res. 2015;15:67.

Hulton L, Matthews Z, Martin-Hilber A, Adanu R, Ferla C, Getachew A, et al. Using evidence to drive action: a “revolution in accountability” to implement quality care for better maternal and newborn health in Africa. Int J Gynecol Obstet. 2014;127(1):96–101.

Nove A, Hulton L, Martin-Hilber A, Matthews Z. Establishing a baseline to measure change in political will and the use of data for decision-making in maternal and newborn health in six African countries. Int J Gynecol Obstet. 2014;127(1):102–7.

Gullo S, Galavotti C, Kuhlmann AS, Msiska T, Hastings P, Marti CN. Effects of a social accountability approach, CARE’s Community Score Card, on reproductive health-related outcomes in Malawi: a cluster-randomized controlled evaluation. PLoS ONE. 2017;12(2):e0171316.

Gullo S, Kuhlmann AS, Galavotti C, Msiska T, Nathan Marti C, Hastings P. Creating spaces for dialogue: a cluster-randomized evaluation of CARE’s Community Score Card on health governance outcomes. BMC Health Serv Res. 2018;18(1):858.

Hamal M, de Cock BT, De Brouwere V, Bardaji A, Dieleman M. How does social accountability contribute to better maternal health outcomes? A qualitative study on perceived changes with government and civil society actors in Gujarat, India. BMC Health Serv Res. 2018;18(1):653.

George AS, Mohan D, Gupta J, LeFevre AE, Balakrishnan S, Ved R, et al. Can community action improve equity for maternal health and how does it do so? Research findings from Gujarat, India. Int J Equity Health. 2018;17(1):125.

Lippman SA, Leddy AM, Neilands TB, Ahern J, MacPhail C, Wagner RG, et al. Village community mobilization is associated with reduced HIV incidence in young South African women participating in the HPTN 068 study cohort. J Int AIDS Soc. 2018;21:e25182.

Campbell C, Nair Y. From rhetoric to reality? Putting HIV and AIDS rights talk into practice in a South African rural community. Cult Health Sex. 2014;16(10):1216–30.

Crosby A, Lykes MB. Mayan women survivors speak: the gendered relations of truth telling in postwar Guatemala. Int J Transit Justice. 2011;5(3):456–76.

Acknowledgements

We would like to acknowledge the participants at the WHO RHR/HRP: 2nd community of practice on measuring social accountability and health outcomes meeting (4–5 October 2018, Montreux, Switzerland), at which we presented the findings of this study. The resulting discussion helped refine this version, and we thank all the participants for their contributions. The authors alone are responsible for the views expressed in this paper, and they do not necessarily represent the views, decisions, or policies of the institutions with which they are affiliated.

Funding

This work was produced with the support of the UNDP-UNFPA-UNICEF-WHO-World Bank Special Programme of Research, Development and Research Training in Human Reproduction (HRP), a cosponsored program executed by the World Health Organization (WHO). The work was partially funded with UK aid from the UK Government via the ACCESS Consortium.

Author information

Contributions

VB and PS conceived the study. CM led the work, designed the study, analysed the data, and wrote the manuscript. CRM designed and conducted the searches. CM, CRM, and VB screened papers and extracted data. All authors contributed to the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Marston, C., McGowan, C.R., Boydell, V. et al. Methods to measure effects of social accountability interventions in reproductive, maternal, newborn, child, and adolescent health programs: systematic review and critique. J Health Popul Nutr 39, 13 (2020). https://doi.org/10.1186/s41043-020-00220-z
