Abstract

Background

Video analysis is a common tool used in rugby union research to describe match performance. Studies using video analysis range from broad statistical studies of commercial databases to in-depth case studies of specific match events. The variety of study types, and the different ways in which studies apply the methodology, can make it difficult to compare results and translate the findings to a real-world setting. In an attempt to consolidate the information on video analysis in rugby, a critical review of the literature was performed.

Main body

Ninety-two studies were identified. The studies were categorised based on the outcome of the study and sub-categorised as ‘what’ or ‘how’ studies according to the type of research question. Each study was reviewed using a number of questions related to the application of video analysis in research.

There was a large range in the sample sizes of the studies reviewed, and some studies were under-powered. Concerns were raised about the generalisability of some of the samples. All ‘how’ studies included at least one contextual variable in their analyses, and 86% included two or more contextual variables. These findings show that the majority of studies describing how events occur in matches attempted to provide context for their findings. The majority of studies (93%) provided practical applications for their findings.

Conclusion

The review raised concerns about the usefulness of some of the findings to coaches and practitioners. To facilitate the transfer and adoption of research findings into practice, the authors recommend that the results of ‘what’ studies inform the research questions of ‘how’ studies, and that the findings of ‘how’ studies provide the practical applications for coaches and practitioners.

Key points

- Sample size calculations should be adopted in video analysis research.
- A consensus is needed for the definition and use of variables in video analysis research of rugby union.
- To facilitate the transfer and adoption of research findings into practice, a sequence of applied video analysis research should be adopted.

Background

Rugby union is a high-intensity, collision-based sport [1]. It is played by over 6.6 million players across 199 countries, which makes it one of the most played sports in the world [2]. The sport generated £385 million in revenue in 2015, and winning major international competitions is the ultimate goal for national teams [3]. Rugby union is also associated with a higher risk of injury than other sports such as Association Football [4]. This higher injury risk is due to the dynamic environment in which physical contact occurs between players, with the tackle accounting for more than 50% of all match-related injuries [5].

The drive to reduce the risk of injury and improve performance in rugby has set in motion a high volume of scientific research including the analysis of match video footage to identify and describe player and team actions [6, 7], usually in relation to performance or injury outcomes [8]. Arguably, a strength of video analysis is that it allows for dynamic and complex situations in sports to be quantified in an objective, reliable and valid manner [9].

Video analysis research in rugby union ranges from ‘what’ studies that identify key events (for example, the number of tackles in a match) to ‘how’ studies that describe key events (for example, how tackle technique relates to injury). Furthermore, the scope of these studies ranges from in-depth case studies [10,11,12] to the broad analysis of commercial databases [13,14,15], and from studies that apply sophisticated statistical modelling that accounts for context [16,17,18] to studies that only report the frequencies of events [19,20,21]. The sizes and types of samples used in these studies also vary considerably, a finding similar to that in Association Football (for a review, see Mackenzie and Cushion, 2013 [22]).

Because of the many different types of studies using video analysis in rugby, it is difficult to standardise the techniques. This makes it difficult to compare studies and translate the findings to a real-world setting. In response, a critical review of the literature on video analysis research in rugby union was performed. The aim was to critically appraise the studies to determine how the findings can be used to inform practice.

Main text

Methods

The purpose of a critical review is to provide an extensive overview of the literature, as well as a critical evaluation of its quality [23]. It goes beyond a narrative review of the studies by including a degree of analysis [23]. The methods of a systematic review were used in the literature search [24, 25] to ensure that all the available relevant literature was included in the review [23]. The critical evaluation of the literature was performed using a series of polar (yes/no) questions (Table 1). In line with the purpose of the review, these questions related to the methodology of the studies, namely, how the researchers used video analysis methods to collect data and answer specific research questions. Polar questions were used to provide a level of objectivity to the evaluation.

Systematic literature search

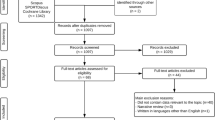

Specific search terms were used to identify peer-reviewed articles in three electronic databases: SCOPUS, PubMed and Web of Science. The search combined ‘rugby union’ in the title, keywords or abstract with any of ‘performance analysis’, ‘video analysis’, ‘tackle performance’, ‘video’, ‘notational analysis’, ‘match performance’, ‘match analysis’, ‘time motion analysis’, ‘attacking strategies’, ‘defensive strategies’, ‘performance indicators’, ‘injury risk’, ‘injury mechanisms’ or ‘injury rates’ anywhere in the text. The time frame for the literature search was any study published before 2017. The search results from the three databases were merged, and any duplicates were removed.
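The search strategy above can be sketched as a Boolean query plus a merge-and-deduplicate step. This is a minimal illustration under stated assumptions: the generic `AND`/`OR` syntax stands in for each database's own field tags, the title/keywords/abstract restriction on ‘rugby union’ and the pre-2017 date limit are not encoded, and the helper names and the duplicate-matching rule (normalised titles) are hypothetical rather than the procedure the authors used.

```python
import re

# Terms taken from the search description; the Boolean syntax is generic and
# would need adapting to each database's own field tags.
PRIMARY_TERM = "rugby union"
SECONDARY_TERMS = [
    "performance analysis", "video analysis", "tackle performance", "video",
    "notational analysis", "match performance", "match analysis",
    "time motion analysis", "attacking strategies", "defensive strategies",
    "performance indicators", "injury risk", "injury mechanisms", "injury rates",
]


def build_query(primary: str, secondary: list[str]) -> str:
    """Join the primary term with an OR-group of the secondary terms."""
    any_secondary = " OR ".join(f'"{term}"' for term in secondary)
    return f'"{primary}" AND ({any_secondary})'


def normalise_title(title: str) -> str:
    """Lower-case a title and collapse punctuation/whitespace so copies match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def merge_and_deduplicate(*result_sets: list[dict]) -> list[dict]:
    """Merge records from several databases, keeping the first copy of each title."""
    seen: set[str] = set()
    merged: list[dict] = []
    for results in result_sets:
        for record in results:
            key = normalise_title(record["title"])
            if key not in seen:
                seen.add(key)
                merged.append(record)
    return merged


if __name__ == "__main__":
    print(build_query(PRIMARY_TERM, SECONDARY_TERMS))
    # Hypothetical records: the first two differ only in case and punctuation.
    scopus = [{"title": "Tackle injuries in professional rugby union"}]
    pubmed = [
        {"title": "Tackle Injuries in Professional Rugby Union."},
        {"title": "Science and rugby union"},
    ]
    print(len(merge_and_deduplicate(scopus, pubmed)))  # 2
```

Matching on normalised titles is a simple choice for the sketch; matching on DOIs, where available, would usually be more robust.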

The inclusion criteria were as follows: the article needed to use video analysis to quantitatively study rugby union match footage and to be published, in English, in a peer-reviewed journal. Inclusion criteria were applied at the title, abstract and full-text levels, and any article not meeting the criteria was omitted from the review. Inter-rater reliability testing was conducted for this stage of the literature search: a second author applied the inclusion criteria to the merged database at the title, abstract and full-text levels. Where there were any disparities between the two resulting databases, the reasons for including or excluding the relevant papers were discussed, and the studies were either included in or excluded from the final database.
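The text does not say which inter-rater statistic was used. One common option for two raters' include/exclude decisions is Cohen's kappa, which corrects observed agreement for the agreement expected by chance. The sketch below applies it to hypothetical screening decisions; treat it as an illustration of the idea, not as the authors' analysis.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal proportions.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    if expected == 1:
        return 1.0  # both raters used one identical category for every item
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical include/exclude decisions for ten screened titles.
    first = ["in", "in", "out", "out", "in", "out", "out", "out", "in", "out"]
    second = ["in", "out", "out", "out", "in", "out", "out", "in", "in", "out"]
    print(round(cohens_kappa(first, second), 2))  # 0.58
```

Here the raters agree on 8 of 10 titles (0.80), chance agreement is 0.52, and kappa is about 0.58, noticeably lower than raw agreement.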

The reference lists of the papers that met the inclusion criteria were checked, and any relevant papers were added to a separate database. Inclusion criteria were applied to this database, at abstract and full-text levels. The papers that met the criteria were merged into the original database. The outcome of this process was a total of 92 papers (Fig. 1).

Critical evaluation

Data related to the aims, outcomes, variables investigated, sample sizes and types, and key findings of the studies were extracted from the identified papers. The identified papers were categorised into three groups based on their outcomes: physical demands, performance and injury. Seventeen studies did not fall under these groups and were reviewed under the category ‘other’. Within these categories, the studies were further categorised into ‘what’ and ‘how’ studies, based on the research question. Studies that identified the frequencies of specific variables were categorised as ‘what’ studies; these typically used broad statistical analyses of large databases. Studies that identified the associations between different variables to describe how an event occurred were categorised as ‘how’ studies. Grouping the studies into these two sub-categories allowed for more homogeneous comparisons during the review process.

Classifying the studies into these two groups also allowed different requirements to be set for the different types of video analysis study. Video analysis research involves analysing the frequencies or counts of specific variables, termed key performance indicators (KPIs) [26]. Typically, ‘what’ studies identify KPIs associated with a specific outcome. The primary requirement for ‘what’ studies is that the samples used are sufficiently large for the findings to be generalisable. For the findings to be considered useful, it is also important that the samples are representative of the general rugby population, including multiple teams, seasons or levels of play. The crucial requirement for ‘how’ studies is that contextual variables are included in their analyses. The purpose of these studies is to understand how an outcome occurs. As rugby is a dynamic sport, any finding must provide or account for the context in which it occurred to be applicable [27]. This leads to the final requirement for the studies. With the view that video analysis research should be progressive, the research questions of ‘how’ studies should be based on the findings of ‘what’ studies, and the practical applications of the research should be based on the findings of ‘how’ studies (Fig. 2).

With these requirements in mind, a number of polar questions (Table 1) were developed to review the studies. The questions were drawn from previous literature [22] or developed specifically for this review, and addressed areas of criticism of performance analysis research [8, 22, 27]. The first set of questions evaluated the sample selected for the study, and the second the provision of definitions for the variables used in the analysis. The third set evaluated the inclusion of variables that provide context to the event analysed. A common criticism of video analysis is its tendency towards reductionism [8, 28, 29]: if the actions identified and described in these studies are analysed in isolation, the context in which they occur can be lost. Several approaches have been suggested for providing context [8, 27, 29, 30], all of which involve identifying patterns between the event identified in the study and specific task and environmental variables (contextual variables) related to the analysed event or match. The questions used in this review evaluated the number of contextual variables included in studies. The final question identified whether or not the studies provided practical applications for their findings.

Statistical analysis

The results of the critical evaluation were analysed using descriptive statistics, to describe and compare the frequency of occurrences.
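The descriptive analysis amounts to counting ‘yes’ answers to each polar question and expressing them as percentages of the studies in a sub-category. The sketch below assumes a hypothetical record per study; the field names are illustrative and do not reproduce Table 1. As a check against the review's own counts, `percent(30, 35)` gives about 85.7, which is reported as 86%.

```python
from dataclasses import dataclass


@dataclass
class StudyAppraisal:
    """Hypothetical record of polar (yes/no) answers for one reviewed study."""
    sub_category: str            # "what" or "how"
    context_categories: int      # number of contextual-variable categories included
    practical_application: bool  # did the study state practical applications?


def percent(count: int, total: int) -> float:
    """Count as a percentage of total (0.0 for an empty group)."""
    return 100.0 * count / total if total else 0.0


def summarise(appraisals: list[StudyAppraisal], sub_category: str) -> dict[str, float]:
    """Percentage of studies in one sub-category answering 'yes' to each question."""
    group = [a for a in appraisals if a.sub_category == sub_category]
    n = len(group)
    return {
        "at_least_one_context": percent(sum(a.context_categories >= 1 for a in group), n),
        "two_or_more_context": percent(sum(a.context_categories >= 2 for a in group), n),
        "practical_application": percent(sum(a.practical_application for a in group), n),
    }


if __name__ == "__main__":
    studies = [
        StudyAppraisal("how", 1, True),
        StudyAppraisal("how", 2, True),
        StudyAppraisal("how", 3, False),
        StudyAppraisal("how", 2, True),
        StudyAppraisal("what", 0, False),
    ]
    print(summarise(studies, "how"))
    print(round(percent(30, 35)))  # 86, the review's 30 of 35 'how' studies
```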

Results

A total of 92 studies were included in the review. The papers were categorised into three groups (i.e., performance, physical demands, injury) based on the outcomes of the paper (Fig. 3). Seventeen papers did not fall into these categories; the outcomes of these papers included the development and comparisons of tools [31,32,33,34,35,36], touchline safety [37], decision-making behaviours [38], and the effects of law changes [39,40,41,42,43], professionalism [44,45,46], and time [47] on various match characteristics.

Sample size and selection

Three out of 21 performance-related studies in the sub-category ‘what’ had sample sizes larger than 100 games. Forty-seven percent of these studies included data from multiple competitions or seasons, and 38% of the samples were from one-off tournaments that do not occur annually. Tables 2 and 3 provide a summary of the sample sizes and types used in the studies.

Definitions of variables

Fifty percent of the studies provided full definitions for the variables used in the analyses. In 19% of the studies, the variables were partially defined, 5% made reference to definitions published elsewhere and 26% provided insufficient definitions. A summary of the operational definitions provided can be found in Table 4.

Context

Fewer than half of the ‘how’ studies (16 out of 35) included match-related contextual variables in their analyses. Twenty-six percent of the studies included variables related to opposition strength, 8% variables related to match location and 6% variables related to environmental conditions.

Nineteen of the 35 ‘how’ studies (54%) included more than three event-related contextual variables in their analysis. Eighty-four percent of performance-related studies and 64% of injury studies included variables related to the outcome of the event. All studies in the physical demands category included, and differentiated between, variables related to playing position, compared with 47% of performance studies and 45% of injury studies. Seventy-three percent of injury-related studies and 59% of performance studies included variables that describe the playing situation. A summary of the use of contextual variables can be found in Tables 5 and 6.

Practical application of studies

Eighty-one percent of studies identified in this review provided practical applications for their findings. Differentiating between ‘what’ and ‘how’ studies showed that 76% of ‘what’ studies provided practical applications compared to 86% of ‘how’ studies. Table 7 provides a summary of these results.

Discussion

Video analysis of match footage is a common tool for providing researchers with objective, quantifiable data about match performance [7]. Although video analysis studies are often grouped together, there is a large disparity in the type of data gathered and the level of analysis conducted within these studies, which range from broad statistical analyses of commercial databases to more in-depth case studies [48]. As a result, the findings of these studies have been challenged because of their questionable generalisability and the reductionist nature of some of the analyses [22, 27, 29, 30]. In response, a critical review of video analysis research in rugby union was performed, appraising the samples used, the definitions provided for the variables analysed, the inclusion of contextual variables in the analysis and the provision of practical applications for the findings.

Sample size and selection

There was a large range in the sample sizes of the studies identified in this review. Sample sizes ranged from three studies with samples of fewer than five matches [11, 49, 50] to four studies analysing over 300 matches [5, 14, 51, 52]. Two of the studies with samples of fewer than five matches [49, 50] were not purely video analysis studies and involved taking blood samples from the players, which may account for the small samples. The other study, a case study [11], was categorised as a ‘how’ study and required the analyst to code each match manually. The four studies with large samples were all categorised as ‘what’ studies and had access to large commercial or team databases for their analyses. However, differentiating the studies into ‘what’ and ‘how’ studies did not substantially reduce the range in sample size. Within the ‘what’ sub-category, 13 studies had samples of fewer than 10 games, in contrast to the four studies with samples of over 300 games. Similarly, within the ‘how’ sub-category, samples ranged from one study with a sample of 35 min of four games [49] to two studies that analysed 125 matches [53, 54]. There is, therefore, a need for consensus on the sample size that would accurately reflect the rugby union population.

Not all studies described their samples in terms of the number of matches analysed. Some described their samples by the number of players investigated, and some by the number of events analysed (Table 2). Interestingly, there was an association between the three outcome categories identified in this review and the way the sample was described. For example, ‘physical demands’ studies predominantly described their samples in terms of players analysed, ‘what’ performance studies referred to the number of matches analysed, and ‘how’ performance studies focused largely on the number of events. The injury studies described their samples by matches in the ‘what’ sub-category and by events in the ‘how’ sub-category. This suggests that any consensus statement would need to differentiate between the different categories and/or sub-categories.

A requisite of ‘what’ studies is that the samples are sufficiently large to allow general claims to be made from their results. In the context of 129 games in an English Premiership season, or 135 in a Super Rugby season, only 3 of the 21 performance studies (14%) and 3 of the 6 injury studies (50%) investigated 100 matches or more. One third of the performance studies specifically analysed matches from the Rugby World Cup, a competition that consists of only 48 matches. Only one of these studies [55] analysed all 48 matches, in comparison with two studies with samples of five matches [56, 57]. Furthermore, the effects of changes over time [44,45,46,47] and of differences between competitions [58, 59] on match characteristics call into question the validity of analysing one-off tournaments, and highlight the importance of including multiple seasons or competitions in samples to improve the generalisability of the results. However, 10 out of 21 performance studies included only one season or competition in their sample, and 8 studies were from one-off tournaments. These findings question the generalisability of the samples, and subsequently the results. The results from the injury-related ‘what’ studies are more positive, with 67% of studies including data from multiple seasons or competitions, and none analysing one-off tournaments.

In ‘how’ studies, it was more appropriate to describe samples by the number of events analysed than by the number of matches. Although all 17 studies in this sub-category reported the number of matches analysed, with the exception of George et al. (2015) [53] the studies did not analyse entire matches; instead, they analysed specific events and outcomes within matches that were relevant to the aims of the particular study. There is a large range in the number of events analysed in these studies, with some studies reporting samples of 20–30 events [11, 12, 60] and others more than 5000 events [61,62,63]. However, because the frequency of different events differs within matches, the statistical power of a sample cannot simply be assessed by the number of events analysed. For example, at first glance, a study of 8653 events [62] would seem to have more statistical power than a study of 362 events [54]. The first study analysed rucks and the second line breaks. In a match, there are approximately 142 rucks [62], compared with an average of three line breaks [54]. The line-break study therefore coded 125 matches to identify and analyse 362 line-break events [54], whereas the ruck study analysed 8563 rucks in 60 matches [62]. Thus, although the line-break study analysed far fewer events, it analysed more than twice as many matches. This makes it challenging to assess the individual merits of each study. Reporting sample size calculations may provide a more suitable basis on which to evaluate sample sizes [22]. Unfortunately, only one of the 35 ‘how’ studies identified in this review reported a sample size calculation [61].
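The arithmetic behind the ruck and line-break comparison can be made explicit. Using the per-match rates quoted above (about 142 rucks and about three line breaks per match), the sketch estimates how many matches must be coded to reach a given number of events. It works with expected counts only and ignores match-to-match variation, so it is a rough illustration rather than a power calculation; the function names are hypothetical.

```python
import math


def events_per_match(events: int, matches: int) -> float:
    """Average number of coded events per match in a sample."""
    return events / matches


def matches_needed(target_events: int, events_per_match_rate: float) -> int:
    """Matches to code so that the expected event count reaches the target."""
    return math.ceil(target_events / events_per_match_rate)


if __name__ == "__main__":
    # Line-break study: 362 events from 125 matches, roughly 2.9 per match.
    print(round(events_per_match(362, 125), 1))  # 2.9
    # Matches needed for 362 events at the approximate rates quoted in the text.
    print(matches_needed(362, 3))    # 121 matches at ~3 line breaks per match
    print(matches_needed(362, 142))  # 3 matches at ~142 rucks per match
```

The same 362 events would require roughly 121 matches of line-break coding but only about 3 matches of ruck coding, which is why event counts alone say little about how representative a sample is.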

Studies in the physical demands category aim to identify and describe the physical demands of playing a rugby union match. A study of the match-to-match variability of high-speed activities in football [64] showed that a sample of at least 80 players would provide sufficient statistical power to make meaningful inferences about the physical demands of match play. If that number is taken as the threshold for a sufficiently powered sample, only three ‘physical demands’ studies had samples larger than 80 players, suggesting that 76% of the studies were underpowered.
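A sample size calculation of the kind recommended above can be as simple as the normal-approximation formula for estimating a mean to a chosen precision, n = (z × SD / E)^2, where E is the desired half-width of the confidence interval. The sketch below is a generic illustration, not the method used in the football study cited above; the standard deviation and margin in the usage example are hypothetical values chosen only to show the calculation.

```python
import math
from statistics import NormalDist


def sample_size_for_mean(sd: float, margin: float, confidence: float = 0.95) -> int:
    """Observations needed for a confidence interval of half-width `margin` around a mean.

    Normal approximation: n = (z * sd / margin) ** 2, rounded up, where z is the
    two-sided critical value for the chosen confidence level.
    """
    if sd <= 0 or margin <= 0 or not 0 < confidence < 1:
        raise ValueError("sd and margin must be positive; confidence must be in (0, 1)")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil((z * sd / margin) ** 2)


if __name__ == "__main__":
    # Hypothetical: between-player SD of 100 m in high-speed running distance,
    # estimated to within +/-20 m at 95% confidence.
    print(sample_size_for_mean(sd=100, margin=20))  # 97
```

Halving the margin roughly quadruples the required sample, which is one reason a stated target precision makes sample sizes easier to evaluate than a raw count of players.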

Definitions of variables

There was a lack of clarity and transparency in the definitions of the variables used in the studies. Only 50% of studies fully defined the variables used in their analysis, and 26% provided insufficient definitions. As a result, it is difficult for other researchers to compare or replicate the results of these studies [22]. This problem is compounded by differences in how variables are defined. For example, one study [65] used the International Rugby Board’s definition of a tackle, in which the ball carrier needs to be brought to ground for a tackle to occur [66], whereas other studies have defined a tackle as any attempt to stop or impede a ball carrier, whether or not the ball carrier is brought to ground [5, 61]. Although these studies all analyse tackles, they are not always analysing the same event, so comparisons between their findings need to be interpreted with caution. This review highlights the need for a consensus among researchers using video analysis in rugby union on the operational definitions of variables used in rugby research.
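The effect of competing tackle definitions can be shown with a toy example. The sketch codes the same hypothetical contact events under two simplified rules, one requiring the ball carrier to be brought to ground and one counting any attempt to stop or impede the carrier. The event fields and rule functions are illustrative assumptions, not an established coding scheme.

```python
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class ContactEvent:
    """Hypothetical coded contact between defender(s) and the ball carrier."""
    attempt_to_stop: bool            # a defender tried to stop or impede the carrier
    carrier_brought_to_ground: bool  # the carrier ended up on the ground


def tackle_if_grounded(event: ContactEvent) -> bool:
    # Simplified "brought to ground" rule, in the spirit of the IRB definition.
    return event.attempt_to_stop and event.carrier_brought_to_ground


def tackle_if_attempted(event: ContactEvent) -> bool:
    # Simplified "any attempt to stop or impede" rule, grounded or not.
    return event.attempt_to_stop


def count_tackles(events: list[ContactEvent], rule: Callable[[ContactEvent], bool]) -> int:
    """Number of events classified as tackles under the given rule."""
    return sum(rule(e) for e in events)


if __name__ == "__main__":
    footage = [
        ContactEvent(True, True),
        ContactEvent(True, False),   # carrier stays on their feet
        ContactEvent(True, False),
        ContactEvent(True, True),
        ContactEvent(False, False),  # no defender involved
    ]
    print(count_tackles(footage, tackle_if_grounded))   # 2
    print(count_tackles(footage, tackle_if_attempted))  # 4
```

The same footage yields two tackles under one definition and four under the other, so tackle rates from studies using different definitions are not directly comparable.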

Context

Particularly in ‘how’ studies, it is important that the frequencies of KPIs are not analysed in isolation, but that the context in which the KPI occurs is included in the analysis. Several approaches have been suggested for providing context to KPIs: ecological system dynamics [8, 27], a constraints-based approach [29] or temporal pattern analysis [7]. All of these approaches involve identifying patterns between the identified KPIs and specific task and environmental variables (contextual variables) related to the analysed event or match.

The first group of variables provides context to the match that was analysed. The relative strength of the opposition, the location of the match or the environmental conditions may alter a team’s tactics and, therefore, affect the frequency of a KPI [54, 67]. In an analysis of line breaks, den Hollander and colleagues found that teams created more line breaks when playing against weaker opposition than against equally ranked or stronger opposition [54]. Similarly, George and colleagues (2015) found that teams created more line breaks, missed fewer tackles and scored more points playing at home than playing away [53]. Yet only 9 out of 35 studies (26%) accounted for opposition strength, 8% differentiated between match locations, and only 2 studies (6%), 1 on physical demands [68] and 1 on injury [69], included environmental conditions in their analysis. Information on environmental conditions, such as rainfall, can be difficult to gather retrospectively: weather websites usually report the amount of precipitation on the day of the match, but not the specific timing or consistency of the rainfall. Overall, the inclusion of variables that give context to the match was poor. Over half the studies reviewed did not include any match-related variables in their analysis, and only three studies included two of the three categories of match variables.
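Including a match-related contextual variable can be as simple as summarising a KPI within each level of that variable instead of pooling all matches. The sketch uses hypothetical line-break counts and hypothetical field names (`opposition`, `line_breaks`). It is descriptive only; an inferential model (for example, Poisson regression with opposition strength as a covariate) would be needed to test such a difference.

```python
from collections import defaultdict


def mean_kpi_by_context(matches: list[dict], kpi: str, context: str) -> dict[str, float]:
    """Mean KPI count per match within each level of a contextual variable."""
    totals: dict[str, int] = defaultdict(int)
    counts: dict[str, int] = defaultdict(int)
    for match in matches:
        level = match[context]
        totals[level] += match[kpi]
        counts[level] += 1
    return {level: totals[level] / counts[level] for level in totals}


if __name__ == "__main__":
    # Hypothetical per-match line-break counts, tagged by opposition strength.
    sample = [
        {"opposition": "weaker", "line_breaks": 5},
        {"opposition": "weaker", "line_breaks": 3},
        {"opposition": "stronger", "line_breaks": 2},
        {"opposition": "stronger", "line_breaks": 1},
    ]
    pooled = sum(m["line_breaks"] for m in sample) / len(sample)
    print(pooled)  # 2.75, which hides the difference below
    print(mean_kpi_by_context(sample, "line_breaks", "opposition"))
    # {'weaker': 4.0, 'stronger': 1.5}
```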

Results were more positive for variables that provide context to the event analysed. The majority of studies included more than three out of a possible four categories, and only one study did not include any contextual variables [70]. The category of context included seemed to depend on the type of study: the majority of performance studies included the match or event outcome in their analysis, most injury studies included variables describing the playing situation, and every physical demands study included playing position.

To be useful, KPIs need to relate to an outcome [30]. For example, comparing the frequencies of KPIs between successful and unsuccessful events, injury and non-injury events, or different outcomes of a phase of play enables the researcher to determine whether a variable is specifically related to the event or occurs in general. In this way, one outcome acts as a control for another, which also allows researchers to apply more sophisticated probability statistics [54]. The comparison of outcomes was common in both performance (84%) and injury (64%) studies, but less common in physical demands studies. Only three of the seven physical demands studies compared match or event outcomes, and only one of those related to the distances players cover in a match. Interestingly, this study found no differences in the physical movement patterns between winning and losing teams [71].
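Comparing a KPI across two outcomes, with one outcome acting as the control, can be summarised with a 2 × 2 table. One common choice, shown here as an assumption rather than the method of any study cited, is the odds ratio with a Wald 95% confidence interval; the cell counts in the usage example are hypothetical.

```python
import math


def odds_ratio_with_ci(a: int, b: int, c: int, d: int, z: float = 1.96) -> tuple[float, float, float]:
    """Odds ratio and Wald confidence interval for a 2 x 2 table.

    a: KPI present, outcome of interest    b: KPI present, comparison outcome
    c: KPI absent, outcome of interest     d: KPI absent, comparison outcome
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all cells must be positive; consider a continuity correction")
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of log(OR)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


if __name__ == "__main__":
    # Hypothetical tackles: a technique KPI present/absent vs injury/no injury.
    or_, lo, hi = odds_ratio_with_ci(a=20, b=180, c=10, d=290)
    print(f"OR = {or_:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```

An interval that excludes 1 suggests the KPI is associated with the outcome of interest rather than occurring equally in both, which is the kind of comparison the paragraph above describes.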

There are clear physiological differences in the match demands of forwards and backline players in rugby union [67], so it is not surprising that all of the physical demands studies differentiated between playing positions. Studies have also shown differences in skill demands between playing positions [15, 19, 54]. Van Rooyen (2012) reported differences in the number of tackles made by forwards and backs, with back-row forwards attempting and completing more tackles than any other positional group [15]. Positional differences have also been found in the number of line breaks made, with backline players more likely to complete line breaks than forwards [19, 54], and with significant differences in the types of skills used by inside and outside backs in the build-up play leading to line breaks [54]. Despite these findings highlighting differences in skill demands between positions, only 47% of performance studies and 45% of injury studies differentiated between playing positions.

The playing situation category accounts for variables that describe the situation in which the event occurred. These can be variables describing the interactions between teammates and opposition players, for example, in studies that analysed the interactions between attacking and defensive line shapes and movements when identifying key variables [17, 54, 62, 72]. Similarly, some studies analysed the interactions between opposing players in contact [16, 60, 61, 73, 74]. As this category was specific to events, and physical demands studies mainly described the demands of entire matches rather than events, only performance and injury studies were reviewed in this category. Most of these studies attempted to account for the playing situation, with 73% of injury studies and 59% of performance studies including variables related to it.

These findings show that most of the ‘how’ studies reviewed attempted to provide context for their results, although more attention could be given to variables related to the match context. The authors also acknowledge that there are restrictions and limitations on including too many variables in an analysis. Many journals have word-count restrictions, which limit the number of variables a study can report on. A study may, therefore, have initially included variables in its analysis but omitted them from the publication because the findings were non-significant. Authors may also divide their study into multiple papers, and unless these are read together, the context of their findings may be lost. Despite these limitations, all of the ‘how’ studies reviewed included at least one contextual variable in their analyses, and 30 of the 35 papers included at least two types of contextual variables.

Practical application of studies

A primary purpose of video analysis is to provide individuals involved in sport with objective and reliable information that can be used to inform practice [26]. It is therefore not surprising that 93% of studies gave practical applications for their findings. However, it is debatable whether all of these findings, particularly those from ‘what’ studies, provide practical information [22]. For example, Ortega and colleagues identified the differences between winning and losing teams in 58 Six Nations games [75]. They found that winning teams scored more points and lost fewer set-pieces than losing teams [75]. The practical application of their findings was that ‘teams can use the information to set goals for players and teams in both practices and matches’ [75]. As most teams already aim to outscore the opposition and to win all of their set-pieces, this practical application offers very little useful information to coaches. From a research perspective, however, the study identified three areas for future studies to investigate: how teams score points, win line-outs and win scrums. A series of studies by Wheeler and colleagues [72, 76] analysed the skills that led to tackle breaks, an outcome identified as an effective means of scoring points in rugby union [72]. The key skills associated with tackle breaks were fending and evasive manoeuvres, so the researchers suggested that coaches develop evasive agility training programmes to improve their players’ ability in these areas. Because these ‘how’ studies investigated specific skills and events in more depth, the authors were able to provide more specific practical applications for those directly involved in rugby.

To facilitate the transfer and adoption of research outcomes into practice, it is suggested that the practical applications provided by video analysis research come from the findings of ‘how’ studies, and that the results of ‘what’ studies inform the research questions of ‘how’ studies.

Conclusions

The aim of this paper was to provide a critical review of video analysis research in rugby union. The review identified a large disparity in the type of data gathered in the studies and the level of statistical analysis conducted within them. The studies were categorised based on their outcome (‘physical demands’, ‘performance’ or ‘injury-related’) and the type of analysis (‘what’ or ‘how’) to facilitate more homogeneous comparisons during the review process.

There was a large range in the sample sizes of the studies. The review raised concerns over the generalisability of the samples, and therefore the findings, in the majority of the studies reviewed, and recommends that researchers adopt the practice of sample size calculations to ensure that studies are adequately powered.

Half of the studies appraised did not fully define the variables used in their analyses, and the same variable was sometimes defined differently across studies. These findings highlight the need for a consensus on the definitions of variables used in rugby union research, similar to the injury definitions for rugby union [77], so that the findings from different studies are more comparable.

Despite a common criticism that video analysis research tends towards reductionism [8, 22, 27], all of the ‘how’ studies reviewed included contextual variables in their analysis, with 86% including two or more categories.

Finally, an aim of video analysis research is to provide information to coaches and practitioners to inform practice [26]. This information should be useful to a coach, answering not only the question of what happens in a match but also how it happens [77]. To assist in this process, it is suggested that researchers in this field begin with research questions that identify what happens, and then use these novel findings to develop research questions that explain how it happens. This process will allow researchers to provide coaches with practical information, based on the results of ‘how’ studies, that is useful and applicable to developing practice.

Abbreviations

- KPIs: Key performance indicators

References

Lindsay A, Draper N, Lewis J, Gieseg SP, Gill N. Positional demands of professional rugby. Eur J Sport Sci. 2015;15:480–7.

Arnold P, Grice M. The economic impact of rugby world cup 2015. In: London; 2016.

World Rugby. World rugby: consolidated financial statement. Financial year ended 31 December 2016. 2017;1–24. Retrieved from https://www.worldrugby.org/documents/annual-reports?lang=en.

Brown JC, Verhagen E, Viljoen W, Readhead C, Van Mechelen W, Hendricks S, et al. The incidence and severity of injuries at the 2011 South African Rugby Union (SARU) Youth Week tournaments. S Afr J Sport Med. 2012;24:49–54.

Quarrie KL, Hopkins WG. Tackle injuries in professional rugby union. Am J Sports Med. 2008;36:1705–16.

Mellalieu S, Trewartha G, Stokes K. Science and rugby union. J Sports Sci. 2008;26:791–4.

Borrie A, Jonsson GK, Magnusson MS. Temporal pattern analysis and its applicability in sport: an explanation and exemplar data. J Sports Sci. 2002;20:845–52.

Vilar L, Araújo D, Davids K, Button C. The role of ecological dynamics in analysing performance in team sports. Sports Med. 2012;42:1–10.

Hughes MD, Franks IM. Notational analysis of sport: systems for better coaching and performance in sport. 2nd ed. Oxon: Psychology Press; 2004.

Longo UG, Huijsmans PE, Maffulli N, Denaro V, De Beer JF. Video analysis of the mechanisms of shoulder dislocation in four elite rugby players. J Orthop Sci. 2011;16:389–97.

Jackson RC, Baker JS. Routines, rituals, and rugby: case study of a world class goal kicker. Sport Psychol. 2001;15:48–65.

Correia V, Araújo D, Davids K, Fernandes O, Fonseca S. Territorial gain dynamics regulates success in attacking sub-phases of team sports. Psychol Sport Exerc. 2011;12:662–9.

Smart D, Hopkins WG, Quarrie KL, Gill N. The relationship between physical fitness and game behaviours in rugby union players. Eur J Sport Sci. 2011;14(Suppl 1):S8–17.

Vaz L, Mouchet A, Carreras D, Morente H. The importance of rugby game-related statistics to discriminate winners and losers at the elite level competitions in close and balanced games. Int J Perform Anal Sport. 2011;11:130–41.

van Rooyen M. A statistical analysis of tackling performance during international rugby union matches from 2011. Int J Perform Anal Sport. 2012;12:517–30.

Hendricks S, Matthews B, Roode B, Lambert M. Tackler characteristics associated with tackle performance in rugby union. Eur J Sport Sci. 2014;14:753–62.

Hendricks S, Roode B, Matthews B, Lambert M. Defensive strategies in rugby union. Percept Mot Skills. 2013;117(1):65–87.

Bremner S, Robinson G, Williams MD. A retrospective evaluation of team performance indicators in rugby union. Int J Perform Anal Sport. 2013;13:15.

Diedrick E, van Rooyen MK. Line break situations in international rugby. Int J Perform Anal Sport. 2011;11:522–34.

Laird P, Lorimer R. An examination of try scoring in rugby union: a review of international rugby statistics. J Chem Inf Model. 2004;4:9.

van Rooyen MK, Diedrick E, Noakes TD. Ruck frequency as a predictor of success in the 2007 rugby World Cup tournament. Int J Perform Anal Sport. 2010;10:33–46.

Mackenzie R, Cushion C. Performance analysis in football: a critical review and implications for future research. J Sports Sci. 2013;31:639–76.

Grant MJ, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Inf Libr J. 2009;26:91–108.

Egger M, Jüni P, Bartlett C, Holenstein F, Sterne J. How important are comprehensive literature searches and the assessment of trial quality in systematic reviews? Empirical study. Health Technol Assess. 2003;7:1–3.

Holt NL, Tamminen KA. Improving grounded theory research in sport and exercise psychology: further reflections as a response to Mike Weed. Psychol Sport Exerc. 2010;11:405–13.

O’Donoghue P. Research methods for sports performance analysis. Oxon: Routledge; 2010.

McGarry T, Anderson DI, Wallace SA, Hughes MD, Franks IM. Sport competition as a dynamical self-organizing system. J Sports Sci. 2002;20:771–81.

Bishop D. An applied research model for the sport sciences. Sports Med. 2008;38:253–63.

Glazier PS. Game, set and match? Substantive issues and future directions in performance analysis. Sports Med. 2010;40:625–34.

Garganta J. Trends of tactical performance analysis in team sports: bridging the gap between research, training and competition. Rev do Porto Ciencias do Desporto. 2009;9:81–9.

Calder JM, Durbach IN. Decision support for evaluating player performance in rugby union. Int J Sports Sci Coach. 2015;10:21–37.

Duthie G, Pyne D, Hooper S. The reliability of video based time motion analysis. J Hum Mov Stud. 2003;44:259–71.

James N, Mellalieu SD, Jones NMP. The development of position-specific performance indicators in professional rugby union. J Sports Sci. 2005;23:63–72.

Lim E, Lay B, Dawson B, Wallman K, Anderson S. Predicting try scoring in super 14 rugby union—the development of a superior attacking team scoring system. Int J Perform Anal Sport. 2011;11:464–75.

Reardon C, Tobin DP, Tierney P, Delahunt E. Collision count in rugby union: a comparison of micro-technology and video analysis methods. J Sports Sci. 2017;35:2028–34.

Quarrie KL, Hopkins WG. Evaluation of goal kicking performance in international rugby union matches. J Sci Med Sport. 2015;18:195–8.

Fuller CW, Jones R, Fuller AD. Defining a safe player run-off zone around rugby union playing areas. Inj Prev. 2015;21:309–13.

Correia V, Araújo D, Craig C, Passos P. Prospective information for pass decisional behavior in rugby union. Hum Mov Sci. 2011;30:984–97.

Spencer K, Brady H. Examining the effects of a variation to the ruck law in Rugby Union. J Hum Sport Exerc. 2015;10:550–62.

Thomas GL, Wilson MR. Playing by the rules: a developmentally appropriate introduction to Rugby Union. Int J Sports Sci Coach. 2015;10:413–23.

Vahed Y, Kraak W, Venter R. The effect of the law changes on time variables of the South African Currie Cup Tournament during 2007 and 2013. Int J Perform Anal Sport. 2014;14:866–83.

Williams J, Hughes M, O’Donoghue P. The effect of rule changes on match and ball in play time in rugby union. Int J Perform Anal Sport. 2005;5:1–11.

Vahed Y, Kraak W, Venter R. Changes on the match profile of the South African Currie Cup tournament during 2007 and 2013. Int J Sports Sci Coach. 2016;11:85–97.

Quarrie KL, Hopkins WG. Changes in player characteristics and match activities in Bledisloe Cup rugby union from 1972 to 2004. J Sports Sci. 2007;25:895–903.

Eaves S, Hughes M, Lamb K. The consequences of the introduction of professional playing status on game action variables in international northern hemisphere rugby union football. Int J Perform Anal Sport. 2005;5:58–86.

Eaves SJ, Hughes M. Patterns of play of international rugby union teams before and after the introduction of professional status. Int J Perform Anal Sport. 2003;3:103–11.

Sasaki K, Furukawa T, Murakami J, Shimozono H, Nagamatsu M, Miyao M, et al. Scoring profiles and defense performance analysis in rugby union. Int J Perform Anal Sport. 2007;7:46–53.

Carling C, Wright C, Nelson LJ, Bradley PS. Comment on “Performance analysis in football: a critical review and implications for future research”. J Sports Sci. 2014;32:2–7.

Deutsch MU, Maw GJ, Jenkins D, Reaburn P. Heart rate, blood lactate and kinematic data of elite colts (under-19) rugby union players during competition. J Sports Sci. 1998;16:561–70.

Jones MR, West DJ, Harrington BJ, Cook CJ, Bracken RM, Shearer DA, et al. Match play performance characteristics that predict post-match creatine kinase responses in professional rugby union players. BMC Sports Sci Med Rehabil. 2014;6:38.

Vaz L, van Rooyen M, Sampaio J. Rugby game-related statistics that discriminate between winning and losing teams in IRB and super twelve close games. J Sports Sci Med. 2010;9:51–5.

Kemp SPT, Hudson Z, Brooks JHM, Fuller CW. The epidemiology of head injuries in English professional rugby union. Clin J Sport Med. 2009;18:227–34.

George TM, Olsen PD, Kimber NE, Shearman JP, Hamilton JG, Hamlin MJ. The effect of altitude and travel on rugby union performance: analysis of the 2012 super rugby competition. J Strength Cond Res. 2015;29:3360–6.

den Hollander S, Brown J, Lambert M, Treu P, Hendricks S. Skills associated with line breaks in elite rugby union. J Sports Sci Med. 2016;15:501–8.

Villarejo D, Palao JM, Ortega E, Gomez-Ruano MÁ, Kraak W. Match-related statistics discriminating between playing positions during the men’s 2011 Rugby World Cup. Int J Perform Anal Sport. 2015;15:97–111.

van Rooyen M, Lambert MI, Noakes TD. A retrospective analysis of the IRB statistics and video analysis of match play to explain the performance of four teams in the 2003 Rugby World Cup. Int J Perform Anal Sport. 2006;6:57–72.

Boddington M, Lambert M. Quantitative and qualitative evaluation of scoring opportunities by South Africa in World Cup Rugby 2003. Int J Perform Anal Sport. 2004;4:32–5.

Pulling C, Stenning M, van Rooyen MK. Offloads in rugby union: northern and southern hemisphere international teams. Int J Perform Anal Sport. 2015;15:217–28.

Jones NMP, Mellalieu SD, James N, Moise J. Contact area playing styles of northern and southern hemisphere international rugby union teams. In: O’Donoghue P, Hughes MD, editors. Perform anal sport IX. VI; 2005. p. 119–24.

Wilson BD, Quarrie KL, Milburn PD, Chalmers DJ. The nature and circumstances of tackle injuries in rugby union. J Sci Med Sport. 1999;2:153–62.

Fuller CW, Ashton T, Brooks JHM, Cancea RJ, Hall J, Kemp SPT. Injury risks associated with tackling in rugby union. Br J Sports Med. 2010;44:159–67.

Wheeler KW, Mills D, Lyons K, Harrinton W. Effective defensive strategies at the ruck contest in rugby union. Int J Sports Sci Coach. 2013;8:481–92.

McIntosh AS, Savage TN, McCrory P, Fréchède BO, Wolfe R. Tackle characteristics and injury in a cross section of rugby union football. Med Sci Sports Exerc. 2010;42:977–84.

Gregson W, Drust B, Atkinson G, Salvo VD. Match-to-match variability of high-speed activities in premier league soccer. Int J Sports Med. 2010;31:237–42.

van Rooyen M, Yasin N, Viljoen W. Characteristics of an “effective” tackle outcome in Six Nations rugby. Eur J Sport Sci. 2014;14:123–9.

International Rugby Board. Laws of the game. In: Rugby union; 2014.

Duthie GM, Pyne DB, Hooper SL. Applied physiology and game analysis of rugby union. Sports Med. 2003;33:973–91.

Roberts SP, Trewartha G, Higgitt RJ, El-Abd J, Stokes KA. The physical demands of elite English rugby union. J Sports Sci. 2008;26:825–33.

Montgomery C, Blackburn J, Withers D, Tierney G, Moran C, Simms C, et al. Mechanisms of ACL injury in professional rugby union: a systematic video analysis of 36 cases. Br J Sports Med. 2016;0:1–8.

McIntosh AS, McCrory P, Comerford J. The dynamics of concussive head impacts in rugby and Australian rules football. Med Sci Sports Exerc. 2000;32:1980–4.

Schoeman R, Coetzee DF. Time-motion analysis: discriminating between winning and losing teams in professional rugby. South African J Res Sport Phys Educ Recreat. 2014;36:167–78.

Wheeler KW, Askew CD, Sayers MG. Effective attacking strategies in rugby union. Eur J Sport Sci. 2010;10:237–42.

Tierney GJ, Lawler J, Denvir K, McQuilkin K, Simms CK. Risks associated with significant head impact events in elite rugby union. Brain Inj. 2016;30:1350–61.

Hendricks S, O’Connor S, Lambert MI, Brown JC, Burger N, Fie SM, et al. Video analysis of concussion injury mechanism in under-18 rugby. BMJ Open Sport Exerc Med. 2016;2:e000053.

Ortega E, Villarejo D, Palao JM. Differences in game statistics between winning and losing rugby teams in the six nations tournament. J Sports Sci Med. 2009;8:523–7.

Wheeler KW, Sayers MGL. Contact skills predicting tackle-breaks in rugby union. Int J Sports Sci Coach. 2009;4:535–44.

Fuller CW, Molloy MG, Bagate C, Bahr R, Brooks JHM, Donson H, et al. Consensus statement on injury definitions and data collection procedures for studies of injuries in rugby union. Clin J Sport Med. 2007;17:177–81.

Austin D, Gabbett T, Jenkins D. Repeated high-intensity exercise in professional rugby union. J Sports Sci. 2011;29:1105–12.

Austin D, Gabbett T, Jenkins D. The physical demands of Super 14 rugby union. J Sci Med Sport. 2011;14:259–63.

Deutsch MU, Kearney GA, Rehrer NJ. Time-motion analysis of professional rugby union players during match-play. J Sports Sci. 2007;25:461–72.

Hendricks S, Karpul D, Nicolls F, Lambert M. Velocity and acceleration before contact in the tackle during rugby union matches. J Sports Sci. 2012;30:1215–24.

McLean DA. Analysis of the physical demands of international rugby union. J Sports Sci. 1992;10:285–96.

Lacome M, Piscione J, Hager JP, Bourdin M. A new approach to quantifying physical demand in rugby union. J Sports Sci. 2014;32:290–300.

Hendricks S, Karpul D, Lambert M. Momentum and kinetic energy before the tackle in rugby union. J Sports Sci Med. 2014;13:557–63.

van Rooyen M, Rock K, Prim SK, Lambert MI. The quantification of contacts with impact during professional rugby matches. Int J Perform Anal Sport. 2008;8:113–26.

Prim S, van Rooyen MK, Lambert M. A comparison of performance indicators between the four South African teams and the winners of the 2005 Super 12 Rugby competition. What seperates top from bottom? Int J Perform Anal Sport. 2006;5:126–33.

Bishop L, Barnes A. Performance indicators that discriminate winning and losing in the knockout stages of the 2011 Rugby World Cup. Int J Perform Anal Sport. 2013;13:149–59.

McKenzie AD, Holmyard DJ, Docherty D. Quantitative analysis of rugby: factors associated with success. J Hum Mov Stud. 1989;17:101–13.

Smart DJ, Gill ND, Beaven CM, Cook CJ, Blazevich AJ. The relationship between changes in interstitial creatine kinase and game-related impacts in rugby union. Br J Sports Med. 2008;42:198–201.

Duthie GM, Pyne DB, Marsh DJ, Hooper SL. Sprint patterns in rugby union players during competition. J Strength Cond Res. 2006;20:208.

Virr JL, Game A, Bell GJ, Syrotuik D. Physiological demands of women’s rugby union: time-motion analysis and heart rate response. J Sports Sci. 2014;32:239–47.

Schoeman R, Coetzee D, Schall R. Positional tackle and collision rates in super rugby. Int J Perform Anal Sport. 2015;15:1022–36.

Duthie G, Pyne D, Hooper S. Time motion analysis of 2001 and 2002 super 12 rugby. J Sports Sci. 2005;23:523–30.

Hartwig TB, Naughton G, Searl J. Motion analyses of adolescent rugby union players: a comparison of training and game demands. J Strength Cond Res. 2011;25:966–72.

van Rooyen M, Noakes TD. An analysis of the movements, both duration and field location, of 4 teams in the 2004 Rugby World Cup. Int J Perform Anal Sport. 2006;6:40–56.

Lacome M, Piscione J, Hager JP, Carling C. Analysis of running and technical performance in substitute players in international male rugby union competition. Int J Sports Physiol Perform. 2016;11:783–92.

Coetzee B, van den Berg PH. Game analysis of the eight top ranked tertiary institution rugby teams in South Africa. J Hum Mov Stud. 2007;52:49–63.

Jones NMP, Mellalieu SD, James N. Team performance indicators as a function of winning and losing in rugby union. Int J Perform Anal Sport. 2004;4:61–71.

van Rooyen MK, Noakes TD. Movement time as a predictor of success in the 2003 Rugby World Cup Tournament. Int J Perform Anal Sport. 2006;6:30–9.

Vaz L, Vasilica I, Kraak W, Arrones LS. Comparison of scoring profile and game related statistics of the two finalist during the different stages of the 2011 rugby world cup. Int J Perform Anal Sport. 2015;15:967–82.

Evans L, O’Donoghue P. The effectiveness of the chop tackle in elite and semi-professional rugby union. Int J Perform Anal Sport. 2013;6:602–11.

Kraak WJ, Welman KE. Ruck-play as performance indicator during the 2010 six nations championship. Int J Sports Sci Coach. 2014;9:525–38.

Sewry N, Lambert M, Roode B, Matthews B, Hendricks S. The relationship between playing situation, defence and tackle technique in rugby union. Int J Sports Sci Coach. 2015;10:1115–28.

Lacome M, Piscione J, Hager JP, Carling C. Fluctuations in running and skill-related performance in elite rugby union match-play. Eur J Sport Sci. 2016;17:132–43.

Roberts SP, Trewartha G, England M, Stokes KA. Collapsed scrums and collision tackles: what is the injury risk? Br J Sports Med. 2015;49:536–40.

Quarrie KL, Hopkins WG, Anthony MJ, Gill ND. Positional demands of international rugby union: evaluation of player actions and movements. J Sci Med Sport. 2013;16:353–9.

Villarejo D, Palao JM, Toro EO. Match profiles for establishing position specific rehabilitation for rugby union players. Int J Perform Anal Sport. 2013;13:567–71.

Vaz L, Carreras D, Kraak W. Analysis of the effect of alternating home and away field advantage during the Six Nations Rugby Championship. Int J Perform Anal Sport. 2012;12:593–607.

Jackson RC. Pre-performance routine consistency: temporal analysis of goal kicking in the Rugby Union World Cup. J Sports Sci. 2003;21:803–14.

Fuller CW, Brooks JHM, Cancea RJ, Hall J, Kemp SPT. Contact events in rugby union and their propensity to cause injury. Br J Sports Med. 2007;41:862–7.

Taylor AE, Kemp S, Trewartha G, Stokes KA. Scrum injury risk in English professional rugby union. Br J Sports Med. 2014;48:1066–8.

Usman J, McIntosh AS, Quarrie K, Targett S. Shoulder injuries in elite rugby union football matches: epidemiology and mechanisms. J Sci Med Sport. 2015;18:529–33.

Hendricks S, O’Connor S, Lambert M, Brown J, Burger N, Fie SM, et al. Contact technique and concussions in the south African under-18 Coca-Cola craven week rugby tournament. Eur J Sport Sci. 2015;15:557–64.

Burger N, Lambert MI, Viljoen W, Brown JC, Readhead C, Hendricks S. Tackle technique and tackle-related injuries in high-level South African Rugby Union under-18 players: real-match video analysis. Br J Sports Med. 2016;50:932–8.

Funding

The authors would like to acknowledge the National Research Foundation of South Africa and the Frank Foreman grant for support during the study.

Availability of data and materials

The database is available as Additional file 1 to this manuscript.

Author information

Contributions

The first and last authors designed the research question and drafted the first version of the manuscript. The first author conducted the entire literature search, critically reviewed the papers and performed the statistical analyses. The second and third authors contributed substantially to all sections of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable

Competing interests

The authors, Steven den Hollander, Ben Jones, Michael Lambert and Sharief Hendricks, declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1: Rugby union video analysis research database. (XLSX 73 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

den Hollander, S., Jones, B., Lambert, M. et al. The what and how of video analysis research in rugby union: a critical review. Sports Med - Open 4, 27 (2018). https://doi.org/10.1186/s40798-018-0142-3

DOI: https://doi.org/10.1186/s40798-018-0142-3