Abstract

Background

The importance of optimal and/or superior vision for participation in high-level sports remains the subject of considerable clinical research interest. Here, we examine the vision and visual history of elite/near-elite cricketers and rugby-league players.

Methods

Stereoacuity (TNO), colour vision, and distance (with/without pinhole) and near visual acuity (VA) were measured in two cricket squads (elite/international-level, female, n = 16; near-elite, male, n = 23) and one professional rugby-league squad (male, n = 20). Refractive error was determined, and details of any correction worn and visual history were recorded.

Results

Overall, 63% had their last eye examination within 2 years. However, some had not had an eye examination for 5 years or had never had one (near-elite cricketers 30%; rugby-league players 15%; elite cricketers 6%). Comparing our results for all participants to published data for young, optimally corrected, non-sporting adults, distance VA was ~ 1 line of letters worse than expected. Adopting α = 0.01, the deficit in distance VA was significant, but only for elite cricketers (p < 0.001) (near-elite cricketers, p = 0.02; rugby-league players, p = 0.03). Near VA did not differ between subgroups or relative to published norms for young adults (p > 0.02 for all comparisons). On average, near stereoacuity was better than in young adults, but only in elite cricketers (p < 0.001; p = 0.03, near-elite cricketers; p = 0.47, rugby-league players). On-field visual issues were present in 27% of participants and mostly (in 75% of cases) comprised uncorrected ametropia. Some cricketers (near-elite 17.4%; elite 38%) wore refractive correction during play, but no rugby-league player did. Some individuals with prescribed correction chose not to wear it when playing.

Conclusions

Aside from near stereoacuity in elite cricketers, the basic visual abilities we measured were not better than equivalent, published data for optimally corrected adults; 20–25% exhibited sub-optimal vision, suggesting that the clearest possible vision might not be critical for participation at the highest levels in the sports of cricket or rugby league. Although vision could be improved in a sizeable proportion of our sample, the impact of correcting these, mostly subtle, refractive anomalies on playing performance is unknown.

Key Points

- Around two thirds of elite- and near-elite-level rugby-league players and cricketers had their eyes examined in the past 2 years, but 20–25% had either never had an eye examination or had had their last examination ≥ 5 years ago.

- Twenty to twenty-five per cent had an anomaly of vision in their habitual playing state, which, in ~ 75% of cases, was due to uncorrected refractive error; such errors are easily treatable using contact lenses.

- Findings suggest that the basic, clinically measured vision of high-level cricket and rugby-league players is frequently sub-optimal. However, the extent to which correction of these, mostly subtle, anomalies of refraction would lead to improved, on-field performance is not known.

Background

Vision plays a key role in interceptive tasks that are ubiquitous features of human action and interaction in our world, for example when shaking hands or crossing the street. Interceptive tasks are also a key component of many sports, such as when catching and/or striking a ball.

While some minimum level of vision is obviously important for participation in most sports, the requirement to optimise retinal image clarity in order to maximise sporting performance is contested. There are claims that vision is superior in elite athletes compared to the general population [1,2,3,4,5,6] or in elites compared to sub-elite athletes or novices [7,8,9]. Most of these claims have been made for sports which feature a small, fast moving target such as in baseball [5]. Also, vision measures and oculomotor behaviour (e.g. where and/or when the eyes are looking) may differ between elite individuals from different sports [10, 11] or between players in different positions in the same sports (e.g. fielders and pitchers in baseball [12], hitters and pitchers in baseball [13]; but see [14]). One interpretation of these findings is that excellent vision has contributed to the potential for ‘eliteness’. This is supported by studies suggesting (i) that vision can be trained (e.g. in terms of where and/or when to look) or possibly even improved (i.e. made more acute) [2, 14,15,16,17], (ii) that better vision is associated with better on-field performance [18, 19], and (iii) that vision training can enhance performance in the field [16, 20].

However, counter evidence suggests that optimal and/or superior vision is not required to fulfil the potential for eliteness. Firstly, there are examples of elite-sporting individuals whose vision was sub-optimal. Mansoor Ali Khan (1941–2011) became the captain of the Indian cricket team at the age of 21 having lost sight in one eye when aged 16 [21], and there are also questions about the vision of the legendary Babe Ruth [22]. Secondly, low to moderate levels of retinal-image blur, simulating uncorrected myopia, may not necessarily impact negatively on performance in sporting tasks [23, 24], even in tasks where the visual demands are high [25,26,27]. Also, the uptake of eye care amongst elite-level athletes may be low; hence, suboptimal vision (e.g. due to uncorrected refractive error) may well exist amongst some elite sportspeople [28,29,30,31,32]. Finally, a number of studies have concluded that claims that vision can be trained or improved remain unproven [18, 33,34,35].

As outlined above, the evidence that high-level sports players need to have superior vision is equivocal. The importance of ‘vision’ depends not only on the sport, and in some cases, on the position played in that sport (e.g. bowling or batting or fielding in cricket), but also on precisely which aspect of vision is being considered (e.g. which of the many different tests of vision are used to evaluate vision). While visual acuity (the ability to resolve static, black letters of decreasing size on a white background) is the most well-known measure of acuteness of vision, there are many measures that reflect different visual abilities; these include stereoacuity, visual acuity for dynamic targets (‘dynamic VA’) [4, 12, 36], contrast sensitivity [37], and positional acuity [38], to name but a few. Different measures of vision may reflect, to a greater or lesser extent, the demands associated with particular tasks on the field of play. Further, if they exist at all, differences in vision between elites and sub-elites or novices may not emerge as differences in raw visual performance measures, but they might instead arise from differences in how vision is used. That is, elites, either through experience or through training, may adopt a better strategy than sub-elites for knowing where and when to look in order to access the most useful information at the most appropriate time [39]. For example, in a study of anticipation and visual search behaviour by Savelsbergh et al. [40] in which expert soccer goalkeepers were classified as successful or unsuccessful based on performance on a film-based test of anticipation, the ‘successful’ experts appeared to spend longer periods of time fixating on the non-kicking leg compared with non-successful experts. In putting, Vickers [41] found that better golfers exhibited longer fixation durations on the ball and target and fewer fixations on the club and surface. 
In cricket, Land and McLeod’s study [42] led them to conclude that a cricket player’s eye movement strategy contributes to skill in the game (see also [43]). The distinction between visual ability measures and the use of different visual strategies has been compared to the hardware versus software (respectively) distinction in computing [44]. Adopting this analogy, many believe that while changes to the hardware (improvements in visual ability, e.g. visual acuity and stereoacuity) are not achievable (but see [20]), changes to the software (e.g. improvements in visual strategies) may be both possible and effective [18]. It is possible that either ‘hardware’ or ‘software’ differences in vision contribute to the potential for sporting eliteness or that neither does. It is also possible that the two might interact so that, for example, reduced vision (e.g. due to significant uncorrected refractive error) may limit a player’s ability to employ a particular visual strategy.

Based upon the computing analogy outlined above, investigations into whether vision differs between elites and sub-elites or novices can be divided into comparisons of visual abilities and comparisons of visual strategy. The current study is concerned with the former. Surprisingly, there have been no studies of eye care and basic (i.e. standard) visual abilities amongst UK-based high-level sportspeople. Here, we examine vision and visual history in UK-based elite/sub-elite players from two sports with very different visual demands, cricket and rugby league. The impact of sub-optimal vision may be greater in cricketers due to the demands of the game, which features a small, often very fast moving object (the cricket ball). We gathered information about the visual history of our sports players, examined basic visual abilities in high-level cricketers and rugby-league players, and compared these visual measures with published normative values from young adults. Our aim was to understand the importance of optimally corrected vision for high-level participation in these sports and to look for evidence of better-than-normal vision. To our knowledge, this is the first study to measure the basic vision abilities and visual history of athletes who play the popular UK sports of cricket and rugby league.

Methods

Participants

Between September 2014 and October 2015, we conducted clinical visual assessments in 59 high-level sports players. Our rugby-league player sample consisted of 20 males from a ‘Super-League’ (professional) team. There were two cricketing samples. The first consisted of 23 male, near-elite-level players who represent the best players from universities in the north of England and who formed the Leeds/Bradford Marylebone Cricket Club. Several of these cricketers had played for periods with English county teams and together this group had played as a team against first-class, English county cricket teams. The second sample of cricketers was female and consisted of 16 members of England’s international women’s cricket team. Age details are provided in Table 1.

Protocols and Clinical Data Gathered

We gathered data from the participant groups described above. The results from our participants were compared with published data from young non-sporting individuals (see the ‘Statistical Analyses’ section below).

We measured the monocular (each eye) and binocular visual acuities (VA) at distance (6 m) and near (40 cm). Vision was measured in the habitual ‘sports participation’ state, i.e. with optical correction if worn when playing sports. Distance vision measures were taken using a logMAR chart [45, 46], and near vision was measured using an MNRead chart [47], which has a similar scoring system to logMAR distance VA measurement. We also determined whether distance VA in each eye improved when participants viewed through a pinhole (1 mm) because any improvement would suggest that the existing habitual refractive status was non-optimal [48]. We assessed stereoacuity using the TNO stereotest (version no. 14, 2014 TNO Stereotest. Boca Raton, FL: Richmond Products). When only one of the two plates at the next level was correctly identified, stereoacuity (in seconds of arc, ″) was taken as the average of the two levels. Colour vision was assessed using the Ishihara test (24-plate edition, Kanehara & Co. Ltd., Tokyo, Japan), and we determined the type and extent of any refractive error using an auto-refractor (Shin-Nippon, NVision-K 5001, Shin-Nippon Corporation, Japan).
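The stereoacuity scoring rule described above can be illustrated with a minimal sketch. It assumes the standard six graded disparity levels of the TNO test (480″ to 15″), which are not listed in the text, and it assumes that "average" means the arithmetic mean of the two levels. The function name and input format are hypothetical and are not taken from the study.

```python
# TNO graded disparity levels in seconds of arc, coarsest to finest.
# Assumption: the standard six levels; the text does not list them.
TNO_LEVELS = [480, 240, 120, 60, 30, 15]


def tno_stereoacuity(correct_by_level):
    """Return stereoacuity in seconds of arc, or None if no level was passed.

    correct_by_level maps each disparity level to the number of plates
    (0, 1 or 2) identified correctly at that level.
    """
    passed = None
    for level in TNO_LEVELS:
        n_correct = correct_by_level.get(level, 0)
        if n_correct == 2:
            # Both plates identified: this level is passed, try the next one.
            passed = level
            continue
        if n_correct == 1 and passed is not None:
            # Only one of the two plates at the next level was identified:
            # score the arithmetic mean of the two levels (assumed).
            return (passed + level) / 2
        break
    return passed
```

For example, a participant who identifies both plates down to 60″ but only one plate at 30″ would be scored at 45″ under these assumptions.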

We recorded the frequency of eye examinations, whether glasses or contact lenses were worn while playing, and whether there was any history of eye injury or eye disease. Using a questionnaire, participants were asked about the use of an eye patch as a child and any previous participation in eye/vision-training programmes. The complete list of questions is given in Table 2. Our aim was to gather detailed information about the clinically measured visual function, the perceived level of vision, and the visual history of each participant. All but two questionnaires (both from the rugby-league sample) were completed, though not all questions were answered by every participant (Table 2).

Statistical Analyses

For each sub-group, we used z tests to compare near and distance VA, and stereoacuity against published values from young adults. We also compared performance on each of these tests between the participant sub-groups using t tests. For both z and t tests, we adopted an α-criterion of 0.01.
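As an illustration of the comparison against published norms, the following is a minimal sketch of a one-sample z test. It assumes a two-sided test and assumes that the standard deviation is taken from the published normative data; the text states neither. The function name, the example values, and the norm mean and SD are hypothetical placeholders, not study data.

```python
import math
from statistics import NormalDist, mean


def one_sample_z_test(sample, norm_mean, norm_sd):
    """Two-sided z test of a sample mean against a published norm.

    norm_sd is treated as the known population SD (an assumption).
    """
    n = len(sample)
    standard_error = norm_sd / math.sqrt(n)
    z = (mean(sample) - norm_mean) / standard_error
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


ALPHA = 0.01  # criterion stated in the text

# Hypothetical logMAR distance VA values for one squad (not study data).
example_va = [-0.10, 0.00, -0.04, 0.02, -0.08, 0.06, -0.02, 0.00]

# Hypothetical published norm: mean and SD in logMAR (placeholders).
z, p = one_sample_z_test(example_va, norm_mean=-0.13, norm_sd=0.07)
print(f"z = {z:.2f}, p = {p:.4f}, significant at alpha: {p < ALPHA}")
```

With a criterion of 0.01, a sub-group with p = 0.02 or p = 0.03 would not be classed as significantly different from the norm, which is how the sub-group results are reported.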

Ethics, Consent, and Permissions

The study was approved by the ethics committee at the University of Bradford, and the tenets of the Declaration of Helsinki were followed. Written, informed consent was obtained from all participants included in the study.

Results

Clinically Measured Level of Visual Function

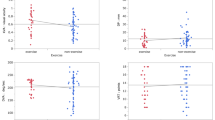

Stereoacuity and VA data are presented in Table 1 and Fig. 1. There were no statistically significant differences in VA (distance or near) between the three subgroups (p > 0.01 for all comparisons). In all sub-groups, the average distance VA was ~ 1 line of letters worse than the level which the published literature indicates can be expected in young, optimally corrected, non-sporting adults [49], i.e. those who have undergone an eye examination and are wearing the optical prescription that maximises their visual acuity. However, the deficit we identified in distance VA was only statistically significant for the elite cricketers (Table 1). The modest levels of distance VA mainly reflect the fact that a number of individuals had under- or uncorrected refractive error (see below). This is consistent with the finding that pinhole viewing improved VA by ≥ 1 line in 8.5% of participants (Table 1). Levels of near VA were consistent with those expected in young adults [50] (Table 1, Fig. 1).

Fig. 1 a Distance visual acuity (VA) with habitual prescription worn for sports, if any. Circles represent data for individual subjects. These data are jittered on the x-axis to enhance legibility. Pale and darker diamond symbols represent the average and median values, respectively (see Table 1). b Same as for a, except for near VA. c Same as for a, except for TNO stereoacuity. Results from previous studies of near stereoacuity in young adults are also shown. From left to right, these data are from [52] (median), [52] (average), [51] (average), [53] (average), [54] (average), and [53] (median). There were no differences in distance VA (a), near VA (b), or stereoacuity (c) between the three samples that participated in this study (all p > 0.10).

Overall, the median near stereoacuity was 30″. Stereoacuity did not differ significantly between the three sub-groups (Table 1, Fig. 1). Stereoacuity in elite cricketers was significantly better (p < 0.001) than 50″, which is a consistently reported average value for TNO stereoacuity in young adults [51,52,53,54,55]. However, stereoacuity in the near-elite cricketers (p = 0.03) and rugby-league players (p = 0.47) was not better than the 50″ criterion. While median stereoacuity was good in all sub-groups (Table 1), three rugby-league players (15%) and two near-elite cricketers (~ 9%) had stereoacuity worse than 60″. These two cricketers were ‘lower-order’ batters, meaning that they are less-able at batting than some of their teammates and that their primary contribution to the team is in the form of bowling and fielding. None of the elite cricketers had stereoacuity worse than 60″.

Colour vision testing suggested normal performance in all participants except two near-elite cricketers. Thus, overall, 4.7% of our males had red/green colour deficiency compared to an expected 8% in European Caucasian males [56], who comprised the overwhelming majority of our males. This discrepancy may be due to our modest sample size, but it is consistent with findings that colour vision deficits are under-represented in high-level cricketers in England [57] and with another study [58] in which fewer first-grade cricketers than expected were found to be colour-vision deficient. None of the female cricketers were found to be colour deficient, and this is consistent with the much lower prevalence of red/green deficiency expected in females compared to males [56].

Residual Visual Issues

Residual visual issues (i.e. uncorrected, possibly unknown, anomalies of vision/visual system that could impact upon play) were found in 27.1% (16/59) of participants. Amongst the sub-groups, this varied from 18.8 to 35% (Table 2). Of the 16 individuals, most (n = 12, 75%) had uncorrected (92%) or under-corrected (8%) refractive error, and the pinhole test revealed improved VA in 5 of these 12 cases. In 6 of the 12 cases, the last eye examination had taken place > 5 years previously or no examination had ever taken place. In 4 of the 12 cases, correction for mild refractive error (up to 1 dioptre of myopia or astigmatism) was worn but not during play.
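The proportions quoted above can be checked with a short tally. The counts are those reported in the text, except that the split of 11 uncorrected and 1 under-corrected case is inferred here from the quoted 92% and 8% of 12; the helper name `pct` is hypothetical.

```python
# Counts reported in the text.
TOTAL_PARTICIPANTS = 59
residual_issues = 16
refractive = 12

# Inferred from "92%" and "8%" of the 12 refractive cases (an assumption).
uncorrected, under_corrected = 11, 1


def pct(part, whole, digits=1):
    """Percentage of whole, rounded to the given number of decimal places."""
    return round(100 * part / whole, digits)


print(pct(residual_issues, TOTAL_PARTICIPANTS))  # share of all participants
print(pct(refractive, residual_issues))          # refractive share of issues
print(pct(uncorrected, refractive, 0), pct(under_corrected, refractive, 0))
```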

No cases of undetected ocular pathology existed, but one player (near-elite bowler) had significant, known ocular pathology that impacted on vision during play (Table 3). Three cases of binocular vision anomalies existed (Table 3).

Uptake of Eye Care

Sixty-three per cent of all participants had their last eye examination within the past 2 years. However, there were substantial differences between the groups (Table 2); ~ 30% of near-elite cricketers had their last eye examination > 5 years before or had never had one, compared to 15% of rugby-league players and 6% of elite cricketers. Across the full sample, the figure was 18.6% (Table 2). In the questionnaire, only ~ 50% of the cricketers (elite and near-elite) and ~ 40% of the rugby-league players indicated that they regularly undergo eye examinations at least every 2 years (Table 3). Overall, 24.6% (Table 3) said they have eye examinations at ≥ 5 yearly intervals.

Refractive Correction Worn During Play

Refractive correction during play was reported by 17.4% of near-elite cricketers and 38% of elite cricketers, but by none of the rugby-league players (Table 3). In all except one case, this consisted of contact lenses. Amongst cricketers, 62.2% wore (non-corrective) sunglasses in bright playing conditions (Table 3). No participant had undergone refractive surgery.

Perceived Level of Visual Function

Overall, 84.2% of players rated their vision as ‘good’ or ‘excellent’ (Table 3). Around 20% of the near-elite cricketers rated their vision as only ‘moderate’ or ‘poor’, compared to 11 and 6% of the rugby-league players and elite cricketers, respectively (Table 3). The majority of players (5/8, 62.5%) who rated their vision as ‘moderate’ or ‘poor’ had uncorrected/under-corrected refractive error.

Although over 90% of elite cricketers rated their vision as ‘good’ or ‘excellent’, ~ 30% reported visual difficulties during play, compared with only 16.7% of rugby-league players and 8.7% of near-elite cricketers (Table 3). The discrepancy between the proportion of players with residual visual issues (see above) and the proportion who report visual problems during play for the elite cricketers (18.8 versus 30%, respectively), near-elite-level cricketers (35 versus 8.7%), and rugby-league players (25 versus 16.7%) suggests that perceived level of vision may not be a reliable guide to clinically measured vision and vice-versa (Tables 2 and 3).

History of Eye Disease, Eye Accidents, and Vision Training

Table 3 contains details of sports-related eye injuries (one rugby-league player), ocular disease (one near-elite cricketer), and vision training (two near-elite cricketers) to improve sports performance.

Discussion

To our knowledge, there have been no previous studies of eye care and basic (i.e. standard) visual abilities amongst UK-based high-level sports players in the games of cricket or rugby league. This work represents a preliminary attempt to understand whether having optimal and/or superior vision is important for elite-level participation in these sports. Our sample size is modest, which reduces the power of our statistical analyses. Having said this, groups of elites are by their very nature small because they represent the best of their sports, e.g. our cricketing elites were, at the time, more or less the entire England women’s cricket squad. We did not gather equivalent data in a control group of non-sporting, age-matched individuals. Instead, we compared the results in our sports players with published results from young, optimally corrected, non-sporting adults. Thus, while these comparisons are between samples of broadly similar age, they are not specifically age-matched nor of the same sample size. We believe this was the better approach because published data on young adults generally come from studies with larger sample sizes, which we saw no need to replicate.

Is ‘Excellent’ Vision a Prerequisite for Participation in Elite Cricket/Rugby League?

Our results show that our samples of elite and near-elite sports players do not have basic visual characteristics which could be considered excellent/superior. Aside from the possible exception of near stereoacuity, we did not find superior visual function relative to published values for young, optimally corrected adults [48,49,50,51,52,53,54,55]. The latter finding is at odds with studies of sports people in which the average level of vision (e.g. distance VA) was found to be excellent [5, 59, 60], but it is supported by studies which, like the present study, found that the average level of vision was no better than would be expected compared to the levels found in young adults (e.g. [61, 62]). On a similar note, we are not the first to find a significant proportion of high-performance athletes with either sub-optimal VA or other visual issues [28, 30]. For example, one study [63] reported that 28% of their sample of elite sportspersons had distance VA poorer than + 0.10logMAR using the refractive correction habitually worn for sports.

Since uncorrected/under-corrected refractive error in young adults is more likely to impact on distance vision than on near vision [64], it follows that there might not be an adverse effect on stereoacuity taken at near (40 cm). Indeed, this is what we found, with very good median near stereoacuity in all sub-groups. Previous studies have found better-than-average stereoacuity in elite ball-sports athletes (i.e. baseball) [5, 8, 59], though whether this applies to both distance and near stereoacuity remains unclear [5, 65]. The possible importance of stereoacuity for sports is strengthened by the fact that individuals with naturally occurring, poor stereopsis exhibit poorer catching ability [66] and much reduced learning on a catching task by comparison with those with good stereopsis [67]. Interestingly, with some notable exceptions [21, 22], there are few reports of individuals with poor stereopsis reaching elite levels in sports, though of course this could be the result of children with a binocular disorder not being encouraged to participate in sports. If good or excellent stereoacuity is associated with elite-level sports, it is not clear what the nature of this association is, since disparity processing is temporally slow compared with that of luminance [68]. It is also not clear whether the better stereopsis that we and others [5, 8, 59] have reported in elite sportspeople leads to faster motion-in-depth perception. There appear to be two mechanisms for motion-in-depth perception, one based on sensitivity to retinal disparity and the other based on a comparison of motion signals in the two eyes. The relative importance of these two mechanisms for judging motion-in-depth is still debated [69, 70], but the mechanism comparing motion signals is thought to be independent of the one that uses binocular disparity. Thus, good motion in depth perception is not necessarily dependent on good stereopsis.

Uptake of Eye Examinations and Prevalence of Visual Disorders

Twenty to twenty-five per cent of our participants reported either never having an eye examination or that their examination interval was ≥ 5 years. This is consistent with a study [63] conducted in the USA between 1992 and 1995 on junior Olympic competitors and in other elites in which 25% had never had an eye examination. Given that we also found that 20–25% of the players had a vision/visual system anomaly in the habitual sports visual status, it is tempting to conclude that simply uncovering and addressing these anomalies through more frequent eye examinations would have a significant impact on the on-field vision of a sizeable proportion of players. This is partly supported by our finding that most residual visual anomalies consisted of uncorrected refractive error in individuals who had not had an eye examination within the past 5 years. However, while some players only became aware of the fact that their vision could be improved as a result of the examination we conducted, this was not the case for all of the players. Notably, four players (25% of those with residual visual anomalies) were aware they required refractive correction, but they chose not to wear it while playing. Also, another four players had anomalies that were non-refractive and which may not be treatable or their impact on vision during play was uncertain. Thus, while greater uptake of eye examinations will improve on-field VA in a selection of players, the proportion likely to benefit is probably considerably smaller than the overall 20–25% in whom we, and others [63], found anomalies.

Beckermann and Hitzeman [63] found that 29% of their sample had visual symptoms. We found that overall 17.5% reported vision difficulties when playing, with the highest proportion of these coming from the elite cricketers. Curiously, this group had the lowest proportion of residual visual anomalies found during clinical examination (Table 2). Discrepancies between perceived and clinically measured deficits in vision may arise because clinical measures do not reflect the visual demands on-the-field or because some reduction in clinically measured visual function can exist without impacting on perceived vision on the field (or both).

Other Ways to Study the Nature of the Relationship Between Vision and Elite Sports

The current study has been concerned with understanding whether superior vision is important for elite sports participation, and as such, we examined the basic visual attributes and the visual history of elite/near-elite cricketers and rugby-league players. Other ways to examine the relationship between vision and high-level sports participation/performance include artificially generating a visual problem in visual normals. Mann and colleagues [25, 26] found that low-to-moderate levels of simulated myopia did not affect cricket batting, and there are other examples of resistance to defocus in other interceptive tasks [71] and in other sporting tasks (e.g. basketball [23], golf [24]). One interpretation of such findings, and of ours, is that while good or excellent vision may be desirable for elite sports, it may not be essential. Another possibility is that a deficit in basic visual abilities might be overcome by adopting different visual strategies or by gathering the critical information from different cues. An example of the latter is that depth information is recoverable from retinal disparity but may also be available via different means if the disparity signal is reduced (see the ‘Is ‘Excellent’ Vision a Prerequisite for Participation in Elite Cricket/Rugby League?’ section). Investigating whether different visual strategies (e.g. where the eyes are looking, stability of fixation) protect against visual loss due to, for example, uncorrected myopia can be carried out by artificially degrading the retinal image quality in visual normals (e.g. [25, 26]). However, given that it may take time for the visual system to adapt to the deficit it is experiencing, it is likely to prove more informative to conduct such studies in elite-sporting individuals who have been unable to adapt following an acute reduction in vision (e.g. following an accident, [72]) or conversely in those who have reached elite levels in their sports despite habitual, sub-optimal vision.
Only a small number of such studies have previously been conducted [21, 22].

To fully understand the nature of the relationship between vision and elite sports, it is important to determine the visual demands during play. Given the dynamic environment of many sports, clinical visual measures gathered in static testing conditions do not reflect the visual demands experienced during play. Hence, researchers have measured performance on non-standard vision tasks (e.g. dynamic VA [4, 12, 36, 73]) and other visual-mediated, cognitive tasks (e.g. [74]). However, different sports can have very different visual demands (e.g. golf versus squash), and even within the same sports, the demands can differ markedly (e.g. bowling versus batting). Identifying the visual demands of different sporting tasks presents a challenge in understanding how vision could limit or enhance the potential for sporting ‘eliteness’. While there have been attempts to study this topic [15, 59, 75, 76], it deserves considerably more research attention. Indeed, it is notable that while there is a growing number of online, web-based training programmes claiming to improve on-field performance (e.g. ‘EyeGym’, ‘Nike Sparq’, ‘Neurotracker’), for the most part, the literature to support their use is scarce [77].

A different, though not incompatible, view of the contribution of good vision to eliteness is that visual abilities as measured during standard clinical testing play a lesser role and that the contribution of vision is to facilitate perceptual-cognitive expertise that emerges via information pickup from anticipatory cues [18]. This is apparent from differences in where and when elite players and elite-sports officials look during play (e.g. [39, 40, 41, 42, 43, 78, 79]), in the ability to recall patterns of play (e.g. [80]), and in the ability to perform successfully with limited information (e.g. [81]). There is a large volume of literature to support the contribution of perceptual-cognitive expertise to elite sports; specifically, there may not be better vision in elites, just better use of vision (reviewed in [39]). This is compatible with our findings and those of others (e.g. [61, 62, 63]) who have concluded that, for the most part, vision as measured in clinical settings is not superior in elite-level sportspeople. However, the importance of optimising vision in elite sportspeople is far from settled because there have been positive outcomes from recent vision training studies, as well as claims that the benefits transfer to better performance on the field [20]. One area of study that is notably missing from the literature concerns intra-individual improvements in performance following the correction of visual anomalies. Such studies need to be carefully designed because of the risk of contamination from placebo effects, but they may provide a more direct answer to the question concerning the importance of optimum vision for elite sporting performance. Other studies that may also prove useful, and which are similarly absent from the literature, would involve comparing visual strategies in those with different habitual levels of basic visual abilities (e.g. due to uncorrected refractive error). Studies of this nature may reveal the extent to which visual deficits might be fully or partially compensated for through the use of different visual strategies.

Conclusions

With the exception of near stereoacuity (which was superior in our elite cricketers), vision was not superior in our modestly sized sample of high-level sports players when compared to published values for young adults, and for some measures, it was slightly worse. Moreover, since 20–25% of our sample had non-optimal vision, our findings suggest it is not critical to have the clearest possible vision for high-level sports. In cross-sectional studies like this, however, it is not possible to say that on-field performance would not be better if vision were improved. Studies of change in performance following the optimisation of vision in those with correctable visual anomalies would be useful and would help to better characterise the nature of the relationship between vision and elite sports performance.

References

Winograd S. The relationship of timing and vision to baseball performance. Res Q. 1942;13:481–93.

Stine CD, Arterburn MR, Stern NS. Vision and sports: a review of the literature. J Am Optom Assoc. 1982;53:627–33.

Christenson GN, Winkelstein AM. Visual skills of athletes versus non-athletes: development of a sports vision testing battery. J Am Optom Assoc. 1988;59:666–75.

Rouse MW, DeLand P, Christian R, et al. A comparison study of dynamic visual acuity between athletes and non-athletes. J Am Optom Assoc. 1988;59:946–50.

Laby DM, Rosenbaum AL, Kirschen DG, et al. The visual function of professional baseball players. Am J Ophthalmol. 1996;122:476–85.

Gao Y, Chen L, Yang SN, et al. Contributions of visuo-oculomotor abilities to interceptive skills in sports. Optom Vis Sci. 2015;92:679–89.

Melcher MH, Lund DR. Sports vision and the high-school athlete. J Am Optom Assoc. 1992;63:466–74.

Boden LM, Rosengren KJ, Martin DF, et al. A comparison of static near stereo acuity in youth baseball/softball players and non-ball players. Optometry. 2009;80:121–5.

Savelsbergh GJ, Williams AM, Van der Kamp J, et al. Visual search, anticipation and expertise in soccer goalkeepers. J Sports Sci. 2002;20:279–87.

Morgan S, Patterson J. Differences in oculomotor behaviour between elite athletes from visually and non-visually oriented sports. Int J Sport Psychol. 2009;40:489–505.

Laby DM, Kirschen DG, Pantall P. The visual function of Olympic-level athletes-an initial report. Eye Contact Lens. 2011;37:116–22.

Hoshina K, Tagami Y, Mimura O, et al. A study of static, kinetic, and dynamic visual acuity in 102 Japanese professional baseball players. Clin Ophthalmol. 2013;7:627–32.

Klemish D, Ramger B, Vittetoe K, et al. Visual abilities distinguish pitchers from hitters in professional baseball. J Sports Sci. 2017;15:1–9.

Wimshurst ZL, Sowden PT, Cardinale M. Visual skills and playing positions of Olympic field hockey players. Percept Mot Skills. 2012;114:204–16.

Schwab S, Memmert D. The impact of a sports vision training program in youth field hockey players. J Sports Sci Med. 2012;11:624–31.

Deveau J, Ozer DJ, Seitz AR. Improved vision and on-field performance in baseball through perceptual learning. Curr Biol. 2014;24:R146–7.

Zwierko T, Puchalska-Niedbał L, Krzepota J, et al. The effects of sports vision training on binocular vision function in female university athletes. J Hum Kinet. 2015;49:287–96.

The BASES expert statement on the effectiveness of vision training programmes. Available at http://www.bases.org.uk/Effectiveness-of-Vision-Training-Programmes [Accessed 16th May 2017].

Classé JG, Semes LP, Daum KM, et al. Association between visual reaction time and batting, fielding, and earned run averages among players of the Southern Baseball League. J Am Optom Assoc. 1997;68:43–9.

Clark JF, Ellis JK, Bench J, et al. High-performance vision training improves batting statistics for University of Cincinnati baseball players. PLoS One. 2012;7(1):e29109. https://doi.org/10.1371/journal.pone.0029109.

Downloaded from: https://sports.ndtv.com/cricket/how-i-lost-my-eye-excerpts-from-pataudis-biography-1565773 [Accessed 24th Apr 2017].

Voisin A, Elliott DB, Regan D. Babe Ruth: with vision like that, how could he hit the ball? Optom Vis Sci. 1997;74:144–6.

Bulson RC, Ciuffreda KJ, Hung GK. The effect of retinal defocus on golf putting. Ophthalmic Physiol Opt. 2008;28:334–44.

Bulson RC, Ciuffreda KJ, Hayes J, et al. Effect of retinal defocus on basketball free throw shooting performance. Clin Exp Optom. 2015;98:330–4.

Mann DL, Ho NY, De Souza NJ, et al. Is optimal vision required for the successful execution of an interceptive task? Hum Mov Sci. 2007;26:343–56.

Mann DL, Abernethy B, Farrow D. The resilience of natural interceptive actions to refractive blur. Hum Mov Sci. 2010a;29:386–400.

Mann DL, Abernethy B, Farrow D. Visual information underpinning skilled anticipation: the effect of blur on a coupled and uncoupled in situ anticipatory response. Atten Percept Psychophys. 2010b;72:1317–26.

Gottin M, Giacchino M, Oberto M, et al. Vision and sport: new forensic aspects. Med Sport (Roma). 1999;52:277–86.

Beckerman S, Hitzeman SA. Sports vision testing of selected athletic participants in the 1997 and 1998 AAU Junior Olympic Games. Optometry. 2003;74:502–16.

Sapkota K, Koirala S, Shakya S, et al. Visual status of Nepalese national football and cricket players. Nepal Med Coll J. 2006;8:280–3.

Callaghan MJ, Jarvis C. Evaluation of elite British cyclists: the role of the squad medical. Br J Sports Med. 1996;30:349–53.

D'Ath PJ, Thomson WD, Wilson CM. Seeing you through London 2012: eye care at the Olympics. Br J Sports Med. 2013;47:463–6.

Wood JM, Abernethy B. An assessment of the efficacy of sports vision training programs. Optom Vis Sci. 1997;74:646–59.

Abernethy B, Wood JM. Do generalized visual training programmes for sport really work? An experimental investigation. J Sports Sci. 2001;19:203–22.

Rawstron JA, Burley CD, Elder MJ. A systematic review of the applicability and efficacy of eye exercises. J Pediatr Ophthalmol Strabismus. 2005;42:82–8.

Muiños M, Ballesteros S. Sports can protect dynamic visual acuity from aging: a study with young and older judo and karate martial arts athletes. Atten Percept Psychophys. 2015;77:2061–73.

Zimmerman AB, Lust KL, Bullimore MA. Visual acuity and contrast sensitivity testing for sports vision. Eye Contact Lens. 2011;37:153–9.

Carkeet A, Brown B, Chan P. Spatial interference with vertical pistol sight alignment. Ophthalmic Physiol Opt. 1996;16:158–62.

Mann DT, Williams AM, Ward P, et al. Perceptual-cognitive expertise in sport: a meta-analysis. J Sport Exerc Psychol. 2007;29:457–78.

Savelsbergh GJ, Van der Kamp J, Williams AM, Ward P. Anticipation and visual search behaviour in expert soccer goalkeepers. Ergonomics. 2005;48:1686–97.

Vickers JN. Gaze control in putting. Perception. 1992;21:117–32.

Land MF, McLeod P. From eye movements to actions: how batsmen hit the ball. Nat Neurosci. 2000;3:1340–5.

Mann DL, Spratford W, Abernethy B. The head tracks and gaze predicts: how the world's best batters hit a ball. PLoS One. 2013;8:e58289.

Elmurr P. The relationship of vision and skilled movement-a general review using cricket batting. Eye Contact Lens. 2011;37:164–6.

Bailey IL, Lovie JE. New design principles for visual acuity letter charts. Am J Optom Physiol Optic. 1976;53:740–5.

Ferris FL, Bailey IL. Standardizing the measurement of visual acuity for clinical research studies: guidelines from the eye care technology forum. Ophthalmology. 1996;103:181–2.

Ahn JS, Legge GE, Luebker A. Printed cards for measuring low-vision reading speed. Vis Res. 1995;35:1939–44.

Loewenstein JI, Palmberg PF, Connett JE, et al. Effectiveness of a pinhole method for visual acuity screening. Arch Ophthalmol. 1985;103:222–3.

Elliott DB, Yang KC, Whitaker D. Visual acuity changes throughout adulthood in normal, healthy eyes: seeing beyond 6/6. Optom Vis Sci. 1995;72:186–91.

Elliott DB, Flanagan JG. Assessment of visual function. In: Elliott DB, editor. Clinical procedures in primary eye care. 4th ed. Philadelphia: Elsevier Saunders; 2014. p. 32–67.

Lee SY, Koo NK. Change of stereoacuity with aging in normal eyes. Korean J Ophthalmol. 2005;19:136–9.

Garnham L, Sloper JJ. Effect of age on adult stereoacuity as measured by different types of stereotest. Br J Ophthalmol. 2006;90:91–5.

Piano ME, Tidbury LP, O'Connor AR. Normative values for near and distance clinical tests of stereoacuity. Strabismus. 2016;24:169–72.

Yildirim C, Altinsoy HI, Yakut E. Distance stereoacuity norms for the mentor B-VAT II-SG video acuity tester in young children and young adults. J AAPOS. 1998;2:26–32.

Yekta AA, Pickwell LD, Jenkins TC. Binocular vision, age and symptoms. Ophthalmic Physiol Opt. 1989;9:115–20.

Birch J. Worldwide prevalence of red-green color deficiency. J Opt Soc Am A Opt Image Sci Vis. 2012;29:313–20.

Goddard N, Coull D. Colour blind cricketers and snowballs. BMJ. 1994;309:1684–5.

Harris RW, Cole BL. Five cricketers with abnormal colour vision. Clin Exp Optom. 2007;90:451–6.

Molia LM, Rubin SE, Kohn N. Assessment of stereopsis in college baseball pitchers and batters. J AAPOS. 1998;2:86–90.

Sillero QM, Refoyo RI, Lorenzo CA, et al. Perceptual visual skills in young highly skilled basketball players. Percept Mot Skills. 2007;104:547–61.

Abernethy B, Neal RJ. Visual characteristics of clay target shooters. J Sci Med Sport. 1999;2:1–19.

Eccles DW. Thinking outside the box: the role of environmental adaptation in the acquisition of skilled and expert performance. J Sports Sci. 2006;24:1103–14.

Beckerman SA, Hitzeman IS. The ocular and visual characteristics of an athletic population. Optometry. 2001;72:498–509.

Robaei D, Huynh SC, Kifley A, et al. Stereoacuity and ocular associations at age 12 years: findings from a population-based study. J AAPOS. 2007;11:356–61.

Solomon H, Zinn WJ, Vacroux A. Dynamic stereoacuity: a test for hitting a baseball? J Am Optom Assoc. 1988;59:522–6.

Mazyn LI, Lenoir M, Montagne G, et al. The contribution of stereo vision to one-handed catching. Exp Brain Res. 2004;157:383–90.

Mazyn LI, Lenoir M, Montagne G, et al. Stereo vision enhances the learning of a catching skill. Exp Brain Res. 2007;179:723–6.

Tyler CW. The horopter and binocular fusion. In: Vision and visual dysfunction, vol 9, Binocular Vision (ed D Regan) MacMillan, London; 1991.

Nefs HT, O'Hare L, Harris JM. Two independent mechanisms for motion-in-depth perception: evidence from individual differences. Front Psychol. 2010;1:155.

Czuba TB, Huk AC, Cormack LK, et al. Area MT encodes three-dimensional motion. J Neurosci. 2014;34:15522–33.

Higgins KE, Wood JM. Predicting components of closed road driving performance from vision tests. Optom Vis Sci. 2005;82:647–56.

Downloaded from: https://www.theguardian.com/sport/blog/2016/oct/21/colin-milburn-play-when-the-eye-has-gone [Accessed 26th Sep 2017].

Uchida Y, Kudoh D, Higuchi T, et al. Dynamic visual acuity in baseball players is due to superior tracking abilities. Med Sci Sports Exerc. 2013;45:319–25.

Overney LS, Blanke O, Herzog MH. Enhanced temporal but not attentional processing in expert tennis players. PLoS One. 2008;3:e2380. https://doi.org/10.1371/journal.pone.0002380.

Erickson GB. Sports vision: vision care for the enhancement of sports performance. Oxford: Butterworth-Heinemann; 2007.

Hitzeman SA, Beckerman SA. What the literature says about sports vision. Optom Clin. 1993;3:145–69.

Poltavski D, Biberdorf D. The role of visual perception measures used in sports vision programmes in predicting actual game performance in division I collegiate hockey players. J Sports Sci. 2015;33:597–608.

Spitz J, Put K, Wagemans J, et al. Visual search behaviors of association football referees during assessment of foul play situations. Cogn Res Princ Implic. 2016;1(1):12.

Gonzalez CC, Causer J, Miall RC, et al. Identifying the causal mechanisms of the quiet eye. Eur J Sport Sci. 2017;17(1):74–84.

van Maarseveen MJ, Oudejans RR, Savelsbergh GJ. Pattern recall skills of talented soccer players: two new methods applied. Hum Mov Sci. 2015;41:59–75.

Stone JA, Maynard IW, North JS, et al. Temporal and spatial occlusion of advanced visual information constrains movement (re)organization in one-handed catching behaviors. Acta Psychol. 2017;174:80–8.

Acknowledgements

We are grateful to the players and officials associated with the Huddersfield Giants, Leeds/Bradford MCC Universities, and the England national women’s cricket team. The assistance of Nathan Beebe is also gratefully acknowledged.

Funding

This study was funded by grants BB/J018163/1, BB/J016365/1, and BB/J018872/1 from the UK’s Biotechnology and Biological Sciences Research Council (BBSRC).

Availability of Data and Materials

The database supporting the conclusions of this article is included with Additional file 1.

Author information

Contributions

BTB designed the study, gathered some of the data, analysed the data, wrote the first draft of the manuscript, and modified it in light of comments received from the other authors. JMH, JCF, AGC, SJB and JGB helped to design the study, contributed to the analysis of the data, and commented on the drafts of the manuscript. AM gathered some of the clinical data and commented on earlier drafts of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Authors’ Information

N/A

Ethics Approval and Consent to Participate

The study was approved by the ethics committee at the University of Bradford and the tenets of the Declaration of Helsinki were followed. Written, informed consent was obtained from all participants included in the study.

Consent for Publication

Consent to publish has been obtained from participants.

Competing Interests

Brendan T. Barrett: none, Jonathan C. Flavell: none, Simon J. Bennett: none, Alice G. Cruickshank: none, Alex Mankowska: none, Julie M. Harris: none, and John G. Buckley: none.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

Raw Data for Uploading to Sports Med Open Sept 2017 (XLSX 64 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Barrett, B.T., Flavell, J.C., Bennett, S.J. et al. Vision and Visual History in Elite/Near-Elite-Level Cricketers and Rugby-League Players. Sports Med - Open 3, 39 (2017). https://doi.org/10.1186/s40798-017-0106-z

DOI: https://doi.org/10.1186/s40798-017-0106-z