Abstract

Background

Alzheimer’s disease (AD) is a neurodegenerative brain disorder that results from the accumulation of amyloid proteins, the development of plaques and the loss of neurons. Parkinson’s disease (PD), another common subtype of dementia like AD, is characterized by the loss of dopaminergic neurons in the substantia nigra pars compacta of the midbrain. Both AD and PD mainly affect the aged population worldwide and account for a major share of healthcare costs. Hence, methods that support the early diagnosis of these diseases are needed. PD subjects, especially those with postmortem-confirmed plaque, are strong candidates for a secondary AD diagnosis. Modalities such as positron emission tomography (PET) and single photon emission computed tomography (SPECT) can be combined with deep learning methods to diagnose these two diseases and thereby support clinicians.

Result

In this work, we deployed a 3D Convolutional Neural Network (CNN) to extract features in the frequency and spatial domains from PET and SPECT neuroimaging modalities for the joint multiclass classification of the AD, PD and Normal Control (NC) classes. The Discrete Cosine Transform (DCT) was used as the frequency domain learning method, and random weak Gaussian blurring and random zooming in/out were used as augmentation methods in both the frequency and spatial domains. To select the hyperparameters of the 3D-CNN model, we used both fivefold and tenfold cross-validation (CV). The best performing model was AD/NC(SPECT)/PD classification with random weak Gaussian blurred augmentation in the spatial domain using fivefold CV, while the worst performing model was AD/NC(PET)/PD classification without augmentation in the frequency domain using tenfold CV. We also found that spatial domain methods tend to perform better than their frequency domain counterparts.

Conclusion

The proposed model performs well in discriminating between AD and PD subjects, which we attribute to the minimal correlation, from a neuroimaging perspective, between these two dementia types on the clinicopathological continuum between AD and PD.

1 Introduction

Alzheimer’s disease (AD) is a widespread subtype of dementia and a major target for healthcare applications. It is an irreversible, progressive disease with millions of cases worldwide. The staggering costs of managing AD keep this disease a focal point for healthcare authorities worldwide. Brain regions commonly affected as AD progresses include the hippocampus, lateral ventricle, insula, putamen, entorhinal cortex, lingual gyrus, amygdala, thalamus, supramarginal gyrus, caudate nucleus and uncus [1, 2].

Parkinson’s disease (PD) is another brain disorder that affects millions of people worldwide. Its prevalence varies, and, as with AD, older individuals are affected substantially more often than younger ones. PD is defined by the loss of neuromelanin-containing neurons in the substantia nigra pars compacta of the midbrain. It occurs in both males and females, with a higher prevalence in males. PD also affects speech, resulting in dysarthria, hypophonia, tachyphemia, etc., which alter an individual’s voice [3, 4].

Both AD and PD are incurable, but medication is available to keep their symptoms under control [5]. These two dementia subtypes may coexist in the presence of visual hallucinations, sleep behavior disorder, fluctuations in attention or cognition, tau phosphorylation, inflammation and synaptic degeneration [6].

Deep learning methods are widely used for classification, action recognition, speech recognition and other tasks. These methods are extremely good at learning features that optimally represent the data for the problem at hand. They tend to act like black boxes, processing information while keeping the operator out of the loop. Features learned by Convolutional Neural Networks (CNNs) are known to possess invariance, equivariance and equivalence properties. Architectures such as 3D-CNNs can extract both spectral and spatial domain features simultaneously from the input volume. The building blocks of these architectures include convolutional layers, pooling layers, batch normalization, dropout regularization and fully connected layers [7].

In the literature, studies have addressed classification tasks such as AD vs Normal Control (NC), progressive mild cognitive impairment (pMCI) vs stable mild cognitive impairment (sMCI), and pMCI vs NC, using different deep learning models with a combination of imaging modalities such as magnetic resonance imaging (MRI), positron emission tomography (PET) and functional MRI, and non-imaging data such as ApoE genotype, cerebrospinal fluid (CSF) concentration of Aβ1–42, Mini-Mental State Examination (MMSE), Alzheimer’s Disease Assessment Scale-Cognitive subscale (ADAS-Cog), Rey Auditory Verbal Learning Test (RAVLT), Functional Assessment Questionnaire (FAQ) and Neuropsychiatric Inventory Questionnaire (NPI-Q) scores [8,9,10].

Similarly, for PD diagnosis, research has been conducted using voice datasets [11, 12], isosurface-based features, statistics-based learning methods, CNNs to discover hidden patterns of PD, and the neuromelanin-sensitive MRI modality, achieving high performance on assessment metrics [13]. While learning features in the spatial domain using CNNs has its own advantages, learning in the frequency domain may offer advantages that spatial domain methods cannot provide. In CNN models, low-frequency components are learned better than higher-frequency ones, which offers advantages such as better preservation of image information in the pre-processing stage [14]. The Discrete Cosine Transform (DCT) is a frequency domain method that represents a finite sequence of data points as a sum of cosine functions oscillating at different frequencies; it offers good energy compaction, since for smooth signals most of the energy is concentrated in a few low-frequency coefficients.
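As a minimal sketch of the energy-compaction property, assuming an orthonormal type-II DCT applied separably along all three axes of a volume (the paper does not specify the DCT variant or normalization used), SciPy’s `scipy.fft.dctn` can transform a volume into the frequency domain. The Gaussian test volume is synthetic and only stands in for a smooth brain image; the helper names are hypothetical.

```python
import numpy as np
from scipy.fft import dctn, idctn


def dct3(volume):
    """Orthonormal type-II DCT along all three axes (assumed variant)."""
    return dctn(volume, type=2, norm="ortho")


def idct3(coeffs):
    """Inverse of dct3; recovers the spatial-domain volume."""
    return idctn(coeffs, type=2, norm="ortho")


def low_frequency_energy(coeffs, k):
    """Fraction of total energy held by the k x k x k lowest-frequency coefficients."""
    total = np.sum(coeffs ** 2)
    return np.sum(coeffs[:k, :k, :k] ** 2) / total


# Synthetic smooth volume with the input size used in the paper (79 x 95 x 69).
a, b, c = np.meshgrid(np.arange(79), np.arange(95), np.arange(69), indexing="ij")
volume = np.exp(-((a - 39) ** 2 + (b - 47) ** 2 + (c - 34) ** 2) / (2 * 10.0 ** 2))

coeffs = dct3(volume)
print(f"energy in the 10x10x10 low-frequency block: {low_frequency_energy(coeffs, 10):.4f}")
```

Because the orthonormal DCT preserves energy (Parseval), the printed fraction directly measures how much of the volume’s information survives if only the low-frequency block is kept.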

Data augmentation methods, such as adversarial techniques, improve model performance by expanding limited datasets, thereby increasing the models’ generalization power.

There is a growing body of work in the literature on the correlation between different types of dementia. David Irwin et al. [15] examined PD cases together with correlates of co-morbid AD and found abundant AD pathology in PD subjects, which may modify the clinical phenotype. Moreover, co-morbid AD is strongly associated with changes in PD, suggesting a potential clinicopathological continuum between AD and PD.

To add to this growing body of work on the clinicopathological continuum between co-morbid AD and PD cases from a neuroimaging perspective, this research studies the correlation between these two dementia subtypes through a joint multiclass classification task using PET and SPECT neuroimaging modalities and deep learning methods.

In this work, we used both spatial and frequency (DCT) domain methods to learn features from whole-brain PET and single photon emission computed tomography (SPECT) scans of AD and PD subjects using a 3D-CNN architecture. We deployed random weak Gaussian blurring and random zooming in/out as data augmentation methods, individually and in combination. Unlike other studies in the literature, which focus on the binary or multiclass classification of either AD or PD subjects, we focused on the joint multiclass classification of both AD and PD subjects, with features extracted from whole-brain scans by 3D-CNN architectures.

The rest of the paper is organized as follows. Section 2 describes the datasets, Sect. 3 the methodology, Sect. 4 the experiments and Sect. 5 the results and their discussion; finally, Sect. 6 concludes the paper.

2 Datasets description

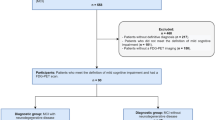

We used the Parkinson’s Progression Markers Initiative (PPMI) [16] and Alzheimer’s Disease Neuroimaging Initiative (ADNI) [17] databases for the experiments: 3D-SPECT scans from the PPMI database and 3D-PET scans from the ADNI database. The demographics of the subjects considered in this study are given in Table 1 and Table 2.

3 Methodology

To carry out the joint multiclass (three-class) classification between the AD, PD and NC classes, we deployed the 3D-CNN architecture shown in Fig. 1 for all experiments. An input layer accepts a volume of size 79 × 95 × 69, normalized through a zero-centering procedure that subtracts the mean of each channel to center the volume at the origin and divides the result by the channel’s standard deviation. A convolutional layer then extracts features from this volume. The shape of the convolutional filter weight tensor depends on the number of channels and on the depth, width and height of the filter. Mathematically, this process can be defined as:

\[ v_{ij}^{abc} = \sum_{m} \sum_{p=0}^{P_i-1} \sum_{q=0}^{Q_i-1} \sum_{r=0}^{R_i-1} w_{ijm}^{pqr} \, v_{(i-1)m}^{(a+p)(b+q)(c+r)} \]

where \(P_i\), \(Q_i\), \(R_i\) are the kernel sizes along the three dimensions, respectively, \(v_{ij}^{abc}\) is the value of the (a, b, c)th element of the jth feature map in the ith layer, and \(w_{ijm}^{pqr}\) denotes the value of the (p, q, r)th element of the 3D convolution kernel connected to the mth feature map in the (i − 1)th layer.
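The 3D convolution described here, in which each output element sums the kernel-weighted input window over all input feature maps m, can be sketched directly in NumPy for a single output feature map j. This is a minimal illustration, not the authors’ implementation: it assumes unit stride and no padding (a “valid” convolution, whereas the paper’s layers use padding) and omits the bias term and activation; the function name `conv3d_feature_map` is hypothetical.

```python
import numpy as np
from scipy.signal import correlate


def conv3d_feature_map(prev_maps, kernels):
    """Compute one output feature map v_ij from the M feature maps of layer i-1.

    prev_maps: array (M, A, B, C), the maps v_{(i-1)m}
    kernels:   array (M, P, Q, R), the weights w_{ijm}^{pqr} for one output map j
    returns:   array (A-P+1, B-Q+1, C-R+1), unit stride, no padding
    """
    m_in, a_in, b_in, c_in = prev_maps.shape
    m_k, p_size, q_size, r_size = kernels.shape
    if m_in != m_k:
        raise ValueError("kernel must have one slice per input feature map")
    out_shape = (a_in - p_size + 1, b_in - q_size + 1, c_in - r_size + 1)
    out = np.zeros(out_shape)
    for p in range(p_size):
        for q in range(q_size):
            for r in range(r_size):
                # Shifted window holding v_{(i-1)m}^{(a+p)(b+q)(c+r)} for every (a, b, c).
                window = prev_maps[:, p:p + out_shape[0], q:q + out_shape[1], r:r + out_shape[2]]
                # Sum over m of w_{ijm}^{pqr} times the shifted window of map m.
                out += np.tensordot(kernels[:, p, q, r], window, axes=(0, 0))
    return out


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 8, 9, 7))   # M = 2 input feature maps
w = rng.standard_normal((2, 3, 3, 3))   # 3 x 3 x 3 kernel per input map
v = conv3d_feature_map(x, w)
# Cross-check against SciPy's N-D cross-correlation in "valid" mode.
print(v.shape, np.allclose(v, correlate(x, w, mode="valid")[0]))
```

Deep learning frameworks implement the same sum (strictly a cross-correlation) far more efficiently; the explicit loops only make the indices of the formula visible.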

The stride of a layer is another important parameter: it is the step, in voxels, by which the kernel moves across the input, so a stride of one applies the kernel at every input position. Another important parameter is L2 regularization, also known as weight decay, which drives the weights towards the origin without making them exactly zero. We added L2 regularization to the convolutional and fully connected layers to help the network avoid over-fitting. Batch normalization is then applied to the mini-batches to speed up learning and help avoid over-fitting. We then used the Exponential Linear Unit (ELU) as the activation function for the nonlinear mapping of inputs. Mathematically, it can be defined as:

\[ f(x) = \begin{cases} x, & x > 0 \\ \alpha \left( e^{x} - 1 \right), & x \le 0 \end{cases} \]

where \(\alpha > 0\) controls the value to which the ELU saturates for negative inputs.
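A minimal NumPy sketch of two ingredients described above: the ELU activation, which returns x for positive inputs and α(eˣ − 1) otherwise, and an L2 (weight-decay) penalty added to the loss. The scale α = 1, the (λ/2)·Σw² convention and the coefficient `lam` are assumptions for illustration, since the values used are not stated in this text; the function names are hypothetical.

```python
import numpy as np


def elu(x, alpha=1.0):
    """Exponential Linear Unit: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    x = np.asarray(x, dtype=float)
    # expm1 is evaluated only on the non-positive part to avoid overflow for large x.
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))


def l2_penalty(weights, lam=1e-4):
    """Weight-decay term (lam / 2) * sum of squared weights over all given tensors."""
    return 0.5 * lam * sum(np.sum(w ** 2) for w in weights)


print(elu([-2.0, 0.0, 3.0]))  # negative inputs saturate towards -alpha
print(l2_penalty([np.ones((3, 3, 3)), np.ones(4)], lam=0.1))
```

The penalty’s gradient, λw, shrinks every weight proportionally at each step, which is why weight decay pulls weights towards zero without forcing them to be exactly zero.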

We used max pooling to reduce the dimensions of the feature maps, which yields compact representations of the inputs and speeds up computation. After that, we used dense (fully connected) layers and a dropout layer with a dropout probability of 10%. Finally, a softmax layer followed by a classification layer assigns the input to one of the three classes: AD, PD and NC. Table 3 shows the detailed architecture hyperparameters.
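A minimal NumPy sketch of 2 × 2 × 2 max pooling with stride 2 and of the softmax that turns three class scores into probabilities. The helper names, the floor-style handling of odd sizes (trailing voxels dropped) and the example scores are assumptions for illustration.

```python
import numpy as np


def max_pool_2x2x2(volume):
    """Non-overlapping 2x2x2 max pooling with stride 2; odd trailing voxels are dropped."""
    a, b, c = (n // 2 for n in volume.shape)
    trimmed = volume[:2 * a, :2 * b, :2 * c]
    # Split each axis into (blocks, 2) and take the maximum within every 2x2x2 block.
    return trimmed.reshape(a, 2, b, 2, c, 2).max(axis=(1, 3, 5))


def softmax(scores):
    """Numerically stable softmax over the last axis."""
    shifted = scores - np.max(scores, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)


vol = np.arange(5 * 4 * 3, dtype=float).reshape(5, 4, 3)
print(max_pool_2x2x2(vol).shape)
print(softmax(np.array([2.0, 0.5, -1.0])))  # hypothetical AD, PD, NC scores
```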

In the model design, to mitigate the variance shift that arises when dropout is combined with batch normalization, we added only one dropout layer, placed right before the softmax layer, to reduce over-fitting, since no batch normalization layer follows it. We added five max-pooling layers with a kernel and stride size of 2 × 2 × 2 to scale down the output feature map size by a factor of 2⁵ = 32 along each dimension compared with the original input.
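The effect of five 2 × 2 × 2, stride-2 pooling layers on the 79 × 95 × 69 input can be checked with a little arithmetic. This sketch assumes that the padded convolutions preserve spatial size and that each pooling layer uses no padding, so every pooling step maps a side of length n to ⌊(n − 2)/2⌋ + 1 = ⌊n/2⌋; the actual layer configuration is the one given in Table 3.

```python
def pooled_shape(shape, n_pools=5, kernel=2, stride=2):
    """Spatial size after n_pools unpadded pooling layers (convolutions assumed size-preserving)."""
    for _ in range(n_pools):
        shape = tuple((n - kernel) // stride + 1 for n in shape)
    return shape


print(pooled_shape((79, 95, 69)))  # (2, 2, 2)
print(2 ** 5)                      # nominal downscaling factor per axis: 32
```

Under these assumptions, flooring at each stage means the final feature map is 2 × 2 × 2, slightly smaller than a plain division by 32 would suggest.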

We used a small 3 × 3 × 3 spatial receptive field to improve the performance of the convolutional layers, and we added padding to these layers. The softmax layer is a 1 × 1 convolutional layer followed by softmax activation. The model is designed to achieve high performance with a small number of computations.
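One common rationale for small 3 × 3 × 3 kernels, familiar from VGG-style designs, is that stacking two of them covers a 5 × 5 × 5 receptive field with fewer weights than a single 5 × 5 × 5 layer. The sketch below counts the parameters of a 3D convolution layer as (P·Q·R·C_in + 1)·C_out; the channel count of 16 is a hypothetical value, not taken from Table 3.

```python
def conv3d_params(kernel, c_in, c_out, bias=True):
    """Number of learnable parameters in a 3D convolution layer."""
    p, q, r = kernel
    return (p * q * r * c_in + (1 if bias else 0)) * c_out


channels = 16  # hypothetical layer width, not the paper's setting
two_small = 2 * conv3d_params((3, 3, 3), channels, channels)
one_large = conv3d_params((5, 5, 5), channels, channels)
print(two_small, one_large)  # 13856 32016
```

Fewer weights also mean fewer multiply-accumulate operations per output voxel, which is consistent with the design goal of keeping computation low.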

4 Experiments

We split the data at the subject level for the multiclass classification experiments. We deployed two data augmentation methods: (1) random weak Gaussian blurring and (2) random zooming in/out. We set σ to 1.5 for random weak Gaussian blurring, and the scale values to 0.99 and 1.03 for random zooming in/out, so that the input volumes were randomly shrunk or enlarged. We performed experiments in the spatial and frequency (DCT) domains without augmentation and with single and combined augmentation methods. Combined augmentation means that the training set contained samples from both random weak Gaussian blurred augmentation and random zoomed in/out augmentation. Augmented samples were used only for training, and non-augmented samples were used for validation and testing.
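A minimal sketch of the two augmentations with SciPy’s `ndimage` module, using the stated settings (σ = 1.5; scale 0.99 or 1.03). The text does not say how a zoomed volume is brought back to 79 × 95 × 69 or how randomness enters the blurring, so the center crop/zero-pad and the per-volume application probability `p` below are assumptions, and all function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter, zoom


def center_fit(volume, shape):
    """Center-crop or zero-pad a volume to the target shape."""
    out = np.zeros(shape, dtype=volume.dtype)
    src, dst = [], []
    for n_src, n_dst in zip(volume.shape, shape):
        if n_src >= n_dst:  # crop the centre of this axis
            start = (n_src - n_dst) // 2
            src.append(slice(start, start + n_dst))
            dst.append(slice(0, n_dst))
        else:  # pad symmetrically with zeros
            start = (n_dst - n_src) // 2
            src.append(slice(0, n_src))
            dst.append(slice(start, start + n_src))
    out[tuple(dst)] = volume[tuple(src)]
    return out


def random_weak_blur(volume, rng, sigma=1.5, p=0.5):
    """With probability p (assumed), apply a Gaussian blur with the given sigma."""
    return gaussian_filter(volume, sigma=sigma) if rng.random() < p else volume


def random_zoom(volume, rng, scales=(0.99, 1.03)):
    """Rescale by a randomly chosen factor (linear interpolation), then restore the shape."""
    scale = rng.choice(scales)
    return center_fit(zoom(volume, scale, order=1), volume.shape)


rng = np.random.default_rng(42)
vol = rng.random((79, 95, 69)).astype(np.float32)
print(random_weak_blur(vol, rng).shape, random_zoom(vol, rng).shape)
```

Restoring the original shape matters because the input layer expects a fixed 79 × 95 × 69 volume; cropping or padding by a voxel or two keeps the anatomy essentially unchanged.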

We used fivefold and tenfold CV procedures on balanced datasets. We also created a separate test subset containing 4 samples of the NC(SPECT) class, 9 samples of the PD class, 4 samples of the AD class and 12 samples of the NC(PET) class.
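Subject-level splitting means that all scans of one subject fall in the same fold, so no subject appears in both the training and validation data. A minimal NumPy sketch of subject-level k-fold CV follows; the function name, the shuffling seed and the toy subject IDs are hypothetical, and class balancing is not shown.

```python
import numpy as np


def subject_level_kfold(subject_ids, k, seed=0):
    """Yield (train_idx, val_idx) so that each subject lands in exactly one validation fold."""
    subject_ids = np.asarray(subject_ids)
    subjects = np.unique(subject_ids)
    np.random.default_rng(seed).shuffle(subjects)
    for val_subjects in np.array_split(subjects, k):
        val_mask = np.isin(subject_ids, val_subjects)
        yield np.flatnonzero(~val_mask), np.flatnonzero(val_mask)


# Toy example: 10 subjects with 2 scans each (hypothetical IDs).
ids = np.repeat([f"S{i:02d}" for i in range(10)], 2)
for train_idx, val_idx in subject_level_kfold(ids, k=5):
    assert not set(ids[train_idx]) & set(ids[val_idx])  # no subject leakage
    print(len(train_idx), len(val_idx))
```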

The other settings were as follows: a mini-batch size of 2, an initial learning rate of 0.001, a maximum of 30 epochs and the Adam optimizer. We ran a total of 170 experiments, and all simulations took approximately 3242 min (≈ 54.03 h).
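For reference, one Adam update with the stated initial learning rate of 0.001 can be sketched as below. The decay rates β1 = 0.9, β2 = 0.999 and ε = 1e-8 are the defaults proposed by Kingma and Ba and are assumed here, since only the learning rate is given; the function name is hypothetical.

```python
import numpy as np


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad ** 2     # second-moment (uncentred variance) estimate
    m_hat = m / (1 - beta1 ** t)                # bias corrections for the zero initialisation
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v


theta = np.array([0.5, -0.2])
grad = np.array([4.0, -0.01])
theta, m, v = adam_step(theta, grad, np.zeros(2), np.zeros(2), t=1)
print(theta)  # first step moves each weight by about lr * sign(grad)
```

Because Adam rescales each gradient by its own running magnitude, weights with very different gradient scales (4.0 and −0.01 here) move by similar amounts on the first step.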

5 Results and discussion

The methods and results of the experiments are presented in Tables 4, 5, 6, 7 and 8. We used Relative Classifier Information (RCI), Confusion Entropy (CEN), Index of Balanced Accuracy (IBA), Geometric Mean (GM) and the Matthews Correlation Coefficient (MCC) as performance assessment metrics. Table 4 describes the methods employed in the study; the Serial # column is the same across Tables 4 to 8.
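The sketch below computes several of these metrics from a 3 × 3 confusion matrix (rows = true class, columns = predicted class) in NumPy. Class-wise GM, IBA and MCC are computed one-vs-rest; IBA uses the form (1 + α(TPR − TNR))·TPR·TNR with α = 1 assumed, and RCI is taken as the mutual information between true and predicted labels divided by the entropy of the true labels. CEN is omitted for brevity. These are common formulations and may differ in detail from the tool the authors used; the confusion matrix is hypothetical.

```python
import numpy as np


def one_vs_rest(cm):
    """Per-class TP, FP, FN, TN from a confusion matrix (rows = true, cols = predicted)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp
    fp = cm.sum(axis=0) - tp
    tn = cm.sum() - tp - fn - fp
    return tp, fp, fn, tn


def classwise_metrics(cm, alpha=1.0):
    tp, fp, fn, tn = one_vs_rest(cm)
    tpr = tp / (tp + fn)                       # sensitivity
    tnr = tn / (tn + fp)                       # specificity
    gm = np.sqrt(tpr * tnr)
    iba = (1 + alpha * (tpr - tnr)) * tpr * tnr
    denom = np.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    safe = np.where(denom > 0, denom, 1.0)
    mcc = np.where(denom > 0, (tp * tn - fp * fn) / safe, 0.0)  # 0 when undefined
    return {"GM": gm, "IBA": iba, "MCC": mcc}


def rci(cm):
    """Mutual information I(true; predicted) divided by the entropy H(true), in bits."""
    joint = np.asarray(cm, dtype=float) / np.sum(cm)
    p_true, p_pred = joint.sum(axis=1), joint.sum(axis=0)
    h_true = -np.sum(p_true[p_true > 0] * np.log2(p_true[p_true > 0]))
    nz = joint > 0
    mi = np.sum(joint[nz] * np.log2(joint[nz] / np.outer(p_true, p_pred)[nz]))
    return mi / h_true


cm = np.array([[4, 0, 0],    # hypothetical AD row
               [0, 8, 1],    # PD
               [0, 1, 3]])   # NC
m = classwise_metrics(cm)
print({name: np.round(vals, 3) for name, vals in m.items()}, round(rci(cm), 3))
```

Averaging the class-wise values gives the balanced averages used for ranking, while RCI summarizes the whole matrix in a single number.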

As given in Tables 5 and 6, we report class-wise (AD, PD, NC) statistics for the CEN, IBA, GM and MCC performance metrics, which helps reveal the impact of class imbalance on these metrics. In Table 5, which is derived from Table 4, we report the RCI values and the averages of the CEN, IBA, GM and MCC values, which helps identify the best performing architectures based on these metrics.

Table 8 gives a consolidated view of the best performing models. As given in Table 7, we took the minimum of the balanced averages of the class-wise CEN values and the maximum of the balanced averages of the class-wise IBA, GM and MCC values. Then, as given in Table 8, we ranked every method on each of the RCI, CEN, IBA, GM and MCC values, summed these rankings into the overall score column of Table 8, and formed a new ranking in which the method with the minimum overall score receives the best rank.
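The rank-sum procedure described above can be sketched as follows. Lower CEN is better, and higher RCI, IBA, GM and MCC are better; tied values share the lowest rank here (`method="min"` in `scipy.stats.rankdata`), which is an assumption because the tie-handling rule is not stated. The method labels and scores are hypothetical.

```python
import numpy as np
from scipy.stats import rankdata


def rank_sum(scores, lower_is_better=("CEN",)):
    """Rank methods per metric (1 = best), sum the ranks, then rank the sums."""
    total = 0
    for metric, values in scores.items():
        values = np.asarray(values, dtype=float)
        key = values if metric in lower_is_better else -values
        total = total + rankdata(key, method="min")
    return total, rankdata(total, method="min")


methods = ["A", "B", "C"]
scores = {"RCI": [0.90, 0.80, 0.70], "CEN": [0.20, 0.30, 0.10], "MCC": [0.85, 0.90, 0.60]}
overall_score, overall_rank = rank_sum(scores)
print(overall_score, overall_rank, methods[int(np.argmin(overall_score))])
```

Summing ranks rather than raw values keeps a metric with a wide numeric spread, such as CEN, from dominating the overall score.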

As given in Tables 5 and 6, performance naturally varies across architectures. The architectures correlate well with each other, especially in learning the intricacies of the AD class under the CEN, IBA, GM and MCC metrics. The average CEN metric shows much greater variation than the other performance metrics, whereas RCI, average GM, average IBA and average MCC correlate better among themselves. For example, the average CEN differs by ≈ 16% between AD/NC(SPECT)/PD classification with combined augmentations in the spatial domain using tenfold CV and AD/NC(SPECT)/PD classification with combined augmentations in the frequency domain using tenfold CV, while the other performance metrics differ by only 1–4%.

As given in Table 8, the best performing model is AD/NC(SPECT)/PD classification with random weak Gaussian blurred augmentation in the spatial domain using fivefold CV, while the worst performing one is AD/NC(PET)/PD classification without augmentation in the frequency domain using tenfold CV. There is a strong correlation between the rankings provided by the individual performance metrics (RCI, CEN, IBA, GM and MCC) and the overall ranking of a method, which shows the benefit of assessing models with multiple performance metrics rather than a single one. Methods that employed augmentations clearly outperformed those that did not, and methods using a single augmentation had a slight edge over those that combined augmentations. Spatial domain methods also fared better than their frequency domain counterparts, possibly because the intensity values of image pixels in the spatial domain represent the data better than frequency domain coefficients do. Another point worth mentioning is that methods using less data had an edge over those that used more. Further, methods that employed the NC(PET) class performed the worst, and methods that employed random weak Gaussian blurred augmentation fared better than their random zoomed in/out counterparts. We also noticed that methods employing the NC(PET) class detected PD class instances perfectly, while those employing the NC(SPECT) class detected AD class instances perfectly. One possible reason for this accurate detection is the very weak correlation between the AD and PD samples considered in this study.

In exploring the clinicopathological continuum between AD and PD subjects using PET and SPECT neuroimaging modalities and deep learning methods, we found that 3D-CNN architectures are an effective tool for discriminating between subjects of these two dementia types. Brain impairment in PD can progress rapidly owing to many factors, such as age, tau pathology and lower CSF Aβ levels [18, 19]. Neuroimaging abnormalities in PD could be due to co-morbid AD, which leads to memory impairment and dementia in patients [20].

In our experiments, the different deep learning methods completely discriminated between AD and PD subjects. However, further research in this domain is needed, using more representative samples of AD and PD subjects, as well as co-morbid AD/PD subjects, with deep learning methods. In addition, methods are needed to explain the findings of black-box deep learning models when exploring the clinicopathological continuum between AD and PD subjects.

6 Conclusion

To conclude, we presented a study on the joint multiclass classification of AD, NC and PD subjects in the spatial and frequency domains using different data augmentation methods and a 3D-CNN architecture. The best performing model was AD/NC(SPECT)/PD classification with random weak Gaussian blurred augmentation in the spatial domain using fivefold CV, while the worst performing model was AD/NC(PET)/PD classification without augmentation in the frequency domain using tenfold CV. We found that spatial domain methods have an edge over their frequency domain counterparts.

In the future, we plan to extend this study using other frequency domain methods, data from other modalities, other data augmentation techniques and novel architectures such as graph convolutional networks.

Availability of data and materials

The data used in the preparation of this manuscript were obtained from the Parkinson’s Progression Markers Initiative (PPMI) and Alzheimer’s Disease Neuroimaging Initiative (ADNI) databases.

References

Langa KM, Levine DA (2014) The diagnosis and management of mild cognitive impairment: a clinical review. JAMA 312(23):2551–2561

Tse KH, Cheng A, Ma F et al (2018) DNA damage-associated oligodendrocyte degeneration precedes amyloid pathology and contributes to Alzheimer’s disease and dementia. Alzheimers Dement 14(5):664–679

Parkinson’s disease statistics. https://parkinsonsnewstoday.com/parkinsons-disease-statistics/. Accessed 29 Sept 2021

Men more likely to get Parkinson’s disease? https://www.webmd.com/parkinsons-disease/news/20040317/men-more-likely-to-get-parkinsons-disease. Accessed 29 Sept 2021

Sajal MSR, Ehsan MT, Vaidyanathan R et al (2020) Telemonitoring Parkinson’s disease using machine learning by combining tremor and voice analysis. Brain Inf 7:12

Pontecorvo MJ, Mintun MA (2011) PET amyloid imaging as a tool for early diagnosis and identifying patients at risk for progression to Alzheimer’s disease. Alz Res Ther 3(2):11

Litjens G, Kooi T, Bejnordi BE et al (2017) A survey on deep learning in medical image analysis. Med Image Anal 42:60–88

Wolz R, Aljabar P, Hajnal JV et al (2012) Nonlinear dimensionality reduction combining MR imaging with non-imaging information. Med Image Anal 16(4):819–830

Noor MBT, Zenia NZ, Kaiser MS et al (2020) Application of deep learning in detecting neurological disorders from magnetic resonance images: a survey on the detection of Alzheimer’s disease, Parkinson’s disease and schizophrenia. Brain Inf 7:11

Mahmud M, Kaiser MS, McGinnity TM et al (2021) Deep learning in mining biological data. Cogn Comput 13:1–33

Haq AU, Li JP, Memon MH et al (2019) Feature selection based on L1-norm support vector machine and effective recognition system for Parkinson’s disease using voice recordings. IEEE Access 7:37718–37734

Arora S, Baghai-Ravary L, Tsanas A (2019) Developing a large scale population screening tool for the assessment of Parkinson’s disease using telephone-quality voice. J Acoust Soc Am 145(5):2871

Rojas A, Górriz JM, Ramírez J et al (2013) Application of empirical mode decomposition (EMD) on DaTSCAN SPECT images to explore Parkinson disease. Expert Syst Appl 40(7):2756–2766

Torfason R, Mentzer F, Agustsson E et al (2018) Towards image understanding from deep compression without decoding. In: 2018 International conference on learning representations (ICLR), Canada

Irwin DJ, White MT, Toledo JB et al (2012) Neuropathologic substrates of Parkinson disease dementia. Ann Neurol 72(4):587–598

Marek K, Jennings D, Lasch S et al (2011) The Parkinson progression marker initiative (PPMI). Prog Neurobiol 95(4):629–635

Mueller SG, Weiner MW, Thal LJ et al (2005) Ways toward an early diagnosis in Alzheimer’s disease: the Alzheimer’s disease neuroimaging initiative (ADNI). Alzheimers Dement 1(1):55–66

Caspell-Garcia C, Simuni T, Tosun-Turgut D et al (2017) Multiple modality biomarker prediction of cognitive impairment in prospectively followed de novo Parkinson disease. PLoS ONE 12(5):e0175674

Ping L, Duong DM, Yin L et al (2018) Data descriptor: global quantitative analysis of the human brain proteome in Alzheimer’s and Parkinson’s disease. Sci Data 5:180036

Weil RS, Hsu JK, Darby RR et al (2019) Neuroimaging in Parkinson’s disease dementia: connecting the dots. Brain Commun 1(1):fcz006

Acknowledgements

This work is an extended version of the publication entitled Joint Multiclass Classification of the subjects of Alzheimer’s and Parkinson’s Diseases through Neuroimaging Modalities and Convolutional Neural Networks (https://doi.org/10.1109/BIBM49941.2020.9313341) presented at the 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Seoul, South Korea.

Funding

Not applicable.

Author information

Contributions

Conceived and designed the experiments: ABT, YKM, QNZ, AK, LZ. Performed the experiments: ABT, AK. Analyzed the data: ABT, YKM, QNZ, LZ. Contributed reagents/materials/analysis tools: YKM, QY, LZ, MA, RK. Wrote the paper: ABT, QNZ, AK, LZ, RK, MA. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tufail, A.B., Ma, YK., Zhang, QN. et al. 3D convolutional neural networks-based multiclass classification of Alzheimer’s and Parkinson’s diseases using PET and SPECT neuroimaging modalities. Brain Inf. 8, 23 (2021). https://doi.org/10.1186/s40708-021-00144-2

DOI: https://doi.org/10.1186/s40708-021-00144-2