Abstract

Geotechnical engineers have long used the lumped factor of safety approach in the design of retaining walls. In recent years, the uncertainty inherent in soil properties has attracted more attention from researchers, and reliability analyses are now widely used. Combining probabilistic and deterministic analyses has become common practice, with the failure probability obtained from risk analysis serving as a complement to the factor of safety. In this paper, a reliability analysis of a deep excavation is presented using two probabilistic methods, the Point Estimate Method (PEM) and Monte Carlo Simulation (MCS). The horizontal displacement of the excavated wall and the factor of safety are adopted as the basis for assessing the performance and stability of the system. The paper explains why probabilistic analysis with numerical methods is challenging, and how PEM and MCS can be used to calculate the statistical moments of output variables and to estimate the probability of failure. The results are presented and compared in terms of statistical moments, probability of occurrence and most likely values at every stage of construction.


Introduction

Due to the depositional nature of soil masses, their properties can vary significantly from place to place, even over short distances. In addition, measuring geotechnical properties, especially in situ, is very challenging. Even when soil properties can be readily determined, inaccuracies in measurement and differences between laboratory and field-scale behavior introduce significant error. As a result, the engineering of excavations in soil involves large uncertainties. Predictions based on single evaluations have practically zero probability of ever being realized, and design decisions based on them are therefore open to question.

Various researchers have studied different aspects of deep excavations, treating them as deterministic problems in which the input parameters are assumed to be single valued (see, e.g. [6, 25]). These problems were solved by deterministic calculation, using safety factors and adopting conservative assumptions in engineering planning and design. An engineering system designed this way can be over-designed and extremely expensive. Furthermore, even advanced deterministic methods are of little use when the uncertainty is very high. As a result, the reliability level of a geotechnical structure cannot be estimated quantitatively by deterministic analysis alone.

The reliability-based design approach has long been used for slope stability and excavation problems (e.g., [2, 5, 7, 10, 17, 34]), employing various probabilistic methods such as the First Order Reliability Method (FORM), the Second Order Reliability Method (SORM) and Monte Carlo Simulation (MCS). The drawback of FORM and SORM is that the results depend on the mean value of input variables at which the partial derivatives of the safety margin are evaluated (invariance problem). These methods are therefore accurate only for linear functions, and the error can be quite large when the performance function is strongly nonlinear. A more effective and accurate reliability calculation method is thus needed, which is why researchers favor MCS; it is usually employed as a reference to check the accuracy of other methods. The disadvantage of MCS is that the number of simulations increases substantially as the target failure probability (i.e., the accepted level of risk) decreases, and the calculation time grows accordingly.
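The growth in the number of MCS trials can be made concrete with the standard binomial result for crude Monte Carlo: the coefficient of variation of the estimated failure probability is sqrt((1 - pf) / (N pf)). The sketch below simply inverts that relation; the function name and the 10% target coefficient of variation are illustrative assumptions, not values taken from this study.

```python
import math


def required_trials(p_f: float, target_cov: float = 0.1) -> int:
    """Trials N such that the crude MCS estimate of p_f has the requested
    coefficient of variation, from cov = sqrt((1 - p_f) / (N * p_f))."""
    if not 0.0 < p_f < 1.0:
        raise ValueError("p_f must lie strictly between 0 and 1")
    if target_cov <= 0.0:
        raise ValueError("target_cov must be positive")
    return math.ceil((1.0 - p_f) / (p_f * target_cov ** 2))


if __name__ == "__main__":
    # Each tenfold reduction of the target p_f needs roughly ten times more trials.
    for p_f in (1e-1, 1e-2, 1e-3, 1e-4):
        print(f"p_f = {p_f:g}: about {required_trials(p_f)} trials")
```

Under these assumptions the required number of trials scales roughly with 1/pf, which is why a finite difference model that takes minutes per run quickly becomes impractical for low target risk levels.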

Another method for evaluating the statistical moments of a performance function is the Point Estimate Method (PEM). The basic idea is to replace the probability distributions of continuous random variables with discrete equivalent distributions having the same first three central moments, and to use them to calculate the mean value, standard deviation, and skewness of a performance function that depends on the input variables. While PEM does not provide a full distribution of the output variable, as Monte Carlo does, it requires little knowledge of probability concepts and can be applied to any probability distribution. In future, it might be widely used for reliability analysis and for evaluating the failure probability of engineering systems. The common ground of probabilistic studies in slope and excavation engineering is that the reliability index or failure probability is obtained by combining the limit equilibrium slice method with a probabilistic method (mainly FORM and MCS) (e.g., [22, 24, 30]). There remains a wide gap in implementing these non-deterministic methods in more complex analyses, such as the finite difference method.

In this paper, in an attempt to fill the existing gap in the literature on probabilistic analysis of deep excavation problems, the Point Estimate Method (PEM) and Monte Carlo Simulation (MCS) are compared in the stability analysis of a real deep excavation. A technique is employed for constructing the Probability Distribution Functions (PDF) of the basic uncertain variables without collecting large amounts of data, which is a major concern in probabilistic analysis of geotechnical problems. The statistical moments of the system response, the probability of failure and the most likely values obtained at every construction stage, as well as the sampling procedure of each method, are compared and discussed. Finally, a procedure is proposed for reliability analysis of deep excavations using the data available in common geotechnical investigation schemes. The numerical modeling and the generation of random trials in MCS are carried out in the finite difference code FLAC, as described in the following sections.

Soheil commercial complex case study

Subsurface condition and construction details

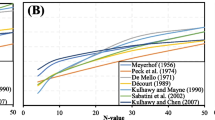

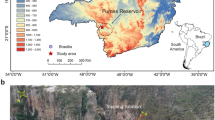

The Soheil commercial complex project site is located in the northern part of Tehran, Iran, and is surrounded by several buildings and one major street. The city of Tehran lies at the foot of the southern slopes of the Alborz Mountain Range and sits on an alluvial plain formed over time by flood erosion of the mountains. The behavior of the subsoil was characterized by soil parameters established from a number of laboratory and in situ tests, such as the Standard Penetration Test (SPT), the Plate Load Test (PLT) and the in situ direct shear test. According to the subsurface investigations, the soil profile of the site consists of three main layers, which are shown in Fig. 1. No groundwater was observed during the construction stages.

The Soheil project involves construction of a high-rise building with a five-story basement car park. The excavation depth varies along the sides of the site because of the ground slope and the existing basements of adjacent structures; in the section studied here it is about 21 m. Because of the neighboring structures and the depth of the planned foundation excavation, a retaining system was required. Table 1 summarizes the geometric configuration and Table 2 presents other design details of the excavated wall.

Numerical modeling

The soil model was a modified Duncan-Chang hyperbolic model [11, 32]. This nonlinear model includes the influence of the stress level on the stiffness, strength, and volume change characteristics of the soil, and makes it possible to simulate the hysteretic behavior of the soil. Considering the parameters H, We, Be, and Db as defined in Fig. 2, separate studies [3, 12, 20] found that We = 3Db and Be = 3(H + Db) were appropriate values for We and Be; beyond these values, We and Be have little influence on the horizontal deflection of the wall due to the excavation of the soil. For the instrumented wall to be simulated, H was 21 m, Db was 63 m, Be was 240 m, and We was 30 m. FLAC2D V7.0 was used to carry out the finite difference simulations of the excavated wall, treating it as a plane strain problem and accounting for the long-term behavior using drained conditions. The studied wall section is located about 80 m from the excavation corner, with a ratio of L/H equal to 8.0 (greater than 6 according to Finno et al. [14] and Ou et al. [26]), and is supported on a very dense cemented layer, so the plane strain condition can reasonably be assumed. In order to compare the results of the non-deterministic methods with the field measurements, the horizontal displacement measured at the top and center of the studied wall was selected in the current study.

Uncertainty model

In order to perform a probabilistic analysis, it is necessary to build the probability distribution function (PDF) of the input parameters, which is regarded as an uncertainty model. There are several ways to do this: (1) fitting a distribution function to the collected data, (2) assuming a PDF for the input parameters based on previous studies available in the literature and (3) providing the p-box model of the input parameters on the basis of Random Set (RS) theory. In short, in random set theory, set-valued information with given probability measures is combined, leading to probability bounds in terms of discrete cumulative distribution functions (CDF). The bounds comprise any distribution compatible with the existing data, including the actual distribution that would be obtained if numerous data existed. Since common geotechnical surveys often provide limited data, insufficient for fitting a suitable PDF to the samples, the random set procedure seems to be a suitable alternative. Moreover, the results of numerical analysis are very sensitive to variation of the input distribution parameters; thus, assuming a PDF (i.e., normal, lognormal etc.) with assumed statistical moments for the variables can be misleading. In the current research, a technique is employed to overcome these obstacles.

Random set theory provides an appropriate mathematical framework for combining probabilistic as well as set-based information [35]. The incomplete knowledge about the vector of basic variables x = (x1,…,xN) can be expressed as a random relation R, which is a random set \(\left( {{\Im },m} \right)\) on the Cartesian product \(X_{1} \times \cdots \times X_{N}\); where \({\Im } = \left\{ {A_{i} :i = 1, \ldots ,n} \right\}\) and m is a mapping, \({\Im } \to \left[ {0,1} \right]\), so that \(m\left( \emptyset \right) = 0\) and \(\sum\nolimits_{{A \in {\Im }}} {m\left( A \right)} = 1\).

\({\Im }\) is called the support of the random set, the sets Ai are the focal elements (Ai \(\subseteq\) X) and m is called the basic probability assignment. Each set, \(A \in {\Im }\), contains some possible values of the variable, x, and m(A) can be viewed as the probability that A is in the range of x. In the current research, m is assumed equal to 0.5 for each set, which indicates that both sources of information provided for the input parameters are equally reliable. Alternatively, the sets Ai (i.e., each set of spatially correlated data presented in Fig. 3) could be ranges of a variable obtained from source number i with relative credibility mi. Because of the imprecise nature of this formulation, it is not possible to calculate the ‘precise’ probability, Pro, of a generic x ∈ X or of a generic subset E ⊂ X, but only lower and upper bounds on this probability (Fig. 3): Bel(E) ≤ Pro(E) ≤ Pl(E). In the limiting case, when \({\Im }\) is composed of single values only (singletons), then Bel(E) = Pro(E) = Pl(E) and m is a probability distribution function.
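The bounds Bel(E) and Pl(E) can be illustrated with a short sketch for interval-valued focal elements. It uses the usual definitions (Bel sums the masses of focal elements contained in E, Pl sums the masses of those intersecting E); the two intervals below are hypothetical numbers chosen for illustration, not the ranges of Table 3.

```python
from typing import List, Tuple

Interval = Tuple[float, float]


def belief_plausibility(focal: List[Interval], masses: List[float],
                        event: Interval) -> Tuple[float, float]:
    """Return (Bel, Pl) of a closed interval event for interval focal elements."""
    if len(focal) != len(masses):
        raise ValueError("one mass per focal element is required")
    lo, hi = event
    # Bel: focal elements lying entirely inside the event.
    bel = sum(m for (a, b), m in zip(focal, masses) if lo <= a and b <= hi)
    # Pl: focal elements that overlap the event at all.
    pl = sum(m for (a, b), m in zip(focal, masses) if a <= hi and b >= lo)
    return bel, pl


# Two equally weighted sources (m = 0.5 each); the ranges are hypothetical.
focal_elements = [(100.0, 200.0), (150.0, 300.0)]
masses = [0.5, 0.5]
bel, pl = belief_plausibility(focal_elements, masses, (0.0, 250.0))
print(f"Bel = {bel}, Pl = {pl}")
```

Evaluating the event (−∞, x] for increasing x traces the lower and upper discrete cumulative distribution functions that bound the unknown CDF.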

Various researchers (e.g., [1, 9, 23, 29, 38, 39]) have pointed out that soil properties should be modeled as spatially correlated random variables or ‘random fields’, because the use of perfectly correlated soil properties gives rise to unrealistically large failure probabilities for geotechnical structures (see, e.g. [15]). Because soil properties are spatially averaged, the inherent spatial variability of a parameter, as measured by its standard deviation, can be reduced significantly if the ratio Θ/L is small, where Θ is the spatial correlation length and L is a characteristic length, e.g., that of a potential failure surface. The variance of these spatial averages can be related to the point variance using the variance reduction factor, Γ², as discussed by Vanmarcke [39], through σΓ = Γ·σ, where σ is the standard deviation of the field data (point statistics) and σΓ is the standard deviation of the spatial average of the data over volume V. The variance reduction factor depends on the averaging volume and the type of correlation structure in the form:

In this study, an alternative approach introduced by Peschl [27] based on the Vanmarcke method has been adopted that applies the variance reduction technique. If n sources of information are assumed, the function of the spatial average of the data xi,Γ can be calculated from the discrete cumulative probability distribution of the field data xi

The material parameters for the soil layers, which were treated as basic variables, are summarized in Table 3. The parameters were established not only from laboratory and in situ tests (geotechnical report: source 1), but also from previous experience in the region (expert knowledge: source 2). Since the expert knowledge is based on a number of similar projects, while the geotechnical report forms the basis for design, both sources have been equally weighted in this particular case. It should be noted that Kur was selected as Kur = 3K, based on in situ Plate Load Tests; the exponent n was assumed equal to 0.5 and Rf was 0.93 for all three layers.

Typical values of spatial correlation lengths, Θ, for soils, as given e.g. by Li et al. [19], are in the range 0.1 to 5 m for Θy and 2 to 30 m for Θx. Based on the subsurface conditions in the project and some specific regulations in the region of interest (e.g., the distance between boreholes must be at least 15 m, depending on the uniformity level of the subsurface soils), the average spatial correlation length for this study is assumed to be 10 m. The characteristic length, L, was taken as 30 m, based on analyses investigating potential failure mechanisms for this problem. When spatial correlation is taken into account (Fig. 3), the discrete cumulative probability function becomes steeper.
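To give a feel for the size of the reduction with Θ = 10 m and L = 30 m, the sketch below applies one simple and widely quoted approximation of the variance reduction factor, Γ² = 1 for L ≤ Θ and Γ² = Θ/L otherwise. This form is an assumption made only for illustration; the expression actually used in the study is not reproduced in this text and may differ.

```python
import math


def variance_reduction_factor(theta: float, length: float) -> float:
    """Gamma^2 under an assumed simple approximation:
    1 when the averaging length does not exceed theta, otherwise theta / length."""
    if theta <= 0.0 or length <= 0.0:
        raise ValueError("theta and length must be positive")
    return 1.0 if length <= theta else theta / length


def averaged_std(sigma_point: float, theta: float, length: float) -> float:
    """Standard deviation of the spatial average: sigma_Gamma = Gamma * sigma."""
    return math.sqrt(variance_reduction_factor(theta, length)) * sigma_point


gamma_sq = variance_reduction_factor(theta=10.0, length=30.0)
print(f"Gamma^2 = {gamma_sq:.3f}, Gamma = {math.sqrt(gamma_sq):.3f}")
```

Under this assumed form the point standard deviation would be scaled by roughly 0.58, which is the mechanism behind the steeper cumulative distribution noted above.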

Sensitivity analysis

A sensitivity analysis is performed first in order to reduce the number of finite difference runs required in PEM. For the nine basic variables given in Table 3, 4n + 1 (i.e., 37) calculations are required according to the procedure proposed by U.S. EPA: TRIM [37] to obtain a relative sensitivity score for each variable. Similarly, for the six basic variables (i.e., strength parameters of all layers) considered in the stability analysis, 25 simulations were performed using the Geostudio Limit Equilibrium Analysis (LEA) software. The horizontal displacement at the top of the wall in all construction stages, ux−A, and the safety factor at the final construction step are evaluated. Figure 4 shows the relative sensitivity obtained for each variable for both criteria. With a threshold value of 5%, C2-Φ2-C3-Φ3 and C2-K2-K3 are identified as the uncertain basic variables in the stability and deformation analyses, respectively.

Probabilistic methods

Point Estimate Method (PEM)

There are two main approaches to the Point Estimate Method. The first is the original approach proposed by Rosenblueth [31] and its modifications by later authors (e.g. [8, 16, 18, 21]), who attempted to save computational cost by reducing the number of prefixed sampling points. The second is the approach proposed by Zhou and Nowak [40], which includes three different integration rules, namely n + 1, 2n and 2n² + 1 (n is the number of basic variables involved in the analysis). Thurner [36] concluded that the 2n² + 1 integration rule offers an optimum compromise between accuracy and computational effort. The rules differ in the sampling and weighting technique they employ. The weights assigned to all permutations in the n + 1 and 2n rules are equal, which is clearly a shortcoming, while in the 2n² + 1 rule different weights are assigned to the permutations (i.e., greater weights to more probable permutations). Additionally, the number of sampling points in the tails of the PDFs is much greater in the 2n² + 1 rule than in the other two, so more precise values of the probability of failure can be derived. For these reasons, the 2n² + 1 rule is used in this research for generating sampling points.

Zhou and Nowak [40] have presented a general procedure to numerically approximate Eq. (4), considering various possibilities using the Gauss–Hermite quadrature formula. This method has been established for a multivariate case in standard normal space, which gives the approximation of Eq. (4) as follows:

where n is the number of variables, m is the number of considered points, zj are typical predetermined points and wj are the respective weights given in Table 4. The sum of the weights is always 1.0 and the number of required calculations thus depends on the integration rule. Table 5 presents sampling points of basic variables in deformation analysis (i.e., C2, K2 and K3) along with assigned weights to each combination.
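The 2n² + 1 rule can be sketched in standard normal space as follows. Table 4 is not reproduced in this text, so the points and weights below follow the commonly cited form of the rule (one central point, 2n axial points at ±√(n + 2), and 2n(n − 1) points at ±√((n + 2)/2) on pairs of axes) and should be checked against that table; the function names are illustrative. For n = 3 this gives 19 points with five distinct coordinate values per variable, which appears consistent with the five K2 sampling values quoted later in the paper.

```python
import itertools
import math
from typing import Callable, List, Sequence, Tuple


def pem_2n2_plus_1(n: int) -> Tuple[List[Tuple[float, ...]], List[float]]:
    """Points and weights of the assumed 2n^2 + 1 rule in standard normal space."""
    if n < 2:
        raise ValueError("the rule is written here for n >= 2 variables")
    points = [tuple([0.0] * n)]
    weights = [2.0 / (n + 2)]
    r_axis = math.sqrt(n + 2)
    w_axis = (4.0 - n) / (2.0 * (n + 2) ** 2)  # becomes negative for n > 4
    for i in range(n):
        for sign in (1.0, -1.0):
            z = [0.0] * n
            z[i] = sign * r_axis
            points.append(tuple(z))
            weights.append(w_axis)
    r_pair = math.sqrt((n + 2) / 2.0)
    w_pair = 1.0 / (n + 2) ** 2
    for i, j in itertools.combinations(range(n), 2):
        for si, sj in itertools.product((1.0, -1.0), repeat=2):
            z = [0.0] * n
            z[i], z[j] = si * r_pair, sj * r_pair
            points.append(tuple(z))
            weights.append(w_pair)
    return points, weights


def pem_moments(g: Callable[[Sequence[float]], float], n: int) -> Tuple[float, float]:
    """Weighted mean and standard deviation of g evaluated at the rule's points."""
    points, weights = pem_2n2_plus_1(n)
    values = [g(z) for z in points]
    mean = sum(w * v for w, v in zip(weights, values))
    var = sum(w * (v - mean) ** 2 for w, v in zip(weights, values))
    return mean, math.sqrt(max(var, 0.0))


if __name__ == "__main__":
    pts, wts = pem_2n2_plus_1(3)
    print(len(pts), "points, weights sum to", round(sum(wts), 12))
    print(pem_moments(lambda z: z[0] + 2.0 * z[1], 3))
```

In an application, each standard normal coordinate z would first be mapped to the physical variable (for a lognormal parameter, x = exp(μln + σln·z)) and g would be one run of the numerical model, so the cost is 2n² + 1 model runs.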

Monte Carlo Simulation (MCS)

The Monte Carlo Simulation (MCS) technique is a very powerful tool for engineers and can be quite accurate if enough simulations are performed. MCS consists of sampling a set of material properties from their joint PDF and introducing them into the model, so that a set of results (e.g., displacements) is obtained. This operation is repeated a large number of times and an empirical frequency-based probability distribution can be defined for each result. Information on the distribution and statistical moments of the response variable is then obtained from the resulting simulations. The accuracy of MCS increases with the number of simulations. However, this makes it computationally expensive, so the analyst's task is to increase the efficiency of the simulation by speeding up execution and minimizing computer storage requirements. In the current study, the PDFs of the basic input variables identified in the sensitivity analysis are built, and the random variables are then generated from these PDFs. Using the obtained results, the PDF of the system response (i.e., horizontal deformation of point A in Fig. 2) is plotted and compared for 10, 100, 200, 300 and 400 simulations in the deformation analysis of the problem, while far more trials are performed in the stability analysis.
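The MCS loop described above can be sketched as follows, using the same trial counts as the deformation analysis. The response function is a hypothetical stand-in for one finite difference run, and the lognormal parameters are illustrative assumptions; neither is taken from the study.

```python
import math
import random
import statistics
from typing import Tuple


def toy_response(k2: float) -> float:
    """Hypothetical stand-in for one model run: displacement falls as stiffness rises."""
    return 5000.0 / k2


def run_mcs(n_trials: int, seed: int = 0) -> Tuple[float, float]:
    """Sample the input, evaluate the response, and return its mean and standard deviation."""
    if n_trials < 2:
        raise ValueError("at least two trials are needed for a standard deviation")
    rng = random.Random(seed)
    # Assumed lognormal input: median 130, log-standard deviation 0.25.
    responses = [toy_response(rng.lognormvariate(math.log(130.0), 0.25))
                 for _ in range(n_trials)]
    return statistics.mean(responses), statistics.stdev(responses)


if __name__ == "__main__":
    for n in (10, 100, 200, 300, 400):
        mean, std = run_mcs(n)
        print(f"n = {n:3d}: mean = {mean:.2f}, std = {std:.2f}")
```

Printing the moments for increasing n gives a quick check of whether they have stabilized before any tail probability is read from the histogram.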

Constructing PDF of input variables

In order to apply PEM and MCS to the Soheil project, it is necessary to have a probability distribution function for each random variable. To determine the distribution function for a particular soil property, more than 40 data points are needed [4]. Because of the lack of test results and the limited sampling in usual geotechnical projects, it is not usually feasible to build an exact PDF of the considered random variables. Thus, the following two alternatives are discussed for matching the random set input variable given in Fig. 3 with a corresponding PDF for use in the subsequent probabilistic calculations. One of the two alternatives is then selected.

Alternative I, the “three-sigma” rule

Based on the relationship that holds for a normally distributed variable, as a rule of thumb, an event is considered to be “practically impossible” if it lies more than three standard deviations away from the mean. This is known as the “three-sigma” rule. For non-normal unimodal distributions, the 3-sigma interval still covers at least about 95% of outcomes [28]. Schneider [33] proposed the relationships given below, implicitly using the 3-sigma rule, to determine the true mean and standard deviation of soil parameters: \(\mu = \left( {x_{l} + 4n + x_{r} } \right)/6\) and \(\sigma = \left( {x_{r} - x_{l} } \right)/6\),

where μ and σ are the mean and standard deviation of the variable, respectively, xl is the estimated minimum value, xr the estimated maximum value, and n is the most likely value or mode. As an example, Fig. 5a shows an application of the 3-σ rule to the random set of the modulus factor of layer two using the relationship given by Schneider. The most probable value is obtained by averaging all random set extreme values. The estimated extreme values (xl, xr) are the outermost values of the random set bounds. This method can be used, but its drawback is that the probability assigned to values near the random set bounds is very low. This approach may therefore underestimate some values around the edge of the random set. It might be more reasonable to use an approach that assigns a more appropriate probability share to the extreme values in the random set, providing a better match between the reference data and the PEM/MCS input variable.

Alternative II, the selection of a best fit to a uniform distribution

In this alternative, a uniform distribution is first constructed whose left and right extreme values are the medians of the left and right random set bounds, respectively (gray dotted distribution in Fig. 5b). Typical, well-known distributions are then fitted to this uniform distribution and, depending on the shape of the random set bounds and the variable itself, an appropriate distribution is selected for further analysis. The following equations can be used to calculate the μ and σ of a uniform distribution: \(\mu = \left( {a + b} \right)/2\) and \(\sigma = \left( {b - a} \right)/\sqrt {12}\),

where a and b are the minimum and maximum values of the uniform distribution function, respectively. The approach was applied again to the modulus factor of the second layer using triangular, normal, and lognormal distributions, as depicted in Fig. 5b. As can be seen, this method looks more reasonable since it covers the whole range of random set values, so this alternative is chosen for providing the equivalent probability distribution for further non-deterministic analysis. The lognormal distribution function is selected for this particular parameter because it exhibits skewness comparable to that of the random set bounds and is commonly used for the modulus factor in the literature, since it always gives positive values. The approach has been applied to the other random variables of the problem, and the results are given in Table 6.
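One way to obtain a lognormal distribution that matches a given uniform distribution is to equate their means and standard deviations. The sketch below does this with the standard lognormal moment relations; the fitting criterion actually used in the study is not stated, so moment matching is an assumption here, and the bounds a = 100 and b = 200 are hypothetical.

```python
import math
from typing import Tuple


def uniform_moments(a: float, b: float) -> Tuple[float, float]:
    """Mean and standard deviation of a uniform distribution on [a, b]."""
    if b <= a:
        raise ValueError("b must exceed a")
    return (a + b) / 2.0, (b - a) / math.sqrt(12.0)


def lognormal_params_from_moments(mean: float, std: float) -> Tuple[float, float]:
    """Parameters (mu_ln, sigma_ln) of ln(X) for a lognormal X with the given moments."""
    if mean <= 0.0 or std <= 0.0:
        raise ValueError("mean and std must be positive")
    sigma_ln = math.sqrt(math.log(1.0 + (std / mean) ** 2))
    mu_ln = math.log(mean) - 0.5 * sigma_ln ** 2
    return mu_ln, sigma_ln


mean, std = uniform_moments(100.0, 200.0)  # hypothetical bounds
mu_ln, sigma_ln = lognormal_params_from_moments(mean, std)
print(f"mean = {mean:.1f}, std = {std:.2f}, mu_ln = {mu_ln:.4f}, sigma_ln = {sigma_ln:.4f}")
```

A fit judged visually against the random set bounds, as in Fig. 5b, may lead to slightly different parameters than this moment-matched pair.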

Results and discussion

Generating random numbers

As mentioned earlier, the first step in probabilistic analysis is to generate the random variables from the assigned PDFs. In PEM, based on the equation presented in Table 4 and the weights assigned to each sample, the different permutations of input parameters (i.e., 2n² + 1) are evaluated (see Table 5). Figure 6 shows the sampling points of the basic variables on their PDF graphs. In this method, a small number of points replace the PDF, and the combination of these points determines the number of permutations. Moreover, the samples are distributed properly along the tails of the PDF, which leads to a reasonable estimation of the statistical moments of the system response.

In order to generate the random numbers in MCS, a dedicated code was written in the FISH language of FLAC2D. By default, the programming environment of FLAC2D can only generate uniform random numbers, so a code was needed for generating normal and lognormal random numbers. For this purpose, normal random numbers were generated using the existing commands, and lognormal random numbers were then created by applying the standard transformation from normal to lognormal variables.
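The idea of building normal and lognormal variates from a uniform generator can be sketched with the Box-Muller transform. This is a Python illustration under that assumption, not the FISH code used in the study, and the transform actually implemented there is not specified.

```python
import math
import random


def standard_normal(rng: random.Random) -> float:
    """One standard normal variate from two uniform variates (Box-Muller)."""
    u1 = 1.0 - rng.random()  # shift to (0, 1] so that log(u1) is defined
    u2 = rng.random()
    return math.sqrt(-2.0 * math.log(u1)) * math.cos(2.0 * math.pi * u2)


def lognormal_sample(rng: random.Random, mu_ln: float, sigma_ln: float) -> float:
    """Lognormal variate obtained by exponentiating a normal variate."""
    return math.exp(mu_ln + sigma_ln * standard_normal(rng))


if __name__ == "__main__":
    rng = random.Random(2024)
    # Illustrative parameters only: median 150, log-standard deviation 0.25.
    print([round(lognormal_sample(rng, math.log(150.0), 0.25), 1) for _ in range(5)])
```

Here mu_ln and sigma_ln are the mean and standard deviation of the logarithm of the variable, so every generated value is strictly positive.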

As mentioned previously, MCS is the most precise probabilistic method, but a great number of realizations must be simulated in order to build the tails of the response PDF correctly. Although this is feasible in simple geotechnical solutions, such as limit equilibrium analysis, in complex problems such as Finite Difference (FD) analysis it requires a computational effort that is practically impossible. Because of these limitations, the study of MCS results for the FD deformation analysis concentrated on 10, 100, 200, 300 and 400 simulations, and the results are compared.

The discrete probability functions of K2 are presented in Fig. 7 for these numbers of simulations against 10,000 simulations. The shapes of the PDF for n = 400 and n = 10,000 trials are very close to each other, but the sampled values show some difference. As the number of simulations increases, the shape of the PDF stabilizes and the difference between samplings is minimized. For instance, the mean, standard deviation and skewness for n = 400 and n = 10,000 trials differ only slightly, which means that the statistical moments are modeled with reasonable accuracy at n = 400. It should be noted that although the shape of the PDF is similar for n = 400 and n = 10,000 trials, the range of variation of the parameter becomes a little wider. For example, for n = 10,000 there are some sampling points between 280 and 320, which can affect some system responses.

Comparison of results

In accordance with Fig. 6, the sampling points of K2 in PEM are 133, 236, 74, 200, and 88. From the histograms presented in Fig. 7 for K2 in MCS, it can be observed that the PEM sampling procedure gives a narrower range for parameter K2, which is a critical variable in the evaluation of Ux,A according to the sensitivity analysis performed earlier. Accordingly, the discrepancy between the PEM and MCS estimates can be attributed to the different sample ranges obtained for K2. Table 7 presents the sampling ranges of PEM and MCS. From this table, it can be deduced that the mismatch decreases as the PDF of the input variables changes from non-normal to normal (i.e., from asymmetric to symmetric, or from highly skewed to slightly skewed distributions). The mean values of Ux,A obtained for all numbers of simulations in MCS are plotted against the mean value of the system response in PEM in Fig. 8 for all construction stages. There is a significant difference between the two probabilistic methods in predicting the mean value. Following the explanation above, it can be concluded that if the probability distribution functions of all variables were normal, the difference between the results would be minimized.

The standard deviation, σ, reflects the dispersion and uncertainty of a parameter. The standard deviations of Ux,A in PEM and MCS are presented in Fig. 9 for all construction stages. The fluctuation trends of σUx,A for MCS and PEM are in good agreement, and the difference between the obtained values is much smaller than for the mean values of Ux,A. This indicates that, despite its limited number of simulations, PEM gives a reasonable estimate of the dispersion of results using far fewer simulations than MCS.

Figure 10 shows the most likely values obtained by the PEM and MCS methods (i.e., (μ − 2σ, μ + 2σ)). The range ± 2σ was chosen because it covers about 95% of outcomes for a normal distribution and, from probability theory [28], still covers the large majority of outcomes for any unimodal distribution. From Fig. 10, a significant difference exists between the most likely values obtained in PEM and MCS, which can be attributed to the difference between the μ values predicted by these methods, since their σ values are reasonably close to each other. Moving outside the zone of most likely values indicates that the actual ground condition is gradually moving away from the assumed conditions. Thus, the MCS results in Fig. 10 suggest that the soil parameters of the model (especially those of layer two, which has the most influence on the results of the deformation analysis) are partially overestimated. This could be reduced by complementary subsurface investigations, so that the uncertainty model would capture the variability of the input parameters more realistically, leading to improved safety in the project.

A value of about H/500 [13], where H is the wall height, is used here as the limiting value of the limit state function in order to obtain the probability of failure, pf, in terms of serviceability. It can be seen from Table 8 that the probabilities of failure in all construction stages are very different in PEM and MCS. This can be attributed to the narrower sampling range in PEM, which leads to larger values of μ in PEM than in MCS and consequently shifts the PDF of the system response (i.e., Ux,A) to the right (i.e., increases pf). It is worth mentioning that the unstable trend in pf, both with the progress of construction stages and with the increasing number of trials, is a clear indication that many more simulations are required in MCS in order to obtain precise values of the probability of failure in a deep excavation problem.
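With H = 21 m, the H/500 criterion corresponds to a limiting displacement of 0.042 m. A minimal sketch of the serviceability failure probability from a set of simulated displacements is given below; the displacement values are hypothetical and displacement magnitudes are assumed to be positive.

```python
from typing import Sequence


def displacement_limit(wall_height_m: float, ratio: float = 500.0) -> float:
    """Limiting horizontal displacement taken as H / ratio."""
    if wall_height_m <= 0.0 or ratio <= 0.0:
        raise ValueError("wall height and ratio must be positive")
    return wall_height_m / ratio


def empirical_pf(displacements_m: Sequence[float], limit_m: float) -> float:
    """Fraction of simulated displacement magnitudes that exceed the limit."""
    if len(displacements_m) == 0:
        raise ValueError("at least one simulated displacement is required")
    exceed = sum(1 for u in displacements_m if u > limit_m)
    return exceed / len(displacements_m)


limit = displacement_limit(21.0)
simulated = [0.030, 0.035, 0.041, 0.044, 0.050]  # hypothetical results, in metres
print(f"limit = {limit:.3f} m, p_f = {empirical_pf(simulated, limit):.2f}")
```

With only a few hundred trials, an estimate of this kind changes in coarse steps of 1/n, which is one reason the pf values fluctuate between stages and trial counts.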

As previously mentioned, MCS requires many simulations to achieve a stable output, which is impractical in complex problems such as finite difference analysis of deep excavations. An alternative for deep excavation problems with low target levels of risk (i.e., low target probability of failure) is to use MCS to determine the Factor of Safety (FS) by limit equilibrium methods of analysis. For this purpose, the excavated wall of the Soheil Complex was analyzed probabilistically in LEA software to determine the FS of the project. Here, the Geostudio2007 software is used to determine the statistical moments and the probability of failure for the stability aspect of the problem. The simulations were performed for various numbers of trials (i.e., n = 10, 100, 1000, 10,000, 100,000 and 1,000,000) and the results are presented in Table 9. It can be seen from this table that as the number of simulations increases beyond n = 1000, the reliability index (β) increases slightly, and at n = 100,000 it becomes nearly constant. Figure 11 shows the histogram of Ux,A in the final excavation stage for n = 10, 100 and 1000. A significant difference exists in the scatter and convergence of results in these simulations, which is clearly the key factor in the discrepancy between the results presented in Table 9.
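For reference, the reliability index for a factor of safety is commonly defined as β = (μFS − 1)/σFS, and if FS is additionally assumed to be normally distributed, the corresponding failure probability is Φ(−β). The sketch below uses this common definition; whether it coincides exactly with the values reported in Table 9 is an assumption, and the numbers are illustrative.

```python
import math


def reliability_index(mean_fs: float, std_fs: float, critical_fs: float = 1.0) -> float:
    """beta = (mean FS - critical FS) / standard deviation of FS."""
    if std_fs <= 0.0:
        raise ValueError("std_fs must be positive")
    return (mean_fs - critical_fs) / std_fs


def pf_from_beta_normal(beta: float) -> float:
    """P(FS < critical) = Phi(-beta), valid only under an assumed normal FS."""
    return 0.5 * math.erfc(beta / math.sqrt(2.0))


beta = reliability_index(mean_fs=1.5, std_fs=0.25)  # illustrative moments
print(f"beta = {beta:.2f}, p_f = {pf_from_beta_normal(beta):.4f}")
```

Because β depends only on the first two moments of FS, it tends to settle at far fewer trials than a directly counted tail probability.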

According to Table 9, the standard deviation decreases as the number of simulations increases, which is reasonable given the growth of the statistical population. On the other hand, this decrease of σ with increasing number of trials is negligible, which shows that the number of simulations has an insignificant effect on the FS evaluation beyond n = 1000. Comparing the pf obtained by MCS indicates that in every stage of construction the pf (i.e., the probability of FS < 1) is equal to zero, which means that the original design was too conservative and that significant savings could have been made by performing probabilistic analysis before and during the project.

Conclusion

In this paper, two probabilistic methods of analysis (i.e., Monte Carlo Simulation and the Point Estimate Method) were employed in a real deep excavation problem. By comparing the sampling range, the statistical moments and most likely values of the system response, and the probability of failure obtained by each method, it was deduced that the major source of difference between these methods is the mean value, which induces a significant difference in the most likely values and pf. This can be attributed to the narrower range of samples obtained in PEM than in MCS for lognormal distribution functions of the input parameters, and it can be minimized if normal (or nearly normal) PDFs are assigned to all variables. Although the sampling procedures in PEM and MCS were very different, both methods gave relatively similar values of standard deviation.

The MCS results in FD analysis were unstable for trials up to n = 400, which indicates that many more simulations are required in complex types of analysis; thus PEM, despite the differences mentioned earlier, can be preferable to MCS for predicting pf values in serviceability reliability analysis of deep excavations, since it needs much less computational effort. The sampling procedures in PEM and MCS are quite different, but the statistical moments of the input variables in the two methods were in good agreement for normal distribution functions. From the parametric study performed in limit equilibrium analysis, it was found that the effect of varying the number of random trials was not significant beyond 1000 trials in MCS, and that this method can give reasonable results in stability analysis of deep excavations. The paper showed that combining probabilistic deformation analysis, using PEM (under specific conditions) and MCS with finite difference methods, with probabilistic stability analysis by MCS using limit equilibrium analysis could be a desirable procedure, and it is recommended for reliability analysis of deep excavation problems.

References

Alonso EE, Krizek RJ (1975) Stochastic formulation of soil profiles. In: Proc Int Conf Appl Stat Probab (ICASP2), Aachen, Germany, pp 9–32

Babu GL, Singh VP (2009) Reliability analysis of soil nail walls. J Georisk 3(1):44–54

Briaud JL, Lim Y (1999) Tieback walls in sand: numerical simulations and design implications. J Geotech Geoenviron Eng 125:101–110

Carter JP, Desai CS, Potts DM, Schweiger HF, Sloan SW (2000) Computing and computer modeling in geotechnical engineering. In: Proc Geoeng 2000, Melbourne, Australia, vol 1, pp 1157–1252

Chowdhury SS (2017) Reliability analysis of excavation induced basal heave. J Geotech Geol Eng 35(6):2705–2714

Chowdhury SS, Deb K, Sengupta A (2016) Effect of fines on behavior of braced excavation in sand: experimental and numerical study. Int J Geomech ASCE. https://doi.org/10.1061/(ASCE)GM.1943-5622.0000487

Christian JT, Baecher GB (1999) Point estimate method as numerical quadrature. J Geotech Geoenviron Eng (ASCE) 125:779–786

Christian JT, Baecher GB (2002) The point-estimate method with large numbers of variables. Int J Numer Anal Meth Geomech 26:1515–1529

Cornell CA (1971) First-order uncertainty analysis of soils deformation and stability. In: Proc Int Conf Appl Stat Probab (ICASP1), pp 129–144

Duncan JM (2000) Factors of safety and reliability in geotechnical engineering. J Geotech Geoenviron Eng (ASCE) 126:307–316

Duncan JM, Byrne PM, Wong KS, Mabry P (1980) Strength, stress–strain and bulk modulus parameters for finite element analysis of stresses and movements in soil mass. Rep. No. UCB/GT/80-01, University of California, Berkeley, California

Dunlop P, Duncan JM (1970) Development of failure around excavated slopes. J Soil Mech Found Div (ASCE) 96:471–493

FHWA (2015) Soil nail walls reference manual. U.S. Department of Transportation Federal Highway Administration, Washington

Finno RJ, Blackburn JT, Roboski JF (2007) Three dimensional effects for supported excavations in clay. J Geotech Geoenviron Eng (ASCE) 133(1):30. https://doi.org/10.1061/(asce)1090-0241(2007)133:1(30)

Griffiths DV, Fenton GA (2000) Influence of soil strength spatial variability on the stability of an undrained clay slope by finite elements. In: Slope Stab 2000 (ASCE), pp 184–193

Harr ME (1989) Probabilistic estimates for multivariate analyses. Appl Math Model 13(5):313–318

Hoeg K, Murarka RP (1974) Probabilistic analysis and design of a retaining wall. J Geotech Eng Div (ASCE) 100(3):349–365

Hong HP (1998) An efficient point estimate method for probabilistic analysis. Reliab Eng Syst Saf 59(3):261–267

Lee IK, White W, Ingles OG (1983) Geotechnical engineering. Pitman, Boston

Lim Y, Briaud JL (1996) Three dimensional nonlinear finite element analysis of tieback walls and of soil nailed walls under piled bridge abutment. Report to the Federal Highway Administration and the Texas Department of Transportation, Department of Civil Engineering, Texas A&M University, College Station, Texas

Lind NC (1983) Modelling uncertainty in discrete dynamical systems. Appl Math Model 7(3):146–152

Low BK, Gilbert RB, Wright SG (1998) Slope reliability analysis using generalized method of slices. J Geotech Geoenviron Eng 124(4):350–362

Lumb P (1975) Spatial variability of soil properties. In: Proc 2nd Int Conf Appl Stat Probab Soil Struct Eng, pp 397–421

Malkawi AIH, Hassan WF, Abdulla FA (2000) Uncertainty and reliability analysis applied to slope stability. J Struct Saf 22(2):161–187

Nogueira CL, Azevedo RF, Zornberg JG (2009) Coupled analyses of excavations in saturated soil. Int J Geomech (ASCE). https://doi.org/10.1061/(ASCE)1532-3641(2009)9:2(73)

Ou CY, Chiou DC, Wu TS (1996) Three-dimensional finite element analysis of deep excavations. J Geotech Eng (ASCE). https://doi.org/10.1061/(asce)0733-9410(1996)122:5(337)

Peschl GM (2004) Reliability analyses in geotechnics with the random set finite element method. Graz University of Technology, Graz

Pukelsheim F (1994) The three sigma rule. Am Stat 48(2):88–91

Rackwitz R (2000) Reviewing probabilistic soils modeling. Comput Geotech 26:199–223

Ramly HE, Morgenstern NR, Cruden DM (2002) Probabilistic slope stability analysis for practice. Can Geotech J 39:665–683

Rosenblueth E (1975) Point estimates for probability moments. Proc Natl Acad Sci 72(10):3812–3814

Seed RB, Duncan JM (1984) SSCOMP: a finite element analysis program for evaluation of soil structure interaction and compaction effects. Report No. UCB/GT/84-02, University of California, Berkeley, California

Schneider HR (1999) Determination of characteristic soil properties. In: Barends et al., editors. Proc XII Int Conf Soil Mech Geotech Eng, vol 1, pp 273–281

Tobutt DC (1982) Monte Carlo simulation methods for slope stability. Comput Geosci 8(2):199–208

Tonon F, Bernardini A, Mammino A (2000) Determination of parameters range in rock engineering by means of Random Set Theory. Reliab Eng Syst Saf 70:241–261

Thurner R (2000) Probabilistische Untersuchungen in der Geotechnik mittels Deterministischer Finite Elemente-Methode. Institut für Bodenmechanik und Grundbau, Graz

U.S. EPA: TRIM (1999) TRIM, Total Risk Integrated Methodology. TRIM FATE technical support document volume I: description of module. EPA/43/D-99/002A. Office of Air Quality Planning and Standards

Vanmarcke EH (1977) Probabilistic modeling of soil profiles. J Geotech Eng Div (ASCE) 103:1227–1246

Vanmarcke EH (1983) Random fields—analysis and synthesis. MIT-Press, Cambridge

Zhou J, Nowak AS (1988) Integration formulas to evaluate functions of random variables. Struct Saf 5(4):267–284

Authors’ contributions

AS collected the data, carried out the numerical modeling, interpreted the results and wrote the manuscript in consultation with AJC. AJC supervised the project. Both authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sekhavatian, A., Janalizadeh Choobbasti, A. Comparison of Point Estimate and Monte Carlo probabilistic methods in stability analysis of a deep excavation. Geo-Engineering 9, 20 (2018). https://doi.org/10.1186/s40703-018-0089-8

DOI: https://doi.org/10.1186/s40703-018-0089-8