Abstract

An informationally structured environment (ISE) is a key technology for realizing service robots in daily life in the near future. In an ISE, the information of the service robot and its surroundings is structured and provided to the robot on time and on demand. We started the development of a management system for ISE named TMS (Town Management System) in the Robot Town Project in 2005. Since then, we have continued to improve the performance and enhance the functions of TMS. In this paper, we propose the latest version of TMS, named ROS-TMS 5.0, which adopts ROS to utilize its high scalability and rich resources. Next, we introduce the hardware platform of ISE called Big Sensor Box, which incorporates various sensors under ROS-TMS management and operates service robots based on structured information. Robot service experiments, including a watching service for a care receiver, voice control of a communication robot and a robotic bed, and inquiring about information by voice, are also conducted in Big Sensor Box.

Introduction

An informationally structured environment (ISE) is a key technology for realizing service robots in daily life in the near future. Instead of developing an intelligent robot equipped with many sensors, the environment itself is designed to be intelligent by structuring the information of the robot and its surroundings and providing the required information to the service robot on time and on demand. To realize an ISE, various sensors are embedded in the space, including walls, ceilings, furniture, or objects, to acquire a variety of information, such as positions of objects, humans, and robots, or the current status of environments. Acquired information is stored in a cloud database, analyzed in cyber space, and provided to robots and users in real space. A number of studies related to ISE have been published so far. These include “Robotic Room” [1] and “Intelligent Space” [2] at The University of Tokyo, “Smart Room” at MIT MediaLab [3], “Intelligent Room” at AILab [4], “Aware Home” at Georgia Tech. [5], and “Wabot House” at Waseda University [6]. Many of these are still studied actively in laboratories [7,8,9,10,11].

The authors also started to develop a town-scale ISE named “Robot Town Project” in 2005 and have been developing a software platform named Town Management System (TMS) [12,13,14]. In [14], we proposed ROS-TMS 4.0, which adopted the Robot Operating System (ROS) [15] as the middleware of TMS to utilize high scalability and rich resources of ROS. In addition, the hardware platform of ISE called Big Sensor Box which incorporates various sensors under ROS-TMS management and operates service robots based on structured information has been developed. In 2017 we announced the latest version named ROS-TMS 5.0 [16], in which several service tasks required in hospitals or care facilities are newly added such as monitoring of vital data of a care receiver or voice control of a robotic bed. In this paper, we explain some new functions, sensors, and robots of ROS-TMS 5.0 and Big Sensor Box, especially watching service of a care receiver and voice control of service robots.

Related works

The idea of using a variety of sensors embedded in the environment and adapting the behavior of the system to the current situation is also called “ambient intelligence” [17]. The synergy effect of ambient intelligence and robots was studied in “CompanionAble project” [18] in the FP7 project. Additionally, in Horizon 2020, some projects related to “personalising health and care (PHC)” have been planned to utilize service robots for health-care purposes.

Although our research is closely related to ambient intelligence or smart homes [19, 20], and more recently, Internet of Things (IoT) [21] or a cyber-physical system (CPS), one of the characteristics of our approach is that we focus on the development of not only several sensor systems but also a total framework of the ISE including service robots and robotic appliances. We are also developing a software platform with high flexibility and expandability named “ROS-TMS”.

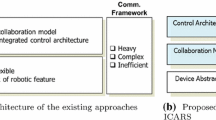

Hierarchical modular structures similar to ROS-TMS can be seen in other robot control architectures and smart house controllers [22,23,24,25,26,27,28,29,30]. For example, Fong et al. [27] proposed a middleware called “Human-Robot Interaction Operating System” (HRI/OS) for collaboration of humans and robots, and adopted a similar hierarchical structure consisting of distributed processing modules. Techniques that divide the total process into several small processes as modules, which are connected freely according to the required tasks, are popular ways to improve the flexibility and the efficiency in the development of large-scale systems. The characteristics of the proposed ROS-TMS are as follows: the proposed system is based on ROS as a middleware, the flexibility and the expandability are quite high, and a variety of open resources in the world can be utilized by adopting ROS as a middleware.

Network robot systems, which connect distributed sensors and a centralized control system through a communication network, have also been proposed, such as the ubiquitous robot network [31, 32] and the RoboEarth project [33]. Johnson et al. [34] proposed the GIVING-A-HAND System, which can easily connect several modules of robot appliances and service robots to build a low-cost and easy-to-use robot system. Furthermore, cloud-based robot controllers using ROS have also been proposed, such as DAvinCi [35] and a generic and security-enhanced system named Rapyuta [36]. A survey of ambient intelligence related to robot services can be found in [37].

ROS-TMS 5.0

The CPS is a fundamental social system for the next IT generation. In a CPS, actual situations, events, and behaviors in the real world are measured by distributed sensors or acquired as open/social data. Optimum solutions are derived in the cyber world based on huge computer resources. Then, the real world is managed and controlled according to the optimum solutions given in the cyber world to realize an ideal real world. A “smart grid”, which optimizes generation, transmission, and storage of electricity, is a representative example of a CPS. After the National Science Foundation (NSF) identified the CPS as an important research area in 2006 [38], this concept has been attracting much attention and a number of studies have been presented so far. To realize a CPS, IoT (Internet of Things) is one of the key technologies for gathering real-world information. In addition, to realize designed plans in the real world, robot technology (RT) is also quite important. Therefore, IoT and RT are indispensable for driving the CPS, and we refer to these two key technologies as IoRT (Internet of Things and Robot Technology), as shown in Fig. 1.

ROS-TMS [13, 14, 16] is a core software platform for IoRT. We released the latest version, ROS-TMS 5.0, in June 2017. We briefly summarize ROS-TMS 5.0 in this section.

ROS-TMS 5.0 consists of more than 150 informationally structured nodes classified into several sensor processing modules, such as laser range finders or IC card readers, robot planning modules, task planners and schedulers, human interface modules, and database modules. The functions required for supporting life services by service robots are designed and implemented as nodes. These nodes are connected and reconfigured flexibly according to the desired service tasks. Figure 2 shows the total structure of ROS-TMS. The summary of each module is as follows:

- TMS_DB: nodes for communicating with a database (DB Server and File Server). TMS_DB is an interface between the nodes in all ROS-TMS modules and the database. If we replace the database system, for example, from MySQL in ROS-TMS 3.4.2 to MongoDB in ROS-TMS 4.0, only the TMS_DB nodes need to be rewritten, so the database system can be replaced easily.

- TMS_UR: nodes for communicating with users. Requests received from users are sent to TMS_TS. TMS_UR includes a variety of UI systems, such as the smartphone interface or the voice recognition system described in this paper.

- TMS_TS: nodes for planning service tasks performed by robots. According to a task requested via TMS_UR, TMS_TS queries the database for the sequence of subtasks that accomplishes the requested task, plans an execution schedule for these subtasks, and manages their execution using a state machine, “SMASH”.

- TMS_SD: nodes for controlling sensors such as laser range finders, cameras, or RFID tag readers. Device drivers of these sensors and low-level processing functions are included.

- TMS_SS: nodes for integrating sensor data. TMS_SS receives multiple sensory data from TMS_SD and integrates and interprets them. Currently, TMS_SS consists of a floor sensing system for detecting objects on the floor using a laser range finder and RGB-D cameras, an intelligent cabinet system for detecting the type and position of objects through RFID tag readers and load cells, motion tracking using RGB-D cameras, and position tracking of moving objects (robots and furniture) using an optical motion tracker (Vicon).

- TMS_SA: nodes for estimating the status of the environment. TMS_SA estimates the current situation of the environment by combining the information from TMS_SS. For example, a human behavior such as reading a book, sleeping on a bed, or sitting on a chair and having a meal is estimated from the position information of humans, objects, and furniture [13].

- TMS_RP: nodes for planning robot motions. TMS_RP contains a motion planner for each robot, covering motions such as moving and grasping.

- TMS_RC: nodes for executing robot motions. TMS_RC contains various robot controllers, including those for a humanoid-type robot and a wheelchair robot.

Among them, User Request (TMS_UR), Sensor Driver (TMS_SD), and Robot Controller (TMS_RC) modules are interface modules between users, sensors, and robots. The Task Scheduler (TMS_TS) module receives a sequence of subtasks from the Database (TMS_DB) module according to the user’s requests. Task Scheduler is designed to achieve the desired task and to schedule the execution timings of subtasks. The Robot Planner (TMS_RP) module manages the execution of each subtask and sends execution commands to the Robot Controller (TMS_RC) module with proper timing.
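The role of TMS_DB as the single point of contact with the database can be illustrated with a minimal sketch. The class names, the `find` method, and the subtask names below are hypothetical and are not taken from the ROS-TMS code base; the sketch only shows why swapping the storage backend leaves the other modules untouched.

```python
from abc import ABC, abstractmethod


class DatabaseBackend(ABC):
    """Hypothetical storage interface; only TMS_DB-like code depends on it."""

    @abstractmethod
    def find(self, collection, query):
        """Return all rows of `collection` whose fields match `query`."""


class InMemoryBackend(DatabaseBackend):
    """Stand-in for a real backend such as MySQL or MongoDB."""

    def __init__(self, data):
        self._data = data

    def find(self, collection, query):
        rows = self._data.get(collection, [])
        return [r for r in rows if all(r.get(k) == v for k, v in query.items())]


class TmsDb:
    """Other modules call this class and never see the backend directly."""

    def __init__(self, backend):
        self._backend = backend

    def get_subtasks(self, task_name):
        rows = self._backend.find("tasks", {"name": task_name})
        return rows[0]["subtasks"] if rows else []


# Illustrative data: the subtask names are assumptions, not the stored ones.
backend = InMemoryBackend(
    {"tasks": [{"name": "get_object", "subtasks": ["move", "grasp", "move", "give"]}]}
)
db = TmsDb(backend)
plan = db.get_subtasks("get_object")
```

Replacing `InMemoryBackend` with another subclass of `DatabaseBackend` would not require any change in code that only calls `TmsDb.get_subtasks`.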

Big Sensor Box

We have been developing a hardware platform for an ISE named Big Sensor Box [14] to demonstrate the performance of the software platform ROS-TMS in a daily life environment. The environment, shown in Fig. 3a, consists of a bedroom, a dining room, and a kitchen.

In this platform, several sensors, robots, intelligent furniture, and robotic appliances are installed. These include the following.

Sensors

- Optical tracking system (Vicon Bonita)

- Distributed RGB-D camera system (Kinect, Xtion)

- UWB beacon (Pozyx)

- Intelligent electric appliances

Service robots

- Humanoid-type service robot (SmartPal V)

- Mobile robot (KXP, Kobuki)

- Communication robot (Double)

- Wheelchair robot (Mimamoru-kun)

- Robotic bed

Intelligent furniture and robotic appliances

- Intelligent refrigerator

- Intelligent cabinet

We have already introduced some sensors, intelligent furniture, and service robots in Big Sensor Box in [14]. In this paper, we explain some sensors and service robots which are critical or newly installed in Big Sensor Box for ROS-TMS 5.0.

Sensors

Optical tracking system

In Big Sensor Box, 18 infrared cameras (Bonita, Vicon Motion Systems) are installed as shown in Fig. 3a, and the three-dimensional positions of infrared markers are tracked. By attaching markers to robots or furniture as shown in Fig. 3b, not only human motion but also the motion of a robot and the position of furniture are continuously measured with an accuracy of better than 1 mm. In the experiments in the “Robot service experiments using ROS-TMS and Big Sensor Box” section, several markers are attached to a communication robot and the position of the robot is measured by the optical tracking system in real time.

UWB beacon

In Big Sensor Box, a UWB (ultra-wideband) beacon system (Pozyx, Pozyx Labs) is installed. The position of a UWB tag is measured by six anchors on the wall (Fig. 4a) with an accuracy of about 10 cm. We also developed a small wearable UWB tag, shown in Fig. 4b, and the position of a user can be measured in real time by wearing it on his/her arm (Fig. 5). The UWB beacon tag also contains a nine-axis velocity/acceleration sensor, so abnormal behaviors such as falling down can be detected (“Watching sensors of a care receiver” section).

Watching sensors of a care receiver

Figure 6 shows a sensor terminal for watching over a person such as a care receiver. The sensor terminal consists of an RGB-D camera (Kinect 2) on the top and a laser range finder (URG-04LX, Hokuyo) on the bottom. The RGB-D camera is used to measure the heartbeat rate and respiratory rate from depth images, and the laser range finder is used to detect the rising motion and the position of the care receiver walking in the room. A monitor displays the heartbeat rate, respiratory rate, and position of the care receiver. Figure 7 shows the measurement of the vital data of the care receiver on the bed. Table 1 shows the average and standard deviation of the measurement errors of the heartbeat rate and respiratory rate for five subjects at rest and during exercise. The table shows that the respiratory rate is measured accurately with or without a blanket and almost coincides with the actual data measured manually. However, the heartbeat rate cannot be measured accurately, and errors as large as ± 20 beats per minute are observed in some cases. We compared the accuracy of the RGB-D sensor with that of a microwave sensor (DC6M4JN3000, Sharp) and found that the RGB-D camera measures the respiratory rate more accurately than the microwave sensor, while the accuracy of both sensors for the heartbeat rate is very low.
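The paper does not state how the rates are extracted from the depth images. As a purely illustrative sketch, assuming the chest depth has already been reduced to one value per frame, a periodic rate can be estimated by counting upward crossings of the signal mean. The function name and the synthetic signal are assumptions made for this example.

```python
import math


def breaths_per_minute(depth_mm, sample_rate_hz):
    """Estimate a periodic rate by counting upward crossings of the mean."""
    mean = sum(depth_mm) / len(depth_mm)
    centered = [d - mean for d in depth_mm]
    crossings = sum(
        1 for prev, cur in zip(centered, centered[1:]) if prev < 0.0 <= cur
    )
    duration_min = len(depth_mm) / sample_rate_hz / 60.0
    return crossings / duration_min


# Synthetic chest-depth signal: 0.25 Hz breathing (15 breaths per minute),
# sampled at 30 Hz for 60 s with a 5 mm amplitude around 800 mm.
fs = 30.0
signal = [
    800.0 + 5.0 * math.sin(2.0 * math.pi * 0.25 * (n / fs) + 1.0)
    for n in range(int(60 * fs))
]
rate = breaths_per_minute(signal, fs)
```

A real depth signal would need smoothing before counting crossings, since sensor noise near the mean would otherwise produce spurious crossings.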

To measure the vital data more precisely, we developed a wearable sensor system (Fig. 8) consisting of a high-precision electrocardiograph (WHS-1, Union Tool) and a UWB beacon tag (“UWB beacon” section). By wearing this sensor system, the electrocardiogram of a patient and his/her current position in the room are simultaneously measured and recorded in the database through ROS-TMS. Figure 9 shows an experiment measuring the electrocardiogram and the position with the wearable sensor system while walking. The UWB beacon tag is equipped with a nine-axis velocity/acceleration sensor, which makes it possible to detect normal and abnormal behaviors of the patient, such as sleeping or falling, as shown in Fig. 9.
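The detection method used with the tag's motion sensor is not described in the text. One common and simple approach, shown here only as an assumed sketch, flags a fall when the magnitude of the measured acceleration exceeds an impact threshold. The threshold value and the sample data are hypothetical.

```python
import math

GRAVITY = 9.81  # m/s^2
IMPACT_THRESHOLD = 2.5 * GRAVITY  # hypothetical threshold, not from the paper


def detect_fall(samples, threshold=IMPACT_THRESHOLD):
    """Return the index of the first sample whose acceleration magnitude
    exceeds the threshold, or None if no sample does."""
    for i, (ax, ay, az) in enumerate(samples):
        if math.sqrt(ax * ax + ay * ay + az * az) > threshold:
            return i
    return None


# Illustrative data: a resting tag measures only gravity; the spike
# stands in for the impact of a fall.
resting = [(0.0, 0.0, 9.81)] * 5
falling = resting + [(3.0, 25.0, 12.0)] + resting

no_fall_index = detect_fall(resting)
fall_index = detect_fall(falling)
```

A practical detector would usually combine such a threshold with orientation or position changes to reduce false alarms.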

Distributed microphones and service request by voice

As shown in Fig. 10, small PCs (Raspberry Pi3) equipped with microphones (MM-MCUSB22, Sanwa Supply) are distributed throughout the room, and care receivers can request ROS-TMS services described below by voice. In addition, we also installed a small PC with a speaker so that we can provide voice guidance.

We utilized two kinds of voice recognition engines (Julius [39] on local PCs and the Google Cloud Speech API in the cloud) to speed up processing and provide a quick response. The voice is first processed on the local PCs and, if necessary, transferred to the cloud engine for more accurate recognition. More concretely, once a user speaks, the local PCs start to recognize the sentence with Julius. If the PCs recognize the wake word “ROS-TMS”, the PC that responds fastest among all PCs replies with a buzzer, listens to the following sentence, and transfers it to the cloud engine. The cloud engine returns the speech content as a string, and morphological analysis is performed to recognize the contents of the instruction. In this system, we utilize the morphological analysis library “janome”, which runs on Python. Through the morphological analysis, the string of speech content is divided into nouns and verbs. Next, by querying TMS_DB with the obtained nouns and verbs, the corresponding information about objects and tasks is obtained. Figure 11 shows the table stored in TMS_DB.
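The two steps described above, choosing the local PC that answers the wake word and splitting the recognized sentence into nouns and verbs, can be sketched as follows. The PC names, response times, and the pre-tagged token list are hypothetical; in the actual system the recognition results come from Julius and the Google Cloud Speech API, and the part-of-speech tags come from janome.

```python
WAKE_WORD = "ROS-TMS"


def pick_responder(detections):
    """detections: list of (pc_name, recognized_text, response_time_s).
    Return the name of the fastest PC that heard the wake word, or None."""
    heard = [d for d in detections if d[1] == WAKE_WORD]
    if not heard:
        return None
    return min(heard, key=lambda d: d[2])[0]


def split_nouns_verbs(tagged_tokens):
    """tagged_tokens: list of (word, part_of_speech) pairs, standing in for
    the output of a morphological analyzer."""
    nouns = [word for word, pos in tagged_tokens if pos == "noun"]
    verbs = [word for word, pos in tagged_tokens if pos == "verb"]
    return nouns, verbs


# Hypothetical local recognition results from three distributed PCs.
detections = [
    ("pc_bed", "ROS-TMS", 0.42),
    ("pc_kitchen", "ROS-TMS", 0.31),
    ("pc_door", "", 0.20),  # did not recognize the wake word
]
responder = pick_responder(detections)

# Hand-tagged stand-in for the analysis of "SmartPal, bring a snack".
tagged = [("SmartPal", "noun"), ("bring", "verb"), ("a", "other"), ("snack", "noun")]
nouns, verbs = split_nouns_verbs(tagged)
```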

If the label of a word in the “type” column is “task”, the corresponding task is performed by filling in the required information from the other nouns and verbs. To accomplish a particular task, a service request has to include the information of the robot, the user, the objects, and the locations. By fitting the corresponding information from the extracted nouns and verbs, the desired task is completely specified and performed by the desired robot. For example, if the user tells ROS-TMS “SmartPal, bring a snack”, the task named “get_object” is activated according to the verb “bring”. Then the robot ID is set to 2003, which corresponds to “Smart Pal”, the user ID is set to the default value, 1100, the object ID is set to 7001, which is “snack”, and the location ID is set to the default value, 0. Then, the TMS_TS (Task Scheduler) module in ROS-TMS makes a motion plan of the service robot for the desired robot service, and the TMS_RP (Robot Planner) and TMS_RC (Robot Controller) modules execute the planned motion.
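The slot filling in this example can be written out as a short sketch. The IDs, the default values, and the task name are the ones quoted in the text; the dictionary layout and the function name are assumptions and do not reproduce the actual TMS_DB schema.

```python
DEFAULT_USER_ID = 1100
DEFAULT_LOCATION_ID = 0

# Hypothetical stand-in for the word table in TMS_DB (cf. Fig. 11).
WORD_TABLE = {
    "SmartPal": {"type": "robot", "id": 2003},
    "snack": {"type": "object", "id": 7001},
    "bring": {"type": "task", "name": "get_object"},
}


def build_request(words):
    """Fill the task, robot, user, object, and location slots from the
    extracted words, keeping default values for slots nobody mentioned."""
    request = {
        "task": None,
        "robot_id": None,
        "user_id": DEFAULT_USER_ID,
        "object_id": None,
        "location_id": DEFAULT_LOCATION_ID,
    }
    for word in words:
        entry = WORD_TABLE.get(word)
        if entry is None:
            continue  # word is not registered in the table
        if entry["type"] == "task":
            request["task"] = entry["name"]
        else:
            request[entry["type"] + "_id"] = entry["id"]
    return request


request = build_request(["SmartPal", "bring", "snack"])
```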

In addition, the corresponding sentence stored in the “announce” column in TMS_DB (Fig. 11) is announced from the speaker. For example, if the user tells ROS-TMS “SmartPal, bring a snack”, the “announce” column of the get_object task, that is, “$robot$ will bring $object$ to $user$”, is read out. Then $robot$ is replaced with “Smart Pal”, $user$ is replaced with the default user name “Taro”, and $object$ is replaced with “a snack” as the target object. Finally, the sentence “Smart Pal will bring a snack to Taro” is announced from the speaker as shown in Fig. 13. Open JTalk was used for speech synthesis.
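The placeholder substitution described above amounts to a simple string replacement. The sketch below uses the template and values from the text; the function name is an assumption.

```python
def render_announcement(template, values):
    """Replace each $key$ placeholder in the template with its value."""
    text = template
    for key, value in values.items():
        text = text.replace("$" + key + "$", value)
    return text


sentence = render_announcement(
    "$robot$ will bring $object$ to $user$",
    {"robot": "Smart Pal", "object": "a snack", "user": "Taro"},
)
# sentence == "Smart Pal will bring a snack to Taro"
```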

On the other hand, if the instruction is an inquiry about objects in the room or other general information, the TMS_UR (User Request) module replies with an appropriate answer by referring to the database or searching the Internet.

Service robots

Humanoid-type service robot

Figure 12a shows a humanoid-type service robot (SmartPal V, Yaskawa Electric). An omnidirectional laser scanner (Velodyne HDL-32E) and an RGB-D camera (Xtion, ASUS) are attached to the top of the robot, so that the robot can avoid collisions with the environment, humans, and objects in real time.

Communication robot

Figure 12b shows the communication robot (Double, Double Robotics), which is equipped with a tablet PC (iPad, Apple), a microphone, and an RGB-D camera (Xtion2, ASUS). This communication robot is connected to ROS-TMS 5.0 via ROSiOS on the tablet PC and transfers camera images, voice, and the heart rate and respiratory rate measured by the RGB-D camera to a remote computer.

Robot service experiments using ROS-TMS and Big Sensor Box

We carried out robot service experiments in Big Sensor Box. The aim of these experiments is to confirm that the developed software platform ROS-TMS 5.0 and the hardware platform Big Sensor Box are capable of providing some typical robot services. In this paper, we focus in particular on control experiments of service functions by voice commands, which are implemented in ROS-TMS 5.0 and Big Sensor Box as explained in the “Distributed microphones and service request by voice” section. In this section, several robot tasks, such as a fetch-and-give task by the humanoid robot and motion control of the robotic bed, are demonstrated. In addition, we introduce a task of calling a mobile communication robot by a voice command.

Voice control experiment of service functions

As explained in the “Distributed microphones and service request by voice” section, the distributed microphones acquire voice instructions from a user, and the instructions are analyzed by the voice recognition engines on the edge PCs and in the cloud, followed by morphological analysis.

Request of robot service

If the instruction is related to the robot service, the requested service is executed by several modules such as TMS_TS (Task Scheduler) in ROS-TMS as explained above. Figure 13 shows a fetch-and-give task by the humanoid robot requested by the voice command. Figure 14 shows that the robotic bed changes the angle of the backrest and the height of the bed.

Inquiry of information

If the instruction is an inquiry about information or a command that is not related to a robot service, ROS-TMS executes one of the services shown below.

- search_object: Reply with the status of the desired object by accessing the database in ROS-TMS (Fig. 15a).

- weather_forecast: Reply with the weather forecast for the next few days using the weather information service “Weather Hacks” provided by Livedoor (Fig. 15b).

- set_alarm: Set the clock alarm at the desired time and date (Fig. 15c). If the wearable sensor system (Fig. 8) senses the waking-up motion of the user, the alarm stops.

- control_light: Control the light in the room (turning it off and on, Fig. 15d).

- Knowledge Q&A: If the instruction or question is not covered by the services above, the sentence is passed to the Knowledge Q&A service on the web provided by NTT DOCOMO in Japan. The Knowledge Q&A service replies with search results from its database or from the Internet. Thus, even if the requested information is not found in the database in ROS-TMS, the user can obtain proper answers by voice (Fig. 15e).
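The selection among these services, with Knowledge Q&A as the fallback, can be sketched as a small dispatcher. The trigger keywords and the stub handlers are hypothetical; the actual system selects the service through the words registered in TMS_DB rather than through substring matching.

```python
def make_stub(service_name):
    """Build a stub handler that only reports which service was chosen."""
    def handler(text):
        return (service_name, text)
    return handler


# Hypothetical trigger keywords mapped to the services listed above.
HANDLERS = {
    "where": make_stub("search_object"),
    "weather": make_stub("weather_forecast"),
    "alarm": make_stub("set_alarm"),
    "light": make_stub("control_light"),
}
FALLBACK = make_stub("knowledge_qa")


def dispatch(text):
    """Route the recognized sentence to the first matching service, or to
    the Knowledge Q&A fallback if no local service matches."""
    lowered = text.lower()
    for keyword, handler in HANDLERS.items():
        if keyword in lowered:
            return handler(text)
    return FALLBACK(text)


local_result = dispatch("Where is the snack?")
fallback_result = dispatch("How tall is Mt. Fuji?")
```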

We measured the success rate and the response time of the voice control. Table 2 shows the success rate of the voice control over ten trials for each of five subjects. The success rate is over 90% except for subject E, which shows that the performance strongly depends on the user. Table 3 shows the number of failures in the recognition of the wake word (Julius) and of the following command (Google Cloud Speech API). Most failures occurred in the recognition of the wake word.

Table 4 shows the average response time for each command, measured after the whole utterance (the wake word and the following command) has been spoken. We tested four kinds of commands: search_object, weather_forecast, Knowledge Q&A when the answer is found in the NTT DOCOMO database, and Knowledge Q&A when the answer is not found in the database and is searched for on the Internet. The response time of the proposed system is 3.86 to 6.25 s. According to the study [40], the response time of commercial AI services is 0.21–2.06 s. The proposed system takes more time than these commercial systems, and thus its performance needs to be further improved.

Voice control of communication robot

We developed a communication robot (Fig. 16) and a voice control system for it. The communication robot (Double, Double Robotics) is equipped with optical markers, an RGB-D camera (Xtion 2, ASUS), and a single-board PC (Intel Joule). Once the user calls the robot by voice, the robot automatically moves in front of the user and starts remote communication with other users. As explained in the “Communication robot” section, this communication robot is connected to ROS-TMS 5.0 via ROSiOS, transfers camera images, voice, and the heart rate and respiratory rate measured by the RGB-D camera to a remote user, and stores them in the TMS_DB module in ROS-TMS. Figure 17 shows the motion of the communication robot when the user calls it. The position and direction of the user are detected by the UWB beacon tag (“UWB beacon” section), and the robot automatically approaches the user from the front along the trajectory planned by the TMS_RP module in ROS-TMS [14]. Facial images, voice, and vital data measured by the communication robot are transferred and displayed on the remote PC as shown in Fig. 18. Figure 19 shows the heartbeat rate and the respiratory rate transferred via a ROS topic.
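Approaching the user from the front requires a goal pose derived from the user's position and facing direction. The sketch below shows one straightforward way to compute such a pose; the stand-off distance of 1.0 m and the function name are assumptions, and the trajectory planning performed by TMS_RP is not reproduced here.

```python
import math


def approach_goal(user_x, user_y, user_yaw, distance=1.0):
    """Return (x, y, yaw) of a goal pose `distance` metres in front of the
    user, with the robot turned to face the user."""
    goal_x = user_x + distance * math.cos(user_yaw)
    goal_y = user_y + distance * math.sin(user_yaw)
    # Face back towards the user, normalized to (-pi, pi].
    facing = user_yaw + math.pi
    goal_yaw = math.atan2(math.sin(facing), math.cos(facing))
    return goal_x, goal_y, goal_yaw


# User at (2, 3) facing along the +x axis: the goal lies 1 m ahead of the
# user and the robot faces the -x direction.
goal = approach_goal(2.0, 3.0, 0.0, distance=1.0)
```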

Conclusions

In this paper, we introduced the software platform ROS-TMS 5.0 for an informationally structured environment (ISE). In addition, we introduced the hardware platform Big Sensor Box for the ISE. Moreover, the hierarchical structure of ROS-TMS and the execution procedure of service tasks in Big Sensor Box were explained with some examples. Some service tasks such as fetch-and-give task by a humanoid robot and voice control of a communication robot and a robotic bed, which are activities occurring frequently in daily life, were demonstrated by using ROS-TMS 5.0 and Big Sensor Box. One of the main contributions of this study is that we built a house-sized IoT environment based on ROS and showed the feasibility of ROS-based sensor/robot control architectures for realizing a CPS.

The aim of this project is to develop a home-scale IoT-based informationally structured environment for service robots based on the robot middleware ROS, and to release it as open source software [16, 41]. Therefore, those who wish to use or refer to our code can download and install it freely. For example, if readers want to know how we implemented the connection to the Google cloud engine or how we allocate voice recognition tasks to edge PCs and a cloud server, they can download all of the code from the above page, read it, and test its performance in their own environment. To the best of our knowledge, this is the first attempt to develop a home-scale IoT environment based on ROS and to share all of the source code. We therefore think this project will be beneficial for researchers in our community who are developing IoT environments using robot technology and ROS.

As explained above, the aim of this research is to develop an IoT-based informationally structured environment. In particular, the voice control of IoT devices including service robots will play an important role in the future IoT society. In addition, a watching service for a care receiver is one of the key applications of IoT devices in a super-aging society. Thus, this paper focused on the development of a voice control system in a care room using open source software platforms. To evaluate the performance of the developed system, we conducted experiments on typical voice control tasks. We strongly believe that developing such systems by integrating open source software is itself a challenging and valuable contribution of this research.

We are now preparing to apply the proposed ROS-TMS and Big Sensor Box, especially the monitoring system of a patient and the communication robot, to elderly care homes and to confirm the practicality of the proposed platforms.

References

Sato T, Nishida Y, Mizoguchi H (1996) Robotic room: symbiosis with human through behavior media. Robot Auton Syst 18(1–2):185–194. https://doi.org/10.1016/0921-8890(96)00004-8

Lee J-H, Ando N, Hashimoto H (1999) Design policy of intelligent space. In: Proceedings of the 1999 IEEE International Conference on Systems, Man, and Cybernetics, vol 3. pp 1077–1082. https://doi.org/10.1109/ICSMC.1999.823378

Pentland AP (1996) Smart rooms. Sci Am 274(4):54–62

Brooks RA (1997) The intelligent room project. In: Proceedings of the 2nd international conference on cognitive technology (CT ’97). IEEE Computer Society, Washington, pp 271–278. http://dl.acm.org/citation.cfm?id=794204.795308

Kientz JA, Patel SN, Jones B, Price E, Mynatt ED, Abowd GD (2008) The georgia tech aware home. In: CHI ’08 Extended Abstracts on Human Factors in Computing Systems. CHI EA ’08. ACM, New York, pp 3675–3680. https://doi.org/10.1145/1358628.1358911

Sugano S, Shirai Y (2006) Robot design and environment design—waseda robot-house project. Proc Int Joint Conf SICE-ICASE 2006:31–34. https://doi.org/10.1109/SICE.2006.314981

Noguchi H, Mori T, Sato T (2006) Automatic generation and connection of program components based on rdf sensor description in network middleware. In: Proceedings of IEEE/RSJ international conference on intelligent robots and systems. pp 2008–2014

Park K-H, Bien Z, Lee J-J, Kim BK, Lim J-T, Kim J-O, Lee H, Stefanov DH, Kim D-J, Jung J-W et al (2007) Robotic smart house to assist people with movement disabilities. Auton Robots 22(2):183–198

Kato Y, Izui T, Tsuchiya Y, Narita M, Ueki M, Murakawa Y, Okabayashi K (2011) Rsi-cloud for integrating robot services with internet services. In: Proceedings of IECON 2011-37th Annual Conference on IEEE Industrial Electronics Society. pp 2158–2163

Gross H, Schroeter C, Mueller S, Volkhardt M, Einhorn E, Bley A, Martin C, Langner T, Merten M (2011) I’ll keep an eye on you: Home robot companion for elderly people with cognitive impairment. In: Proceedings of IEEE International Conference on Systems, Man, and Cybernetics. pp 2481–2488

Tenorth M, Perzylo AC, Lafrenz R, Beetz M (2012) The roboearth language: Representing and exchanging knowledge about actions, objects, and environments. In: Proceedings of IEEE international conference on robotics and automation. pp 1284–1289

Hasegawa T, Murakami K, Kurazume R, Senta Y, Kimuro Y, Ienaga T (2007) Robot town project: Sensory data management and interaction with robot of intelligent environment for daily life. In: 2007 International conference on ubiquitous robots and ambient intelligence (URAI07). pp 369–373

Pyo Y, Nakashima K, Kuwahata S, Kurazume R, Tsuji T, Morooka K, Hasegawa T (2015) Service robot system with an informationally structured environment. Robot Auton Syst 74(Part A):148–165. https://doi.org/10.1016/j.robot.2015.07.010

Kurazume R, Pyo Y, Nakashima K, Tsuji T, Kawamura A (2017) Feasibility study of iort platform “big sensor box”. In: Proc. IEEE international conference on robotics and automation. pp 3664–3671

http://www.ros.org/. Accessed 19 Sept 2018

http://irvs.github.io/ros_tms/. Accessed 19 Sept 2018

Aarts E, Wichert R (2009) Ambient intelligence. Technology guide. Springer, Berlin

http://www.companionable.net/. Accessed 19 Sept 2018

Chan M, Esteve D, Escriba C, Campo E (2008) A review of smart homes-present state and future challenges. Comput Methods Prog Biomed 91(1):55–81. https://doi.org/10.1016/j.cmpb.2008.02.001

Stefanov DH, Bien Z, Bang W-C (2004) The smart house for older persons and persons with physical disabilities: structure, technology arrangements, and perspectives. IEEE Trans Neural Syst Rehabil Eng 12(2):228–250. https://doi.org/10.1109/TNSRE.2004.828423

Ashton K (2009) That ’internet of things’ thing. RFiD J 22(7):97–114

Simmons RG (1994) Structured control for autonomous robots. IEEE Trans Robot Autom 10(1):34–43

Simmons R, Goodwin R, Haigh KZ, Koenig S, O’Sullivan J (1997) A layered architecture for office delivery robots. In: Proceedings of the first international conference on autonomous agents. ACM, New York, pp 245–252

Simmons RG, Goodwin R, Haigh KZ, Koenig S, O’Sullivan J, Veloso MM (1997) Xavier: experience with a layered robot architecture. ACM Sigart Bull 8(1–4):22–33

Alami R, Chatila R, Fleury S, Ghallab M, Ingrand F (1998) An architecture for autonomy. Int J Robot Res 17(4):315–337

Alami R, Fleury S, Herrb M, Ingrand F, Robert F (1998) Multi-robot cooperation in the martha project. IEEE Robot Autom Magaz 5(1):36–47

Fong T, Kunz C, Hiatt LM, Bugajska M (2006) The human-robot interaction operating system. In: Proceedings of the 1st ACM SIGCHI/SIGART conference on human–robot interaction. ACM, New York, pp 41–48

Kim J-H, Jeong I-B, Park I-W, Lee K-H (2009) Multi-layer architecture of ubiquitous robot system for integrated services. Int J Soc Robot 1(1):19–28

Wu C-L, Liao C-F, Fu L-C (2007) Service-oriented smart-home architecture based on osgi and mobile-agent technology. IEEE Trans Syst Man Cybern C 37(2):193–205. https://doi.org/10.1109/TSMCC.2006.886997

Das SK, Cook DJ, Battacharya A, Heierman IEO, Lin T-Y (2002) The role of prediction algorithms in the mavhome smart home architecture. IEEE Wireless Commun 9(6):77–84. https://doi.org/10.1109/MWC.2002.1160085

Kim J-H, Jeong I-B, Park I-W, Lee K-H (2009) Multi-layer architecture of ubiquitous robot system for integrated services. Int J Soc Robot 1(1):19–28. https://doi.org/10.1007/s12369-008-0005-z

Kim JH, Lee KH, Kim YD, Kuppuswamy NS, Jo J (2007) Ubiquitous robot: A new paradigm for integrated services. In: Proceedings 2007 IEEE international conference on robotics and automation, pp 2853–2858. https://doi.org/10.1109/ROBOT.2007.363904

Janssen R, van de Molengraft R, Bruyninckx H, Steinbuch M (2016) Cloud based centralized task control for human domain multi-robot operations. Intell Serv Robot 9(1):63–77. https://doi.org/10.1007/s11370-015-0185-y

Johnson M, Guglielmelli E, Di Lauro G, Laschi C, Carrozza M, Dario P (2004) 6 giving-a-hand system: The development of a task-specific robot appliance. Advances in Rehabilitation Robotics. Springer, Berlin, pp 127–141

Arumugam R, Enti VR, Bingbing L, Xiaojun W, Baskaran K, Kong FF, Kumar AS, Meng KD, Kit GW (2010) Davinci: a cloud computing framework for service robots. In: 2010 IEEE international conference on robotics and automation, pp 3084–3089. https://doi.org/10.1109/ROBOT.2010.5509469

Mohanarajah G, Hunziker D, D’Andrea R, Waibel M (2015) Rapyuta: a cloud robotics platform. IEEE Trans Autom Sci Eng 12(2):481–493. https://doi.org/10.1109/TASE.2014.2329556

Fulvio M, Antonio S, Renato Z (2010) autonomous robots to artificial ecosystems. In: Handbook of ambient intelligence and smart environments. pp 635–668

NSF-07-504 https://www.nsf.gov/pubs/2007/nsf07504/nsf07504.htm. Accessed 19 Sept 2018

http://julius.osdn.jp. Accessed 19 Sept 2018

https://www.slideshare.net/KonstantinSavenkov/nlu-intent-detection-benchmark-by-intento-august-2017. Accessed 19 Sept 2018

https://github.com/irvs/ros_tms/wiki. Accessed 19 Sept 2018

Authors' contributions

RK constructed the study concept and drafted the manuscript. YP and AK managed the study. JS, KK, and KM developed the system and carried out the experiments. All members verified the content of their contributions. All authors read and approved the final manuscript.

Acknowledgements

This research is supported by The Japan Science and Technology Agency (JST) through its “Center of Innovation Science and Technology based Radical Innovation and Entrepreneurship Program (COI Program).”

Competing interests

The authors declare that they have no competing interests.

Availability of data and materials

Not applicable.

Funding

This research is partially supported by the Center of Innovation Program from Japan Science and Technology Agency, JST and JST CREST Grant Number JPMJCR17A5, Japan.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sakamoto, J., Kiyoyama, K., Matsumoto, K. et al. Development of ROS-TMS 5.0 for informationally structured environment. Robomech J 5, 24 (2018). https://doi.org/10.1186/s40648-018-0123-9

DOI: https://doi.org/10.1186/s40648-018-0123-9