Abstract

We establish generalized viscosity implicit rules for nonexpansive mappings in Hilbert spaces and prove strong convergence theorems for these rules under certain assumptions on the sequences of parameters. The results extend and improve the main results of Refs. (Moudafi in J. Math. Anal. Appl. 241:46-55, 2000; Xu et al. in Fixed Point Theory Appl. 2015:41, 2015). Moreover, applications to a more general system of variational inequalities, the constrained convex minimization problem, and the K-mapping are included.


1 Introduction

In this paper, we assume that H is a real Hilbert space with inner product \(\langle\cdot,\cdot\rangle\) and induced norm \(\|\cdot\|\), and C is a nonempty closed convex subset of H. Let \(T: H\rightarrow H\) be a mapping and let \(F(T)=\{x\in H:Tx=x\}\) denote its set of fixed points. A mapping \(T: H\rightarrow H\) is called nonexpansive if

\[\|Tx-Ty\|\leqslant\|x-y\|\]

for all \(x, y\in H\). A mapping \(f: H\rightarrow H\) is called a contraction if

\[\|f(x)-f(y)\|\leqslant\theta\|x-y\|\]

for all \(x, y\in H\) and some \(\theta\in[0,1)\).

In 2000, Moudafi [1] proved the following strong convergence theorem for nonexpansive mappings in real Hilbert spaces.

Theorem 1.1 [1]

Let C be a nonempty closed convex subset of the real Hilbert space H. Let T be a nonexpansive mapping of C into itself such that \(F(T)\) is nonempty. Let f be a contraction of C into itself with coefficient \(\theta\in[0,1)\). Pick any \(x_{0} \in C\) and let \(\{x_{n}\}\) be the sequence generated by

\[x_{n+1}=\frac{\varepsilon_{n}}{1+\varepsilon_{n}}f(x_{n})+\frac{1}{1+\varepsilon_{n}}Tx_{n},\quad n\geqslant0,\]

where \(\{\varepsilon_{n}\}\subset(0,1)\) satisfies

(1) \(\lim_{n\rightarrow\infty}\varepsilon_{n}=0\);

(2) \(\sum_{n=0}^{\infty}\varepsilon_{n}=\infty\);

(3) \(\lim_{n\rightarrow\infty} |\frac{1}{\varepsilon_{n+1}}-\frac{1}{\varepsilon_{n}} |=0\).

Then \(\{x_{n}\}\) converges strongly to a fixed point \(x^{*}\) of the nonexpansive mapping T, which is also the unique solution of the variational inequality (VI)

\[\langle(I-f)x^{*},x-x^{*}\rangle\geqslant0,\quad x\in F(T). \tag{1.1}\]

In other words, \(x^{*}\) is the unique fixed point of the contraction \(P_{F(T)}f\), that is, \(P_{F(T)}f(x^{*})=x^{*}\).
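As a concrete illustration of Theorem 1.1, the following minimal Python sketch runs Moudafi's scheme on the toy space \(H=C=\mathbb{R}\), assuming the explicit form \(x_{n+1}=\frac{\varepsilon_{n}}{1+\varepsilon_{n}}f(x_{n})+\frac{1}{1+\varepsilon_{n}}Tx_{n}\). The map T (projection onto \([1,2]\), so \(F(T)=[1,2]\)), the contraction \(f(x)=x/2\) and the choice \(\varepsilon_{n}=1/\sqrt{n+2}\) are hypothetical. Since \(P_{[1,2]}(x/2)=1\) for every \(x\in[1,2]\), the predicted limit is \(x^{*}=1\). Note that the more obvious choice \(\varepsilon_{n}=1/(n+1)\) would violate condition (3), because then \(|1/\varepsilon_{n+1}-1/\varepsilon_{n}|=1\) for all n.

```python
import math


def T(x: float) -> float:
    """Nonexpansive map on R: metric projection onto [1, 2], so F(T) = [1, 2]."""
    return min(max(x, 1.0), 2.0)


def f(x: float) -> float:
    """Contraction on R with coefficient theta = 1/2."""
    return 0.5 * x


def moudafi(x0: float, n_iter: int) -> float:
    """Run x_{n+1} = eps_n/(1+eps_n) f(x_n) + 1/(1+eps_n) T x_n."""
    x = x0
    for n in range(n_iter):
        # eps_n in (0,1), eps_n -> 0, sum eps_n = inf, |1/eps_{n+1} - 1/eps_n| -> 0
        eps = 1.0 / math.sqrt(n + 2)
        x = (eps * f(x) + T(x)) / (1.0 + eps)
    return x


x = moudafi(5.0, 20000)
print(x)  # approaches x* = 1, the unique fixed point of P_{F(T)} f
```

A short calculation shows that, once \(x_{n}<1\), the iterates stay close to \(1/(1+\varepsilon_{n}/2)\), so in this example the viscosity term makes the error shrink only at the rate at which \(\varepsilon_{n}\) vanishes.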

Such a method for approximating fixed points is called the viscosity approximation method. In 2015, Xu et al. [2] applied the viscosity technique to the implicit midpoint rule for nonexpansive mappings and proposed the following viscosity implicit midpoint rule (VIMR):

\[x_{n+1}=\alpha_{n}f(x_{n})+(1-\alpha_{n})T\Bigl(\frac{x_{n}+x_{n+1}}{2}\Bigr),\quad n\geqslant0.\]

The idea was to use contractions to regularize the implicit midpoint rule for nonexpansive mappings. They also proved that VIMR converges strongly to a fixed point of T, which also solves VI (1.1).

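In this paper, motivated and inspired by Xu et al. [2], we propose the following generalized viscosity implicit rules:

\[x_{n+1}=\alpha_{n}f(x_{n})+(1-\alpha_{n})T(s_{n}x_{n}+(1-s_{n})x_{n+1}) \tag{1.2}\]

and

\[x_{n+1}=\alpha_{n}x_{n}+\beta_{n}f(x_{n})+\gamma_{n}T(s_{n}x_{n}+(1-s_{n})x_{n+1}) \tag{1.3}\]

for \(n\geqslant0\). In particular, rule (1.2) with \(s_{n}\equiv\frac{1}{2}\) is exactly VIMR. We will prove that the generalized viscosity implicit rules (1.2) and (1.3) converge strongly to a fixed point of T under certain assumptions imposed on the sequences of parameters, and that this fixed point is also the unique solution of VI (1.1).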
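Rules (1.2) and (1.3) are implicit: \(x_{n+1}\) appears on both sides. For (1.2), assumed here to read \(x_{n+1}=\alpha_{n}f(x_{n})+(1-\alpha_{n})T(s_{n}x_{n}+(1-s_{n})x_{n+1})\), the right-hand side is, as a function of \(x_{n+1}\), a contraction with constant \((1-\alpha_{n})(1-s_{n})<1\) whenever T is nonexpansive, so \(x_{n+1}\) is well defined and can be computed by Picard iteration. The sketch below performs one such step in \(\mathbb{R}^{2}\); the rotation T, the contraction f and the tolerance are hypothetical choices, and \(s=\frac{1}{2}\) gives a VIMR step.

```python
from typing import Callable, Tuple

Vec = Tuple[float, float]


def lin(a: float, u: Vec, b: float, v: Vec) -> Vec:
    """Return the linear combination a*u + b*v in R^2."""
    return (a * u[0] + b * v[0], a * u[1] + b * v[1])


def implicit_step(x_n: Vec, alpha: float, s: float,
                  T: Callable[[Vec], Vec], f: Callable[[Vec], Vec],
                  tol: float = 1e-13, max_inner: int = 1000) -> Vec:
    """Solve y = alpha*f(x_n) + (1-alpha)*T(s*x_n + (1-s)*y) for y by Picard iteration.

    The map y -> right-hand side has Lipschitz constant (1-alpha)*(1-s) < 1,
    so the iteration converges to the unique solution x_{n+1}.
    """
    fx = f(x_n)
    y = x_n  # initial guess for x_{n+1}
    for _ in range(max_inner):
        y_new = lin(alpha, fx, 1.0 - alpha, T(lin(s, x_n, 1.0 - s, y)))
        if max(abs(y_new[0] - y[0]), abs(y_new[1] - y[1])) < tol:
            return y_new
        y = y_new
    raise RuntimeError("inner Picard iteration did not converge")


def rotate90(v: Vec) -> Vec:
    """Rotation by 90 degrees: a linear isometry (hence nonexpansive) with F(T) = {0}."""
    return (-v[1], v[0])


def halve(v: Vec) -> Vec:
    """Contraction with coefficient 1/2."""
    return (0.5 * v[0], 0.5 * v[1])


x_n = (1.0, 0.0)
x_next = implicit_step(x_n, alpha=0.5, s=0.5, T=rotate90, f=halve)  # s = 1/2: a VIMR step
rhs = lin(0.5, halve(x_n), 0.5, rotate90(lin(0.5, x_n, 0.5, x_next)))
residual = max(abs(x_next[0] - rhs[0]), abs(x_next[1] - rhs[1]))
print(x_next, residual)  # residual of the implicit equation is at rounding level
```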

The organization of this paper is as follows. In Section 2, we recall the notion of the metric projection, the demiclosedness principle of nonexpansive mappings and a convergence lemma. In Section 3, the strong convergence theorems of the generalized viscosity implicit rules (1.2) and (1.3) are proved under some conditions, respectively. Applications to a more general system of variational inequalities, the constrained convex minimization problem, and the K-mapping are presented in Section 4.

2 Preliminaries

Firstly, we recall the notion and some properties of the metric projection.

Definition 2.1

For every point \(x\in H\), there exists a unique nearest point in C, denoted by \(P_{C}x\), such that

\[\|x-P_{C}x\|\leqslant\|x-y\|,\quad\forall y\in C.\]

The mapping \(P_{C}: H \rightarrow C\) is called the metric projection of H onto C.

Lemma 2.1

Let C be a nonempty closed convex subset of the real Hilbert space H and \(P_{C}: H \rightarrow C\) be a metric projection. Then

(1) \(\| P_{C}x-P_{C}y\|^{2} \leqslant\langle x-y,P_{C}x-P_{C}y\rangle\), \(\forall x, y\in H\);

(2) \(P_{C}\) is a nonexpansive mapping, i.e., \(\| P_{C}x-P_{C}y\|\leqslant\| x-y\|\), \(\forall x, y\in H\);

(3) \(\langle x-P_{C}x,y-P_{C}x\rangle\leqslant0\), \(\forall x\in H, y\in C \).
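The three properties in Lemma 2.1 are easy to check numerically when \(P_{C}\) has a closed form. The sketch below takes C to be the closed unit ball of \(\mathbb{R}^{2}\) (a hypothetical choice), for which \(P_{C}x=x/\max(1,\|x\|)\), and records the largest violation of (1)-(3) over random samples; in (3) the test point \(y\in C\) is taken to be \(P_{C}\) of a random point.

```python
import math
import random
from typing import Tuple

Vec = Tuple[float, float]


def project_ball(x: Vec, r: float = 1.0) -> Vec:
    """Metric projection of x onto the closed ball of radius r centred at the origin."""
    norm = math.hypot(x[0], x[1])
    return x if norm <= r else (r * x[0] / norm, r * x[1] / norm)


def dot(u: Vec, v: Vec) -> float:
    return u[0] * v[0] + u[1] * v[1]


def sub(u: Vec, v: Vec) -> Vec:
    return (u[0] - v[0], u[1] - v[1])


rng = random.Random(0)
worst_1 = worst_2 = worst_3 = -math.inf
for _ in range(10_000):
    x = (rng.uniform(-3, 3), rng.uniform(-3, 3))
    y = (rng.uniform(-3, 3), rng.uniform(-3, 3))
    px, py = project_ball(x), project_ball(y)
    d = sub(px, py)
    # (1) ||P_C x - P_C y||^2 - <x - y, P_C x - P_C y> should be <= 0
    worst_1 = max(worst_1, dot(d, d) - dot(sub(x, y), d))
    # (2) ||P_C x - P_C y|| - ||x - y|| should be <= 0
    worst_2 = max(worst_2, math.dist(px, py) - math.dist(x, y))
    # (3) <x - P_C x, c - P_C x> should be <= 0 for c in C; here c = P_C y
    worst_3 = max(worst_3, dot(sub(x, px), sub(py, px)))
print(worst_1, worst_2, worst_3)
```

All three recorded quantities should be nonpositive up to rounding error.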

In order to prove our results, we need the demiclosedness principle of nonexpansive mappings, which is used to show that weak cluster points of an iterative sequence are fixed points of a nonexpansive mapping.

Lemma 2.2 (The demiclosedness principle)

Let C be a nonempty closed convex subset of the real Hilbert space H and \(T : C \rightarrow C\) be a nonexpansive mapping with \(F(T)\neq\emptyset\). If \(\{x_{n}\}\) is a sequence in C such that

\[x_{n}\rightharpoonup x\quad\text{and}\quad(I-T)x_{n}\rightarrow0,\]

then \(x=Tx\). Here → (resp. ⇀) denotes strong (resp. weak) convergence.

In addition, we also need the following convergence lemma.

Lemma 2.3 [2]

Assume that \(\{a_{n}\}\) is a sequence of nonnegative real numbers such that

\[a_{n+1}\leqslant(1-\gamma_{n})a_{n}+\delta_{n},\quad n\geqslant0,\]

where \(\{\gamma_{n}\}\) is a sequence in \((0,1)\) and \(\{\delta_{n}\}\) is a sequence such that:

(1) \(\sum_{n=0}^{\infty}\gamma_{n}=\infty\);

(2) \(\limsup_{n\rightarrow\infty}\frac{\delta_{n}}{\gamma_{n}}\leqslant0\) or \(\sum_{n=0}^{\infty}|\delta_{n}|<\infty\).

Then \(\lim_{n\rightarrow\infty}a_{n}=0\).
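A quick numerical illustration of Lemma 2.3 (not part of any proof): the minimal Python sketch below iterates the recursion with equality, which is the worst case the lemma allows. The sequences \(\gamma_{n}=1/(n+2)\) and \(\delta_{n}=\gamma_{n}/\sqrt{n+1}\) are hypothetical choices satisfying (1) and the first alternative of (2). The second run drops condition (1) by taking the summable \(\gamma_{n}=1/(n+2)^{2}\) with \(\delta_{n}=0\); since \(\prod_{m\geqslant2}(1-1/m^{2})=\frac{1}{2}\), \(a_{n}\) then stays near \(\frac{1}{2}\) instead of tending to 0.

```python
import math


def run_recursion(gamma, delta, a0: float, n_steps: int) -> float:
    """Iterate a_{n+1} = (1 - gamma(n)) * a_n + delta(n), the worst case of Lemma 2.3."""
    a = a0
    for n in range(n_steps):
        a = (1.0 - gamma(n)) * a + delta(n)
    return a


N = 200_000
# Conditions hold: sum gamma_n = inf and delta_n / gamma_n = 1/sqrt(n+1) -> 0.
a_good = run_recursion(lambda n: 1.0 / (n + 2),
                       lambda n: 1.0 / ((n + 2) * math.sqrt(n + 1)), 1.0, N)
# Condition (1) fails: sum gamma_n < inf, so a_n does not go to 0.
a_bad = run_recursion(lambda n: 1.0 / (n + 2) ** 2, lambda n: 0.0, 1.0, N)
print(a_good, a_bad)
```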

3 Main results

Theorem 3.1

Let C be a nonempty closed convex subset of the real Hilbert space H. Let \(T: C \rightarrow C\) be a nonexpansive mapping with \(F(T)\neq \emptyset\) and \(f: C \rightarrow C\) be a contraction with coefficient \(\theta\in[0,1)\). Pick any \(x_{0} \in C\) and let \(\{x_{n}\}\) be the sequence generated by

\[x_{n+1}=\alpha_{n}f(x_{n})+(1-\alpha_{n})T(s_{n}x_{n}+(1-s_{n})x_{n+1}),\quad n\geqslant0, \tag{3.1}\]

where \(\{\alpha_{n}\}, \{s_{n}\} \subset(0,1)\) satisfy the following conditions:

(1) \(\lim_{n\rightarrow\infty}\alpha_{n}=0\);

(2) \(\sum_{n=0}^{\infty}\alpha_{n}=\infty\);

(3) \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\);

(4) \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\).

Then \(\{x_{n}\}\) converges strongly to a fixed point \(x^{*}\) of the nonexpansive mapping T, which is also the unique solution of the variational inequality

\[\langle(I-f)x^{*},x-x^{*}\rangle\geqslant0,\quad x\in F(T).\]

In other words, \(x^{*}\) is the unique fixed point of the contraction \(P_{F(T)}f\), that is, \(P_{F(T)}f(x^{*})=x^{*}\).
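The following minimal sketch runs rule (3.1), assumed to read \(x_{n+1}=\alpha_{n}f(x_{n})+(1-\alpha_{n})T(s_{n}x_{n}+(1-s_{n})x_{n+1})\), end to end in \(\mathbb{R}^{2}\) with \(C=H\), solving each implicit step by Picard iteration. Everything specific is a hypothetical choice for illustration: \(T=2P_{B}-I\) is the reflection through the closed unit ball B (nonexpansive, with \(F(T)=B\)), \(f(x)=x/2+(2,0)\) is a contraction with \(\theta=\frac{1}{2}\), \(\alpha_{n}=1/(n+2)\) satisfies conditions (1)-(3), and \(s_{n}=\frac{3}{4}-\frac{1}{2(n+2)}\) is nondecreasing in \([\frac{1}{2},\frac{3}{4})\), so (4) holds with \(\varepsilon=\frac{1}{2}\). For these data \(x^{*}=(1,0)\), since \(f(x^{*})=(\frac{5}{2},0)\) and \(P_{B}\) maps it back to \((1,0)\).

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def lin(a: float, u: Vec, b: float, v: Vec) -> Vec:
    """Return a*u + b*v in R^2."""
    return (a * u[0] + b * v[0], a * u[1] + b * v[1])


def project_unit_ball(x: Vec) -> Vec:
    """Metric projection onto the closed unit ball B of R^2."""
    r = math.hypot(x[0], x[1])
    return x if r <= 1.0 else (x[0] / r, x[1] / r)


def T(x: Vec) -> Vec:
    """Reflection 2*P_B - I: nonexpansive, with F(T) = B."""
    return lin(2.0, project_unit_ball(x), -1.0, x)


def f(x: Vec) -> Vec:
    """Contraction with coefficient 1/2."""
    return (0.5 * x[0] + 2.0, 0.5 * x[1])


def implicit_step(x_n: Vec, alpha: float, s: float, tol: float = 1e-13) -> Vec:
    """Solve x_{n+1} = alpha*f(x_n) + (1-alpha)*T(s*x_n + (1-s)*x_{n+1}) by Picard iteration."""
    fx, y = f(x_n), x_n
    for _ in range(1000):
        y_new = lin(alpha, fx, 1.0 - alpha, T(lin(s, x_n, 1.0 - s, y)))
        if math.dist(y_new, y) < tol:
            return y_new
        y = y_new
    raise RuntimeError("inner Picard iteration did not converge")


x = (0.0, 0.5)
for n in range(5000):
    alpha = 1.0 / (n + 2)       # conditions (1)-(3)
    s = 0.75 - 0.5 / (n + 2)    # condition (4): 0.5 <= s_n <= s_{n+1} < 1
    x = implicit_step(x, alpha, s)

x_star = (1.0, 0.0)  # unique fixed point of P_B f
print(x, math.dist(x, x_star))
```

The theorem guarantees convergence but no rate; with \(\alpha_{n}=1/(n+2)\) the approach to \(x^{*}\) is slow, which is why the iteration count is fairly large.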

Proof

We divide the proof into five steps.

Step 1. Firstly, we show that \(\{x_{n}\}\) is bounded.

Indeed, taking \(p\in F(T)\) arbitrarily, we have

It follows that

Since \(\alpha_{n},s_{n}\in(0,1)\), \(1-(1-\alpha_{n})(1-s_{n})>0\). Moreover, by (3.2), we get

Thus, we have

By induction, we obtain

Hence \(\{x_{n}\}\) is bounded. Consequently, the sequences \(\{f(x_{n})\}\) and \(\{T(s_{n}x_{n}+(1-s_{n})x_{n+1})\}\) are also bounded.

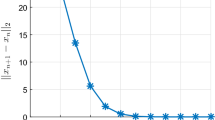

Step 2. Next, we prove that \(\lim_{n\rightarrow\infty} \| x_{n+1}-x_{n}\|=0\).

To see this, we apply (3.1) to get

where \(M_{1}>0\) is a constant such that

It turns out that

that is,

Since \(0<\varepsilon\leqslant s_{n-1}\leqslant s_{n}<1\), we have

and

Thus,

Since \(\sum_{n=0}^{\infty}\alpha_{n}=\infty\) and \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\), by Lemma 2.3, we can get \(\| x_{n+1}-x_{n}\|\rightarrow0\) as \(n\rightarrow\infty\).

Step 3. Now, we prove that \(\lim_{n\rightarrow\infty} \|x_{n}-Tx_{n}\|=0\).

In fact, we can see that

Then, by \(\lim_{n\rightarrow\infty}\|x_{n+1}-x_{n}\|=0\) and \(\lim_{n\rightarrow\infty}\alpha_{n}=0\), we get \(\|x_{n}-Tx_{n}\|\rightarrow0\) as \(n\rightarrow\infty\). Moreover, we have

Step 4. In this step, we claim that \(\limsup_{n\rightarrow\infty }\langle x^{*}-f(x^{*}), x^{*}-x_{n}\rangle\leqslant0\), where \(x^{*}=P_{F(T)}f(x^{*})\).

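Indeed, take a subsequence \(\{x_{n_{i}}\}\) of \(\{x_{n}\}\) such that

\[\limsup_{n\rightarrow\infty}\langle x^{*}-f(x^{*}),x^{*}-x_{n}\rangle=\lim_{i\rightarrow\infty}\langle x^{*}-f(x^{*}),x^{*}-x_{n_{i}}\rangle.\]

Since \(\{x_{n_{i}}\}\) is bounded, it has a subsequence converging weakly to some point p. Without loss of generality, we may assume that \(x_{n_{i}}\rightharpoonup p\). From \(\lim_{n\rightarrow\infty}\|x_{n}-Tx_{n}\|=0\) and Lemma 2.2 we have \(p=Tp\), that is, \(p\in F(T)\). This, together with Lemma 2.1(3) applied to \(x^{*}=P_{F(T)}f(x^{*})\), implies that

\[\limsup_{n\rightarrow\infty}\langle x^{*}-f(x^{*}),x^{*}-x_{n}\rangle=\langle x^{*}-f(x^{*}),x^{*}-p\rangle\leqslant0.\]
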
Step 5. Finally, we show that \(x_{n}\rightarrow x^{*}\) as \(n\rightarrow\infty\). Here again \(x^{*}\in F(T)\) is the unique fixed point of the contraction \(P_{F(T)}f\) or in other words, \(x^{*}=P_{F(T)}f(x^{*})\).

In fact, we have

where

It turns out that

Solving this quadratic inequality for \(\|s_{n} x_{n}+(1-s_{n})x_{n+1}-x^{*}\|\) yields

This implies that

namely,

Then

which is reduced to the inequality

that is,

It follows that

Let

Since the sequence \(\{s_{n}\}\) satisfies \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\), \(\lim_{n\rightarrow \infty}s_{n}\) exists; assume that

Then

Let \(\rho_{1}\) satisfy

then there exists an integer \(N_{1}\) big enough such that \(w_{n}>\rho_{1}\) for all \(n\geqslant N_{1}\). Hence, we have

for all \(n\geqslant N_{1}\). It turns out from (3.4) that, for all \(n\geqslant N_{1}\),

By \(\lim_{n\rightarrow\infty}\alpha_{n}=0\), (3.3), and Step 4, we have

From (3.5), (3.6), and Lemma 2.3, we obtain

namely, \(x_{n}\rightarrow x^{*}\) as \(n\rightarrow\infty\). This completes the proof. □

Theorem 3.2

Let C be a nonempty closed convex subset of the real Hilbert space H. Let \(T: C \rightarrow C\) be a nonexpansive mapping with \(F(T)\neq \emptyset\) and \(f: C \rightarrow C\) be a contraction with coefficient \(\theta\in[0,1)\). Pick any \(x_{0} \in C\) and let \(\{x_{n}\}\) be the sequence generated by

\[x_{n+1}=\alpha_{n}x_{n}+\beta_{n}f(x_{n})+\gamma_{n}T(s_{n}x_{n}+(1-s_{n})x_{n+1}),\quad n\geqslant0, \tag{3.7}\]

where \(\{\alpha_{n}\}, \{\beta_{n}\}, \{\gamma_{n}\}, \{s_{n}\} \subset(0,1)\) satisfy the following conditions:

(1) \(\alpha_{n}+\beta_{n}+\gamma_{n}=1\) and \(\lim_{n\rightarrow\infty}\gamma_{n}=1\);

(2) \(\sum_{n=0}^{\infty}\beta_{n}=\infty\);

(3) \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\) and \(\sum_{n=0}^{\infty}|\beta_{n+1}-\beta_{n}|<\infty\);

(4) \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\).

Then \(\{x_{n}\}\) converges strongly to a fixed point \(x^{*}\) of the nonexpansive mapping T, which is also the unique solution of the variational inequality

\[\langle(I-f)x^{*},x-x^{*}\rangle\geqslant0,\quad x\in F(T).\]

In other words, \(x^{*}\) is the unique fixed point of the contraction \(P_{F(T)}f\), that is, \(P_{F(T)}f(x^{*})=x^{*}\).
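The three-term rule can be tried on a hypothetical toy problem in \(\mathbb{R}^{2}\), assuming rule (3.7) reads \(x_{n+1}=\alpha_{n}x_{n}+\beta_{n}f(x_{n})+\gamma_{n}T(s_{n}x_{n}+(1-s_{n})x_{n+1})\). Here \(T=2P_{B}-I\) is the reflection through the closed unit ball B (so \(F(T)=B\)) and \(f(x)=x/2+(2,0)\), giving \(x^{*}=(1,0)\). The parameters are illustrative assumptions: \(\beta_{n}=1/(n+2)\) carries the contraction so that \(\sum\beta_{n}=\infty\), \(\alpha_{n}=1/(n+3)^{2}\) is summable and monotone, and \(\gamma_{n}=1-\alpha_{n}-\beta_{n}\rightarrow1\). Each implicit step is solved by Picard iteration, which contracts with constant \(\gamma_{n}(1-s_{n})<1\).

```python
import math
from typing import Tuple

Vec = Tuple[float, float]


def project_unit_ball(x: Vec) -> Vec:
    """Metric projection onto the closed unit ball B of R^2."""
    r = math.hypot(x[0], x[1])
    return x if r <= 1.0 else (x[0] / r, x[1] / r)


def T(x: Vec) -> Vec:
    """Reflection 2*P_B - I through B: nonexpansive with F(T) = B."""
    p = project_unit_ball(x)
    return (2.0 * p[0] - x[0], 2.0 * p[1] - x[1])


def f(x: Vec) -> Vec:
    """Contraction with theta = 1/2; x* = (1, 0) satisfies P_B f(x*) = x*."""
    return (0.5 * x[0] + 2.0, 0.5 * x[1])


def step_three_term(x_n: Vec, a: float, b: float, g: float, s: float,
                    tol: float = 1e-13) -> Vec:
    """Solve x_{n+1} = a*x_n + b*f(x_n) + g*T(s*x_n + (1-s)*x_{n+1}) by Picard iteration."""
    fx, y = f(x_n), x_n
    for _ in range(1000):
        Tz = T((s * x_n[0] + (1 - s) * y[0], s * x_n[1] + (1 - s) * y[1]))
        y_new = tuple(a * xi + b * fi + g * ti for xi, fi, ti in zip(x_n, fx, Tz))
        if math.dist(y_new, y) < tol:
            return y_new
        y = y_new
    raise RuntimeError("inner Picard iteration did not converge")


x = (0.0, 0.5)
for n in range(5000):
    a = 1.0 / (n + 3) ** 2      # alpha_n
    b = 1.0 / (n + 2)           # beta_n, with sum beta_n = infinity
    g = 1.0 - a - b             # gamma_n -> 1
    s = 0.75 - 0.5 / (n + 2)    # nondecreasing, in [0.5, 0.75)
    x = step_three_term(x, a, b, g, s)
print(x, math.dist(x, (1.0, 0.0)))
```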

Proof

We divide the proof into five steps.

Step 1. Firstly, we show that \(\{x_{n}\}\) is bounded.

Indeed, taking \(p\in F(T)\) arbitrarily, we have

It follows that

Since \(\gamma_{n},s_{n}\in(0,1)\), \(1-\gamma_{n}(1-s_{n})>0\). Moreover, by (3.8) and \(\alpha_{n}+\beta_{n}+\gamma_{n}=1\), we get

Thus, we have

By induction, we obtain

Hence \(\{x_{n}\}\) is bounded. Consequently, the sequences \(\{f(x_{n})\}\) and \(\{T(s_{n}x_{n}+(1-s_{n})x_{n+1})\}\) are also bounded.

Step 2. Next, we prove that \(\lim_{n\rightarrow\infty} \| x_{n+1}-x_{n}\|=0\).

To see this, we apply (3.7) to get

where \(M_{2}>0\) is a constant such that

It turns out that

that is,

Since \(0<\varepsilon\leqslant s_{n-1}\leqslant s_{n}<1\), we have

and

Thus,

Since \(\sum_{n=0}^{\infty}\beta_{n}=\infty\), \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\), and \(\sum_{n=0}^{\infty}|\beta _{n+1}-\beta_{n}|<\infty\), by Lemma 2.3, we can get \(\| x_{n+1}-x_{n}\|\rightarrow0\) as \(n\rightarrow\infty\).

Step 3. Now, we prove that \(\lim_{n\rightarrow\infty} \|x_{n}-Tx_{n}\|=0\).

In fact, we can see that

Then, by \(\lim_{n\rightarrow\infty}\|x_{n+1}-x_{n}\|=0\) and \(\lim_{n\rightarrow\infty}\gamma_{n}=1\), we get \(\|x_{n}-Tx_{n}\|\rightarrow0\) as \(n\rightarrow\infty\). Similarly to (3.3), we also have

Step 4. In this step, we claim that \(\limsup_{n\rightarrow\infty }\langle x^{*}-f(x^{*}), x^{*}-x_{n}\rangle\leqslant0\), where \(x^{*}=P_{F(T)}f(x^{*})\).

The proof is the same as that of Step 4 in Theorem 3.1 and is therefore omitted.

Step 5. Finally, we show that \(x_{n}\rightarrow x^{*}\) as \(n\rightarrow\infty\). Here again \(x^{*}\in F(T)\) is the unique fixed point of the contraction \(P_{F(T)}f\) or in other words, \(x^{*}=P_{F(T)}f(x^{*})\).

In fact, we have

where

It turns out that

Solving this quadratic inequality for \(\|s_{n} x_{n}+(1-s_{n})x_{n+1}-x^{*}\|\) yields

This implies that

namely,

Then

which is reduced to the inequality

that is,

It follows that

Let

Since the sequence \(\{s_{n}\}\) satisfies \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\), \(\lim_{n\rightarrow \infty}s_{n}\) exists; assume that

Then

Let \(\rho_{2}\) satisfy

then there exists an integer \(N_{2}\) big enough such that \(y_{n}>\rho_{2}\) for all \(n\geqslant N_{2}\). Hence, we have

for all \(n\geqslant N_{2}\). It turns out from (3.10) that, for all \(n\geqslant N_{2}\),

By \(\lim_{n\rightarrow\infty}\alpha_{n}=\lim_{n\rightarrow\infty }\beta_{n}=0\), \(\lim_{n\rightarrow\infty}\gamma_{n}=1\), (3.9), and Step 4, we have

From (3.11), (3.12), and Lemma 2.3, we obtain

namely, \(x_{n}\rightarrow x^{*}\) as \(n\rightarrow\infty\). This completes the proof. □

4 Application

4.1 A more general system of variational inequalities

Let C be a nonempty closed convex subset of the real Hilbert space H and \(\{A_{i}\}_{i=1}^{N}:C\rightarrow H\) be a family of mappings. In [3], Cai and Bu considered the problem of finding \((x_{1}^{*},x_{2}^{*},\ldots, x_{N}^{*})\in C\times C\times \cdots\times C \) such that

Problem (4.1) can be rewritten as

which is called a more general system of variational inequalities in Hilbert spaces, where \(\lambda_{i}>0\) for all \(i\in\{1, 2, \ldots, N\}\). We also have the following lemmas.

Lemma 4.1 [3]

Let C be a nonempty closed convex subset of the real Hilbert space H. For \(i=1,2,\ldots,N\), let \(A_{i}: C\rightarrow H\) be \(\delta_{i}\)-inverse-strongly monotone for some positive real number \(\delta_{i}\), namely,

\[\langle A_{i}x-A_{i}y,x-y\rangle\geqslant\delta_{i}\|A_{i}x-A_{i}y\|^{2},\quad\forall x,y\in C.\]

Let \(G: C\rightarrow C\) be a mapping defined by

If \(0 <\lambda_{i}\leqslant2\delta_{i}\) for all \(i\in\{1,2,\ldots,N\} \), then G is nonexpansive.

Lemma 4.2 [4]

Let C be a nonempty closed convex subset of the real Hilbert space H. Let \(A_{i}:C\rightarrow H\) be a nonlinear mapping, where \(i=1,2,\ldots,N\). For given \(x_{i}^{*}\in C\), \(i=1,2,\ldots,N\), \((x_{1}^{*},x_{2}^{*},\ldots,x_{N}^{*})\) is a solution of the problem (4.1) if and only if

that is,

From Lemma 4.2, we know that \(x_{1}^{*}=G(x_{1}^{*})\), that is, \(x_{1}^{*}\) is a fixed point of the mapping G, where G is defined by (4.2). Moreover, once the fixed point \(x_{1}^{*}\) is found, the remaining points are obtained from (4.3); in other words, problem (4.1) is solved. Applying Theorems 3.1 and 3.2 with \(T=G\), we obtain the results below.

Theorem 4.1

Let C be a nonempty closed convex subset of the real Hilbert space H. For \(i=1,2,\ldots,N\), let \(A_{i}: C\rightarrow H\) be \(\delta _{i}\)-inverse-strongly monotone for some positive real number \(\delta_{i}\) with \(F(G)\neq\emptyset\), where \(G: C\rightarrow C\) is defined by

Let \(f: C \rightarrow C\) be a contraction with coefficient \(\theta\in[0,1)\). Pick any \(x_{0} \in C\) and let \(\{x_{n}\}\) be the sequence generated by

\[x_{n+1}=\alpha_{n}f(x_{n})+(1-\alpha_{n})G(s_{n}x_{n}+(1-s_{n})x_{n+1}),\quad n\geqslant0,\]

where \(\lambda_{i}\in(0,2\delta_{i})\), \(i=1,2,\ldots,N\), and \(\{\alpha_{n}\}, \{s_{n}\} \subset(0,1)\) satisfy the following conditions:

(1) \(\lim_{n\rightarrow\infty}\alpha_{n}=0\);

(2) \(\sum_{n=0}^{\infty}\alpha_{n}=\infty\);

(3) \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\);

(4) \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\).

Then \(\{x_{n}\}\) converges strongly to a fixed point \(x^{*}\) of the nonexpansive mapping G, which is also the unique solution of the variational inequality

\[\langle(I-f)x^{*},x-x^{*}\rangle\geqslant0,\quad x\in F(G).\]

In other words, \(x^{*}\) is the unique fixed point of the contraction \(P_{F(G)}f\), that is, \(P_{F(G)}f(x^{*})=x^{*}\).

Theorem 4.2

Let C be a nonempty closed convex subset of the real Hilbert space H. For \(i=1,2,\ldots,N\), let \(A_{i}: C\rightarrow H\) be \(\delta _{i}\)-inverse-strongly monotone for some positive real number \(\delta_{i}\) with \(F(G)\neq\emptyset\), where \(G: C\rightarrow C\) is defined by

Let \(f: C \rightarrow C\) be a contraction with coefficient \(\theta\in[0,1)\). Pick any \(x_{0} \in C\) and let \(\{x_{n}\}\) be the sequence generated by

\[x_{n+1}=\alpha_{n}x_{n}+\beta_{n}f(x_{n})+\gamma_{n}G(s_{n}x_{n}+(1-s_{n})x_{n+1}),\quad n\geqslant0,\]

where \(\lambda_{i}\in(0,2\delta_{i})\), \(i=1,2,\ldots,N\), and \(\{\alpha_{n}\}, \{\beta_{n}\}, \{\gamma_{n}\}, \{s_{n}\} \subset(0,1)\) satisfy the following conditions:

(1) \(\alpha_{n}+\beta_{n}+\gamma_{n}=1\) and \(\lim_{n\rightarrow\infty}\gamma_{n}=1\);

(2) \(\sum_{n=0}^{\infty}\beta_{n}=\infty\);

(3) \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\) and \(\sum_{n=0}^{\infty}|\beta_{n+1}-\beta_{n}|<\infty\);

(4) \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\).

Then \(\{x_{n}\}\) converges strongly to a fixed point \(x^{*}\) of the nonexpansive mapping G, which is also the unique solution of the variational inequality

\[\langle(I-f)x^{*},x-x^{*}\rangle\geqslant0,\quad x\in F(G).\]

In other words, \(x^{*}\) is the unique fixed point of the contraction \(P_{F(G)}f\), that is, \(P_{F(G)}f(x^{*})=x^{*}\).

4.2 The constrained convex minimization problem

Next, we consider the following constrained convex minimization problem:

\[\min_{x\in C}\varphi(x), \tag{4.4}\]

where \(\varphi:C\rightarrow R\) is a real-valued convex function, and we assume that problem (4.4) is consistent (i.e., its solution set is nonempty). Let Ω denote its solution set.

For the minimization problem (4.4), if φ is (Fréchet) differentiable, then we have the following lemma.

Lemma 4.3 (Optimality condition) [5]

A necessary condition of optimality for a point \(x^{*}\in C\) to be a solution of the minimization problem (4.4) is that \(x^{*}\) solves the variational inequality

\[\langle\nabla\varphi(x^{*}),x-x^{*}\rangle\geqslant0,\quad\forall x\in C. \tag{4.5}\]

Equivalently, \(x^{*}\in C\) solves the fixed point equation

\[x^{*}=P_{C}\bigl(x^{*}-\lambda\nabla\varphi(x^{*})\bigr)\]

for every constant \(\lambda>0\). If, in addition, φ is convex, then the optimality condition (4.5) is also sufficient.

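It is well known that the mapping \(P_{C}(I-\lambda A)\) is nonexpansive when the mapping A is δ-inverse-strongly monotone and \(0<\lambda<2\delta\). Moreover, by Lemma 4.3, the fixed point set of \(P_{C}(I-\lambda\nabla\varphi)\) coincides with Ω for every \(\lambda>0\). Applying Theorems 3.1 and 3.2 with \(T=P_{C}(I-\lambda\nabla\varphi)\), we obtain the following results.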

Theorem 4.3

Let C be a nonempty closed convex subset of the real Hilbert space H. For the minimization problem (4.4), assume that φ is (Fréchet) differentiable and the gradient ∇φ is a δ-inverse-strongly monotone mapping for some positive real number δ. Let \(f: C \rightarrow C\) be a contraction with coefficient \(\theta\in[0,1)\). Pick any \(x_{0} \in C\) and let \(\{x_{n}\}\) be the sequence generated by

\[x_{n+1}=\alpha_{n}f(x_{n})+(1-\alpha_{n})P_{C}(I-\lambda\nabla\varphi)(s_{n}x_{n}+(1-s_{n})x_{n+1}),\quad n\geqslant0,\]

where \(\lambda\in(0,2\delta)\) and \(\{\alpha_{n}\}, \{s_{n}\} \subset (0,1)\) satisfy the following conditions:

(1) \(\lim_{n\rightarrow\infty}\alpha_{n}=0\);

(2) \(\sum_{n=0}^{\infty}\alpha_{n}=\infty\);

(3) \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\);

(4) \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\).

Then \(\{x_{n}\}\) converges strongly to a solution \(x^{*}\) of the minimization problem (4.4), which is also the unique solution of the variational inequality

\[\langle(I-f)x^{*},x-x^{*}\rangle\geqslant0,\quad x\in\Omega.\]

In other words, \(x^{*}\) is the unique fixed point of the contraction \(P_{\Omega}f\), that is, \(P_{\Omega}f(x^{*})=x^{*}\).
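For Theorem 4.3, the following sketch takes \(C=[-1,1]^{2}\subset\mathbb{R}^{2}\) and the hypothetical objective \(\varphi(x)=\frac{1}{2}(x_{1}-2)^{2}\), whose solution set over C is the segment \(\Omega=\{1\}\times[-1,1]\). Its gradient is 1-Lipschitz and therefore 1-inverse-strongly monotone (Baillon-Haddad theorem), so \(\lambda=\frac{1}{2}\in(0,2)\) is admissible. The viscosity term selects one point of Ω: with the hypothetical contraction \(f(x)=(x_{1}/2,\,x_{2}/2+1/4)\), which maps C into C, the fixed point of \(P_{\Omega}f\) is \(x^{*}=(1,\frac{1}{2})\). The iteration is assumed to be \(x_{n+1}=\alpha_{n}f(x_{n})+(1-\alpha_{n})P_{C}(I-\lambda\nabla\varphi)(s_{n}x_{n}+(1-s_{n})x_{n+1})\) with \(\alpha_{n}=1/(n+2)\) and the constant choice \(s_{n}=\frac{1}{2}\).

```python
import math
from typing import Tuple

Vec = Tuple[float, float]
LAM = 0.5  # lambda in (0, 2*delta) with delta = 1


def clip(t: float, lo: float = -1.0, hi: float = 1.0) -> float:
    return min(max(t, lo), hi)


def P_C(x: Vec) -> Vec:
    """Metric projection onto the box C = [-1, 1]^2."""
    return (clip(x[0]), clip(x[1]))


def grad_phi(x: Vec) -> Vec:
    """Gradient of phi(x) = 0.5*(x_1 - 2)^2: 1-Lipschitz, hence 1-inverse-strongly monotone."""
    return (x[0] - 2.0, 0.0)


def S(x: Vec) -> Vec:
    """Gradient-projection map P_C(I - lambda*grad phi); its fixed points form Omega."""
    g = grad_phi(x)
    return P_C((x[0] - LAM * g[0], x[1] - LAM * g[1]))


def f(x: Vec) -> Vec:
    """Contraction with theta = 1/2 mapping C into C."""
    return (0.5 * x[0], 0.5 * x[1] + 0.25)


def step(x_n: Vec, alpha: float, s: float, tol: float = 1e-13) -> Vec:
    """Solve x_{n+1} = alpha*f(x_n) + (1-alpha)*S(s*x_n + (1-s)*x_{n+1}) by Picard iteration."""
    fx, y = f(x_n), x_n
    for _ in range(1000):
        Sz = S((s * x_n[0] + (1 - s) * y[0], s * x_n[1] + (1 - s) * y[1]))
        y_new = (alpha * fx[0] + (1 - alpha) * Sz[0], alpha * fx[1] + (1 - alpha) * Sz[1])
        if math.dist(y_new, y) < tol:
            return y_new
        y = y_new
    raise RuntimeError("inner Picard iteration did not converge")


x = (0.0, 0.0)
for n in range(5000):
    x = step(x, alpha=1.0 / (n + 2), s=0.5)
print(x)  # approaches x* = (1, 0.5) = P_Omega f(x*)
```

Different contractions f would select different minimizers in Ω; the minimizer reached is always the one characterized by the variational inequality over Ω.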

Theorem 4.4

Let C be a nonempty closed convex subset of the real Hilbert space H. For the minimization problem (4.4), assume that φ is (Fréchet) differentiable and the gradient ∇φ is a δ-inverse-strongly monotone mapping for some positive real number δ. Let \(f: C \rightarrow C\) be a contraction with coefficient \(\theta\in[0,1)\). Pick any \(x_{0} \in C\) and let \(\{x_{n}\}\) be the sequence generated by

\[x_{n+1}=\alpha_{n}x_{n}+\beta_{n}f(x_{n})+\gamma_{n}P_{C}(I-\lambda\nabla\varphi)(s_{n}x_{n}+(1-s_{n})x_{n+1}),\quad n\geqslant0,\]

where \(\lambda\in(0,2\delta)\) and \(\{\alpha_{n}\}, \{\beta_{n}\}, \{\gamma_{n}\}, \{s_{n}\} \subset(0,1)\) satisfy the following conditions:

(1) \(\alpha_{n}+\beta_{n}+\gamma_{n}=1\) and \(\lim_{n\rightarrow\infty}\gamma_{n}=1\);

(2) \(\sum_{n=0}^{\infty}\beta_{n}=\infty\);

(3) \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\) and \(\sum_{n=0}^{\infty}|\beta_{n+1}-\beta_{n}|<\infty\);

(4) \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\).

Then \(\{x_{n}\}\) converges strongly to a solution \(x^{*}\) of the minimization problem (4.4), which is also the unique solution of the variational inequality

\[\langle(I-f)x^{*},x-x^{*}\rangle\geqslant0,\quad x\in\Omega.\]

In other words, \(x^{*}\) is the unique fixed point of the contraction \(P_{\Omega}f\), that is, \(P_{\Omega}f(x^{*})=x^{*}\).

4.3 K-Mapping

In 2009, Kangtunyakarn and Suantai [6] introduced the K-mapping generated by \(T_{1},T_{2},\ldots,T_{N}\) and \(\lambda_{1}, \lambda_{2},\ldots ,\lambda_{N}\) as follows.

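Definition 4.1 [6]

Let C be a nonempty convex subset of a real Banach space. Let \(\{T_{i}\} ^{N}_{i=1}\) be a finite family of mappings of C into itself and let \(\lambda_{1},\lambda_{2},\ldots, \lambda_{N}\) be real numbers such that \(0\leqslant\lambda_{i}\leqslant1\) for every \(i=1,2,\ldots, N\). We define a mapping \(K : C\rightarrow C\) as follows:

\[\begin{aligned} U_{1}&=\lambda_{1}T_{1}+(1-\lambda_{1})I,\\ U_{2}&=\lambda_{2}T_{2}U_{1}+(1-\lambda_{2})U_{1},\\ &\;\;\vdots\\ U_{N-1}&=\lambda_{N-1}T_{N-1}U_{N-2}+(1-\lambda_{N-1})U_{N-2},\\ K=U_{N}&=\lambda_{N}T_{N}U_{N-1}+(1-\lambda_{N})U_{N-1}. \end{aligned}\]
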
Such a mapping K is called the K-mapping generated by \(T_{1}, T_{2},\ldots, T_{N}\) and \(\lambda_{1}, \lambda_{2}, \ldots, \lambda_{N}\).

In 2014, Suwannaut and Kangtunyakarn [7] established the following main result for the K-mapping generated by \(T_{1}, T_{2},\ldots, T_{N}\) and \(\lambda_{1}, \lambda_{2},\ldots, \lambda_{N}\).

Lemma 4.4 [7]

Let C be a nonempty closed convex subset of the real Hilbert space H. Let \(\{T_{i}\}^{N}_{i=1}\) be a finite family of \(\kappa_{i}\)-strictly pseudo-contractive mappings of C into itself with \(\kappa_{i}\leqslant\omega_{1}\) (\(i=1, 2,\ldots, N\)) and \(\bigcap_{i=1}^{N}F(T_{i})\neq\emptyset\), namely, there exist constants \(\kappa_{i}\in[0, 1)\) such that

\[\|T_{i}x-T_{i}y\|^{2}\leqslant\|x-y\|^{2}+\kappa_{i}\|(I-T_{i})x-(I-T_{i})y\|^{2},\quad\forall x,y\in C.\]

Let \(\lambda_{1},\lambda_{2},\ldots, \lambda_{N}\) be real numbers with \(0<\lambda_{i}<\omega_{2}\) for all \(i=1, 2,\ldots, N\) and \(\omega_{1}+\omega_{2}<1\). Let K be the K-mapping generated by \(T_{1}, T_{2}, \ldots, T_{N}\) and \(\lambda_{1}, \lambda_{2},\ldots, \lambda _{N}\). Then the following properties hold:

(1) \(F(K)=\bigcap_{i=1}^{N}F(T_{i})\);

(2) K is a nonexpansive mapping.
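Lemma 4.4 says that the K-mapping can be nonexpansive even when the \(T_{i}\) are not. The sketch below checks this on a hypothetical example in \(\mathbb{R}^{2}\), assuming the recursive definition \(U_{1}=\lambda_{1}T_{1}+(1-\lambda_{1})I\), \(U_{i}=\lambda_{i}T_{i}U_{i-1}+(1-\lambda_{i})U_{i-1}\), \(K=U_{N}\). The maps \(T_{1}(x)=(-2x_{1},x_{2})\) and \(T_{2}(x)=(x_{1},-2x_{2})\) are \(\frac{1}{3}\)-strictly pseudo-contractive (the defining inequality holds with equality) but double distances in one coordinate; with \(\kappa_{i}=\omega_{1}=\frac{1}{3}\), \(\lambda_{1}=\lambda_{2}=\frac{1}{2}\) and \(\omega_{2}=0.6\), the hypotheses of Lemma 4.4 hold. A short computation gives \(K=-\frac{1}{2}I\) here, so \(F(K)=\{0\}=F(T_{1})\cap F(T_{2})\).

```python
import math
import random
from typing import Callable, List, Tuple

Vec = Tuple[float, float]
Map = Callable[[Vec], Vec]


def k_mapping(Ts: List[Map], lams: List[float]) -> Map:
    """Return K = U_N, where U_0 = I and U_i = lam_i*T_i*U_{i-1} + (1 - lam_i)*U_{i-1}."""
    def K(x: Vec) -> Vec:
        u = x  # U_0 x = x
        for T, lam in zip(Ts, lams):
            t = T(u)
            u = (lam * t[0] + (1 - lam) * u[0], lam * t[1] + (1 - lam) * u[1])
        return u
    return K


def T1(x: Vec) -> Vec:
    """(1/3)-strict pseudo-contraction with F(T1) = {x_1 = 0}; not nonexpansive."""
    return (-2.0 * x[0], x[1])


def T2(x: Vec) -> Vec:
    """(1/3)-strict pseudo-contraction with F(T2) = {x_2 = 0}; not nonexpansive."""
    return (x[0], -2.0 * x[1])


# kappa_i = 1/3 <= omega_1 = 1/3, lambda_i = 0.5 < omega_2 = 0.6, omega_1 + omega_2 < 1
K = k_mapping([T1, T2], [0.5, 0.5])

rng = random.Random(1)
worst = -math.inf  # largest value of ||Kx - Ky|| - ||x - y|| over random pairs
for _ in range(1000):
    x = (rng.uniform(-5, 5), rng.uniform(-5, 5))
    y = (rng.uniform(-5, 5), rng.uniform(-5, 5))
    worst = max(worst, math.dist(K(x), K(y)) - math.dist(x, y))

print(math.dist(T1((1.0, 0.0)), T1((0.0, 0.0))))  # 2.0: T1 itself expands distances
print(worst)                                        # negative: K is nonexpansive
print(K((2.0, -4.0)))                               # (-1.0, 2.0), consistent with K = -0.5*I
```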

Based on Lemma 4.4, we have the following results.

Theorem 4.5

Let C be a nonempty closed convex subset of the real Hilbert space H. Let \(\{T_{i}\}^{N}_{i=1}\) be a finite family of \(\kappa_{i}\)-strictly pseudo-contractive mappings of C into itself with \(\kappa_{i}\leqslant\omega_{1}\) (\(i=1, 2,\ldots, N\)) and \(\bigcap_{i=1}^{N}F(T_{i})\neq\emptyset\). Let \(\lambda_{1},\lambda_{2},\ldots, \lambda_{N}\) be real numbers with \(0<\lambda_{i}<\omega_{2}\) for all \(i=1, 2,\ldots, N\) and \(\omega_{1}+\omega_{2}<1\). Let K be the K-mapping generated by \(T_{1}, T_{2}, \ldots, T_{N}\) and \(\lambda_{1}, \lambda_{2},\ldots, \lambda_{N}\). Let \(f: C \rightarrow C\) be a contraction with coefficient \(\theta\in[0,1)\). Pick any \(x_{0} \in C\) and let \(\{x_{n}\}\) be the sequence generated by

\[x_{n+1}=\alpha_{n}f(x_{n})+(1-\alpha_{n})K(s_{n}x_{n}+(1-s_{n})x_{n+1}),\quad n\geqslant0,\]

where \(\{\alpha_{n}\}, \{s_{n}\} \subset(0,1)\) satisfy the following conditions:

(1) \(\lim_{n\rightarrow\infty}\alpha_{n}=0\);

(2) \(\sum_{n=0}^{\infty}\alpha_{n}=\infty\);

(3) \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\);

(4) \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\).

Then \(\{x_{n}\}\) converges strongly to a common fixed point \(x^{*}\) of the mappings \(\{T_{i}\}_{i=1}^{N}\), which is also the unique solution of the variational inequality

\[\langle(I-f)x^{*},x-x^{*}\rangle\geqslant0,\quad x\in\bigcap_{i=1}^{N}F(T_{i}).\]

In other words, the point \(x^{*}\) is the unique fixed point of the contraction \(P_{\bigcap_{i=1}^{N}F(T_{i})}f\), that is, \(P_{\bigcap_{i=1}^{N}F(T_{i})}f(x^{*})=x^{*}\).

Theorem 4.6

Let C be a nonempty closed convex subset of the real Hilbert space H. Let \(\{T_{i}\}^{N}_{i=1}\) be a finite family of \(\kappa_{i}\)-strictly pseudo-contractive mappings of C into itself with \(\kappa_{i}\leqslant\omega_{1}\) (\(i=1, 2,\ldots, N\)) and \(\bigcap_{i=1}^{N}F(T_{i})\neq\emptyset\). Let \(\lambda_{1},\lambda_{2},\ldots, \lambda_{N}\) be real numbers with \(0<\lambda_{i}<\omega_{2}\) for all \(i=1, 2,\ldots, N\) and \(\omega_{1}+\omega_{2}<1\). Let K be the K-mapping generated by \(T_{1}, T_{2}, \ldots, T_{N}\) and \(\lambda_{1}, \lambda_{2},\ldots, \lambda_{N}\). Let \(f: C \rightarrow C\) be a contraction with coefficient \(\theta\in[0,1)\). Pick any \(x_{0} \in C\) and let \(\{x_{n}\}\) be the sequence generated by

\[x_{n+1}=\alpha_{n}x_{n}+\beta_{n}f(x_{n})+\gamma_{n}K(s_{n}x_{n}+(1-s_{n})x_{n+1}),\quad n\geqslant0,\]

where \(\{\alpha_{n}\}, \{\beta_{n}\}, \{\gamma_{n}\}, \{s_{n}\} \subset(0,1)\) satisfy the following conditions:

(1) \(\alpha_{n}+\beta_{n}+\gamma_{n}=1\) and \(\lim_{n\rightarrow\infty}\gamma_{n}=1\);

(2) \(\sum_{n=0}^{\infty}\beta_{n}=\infty\);

(3) \(\sum_{n=0}^{\infty}|\alpha_{n+1}-\alpha_{n}|<\infty\) and \(\sum_{n=0}^{\infty}|\beta_{n+1}-\beta_{n}|<\infty\);

(4) \(0<\varepsilon\leqslant s_{n}\leqslant s_{n+1}<1\) for all \(n\geqslant0\).

Then \(\{x_{n}\}\) converges strongly to a common fixed point \(x^{*}\) of the mappings \(\{T_{i}\}_{i=1}^{N}\), which is also the unique solution of the variational inequality

\[\langle(I-f)x^{*},x-x^{*}\rangle\geqslant0,\quad x\in\bigcap_{i=1}^{N}F(T_{i}).\]

In other words, the point \(x^{*}\) is the unique fixed point of the contraction \(P_{\bigcap_{i=1}^{N}F(T_{i})}f\), that is, \(P_{\bigcap_{i=1}^{N}F(T_{i})}f(x^{*})=x^{*}\).

References

[1] Moudafi, A: Viscosity approximation methods for fixed-points problems. J. Math. Anal. Appl. 241, 46-55 (2000)

[2] Xu, HK, Alghamdi, MA, Shahzad, N: The viscosity technique for the implicit midpoint rule of nonexpansive mappings in Hilbert spaces. Fixed Point Theory Appl. 2015, 41 (2015)

[3] Cai, G, Bu, SQ: Hybrid algorithm for generalized mixed equilibrium problems and variational inequality problems and fixed point problems. Comput. Math. Appl. 62, 4772-4782 (2011)

[4] Ke, YF, Ma, CF: A new relaxed extragradient-like algorithm for approaching common solutions of generalized mixed equilibrium problems, a more general system of variational inequalities and a fixed point problem. Fixed Point Theory Appl. 2013, 126 (2013)

[5] Su, M, Xu, HK: Remarks on the gradient-projection algorithm. J. Nonlinear Anal. Optim. 1, 35-43 (2010)

[6] Kangtunyakarn, A, Suantai, S: A new mapping for finding common solutions of equilibrium problems and fixed point problems of finite family of nonexpansive mappings. Nonlinear Anal., Theory Methods Appl. 71(10), 4448-4460 (2009)

[7] Suwannaut, S, Kangtunyakarn, A: Strong convergence theorem for the modified generalized equilibrium problem and fixed point problem of strictly pseudo-contractive mappings. Fixed Point Theory Appl. 2014, 86 (2014)

Acknowledgements

The project is supported by the National Natural Science Foundation of China (Grant Nos. 11071041 and 11201074), Fujian Natural Science Foundation (Grant Nos. 2013J01006, 2015J01578) and R&D of Key Instruments and Technologies for Deep Resources Prospecting (the National R&D Projects for Key Scientific Instruments) under Grant No. ZDYZ2012-1-02-04.


Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ke, Y., Ma, C. The generalized viscosity implicit rules of nonexpansive mappings in Hilbert spaces. Fixed Point Theory Appl 2015, 190 (2015). https://doi.org/10.1186/s13663-015-0439-6


DOI: https://doi.org/10.1186/s13663-015-0439-6