Abstract

In this paper, we present a new bound for the Jensen gap with the help of a Green function. Using the bound, we deduce a converse of the Hölder inequality as well. Finally, we present some applications of the main result in information theory.

1 Introduction

Over the last few decades, research on the classical mathematical inequalities and their generalizations for convex functions has grown rapidly, with significant impact on modern analysis [9, 16, 21, 22, 26–28, 30, 31, 33]. These inequalities have many applications in numerical quadrature, transform theory, probability, and statistics. In particular, they help to establish the uniqueness of solutions of boundary value problems [8]. Among them, the Jensen inequality is one of the best known and most extensively used [2–4, 7, 13, 32]. It is of pivotal importance because other classical inequalities, such as the Hermite–Hadamard, Ky Fan, Beckenbach–Dresher, Levinson, Minkowski, arithmetic–geometric mean, Young and Hölder inequalities, can be deduced from it. The Jensen inequality and its generalizations, refinements, extensions and converses have many applications in different fields of science, for example electrical engineering [11], mathematical statistics [23], financial economics [24], and information theory, guessing and coding [1, 5, 6, 10, 12–15, 17–19, 25]. The discrete Jensen inequality, which can be found in [20], states the following:

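If \(T:[\gamma _{1},\gamma _{2}]\rightarrow \mathbb{R}\) is a convex function and \(s_{i}\in [\gamma _{1},\gamma _{2}]\), \(u_{i}\geq 0\) for \(i=1,\ldots ,n\) with \(\sum_{i=1}^{n}u_{i}=U_{n}>0\), then

\[T \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i} \Biggr)\leq \frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}T(s_{i}).\]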

If the function T is concave then the reverse inequality holds in the above expression.

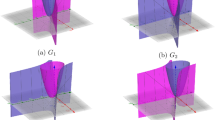

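To derive the main result, we need the following Green function defined on \([\gamma _{1},\gamma _{2}]\times [\gamma _{1},\gamma _{2}]\) [29]:

\[G(z,x)= \begin{cases} \dfrac{(z-\gamma _{2})(x-\gamma _{1})}{\gamma _{2}-\gamma _{1}}, & \gamma _{1}\leq x\leq z, \\ \dfrac{(x-\gamma _{2})(z-\gamma _{1})}{\gamma _{2}-\gamma _{1}}, & z\leq x\leq \gamma _{2}. \end{cases} \tag{1.1}\]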

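The function G is continuous and convex in each of the variables z and x. Also, the following identity, which is related to the Green function (1.1), holds for every function \(T\in C^{2}[\gamma _{1},\gamma _{2}]\) [29]:

\[T(z)=\frac{\gamma _{2}-z}{\gamma _{2}-\gamma _{1}}T(\gamma _{1})+\frac{z-\gamma _{1}}{\gamma _{2}-\gamma _{1}}T(\gamma _{2})+ \int _{\gamma _{1}}^{\gamma _{2}}G(z,x)T''(x)\,dx. \tag{1.2}\]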
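The identity (1.2) can be checked numerically. The following is a minimal sketch, assuming that (1.1) is the standard Green function of the two-point problem \(y''=0\), \(y(\gamma _{1})=y(\gamma _{2})=0\); the helper names `green` and `reconstruct`, the midpoint rule and the step count are illustrative choices and are not taken from [29].

```python
import math


def green(z, x, g1, g2):
    """Green function G(z, x) on [g1, g2] x [g1, g2] (assumed piecewise form)."""
    if x <= z:
        return (z - g2) * (x - g1) / (g2 - g1)
    return (x - g2) * (z - g1) / (g2 - g1)


def reconstruct(T, d2T, z, g1, g2, steps=20000):
    """Evaluate the right-hand side of (1.2) with a midpoint rule for the integral."""
    h = (g2 - g1) / steps
    integral = 0.0
    for k in range(steps):
        x = g1 + (k + 0.5) * h          # midpoint of the k-th subinterval
        integral += green(z, x, g1, g2) * d2T(x)
    integral *= h
    chord = ((g2 - z) * T(g1) + (z - g1) * T(g2)) / (g2 - g1)
    return chord + integral


# Example: T(x) = exp(x) on [0, 2], so that T''(x) = exp(x) as well.
value = reconstruct(math.exp, math.exp, 0.7, 0.0, 2.0)
print(value, math.exp(0.7))
```

The two printed numbers should agree up to the quadrature error, which illustrates that the chord term plus the Green-function integral recovers \(T(z)\).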

The remainder of the paper is organized as follows. In Sect. 2, we present a new bound for the Jensen gap for functions whose second derivative is convex in absolute value, followed by a remark and a proposition presenting a converse of the Hölder inequality. In Sect. 3, we give applications of the main result to the Csiszár f-divergence functional, the Kullback–Leibler divergence, the Bhattacharyya coefficient, the Hellinger distance, the Rényi divergence, the \(\chi ^{2}\)-divergence, the Shannon entropy and the triangular discrimination. Section 4 is devoted to the conclusion of the paper.

2 Main result

We begin by presenting our main result.

Theorem 2.1

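Let \(T\in C^{2}[\gamma _{1},\gamma _{2}]\) be a function such that \(|T''|\) is convex and \(s_{i}\in [\gamma _{1},\gamma _{2}]\), \(u_{i}\geq 0\) for \(i=1,\ldots ,n\) with \(\sum_{i=1}^{n}u_{i}=U_{n}>0\). Then

\[\begin{aligned} \Biggl\vert \frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}T(s_{i})-T \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i} \Biggr) \Biggr\vert \leq {}&\frac{ \vert T''(\gamma _{2}) \vert - \vert T''(\gamma _{1}) \vert }{6(\gamma _{2}-\gamma _{1})} \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i}^{3}- \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i} \Biggr)^{3} \Biggr) \\ &{}+\frac{\gamma _{2} \vert T''(\gamma _{1}) \vert -\gamma _{1} \vert T''(\gamma _{2}) \vert }{2(\gamma _{2}-\gamma _{1})} \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i}^{2}- \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i} \Biggr)^{2} \Biggr). \end{aligned} \tag{2.1}\]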

Proof

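Using (1.2) in \(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}T(s_{i})\) and \(T (\frac{1}{U_{n}} \sum_{i=1}^{n}u_{i}s_{i} )\), we get

\[\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}T(s_{i})=\frac{\gamma _{2}-\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i}}{\gamma _{2}-\gamma _{1}}T(\gamma _{1})+\frac{\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i}-\gamma _{1}}{\gamma _{2}-\gamma _{1}}T(\gamma _{2})+\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i} \int _{\gamma _{1}}^{\gamma _{2}}G(s_{i},x)T''(x)\,dx \tag{2.2}\]

and

\[T \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i} \Biggr)=\frac{\gamma _{2}-\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i}}{\gamma _{2}-\gamma _{1}}T(\gamma _{1})+\frac{\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i}-\gamma _{1}}{\gamma _{2}-\gamma _{1}}T(\gamma _{2})+ \int _{\gamma _{1}}^{\gamma _{2}}G \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i},x \Biggr)T''(x)\,dx. \tag{2.3}\]

Subtracting (2.3) from (2.2), we obtain

\[\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}T(s_{i})-T \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i} \Biggr)= \int _{\gamma _{1}}^{\gamma _{2}} \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}G(s_{i},x)-G \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i},x \Biggr) \Biggr)T''(x)\,dx. \tag{2.4}\]

Taking the absolute value of (2.4), we get

\[\Biggl\vert \frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}T(s_{i})-T \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i} \Biggr) \Biggr\vert \leq \int _{\gamma _{1}}^{\gamma _{2}} \Biggl\vert \frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}G(s_{i},x)-G \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i},x \Biggr) \Biggr\vert \bigl\vert T''(x) \bigr\vert \,dx. \tag{2.5}\]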

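We use the change of variable \(x=t\gamma _{1}+(1-t)\gamma _{2}\), \(t\in [0,1]\). Since \(G(z,x)\) is convex in z, the Jensen inequality shows that the difference inside the absolute value in (2.5) is nonnegative, so from (2.5) we have

\[\Biggl\vert \frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}T(s_{i})-T(\bar{s}) \Biggr\vert \leq (\gamma _{2}-\gamma _{1}) \int _{0}^{1} \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}G \bigl(s_{i},t\gamma _{1}+(1-t)\gamma _{2} \bigr)-G \bigl(\bar{s},t\gamma _{1}+(1-t)\gamma _{2} \bigr) \Biggr) \bigl\vert T'' \bigl(t\gamma _{1}+(1-t)\gamma _{2} \bigr) \bigr\vert \,dt, \tag{2.6}\]

where \(\bar{s}=\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}s_{i}\).

Since \(|T''|\) is a convex function, (2.6) becomes

\[\Biggl\vert \frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}T(s_{i})-T(\bar{s}) \Biggr\vert \leq (\gamma _{2}-\gamma _{1}) \int _{0}^{1} \Biggl(\frac{1}{U_{n}}\sum_{i=1}^{n}u_{i}G \bigl(s_{i},t\gamma _{1}+(1-t)\gamma _{2} \bigr)-G \bigl(\bar{s},t\gamma _{1}+(1-t)\gamma _{2} \bigr) \Biggr) \bigl(t \bigl\vert T''(\gamma _{1}) \bigr\vert +(1-t) \bigl\vert T''(\gamma _{2}) \bigr\vert \bigr)\,dt. \tag{2.7}\]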

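Now by using the change of variable \(x=t\gamma _{1}+(1-t)\gamma _{2}\) for \(t\in [0,1]\) and evaluating the resulting integral with the help of (1.1), we obtain

\[\int _{0}^{1}tG \bigl(s_{i},t\gamma _{1}+(1-t)\gamma _{2} \bigr)\,dt=\frac{1}{(\gamma _{2}-\gamma _{1})^{2}} \int _{\gamma _{1}}^{\gamma _{2}}(\gamma _{2}-x)G(s_{i},x)\,dx=\frac{(s_{i}-\gamma _{1})(\gamma _{2}-s_{i})(s_{i}+\gamma _{1}-2\gamma _{2})}{6(\gamma _{2}-\gamma _{1})^{2}}. \tag{2.8}\]

Replacing \(s_{i}\) by \(\bar{s}\) in (2.8), we get

\[\int _{0}^{1}tG \bigl(\bar{s},t\gamma _{1}+(1-t)\gamma _{2} \bigr)\,dt=\frac{(\bar{s}-\gamma _{1})(\gamma _{2}-\bar{s})(\bar{s}+\gamma _{1}-2\gamma _{2})}{6(\gamma _{2}-\gamma _{1})^{2}}. \tag{2.9}\]

Also,

\[\int _{0}^{1}(1-t)G \bigl(s_{i},t\gamma _{1}+(1-t)\gamma _{2} \bigr)\,dt=\frac{1}{(\gamma _{2}-\gamma _{1})^{2}} \int _{\gamma _{1}}^{\gamma _{2}}(x-\gamma _{1})G(s_{i},x)\,dx=\frac{(s_{i}-\gamma _{1})(s_{i}-\gamma _{2})(s_{i}+\gamma _{2}-2\gamma _{1})}{6(\gamma _{2}-\gamma _{1})^{2}}. \tag{2.10}\]

Replacing \(s_{i}\) by \(\bar{s}\) in (2.10), we get

\[\int _{0}^{1}(1-t)G \bigl(\bar{s},t\gamma _{1}+(1-t)\gamma _{2} \bigr)\,dt=\frac{(\bar{s}-\gamma _{1})(\bar{s}-\gamma _{2})(\bar{s}+\gamma _{2}-2\gamma _{1})}{6(\gamma _{2}-\gamma _{1})^{2}}. \tag{2.11}\]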

Substituting the values from (2.8)–(2.11) in (2.7) and simplifying, we get the required result (2.1). □
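The bound of Theorem 2.1 is easy to test numerically. The sketch below assumes that the right-hand side of (2.1) has the form obtained by replacing \(|T''|\) with its chord on \([\gamma _{1},\gamma _{2}]\), namely a combination of the second- and third-moment Jensen gaps of the points \(s_{i}\); the function names `jensen_gap` and `gap_bound` and the sample data are hypothetical and serve only as an illustration.

```python
def jensen_gap(T, s, u):
    """Weighted Jensen gap: (1/U) * sum(u_i * T(s_i)) - T(s_bar)."""
    U = sum(u)
    s_bar = sum(ui * si for ui, si in zip(u, s)) / U
    return sum(ui * T(si) for ui, si in zip(u, s)) / U - T(s_bar)


def gap_bound(abs_d2_g1, abs_d2_g2, g1, g2, s, u):
    """Assumed right-hand side of (2.1); abs_d2_g1 = |T''(g1)|, abs_d2_g2 = |T''(g2)|."""
    U = sum(u)
    s_bar = sum(ui * si for ui, si in zip(u, s)) / U
    m2 = sum(ui * si**2 for ui, si in zip(u, s)) / U - s_bar**2   # gap of x^2
    m3 = sum(ui * si**3 for ui, si in zip(u, s)) / U - s_bar**3   # gap of x^3
    return ((abs_d2_g2 - abs_d2_g1) / (6 * (g2 - g1)) * m3
            + (g2 * abs_d2_g1 - g1 * abs_d2_g2) / (2 * (g2 - g1)) * m2)


# Example: T(x) = x^4 on [1, 3]; |T''(x)| = 12 x^2 is convex on this interval.
g1, g2 = 1.0, 3.0
s = [1.0, 1.5, 2.0, 3.0]
u = [0.1, 0.4, 0.3, 0.2]
gap = jensen_gap(lambda x: x**4, s, u)
bound = gap_bound(12 * g1**2, 12 * g2**2, g1, g2, s, u)
print(gap, bound)
```

For this data the gap is about 10.09 and the bound about 12.11, so the estimate holds with moderate slack.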

Remark 2.2

If we use the Green functions \(G_{1}\)–\(G_{4}\) as given in [29] instead of G in Theorem 2.1, we obtain the same result (2.1).

As an application of the above result, we derive a converse of the Hölder inequality in the following proposition.

Proposition 2.3

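Let \(q>1\), \(p\in \mathbb{R}^{+}-\{(2,3)\cup (0,1]\}\) such that \(\frac{1}{q}+\frac{1}{p}=1\). Also, let \([\gamma _{1},\gamma _{2}]\) be a positive interval and \((a_{1},\ldots ,a_{n})\), \((b_{1},\ldots ,b_{n})\) be two positive n-tuples such that \(\frac{\sum_{i=1}^{n}a_{i}b_{i}}{\sum_{i=1}^{n}b^{q}_{i}}\), \(a_{i}b_{i}^{- \frac{q}{p}}\in [\gamma _{1},\gamma _{2}]\) for \(i=1,\ldots ,n\). Then

\[\begin{aligned} & \Biggl(\sum_{i=1}^{n}a_{i}^{p} \Biggr)^{\frac{1}{p}} \Biggl(\sum_{i=1}^{n}b_{i}^{q} \Biggr)^{\frac{1}{q}}-\sum_{i=1}^{n}a_{i}b_{i} \\ &\quad \leq \Biggl[\frac{p(p-1)(\gamma _{2}^{p-2}-\gamma _{1}^{p-2})}{6(\gamma _{2}-\gamma _{1})} \Biggl(\frac{\sum_{i=1}^{n}a_{i}^{3}b_{i}^{q-\frac{3q}{p}}}{\sum_{i=1}^{n}b_{i}^{q}}- \Biggl(\frac{\sum_{i=1}^{n}a_{i}b_{i}}{\sum_{i=1}^{n}b_{i}^{q}} \Biggr)^{3} \Biggr) \\ &\qquad {}+\frac{p(p-1)(\gamma _{2}\gamma _{1}^{p-2}-\gamma _{1}\gamma _{2}^{p-2})}{2(\gamma _{2}-\gamma _{1})} \Biggl(\frac{\sum_{i=1}^{n}a_{i}^{2}b_{i}^{q-\frac{2q}{p}}}{\sum_{i=1}^{n}b_{i}^{q}}- \Biggl(\frac{\sum_{i=1}^{n}a_{i}b_{i}}{\sum_{i=1}^{n}b_{i}^{q}} \Biggr)^{2} \Biggr) \Biggr]^{\frac{1}{p}}\sum_{i=1}^{n}b_{i}^{q}. \end{aligned} \tag{2.12}\]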

Proof

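Let \(T(x)=x^{p}\), \(x\in [\gamma _{1},\gamma _{2}]\), then \(T''(x)=p(p-1)x^{p-2}>0\) and \(|T''|''(x)=p(p-1)(p-2)(p-3)x^{p-4}\geq 0\). This shows that T and \(|T''|\) are convex functions, therefore, using (2.1) for \(T(x)=x^{p}\), \(u_{i}=b_{i}^{q}\) and \(s_{i}=a_{i}b_{i}^{-\frac{q}{p}}\), and noting that \(u_{i}s_{i}=a_{i}b_{i}\) and \(u_{i}s_{i}^{p}=a_{i}^{p}\), we derive

\[\begin{aligned} \frac{\sum_{i=1}^{n}a_{i}^{p}}{\sum_{i=1}^{n}b_{i}^{q}}- \Biggl(\frac{\sum_{i=1}^{n}a_{i}b_{i}}{\sum_{i=1}^{n}b_{i}^{q}} \Biggr)^{p} \leq {}&\frac{p(p-1)(\gamma _{2}^{p-2}-\gamma _{1}^{p-2})}{6(\gamma _{2}-\gamma _{1})} \Biggl(\frac{\sum_{i=1}^{n}a_{i}^{3}b_{i}^{q-\frac{3q}{p}}}{\sum_{i=1}^{n}b_{i}^{q}}- \Biggl(\frac{\sum_{i=1}^{n}a_{i}b_{i}}{\sum_{i=1}^{n}b_{i}^{q}} \Biggr)^{3} \Biggr) \\ &{}+\frac{p(p-1)(\gamma _{2}\gamma _{1}^{p-2}-\gamma _{1}\gamma _{2}^{p-2})}{2(\gamma _{2}-\gamma _{1})} \Biggl(\frac{\sum_{i=1}^{n}a_{i}^{2}b_{i}^{q-\frac{2q}{p}}}{\sum_{i=1}^{n}b_{i}^{q}}- \Biggl(\frac{\sum_{i=1}^{n}a_{i}b_{i}}{\sum_{i=1}^{n}b_{i}^{q}} \Biggr)^{2} \Biggr). \end{aligned} \tag{2.13}\]

Multiplying both sides of (2.13) by \((\sum_{i=1}^{n}b_{i}^{q} )^{p}\) and then utilizing the inequality \(x^{\alpha }-y^{\alpha }\leq (x-y)^{\alpha }\), \(0\leq y\leq x\), \(\alpha \in [0,1]\) for \(x= (\sum_{i=1}^{n}a_{i}^{p} ) (\sum_{i=1}^{n}b_{i}^{q} )^{p-1}\), \(y= (\sum_{i=1}^{n}a_{i}b_{i} )^{p}\) and \(\alpha =\frac{1}{p}\), so that \(x^{\alpha }= (\sum_{i=1}^{n}a_{i}^{p} )^{\frac{1}{p}} (\sum_{i=1}^{n}b_{i}^{q} )^{\frac{1}{q}}\) and \(y^{\alpha }=\sum_{i=1}^{n}a_{i}b_{i}\), we obtain the required inequality. □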
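A numerical illustration of this converse may help. The sketch below assumes the form of the estimate that results from inserting \(T(x)=x^{p}\), \(u_{i}=b_{i}^{q}\) and \(s_{i}=a_{i}b_{i}^{-q/p}\) into the chord-type bound of Theorem 2.1 and then taking the p-th root; the function name `holder_converse` and the sample tuples are hypothetical.

```python
def holder_converse(a, b, p, g1, g2):
    """Return (lhs, rhs) of the assumed converse Hölder estimate.

    lhs = (sum a_i^p)^(1/p) * (sum b_i^q)^(1/q) - sum a_i b_i
    rhs = (sum b_i^q) * bracket^(1/p), with bracket the bound on the Jensen gap.
    """
    q = p / (p - 1.0)                              # conjugate exponent
    B = sum(bi**q for bi in b)                     # sum of b_i^q
    ab = sum(ai * bi for ai, bi in zip(a, b))      # sum of a_i b_i
    s = [ai * bi ** (-q / p) for ai, bi in zip(a, b)]
    u = [bi**q / B for bi in b]                    # normalised weights
    s_bar = ab / B
    m2 = sum(ui * si**2 for ui, si in zip(u, s)) - s_bar**2
    m3 = sum(ui * si**3 for ui, si in zip(u, s)) - s_bar**3

    def d2(x):
        # Second derivative of T(x) = x^p, positive for p > 1 and x > 0.
        return p * (p - 1.0) * x ** (p - 2.0)

    bracket = ((d2(g2) - d2(g1)) / (6 * (g2 - g1)) * m3
               + (g2 * d2(g1) - g1 * d2(g2)) / (2 * (g2 - g1)) * m2)
    lhs = sum(ai**p for ai in a) ** (1.0 / p) * B ** (1.0 / q) - ab
    rhs = B * bracket ** (1.0 / p)
    return lhs, rhs


# Example with p = 4 (so q = 4/3); all s_i = a_i * b_i^(-1/3) lie in [0.7, 2.7].
a = [1.0, 2.0, 3.0]
b = [2.0, 1.0, 1.5]
lhs, rhs = holder_converse(a, b, p=4.0, g1=0.7, g2=2.7)
print(lhs, rhs)
```

The left-hand side is the nonnegative Hölder deficit, and the printed values show how far it stays below the estimate for this particular choice of data.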

3 Applications in information theory

Definition 3.1

(Csiszár f-divergence)

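Let \([\gamma _{1},\gamma _{2}]\subset \mathbb{R}\) and \(f:[\gamma _{1},\gamma _{2}]\rightarrow \mathbb{R}\) be a function. Then, for \(\mathbf{r}=(r_{1},\ldots ,r_{n})\in \mathbb{R}^{n}\) and \(\mathbf{w}=(w_{1},\ldots ,w_{n})\in \mathbb{R}^{n}_{+}\) such that \(\frac{r_{i}}{w_{i}}\in [\gamma _{1},\gamma _{2}]\) (\(i=1,\ldots ,n\)), the Csiszár f-divergence functional is defined as [17, 25]

\[D_{f}(\mathbf{r},\mathbf{w})=\sum_{i=1}^{n}w_{i}f \biggl(\frac{r_{i}}{w_{i}} \biggr).\]

Theorem 3.2

Let \(f\in C^{2}[\gamma _{1},\gamma _{2}]\) be a function such that \(|f''|\) is convex and \(\mathbf{r}=(r_{1},\ldots ,r_{n})\in \mathbb{R}^{n}\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\in \mathbb{R}^{n}_{+}\) such that \(\frac{\sum_{i=1}^{n}r_{i}}{\sum_{i=1}^{n}w_{i}}, \frac{r_{i}}{w_{i}}\in [\gamma _{1},\gamma _{2}]\) for \(i=1,\ldots ,n\). Then

\[\begin{aligned} \Biggl\vert \frac{1}{\sum_{i=1}^{n}w_{i}}D_{f}(\mathbf{r},\mathbf{w})-f \Biggl(\frac{\sum_{i=1}^{n}r_{i}}{\sum_{i=1}^{n}w_{i}} \Biggr) \Biggr\vert \leq {}&\frac{ \vert f''(\gamma _{2}) \vert - \vert f''(\gamma _{1}) \vert }{6(\gamma _{2}-\gamma _{1})} \Biggl(\frac{1}{\sum_{i=1}^{n}w_{i}}\sum_{i=1}^{n}\frac{r_{i}^{3}}{w_{i}^{2}}- \Biggl(\frac{\sum_{i=1}^{n}r_{i}}{\sum_{i=1}^{n}w_{i}} \Biggr)^{3} \Biggr) \\ &{}+\frac{\gamma _{2} \vert f''(\gamma _{1}) \vert -\gamma _{1} \vert f''(\gamma _{2}) \vert }{2(\gamma _{2}-\gamma _{1})} \Biggl(\frac{1}{\sum_{i=1}^{n}w_{i}}\sum_{i=1}^{n}\frac{r_{i}^{2}}{w_{i}}- \Biggl(\frac{\sum_{i=1}^{n}r_{i}}{\sum_{i=1}^{n}w_{i}} \Biggr)^{2} \Biggr). \end{aligned} \tag{3.1}\]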

Proof

The result (3.1) can easily be deduced from (2.1) by choosing \(T=f\), \(s_{i}=\frac{r_{i}}{w_{i}}\), \(u_{i}= \frac{w_{i}}{\sum_{i=1}^{n}w_{i}}\). □
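The substitution in this proof can be sketched in code. The example assumes that the Csiszár functional is \(\sum_{i}w_{i}f(r_{i}/w_{i})\) and that the right-hand side of (3.1) is the chord-type bound of Theorem 2.1 evaluated at \(s_{i}=r_{i}/w_{i}\); the function names `csiszar` and `csiszar_bound` and the two sample distributions are hypothetical. The choice \(f(x)=x\log x\), for which \(f(1)=0\), turns the left-hand side into the Kullback–Leibler divergence.

```python
import math


def csiszar(f, r, w):
    """Csiszar f-divergence functional: sum of w_i * f(r_i / w_i)."""
    return sum(wi * f(ri / wi) for ri, wi in zip(r, w))


def csiszar_bound(abs_d2f, g1, g2, r, w):
    """Assumed right-hand side of (3.1); abs_d2f(x) returns |f''(x)|."""
    W, R = sum(w), sum(r)
    m2 = sum(ri**2 / wi for ri, wi in zip(r, w)) / W - (R / W) ** 2
    m3 = sum(ri**3 / wi**2 for ri, wi in zip(r, w)) / W - (R / W) ** 3
    a1, a2 = abs_d2f(g1), abs_d2f(g2)
    return ((a2 - a1) / (6 * (g2 - g1)) * m3
            + (g2 * a1 - g1 * a2) / (2 * (g2 - g1)) * m2)


# Two probability distributions whose ratios r_i / w_i lie in [0.5, 1.5].
r = [0.2, 0.5, 0.3]
w = [0.3, 0.4, 0.3]
g1, g2 = 0.5, 1.5

# f(x) = x log x gives the Kullback-Leibler divergence, and |f''(x)| = 1 / x.
kl = csiszar(lambda x: x * math.log(x), r, w)
kl_bound = csiszar_bound(lambda x: 1.0 / x, g1, g2, r, w)
print(kl, kl_bound)
```

For these distributions the divergence is roughly 0.030 and the bound roughly 0.040. Other divergences are obtained by changing only `f` and `abs_d2f`.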

Definition 3.3

(Rényi divergence)

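For two positive probability distributions \(\mathbf{r}=(r_{1},\ldots , r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) and a nonnegative real number μ such that \(\mu \neq 1\), the Rényi divergence is defined as [17, 25]

\[D_{re}(\mathbf{r},\mathbf{w})=\frac{1}{\mu -1}\log \Biggl(\sum_{i=1}^{n}r_{i}^{\mu }w_{i}^{1-\mu } \Biggr).\]

Corollary 3.4

Let \([\gamma _{1},\gamma _{2}]\subseteq \mathbb{R}^{+}\). Also let \(\mathbf{r}=(r_{1},\ldots ,r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) be positive probability distributions and \(\mu >1\) such that \(\sum_{i=1}^{n}w_{i} (\frac{r_{i}}{w_{i}} )^{\mu }, ( \frac{r_{i}}{w_{i}} )^{\mu -1}\in [\gamma _{1},\gamma _{2}]\) for \(i=1,\ldots ,n\). Then

\[\begin{aligned} D_{re}(\mathbf{r},\mathbf{w})-\sum_{i=1}^{n}r_{i}\log \frac{r_{i}}{w_{i}} \leq {}&\frac{\gamma _{1}^{2}+\gamma _{1}\gamma _{2}+\gamma _{2}^{2}}{2(\mu -1)\gamma _{1}^{2}\gamma _{2}^{2}} \Biggl(\sum_{i=1}^{n}r_{i} \biggl(\frac{r_{i}}{w_{i}} \biggr)^{2(\mu -1)}- \Biggl(\sum_{i=1}^{n}r_{i}^{\mu }w_{i}^{1-\mu } \Biggr)^{2} \Biggr) \\ &{}-\frac{\gamma _{1}+\gamma _{2}}{6(\mu -1)\gamma _{1}^{2}\gamma _{2}^{2}} \Biggl(\sum_{i=1}^{n}r_{i} \biggl(\frac{r_{i}}{w_{i}} \biggr)^{3(\mu -1)}- \Biggl(\sum_{i=1}^{n}r_{i}^{\mu }w_{i}^{1-\mu } \Biggr)^{3} \Biggr). \end{aligned} \tag{3.2}\]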

Proof

Let \(T(x)=-\frac{1}{\mu -1}\log x\), \(x\in [\gamma _{1},\gamma _{2}]\), then \(T''(x)=\frac{1}{(\mu -1)x^{2}}>0\) and \(|T''|''(x)=\frac{6}{(\mu -1)x^{4}}>0\). This shows that T and \(|T''|\) are convex functions, therefore using (2.1) for \(T(x)=-\frac{1}{\mu -1}\log x\), \(u_{i}=r_{i}\) and \(s_{i}= (\frac{r_{i}}{w_{i}} )^{\mu -1}\), we derive (3.2). □
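The choice of T, \(u_{i}\) and \(s_{i}\) in this proof can be followed step by step in code. The sketch assumes the usual definition \(\frac{1}{\mu -1}\log \sum_{i}r_{i}^{\mu }w_{i}^{1-\mu }\) of the Rényi divergence and the chord-type form of the bound (2.1); with this substitution the Jensen gap equals the Rényi divergence minus \(\sum_{i}r_{i}\log (r_{i}/w_{i})\). The function names and the sample distributions are hypothetical.

```python
import math


def renyi_divergence(r, w, mu):
    """Renyi divergence of order mu (mu != 1) between distributions r and w."""
    return math.log(sum(ri**mu * wi ** (1 - mu) for ri, wi in zip(r, w))) / (mu - 1)


def renyi_gap_bound(r, w, mu, g1, g2):
    """Assumed bound (2.1) for T(x) = -log(x)/(mu-1), u_i = r_i, s_i = (r_i/w_i)^(mu-1)."""
    s = [(ri / wi) ** (mu - 1) for ri, wi in zip(r, w)]
    s_bar = sum(ri * si for ri, si in zip(r, s))        # weights r_i sum to one
    m2 = sum(ri * si**2 for ri, si in zip(r, s)) - s_bar**2
    m3 = sum(ri * si**3 for ri, si in zip(r, s)) - s_bar**3
    a1 = 1.0 / ((mu - 1) * g1**2)                        # |T''(g1)|
    a2 = 1.0 / ((mu - 1) * g2**2)                        # |T''(g2)|
    return ((a2 - a1) / (6 * (g2 - g1)) * m3
            + (g2 * a1 - g1 * a2) / (2 * (g2 - g1)) * m2)


r = [0.2, 0.5, 0.3]
w = [0.3, 0.4, 0.3]
mu, g1, g2 = 2.0, 0.5, 1.5       # all s_i and their mean lie in [0.5, 1.5]

kl = sum(ri * math.log(ri / wi) for ri, wi in zip(r, w))
renyi_gap = renyi_divergence(r, w, mu) - kl
renyi_bound = renyi_gap_bound(r, w, mu, g1, g2)
print(renyi_gap, renyi_bound)
```

Here the gap is about 0.026 and the bound about 0.056, which agrees with the fact that the Rényi divergence of order \(\mu >1\) dominates the Kullback–Leibler divergence.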

Definition 3.5

(Shannon entropy)

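For a positive probability distribution \(\mathbf{w}=(w_{1},\ldots ,w_{n})\), the Shannon entropy (information divergence) is defined as [17, 25]

\[E_{s}(\mathbf{w})=-\sum_{i=1}^{n}w_{i}\log w_{i}.\]

Corollary 3.6

Let \([\gamma _{1},\gamma _{2}]\subseteq \mathbb{R}^{+}\) and \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) be a positive probability distribution such that \(\frac{1}{w_{i}}\in [\gamma _{1},\gamma _{2}]\) for \(i=1,\ldots ,n\). Then

\[\log n-E_{s}(\mathbf{w})\leq \frac{\gamma _{1}^{2}+\gamma _{1}\gamma _{2}+\gamma _{2}^{2}}{2\gamma _{1}^{2}\gamma _{2}^{2}} \Biggl(\sum_{i=1}^{n}\frac{1}{w_{i}}-n^{2} \Biggr)-\frac{\gamma _{1}+\gamma _{2}}{6\gamma _{1}^{2}\gamma _{2}^{2}} \Biggl(\sum_{i=1}^{n}\frac{1}{w_{i}^{2}}-n^{3} \Biggr). \tag{3.3}\]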

Proof

Let \(f(x)=-\log x\), \(x\in [\gamma _{1},\gamma _{2}]\), then \(f''(x)=\frac{1}{x^{2}}>0\) and \(|f''|''(x)=\frac{6}{x^{4}}>0\). This shows that f and \(|f''|\) are convex functions, therefore using (3.1) for \(f(x)=-\log x\) and \((r_{1},\ldots ,r_{n})=(1,\ldots ,1)\), we get (3.3). □

Definition 3.7

(Kullback–Leibler divergence)

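For two positive probability distributions \(\mathbf{r}=(r_{1},\ldots ,r_{n})\) and \(\mathbf{w}=(w_{1},\ldots ,w_{n})\), the Kullback–Leibler divergence is defined as [17, 25]

\[D_{kl}(\mathbf{r},\mathbf{w})=\sum_{i=1}^{n}r_{i}\log \frac{r_{i}}{w_{i}}.\]

Corollary 3.8

Let \([\gamma _{1},\gamma _{2}]\subseteq \mathbb{R}^{+}\) and \(\mathbf{r}=(r_{1},\ldots ,r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) be positive probability distributions such that \(\frac{r_{i}}{w_{i}}\in [\gamma _{1},\gamma _{2}]\) for \(i=1,\ldots ,n\). Then

\[D_{kl}(\mathbf{r},\mathbf{w})\leq \frac{\gamma _{1}+\gamma _{2}}{2\gamma _{1}\gamma _{2}} \Biggl(\sum_{i=1}^{n}\frac{r_{i}^{2}}{w_{i}}-1 \Biggr)-\frac{1}{6\gamma _{1}\gamma _{2}} \Biggl(\sum_{i=1}^{n}\frac{r_{i}^{3}}{w_{i}^{2}}-1 \Biggr). \tag{3.4}\]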

Proof

Let \(f(x)=x\log x\), \(x\in [\gamma _{1},\gamma _{2}]\), then \(f''(x)=\frac{1}{x}>0\) and \(|f''|''(x)=\frac{2}{x^{3}}>0\). This shows that f and \(|f''|\) are convex functions, therefore using (3.1) for \(f(x)=x\log x\), we get (3.4). □

Definition 3.9

(\(\chi ^{2}\)-divergence)

Let \(\mathbf{r}=(r_{1},\ldots ,r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) be positive probability distributions. Then the \(\chi ^{2}\)-divergence is defined as [25]

\[D_{\chi ^{2}}(\mathbf{r},\mathbf{w})=\sum_{i=1}^{n}\frac{(r_{i}-w_{i})^{2}}{w_{i}}.\]

Corollary 3.10

If \([\gamma _{1},\gamma _{2}]\subseteq \mathbb{R}^{+}\) and \(\mathbf{r}=(r_{1},\ldots ,r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) are two positive probability distributions such that \(\frac{r_{i}}{w_{i}}\in [\gamma _{1},\gamma _{2}]\) for \(i=1,\ldots ,n\), then

\[D_{\chi ^{2}}(\mathbf{r},\mathbf{w})\leq \sum_{i=1}^{n}\frac{r_{i}^{2}}{w_{i}}-1. \tag{3.5}\]

Proof

Let \(f(x)=(x-1)^{2}\), \(x\in [\gamma _{1},\gamma _{2}]\), then \(f''(x)=2>0\) and \(|f''|''(x)=0\). This shows that f and \(|f''|\) are convex functions, therefore using (3.1) for \(f(x)=(x-1)^{2}\), we obtain (3.5). □

Definition 3.11

(Bhattacharyya coefficient)

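The Bhattacharyya coefficient for two positive probability distributions \(\mathbf{r}=(r_{1},\ldots ,r_{n})\) and \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) is defined by [25]

\[C_{b}(\mathbf{r},\mathbf{w})=\sum_{i=1}^{n}\sqrt{r_{i}w_{i}}.\]

The Bhattacharyya distance is given by \(D_{b}(\mathbf{r},\mathbf{w})=-\log C_{b}(\mathbf{r},\mathbf{w})\).

Corollary 3.12

Let \([\gamma _{1},\gamma _{2}]\subseteq \mathbb{R}^{+}\) and \(\mathbf{r}=(r_{1},\ldots ,r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) be two positive probability distributions such that \(\frac{r_{i}}{w_{i}}\in [\gamma _{1},\gamma _{2}]\) for \(i=1,\ldots ,n\). Then

\[1-C_{b}(\mathbf{r},\mathbf{w})\leq \frac{\gamma _{2}^{-\frac{3}{2}}-\gamma _{1}^{-\frac{3}{2}}}{24(\gamma _{2}-\gamma _{1})} \Biggl(\sum_{i=1}^{n}\frac{r_{i}^{3}}{w_{i}^{2}}-1 \Biggr)+\frac{\gamma _{2}\gamma _{1}^{-\frac{3}{2}}-\gamma _{1}\gamma _{2}^{-\frac{3}{2}}}{8(\gamma _{2}-\gamma _{1})} \Biggl(\sum_{i=1}^{n}\frac{r_{i}^{2}}{w_{i}}-1 \Biggr). \tag{3.6}\]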

Proof

Let \(f(x)=-\sqrt{x}\), \(x\in [\gamma _{1},\gamma _{2}]\), then \(f''(x)=\frac{1}{4x^{\frac{3}{2}}}>0\) and \(|f''|''(x)=\frac{15}{16x^{\frac{7}{2}}}>0\). This shows that f and \(|f''|\) are convex functions, therefore using (3.1) for \(f(x)=-\sqrt{x}\), we obtain (3.6). □

Definition 3.13

(Hellinger distance)

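For two positive probability distributions \(\mathbf{r}=(r_{1},\ldots , r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\), the Hellinger distance is defined as [25]

\[D_{h}^{2}(\mathbf{r},\mathbf{w})=\frac{1}{2}\sum_{i=1}^{n} (\sqrt{r_{i}}-\sqrt{w_{i}} )^{2}.\]

Corollary 3.14

Let \([\gamma _{1},\gamma _{2}]\subseteq \mathbb{R}^{+}\) and \(\mathbf{r}=(r_{1},\ldots ,r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) be positive probability distributions such that \(\frac{r_{i}}{w_{i}}\in [\gamma _{1},\gamma _{2}]\) for \(i=1,\ldots ,n\). Then

\[D_{h}^{2}(\mathbf{r},\mathbf{w})\leq \frac{\gamma _{2}^{-\frac{3}{2}}-\gamma _{1}^{-\frac{3}{2}}}{24(\gamma _{2}-\gamma _{1})} \Biggl(\sum_{i=1}^{n}\frac{r_{i}^{3}}{w_{i}^{2}}-1 \Biggr)+\frac{\gamma _{2}\gamma _{1}^{-\frac{3}{2}}-\gamma _{1}\gamma _{2}^{-\frac{3}{2}}}{8(\gamma _{2}-\gamma _{1})} \Biggl(\sum_{i=1}^{n}\frac{r_{i}^{2}}{w_{i}}-1 \Biggr). \tag{3.7}\]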

Proof

Let \(f(x)=\frac{1}{2}(1-\sqrt{x})^{2}\), \(x\in [\gamma _{1},\gamma _{2}]\), then \(f''(x)=\frac{1}{4x^{\frac{3}{2}}}>0\) and \(|f''|''(x)=\frac{15}{16x^{\frac{7}{2}}}>0\). This shows that f and \(|f''|\) are convex functions, therefore using (3.1) for \(f(x)=\frac{1}{2}(1-\sqrt{x})^{2}\), we deduce (3.7). □

Definition 3.15

(Triangular discrimination)

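For two positive probability distributions \(\mathbf{r}=(r_{1},\ldots ,r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\), the triangular discrimination is defined as [25]

\[D_{\Delta }(\mathbf{r},\mathbf{w})=\sum_{i=1}^{n}\frac{(r_{i}-w_{i})^{2}}{r_{i}+w_{i}}.\]

Corollary 3.16

Let \([\gamma _{1},\gamma _{2}]\subseteq \mathbb{R}^{+}\) and \(\mathbf{r}=(r_{1},\ldots ,r_{n})\), \(\mathbf{w}=(w_{1},\ldots ,w_{n})\) be positive probability distributions such that \(\frac{r_{i}}{w_{i}}\in [\gamma _{1},\gamma _{2}]\) for \(i=1,\ldots ,n\). Then

\[D_{\Delta }(\mathbf{r},\mathbf{w})\leq \frac{4}{3(\gamma _{2}-\gamma _{1})} \biggl(\frac{1}{(\gamma _{2}+1)^{3}}-\frac{1}{(\gamma _{1}+1)^{3}} \biggr) \Biggl(\sum_{i=1}^{n}\frac{r_{i}^{3}}{w_{i}^{2}}-1 \Biggr)+\frac{4}{\gamma _{2}-\gamma _{1}} \biggl(\frac{\gamma _{2}}{(\gamma _{1}+1)^{3}}-\frac{\gamma _{1}}{(\gamma _{2}+1)^{3}} \biggr) \Biggl(\sum_{i=1}^{n}\frac{r_{i}^{2}}{w_{i}}-1 \Biggr). \tag{3.8}\]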

Proof

Let \(f(x)=\frac{(x-1)^{2}}{(x+1)}\), \(x\in [\gamma _{1},\gamma _{2}]\), then \(f''(x)=\frac{8}{(x+1)^{3}}>0\) and \(|f''|''(x)=\frac{96}{(x+1)^{5}}>0\). This shows that f and \(|f''|\) are convex functions, therefore using (3.1) for \(f(x)=\frac{(x-1)^{2}}{(x+1)}\), we get (3.8). □

4 Conclusion

Interest in applying the notion of convexity to various fields of science has grown over the last few decades. Convex functions have useful properties related to differentiability, monotonicity and continuity, which help in their applications. The Jensen inequality generalizes and improves the notion of classical convexity. This inequality, together with its extensions, improvements, refinements and converses and the bounds for its gap, resolves some difficulties in the modeling of physical phenomena. For this purpose, in this paper we have derived a new bound for the Jensen gap for functions whose second derivative is convex in absolute value. Based on this bound, we have also deduced a new converse of the Hölder inequality. Finally, as applications of the main result in information theory, we have obtained new bounds for the Csiszár, Rényi, \(\chi ^{2}\) and Kullback–Leibler divergences, among others. The idea and technique used in this paper may be extended to other inequalities to reduce the number of difficulties in the applied literature of mathematical inequalities.

References

1. Adil Khan, M., Al-sahwi, Z.M., Chu, Y.-M.: New estimations for Shannon and Zipf–Mandelbrot entropies. Entropy 20(8), Article ID 608 (2018)

2. Adil Khan, M., Ali Khan, G., Ali, T., Kilicman, A.: On the refinement of Jensen’s inequality. Appl. Math. Comput. 262, 128–135 (2015)

3. Adil Khan, M., Hanif, M., Hameed Khan, Z.A., Ahmad, K., Chu, Y.-M.: Association of Jensen’s inequality for s-convex function with Csiszár divergence. J. Inequal. Appl. 2019, Article ID 162 (2019)

4. Adil Khan, M., Khalid, S., Pečarić, J.: Improvement of Jensen’s inequality in terms of Gâteaux derivatives for convex functions in linear spaces with applications. Kyungpook Math. J. 52, 495–511 (2012)

5. Adil Khan, M., Pečarić, Ð., Pečarić, J.: Bounds for Shannon and Zipf–Mandelbrot entropies. Math. Methods Appl. Sci. 40(18), 7316–7322 (2017)

6. Adil Khan, M., Pečarić, Ð., Pečarić, J.: Bounds for Csiszár divergence and hybrid Zipf–Mandelbrot entropy. Math. Methods Appl. Sci. 42(18), 7411–7424 (2019)

7. Adil Khan, M., Pečarić, J., Chu, Y.-M.: Refinements of Jensen’s and McShane’s inequalities with applications. AIMS Math. 5(5), 4931–4945 (2020)

8. Agarwal, R., Yadav, M.P., Baleanu, D., Purohit, S.D.: Existence and uniqueness of miscible flow equation through porous media with a nonsingular fractional derivative. AIMS Math. 5(2), 1062–1073 (2020)

9. Bainov, D., Simeonov, P.: Integral Inequalities and Applications. Kluwer Academic, Boston (1992)

10. Budimir, I., Dragomir, S.S., Pečarić, J.: Further reverse results for Jensen’s discrete inequality and applications in information theory. J. Inequal. Pure Appl. Math. 2(1), Article 5 (2001)

11. Cloud, M.J., Drachman, B.C., Lebedev, L.P.: Inequalities with Applications to Engineering. Springer, Heidelberg (2014)

12. Dragomir, S.S.: A converse of the Jensen inequality for convex mappings of several variables and applications. Acta Math. Vietnam. 29(1), 77–88 (2004)

13. Dragomir, S.S.: A new refinement of Jensen’s inequality in linear spaces with applications. Math. Comput. Model. 52, 1497–1505 (2010)

14. Dragomir, S.S.: A refinement of Jensen’s inequality with applications for f-divergence measures. Taiwan. J. Math. 14(1), 153–164 (2010)

15. Dragomir, S.S., Goh, C.J.: A counterpart of Jensen’s discrete inequality for differentiable convex mappings and applications in information theory. Math. Comput. Model. 24(2), 1–11 (1996)

16. Gujrati, P.D.: Jensen inequality and the second law. Phys. Lett. A (2020). https://doi.org/10.1016/j.physleta.2020.126460

17. Horváth, L., Pečarić, Ð., Pečarić, J.: Estimations of f- and Rényi divergences by using a cyclic refinement of the Jensen’s inequality. Bull. Malays. Math. Sci. Soc. 42(3), 933–946 (2019)

18. Khan, K.A., Niaz, T., Pečarić, Ð., Pečarić, J.: Refinement of Jensen’s inequality and estimation of f- and Rényi divergence via Montgomery identity. J. Inequal. Appl. 2018, Article ID 318 (2018)

19. Khan, S., Adil Khan, M., Chu, Y.-M.: Converses of the Jensen inequality derived from the Green functions with applications in information theory. Math. Methods Appl. Sci. 43, 2577–2587 (2020)

20. Khan, S., Adil Khan, M., Chu, Y.-M.: New converses of Jensen inequality via Green functions with applications. Rev. R. Acad. Cienc. Exactas Fís. Nat., Ser. A Mat. 114, 114 (2020). https://doi.org/10.1007/s13398-020-00843-1

21. Kian, M.: Operator Jensen inequality for superquadratic functions. Linear Algebra Appl. 456, 82–87 (2014)

22. Kim, J.-H.: Further improvement of Jensen inequality and application to stability of time-delayed systems. Automatica 64, 121–125 (2016)

23. Liao, J.G., Berg, A.: Sharpening Jensen’s inequality. Am. Stat. 73(3), 278–281 (2019)

24. Lin, Q.: Jensen inequality for superlinear expectations. Stat. Probab. Lett. 151, 79–83 (2019)

25. Lovričević, N., Pečarić, Ð., Pečarić, J.: Zipf–Mandelbrot law, f-divergences and the Jensen-type interpolating inequalities. J. Inequal. Appl. 2018, Article ID 36 (2018)

26. Mishra, A.M., Baleanu, D., Tchier, F., Purohit, S.D.: Certain results comprising the weighted Chebyshev functional using pathway fractional integrals. Mathematics 7(10), Article ID 896 (2019)

27. Mishra, A.M., Kumar, D., Purohit, S.D.: Unified integral inequalities comprising pathway operators. AIMS Math. 5(1), 399–407 (2020)

28. Niaz, T., Khan, K.A., Pečarić, J.: On refinement of Jensen’s inequality for 3-convex function at a point. Turk. J. Ineq. 4(1), 70–80 (2020)

29. Pečarić, Ð., Pečarić, J., Rodić, M.: About the sharpness of the Jensen inequality. J. Inequal. Appl. 2018, Article ID 337 (2018)

30. Purohit, S.D., Jolly, N., Bansal, M.K., Singh, J., Kumar, D.: Chebyshev type inequalities involving the fractional integral operator containing multi-index Mittag-Leffler function in the kernel. Appl. Appl. Math. Spec. Issue 6, 29–38 (2020)

31. Rashid, S., Noor, M.A., Noor, K.I., Safdar, F., Chu, Y.-M.: Hermite–Hadamard type inequalities for the class of convex functions on time scale. Mathematics 7, Article ID 956 (2019)

32. Song, Y.-Q., Adil Khan, M., Zaheer Ullah, S., Chu, Y.-M.: Integral inequalities involving strongly convex functions. J. Funct. Spaces 2018, Article ID 6595921 (2018)

33. Wang, M.-K., Zhang, W., Chu, Y.-M.: Monotonicity, convexity and inequalities involving the generalized elliptic integrals. Acta Math. Sci. 39B(5), 1440–1450 (2019)

Acknowledgements

The authors would like to express their sincere thanks to the editor and the anonymous reviewers for their helpful comments and suggestions.

Availability of data and materials

Not applicable.

Funding

This research was supported by the Natural Science Foundation of China (11701176, 61673169, 11301127, 11626101, 11601485), and the Science and Technology Research Program of Zhejiang Educational Committee (Y201635325).

Author information

Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Khan, S., Adil Khan, M., Butt, S.I. et al. A new bound for the Jensen gap pertaining twice differentiable functions with applications. Adv Differ Equ 2020, 333 (2020). https://doi.org/10.1186/s13662-020-02794-8

DOI: https://doi.org/10.1186/s13662-020-02794-8