Abstract

In this paper we study a numerical method for a time-fractional Black–Scholes equation, which is used for option pricing. The solution of the fractional-order differential equation may be singular near certain domain boundaries, which causes numerical difficulties. In order to capture the singular behavior, a numerical method based on an adaptive moving mesh is developed. A finite difference method is used to discretize the time-fractional Black–Scholes equation, and an error analysis for the discretization scheme is derived. Then, an adaptive moving mesh based on an a priori error analysis is constructed by equidistributing a monitor function. Numerical experiments support these theoretical results.


1 Introduction

In this paper we consider the following time-fractional Black–Scholes (B–S) equation:

where V is the value of a European call option with strike price E and expiry date T, x is the asset price, r̄ is the risk-free interest rate, σ̄ is the volatility of the underlying asset, \(\frac{\partial _{R}^{\alpha }V}{\partial \tau ^{ \alpha }}\) is the right modified Riemann–Liouville derivative defined as

Here we assume that \(\bar{\sigma }^{2}(\tau )\geq \mu >0, \beta ^{*} \geq \bar{r}(\tau )\geq \beta >0\). We note that when the functions \(\bar{\sigma }(\tau )\) and \(\bar{r}(\tau )\) are constant, problem (1.1)–(1.3) reduces to the Wyss time-fractional Black–Scholes equation [26].

Several analytical and numerical methods have been proposed for option valuation under time-fractional B–S equations. Analytical methods for these equations are usually based on integral transforms [5, 12, 26], homotopy analysis [17], or wavelet-based hybrid methods [11]. However, the solutions obtained by analytical methods usually take the form of a convolution of special functions or an infinite series involving an integral, which makes them hard to evaluate. Therefore, efficient numerical methods are essential. Zhang et al. [27] and De Staelen and Hendy [7] developed implicit finite difference methods for pricing barrier options under Wyss's time-fractional B–S equation. Golbabai and Nikan [8] also proposed a numerical approach based on the moving least-squares method to approximate Wyss's time-fractional B–S equation for pricing barrier options. Chen et al. [4] described a new operator splitting method for pricing American options under Wyss's time-fractional B–S equation. Song and Wang [23] presented an implicit difference method for Jumarie's time-fractional B–S equation. Koleva and Vulkov [15] derived a weighted finite difference scheme for Jumarie's time-fractional B–S equation. Kalantari and Shahmorad [13] used a Grünwald–Letnikov scheme to solve Jumarie's time-fractional B–S equation for pricing American put options. However, these papers only consider the case in which the exact solutions of the B–S equations are sufficiently smooth.

As discussed in [24], the exact solutions of time-fractional B–S equations may exhibit a singularity. Based on a priori information about the exact solution, Cen et al. [2] presented an integral discretization scheme on an a priori graded mesh for the Wyss time-fractional B–S equation. When the coefficients of the time-fractional B–S equation depend on time τ, however, such a priori information about the exact solution is difficult to obtain. In this paper, an adaptive moving mesh method is developed to deal effectively with the possible singularity of the time-fractional B–S equation. A finite difference method is used to discretize the time-fractional Black–Scholes equation, and an error analysis for the discrete scheme is derived. Then, an adaptive moving mesh based on this a priori error analysis is constructed by equidistributing a positive monitor function that involves the second-order time derivative of the computed solution. Numerical experiments are provided to validate the theoretical results.

The remainder of the paper is organized as follows. Some theoretical results on the continuous time-fractional B–S equation are described in Sect. 2. The discretization scheme is derived in Sect. 3. An adaptive algorithm is established in Sect. 4. Finally, numerical experiments are presented in Sect. 5.

Notation. Throughout the paper, C denotes a generic positive constant that is independent of the mesh; C is not necessarily the same at each occurrence. To simplify the notation we set \(g_{i}^{j}=g(x_{i},t_{j})\) for any function g defined on the domain. We denote the (pointwise) maximum norm on \(\bar{\varOmega }\) by \(\Vert \cdot \Vert _{\bar{\varOmega }}\).

2 The continuous problem

By using the change of variables \(t=T-\tau, u(x,t)=V(x,T-t)\) and the relationship between the Riemann–Liouville derivative and the Caputo derivative, it is shown in [2, 4] that the model can be reformulated into

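where \(\sigma (t)=\bar{\sigma }(T-t), r(t)=\bar{r}(T-t)\), and \(\frac{\partial ^{\alpha }u}{\partial t^{\alpha }}\) is the Caputo derivative defined as

\[\frac{\partial ^{\alpha }u}{\partial t^{\alpha }}(x,t)=\frac{1}{\Gamma (1-\alpha )}\int _{0}^{t}\frac{\partial u(x,s)}{\partial s}(t-s)^{-\alpha }\,ds,\quad 0<\alpha <1.\]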

The infinite domain \(\mathbb{R}^{+}\times (0,T]\) is truncated to \(\varOmega =(0,X)\times (0,T]\) for the numerical computation. Based on the estimate of Wilmott et al. [25], we choose \(X=4E\) and the boundary condition \(u(X,t)=X-E e^{-rt}\) for the European call option. Normally, the error caused by this truncation is negligible. Therefore, in the remainder of this paper we consider the following time-fractional differential equation:

where

Let \(W^{1}_{t} ((0,T] )\) denote the space of functions \(w(t)\in C^{1} ( (0,T ] )\) such that \(w'\) is Lebesgue integrable on \((0,T ]\). The following result for the differential operator L on the function space \(C(\bar{\varOmega })\cap W _{t}^{1} ( (0,T ] )\cap C_{x}^{2} ((0,X) )\) can be proved in the same way as Theorem 3 of [21].

Lemma 2.1

(Maximum principle)

Let \(u(x,t)\in C(\bar{\varOmega }) \cap W_{t}^{1} ( (0,T ] )\cap C_{x}^{2} ((0,X) )\). If \(Lu(x,t)\geq 0\) for \((x,t)\in \varOmega \), with \(u(0,t)\geq 0\) and \(u(X,t) \geq 0\) for \(t\in (0,T]\), and \(u(x,0)\geq 0\) for \(x\in (0,X)\), then \(u(x,t)\geq 0\) for all \((x,t)\in \bar{\varOmega }\).

By applying this maximum principle, the following stability result can be obtained in the same way as Theorem 4 of [21].

Lemma 2.2

(Stability result)

The exact solution \(u(x,t)\) of problem (2.4)–(2.6) satisfies the following stability estimate:

It can further be seen from [1, 2, 10, 18, 22, 24] that the time derivatives of the exact solution may blow up at \(t=0\), which complicates the construction of the discretization scheme.

3 Discretization scheme

Let \(\varOmega ^{K}= \{ 0=t_{0}< t_{1}<\cdots <t_{K}=T \} \) and \(\varOmega ^{N}= \{ 0=x_{0}< x_{1}<\cdots <x_{N}=X \} \). An approximation to the time-fractional derivative on \(\varOmega ^{K}\) can be obtained by the quadrature formula,

where \(D_{t}^{-}u_{i}^{k}=\frac{u^{k}_{i}-u_{i}^{k-1}}{\triangle t _{k}}\) with \(\triangle t_{k}=t_{k}-t_{k-1}\).
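The quadrature formula itself is not reproduced in this text. Assuming it is the standard L1 formula on a nonuniform mesh, which replaces \(\partial u/\partial s\) on each \((t_{k-1},t_{k})\) by the backward quotient \(D_{t}^{-}u_{i}^{k}\) above and integrates the kernel \((t_{j}-s)^{-\alpha }\) exactly, it can be sketched in Python as follows (the function name `caputo_l1` is hypothetical):

```python
import math
from typing import Sequence


def caputo_l1(t: Sequence[float], u: Sequence[float], alpha: float) -> float:
    """L1 approximation of the Caputo derivative of order alpha (0 < alpha < 1)
    at the last node t[-1], from nodal values u[0..j] on a nonuniform mesh t[0..j].

    On each (t[k-1], t[k]) the derivative is replaced by the backward quotient
    D_t^- u^k = (u[k] - u[k-1]) / (t[k] - t[k-1]); the weakly singular kernel
    (t_j - s)^(-alpha) / Gamma(1 - alpha) is then integrated exactly.
    """
    j = len(t) - 1
    tj = t[j]
    total = 0.0
    for k in range(1, j + 1):
        backward_quotient = (u[k] - u[k - 1]) / (t[k] - t[k - 1])
        # (1 - alpha) times the integral of (t_j - s)^(-alpha) over (t[k-1], t[k])
        weight = (tj - t[k - 1]) ** (1.0 - alpha) - (tj - t[k]) ** (1.0 - alpha)
        total += backward_quotient * weight
    # 1 / ((1 - alpha) * Gamma(1 - alpha)) = 1 / Gamma(2 - alpha)
    return total / math.gamma(2.0 - alpha)


# Usage: u(t) = t on a mesh graded towards t = 0. The L1 formula is exact here,
# because the Caputo derivative of t is t^(1 - alpha) / Gamma(2 - alpha).
alpha = 0.5
K = 64
t = [(k / K) ** 2 for k in range(K + 1)]
approx = caputo_l1(t, t, alpha)
exact = 1.0 / math.gamma(2.0 - alpha)
```

For a smooth function such as \(t^{2}\) the L1 formula is no longer exact, but its error decreases as the mesh is refined.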

Since the Black–Scholes differential operator becomes convection-dominated when the volatility or the asset price is small, a piecewise uniform mesh \(\varOmega ^{N}\) is constructed as in [3] for the spatial discretization to ensure stability:

where

Then the mesh sizes \(h_{i}=x_{i}-x_{i-1}\) satisfy

On the piecewise uniform mesh \(\varOmega ^{N}\) we apply a central difference scheme to approximate the spatial derivatives.
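The difference operator (3.6) is not reproduced in this text. As an illustration, the standard central difference quotients on a nonuniform mesh with \(h_{i}=x_{i}-x_{i-1}\) can be sketched as follows; we assume, without confirmation from the original formulas, that these are the quotients used here (the helper name `central_quotients` and the sample mesh are hypothetical):

```python
from typing import Sequence, Tuple


def central_quotients(x: Sequence[float], u: Sequence[float], i: int) -> Tuple[float, float]:
    """Central difference approximations of u'(x_i) and u''(x_i) at an interior node
    of a possibly nonuniform mesh, with h_i = x_i - x_{i-1}, h_{i+1} = x_{i+1} - x_i."""
    h_left = x[i] - x[i - 1]
    h_right = x[i + 1] - x[i]
    width = h_left + h_right
    # first derivative: exact for linear u
    first = (u[i + 1] - u[i - 1]) / width
    # second derivative: exact for quadratic u
    second = 2.0 / width * ((u[i + 1] - u[i]) / h_right - (u[i] - u[i - 1]) / h_left)
    return first, second


# A hypothetical mesh that is uniform on each of two pieces (step 0.1, then 0.2).
x = [0.0, 0.1, 0.2, 0.3, 0.5, 0.7, 0.9]
quadratic = [3.0 * s * s - s + 2.0 for s in x]
for i in range(1, len(x) - 1):
    _, d2 = central_quotients(x, quadratic, i)
    print(f"x = {x[i]:.1f}: second difference = {d2:.6f}")  # 6 up to rounding
```

On a piecewise uniform mesh \(h_{i}=h_{i+1}\) everywhere except at the transition points, so the first-derivative quotient keeps its second-order consistency away from them; at a transition point it is only first-order consistent.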

Combining the temporal and spatial discretizations, we obtain the following fully discrete scheme on \(\varOmega ^{N\times K}\equiv \varOmega ^{N}\times \varOmega ^{K}\):

where \(U_{i}^{j}\) is the approximation of \(u(x_{i},t_{j})\),

and

Next we show that the matrix associated with the discrete operator \(L^{N,K}\) is an M-matrix, which implies that the scheme is stable in the maximum norm.

Lemma 3.1

(Discrete maximum principle)

The operator \(L^{N,K}\) defined by (3.6) on the mesh \(\varOmega ^{N\times K}\) satisfies a discrete maximum principle, i.e., if \(v^{j}_{i}\) is a mesh function that satisfies \(v_{0}^{j}\geq 0,\ v^{j}_{N}\geq 0\ (0\leq j\leq K)\), \(v_{i}^{0}\geq 0\ (0\leq i \leq N) \) and \(L^{N,K}v^{j}_{i}\geq 0 \ (1 \leq i< N,\ 0< j \leq K)\), then \(v^{j}_{i}\geq 0\) for all \(i,j\).

Proof

Let

and

By simple calculation we have

for \(2\leq i< N\). It is easy to show

and

where \(\xi _{k}\in (t_{k-1},t_{k})\). Hence the matrix associated with \(L^{N,K}\) is a strictly diagonally dominant L-matrix, and therefore an M-matrix. By applying the same argument as in [14, Lemma 3.1], we obtain the result of the lemma. □
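The sign pattern and diagonal dominance used in this proof can be checked numerically. Since (3.6) is not reproduced here, the sketch below assumes that \(L^{N,K}\) combines the L1 weight of \(U_{i}^{j}\), namely \(\triangle t_{j}^{-\alpha }/\Gamma (2-\alpha )\), with central differences for the operator \(-\frac{1}{2}\sigma ^{2}x^{2}\partial _{xx}-rx\partial _{x}+r\) with σ and r frozen at one time level; all function names and the two-piece mesh are hypothetical. It illustrates that on a uniform mesh an off-diagonal entry becomes positive near \(x=0\) when \(\sigma ^{2}\) is small compared with r, which is the convection-dominated situation that motivates a special spatial mesh.

```python
import math
from typing import List, Sequence, Tuple

# Assumed operator (not reproduced from (3.6)):
#   L1 weight * U_i^j - 0.5 sigma^2 x^2 (central u_xx) - r x (central u_x) + r U_i^j


def interior_rows(x: Sequence[float], sigma: float, r: float,
                  alpha: float, dt: float) -> List[Tuple[float, float, float]]:
    """(lower, diag, upper) coefficients of the assumed discrete operator at x[1..N-1]."""
    a = dt ** (-alpha) / math.gamma(2.0 - alpha)  # L1 weight of U_i^j
    rows = []
    for i in range(1, len(x) - 1):
        hl, hr = x[i] - x[i - 1], x[i + 1] - x[i]
        s = hl + hr
        diff = sigma ** 2 * x[i] ** 2  # twice the diffusion coefficient
        conv = r * x[i]                # convection coefficient
        lower = (-diff / hl + conv) / s
        upper = (-diff / hr - conv) / s
        diag = diff * (1.0 / hl + 1.0 / hr) / s + r + a
        rows.append((lower, diag, upper))
    return rows


def is_m_matrix(rows: Sequence[Tuple[float, float, float]]) -> bool:
    """Sufficient test: nonpositive off-diagonals and strict row diagonal dominance.
    The coupling of the first row to U_0 and of the last row to U_N is moved to the
    right-hand side, so those two entries are not part of the matrix."""
    n = len(rows)
    for m, (lower, diag, upper) in enumerate(rows):
        off = ([lower] if m > 0 else []) + ([upper] if m < n - 1 else [])
        if any(c > 0.0 for c in off) or diag <= sum(abs(c) for c in off):
            return False
    return True


def uniform_mesh(X: float, N: int) -> List[float]:
    return [X * i / N for i in range(N + 1)]


def two_piece_mesh(X: float, kappa: float, M: int) -> List[float]:
    """Hypothetical mesh: one cell [0, kappa], then M uniform cells on [kappa, X]."""
    h = (X - kappa) / M
    return [0.0] + [kappa + h * k for k in range(M + 1)]


# sigma = 0.1, r = 0.06 (the coefficients of Example 5.1), alpha = 0.5, dt = 0.01
print(is_m_matrix(interior_rows(uniform_mesh(4.0, 57), 0.1, 0.06, 0.5, 0.01)))       # False
print(is_m_matrix(interior_rows(two_piece_mesh(4.0, 0.5, 50), 0.1, 0.06, 0.5, 0.01)))  # True
```

Each row sum equals \(r+\triangle t_{j}^{-\alpha }/\Gamma (2-\alpha )>0\), so once the off-diagonal entries are nonpositive, strict diagonal dominance follows automatically.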

The next lemma gives us a useful formula for the truncation error.

Lemma 3.2

Let U be the solution of the difference scheme (3.3)–(3.5) and u be the exact solution of problem (2.4)–(2.6). Then we have the following truncation error estimates:

for \(1\leq i< N\) and \(1\leq j\leq K\), where C is a positive constant independent of the mesh.

Proof

It follows from (3.3) and (3.6) that

For \(k< j\) we use integration by parts as in [24] to obtain

where we have used the mean value theorem with \(\gamma _{1},\gamma _{2}, \gamma _{3}\in (t_{k-1},t_{k} )\). Hence, applying a Taylor formula with the integral form of the remainder we can obtain

for \(k< j\). Similarly, we have

where we also have used the mean value theorem with \(\gamma _{4},\gamma _{5}\in (t_{j-1},t_{j} )\). By applying Taylor’s formulas about \(x_{i}\) we also have

and

Combining (3.7) with (3.8)–(3.11) we have

This completes the proof. □

Based on the properties of European options [2, 3, 25], we assume that the solution u satisfies the following regularity conditions:

Then applying the maximum principle and the truncation error estimates we have the following bound.

Theorem 3.3

Let U be the solution of the difference scheme (3.3)–(3.5) and u be the exact solution of problem (2.4)–(2.6). Then, under the assumption (3.13), we have the following bound:

where C is a positive constant independent of the mesh.

4 Adaptive time meshes via equidistribution

Since the solution \(u(x,t)\) of the problem may exhibit a singularity at \(t=0\), one has to use adapted nonuniform time meshes that are fine inside the singular region and coarse outside it. To obtain such a mesh, we use the equidistribution principle, which has been applied to a wide range of practical problems (see, e.g., [6, 9, 16, 19, 20]). A mesh \(\varOmega ^{K}\) is said to be equidistributed if

\[\int _{t_{j-1}}^{t_{j}}\bar{M}(t)\,dt=\frac{1}{K}\int _{0}^{T}\bar{M}(t)\,dt,\quad j=1,\ldots ,K, \tag{4.1}\]

where \(\bar{M}(t)\) is called the monitor function. In accordance with the estimate (3.14) in Theorem 3.3, a piecewise constant function is chosen as the monitor function \(M(x_{i},t)\), i.e.,

where

Monitor functions of this type have been used in the literature; see, e.g., Das and Vigo-Aguiar [6], Gowrisankar and Natesan [9] and Kopteva et al. [16].

In order to solve the equidistribution problem (4.1), we construct the following iteration algorithm for the time discretization:

Step 1. Take the uniform mesh \(\varOmega ^{N,K,(0)}= \{ (x_{i},t_{j}^{(0)} ) \vert 0\leq i\leq N, 0\leq j\leq K \} \) as the initial mesh for the iteration and go to Step 2 with \(k=0\).

Step 2. Compute the discrete solution \(\{ U_{i}^{j,(k)} \} \) satisfying (3.3)–(3.5) with the help of the mesh \(\varOmega ^{N,K,(k)}= \{ (x_{i},t_{j}^{(k)} ) \vert 0\leq i\leq N, 0\leq j\leq K \} \). Set \(\triangle t_{j}^{(k)}=t_{j}^{(k)}-t_{j-1}^{(k)}\) for each j. Compute

and find \(i^{*}\) such that

where \(M_{i}^{p,(k)}\) is the value of the monitor function computed at the pth interior node of the current mesh. We set \(M_{i}^{0,(k)}=M _{i}^{1,(k)}\) and \(M_{i}^{K,(k)}=M_{i}^{K-1,(k)}\).

Step 3. Choose a constant \(C_{0}>1\). The stopping criterion for the iteration algorithm is

If it holds, go to Step 5; otherwise, continue with Step 4.

Step 4. Set \(Y_{j}^{(k)}=j\varPhi _{i^{*}}^{K,(k)}/K\). Determine \(t_{j}^{(k+1)}\) by evaluating at \(Y_{j}^{(k)}\) the piecewise linear interpolant through the points \((\varPhi _{i^{*}}^{j,(k)},t_{j}^{(k)} )\), \(j=0,\ldots ,K\). Then generate a new mesh

Set \(k=k+1\) and return to Step 2.

Step 5. Set \(\varOmega ^{N,K,*}=\varOmega ^{N,K,(k)}\) and \(\{ U_{i}^{j,*} \} = \{ U_{i}^{j,(k)} \} \), then stop.
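Steps 1 to 5 can be sketched for a single spatial row as follows. This is a minimal illustration under stated assumptions: the definition of \(\varPhi \) and the stopping inequality are not reproduced in this text, so we assume the cumulative sum \(\varPhi ^{j}=\sum _{p=1}^{j}M^{p}\triangle t_{p}\) of a piecewise constant monitor and a stopping test of the form \(\max _{j}M^{j}\triangle t_{j}\le C_{0}\varPhi ^{K}/K\). Instead of solving (3.3)–(3.5) in Step 2, the monitor is evaluated from a given function, here the hypothetical choice \(1+t^{\alpha -1}\), which mimics \(|\partial u/\partial t|\) for \(u=t^{\alpha }\); in the algorithm above it is recomputed from the discrete solution at every iteration, and the row \(i^{*}\) is used. All function names are hypothetical.

```python
from bisect import bisect_right
from typing import Callable, List, Sequence


def cumulative_monitor(t: Sequence[float], m: Sequence[float]) -> List[float]:
    """Phi[j] = sum_{p=1..j} m[p-1] * (t[p] - t[p-1]) for a piecewise constant monitor."""
    phi = [0.0]
    for p in range(1, len(t)):
        phi.append(phi[-1] + m[p - 1] * (t[p] - t[p - 1]))
    return phi


def equidistribute(t: Sequence[float], m: Sequence[float]) -> List[float]:
    """Step 4: place t_new[j] where the piecewise linear interpolant of
    (Phi[j], t[j]) reaches Y_j = j * Phi[K] / K. Requires m > 0."""
    K = len(t) - 1
    phi = cumulative_monitor(t, m)
    new_t = [t[0]]
    for j in range(1, K):
        y = j * phi[K] / K
        p = bisect_right(phi, y)  # phi[p-1] <= y < phi[p]
        theta = (y - phi[p - 1]) / (phi[p] - phi[p - 1])
        new_t.append(t[p - 1] + theta * (t[p] - t[p - 1]))
    new_t.append(t[K])
    return new_t


def nearly_equidistributed(t: Sequence[float], m: Sequence[float], c0: float) -> bool:
    """Assumed Step 3 test: max_j m_j * dt_j <= c0 * Phi[K] / K."""
    K = len(t) - 1
    phi = cumulative_monitor(t, m)
    return max(phi[p] - phi[p - 1] for p in range(1, K + 1)) <= c0 * phi[K] / K


def adapt_time_mesh(K: int, T: float, monitor: Callable[[float], float],
                    c0: float = 1.2, max_iter: int = 50) -> List[float]:
    t = [T * j / K for j in range(K + 1)]  # Step 1: uniform initial mesh
    for _ in range(max_iter):
        # Step 2 (simplified): monitor value at each interval midpoint
        m = [monitor(0.5 * (t[j - 1] + t[j])) for j in range(1, K + 1)]
        if nearly_equidistributed(t, m, c0):  # Step 3
            break                             # Step 5: accept the current mesh
        t = equidistribute(t, m)              # Step 4
    return t


alpha = 0.2
mesh = adapt_time_mesh(16, 1.0, lambda s: 1.0 + s ** (alpha - 1.0))
print([round(s, 4) for s in mesh[:4]])
```

Because the monitor is positive, \(\varPhi \) is strictly increasing, so every regenerated mesh is again strictly increasing with the same endpoints; the cap `max_iter` guards against slow convergence of the fixed-point iteration.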

5 Numerical experiments

In this section we carry out numerical experiments on two test problems to demonstrate the efficiency and accuracy of our numerical scheme.

Example 5.1

A fractional differential equation with a known exact solution:

with \(\sigma =0.1, r=0.06\) and \(0<\alpha <1\), where \(f(x,t)\) is chosen such that the exact solution is \(u(x,t)=t^{\alpha }+e^{x}+x+1\).
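A short calculation with the Caputo definition shows why this example is a meaningful test of the mesh adaptation: the fractional derivative of the exact solution is bounded, while its classical time derivatives are not. The terms \(e^{x}+x+1\) do not depend on t, so their Caputo derivative vanishes, and with the substitution \(s=t\theta \) the remaining integral is the Beta function \(B(\alpha ,1-\alpha )=\Gamma (\alpha )\Gamma (1-\alpha )\):

\[\frac{\partial ^{\alpha }u}{\partial t^{\alpha }}=\frac{1}{\Gamma (1-\alpha )}\int _{0}^{t}\alpha s^{\alpha -1}(t-s)^{-\alpha }\,ds=\frac{\alpha \,\Gamma (\alpha )\Gamma (1-\alpha )}{\Gamma (1-\alpha )}=\Gamma (1+\alpha ),\]

\[\frac{\partial u}{\partial t}=\alpha t^{\alpha -1},\qquad \frac{\partial ^{2}u}{\partial t^{2}}=\alpha (\alpha -1)t^{\alpha -2}.\]

For \(0<\alpha <1\) both time derivatives are unbounded as \(t\to 0^{+}\), which is the behavior described in Sect. 2 and the reason the time mesh should be refined near \(t=0\).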

The maximum error is denoted by

and the corresponding convergence rate is computed by

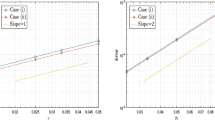

The numerical results on an adaptive moving mesh for Example 5.1 are listed in Table 1. To illustrate how the algorithm adapts the mesh when solving Example 5.1, Fig. 1 (to be read from bottom to top) shows the time mesh after each iteration. Figure 2 shows the final computed time mesh for Example 5.1 with \(\alpha =0.2\); the mesh points are concentrated near \(t=0\).

Fig. 1 Evolution of the time mesh for Example 5.1 with \(\alpha =0.2\)

Fig. 2 Final computed time mesh for Example 5.1 with \(\alpha =0.2\)
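The error and rate formulas for Example 5.1 are not reproduced in this text. Assuming the usual choices, namely the maximum nodal error \(\max _{i,j}|U_{i}^{j}-u(x_{i},t_{j})|\) and the rate \(\log _{2}(e^{N,K}/e^{2N,2K})\) when N and K are doubled, they can be computed as sketched below; the function names and the time-major storage `U[j][i]` are hypothetical.

```python
import math
from typing import Callable, List, Sequence


def max_nodal_error(U: Sequence[Sequence[float]], exact: Callable[[float, float], float],
                    x: Sequence[float], t: Sequence[float]) -> float:
    """Maximum over all mesh nodes of |U[j][i] - u(x_i, t_j)|, with U stored time-major."""
    return max(abs(U[j][i] - exact(x[i], t[j]))
               for j in range(len(t)) for i in range(len(x)))


def convergence_rates(errors: Sequence[float]) -> List[float]:
    """log2(e_coarse / e_fine) for errors on successively doubled meshes."""
    return [math.log2(coarse / fine) for coarse, fine in zip(errors, errors[1:])]
```

A first-order method, as predicted by Theorem 3.3, should give rates close to 1.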

Example 5.2

A time-fractional Black–Scholes equation without a known exact solution:

with parameters: \(\sigma =0.3(1+t), r=0.04(1+\sin t), E=10, T=1\) and \(X=40\).

The double mesh principle is used to estimate the errors and compute the experimental convergence rates. Let \(\bar{U}^{N,K}(x,t)\) be the piecewise linear interpolant of the approximate solution \(\{ U_{i}^{j} \} \) computed with spatial discretization parameter N and time discretization parameter K, and let \(\bar{U}^{N,K}(x_{i},t_{j})\) denote its value at the mesh point \((x_{i},t_{j})\). Then the maximum errors

and the convergence rates

for Example 5.2 are listed in Table 2. The adapted time meshes generated after each iteration are depicted in Fig. 3. The final computed time mesh for the time-fractional Black–Scholes equation with \(\alpha =0.2\) is depicted in Fig. 4, which also shows that the mesh points are concentrated near \(t=0\). The computed option value U is depicted in Fig. 5, which shows that the numerical solution obtained by our method is non-oscillatory.

Fig. 3 Evolution of the time mesh for Example 5.2 with \(\alpha =0.2\)

Fig. 4 Final computed time mesh for Example 5.2 with \(\alpha =0.2\)

Fig. 5 Computed option value U for Example 5.2 with \(\alpha =0.2\)
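The exact double mesh error formula for Example 5.2 is not reproduced in this text. A minimal sketch, assuming \(e^{N,K}=\max _{i,j}|U_{i}^{j}-\bar{U}^{2N,2K}(x_{i},t_{j})|\) with a piecewise bilinear interpolant of the fine solution on its tensor-product mesh, is given below. Interpolation is needed because the adaptive time meshes for K and 2K are in general not nested. All names are hypothetical.

```python
from bisect import bisect_right
from typing import Sequence


def locate(nodes: Sequence[float], z: float) -> int:
    """Index k with nodes[k] <= z <= nodes[k+1] (nodes strictly increasing)."""
    k = bisect_right(nodes, z) - 1
    return min(max(k, 0), len(nodes) - 2)


def bilinear(xf: Sequence[float], tf: Sequence[float],
             Uf: Sequence[Sequence[float]], x: float, t: float) -> float:
    """Piecewise bilinear interpolant of the fine solution Uf[j][i] at (x, t)."""
    i, j = locate(xf, x), locate(tf, t)
    sx = (x - xf[i]) / (xf[i + 1] - xf[i])
    st = (t - tf[j]) / (tf[j + 1] - tf[j])
    return ((1 - sx) * (1 - st) * Uf[j][i] + sx * (1 - st) * Uf[j][i + 1]
            + (1 - sx) * st * Uf[j + 1][i] + sx * st * Uf[j + 1][i + 1])


def double_mesh_error(x: Sequence[float], t: Sequence[float], U: Sequence[Sequence[float]],
                      xf: Sequence[float], tf: Sequence[float],
                      Uf: Sequence[Sequence[float]]) -> float:
    """max over coarse nodes of |U[j][i] - (interpolated fine solution)(x_i, t_j)|."""
    return max(abs(U[j][i] - bilinear(xf, tf, Uf, x[i], t[j]))
               for j in range(len(t)) for i in range(len(x)))
```

Bilinear interpolation reproduces functions of the form \(a+bx+ct+dxt\) exactly, so any difference it reports comes from the discrete solutions themselves rather than from the interpolation of such terms.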

Tables 1 and 2 show that the computed solution converges on an adaptive moving mesh with first-order accuracy and that the numerical results do not depend strongly on the value of α, which supports the convergence estimate of Theorem 3.3. These results indicate that our method with an adaptive moving mesh is more accurate than the corresponding method on a uniform mesh.

References

Cen, Z., Huang, J., Le, A., Xu, A.: A second-order scheme for a time-fractional diffusion equation. Appl. Math. Lett. 90, 79–85 (2019)

Cen, Z., Huang, J., Xu, A., Le, A.: Numerical approximation of a time-fractional Black–Scholes equation. Comput. Math. Appl. 75(8), 2874–2887 (2018)

Cen, Z., Le, A.: A robust and accurate finite difference method for a generalized Black–Scholes equation. J. Comput. Appl. Math. 235(13), 3728–3733 (2011)

Chen, C., Wang, Z., Yang, Y.: A new operator splitting method for American options under fractional Black–Scholes models. Comput. Math. Appl. 77, 2130–2144 (2019)

Chen, W., Xu, X., Zhu, S.: Analytically pricing double barrier options based on a time-fractional Black–Scholes equation. Comput. Math. Appl. 69(12), 1407–1419 (2015)

Das, P., Vigo-Aguiar, J.: Parameter uniform optimal order numerical approximation of a class of singularly perturbed system of reaction diffusion problems involving a small perturbation parameter. J. Comput. Appl. Math. 354, 533–544 (2019)

De Staelen, R.H., Hendy, A.S.: Numerically pricing double barrier options in a time-fractional Black–Scholes model. Comput. Math. Appl. 74(6), 1166–1175 (2017)

Golbabai, A., Nikan, O.: A computational method based on the moving least-squares approach for pricing double barrier options in a time-fractional Black–Scholes model. Comput. Econ. (2019, in press). https://doi.org/10.1007/s10614-019-09880-4

Gowrisankar, S., Natesan, S.: An efficient robust numerical method for singularly perturbed Burgers’ equation. Appl. Math. Comput. 346, 385–394 (2019)

Gracia, J.L., O’Riordan, E., Stynes, M.: A fitted scheme for a Caputo initial-boundary value problem. J. Sci. Comput. 76, 583–609 (2018)

Hariharan, G.: An efficient wavelet based approximation method to time fractional Black–Scholes European option pricing problem arising in financial market. Appl. Math. Sci. 7(69), 3445–3456 (2013)

Jumarie, G.: Derivation and solutions of some fractional Black–Scholes equations in coarse-grained space and time. Application to Merton’s optimal portfolio. Comput. Math. Appl. 59(3), 1142–1164 (2010)

Kalantari, R., Shahmorad, S.: A stable and convergent finite difference method for fractional Black–Scholes model of American put option pricing. Comput. Econ. 53(1), 191–205 (2019)

Kellogg, R.B., Tsan, A.: Analysis of some difference approximations for a singular perturbation problem without turning points. Math. Comput. 32(144), 1025–1039 (1978)

Koleva, M.N., Vulkov, L.G.: Numerical solution of time-fractional Black–Scholes equation. Comput. Appl. Math. 36(4), 1699–1715 (2017)

Kopteva, N., Madden, N., Stynes, M.: Grid equidistribution for reaction-diffusion problems in one dimension. Numer. Algorithms 40(3), 305–322 (2005)

Kumar, S., Kumar, D., Singh, J.: Numerical computation of fractional Black–Scholes equation arising in financial market. Egypt. J. Basic Appl. Sci. 1, 177–193 (2014)

Liao, H.L., Li, D., Zhang, J.: Sharp error estimate of a nonuniform L1 formula for time-fractional reaction-subdiffusion equations. SIAM J. Numer. Anal. 56, 1112–1133 (2018)

Liu, L.-B., Chen, Y.: An adaptive moving grid method for a system of singularly perturbed initial value problems. Appl. Math. Comput. 274, 11–22 (2015)

Liu, L.-B., Chen, Y.: A-posteriori error estimation in maximum norm for a strongly coupled system of two singularly perturbed convection-diffusion problems. J. Comput. Appl. Math. 313, 152–167 (2017)

Luchko, Y.: Maximum principle for the generalized time-fractional diffusion equation. J. Math. Anal. Appl. 351, 218–223 (2009)

Lyu, P., Vong, S.: A high-order method with a temporal nonuniform mesh for a time-fractional Benjamin–Bona–Mahony equation. J. Sci. Comput. 80, 1607–1628 (2019)

Song, L., Wang, W.: Solution of the fractional Black–Scholes option pricing model by finite difference method. Abstr. Appl. Anal. 2013, Article ID 194286 (2013). https://doi.org/10.1155/2013/194286

Stynes, M., O’Riordan, E., Gracia, J.L.: Error analysis of a finite difference method on graded meshes for a time-fractional diffusion equation. SIAM J. Numer. Anal. 55(2), 1057–1079 (2017)

Wilmott, P., Dewynne, J., Howison, S.: Option Pricing: Mathematical Models and Computation. Oxford Financial Press, Oxford (1993)

Wyss, W.: The fractional Black–Scholes equation. Fract. Calc. Appl. Anal. 3(1), 51–61 (2000)

Zhang, H., Liu, F., Turner, I., Yang, Q.: Numerical solution of the time fractional Black–Scholes model governing European options. Comput. Math. Appl. 71(9), 1772–1783 (2016)

Acknowledgements

We would like to thank the anonymous reviewers for their valuable suggestions and comments for the improvement of this paper.

Funding

The work was supported by the Project of Philosophy and Social Science Research in Zhejiang Province (Grant No. 19NDJC039Z), Humanities and Social Sciences Planning Fund of Ministry of Education of China (Grant No. 18YJAZH002), Zhejiang Province Public Welfare Technology Application Research Project (Grant No. LGF19A010001), Major Humanities and Social Sciences Projects in Colleges and Universities of Zhejiang Province (Grant No. 2018GH020), Ningbo Municipal Soft Science Foundation (Grant No. 2018A10041), Ningbo Municipal Natural Science Foundation (Grant Nos. 2019A610045, 2019A610038), and National College Students Innovation and Entrepreneurship Training Program (Grant No. 201910876026).

Author information


Contributions

The first author carried out the literature review, designed the numerical algorithm and conducted the numerical experiments; the second author participated in the error analysis and the design of the numerical algorithm; and the third author participated in the numerical experiments. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests. The authors confirm that the funding listed in the ‘Funding’ section does not lead to any conflict of interests regarding the publication of this manuscript.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Huang, J., Cen, Z. & Zhao, J. An adaptive moving mesh method for a time-fractional Black–Scholes equation. Adv Differ Equ 2019, 516 (2019). https://doi.org/10.1186/s13662-019-2453-1


DOI: https://doi.org/10.1186/s13662-019-2453-1