Abstract

This paper investigates the sliding-mode controller design problem for a class of complex dynamical network systems with Markovian jump parameters and time-varying delays. Based on an appropriate Lyapunov–Krasovskii functional, a set of new sufficient conditions is developed that not only guarantees the stochastic stability of the sliding-mode dynamics but also ensures a prescribed \({H_{\infty}}\) performance level. Next, an integral sliding surface is designed, and a control law is constructed to guarantee that the closed-loop error system reaches the sliding surface in finite time. Finally, an example is given to illustrate the validity of the theoretical results.


1 Introduction

In recent years, complex networks have attracted increasing attention owing to their potential applications in many real-world systems, such as biological, chemical, social and technological systems. In particular, synchronization in complex dynamical networks, meaning that all nodes reach a common state, has attracted rapidly growing interest. Several well-known network models that accurately characterize important natural structures, such as the scale-free model [1] and the small-world model [2, 3], have been studied. Complex dynamical networks are prominent in describing sophisticated collaborative dynamics in many fields of science and engineering [4,5,6].

Time delays exist extensively in real-world systems, and it is well known that delays in a network can destabilize the system and degrade its performance. In recent decades, considerable attention has been devoted to time-delay systems because of their extensive applications in practical systems, including circuit theory, neural networks [7,8,9,10] and complex dynamical networks [11,12,13,14,15,16]. Thus, synchronization of complex dynamical networks with time delays in the node dynamics and in the coupling has become a key topic, and several results have been reported in this area. In [11], the authors proposed a pinning control scheme to achieve synchronization of singular complex networks with mixed time delays. Based on impulsive control, the authors of [12, 13] studied projective synchronization of general complex networks with coupling time-varying delays and with multiple time-varying delays [14], respectively. In [15], a sampled-data control method was proposed to achieve finite-time \({H_{\infty}}\) synchronization of Markovian jump complex networks with time-varying delays. In [17], exponential synchronization of Lur’e complex dynamical networks with delayed coupling was studied via pinning impulsive control.

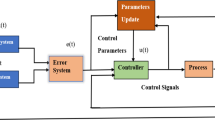

The dynamical behaviors of the nodes in a complex dynamical network are not always identical. Thus, many authors have studied the behavior of all nodes in complex dynamical networks (CDNs) under various controllers, such as pinning control [18,19,20,21], sampled-data control [22, 23], impulsive control [24,25,26] and sliding-mode control [27,28,29,30]. With pinning control, the predetermined goal can be reached using a minimum number of controllers. With a sampled-data controller, the control input is computed from the states sampled at discrete instants and held constant between samples by a zero-order hold. With an impulsive controller, the states are adjusted only at discrete impulse instants. In sliding-mode control, a set of specified matrices is employed in the design of the sliding surface to establish the connections among the sliding surfaces corresponding to the different modes. Whatever control strategy is adopted, the ultimate goal is to stabilize the system and achieve the intended performance. In this paper, a suitable sliding-mode control is designed to synchronize complex networks with Markovian jump parameters and time delays.

Sliding-mode control methods were initiated in the former Soviet Union about 40 years ago, and the methodology has received much more attention within the last two decades. Sliding-mode control has been widely adopted in many complex engineering systems, including time-delay systems [31,32,33], stochastic systems [34, 35], singular systems [36,37,38], Markovian jump systems [39,40,41] and fuzzy systems [42,43,44]. As is well known, system performance may be degraded by nonlinearities and external disturbances. In [32], a sliding-mode approach was proposed for the exponential \({H_{\infty}}\) synchronization of a class of master–slave time-delay systems with both discrete and distributed time delays. In [34], the authors considered event-triggered sliding-mode control of an uncertain stochastic system subject to limited communication capacity. In [38], non-fragile sliding-mode control of discrete singular systems with external disturbances was investigated. In [41], the authors considered sliding-mode control design for singular stochastic Markovian jump systems with uncertainties. The main advantage of sliding-mode control is its low sensitivity to plant parameter variations and disturbances, which relaxes the need for exact modeling.

Markovian jump systems, which involve both time-evolving and event-driven mechanisms, are well suited to representing physical systems whose structure and parameters change randomly, and much recent attention has been paid to them. When complex dynamical networks experience abrupt changes in their structure, it is natural to model them as Markovian jump complex networks. A great deal of literature has studied such networks; see [11, 14, 15, 19, 23, 28] for instance.

To date, however, only a few papers have addressed the synchronization of complex dynamical networks with Markovian jump parameters and time-varying delayed coupling in the dynamical nodes, and this synchronization problem remains challenging. Motivated by the above discussion, this paper studies the \({H_{\infty}}\) synchronization of complex dynamical network systems. To achieve \({H_{\infty}}\) synchronization of complex dynamical networks with Markovian jump parameters, an integral sliding surface is designed and a novel sliding-mode controller is proposed. The main contributions of this article are summarized as follows: (1) The paper extends previous work on the synchronization problem for complex dynamical network systems with Markovian jump parameters and time-varying delays and derives some new theoretical results. (2) An appropriate integral sliding-mode surface is constructed such that the reduced-order equivalent sliding motion reduces the effect of the chattering phenomenon. (3) Using a Lyapunov–Krasovskii functional and a sliding-mode controller, new sufficient conditions are established in terms of LMIs to ensure stochastic stability and the \({H_{\infty}}\) performance.

Notation

\({R^{n}}\) denotes the n-dimensional Euclidean space; \({R^{m \times n}}\) is the set of all \(m \times n\) real matrices. For real symmetric matrices X and Y, the notation \(X \geqslant Y\) (respectively, \(X > Y\)) means that \(X - Y\) is positive semidefinite (respectively, positive definite). The superscript T denotes matrix transposition. In symmetric block matrices, ∗ denotes the terms induced by symmetry, and \(\operatorname{diag} \{ \cdots \}\) denotes a block-diagonal matrix. \(A \otimes B\) denotes the Kronecker product of matrices A and B, \(\Vert \, { \cdot }\, \Vert \) denotes the Euclidean vector norm, and \({\mathcal {E}}\) denotes the mathematical expectation. Unless explicitly stated otherwise, matrices are assumed to have compatible dimensions.

2 System description and preliminary lemma

Let \(\{ r(t)\ (t \ge0)\} \) be a right-continuous Markov chain on the probability space \((\varOmega,F, {\{ {F_{t}}\} _{t \ge0}},P)\) taking values in the finite set \({\mathcal {S}} = \{ 1,2, \ldots,m\} \), with generator \(\varPi = {\{ {\pi_{ij}}\} _{m \times m}}\) (\(i,j \in {\mathcal {S}}\)) given by

\[
\Pr \{ r(t + \Delta t) = j \mid r(t) = i \} =
\begin{cases}
\pi_{ij}\Delta t + o(\Delta t), & i \ne j, \\
1 + \pi_{ii}\Delta t + o(\Delta t), & i = j,
\end{cases}
\]

where \(\Delta t > 0\), \(\lim_{\Delta t \to0} (o(\Delta t)/\Delta t) = 0\), and \({\pi_{ij}}\) is the transition rate from mode i to mode j, satisfying \({\pi_{ij}} \ge0\) for \(i \ne j\) and \({\pi_{ii}} = - \sum_{j = 1, j \ne i}^{m} {{\pi _{ij}}}\) (\(i \in{\mathcal {S}}\)).
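As a rough illustration of how the generator \(\varPi\) drives \(r(t)\), the sketch below simulates a continuous-time Markov chain: in mode i the chain stays for an exponentially distributed time with rate \(-\pi_{ii}\) and then jumps to \(j \ne i\) with probability \(\pi_{ij}/(-\pi_{ii})\). The two-mode generator is hypothetical (it is not the transition rate matrix of the example), and modes are indexed from 0 in the code rather than from 1.

```python
import random

def check_generator(pi):
    """Check generator properties: off-diagonal rates >= 0 and every row sums to zero."""
    for i, row in enumerate(pi):
        if any(rate < 0 for j, rate in enumerate(row) if j != i):
            return False
        if abs(sum(row)) > 1e-12:
            return False
    return True

def simulate_modes(pi, r0, t_end, seed=0):
    """Return [(jump_time, mode), ...] for r(t) on [0, t_end]; modes are 0-indexed."""
    rng = random.Random(seed)
    t, mode = 0.0, r0
    path = [(t, mode)]
    while True:
        exit_rate = -pi[mode][mode]
        if exit_rate <= 0:                   # absorbing mode: no further jumps
            break
        t += rng.expovariate(exit_rate)      # exponential sojourn time in the current mode
        if t >= t_end:
            break
        # next mode j != mode, chosen with probability pi[mode][j] / exit_rate
        others = [j for j in range(len(pi)) if j != mode]
        mode = rng.choices(others, weights=[pi[mode][j] for j in others])[0]
        path.append((t, mode))
    return path

# Hypothetical two-mode generator (not the one used in the paper's example).
PI = [[-3.0, 3.0],
      [4.0, -4.0]]
path = simulate_modes(PI, r0=0, t_end=5.0)
```

With only two modes the next mode is forced, so the path alternates; for this \(\varPi\) the long-run fraction of time spent in mode 1 tends to \(\pi_{21}/(\pi_{12}+\pi_{21}) = 4/7\).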

Consider the following complex dynamical network of N identical nodes, in which each node is an n-dimensional dynamical subsystem with Markovian jump parameters and a time delay:

where \({x_{k}} ( t ) = ( {{x_{k1}},{x_{k2}}, \ldots ,{x_{kn}}} )^{T} \in{R^{n}}\) is the state vector of the kth node; \({u_{k}} ( t )\) denotes the control input and \({w_{k}} ( t )\) the disturbance; \(f ( {{x_{k}} ( t )} )\) is a vector-valued nonlinear function; \(A ( {r ( t )} )\), \(C ( {r ( t )} )\), \(D ( {r ( t )} )\) and \(B ( {r ( t )} )\) are matrix functions of the random jumping process \(\{ {r ( t )} \}\); \({\varGamma_{1}} ( {r ( t )} )\) and \({\varGamma_{2}} ( {r ( t )} )\) are the inner coupling matrices of the network; \({\sigma_{1}} > 0\) and \({\sigma_{2}} > 0\) denote the non-delayed and delayed coupling strengths, respectively. \(G = { ( {{g_{kj}}} )_{N \times N}}\) is the outer-coupling matrix representing the network topology, where, for \(k \ne j\), \({g_{kj}} = {g_{jk}} = 1\) if there is a connection between nodes k and j, and \({g_{kj}} = {g_{jk}} = 0\) otherwise. The diagonal entries are chosen so that every row of G sums to zero, that is, \({g_{kk}} = - \sum_{j = 1, j \ne k}^{N} {{g_{kj}}}\), \(k = 1,2, \ldots,N\). The bounded function \(\tau ( t )\) represents the unknown time-varying discrete delay of the system and is assumed to satisfy the following condition:

where τ and τ̄ are given nonnegative constants.
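The construction of the outer-coupling matrix \(G\) described above can be sketched as follows, assuming an undirected topology supplied as a hypothetical edge list with nodes indexed from 0: off-diagonal entries are set symmetrically to 1 for connected pairs, and each diagonal entry is chosen so that its row sums to zero.

```python
def outer_coupling_matrix(n_nodes, edges):
    """Build G = (g_kj): g_kj = g_jk = 1 for each undirected edge (k, j) with k != j,
    0 for unconnected pairs, and g_kk = -sum_{j != k} g_kj so every row sums to zero."""
    G = [[0] * n_nodes for _ in range(n_nodes)]
    for k, j in edges:
        if k == j:
            raise ValueError("self-loops are not part of the topology")
        G[k][j] = G[j][k] = 1
    for k in range(n_nodes):
        G[k][k] = -sum(G[k][j] for j in range(n_nodes) if j != k)
    return G

# Hypothetical 3-node path topology 0 - 1 - 2 (not the example's matrix).
G_path = outer_coupling_matrix(3, [(0, 1), (1, 2)])
```

The diagonal is filled last so that it reflects every edge incident to the node, which guarantees the zero-row-sum property regardless of the order of the edge list.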

Assumption 2.1

For all \(x,y \in{R^{n}}\), the nonlinear function \(f ( \cdot )\) is continuous and assumed to satisfy the following sector-bounded nonlinearity condition:

where \({U_{1}}\) and \({U_{2}} \in{R^{n \times n}}\) are known constant matrices with \({U_{2}} - {U_{1}} > 0\). For presentation simplicity and without loss of generality, it is assumed that \(f ( 0 ) = 0\).
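The displayed condition is not reproduced in this text. A commonly used form of such a sector-bounded condition, assumed for this sketch, is \([f(x)-f(y)-U_{1}(x-y)]^{T}[f(x)-f(y)-U_{2}(x-y)] \le 0\). The code evaluates this quantity and checks it on random samples for the hypothetical choice of a componentwise \(\tanh\) with \(U_{1} = 0\) and \(U_{2} = I\), so that \(U_{2} - U_{1} > 0\).

```python
import math
import random

def sector_value(f, U1, U2, x, y):
    """Return [f(x)-f(y)-U1(x-y)]^T [f(x)-f(y)-U2(x-y)] (assumed sector form)."""
    n = len(x)
    dx = [x[k] - y[k] for k in range(n)]
    df = [a - b for a, b in zip(f(x), f(y))]
    u1dx = [sum(U1[r][c] * dx[c] for c in range(n)) for r in range(n)]
    u2dx = [sum(U2[r][c] * dx[c] for c in range(n)) for r in range(n)]
    return sum((df[k] - u1dx[k]) * (df[k] - u2dx[k]) for k in range(n))

def tanh_vec(v):
    """Hypothetical nonlinearity: tanh applied to each component."""
    return [math.tanh(c) for c in v]

U1 = [[0.0, 0.0], [0.0, 0.0]]
U2 = [[1.0, 0.0], [0.0, 1.0]]   # U2 - U1 = I > 0

rng = random.Random(1)
worst = max(
    sector_value(tanh_vec, U1, U2,
                 [rng.uniform(-5, 5) for _ in range(2)],
                 [rng.uniform(-5, 5) for _ in range(2)])
    for _ in range(1000)
)
```

For \(\tanh\), each increment \(f(x)-f(y)\) has the same sign as \(x-y\) and a smaller magnitude, so every component contributes a nonpositive term; a function with slope 2 would violate the same sector.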

Definition 2.1 ([41])

The complex dynamical network system (1) is said to be stochastically stable if, for any \(e ( 0 ) \in{R^{n}}\) and \({r_{0}} \in{\mathcal {S}}\), there exists a scalar \(\tilde{M} ( {e ( 0 ),{r_{0}}} ) > 0\) such that

where \(e ( {t,e ( 0 ),{r_{0}}} )\) denotes the solution under the initial conditions \(e ( 0 )\) and \({r_{0}}\). Here, \({e_{k}} ( t ) = {x_{k}} ( t ) - s ( t )\) is the synchronization error of the kth node, and \(s ( t ) \in{R^{n}}\) can be an equilibrium point, a (quasi-)periodic orbit, or an orbit of a chaotic attractor, satisfying \(\dot{s} ( t ) = A ( {r ( t )} )s ( t ) + C ( {r ( t )} )f ( {s ( t )} )\).

Definition 2.2 ([32])

The \({H_{\infty}}\) performance measure of the systems (1) is defined as

where the positive scalar γ is given.

Lemma 2.1 (Jensen’s inequality)

For a positive definite matrix M and scalars \({h_{U}} > {h_{L}} > 0\) such that the following integrals are well defined, the following inequalities hold:

(1) \(- ({h_{U}} - {h_{L}})\int_{t - {h_{U}}}^{t - {h_{L}}} {x^{T}}(s) M x(s)\,ds \le - \Big(\int_{t - {h_{U}}}^{t - {h_{L}}} {x^{T}}(s)\,ds\Big) M \Big(\int_{t - {h_{U}}}^{t - {h_{L}}} x(s)\,ds\Big)\),

(2) \(- \frac{h_{U}^{2} - h_{L}^{2}}{2}\int_{t - {h_{U}}}^{t - {h_{L}}} \int_{s}^{t} {x^{T}}(u) M x(u)\,du\,ds \le - \Big(\int_{t - {h_{U}}}^{t - {h_{L}}} \int_{s}^{t} {x^{T}}(u)\,du\,ds\Big) M \Big(\int_{t - {h_{U}}}^{t - {h_{L}}} \int_{s}^{t} x(u)\,du\,ds\Big)\).
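Inequality (1) can be checked numerically. The sketch below, assuming a hypothetical positive definite \(M\) and test function \(x(s)\), evaluates \(({h_{U}} - {h_{L}})\int x^{T}Mx\,ds - (\int x\,ds)^{T}M(\int x\,ds)\) over \([t-h_{U}, t-h_{L}]\) with a midpoint rule. The difference is nonnegative and vanishes for constant \(x\), where Jensen's inequality is tight.

```python
import math

def quad_form(v, M):
    """v^T M v for a vector v and a square matrix M (lists)."""
    n = len(v)
    return sum(v[r] * M[r][c] * v[c] for r in range(n) for c in range(n))

def jensen_gap(x, M, t, h_L, h_U, n=2000):
    """(h_U - h_L) * int x^T M x ds - (int x ds)^T M (int x ds) over [t - h_U, t - h_L],
    with both integrals approximated by the same midpoint rule."""
    a, b = t - h_U, t - h_L
    h = (b - a) / n
    dim = len(x(a))
    int_quad = 0.0
    int_x = [0.0] * dim
    for k in range(n):
        s = a + (k + 0.5) * h
        xs = x(s)
        int_quad += h * quad_form(xs, M)
        for d in range(dim):
            int_x[d] += h * xs[d]
    return (h_U - h_L) * int_quad - quad_form(int_x, M)

M = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical positive definite M
gap_sine = jensen_gap(lambda s: [math.sin(3 * s), math.cos(s) + s], M, t=1.0, h_L=0.2, h_U=0.9)
gap_const = jensen_gap(lambda s: [1.0, -2.0], M, t=1.0, h_L=0.2, h_U=0.9)
```

Using the same uniform quadrature for both integrals keeps the discrete inequality exact (it reduces to the Cauchy–Schwarz inequality in the \(M\)-inner product), so the check does not depend on the step size.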

Lemma 2.2 ([11])

For any constant matrix \(R \in R{^{m \times m}}\) with \(R = {R^{T}} > 0\), scalar \(\gamma > 0\) and vector function \(\phi:[0,\gamma] \to R{^{m}}\) such that the integrations concerned are well defined, the following inequality holds:

Lemma 2.3 ([45])

Let ⊗ denote the Kronecker product. A, B, C and D are matrices with appropriate dimensions. The following properties hold:

(1) \(( {cA} ) \otimes B = A \otimes ( {cB} )\) for any constant c;

(2) \(( {A + B} ) \otimes C = A \otimes C + B \otimes C\);

(3) \(( {A \otimes B} ) ( {C \otimes D} ) = ( {AC} ) \otimes ( {BD} )\);

(4) \({ ( {A \otimes B} )^{T}} = {A^{T}} \otimes{B^{T}}\);

(5) \({ ( {A \otimes B} )^{ - 1}} = {A^{ - 1}} \otimes{B^{ - 1}}\) for nonsingular A and B.
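Properties (3)–(5) can be spot-checked with exact rational arithmetic. The sketch below implements the Kronecker product for matrices stored as lists of rows; the test matrices are arbitrary (hypothetical), and \(A\) and \(B\) are nonsingular so that property (5) applies.

```python
from fractions import Fraction

def kron(A, B):
    """Kronecker product: block (i, j) of the result is A[i][j] * B."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def inv2(A):
    """Exact inverse of a nonsingular 2 x 2 matrix."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]

# Hypothetical test matrices; det(A) = -2 and det(B) = -1, so both are nonsingular.
A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 1]]
C = [[2, 0], [1, 1]]
D = [[1, -1], [0, 2]]
I4 = [[int(i == j) for j in range(4)] for i in range(4)]
```

Property (5) follows from property (3): \((A \otimes B)(A^{-1} \otimes B^{-1}) = (AA^{-1}) \otimes (BB^{-1}) = I\), which is exactly what the inverse check exercises.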

For the sake of simplicity, when \(r ( t ) = i\), we denote \(A ( {r ( t )} )\), \(C ( {r ( t )} )\), \(B ( {r ( t )} )\), \(D ( {r ( t )} )\), \({\varGamma_{1}} ( {r ( t )} )\), \({\varGamma_{2}} ( {r ( t )} )\) by \({A_{i}}\), \({C_{i}}\), \({B_{i}}\), \({D_{i}}\), \({\varGamma_{1i}}\), \({\varGamma_{2i}}\), respectively. Let \(e ( t ) = x ( t ) - s ( t )\) be the synchronization error of the system from the initial mode \({r_{0}}\). Then the error dynamics, that is, the synchronization error system, can be expressed as

where \(g ( {{e_{k}} ( t )} ) = f ( {{x_{k}} ( t )} ) - f ( {s ( t )} )\).

Now, the original synchronization problem can be replaced by the equivalent problem of stabilizing the error system (4) with a suitable sliding-mode control. In the following, the sliding-mode controller is designed using variable structure and sliding-mode control methods [46]. Let us introduce the sliding surface as

where \({V_{i}} \in{R^{m \times n}}\) and \({K_{i}} \in{R^{r \times n}}\) are real matrices to be designed, and \({V_{i}}\) is chosen such that \({V_{i}}{B_{i}}\) is nonsingular. Clearly, \({\dot{S}_{k}} ( {t,i} ) = 0\) is a necessary condition for the state trajectory to remain on the switching surface \({S_{k}} ( {t,i} ) = 0\). Therefore, from \({\dot{S}_{k}} ( {t,i} ) = 0\) and (4), we get

Solving (6) for \({u_{k}} ( t )\) gives

where \({\hat{V}_{i}} = { ( {{V_{i}}{B_{i}}} )^{ - 1}}{V_{i}}\).
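The matrix \({\hat{V}_{i}}\) satisfies \({\hat{V}_{i}}{B_{i}} = ( {{V_{i}}{B_{i}}} )^{ - 1}{V_{i}}{B_{i}} = I\), which is what makes it possible to solve (6) for \({u_{k}} ( t )\) once \({V_{i}}{B_{i}}\) is nonsingular. The sketch below checks this identity for a hypothetical single-input case (\(m = 1\)), where \({V_{i}}{B_{i}}\) is a scalar and nonsingularity reduces to \({V_{i}}{B_{i}} \ne 0\).

```python
from fractions import Fraction

def v_hat(V, B):
    """(V B)^{-1} V for the single-input case: V is 1 x n, B is n x 1, so V B is a scalar."""
    vb = sum(Fraction(V[0][k]) * B[k][0] for k in range(len(B)))
    if vb == 0:
        raise ValueError("V B must be nonsingular (nonzero in the scalar case)")
    return [[Fraction(v) / vb for v in V[0]]]

V = [[1, 2, 0]]          # hypothetical V_i
B = [[1], [1], [3]]      # hypothetical B_i, so V_i B_i = 3
Vh = v_hat(V, B)
Vh_B = sum(Vh[0][k] * B[k][0] for k in range(len(B)))   # equals 1 by construction
```

For \(m > 1\) the same construction requires inverting the \(m \times m\) matrix \({V_{i}}{B_{i}}\) instead of dividing by a scalar.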

Substituting (7) into (4), the sliding-mode error dynamics are obtained as follows:

Or equivalently

The problem addressed in this paper is formulated as follows: given the complex dynamical network system (1) with Markovian jump parameters and time delays, find a mode-dependent sliding-mode control \(u ( t )\) for each \(r ( t ) = i \in{\mathcal {S}}\) such that the error system (4) is stochastically stable and satisfies the \({H_{\infty}}\) norm bound γ, i.e., \({J_{\infty}} < 0\).

3 Main results

The purpose of this section is to solve the \({H_{\infty}}\) synchronization problem. More specifically, we establish LMI conditions to check whether the sliding-mode dynamics have the desired properties, namely stochastic stability and \({H_{\infty}}\) synchronization. The stability analysis is given in the following theorem.

3.1 Stability analysis

Theorem 3.1

Let the matrices \({V_{i}}\) and \({K_{i}}\) (\(i \in{\mathcal {S}}\)) with \(\det ( {{V_{i}}{B_{i}}} ) \ne0\) be given. The complex dynamical network system (1) with Markovian jump parameters is stochastically stable and achieves \({H_{\infty}}\) synchronization in the sense of Definitions 2.1 and 2.2 if there exist positive definite matrices \({P_{i}}\), \({Q_{1}}\), \({Q_{2}}\), \({X_{\iota}}\) (\({\iota = 1,2,3}\)) such that the following matrix inequality holds for any \(i \in{\mathcal {S}}\):

where

Proof

Construct the following positive definite Lyapunov–Krasovskii functional for the system:

where

By the definition of the infinitesimal operator \({\mathcal {L}}\) of the stochastic Lyapunov–Krasovskii functional in [47], we obtain

Calculating the infinitesimal generator of \(V ( {e ( t ),i,t} )\) along the trajectory of the error sliding-mode dynamics (8) and (9), we obtain

According to Lemma 2.1 and Lemma 2.2, we have

For any matrices \({\varLambda_{1}}\) and \({\varLambda_{2}}\) with appropriate dimensions, the following equations hold:

It can be deduced from Assumption 2.1 that, for the matrices \({U_{1}}\) and \({U_{2}}\), the following inequalities hold:

where

On the other hand, for a prescribed \(\gamma > 0\), under zero initial condition, \({J_{\infty}}\) can be rewritten as

Combining (16)–(21) and adding (22)–(23) to (24), we obtain

where

Condition (10) in Theorem 3.1 therefore implies that \({J_{\infty}} < 0\). Moreover, \({J_{\infty}} < 0\) with \(w ( t ) = 0\) implies \({\mathcal {E}} \{ {{\mathcal {L}}V ( {e ( t ),i,t} )} \} < 0\). Then we have

where \({a_{1}} = \min \{ {{\lambda_{\min}} ( { - {\varPhi _{i}}} ),i \in{\mathcal {S}}} \} > 0\). By Dynkin’s formula, we have

and

Then from Definition 2.1, the sliding-mode dynamical system (9) is stochastically stable. This completes the proof. □

Remark 1

It should be pointed out that Theorem 3.1 provides a sufficient stability condition for the sliding-mode complex dynamical network system (9). However, the gain matrices are not specified in advance, so the condition cannot be solved directly with the MATLAB LMI toolbox. Based on Theorem 3.1 and the Schur complement, strict LMI conditions are given in the next theorem.
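For reference, the Schur complement fact invoked here states that, for a symmetric block matrix with \(C < 0\), \(\begin{bmatrix} A & B \\ B^{T} & C \end{bmatrix} < 0\) if and only if \(A - BC^{-1}B^{T} < 0\). The sketch below checks this equivalence numerically for hypothetical blocks, with \(B\) a single column (named `b` in the code) and \(C\) a negative scalar, testing negative definiteness by attempting a Cholesky factorization of the negated matrix.

```python
import math

def is_neg_def(M, tol=1e-12):
    """Return True if the symmetric matrix M is negative definite,
    by attempting a Cholesky factorization -M = L L^T."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = -M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= tol:          # nonpositive pivot: -M is not positive definite
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True

def assemble(A, b, c):
    """Build the symmetric block matrix [[A, b], [b^T, c]] (b a column, c a scalar)."""
    return [A[i] + [b[i]] for i in range(len(b))] + [b + [c]]

def schur_neg_def(A, b, c):
    """Schur-complement test: c < 0 and A - b b^T / c < 0."""
    if c >= 0:
        return False
    S = [[A[i][j] - b[i] * b[j] / c for j in range(len(b))] for i in range(len(b))]
    return is_neg_def(S)

A = [[-2.0, 0.5], [0.5, -1.0]]   # hypothetical blocks
c = -1.0
b_small, b_large = [0.3, 0.2], [1.5, 0.0]
```

The two tests agree on both columns: the full matrix is negative definite for `b_small` and not for `b_large`, matching the sign of the reduced-size Schur complement. This size reduction is what turns nonlinear matrix terms into LMIs in Theorem 3.2.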

Theorem 3.2

Under Assumption 2.1, a synchronization law of the form (9) exists such that the Markovian jump synchronization error system (9) with time-varying delays is stochastically stable with \({H_{\infty}}\) performance level \(\gamma > 0\) in the sense of Definitions 2.1 and 2.2 if there exist matrices \({Y_{i}}\), \({\hat{V}_{i}}\) and positive definite matrices \({M_{i}}\) (\(i \in{\mathcal {S}}\)), \({\tilde{X}_{\iota}}\) (\({\iota = 1,2,3}\)), \({Q_{1}}\), \({Q_{2}}\) satisfying the following LMIs:

where

Proof

Pre-multiplying and post-multiplying (11) by the following diagonal matrix and its transpose, respectively,

where \({M_{i}} = P_{i}^{ - 1}\), and then applying the Schur complement and Lemma 2.3 with \({K_{i}}{M_{i}} = {Y_{i}}\), we obtain (29). This completes the proof. □

3.2 Sliding-mode control design

The objective now is to study reachability. In this section, an appropriate control law is constructed to drive the trajectories of system (1) onto the designed sliding surface \({S_{k}} ( {t,i} ) = 0\), with \({S_{k}} ( {t,i} )\) defined in (5), in finite time and to keep them on the surface thereafter.

Theorem 3.3

Suppose that the sliding function is given by (5), where \({K_{i}}\) and \({M_{i}}\) satisfy (29)–(30). Then the trajectories of the error system (9) can be driven onto the sliding surface \({S_{k}} ( {t,i} ) = 0\) in finite time and remain in sliding motion thereafter if the control is designed as follows:

where \({\rho_{i}}: = \max_{i \in{\mathcal {S}}} { ( {{\lambda_{\max}} ( {{D_{i}}D_{i}^{T}} )} )^{0.5}}\).
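Since \(( {{\lambda_{\max}} ( {{D_{i}}D_{i}^{T}} )} )^{0.5}\) is the spectral norm (largest singular value) of \({D_{i}}\), \({\rho_{i}}\) is the largest spectral norm over all modes. A minimal sketch computing it by power iteration, assuming hypothetical disturbance matrices (the example's \({D_{i}}\) are not reproduced in this text):

```python
import math

def lambda_max_psd(S, iters=500):
    """Largest eigenvalue of a symmetric positive semidefinite matrix S by power iteration.
    Assumes the all-ones start vector is not orthogonal to the dominant eigenvector."""
    n = len(S)
    v = [1.0] * n
    lam = 0.0
    for _ in range(iters):
        w = [sum(S[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(c * c for c in w))
        if norm == 0.0:
            return 0.0
        lam = norm / math.sqrt(sum(c * c for c in v))   # ||S v|| / ||v||
        v = [c / norm for c in w]
    return lam

def rho(D_list):
    """max over modes of sqrt(lambda_max(D_i D_i^T)), i.e. the largest spectral norm of the D_i."""
    best = 0.0
    for D in D_list:
        rows, cols = len(D), len(D[0])
        DDt = [[sum(D[i][k] * D[j][k] for k in range(cols)) for j in range(rows)]
               for i in range(rows)]
        best = max(best, math.sqrt(lambda_max_psd(DDt)))
    return best

D1 = [[1.0, 0.0], [0.0, 2.0]]   # hypothetical D_1, spectral norm 2
D2 = [[3.0], [4.0]]             # hypothetical D_2, spectral norm 5
```

Power iteration is used here only because it keeps the sketch dependency-free; a dense symmetric eigenvalue routine would serve the same purpose.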

Proof

Choose the following Lyapunov function:

Calculating the time derivative of the sliding-mode surface \({S_{k}} ( {t,i} )\) along the trajectory of (4), we obtain

Substituting (31) into (33) yields

Then, letting \({S_{k}} ( {{t_{0}} = 0,{r_{0}}} ) = {S_{k0}}\) and integrating from 0 to t, one obtains

Since the left-hand side of (35) is nonnegative, \(W ( {{S_{k}} ( {t,i} )} )\) reaches zero in finite time for each mode \(i \in{\mathcal {S}} = \{ {1,2, \ldots,m} \}\), and the reaching time \({t^{*} }\) is estimated by

Therefore, (36) shows that the system trajectories are driven onto the predefined sliding surface in finite time; in other words, the sliding surface \({S_{k}} ( {t,i} ) = 0\) is reachable. □
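The finite-time argument can be illustrated with a generic scalar example; this is not the bound (36) itself, which is not reproduced here. Under the ideal reaching law \(\dot{S} = -\eta \operatorname{sign}(S)\) with \(\eta > 0\), the function \(W = \frac{1}{2}S^{2}\) satisfies \(\dot{W} = -\eta |S| = -\eta\sqrt{2W}\), so \(S\) reaches zero at \(t^{*} = |S(0)|/\eta\). The sketch confirms this by forward-Euler simulation.

```python
def reaching_time(s0, eta, dt=1e-4, t_max=10.0):
    """Forward-Euler simulation of dS/dt = -eta * sign(S) for scalar S.
    Returns the first time |S| is within one step (eta * dt) of zero, or None."""
    s, t = s0, 0.0
    while t < t_max:
        if abs(s) <= eta * dt:
            return t
        s -= eta * dt * (1.0 if s > 0 else -1.0)
        t += dt
    return None

t_star = reaching_time(2.0, 4.0)   # analytic value |S(0)| / eta = 0.5
```

The simulated time agrees with \(|S(0)|/\eta\) up to one step size, while a horizon shorter than \(|S(0)|/\eta\) returns `None`.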

Remark 2

To alleviate the chattering caused by \(\operatorname{sign} ( {B_{i}^{T}V_{i}^{T}S ( {k,i} )} )\), a boundary layer is introduced around each switching surface by replacing \(\operatorname{sign} ( {B_{i}^{T}V_{i}^{T}S ( {k,i} )} )\) in (31) with a saturation function. Hence, the control law (31) can be rewritten as

The jth element of \(\operatorname{sat} ( B_{i}^{T}V_{i}^{T}S ( {k,i} ) / \kappa )\) is described as

where \(j = 1,2, \ldots,m\), and \({\kappa_{j}} > 0\) measures the thickness of the boundary layer around the jth switching surface.
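The displayed definition is not reproduced in this text; the sketch below assumes the standard boundary-layer saturation, equal to \(y_{j}/\kappa_{j}\) when \(|y_{j}| \le \kappa_{j}\) and to \(\operatorname{sign}(y_{j})\) otherwise, and places it next to the componentwise sign function it replaces.

```python
def sign(y):
    """Componentwise sign function of the discontinuous control law."""
    return [(v > 0) - (v < 0) for v in y]

def sat(y, kappa):
    """Componentwise saturation with boundary-layer thickness kappa_j > 0:
    y_j / kappa_j inside the layer |y_j| <= kappa_j, sign(y_j) outside it."""
    out = []
    for yj, kj in zip(y, kappa):
        if kj <= 0:
            raise ValueError("boundary-layer thickness must be positive")
        out.append(yj / kj if abs(yj) <= kj else (1.0 if yj > 0 else -1.0))
    return out
```

Outside the layer the two functions coincide, while inside it the saturation varies continuously, so small values of \(B_{i}^{T}V_{i}^{T}S\) produce small control corrections instead of full switching. The price is that trajectories are only guaranteed to stay within the layer rather than exactly on the surface.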

4 Example

In this section, an example is provided to demonstrate that the proposed method is effective.

Example 1

Consider the complex dynamical network (1) with three nodes and mode set \({\mathcal {S}} = \{ {1,2} \}\). The relevant parameters are as follows.

Mode 1:

Mode 2:

In addition, the transition rate matrix is given by .

And the outer coupling matrix is given as

The nonlinear function \(f ( {{x_{i}} ( t )} )\) is taken as

Let us take the matrices \({U_{1}}\) and \({U_{2}}\) as follows: , .

The time-varying delay is chosen as \(\tau ( t ) = 0.9 + 0.01\sin ( {40t} )\); accordingly, \(\tau = 0.91\) and \(\bar{\tau}= 0.4\). The coupling strengths are \({\sigma_{1}} = 0.2\) and \({\sigma_{2}} = 0.5\). The free-weighting matrix coefficient is \(\varepsilon = 0.1\), and \(\alpha = 0.6\). The exogenous input is \(\omega ( t ) = \frac{1}{{1 + {t^{2}}}}\).
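The delay bounds quoted above follow from \(\tau ( t ) = 0.9 + 0.01\sin ( {40t} )\) and \(\dot{\tau} ( t ) = 0.4\cos ( {40t} )\): the maximum delay is \(0.9 + 0.01 = 0.91\) and the maximum delay derivative is \(0.01 \times 40 = 0.4\). The short script below confirms these values by sampling one period; it assumes that \(\bar{\tau}\) bounds the delay derivative from above, which is the usual role of this constant but is not stated explicitly in this text.

```python
import math

def tau(t):
    """Time-varying delay of the example."""
    return 0.9 + 0.01 * math.sin(40.0 * t)

def tau_dot(t):
    """Derivative of tau(t): 0.01 * 40 * cos(40 t) = 0.4 cos(40 t)."""
    return 0.4 * math.cos(40.0 * t)

period = 2.0 * math.pi / 40.0                        # tau(t) is periodic with this period
grid = [k * period / 10000 for k in range(10001)]    # fine sampling of one period
tau_upper = max(tau(t) for t in grid)                # 0.91, at 40 t = pi / 2
tau_lower = min(tau(t) for t in grid)                # 0.89, so the delay stays positive
tau_bar = max(tau_dot(t) for t in grid)              # 0.4, at t = 0
```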

Solving the LMIs (29) in Theorem 3.2 with the MATLAB LMI toolbox yields \(\gamma = 8.4702 \times 10^{4}\).

The gain matrices \({K_{1}}\) and \({K_{2}}\) are then obtained from \({K_{i}} = {Y_{i}}M_{i}^{ - 1}\):

Moreover, setting \({V_{i}} = {\hat{V}_{i}}\) in (5), the switching surface functions are computed as

where \(k = 1,2,3\).
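Since Theorem 3.2 is solved in the variables \({Y_{i}}\) and \({M_{i}}\) with the change of variables \({K_{i}}{M_{i}} = {Y_{i}}\), the gains above are recovered as \({K_{i}} = {Y_{i}}M_{i}^{ - 1}\). A minimal sketch of that step, assuming a hypothetical \(1 \times 2\) \({Y_{i}}\) and \(2 \times 2\) \({M_{i}}\) (the solver output is not reproduced in this text):

```python
from fractions import Fraction

def inv2(M):
    """Exact inverse of a nonsingular 2 x 2 matrix."""
    (a, b), (c, d) = M
    det = Fraction(a) * d - Fraction(b) * c
    if det == 0:
        raise ValueError("M must be nonsingular")
    return [[d / det, -b / det], [-c / det, a / det]]

def gain_from_lmi(Y, M):
    """Recover K from the change of variables K M = Y, i.e. K = Y M^{-1} (2 x 2 M)."""
    Minv = inv2(M)
    return [[sum(Fraction(Y[r][k]) * Minv[k][c] for k in range(2)) for c in range(2)]
            for r in range(len(Y))]

M = [[2, 1], [1, 1]]   # hypothetical positive definite M_i
Y = [[3, 1]]           # hypothetical Y_i
K = gain_from_lmi(Y, M)
KM = [[sum(K[r][k] * M[k][c] for k in range(2)) for c in range(2)] for r in range(len(K))]
```

Because \({M_{i}} = P_{i}^{ - 1}\) is positive definite, it is always invertible, so this recovery step is well defined whenever the LMIs are feasible.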

The simulation results are presented in Figs. 1–4. It can be seen from Figs. 1 and 2 that the synchronization error converges to zero in mode 1 and mode 2, respectively. Figures 3 and 4 demonstrate the sliding-mode surface function in mode 1 and mode 2, respectively.

5 Conclusion

In this paper, a sliding-mode design method has been proposed to solve the \({H_{\infty}}\) synchronization problem for complex dynamical network systems with Markovian jump parameters and time-varying delays, and a novel integral sliding-mode controller has been designed. Based on Lyapunov stability theory, it has been shown that, under the sliding-mode control, the Markovian jump complex dynamical network achieves synchronization with guaranteed \({H_{\infty}}\) performance. An example has been given to show the effectiveness of the proposed method.

It would be interesting to extend the results obtained to multiple complex dynamical networks with multiple coupling delays. This topic will be considered in future work.

References

Barabasi, A.L., Albert, R., Jeong, H.: Scale-free characteristics of random networks: the topology of the world wide web. Physica A 281(1), 69–77 (2000)

Watts, D.J., Strogatz, S.H.: Collective dynamics of ‘small-world’ networks. Nature 393, 440–442 (1998)

Barabasi, A.L., Albert, R.: Emergence of scaling in random networks. Science 286, 509–512 (1999)

Albert, R., Jeong, H., Barabasi, A.L.: Diameter of the world wide web. Nature 401, 130–131 (1999)

Strogatz, S.H.: Exploring complex networks. Nature 410, 268–276 (2001)

Wang, X.F., Chen, G.R.: Synchronization in scale-free dynamical networks: robustness and fragility. IEEE Trans. Circuits Syst. I 49, 54–56 (2002)

Peng, X., Wu, H.Q., Song, K., Shi, J.X.: Global synchronization in finite time for fractional-order neural networks with discontinuous activations and time delays. Neural Netw. 94, 46–54 (2017)

Liu, M., Wu, H.Q.: Stochastic finite-time synchronization for discontinuous semi-Markovian switching neural networks with time delays and noise disturbance. Neurocomputing 310, 246–264 (2018)

Peng, X., Wu, H.Q., Cao, J.D.: Global nonfragile synchronization in finite time for fractional-order discontinuous neural networks with nonlinear growth activations. IEEE Trans. Neural Netw. Learn. Syst. (2018, in press). https://doi.org/10.1109/TNNLS.2018.2876726

Zhao, W., Wu, H.Q.: Fixed-time synchronization of semi-Markovian jumping neural networks with time-varying delays. Adv. Differ. Equ. 2018, 213 (2018)

Ma, Y.C., Ma, N.N., Chen, L.: Synchronization criteria for singular complex networks with Markovian jump and time-varying delays via pinning control. Nonlinear Anal. Hybrid Syst. 28, 85–99 (2018)

Zheng, S.: Projective synchronization in a driven-response dynamical network with coupling time-varying delays. Nonlinear Dyn. 63(3), 1429–1438 (2012)

Zheng, S.: Projective synchronization analysis of drive-response coupled dynamical network with multiple time-varying delays via impulsive control. Abstr. Appl. Anal. 2014, Article ID581971 (2014)

Ma, Y.C., Zheng, Y.Q.: Synchronization of continuous-time Markovian jumping singular complex networks with mixed mode-dependent time delays. Neurocomputing 156, 52–59 (2015)

Huang, X.J., Ma, Y.C.: Finite-time \({H_{\infty}}\) sampled-data synchronization for Markovian jump complex networks with time-varying delays. Neurocomputing 296, 82–99 (2018)

Wang, Z.B., Wu, H.Q.: Global synchronization in fixed time for semi-Markovian switching complex dynamical networks with hybrid coupling and time-varying delays. Nonlinear Dyn. (2018, in press). https://doi.org/10.1007/s11071-018-4675-2

Rakkiyappan, R., Velmurugan, G., Nicholas, G.J., Selvamani, R.: Exponential synchronization of Lur’e complex dynamical networks with uncertain inner coupling and pinning impulsive control. Appl. Math. Comput. 307, 217–231 (2017)

Song, Q., Cao, J., Liu, F.: Pinning-controlled synchronization of hybrid-coupled complex dynamical networks with mixed time-delays. Int. J. Robust Nonlinear Control 22(6), 690–706 (2012)

Lee, T.H., Ma, Q., Xu, S., Ju, H.P.: Pinning control for cluster synchronization of complex dynamical networks with semi-Markovian jump topology. Int. J. Control 88(6), 1223–1235 (2015)

Yang, X.S., Cao, J.D.: Synchronization of complex networks with coupling delay via pinning control. IMA J. Math. Control Inf. 34(2), 579–596 (2017)

Feng, J.W., Sun, S.H., Chen, X., Zhao, Y., Wang, J.Y.: The synchronization of general complex dynamical network via pinning control. Nonlinear Dyn. 67(2), 1623–1633 (2012)

Lee, T.H., Wu, Z.G., Ju, H.P.: Synchronization of a complex dynamical network with coupling time-varying delays via sampled-data control. Appl. Math. Comput. 219(3), 1354–1366 (2012)

Liu, X.H., Xi, H.S.: Synchronization of neutral complex dynamical networks with Markovian switching based on sampled-data controller. Neurocomputing 139(2), 163–179 (2014)

Zhao, H., Li, L.X., Peng, H.P., Xiao, J.H., Yang, Y.X., Zhang, M.W.: Impulsive control for synchronization and parameters identification of uncertain multi-links complex network. Nonlinear Dyn. 83(3), 1437–1451 (2016)

Dai, A.D., Zhou, W.N., Feng, J.W., Xu, S.B.: Exponential synchronization of the coupling delayed switching complex dynamical networks via impulsive control. Adv. Differ. Equ. 2013, 195 (2013)

Syed, A.M., Yogambigai, J.: Passivity-based synchronization of stochastic switched complex dynamical networks with additive time-varying delays via impulsive control. Neurocomputing 273(17), 209–221 (2018)

Khanzadeh, A., Pourgholi, M.: Fixed-time sliding mode controller design for synchronization of complex dynamical networks. Nonlinear Dyn. 88(4), 2637–2649 (2017)

Syed, A.M., Yogambigai, J., Cao, J.D.: Synchronization of master–slave Markovian switching complex dynamical networks with time-varying delays in nonlinear function via sliding mode control. Acta Math. Sci. 37(2), 368–384 (2017)

Jin, X.Z., Ye, D., Wang, D.: Robust synchronization of a class of complex networks with nonlinear couplings via a sliding mode control method. In: 2012 24th Chinese Control and Decision Conference, pp. 1811–1815 (2012)

Wang, Z.B., Wu, H.Q.: Projective synchronization in fixed time for complex dynamical networks with nonidentical nodes via second-order sliding mode control strategy. J. Franklin Inst. 355, 7306–7334 (2018)

Han, Y.Q., Kao, Y.G., Gao, C.C.: Robust sliding mode control for uncertain discrete singular systems with time-varying delays and external disturbances. Automatica 75, 210–216 (2017)

Karimi, H.A.: A sliding mode approach to \({H_{\infty}}\) synchronization of master–slave time-delay systems with Markovian jumping parameters and nonlinear uncertainties. J. Franklin Inst. 349, 1480–1496 (2012)

Wu, L.G., Zheng, W.X.: Passivity-based sliding mode control of uncertain singular time-delay systems. Automatica 45, 2120–2127 (2009)

Wu, L.G., Gao, Y.B., Liu, J.X., Yi, H.Y.: Event-triggered sliding mode control of stochastic systems via output feedback. Automatica 82, 79–92 (2017)

Liu, X.H., Vargas, A.N., Yu, X.H., Xu, L.: Stabilizing two-dimensional stochastic systems through sliding mode control. J. Franklin Inst. 354(14), 5813–5824 (2017)

Han, Y.Q., Kao, Y.G., Gao, C.C.: Robust sliding mode control for uncertain discrete singular systems with time-varying delays. Int. J. Syst. Sci. 48(4), 818–827 (2017)

Liu, Y.F., Ma, Y.C., Wang, Y.N.: Reliable finite-time sliding-mode control for singular time-delay system with sensor faults and randomly occurring nonlinearities. Appl. Math. Comput. 320, 341–357 (2018)

Liu, L.P., Fu, Z.M., Cai, X.S., Song, X.N.: Non-fragile sliding mode control of discrete singular systems. Commun. Nonlinear Sci. Numer. Simul. 18(3), 735–743 (2013)

Feng, Z.G., Shi, P.: Sliding mode control of singular stochastic Markov jump systems. IEEE Trans. Autom. Control 62(8), 4266–4273 (2017)

Zhu, Q., Yu, X.H., Song, A.G., Fei, S.M., Cao, Z.Q., Yang, Y.Q.: On sliding mode control of single input Markovian jump systems. Automatica 50(11), 2897–2904 (2014)

Zhang, Q.L., Li, L., Yan, X.G., Spurgeon, S.K.: Sliding mode control for singular stochastic Markovian jump systems with uncertainties. Automatica 79, 27–34 (2017)

Zhang, D., Zhang, Q.L.: Sliding mode control for T–S fuzzy singular semi-Markovian jump system. Nonlinear Anal. Hybrid Syst. 30, 72–91 (2018)

Cheng, C.C., Chen, S.H.: Adaptive sliding mode controller design based on T–S fuzzy system models. Automatica 42(6), 1005–1010 (2006)

Jing, Y.H., Yang, G.H.: Fuzzy adaptive quantized fault-tolerant control of strict-feedback nonlinear systems with mismatched external disturbance. IEEE Trans. Syst. Man Cybern. Syst. (2018, in press). https://doi.org/10.1109/TSMC.2018.2867100

Ma, Y.C., Ma, N.N.: Finite-time \({H_{\infty}}\) synchronization for complex dynamical networks with mixed mode-dependent time delays. Neurocomputing 218, 223–233 (2016)

Utkin, V.I.: Variable structure systems with sliding modes. IEEE Trans. Autom. Control 22(2), 212–222 (1977)

Chen, B., Niu, Y., Zou, Y.: Sliding mode control for stochastic Markovian jumping systems with incomplete transition rate. IET Control Theory Appl. 7(10), 1330–1338 (2013)

Funding

This paper was supported in part by the Applied Fundamental Research (Major frontier projects) of Sichuan Province of China (Grant No. 2016JC0314). The authors would like to thank the editor and anonymous reviewers for their many helpful comments and suggestions to improve the quality of this paper.

Author information


Contributions

All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ma, N., Liu, Z. & Chen, L. Sliding-mode \({H_{\infty}}\) synchronization for complex dynamical network systems with Markovian jump parameters and time-varying delays. Adv Differ Equ 2019, 48 (2019). https://doi.org/10.1186/s13662-019-1987-6
