Abstract

This paper deals with the problem of estimating the unknown parameters of a long-memory process by the maximum likelihood method. The mean-square and almost sure convergence of these estimators, based on discrete-time observations, are established. Using Malliavin calculus, we prove the asymptotic normality of these estimators. Simulation studies confirm the theoretical findings and show that the maximum likelihood technique can effectively reduce the mean-square error of our estimators.


1 Introduction

Brownian motion has been widely used in the Black–Scholes option-pricing framework to model asset returns. Consequently, many papers have focused on the valuation of options when the underlying stock price is modeled by a geometric Brownian motion. In this setting, the one-dimensional distributions of the stock price are log-normal and the log-returns are independent normal random variables. However, empirical studies show that the log-returns of financial assets often exhibit self-similarity and long-range dependence (see Casas and Gao [1]; Los and Yu [2]). To model these properties, one can replace the driving standard Brownian motion by a fractal process, namely fractional Brownian motion (hereafter fBm) (see, for example, Willinger et al. [3]). Since fBm is not a semimartingale (except in the Brownian motion case \(H=\frac{1}{2}\)), the usual Itô calculus cannot be used to analyze it. Fortunately, research in this direction was revived by new developments in stochastic analysis based on the Wick product (see Duncan et al. [4]; Hu and Øksendal [5]), namely the fractional Itô integral. Since then, many authors have applied fBm in finance in a broad range of settings, for example Elliott and van der Hoek [6]; Elliott and Chan [7]; Guasoni [8]; Rostek [9]; Azmoodeh [10].

When fBm is used to describe the fluctuations of financial phenomena, it is important to identify the parameters of the stochastic models driven by fBm, since all such models involve unknown parameters or functions that must be estimated from observations of the process. Parameter estimation for processes driven by fBm is therefore a crucial step in all applications, particularly in applied finance. For example, a key practical problem in applying option-pricing formulas in the fractional Black–Scholes market is how to obtain the unknown parameters of geometric fractional Brownian motion (hereafter gfBm). Moreover, applying these models with long memory and self-similarity requires efficient and accurate synthesis of discrete gfBm. Although fBm has stationary self-similar increments, these increments are not independent and the process is not Markovian. This means that state-space models and Kalman filter estimators cannot be applied directly to the parameters of processes driven by fBm. However, several methods (such as the maximum likelihood approach and the least squares technique) are available in the literature for problems of this kind. For example, several contributions have been reported on parameter estimation for continuous-time models driven by fBm (see Kleptsyna et al. [11]). In particular, maximum likelihood estimation (hereafter MLE) of the drift parameter has been studied extensively (see, for example, Kleptsyna and Le Breton [12]; Tudor and Viens [13]). A similar problem for stochastic processes driven by fractional noise in the path-dependent case was investigated in Cialenco et al. [14]. Hu and Nualart [15] studied least squares estimation (hereafter LSE) for the fractional Ornstein–Uhlenbeck process and established the asymptotic normality of the LSE using Malliavin calculus.
More recently, there has been increasing interest in the asymptotic properties of the LSE of the drift parameter for univariate fractional processes (see Azmoodeh and Morlanes [16]; Cheng et al. [17]). Moreover, Xiao and Yu [18, 19] considered the LSE in fractional Vasicek models in the stationary, explosive and null recurrent cases. For a general theory of finite-dimensional diffusions driven by fractional noise, including the Girsanov theorem and results on statistical inference, see also the monograph by Prakasa Rao [20].

All the papers mentioned above focus on parameter estimation for stochastic models driven by fBm in continuous time. In practice, however, as noted in Tudor and Viens [13], the process can usually be observed only at discrete times (e.g., stock prices recorded once a day). The assumption of continuous-time observation is therefore unrealistic: a process cannot be observed continuously over an interval, owing, for instance, to the limited precision of measuring instruments or the unavailability of observations at every point in time. Statistical inference for discretely observed processes is thus of great practical interest and, at the same time, a challenging problem (see, for example, Xiao et al. [21]; Long et al. [22]; Liu and Song [23]; Barboza and Viens [24]; El Onsy et al. [25]; Bajja et al. [26]). In pioneering work, Fox and Taqqu [27] used the Fourier method to estimate the long-memory coefficient of a stationary long-memory process. Dahlhaus [28] estimated the unknown parameters of fractional Gaussian processes based on the Whittle function in the frequency domain. Tsai and Chan [29] considered a general-order differential equation driven by fBm and its derivatives, and estimated the continuous-time parameters from possibly irregularly spaced data by maximizing the Whittle likelihood. Tsai and Chan [30] showed that the parameters of their model are identifiable and that the Whittle estimator has desirable asymptotic properties. Bhansali et al. [31] estimated the long-memory parameter in the stationary region by frequency-domain methods. Bertin et al. [32, 33] constructed maximum likelihood estimators for fBm with drift and for the Rosenblatt process with drift.
Recently, the parameter estimation problem for the discretely observed fractional Ornstein–Uhlenbeck process was studied by Brouste and Iacus [34] and Es-Sebaiy [35]. Kim and Nordman [36] studied a frequency-domain bootstrap for approximating the distribution of Whittle estimators for a class of long-memory linear processes. Bardet and Tudor [37] developed the theory of Whittle estimation of the long-memory parameter for non-Gaussian stochastic processes. Xiao et al. [38] used quadratic variations to estimate the unknown parameters of gfBm from discrete observations. In this paper, inspired by Hu et al. [39] and Bertin et al. [32], we estimate the unknown parameters of a special long-memory process, namely gfBm, from discrete-time data by MLE. We choose the maximum likelihood technique for two reasons: it has been applied successfully in a wide range of settings, and it has well-documented favorable properties, such as being consistent and asymptotically unbiased, efficient and normally distributed about the true parameter values. The main contributions of this paper are to derive the estimators for gfBm and to prove their asymptotic properties. To this end, we first construct estimators for gfBm observed at discrete time points. We then present the asymptotic results. Finally, we perform simulations to study the asymptotic behavior of these estimators numerically.

The remainder of this paper is organized as follows. Section 2 recalls some basic notions of Malliavin calculus that will be used in the forthcoming sections. Section 3 derives the estimators for gfBm. Section 4 deals with the mean-square convergence, the almost sure convergence and the asymptotic normality of these estimators. In Sect. 5, we give simulation examples to show the performance of these estimators, using the standard error as a validation criterion. Finally, Sect. 6 concludes. To streamline the presentation, some technical details are deferred to the Appendix.

2 Preliminaries

In this section we recall some basic results that will be used in this paper and also fix some notations. We refer to Nualart [40] or Hu and Nualart [15] for further reading.

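The fBm \(\{B_{t}^{H},t\in\mathbb{R}\}\) with Hurst parameter \(H\in(0,1)\) is a zero-mean Gaussian process with covariance

\[R_{H}(t,s)=\mathbb{E}\big(B_{t}^{H}B_{s}^{H}\big)=\frac{1}{2}\big(\vert t\vert^{2H}+\vert s\vert^{2H}-\vert t-s\vert^{2H}\big).\tag{1}\]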

We assume that \(B^{H}\) is defined on a complete probability space \((\Omega,\mathcal{A},P)\) such that \(\mathcal{A}\) is generated by \(B^{H}\). Fix a time interval \([0,T]\). Denote by \(\mathcal{E}\) the set of real-valued step functions on \([0,T]\) and let \(\mathcal{H}\) be the Hilbert space defined as the closure of \(\mathcal{E}\) with respect to the scalar product

\[\langle\mathbf{1}_{[0,t]},\mathbf{1}_{[0,s]}\rangle_{\mathcal{H}}=R_{H}(t,s),\]

where \(R_{H}\) is the covariance function of the fBm, given in (1). The mapping \(\mathbf{1}_{[0,t]}\longmapsto B_{t}^{H}\) can be extended to a linear isometry between \(\mathcal{H}\) and the Gaussian space \(\mathcal{H}_{1}\) spanned by \(B^{H}\). We denote this isometry by \(\varphi\longmapsto B^{H}(\varphi)\). For \(H=\frac{1}{2}\) we have \(\mathcal{H}= L^{2}([0,T])\), whereas for \(H>\frac{1}{2}\) we have \(L^{\frac{1}{H}} ([0,T])\subset\mathcal{H}\) and for \(\varphi, \psi\in L^{\frac{1}{H}} ([0,T])\) we have

\[\langle\varphi,\psi\rangle_{\mathcal{H}}=\alpha_{H}\int_{0}^{T}\int_{0}^{T}\varphi(s)\psi(u)\vert s-u\vert^{2H-2}\,ds\,du,\tag{2}\]

where \(\alpha_{H}=H(2H-1)\).
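As a short consistency check between (1) and (2) (our own computation, not part of the original argument), take \(H>\frac{1}{2}\), \(\varphi=\mathbf{1}_{[0,t]}\) and \(\psi=\mathbf{1}_{[0,s]}\) with \(0\leq s,t\leq T\). Put \(F(x)=\vert x\vert^{2H}/(2H(2H-1))\), so that F is even, \(F(0)=0\), \(F'\) is continuous and \(F''(x)=\vert x\vert^{2H-2}\) for \(x\neq0\). Integrating twice gives

\[
\int_{0}^{t}\!\int_{0}^{s}\vert u-v\vert^{2H-2}\,dv\,du
=\int_{0}^{t}\big(F'(u)-F'(u-s)\big)\,du
=F(t)+F(s)-F(t-s),
\]

and since \(\alpha_{H}/(2H(2H-1))=\frac{1}{2}\),

\[
\alpha_{H}\big(F(t)+F(s)-F(t-s)\big)=\frac{1}{2}\big(t^{2H}+s^{2H}-\vert t-s\vert^{2H}\big)=R_{H}(t,s),
\]

so (2) reproduces the scalar product of indicator functions defined through (1).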

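Let \(\mathcal{S}\) be the space of smooth cylindrical random variables of the form

\[F=f\big(B^{H}(\varphi_{1}),\ldots,B^{H}(\varphi_{n})\big),\tag{3}\]

where \(n\geq1\), \(\varphi_{1},\ldots,\varphi_{n}\in\mathcal{H}\) and \(f\in C_{b}^{\infty}(\mathbb{R}^{n})\) (f and all its partial derivatives are bounded). For a random variable F of the form (3), we define its Malliavin derivative as the \(\mathcal{H}\)-valued random variable

\[DF=\sum_{i=1}^{n}\frac{\partial f}{\partial x_{i}}\big(B^{H}(\varphi_{1}),\ldots,B^{H}(\varphi_{n})\big)\varphi_{i}.\tag{4}\]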

By iteration, one can define the mth derivative \(D^{m}F\), which is an element of \(L^{2}(\Omega;\mathcal{H}^{\otimes m})\), for every \(m\geq2\). For \(m\geq1\), \({\mathbb{D}}^{m,2}\) denotes the closure of \(\mathcal{S}\) with respect to the norm \(\Vert \cdot \Vert _{m,2}\) defined by

\[\Vert F\Vert_{m,2}^{2}=\mathbb{E}\big(F^{2}\big)+\sum_{i=1}^{m}\mathbb{E}\big(\Vert D^{i}F\Vert^{2}_{\mathcal{H}^{\otimes i}}\big).\]

We shall make use of the following theorem for multiple stochastic integrals (see Nualart and Peccati [41] or Nualart and Ortiz-Latorre [42]).

Theorem 2.1

Let \(\{F_{n}\}_{n\geq1}\) be a sequence of random variables in the pth Wiener chaos, \(p\geq2\), such that \(\lim_{n\rightarrow\infty}\mathbb{E} ( F_{n}^{2} ) =\sigma^{2}\). Then the following conditions are equivalent:

(i) \(F_{n}\) converges in law to \(N(0, \sigma^{2})\) as n tends to infinity;

(ii) \(\Vert DF_{n}\Vert ^{2}_{\mathcal{H}}\) converges in \(L^{2}\) to a constant as n tends to infinity.

Remark 2.2

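In Nualart and Ortiz-Latorre [42], it is proved that (i) is equivalent to the fact that \(\Vert DF_{n}\Vert ^{2}_{\mathcal{H}}\) converges in \(L^{2}\) to \(p\sigma^{2}\) as n tends to infinity. If we assume (ii), the limit of \(\Vert DF_{n}\Vert ^{2}_{\mathcal{H}}\) must be equal to \(p\sigma^{2}\) because, for random variables in the pth Wiener chaos,

\[\mathbb{E}\big(\Vert DF_{n}\Vert^{2}_{\mathcal{H}}\big)=p\,\mathbb{E}\big(F_{n}^{2}\big)\longrightarrow p\sigma^{2}\quad\text{as } n\to\infty.\]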

3 Maximum likelihood estimators

In the literature, it is common to model the price \(S_{t}\) of the underlying asset of a derivative by

\[dS_{t}=\mu S_{t}\,dt+\sigma S_{t}\,dB_{t}^{H},\tag{5}\]

where μ, σ and H are constants to be estimated, and \((B_{t}^{H}, t\ge0) \) is an fBm with Hurst parameter \(H\in( \frac{1}{2}, 1)\). The process (5) has received much attention owing to its diverse applications in physical, biological and mathematical finance models, which makes parameter estimation for it an important problem. Using Wick integration, Hu and Øksendal [5] showed that the solution of (5) is

\[S_{t}=S_{0}\exp\Big(\mu t-\frac{1}{2}\sigma^{2}t^{2H}+\sigma B_{t}^{H}\Big).\tag{6}\]

Hence, estimating the parameters in (5) is equivalent to estimating the parameters of the model

\[\ln S_{t}-\ln S_{0}=\mu t-\frac{1}{2}\sigma^{2}t^{2H}+\sigma B_{t}^{H}.\tag{7}\]

Let \(\mu=a\), \(-\frac{1}{2}\sigma^{2}=b\) and \(\sigma=c\). We then consider the general model

\[Y_{t}=at+bt^{2H}+cB_{t}^{H},\quad t\geq0,\tag{8}\]

where, for gfBm, \(Y_{t}=\ln S_{t}-\ln S_{0}\).

We assume that the process is observed at discrete time instants \((t_{1}, t_{2}, \ldots, t_{N})\), which is the natural situation in practice. We thus have a sample of discrete observations and seek to estimate the parameters of the process (8) that generated it. For stochastic processes driven by Brownian motion, this problem has been well studied. The Markov structure of Brownian motion is essential for most of the methods used, so studying this problem for non-Markovian models is challenging. On the other hand, estimation techniques for Gaussian processes have been developed quite extensively, and some of them work well for estimating the unknown parameters of the process (8). We do not use the likelihood given by the Girsanov transformation (Cialenco et al. [14]) for two reasons. First, the Girsanov transformation gives the likelihood for continuous observations of the process. In practice we have discrete observations, so the integrals appearing in the likelihood would have to be approximated by discrete analogs; this requires the time between observations to be small, which is not always the case. Second, the Girsanov transformation gives the likelihood as a function of a and b and provides no insight into estimating H, which in most practical situations is unknown. This differs from the classical case, where \(H = 1/2\) is known. Hence, although the Girsanov transformation is theoretically interesting, it does not seem practical for parameter estimation for the process (8). Consequently, our technique is inspired by Hu et al. [39], which appears well suited to estimating (8). To simplify notation, we assume \(t_{k}=kh\), \(k=1, 2, \ldots, N\), for some fixed length \(h>0\).
The observation vector is thus \(\mathbf{Y}=(Y_{h},Y_{2h},\ldots,Y_{Nh})'\), where the superscript ′ denotes the transpose of a vector. We study the general model (8) for two reasons. First, it yields explicit estimators. Second, it is widely applied in various fields: the logarithm of the gfBm, which is popular in finance (see Hu and Øksendal [5]), is of the form (8), and model (8) can be regarded as an extension of gfBm. We now introduce the notation

\[\mathbf{Y}=a\,\mathbf{t}+b\,\mathbf{t}^{2H}+c\,\mathbf{B}_{t}^{H},\tag{9}\]

where \(\mathbf{t}=(h, 2h, \ldots, Nh)'\), \(\mathbf{t}^{2H}= ( h^{2H}, (2h)^{2H}, \ldots, (Nh)^{2H} ) '\) and \(\mathbf{B}_{t}^{H}=(B_{h} ^{H}, \ldots, B_{Nh}^{H})' \).

First, let us discuss the estimation of the Hurst parameter in the model (9). From Corollary 2 in Kubilius and Melichov [43], we immediately obtain the estimator of H from the observation Y:

where \(\lfloor z\rfloor\) denotes the greatest integer not exceeding z.

Quadratic variations thus yield a simple estimator of the Hurst parameter. Moreover, by the same argument as in Kubilius and Melichov [43], the estimator Ĥ defined by (10) is a strongly consistent estimator of H as N goes to infinity. The problem of estimating the Hurst parameter of fractional processes has been studied extensively (see Shen et al. [44]; Kubilius and Mishura [45]; Salomón and Fort [46]). Therefore, throughout this paper, we assume that the Hurst coefficient is known. The aim of this paper is thus to estimate the unknown parameters a, b and \(c^{2}\) from the observations \(Y_{ih}\), \(1\leq i \leq N\), for a fixed interval h, and to study their asymptotic properties as \(N\rightarrow\infty\).
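The exact form of (10) is not reproduced here. As an illustration only, the sketch below implements one common quadratic-variation estimator, the ratio of second-order variations at lags 1 and 2; we do not claim that it coincides with (10), and the function name `hurst_qv` is hypothetical. For fBm, the expected squared second-order increment at lag k equals \(c^{2}(kh)^{2H}(4-2^{2H})\), so the ratio of the two empirical means is close to \(2^{2H}\). Second-order differences remove the linear drift \(a t\) exactly, and for small h the contribution of \(b t^{2H}\) is typically small.

```python
import numpy as np


def hurst_qv(Y):
    """Estimate H from equally spaced observations Y_h, ..., Y_{Nh}.

    Hypothetical helper: ratio of second-order quadratic variations at
    lags 1 and 2 (a common variant; not necessarily estimator (10)).
    """
    Y = np.asarray(Y, dtype=float)
    d1 = Y[2:] - 2.0 * Y[1:-1] + Y[:-2]   # Y_{i+2} - 2 Y_{i+1} + Y_i
    d2 = Y[4:] - 2.0 * Y[2:-2] + Y[:-4]   # Y_{i+4} - 2 Y_{i+2} + Y_i
    # For fBm, E[d_k^2] = c^2 (k h)^{2H} (4 - 2^{2H}), so the ratio is about 2^{2H}.
    ratio = np.mean(d2 ** 2) / np.mean(d1 ** 2)
    return 0.5 * np.log2(ratio)
```

The estimator is scale invariant (multiplying Y by a constant leaves it unchanged), which is why c does not need to be known at this stage.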

Since the law of Y is Gaussian, the likelihood function of Y can be evaluated explicitly, and the estimators of a, b and \(c^{2}\) are given by the following theorem.

Theorem 3.1

The maximum likelihood estimators of a, b and \(c^{2}\) from the observation Y are given by

where

Proof

Since Y is Gaussian, the joint probability density function of Y is

Then we obtain the log-likelihood function

The MLEs of a, b and \(c^{2}\) are obtained by maximizing the log-likelihood function \(L(\mathbf{Y}; a, b, c^{2})\) jointly with respect to a, b and \(c^{2}\), that is, by setting its partial derivatives with respect to these parameters equal to zero. This yields (11), (12) and (13). □
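Because the log-likelihood is Gaussian, maximizing it over (a, b) for fixed \(c^{2}\) is a generalized least-squares problem, and the maximizing value of \(c^{2}\) is the \(\Gamma_{H}^{-1}\)-weighted residual sum of squares divided by N. The sketch below computes estimators of this form numerically. It assumes that H and h are known, takes \(\Gamma_{H}\) to be the covariance matrix of \((B_{h}^{H},\ldots,B_{Nh}^{H})\) given by (1), and solves linear systems instead of transcribing the closed forms (11)–(13); the function names `fbm_cov` and `mle_abc2` are ours.

```python
import numpy as np


def fbm_cov(N, h, H):
    """Gamma_H[i, j] = Cov(B^H_{ih}, B^H_{jh}) = R_H(ih, jh), i, j = 1, ..., N."""
    t = h * np.arange(1, N + 1)
    s, u = np.meshgrid(t, t, indexing="ij")
    return 0.5 * (s ** (2 * H) + u ** (2 * H) - np.abs(s - u) ** (2 * H))


def mle_abc2(Y, h, H):
    """Gaussian MLE of (a, b, c^2) in Y = a t + b t^{2H} + c B_t^H with H known."""
    Y = np.asarray(Y, dtype=float)
    N = len(Y)
    t = h * np.arange(1, N + 1)
    X = np.column_stack([t, t ** (2 * H)])       # columns: t and t^{2H}
    G = fbm_cov(N, h, H)
    GiX = np.linalg.solve(G, X)                  # Gamma_H^{-1} X
    GiY = np.linalg.solve(G, Y)                  # Gamma_H^{-1} Y
    # Normal equations of generalized least squares: (X' G^{-1} X) theta = X' G^{-1} Y
    a_hat, b_hat = np.linalg.solve(X.T @ GiX, X.T @ GiY)
    r = Y - a_hat * t - b_hat * t ** (2 * H)     # residual vector
    c2_hat = r @ np.linalg.solve(G, r) / N       # divisor N, as for an MLE
    return a_hat, b_hat, c2_hat


# Usage: one path of (9) simulated exactly with a Cholesky factor of Gamma_H.
rng = np.random.default_rng(1)
N, h, H, a, b, c = 500, 0.01, 0.7, 0.25, 0.45, 0.3
t = h * np.arange(1, N + 1)
L = np.linalg.cholesky(fbm_cov(N, h, H))
Y = a * t + b * t ** (2 * H) + c * (L @ rng.standard_normal(N))
a_hat, b_hat, c2_hat = mle_abc2(Y, h, H)
print(a_hat, b_hat, c2_hat)
```

Solving with `np.linalg.solve` rather than forming \(\Gamma_{H}^{-1}\) explicitly is a numerical choice; \(\Gamma_{H}\) becomes increasingly ill-conditioned as N grows.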

4 The asymptotic properties

In this section we discuss the \(L^{2}\)-consistency, the strong consistency and the asymptotic normality of the MLEs of a, b and \(c^{2}\).

First, we consider the \(L^{2}\)-consistency of (11) and (12).

Theorem 4.1

Both MLEs of a (defined by (11)) and b (defined by (12)) are unbiased and converge in mean square to a and b, respectively, as \(N\rightarrow\infty\).

Proof

Substituting Y by \(a \mathbf{t}+b \mathbf{t}^{2H} +c \mathbf{B}_{t} ^{H}\) in (11), we have

Then we have

Thus â is unbiased. On the other hand, using (15), we have

Denote

and denote by \(\Gamma_{ij}^{-1}\) the entry of the inverse matrix \(\Gamma^{-1}_{H}\) of \(\Gamma_{H}\). Then we shall use the following inequality (with \(x=\mathbf{N}=(1, 2, \dots, N)\)):

where \(\lambda_{\max}(\Gamma_{H})\) is the largest eigenvalue of the matrix \(\Gamma_{H}\). Since \(\Vert \mathbf{N}\Vert ^{2}_{2}=1^{2}+2^{2}+\cdots+N ^{2}=\frac{N(N+1)(2N+1)}{6}\), we have \(\Vert \mathbf{N}\Vert _{2}^{2}\approx\frac{1}{3} N^{3}\) for large N. On the other hand, by the Gershgorin circle theorem (see Golub and van Loan [47], Theorem 8.1.3, p. 395), we have

where C is a positive constant. Thus we have

Moreover, a similar computation, combined with Theorem 1 of Zhan [48], implies that

with Ĉ a positive constant.

Consequently, using (16) and (17), we see that \(\operatorname{Var}[ \hat{a}]\) converges to zero as \(N\rightarrow\infty\); since â is unbiased, â converges to a in mean square. The convergence of b̂ can be proved in the same way. □
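The two ingredients of this bound, the Rayleigh-quotient inequality \(x'\Gamma_{H}^{-1}x\geq\Vert x\Vert_{2}^{2}/\lambda_{\max}(\Gamma_{H})\) and the Gershgorin bound \(\lambda_{\max}(\Gamma_{H})\leq\max_{i}\sum_{j}\vert\Gamma_{ij}\vert\), can be checked numerically. The sketch below assumes that \(\Gamma_{H}\) is the covariance matrix of \((B_{h}^{H},\ldots,B_{Nh}^{H})\) obtained from (1); the helper names are hypothetical, and it only illustrates the inequalities for one choice of (N, h, H), without replacing the proof.

```python
import numpy as np


def fbm_cov(N, h, H):
    """Gamma_H[i, j] = R_H(ih, jh) from (1), i, j = 1, ..., N."""
    t = h * np.arange(1, N + 1)
    s, u = np.meshgrid(t, t, indexing="ij")
    return 0.5 * (s ** (2 * H) + u ** (2 * H) - np.abs(s - u) ** (2 * H))


def bound_chain(N, h, H):
    """Return (x' G^{-1} x, |x|^2 / lambda_max, |x|^2 / Gershgorin bound) for x = (1, ..., N)."""
    G = fbm_cov(N, h, H)
    x = np.arange(1, N + 1, dtype=float)
    quad = x @ np.linalg.solve(G, x)             # x' Gamma_H^{-1} x
    lam_max = np.linalg.eigvalsh(G)[-1]          # eigvalsh sorts eigenvalues ascending
    gersh = np.abs(G).sum(axis=1).max()          # max absolute row sum >= lambda_max
    sq = x @ x                                   # equals N(N+1)(2N+1)/6
    return quad, sq / lam_max, sq / gersh


print(bound_chain(200, 0.01, 0.7))
```

The three returned values should appear in non-increasing order, mirroring the chain of inequalities used in the proof.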

In what follows, we study the mean-square convergence of the estimator \(\hat{c}^{2} \) defined by (13).

Theorem 4.2

The estimator \(\hat{c}^{2}\) (defined by (13)) of \(c^{2}\) has the usual bias factor \(\frac{N-2}{N}\), that is, \(\mathbb{E}[\hat{c}^{2}]=\frac{N-2}{N}c^{2}\), and it converges in mean square to \(c^{2}\) as \(N\rightarrow\infty\). That is to say,

Proof

By replacing Y with \(a \mathbf{t}+b \mathbf{t}^{2H} +c \mathbf{B}_{t} ^{H} \) in (13), we have

Now, using (28) in the Appendix we have

Finally, using (28) and (29) in the Appendix, we have

which converges to 0 as N goes to infinity. This proves the theorem. □
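The factor \(\frac{N-2}{N}\) can also be checked directly. Writing \(X=[\mathbf{t},\mathbf{t}^{2H}]\) and \(P=I-X(X'\Gamma_{H}^{-1}X)^{-1}X'\Gamma_{H}^{-1}\), the residual is \(r=\mathbf{Y}-\hat{a}\mathbf{t}-\hat{b}\mathbf{t}^{2H}=cP\mathbf{B}_{t}^{H}\), so \(\mathbb{E}[r'\Gamma_{H}^{-1}r]=c^{2}\operatorname{tr}(P'\Gamma_{H}^{-1}P\Gamma_{H})=c^{2}(N-2)\), because \(P'\Gamma_{H}^{-1}P=\Gamma_{H}^{-1}P\) and P is idempotent of rank N−2. The sketch below evaluates this trace numerically under the same assumptions as before (H known, \(\Gamma_{H}\) the covariance matrix of \(\mathbf{B}_{t}^{H}\)); it is our illustration with hypothetical helper names, not part of the original proof.

```python
import numpy as np


def fbm_cov(N, h, H):
    """Gamma_H[i, j] = R_H(ih, jh) from (1), i, j = 1, ..., N."""
    t = h * np.arange(1, N + 1)
    s, u = np.meshgrid(t, t, indexing="ij")
    return 0.5 * (s ** (2 * H) + u ** (2 * H) - np.abs(s - u) ** (2 * H))


def bias_factor(N, h, H):
    """Exact E[c2_hat] / c^2 under model (9); should equal (N - 2) / N."""
    t = h * np.arange(1, N + 1)
    X = np.column_stack([t, t ** (2 * H)])
    G = fbm_cov(N, h, H)
    GiX = np.linalg.solve(G, X)                              # Gamma^{-1} X
    P = np.eye(N) - X @ np.linalg.solve(X.T @ GiX, GiX.T)    # maps Y to the GLS residual r
    # r = c P B, hence E[r' G^{-1} r] = c^2 trace(P' G^{-1} P G)
    return np.trace(P.T @ np.linalg.solve(G, P @ G)) / N


for N in (20, 50, 100):
    print(N, bias_factor(N, 0.01, 0.7), (N - 2) / N)
```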

We can now show the strong consistency of the MLEs â, b̂ and \(\hat{c}^{2}\) as \(N\rightarrow\infty\).

Theorem 4.3

The estimators â, b̂ and \(\hat{c}^{2}\) defined by (11), (12) and (13), respectively, are strongly consistent, that is,

Proof

First, we will discuss the convergence of â. To this end, we will show that

for some \(\epsilon>0\).

Take \(0<\epsilon<1-H\). Since \(\hat{a}-a\) is a centered Gaussian random variable (â is unbiased by Theorem 4.1), Chebyshev's inequality for the qth moment and the formula for the central absolute moments of Gaussian random variables give

where \(C_{q}\) is a constant depending on q. Since \(\epsilon<1-H\), we have \(q\epsilon+(H-1)q<-1\) for sufficiently large q. Thus (23) is proved, which implies (20) by the Borel–Cantelli lemma.

Moreover, (21) and (22) can be obtained in a similar way. □
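The moment bound used in this proof can be written out as follows. This is a sketch which assumes, as the argument requires, that \(\operatorname{Var}[\hat{a}]\leq C N^{2H-2}\) for some constant C, and which writes \(m_{q}=\mathbb{E}\vert Z\vert^{q}\) for \(Z\sim N(0,1)\). Since \(\hat{a}-a\) is centered Gaussian, \(\mathbb{E}\vert\hat{a}-a\vert^{q}=m_{q}(\operatorname{Var}[\hat{a}])^{q/2}\), so for every \(N\geq1\)

\[
P\big(N^{\epsilon}\vert\hat{a}-a\vert>1\big)\leq N^{q\epsilon}\,\mathbb{E}\vert\hat{a}-a\vert^{q}
=m_{q}\,N^{q\epsilon}\big(\operatorname{Var}[\hat{a}]\big)^{q/2}
\leq C_{q}\,N^{q\epsilon+(H-1)q},\qquad C_{q}=m_{q}C^{q/2}.
\]

Because \(0<\epsilon<1-H\), the exponent \(q\epsilon+(H-1)q=-q(1-H-\epsilon)\) is below −1 as soon as \(q>1/(1-H-\epsilon)\). The right-hand side is then summable in N, and the Borel–Cantelli lemma gives \(N^{\epsilon}\vert\hat{a}-a\vert\leq1\) for all sufficiently large N almost surely, hence \(\hat{a}\rightarrow a\) almost surely.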

We are now in a position to present the asymptotic normality of the estimators â, b̂ and \(\hat{c}^{2}\). First, from (15), it is clear that

where \(\xrightarrow{\mathcal{L}}\) denotes convergence in distribution.

Similarly, we obtain

Hence, only the asymptotic distribution of \(\hat{c}^{2}\) is left to be studied in the following theorem.

Theorem 4.4

The estimator \(\hat{c}^{2}\) (defined by (13)) of \(c^{2}\) is asymptotically normal. That is to say,

Proof

Using (19), we can define

From (28) and (29) in the Appendix, we can show that

From Theorem 4 in Nualart and Ortiz-Latorre [42] (see also Theorem 2.1 in Sect. 2), in order to show (24), it suffices to show that \(\Vert D F_{N}\Vert ^{2}_{\mathcal{H}} \) converges to a constant in \(L^{2}\) as N tends to infinity. Using (4), we can obtain

where \(D_{s}(\mathbf{B}_{t}^{H})'=(\mathbf{1}_{[0,h]}(s),\mathbf{1}_{[0,2h]}(s),\ldots,\mathbf{1}_{[0,Nh]}(s))\). Therefore, using (2), we have

Since both \(D_{s} ( \mathbf{B}_{t}^{H} ) ' \Gamma^{-1}_{H} \mathbf{B}_{t}^{H} \) and \(D_{u} ( \mathbf{B}_{t}^{H} ) ' \Gamma ^{-1}_{H} \mathbf{B}_{t}^{H}\) are Gaussian random variables, we can obtain

Let \(\Gamma^{-1}_{H}=(\Gamma^{-1}_{ij})_{i,j=1,\ldots,N}\), \(\Gamma_{H}=(\Gamma_{ij})_{i,j=1,\ldots,N}\) and \(\delta_{lk} \) be the Kronecker symbol. We shall use \(\int_{0}^{ih} \int_{0}^{i'h} \vert s-u\vert^{2H-2}\,ds\,du =\Gamma_{ii'}\) and \(\sum_{j=1}^{N} \Gamma_{ij}^{-1} \Gamma_{i'j} =\delta_{ii'}\). Then we have

which converges to 0 as \(N\rightarrow\infty\). In the same way, one can prove that \(\mathbb{E} ( \vert A_{T}^{(i)}-\mathbb{E} A_{T}^{(i)}\vert^{2} ) \) converges to zero as N tends to infinity for \(i=2,3, \ldots,10\). By the triangle inequality, we see that

Together with

we have shown that \(\Vert D F_{N}\Vert ^{2}_{\mathcal{H}} \) converges to a constant in \(L^{2}\) as N tends to infinity. This completes the proof of this theorem. □
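A quick Monte Carlo check of the limit law is possible because, under (9), whitening by a Cholesky factor L of \(\Gamma_{H}\) (\(\Gamma_{H}=LL'\)) turns the generalized least-squares residual into the orthogonal projection of a standard Gaussian vector onto an (N−2)-dimensional subspace. Hence \(N\hat{c}^{2}/c^{2}\) has a \(\chi^{2}\) distribution with N−2 degrees of freedom, so \(\mathbb{E}[\sqrt{N}(\hat{c}^{2}-c^{2})]=-2c^{2}/\sqrt{N}\) and \(\operatorname{Var}[\sqrt{N}(\hat{c}^{2}-c^{2})]=2c^{4}(N-2)/N\rightarrow2c^{4}\). The sketch below (our illustration, with hypothetical function names and H assumed known) compares these moments with simulated ones; it does not replace the Malliavin-calculus argument.

```python
import numpy as np


def fbm_cov(N, h, H):
    """Gamma_H[i, j] = R_H(ih, jh) from (1), i, j = 1, ..., N."""
    t = h * np.arange(1, N + 1)
    s, u = np.meshgrid(t, t, indexing="ij")
    return 0.5 * (s ** (2 * H) + u ** (2 * H) - np.abs(s - u) ** (2 * H))


def c2_hat_samples(N, h, H, a, b, c, reps, rng):
    """Draw `reps` independent values of the MLE c2_hat under model (9)."""
    t = h * np.arange(1, N + 1)
    X = np.column_stack([t, t ** (2 * H)])
    L = np.linalg.cholesky(fbm_cov(N, h, H))            # Gamma_H = L L'
    Z = rng.standard_normal((N, reps))
    Y = (X @ np.array([a, b]))[:, None] + c * (L @ Z)   # one path of (9) per column
    # Whitening: L^{-1} Y = (L^{-1} X) theta + c Z, so GLS becomes ordinary least squares.
    W = np.linalg.solve(L, X)
    U = np.linalg.solve(L, Y)
    theta_hat = np.linalg.lstsq(W, U, rcond=None)[0]    # shape (2, reps)
    R = U - W @ theta_hat                               # whitened residuals
    return (R ** 2).sum(axis=0) / N                     # r' Gamma_H^{-1} r / N per path


rng = np.random.default_rng(0)
N, c = 100, 0.3
z = np.sqrt(N) * (c2_hat_samples(N, 0.01, 0.75, 0.25, 0.45, c, 4000, rng) - c ** 2)
print(z.mean(), -2 * c ** 2 / np.sqrt(N))     # simulated vs exact mean
print(z.var(), 2 * c ** 4 * (N - 2) / N)      # simulated vs exact variance
```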

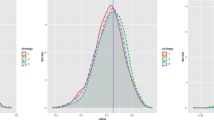

5 Simulation

In this section, we carry out Monte Carlo studies for different values of a, b and \(c^{2}\) to investigate the efficiency of our estimators numerically. In contrast to standard Brownian motion, the main obstacle in a Monte Carlo simulation is generating fBm. Several methods for this are available in the literature (see Coeurjolly [49]); in this paper, we use Paxson's algorithm (see Paxson [50]). First, we generate fractional Gaussian noise by Paxson's method using the fast Fourier transform. Second, we obtain fBm as the partial sums of the fractional Gaussian noise. Finally, we obtain the process (9). For \(N=1000\), \(h=0.001\) and \(t\in[0,1]\), some sample trajectories of the process (9) are shown in Figs. 1–3 for different values of H, a, b and \(c^{2}\). The simulation results reflect a main property of fBm: a larger value of H corresponds to smoother sample paths.

Fig. 1 Sample paths of model (9) for different values of the Hurst parameter (chosen parameters: \(a=0.10\), \(b=-0.2\), \(c=0.2\))

Fig. 2 Sample paths of model (9) for different values of the Hurst parameter (chosen parameters: \(a=0.25\), \(b=0.45\), \(c=0.3\))

Fig. 3 Sample paths of model (9) for different values of the Hurst parameter (chosen parameters: \(a=0.36\), \(b=0.28\), \(c=0.40\))
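Paxson's FFT-based generator is not reproduced here. For moderate N, an exact alternative (swapped in for illustration) is to draw fractional Gaussian noise with a Cholesky factor of its Toeplitz covariance and then take partial sums, which mirrors the second step described above. The function names are hypothetical, the Hurst values in the usage lines are illustrative choices of ours, and the \(O(N^{3})\) cost limits this approach to N of a few thousand.

```python
import numpy as np


def fgn_cholesky(N, h, H, rng):
    """Exact fGn B_{kh} - B_{(k-1)h}, k = 1..N, via Cholesky (not Paxson's method)."""
    k = np.arange(N)
    # Autocovariance of fGn with step h: 0.5 h^{2H} (|k+1|^{2H} - 2|k|^{2H} + |k-1|^{2H})
    gamma = 0.5 * h ** (2 * H) * (np.abs(k + 1) ** (2 * H) - 2 * k ** (2 * H) + np.abs(k - 1) ** (2 * H))
    C = gamma[np.abs(k[:, None] - k[None, :])]         # Toeplitz covariance matrix
    return np.linalg.cholesky(C) @ rng.standard_normal(N)


def simulate_model9(N, h, H, a, b, c, rng):
    """Return t = (h, ..., Nh) and one path of Y_t = a t + b t^{2H} + c B_t^H."""
    t = h * np.arange(1, N + 1)
    B = np.cumsum(fgn_cholesky(N, h, H, rng))         # fBm as partial sums of fGn
    return t, a * t + b * t ** (2 * H) + c * B


# Settings of Fig. 1: N = 1000, h = 0.001, a = 0.10, b = -0.2, c = 0.2
rng = np.random.default_rng(42)
paths = {H: simulate_model9(1000, 0.001, H, 0.10, -0.2, 0.2, rng)[1] for H in (0.6, 0.75, 0.9)}
```

Plotting `paths[H]` against t for each H should show the smoothing effect of a larger Hurst parameter described above.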

In the following, we consider the problem of estimating H, a, b and c from the observations \(Y_{h},\ldots, Y_{Nh}\). We simulated these observations for different values of H, a, b and c with step \(h=0.01\). For each case, we simulate the observations (the values of N are given in the tables) and compute the estimators 80 times by the exact MLE method. The empirical mean and standard deviation of these estimators are reported in the tables (the true value is the parameter value used in the Monte Carlo simulation; the mean and standard deviation are sample statistics over the replications).
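The replication scheme just described can be sketched as follows. This is our illustration, not the authors' code: H is first estimated with a second-order quadratic-variation ratio (a stand-in for (10)), clipped to [0.51, 0.99] so that \(\Gamma_{\hat{H}}\) stays well defined and positive definite (a design choice of ours), and then plugged into the Gaussian MLE of (a, b, \(c^{2}\)). Paths are simulated exactly with a Cholesky factor instead of Paxson's method, and all function names are hypothetical.

```python
import numpy as np


def fbm_cov(N, h, H):
    """Covariance matrix of (B_h^H, ..., B_{Nh}^H) from R_H in (1)."""
    t = h * np.arange(1, N + 1)
    s, u = np.meshgrid(t, t, indexing="ij")
    return 0.5 * (s ** (2 * H) + u ** (2 * H) - np.abs(s - u) ** (2 * H))


def hurst_qv(Y):
    """Second-order quadratic-variation ratio estimator (stand-in for (10))."""
    d1 = Y[2:] - 2.0 * Y[1:-1] + Y[:-2]
    d2 = Y[4:] - 2.0 * Y[2:-2] + Y[:-4]
    return 0.5 * np.log2(np.mean(d2 ** 2) / np.mean(d1 ** 2))


def mle_abc2(Y, h, H):
    """Gaussian MLE of (a, b, c^2) for model (9), treating H as known."""
    N = len(Y)
    t = h * np.arange(1, N + 1)
    X = np.column_stack([t, t ** (2 * H)])
    G = fbm_cov(N, h, H)
    GiX = np.linalg.solve(G, X)
    theta = np.linalg.solve(X.T @ GiX, GiX.T @ Y)      # GLS estimate of (a, b)
    r = Y - X @ theta
    return theta[0], theta[1], r @ np.linalg.solve(G, r) / N


def monte_carlo(N, h, H, a, b, c, reps=80, seed=0):
    """Empirical mean and standard deviation of (H_hat, a_hat, b_hat, c_hat) over `reps` paths."""
    rng = np.random.default_rng(seed)
    t = h * np.arange(1, N + 1)
    L = np.linalg.cholesky(fbm_cov(N, h, H))            # exact simulation of B_t^H
    est = []
    for _ in range(reps):
        Y = a * t + b * t ** (2 * H) + c * (L @ rng.standard_normal(N))
        H_hat = float(np.clip(hurst_qv(Y), 0.51, 0.99))   # keep H_hat inside (1/2, 1)
        a_hat, b_hat, c2_hat = mle_abc2(Y, h, H_hat)
        est.append([H_hat, a_hat, b_hat, np.sqrt(max(c2_hat, 0.0))])
    est = np.array(est)
    return est.mean(axis=0), est.std(axis=0, ddof=1)


mean, sd = monte_carlo(N=300, h=0.01, H=0.7, a=0.25, b=0.45, c=0.3)
print("mean:", mean)
print("sd:  ", sd)
```

Because Ĥ enters \(\Gamma_{\hat{H}}\) and the regressor \(\mathbf{t}^{2\hat{H}}\), errors in Ĥ propagate to the estimates of a, b and c, which is consistent with the discussion of the standard deviations that follows.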

From the numerical output, we find that both the standard deviations and the absolute errors of the estimates of H, a, b and c decrease toward zero as the number of observations increases, and that the simulated means of the maximum likelihood estimators approach the true values rapidly. The estimators therefore perform well for \(H>\frac{1}{2}\), and the estimates closely match the chosen parameters. Comparing Tables 1–3 with Tables 1–3 in Hu et al. [39], we see that our standard deviations are considerably larger than those of Hu et al. [39]. The reason is that Hu et al. [39] assumed the Hurst parameter to be known, whereas our estimators of a, b and c are computed with the estimator of H. It is worth emphasizing that a poor estimate of H may lead to poor estimates of a, b and c, since all these estimators involve H; conversely, a more accurate estimator of H should lead to smaller standard deviations. A detailed analysis of this effect is beyond the scope of this paper.

6 Conclusion

Long-memory processes have become a vital part of time series analysis during the last decades, as studies in empirical finance have sought to use "ideal" models in important practical applications of financial engineering. Among them, gfBm has been shown by many authors to be a useful description of financial time series. However, when a continuous-time mathematical framework is applied to discrete data, it is important to have efficient methods for estimating the parameters of the process. In this paper, we have extended the notion of gfBm to the discrete domain and proposed a methodology for parameter estimation in time series driven by gfBm. To this end, we constructed the estimators by MLE and provided a convergence analysis for them. Moreover, a numerical study illustrated the effectiveness of the methodology. As can be seen in Tables 1–3, the empirical mean of the MLE is consistent with the true value and its variance is nearly zero. Hence the numerical results suggest that the MLE is a fast, accurate and reliable method for estimating the parameters of gfBm. Although we have developed the asymptotic theory only for a special linear long-memory model driven by fBm, our method is applicable more generally to discrete-time models with a linear drift and a constant diffusion coefficient driven by centered Gaussian processes. Furthermore, model (9) can be regarded as a general long-memory model that includes several interesting special cases:

(i) When \(b=0\), we obtain fBm with drift.

(ii) For \(H=\frac{1}{2}\), we obtain Brownian motion with drift, that is, the logarithm of a geometric Brownian motion.

(iii) If \(H=\frac{1}{2}\), \(c=1\) and \(b=0\), we obtain the linear regression model.

(iv) If \(b=0\) and \(c=1\), we obtain the regression model with long-memory errors.

This paper has investigated the asymptotic behavior of the MLE for gfBm. However, we estimated the parameters separately; a natural next step is to estimate all the unknown parameters (including the Hurst index) simultaneously. Joint parameter estimation for stochastic models driven by fBm is thus an interesting and attractive problem. We also expect the need for such methods, and for improved statistical tools available to practitioners, to grow as the financial industry continues to expand and data sets become richer. The field is therefore of growing importance for both theorists and practitioners.

References

[1] Casas, I., Gao, J.: Econometric estimation in long-range dependent volatility models: theory and practice. J. Econom. 147(1), 72–83 (2008)

[2] Los, C.A., Yu, B.: Persistence characteristics of the Chinese stock markets. Int. Rev. Financ. Anal. 17(1), 64–82 (2008)

[3] Willinger, W., Taqqu, M.S., Teverovsky, V.: Stock market prices and long-range dependence. Finance Stoch. 3(1), 1–13 (1999)

[4] Duncan, T.E., Hu, Y., Pasik-Duncan, B.: Stochastic calculus for fractional Brownian motion I: theory. SIAM J. Control Optim. 38(2), 582–612 (2000)

[5] Hu, Y., Øksendal, B.: Fractional white noise calculus and applications to finance. Infin. Dimens. Anal. Quantum Probab. Relat. Top. 6(1), 1–32 (2003)

[6] Elliott, R.J., van der Hoek, J.: A general fractional white noise theory and applications to finance. Math. Finance 13(2), 301–330 (2003)

[7] Elliott, R.J., Chan, L.: Perpetual American options with fractional Brownian motion. Quant. Finance 4(2), 123–128 (2004)

[8] Guasoni, P.: No arbitrage under transaction costs, with fractional Brownian motion and beyond. Math. Finance 16(3), 569–582 (2006)

[9] Rostek, S.: Option Pricing in Fractional Brownian Markets. Springer, Heidelberg (2009)

[10] Azmoodeh, E.: On the fractional Black–Scholes market with transaction costs. Commun. Math. Finance 2(3), 21–40 (2013)

[11] Kleptsyna, M.L., Le Breton, A., Roubaud, M.C.: Parameter estimation and optimal filtering for fractional type stochastic systems. Stat. Inference Stoch. Process. 3(1), 173–182 (2000)

[12] Kleptsyna, M.L., Le Breton, A.: Statistical analysis of the fractional Ornstein–Uhlenbeck type process. Stat. Inference Stoch. Process. 5(3), 229–248 (2002)

[13] Tudor, C.A., Viens, F.: Statistical aspects of the fractional stochastic calculus. Ann. Stat. 35(3), 1183–1212 (2007)

[14] Cialenco, I., Lototsky, S., Pospisil, J.: Asymptotic properties of the maximum likelihood estimator for stochastic parabolic equations with additive fractional Brownian motion. Stoch. Dyn. 9(2), 169–185 (2009)

[15] Hu, Y., Nualart, D.: Parameter estimation for fractional Ornstein–Uhlenbeck processes. Stat. Probab. Lett. 80(11–12), 1030–1038 (2010)

[16] Azmoodeh, E., Morlanes, J.I.: Drift parameter estimation for fractional Ornstein–Uhlenbeck process of the second kind. Statistics 49(1), 1–18 (2015)

[17] Cheng, P., Shen, G., Chen, Q.: Parameter estimation for nonergodic Ornstein–Uhlenbeck process driven by the weighted fractional Brownian motion. Adv. Differ. Equ. 2017, 366 (2017)

[18] Xiao, W., Yu, J.: Asymptotic theory for estimating drift parameters in the fractional Vasicek model. Econom. Theory (2018) (forthcoming)

[19] Xiao, W., Yu, J.: Asymptotic Theory for Rough Fractional Vasicek Models. Economics and Statistics Working Papers 7-2018, Singapore Management University, School of Economics (2018). http://ink.library.smu.edu.sg/soe_research/2158

[20] Prakasa Rao, B.L.S.: Statistical Inference for Fractional Diffusion Processes. Wiley, New York (2010)

[21] Xiao, W., Zhang, W., Xu, W.: Parameter estimation for fractional Ornstein–Uhlenbeck processes at discrete observation. Appl. Math. Model. 35(9), 4196–4207 (2011)

[22] Long, H., Shimizu, Y., Sun, W.: Least squares estimators for discretely observed stochastic processes driven by small Lévy noises. J. Multivar. Anal. 116(1), 422–439 (2013)

[23] Liu, Z., Song, N.: Minimum distance estimation for fractional Ornstein–Uhlenbeck type process. Adv. Differ. Equ. 2014(1), 137 (2014)

[24] Barboza, L.A., Viens, F.G.: Parameter estimation of Gaussian stationary processes using the generalized method of moments. Electron. J. Stat. 11(1), 401–439 (2017)

[25] El Onsy, B., Es-Sebaiy, K., Viens, F.G.: Parameter estimation for a partially observed Ornstein–Uhlenbeck process with long-memory noise. Stochastics 89(2), 431–468 (2017)

[26] Bajja, S., Es-Sebaiy, K., Viitasaari, L.: Least squares estimator of fractional Ornstein–Uhlenbeck processes with periodic mean. J. Korean Stat. Soc. 46(4), 608–622 (2017)

[27] Fox, R., Taqqu, M.S.: Large-sample properties of parameter estimates for strongly dependent stationary Gaussian time series. Ann. Stat. 14(2), 517–532 (1986)

[28] Dahlhaus, R.: Efficient parameter estimation for self-similar processes. Ann. Stat. 17(4), 1749–1766 (1989)

[29] Tsai, H., Chan, K.S.: Quasi-maximum likelihood estimation for a class of continuous-time long-memory processes. J. Time Ser. Anal. 26(5), 691–713 (2005)

[30] Tsai, H., Chan, K.S.: Maximum likelihood estimation of linear continuous time long memory processes with discrete time data. J. R. Stat. Soc. B 67(5), 703–716 (2005)

[31] Bhansali, R.J., Giraitis, L., Kokoszka, P.: Estimation of the memory parameter by fitting fractionally differenced autoregressive models. J. Multivar. Anal. 97(10), 2101–2130 (2006)

[32] Bertin, K., Torres, S., Tudor, C.A.: Maximum likelihood estimators and random walks in long memory models. Statistics 45(4), 361–374 (2011)

[33] Bertin, K., Torres, S., Tudor, C.A.: Drift parameter estimation in fractional diffusions driven by perturbed random walks. Stat. Probab. Lett. 81(2), 243–249 (2011)

[34] Brouste, A., Iacus, S.M.: Parameter estimation for the discretely observed fractional Ornstein–Uhlenbeck process and the Yuima R package. Comput. Stat. 28(4), 1529–1547 (2013)

[35] Es-Sebaiy, K.: Berry–Esséen bounds for the least squares estimator for discretely observed fractional Ornstein–Uhlenbeck processes. Stat. Probab. Lett. 83(10), 2372–2385 (2013)

[36] Kim, Y.M., Nordman, D.J.: A frequency domain bootstrap for Whittle estimation under long-range dependence. J. Multivar. Anal. 115, 405–420 (2013)

[37] Bardet, J.M., Tudor, C.: Asymptotic behavior of the Whittle estimator for the increments of a Rosenblatt process. J. Multivar. Anal. 131, 1–16 (2014)

[38] Xiao, W., Zhang, W., Zhang, X.: Parameter identification for the discretely observed geometric fractional Brownian motion. J. Stat. Comput. Simul. 85(2), 269–283 (2015)

[39] Hu, Y., Nualart, D., Xiao, W., Zhang, W.: Exact maximum likelihood estimator for drift fractional Brownian motion at discrete observation. Acta Math. Sci. 31(5), 1851–1859 (2011)

[40] Nualart, D.: The Malliavin Calculus and Related Topics, 2nd edn. Springer, Berlin (2006)

[41] Nualart, D., Peccati, G.: Central limit theorems for sequences of multiple stochastic integrals. Ann. Probab. 33(1), 177–193 (2005)

[42] Nualart, D., Ortiz-Latorre, S.: Central limit theorems for multiple stochastic integrals and Malliavin calculus. Stoch. Process. Appl. 118(4), 614–628 (2008)

[43] Kubilius, K., Melichov, D.: Quadratic variations and estimation of the Hurst index of the solution of SDE driven by a fractional Brownian motion. Lith. Math. J. 50(4), 401–417 (2010)

[44] Shen, H., Zhu, Z., Lee, L.T.C.: Robust estimation of the self-similarity parameter in network traffic using wavelet transform. Signal Process. 87(9), 2111–2124 (2007)

[45] Kubilius, K., Mishura, Y.: The rate of convergence of Hurst index estimate for the stochastic differential equation. Stoch. Process. Appl. 122(11), 3718–3739 (2012)

Salomón, L.A., Fort, J.C.: Estimation of the Hurst parameter in some fractional processes. J. Stat. Comput. Simul. 83(3), 542–554 (2013)

Golub, G.H., van Loan, C.F.: Matrix Computations, 3rd edn. Johns Hopkins University Press, Baltimore (1996)

Zhan, X.: Extremal eigenvalues of real symmetric matrices with entries in an interval. SIAM J. Matrix Anal. Appl. 27(3), 851–860 (2005)

Coeurjolly, J.F.: Simulation and identification of the fractional Brownian motion: a bibliographical and comparative study. J. Stat. Softw. 5(7), 1–53 (2000)

Paxson, V.: Fast, approximate synthesis of fractional Gaussian noise for generating self-similar network traffic. Comput. Commun. Rev. 27(5), 5–18 (1997)

Schott, J.R.: Matrix Analysis for Statistics, 2nd edn. Wiley, Hoboken (2005)

Acknowledgements

The author would like to thank the referees for their very helpful and detailed comments, which have significantly improved the presentation of this paper.

Funding

This research was supported by the Natural Science Foundation of Guangdong Province, China (no. S2013010016270).

Author information

Contributions

LS carried out the proofs of all theorems. LW participated in the design of the study and helped to draft the manuscript. PF conceived the Monte Carlo simulation study. All authors contributed equally and significantly to writing this article. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

In this appendix we show how to compute the mean and variance of \(\hat{c}^{2}\).
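The variance computation in the proof of Proposition 7.1 reads moments off a moment generating function. As a sketch of that device (a standard Gaussian identity, stated here for a generic symmetric \(N\times N\) matrix \(A\) and a generic vector \(\mathbf{b}\); these are placeholders, not the specific matrices entering (13)), let \(\mathbf{X}\) be a standard Gaussian vector of dimension \(N\), write \(Q=\mathbf{X}'A\mathbf{X}\) and \(L=\mathbf{b}'\mathbf{X}\), and take \(\lambda\) small enough that \(I_N-2\lambda A\) is positive definite. Completing the square in the Gaussian integral and expanding gives

```latex
\[
\begin{aligned}
\mathbb{E}\bigl[e^{\lambda Q+\varepsilon L}\bigr]
&=\det(I_N-2\lambda A)^{-1/2}\exp\Bigl(\tfrac{\varepsilon^{2}}{2}\,\mathbf{b}'(I_N-2\lambda A)^{-1}\mathbf{b}\Bigr)\\
&=\exp\Bigl(\lambda\operatorname{tr}A+\lambda^{2}\operatorname{tr}A^{2}
+\tfrac{\varepsilon^{2}}{2}\,\mathbf{b}'\mathbf{b}+\lambda\varepsilon^{2}\,\mathbf{b}'A\mathbf{b}
+O(\lambda^{3}+\lambda^{2}\varepsilon^{2})\Bigr),\\[4pt]
[\lambda^{2}]&:\quad \tfrac12\,\mathbb{E}[Q^{2}]=\operatorname{tr}A^{2}+\tfrac12(\operatorname{tr}A)^{2},\\
[\lambda\varepsilon^{2}]&:\quad \tfrac12\,\mathbb{E}[QL^{2}]=\mathbf{b}'A\mathbf{b}+\tfrac12\operatorname{tr}A\;\mathbf{b}'\mathbf{b},\\
[\varepsilon^{4}]&:\quad \tfrac1{24}\,\mathbb{E}[L^{4}]=\tfrac18(\mathbf{b}'\mathbf{b})^{2}.
\end{aligned}
\]
```

The left-hand sides of the last three lines come from expanding \(\mathbb{E}[e^{\lambda Q+\varepsilon L}]=\sum_{k\ge0}\mathbb{E}[(\lambda Q+\varepsilon L)^{k}]/k!\). In particular, \(\operatorname{Var}(Q)=2\operatorname{tr}A^{2}\), \(\mathbb{E}[QL^{2}]=2\mathbf{b}'A\mathbf{b}+\operatorname{tr}A\,\mathbf{b}'\mathbf{b}\) and \(\mathbb{E}[L^{4}]=3(\mathbf{b}'\mathbf{b})^{2}\).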

Proposition 7.1

Let \(\hat{c}^{2}\) be defined by (13). Then

Proof

First, using Theorem 10.18 in Schott [51], the identity \(\mathbb{E}[\mathbf{B}_{t}^{H}(\mathbf{B}_{t}^{H})']=\Gamma_{H}\), and (19), we compute the expectation of \(\hat{c}^{2}\) as follows:

To compute the variance of \(\hat{c}^{2}\), we introduce \(\mathbf{X}=\Gamma_{H}^{-1/2}\mathbf{B}_{t}^{H}\). Then

Therefore, \(\mathbf{X}\) is a standard Gaussian vector of dimension \(N\). For any sufficiently small \(\lambda\) and any \(\varepsilon\in\mathbb{R}\), we have

Completing the square yields

We are interested only in the coefficients of \(\lambda^{2}\), \(\lambda\varepsilon^{2}\) and \(\varepsilon^{4}\) in the expression \(f(\lambda,\varepsilon)\) above. We have

Comparing the coefficients of \(\lambda^{2}\), \(\lambda\varepsilon^{2}\) and \(\varepsilon^{4}\), we have

Hence we obtain

Analogously to the discussion above, we obtain

which completes the proof. □
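The computation above uses a few standard Gaussian facts: for \(\mathbf{B}\sim N(0,\Gamma_{H})\), \(\mathbb{E}[\mathbf{B}'A\mathbf{B}]=\operatorname{tr}(A\Gamma_{H})\); the whitened vector \(\mathbf{X}=\Gamma_{H}^{-1/2}\mathbf{B}\) is standard Gaussian; and for standard Gaussian \(\mathbf{X}\), \(\operatorname{Var}(\mathbf{X}'A\mathbf{X})=2\operatorname{tr}A^{2}\), \(\mathbb{E}[\mathbf{X}'A\mathbf{X}(\mathbf{b}'\mathbf{X})^{2}]=2\mathbf{b}'A\mathbf{b}+\operatorname{tr}A\,\mathbf{b}'\mathbf{b}\) and \(\mathbb{E}[(\mathbf{b}'\mathbf{X})^{4}]=3(\mathbf{b}'\mathbf{b})^{2}\). The Monte Carlo sketch below checks these numerically. It is not part of the original paper: it assumes standard fBm observed at equally spaced times \(t_i=ih\) with \(h=1\), uses a randomly drawn symmetric \(A\) and vector \(\mathbf{b}\) in place of the matrices from (13), and the function names (`fbm_covariance`, `inverse_sqrt`, `mc`, `moment_checks`) are hypothetical.

```python
import numpy as np


def fbm_covariance(n, hurst, h=1.0):
    """Gamma_H: covariance matrix of (B^H_h, B^H_{2h}, ..., B^H_{nh}).

    Assumes standard fBm observed at equally spaced times t_i = i*h.
    """
    t = h * np.arange(1, n + 1)
    s, u = np.meshgrid(t, t, indexing="ij")
    return 0.5 * (s ** (2 * hurst) + u ** (2 * hurst) - np.abs(s - u) ** (2 * hurst))


def inverse_sqrt(gamma):
    """Symmetric inverse square root Gamma^{-1/2} via an eigendecomposition."""
    w, v = np.linalg.eigh(gamma)
    return (v * w ** -0.5) @ v.T  # V diag(w^{-1/2}) V'


def mc(samples):
    """Monte Carlo mean of `samples` and its standard error."""
    return samples.mean(), samples.std(ddof=1) / np.sqrt(samples.size)


def moment_checks(n=5, hurst=0.7, n_samples=400_000, seed=1):
    """Return ({name: (estimate, std_error, closed_form)}, W Gamma W, sample cov of X)."""
    rng = np.random.default_rng(seed)
    gamma = fbm_covariance(n, hurst)
    w_half = inverse_sqrt(gamma)

    # Hypothetical symmetric A and vector b (not the matrices used in (13)).
    a = rng.standard_normal((n, n))
    a = (a + a.T) / 2
    b = rng.standard_normal(n)

    # Rows of obs are draws of B ~ N(0, Gamma_H); rows of x are X' = B' Gamma^{-1/2}.
    obs = rng.multivariate_normal(np.zeros(n), gamma, size=n_samples)
    x = obs @ w_half
    quad_obs = np.einsum("ki,ij,kj->k", obs, a, obs)  # B' A B
    quad_x = np.einsum("ki,ij,kj->k", x, a, x)  # X' A X
    lin_x = x @ b  # b' X

    tr_a, tr_a2 = np.trace(a), np.trace(a @ a)
    btb, bab = b @ b, b @ a @ b
    moments = {
        "E[B'AB] = tr(A Gamma_H)": (*mc(quad_obs), np.trace(a @ gamma)),
        # E[X'AX] = tr(A) is known exactly, so centre at it to estimate the variance.
        "Var(X'AX) = 2 tr(A^2)": (*mc((quad_x - tr_a) ** 2), 2 * tr_a2),
        "E[X'AX (b'X)^2] = 2 b'Ab + tr(A) b'b": (*mc(quad_x * lin_x**2), 2 * bab + tr_a * btb),
        "E[(b'X)^4] = 3 (b'b)^2": (*mc(lin_x**4), 3 * btb**2),
    }
    return moments, w_half @ gamma @ w_half, np.cov(x, rowvar=False)


if __name__ == "__main__":
    moments, _, _ = moment_checks()
    for name, (est, se, exact) in moments.items():
        print(f"{name}: {est:.4f} +/- {se:.4f} (closed form {exact:.4f})")
```

Each entry pairs a Monte Carlo estimate and its standard error with the closed form, so agreement within a few standard errors is what one would expect if the identities hold. The symmetric square root \(\Gamma_{H}^{-1/2}\) is used (rather than, say, a Cholesky factor) to match the definition of \(\mathbf{X}\) in the proof.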

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sun, L., Wang, L. & Fu, P. Maximum likelihood estimators of a long-memory process from discrete observations. Adv Differ Equ 2018, 154 (2018). https://doi.org/10.1186/s13662-018-1611-1
