Abstract

In this article, a class of fuzzy cellular neural networks (FCNNs) with proportional delays and leakage delays is considered. Using differential inequality techniques, we present a set of sufficient criteria ensuring the global exponential convergence of the model. Computer simulations are performed to verify the analytic findings. The results of this article are new and complement several existing works.


1 Introduction

In recent decades, cellular neural networks (CNNs) have attracted much attention from many scholars because they have been applied in numerous areas, for example image processing, pattern recognition, and psychophysics [1–6]. Considerable progress has been made on the dynamics of CNNs. For instance, Abbas and Xia [7] studied the attractivity of the k-almost automorphic solution of CNNs with delay, Balasubramaniam et al. [8] considered the global asymptotic stability of BAM FCNNs with mixed delays, and Qin et al. [9] considered the convergence and attractivity of memristor-based delayed CNNs. For further details, one can refer to [10–15].

FCNNs incorporate fuzzy logic between the template input and/or output. Many authors regard FCNNs as playing a key role in image processing [16]. In addition, leakage delay has a great effect on the dynamical nature of neural networks [17–21]. For instance, leakage delay can destabilize a model [22]. In [23], the authors argued that the existence of the equilibrium does not depend on the initial value and the delay. Moreover, the proportional delay of neural networks can be expressed by \(\xi(s)=s-rs, 0< r<1, s>0\). In practice, proportional delay plays an important role in many areas such as web quality of service, current collection [24] and so on. Since the applications of CNNs depend heavily on their global exponential convergence behavior [25–37], it is meaningful to analyze the global exponential convergence of neural networks with proportional delays and leakage delays. To the best of our knowledge, however, there is no existing work on the global exponential convergence of FCNNs with proportional delays and leakage delays.

Motivated by this viewpoint, we study the existence and global attractivity for neural networks with proportional delays and leakage delays. In this paper, we discuss the following neural network model:

where \(\alpha_{i}(t)\) is a rate coefficient; \(A_{ij}(t)\ (B_{ij}(t)) \) denotes the feedback (feedforward) template; \(C_{ij}(t)\ (D_{ij}(t))\) stands for the fuzzy feedback MIN (MAX) template; \(E_{ij}(t)\ (F_{ij}(t))\) stands for the fuzzy feedforward MIN (MAX) template; \(\bigwedge\ (\bigvee)\) stands for the fuzzy AND (OR) operation; \(z_{i}(t), v_{i}(t)\) and \(G_{i}(t)\) denote the state, input and bias of the ith neuron, respectively; \(h(\cdot)\) is the activation function; and \(\delta_{i}(t)\) is the transmission delay. The constants \(\theta _{ij}, i, j\in\Lambda\), stand for proportional delays and satisfy \(0<\theta_{ij}\leq1\), with \(\theta_{ij}t=t-(1-\theta_{ij})t\), in which \(\tau_{ij}(t)=(1-\theta_{ij})t\) is the transmission delay function, and \((1-\theta_{ij})t\rightarrow\infty\) as \(t\rightarrow\infty\) when \(\theta_{ij}\neq1\). Moreover, \(t-\delta_{i}(t)>\bar{t}\) \(\forall t\geq\bar{t}\).
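To make the proportional delay concrete: the delayed argument \(\theta_{ij}t\) lags the current time by \((1-\theta_{ij})t\), which grows without bound whenever \(\theta_{ij}\neq1\). The following is a minimal Python sketch of this bookkeeping; the function name `proportional_delay` and the sample values are illustrative assumptions and are not part of the model.

```python
def proportional_delay(theta: float, t: float) -> tuple[float, float]:
    """Return (delayed argument theta*t, delay (1 - theta)*t) for 0 < theta <= 1."""
    if not 0.0 < theta <= 1.0:
        raise ValueError("theta must lie in (0, 1]")
    return theta * t, (1.0 - theta) * t


# Illustrative values only: theta = 0.5 at a few time points.
# The delay (1 - theta) * t keeps growing with t, unlike a bounded delay.
for t in (1.0, 10.0, 100.0):
    delayed, delay = proportional_delay(0.5, t)
    print(f"t = {t:6.1f}   theta*t = {delayed:6.1f}   delay = {delay:6.1f}")
```

With \(\theta_{ij}=1\) the delay vanishes, which is why the unboundedness statement above excludes that case.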

The initial values of (1.1) take the form

where \(\varsigma_{i}=\min_{i,j\in\Lambda}\{\theta_{ij}\}\), and \(\psi _{i}(t)\in R\) represents a continuous function, where \(t\in[\varsigma_{i} \bar{t}, \bar{t}]\).

Set

where l stands for a bounded and continuous function. Let \(z=(z_{1},z_{2},\ldots,z_{n})^{T}\in R^{n}\), \(\vert z \vert =( \vert z_{1} \vert , \vert z_{2} \vert ,\ldots, \vert z_{n} \vert )^{T}\) and \(\Vert z \Vert =\max_{i\in\Lambda} \vert z_{i} \vert \). We assume that \(d_{i}, A_{ij}, B_{ij},C_{ij},D_{ij}, E_{ij},F_{ij},G_{i},v_{i}: [\bar{t},+\infty)\rightarrow R\) and \(\delta_{i}: [\bar{t},+\infty)\rightarrow[0,+\infty)\) are bounded and continuous functions.

Lemma 1.1

([38])

If \(z_{j}\) and \(q_{j}\) are two states of (1.1), then

Now we also give some assumptions as follows:

- (Q1) There exist a bounded and continuous function \(\alpha_{i}^{*}:[t_{0},+\infty)\rightarrow(0,+\infty)\) and a constant \(\eta_{i}>0\) which satisfy \(e^{-\int_{s}^{t}\alpha_{i}(\theta)\,d\theta }\leq \eta_{i}e^{-\int_{s}^{t}\alpha_{i}^{*}(\theta)\,d\theta}\), \(\forall t,s\in R, i\in\Lambda\) and \(t-s\geq0\).

- (Q2) There exist constants \(L_{j}\geq0\) which satisfy \(\vert h_{j}(t_{1})-h_{j}(t_{2}) \vert \leq L_{j} \vert t_{1}-t_{2} \vert , h_{j}(0)=0\) \(\forall t_{1},t_{2}\in R, j\in\Lambda\).

- (Q3) There exist constants \(\mu_{1}>0,\mu_{2}>0,\ldots,\mu_{n}>0\) and \(\gamma^{*}>0\) which satisfy

$$\begin{aligned} & \sup_{t\geq\bar{t}}\Biggl\{ -\alpha_{i}^{*}(t)+ \eta_{i}\Biggl[ \bigl\vert \alpha _{i}(t) \bigr\vert \delta_{i}^{*}(t)e^{\gamma^{*}\delta_{i}^{+}}+\mu_{i}^{-1}\sum _{j=1}^{n} \bigl\vert A_{ij}(t) \bigr\vert L_{j}\mu_{j} \\ & \quad{}+\mu_{i}^{-1}\sum_{j=1}^{n} \bigl\vert C_{ij}(t) \bigr\vert L_{j}\mu_{j} e^{\gamma^{*}(1-\theta _{ij})t}+\mu_{i}^{-1}\sum _{j=1}^{n} \bigl\vert D_{ij}(t) \bigr\vert L_{j}\mu_{j}e^{\gamma^{*}(1-\theta _{ij})t}\Biggr]\Biggr\} < 0, \\ & \sup_{t\geq\bar{t}}\Biggl\{ \bigl\vert \alpha_{i}(t) \bigr\vert +\eta_{i}\Biggl[ \bigl\vert \alpha _{i}(t) \bigr\vert \delta_{i}(t)e^{\gamma^{*}\delta_{i}^{+}}+\mu_{i}^{-1} \sum_{j=1}^{n} \bigl\vert A_{ij}(t) \bigr\vert L_{j}\mu_{j} \\ & \quad{}+\mu_{i}^{-1}\sum_{j=1}^{n} \bigl\vert C_{ij}(t) \bigr\vert L_{j} \mu_{j}e^{\gamma^{*}(1-\theta _{ij})t}+\mu_{i}^{-1}\sum _{j=1}^{n} \bigl\vert D_{ij}(t) \bigr\vert L_{j}\mu_{j}e^{\gamma^{*}(1-\theta _{ij})t}\Biggr]\Biggr\} < 1, \end{aligned}$$

and \(G_{i}(t)+(B_{ij}(t)+E_{ij}(t)+F_{ij}(t))v_{j}=O(e^{-\gamma^{*}t})\) as \(t\rightarrow+\infty\), where \(i,j\in\Lambda\).
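The two suprema in (Q3) can be estimated numerically on a time grid before attempting an analytic verification. The sketch below is only a rough check under stated assumptions: it treats the symbol written \(\delta_{i}^{*}(t)\) in the first inequality as the same delay \(\delta_{i}(t)\) that appears in the second, it estimates \(\delta_{i}^{+}\) as the grid maximum of \(\delta_{i}(t)\), and a finite grid cannot certify a supremum over \([\bar{t},+\infty)\). The function name `q3_suprema` and all coefficient values are hypothetical and are not taken from the example in Section 3.

```python
import numpy as np


def q3_suprema(ts, alpha, alpha_star, eta, delta, A, C, D, L, mu, theta, gamma_star):
    """Grid estimate of the two suprema in (Q3), one value per neuron i.

    alpha, alpha_star, delta: callables t -> array of shape (n,)
    A, C, D: callables t -> array of shape (n, n)
    eta, L, mu: arrays of shape (n,); theta: array of shape (n, n)
    """
    delta_plus = np.max([delta(t) for t in ts], axis=0)  # grid estimate of sup_t delta_i(t)
    weights = L * mu                                      # L_j * mu_j, indexed by j
    first = np.full(len(mu), -np.inf)
    second = np.full(len(mu), -np.inf)
    for t in ts:
        abs_alpha = np.abs(alpha(t))
        growth = np.exp(gamma_star * (1.0 - theta) * t)   # e^{gamma* (1 - theta_ij) t}
        bracket = (
            abs_alpha * delta(t) * np.exp(gamma_star * delta_plus)
            + (np.abs(A(t)) @ weights) / mu
            + ((np.abs(C(t)) * growth) @ weights) / mu
            + ((np.abs(D(t)) * growth) @ weights) / mu
        )
        first = np.maximum(first, -alpha_star(t) + eta * bracket)
        second = np.maximum(second, abs_alpha + eta * bracket)
    return first, second


# Hypothetical two-neuron coefficients (NOT those of the paper's example).
ones = np.ones(2)
ts = np.linspace(1.0, 50.0, 500)
first, second = q3_suprema(
    ts,
    alpha=lambda t: 0.5 * ones,
    alpha_star=lambda t: 0.5 * ones,
    eta=ones,
    delta=lambda t: 0.05 * ones,
    A=lambda t: np.array([[0.05, -0.05], [0.05, 0.05]]),
    C=lambda t: 0.05 * np.exp(-t) * np.ones((2, 2)),
    D=lambda t: 0.05 * np.exp(-t) * np.ones((2, 2)),
    L=ones, mu=ones, theta=0.5 * np.ones((2, 2)), gamma_star=1.0,
)
print("first suprema  (need < 0):", first)
print("second suprema (need < 1):", second)
```

Note that the factor \(e^{\gamma^{*}(1-\theta_{ij})t}\) grows exponentially, so the fuzzy templates \(C_{ij}(t)\) and \(D_{ij}(t)\) must decay at least as fast for the suprema to stay finite; the hypothetical templates above decay like \(e^{-t}\) for that reason.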

The main contributions of this article are threefold: (i) the global exponential convergence of FCNNs with leakage delays and proportional delays is considered for the first time; (ii) a new sufficient criterion guaranteeing the global exponential convergence of model (1.1) is presented; (iii) the analytic results of this article are more general, and the analysis method can be applied to the investigation of other related network systems.

2 Main findings

We now state the main result on the global exponential convergence of (1.1).

Theorem 2.1

For (1.1), if (Q1)–(Q3) hold, then there exists a constant \(\gamma>0\) such that every solution \(z=(z_{1},z_{2},\ldots,z_{n})^{T}\) of (1.1) satisfies \(z_{i}(t)=O(e^{-\gamma t})\) as \(t\rightarrow+\infty, i\in\Lambda\).

Proof

To prove \(z_{i}(t)=O(e^{-\gamma t})\) as \(t\rightarrow+\infty, i\in\Lambda\), we need to show that there exists a constant \(M>0\) such that \(\vert z_{i}(t) \vert \leq M e^{-\gamma t}\) for all sufficiently large t, \(i\in\Lambda\). For convenience, we first establish an equivalent form of the original system by applying a suitable variable substitution. Arguing by contradiction and using differential inequality techniques, we then obtain the result of the theorem. The detailed proof is as follows.

Assume that \(z(t)=(z_{1}(t),z_{2}(t),\ldots, z_{n}(t))^{T}\) is an arbitrary solution of (1.1) and the initial value is \(\psi=(\psi_{1},\psi_{2},\ldots,\psi_{n})^{T}\). Set

Then (1.1) becomes

By (Q3), we can find a \(\gamma\in(0,\min\{\gamma^{*},\min_{i\in\Lambda}\inf_{t\geq t_{0}}\alpha_{i}^{*}(t)\})\) which satisfies

Set

For \(\epsilon>0\), one has

\(\forall t\in[\varsigma_{i}\bar{t},\bar{t}]\), where \(\chi=\max_{i\in\Lambda}\eta_{i}+1\) which satisfies

\(\forall t\geq\bar{t}, i\in\Lambda\). In the sequel, we will prove that

\(\forall t\geq\bar{t}, i\in\Lambda\). Assume that (2.8) does not hold; then we can find \(i\in\Lambda\) and \(t^{*}>\bar{t}\) which satisfy

and

\(\forall t\in[\varsigma_{j}\bar{t},t^{*}],j\in\Lambda\).

In addition

where \(s\in[\bar{t},t],t\in[\bar{t},t^{*}],i\in\Lambda\).

In view of (2.11), one has

According to (2.3), (2.7) and (2.10), we get

This contradicts (2.9), so (2.8) holds. Therefore \(z_{i}(t)=O(e^{-\gamma t})\) as \(t\rightarrow+\infty, i\in\Lambda\). □

Remark 2.1

In [39], the authors studied the finite-time synchronization of delayed neural networks, but that paper does not involve proportional delays or leakage delays. In [40], the authors analyzed the finite-time synchronization of neural networks with proportional delays, but did not consider leakage delays. Huang [41] considered the exponential stability of delayed neural networks, but also did not consider the effect of proportional delays and leakage delays. Moreover, none of [39–41] investigated the global exponential convergence of the systems. In this article, we study the global exponential convergence of FCNNs with leakage delays and proportional delays. The theoretical findings in [39–41] cannot be applied to (1.1) to ensure its global exponential convergence. Up to now, there have been no results on the global exponential convergence of FCNNs with leakage delays and proportional delays. From this viewpoint, our results on global exponential convergence for FCNNs are new and complement several earlier publications.

3 Examples

Consider the following model:

where \(h_{1}(v)=h_{2}(v)=0.5(| v+1|-| v-1| )\) and

Then \(L_{1}=L_{2}=1\). Let \(\alpha_{i}^{*}(t)=0.1, \eta_{i}=e^{\frac{1}{10}}\), then \(e^{-\int_{s}^{t}\alpha_{i}(\theta)\,d\theta}\leq e^{\frac{1}{10}}e^{-(t-s)},i=1,2,t\geq s\). Let \(\mu_{1}=\mu_{2}=1, \gamma^{*}=1\). So

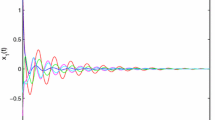

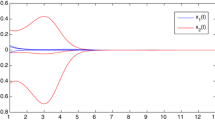

Therefore (Q1)–(Q3) of Theorem 2.1 hold, and hence all solutions of (3.1) converge exponentially to zero. This result is illustrated in Fig. 1 and Fig. 2.
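The Lipschitz constants \(L_{1}=L_{2}=1\) used in this example, together with \(h_{j}(0)=0\) from (Q2), can be sanity-checked numerically for the activation \(h(v)=0.5(|v+1|-|v-1|)\). The following Python sketch estimates the largest slope on a grid; the helper name `lipschitz_estimate` and the interval \([-5,5]\) are illustrative assumptions, and a grid estimate is a check rather than a proof.

```python
import numpy as np


def h(v):
    """Activation used in the example: 0.5 * (|v + 1| - |v - 1|)."""
    return 0.5 * (np.abs(v + 1.0) - np.abs(v - 1.0))


def lipschitz_estimate(f, lo=-5.0, hi=5.0, n=2001):
    """Largest slope of f between neighbouring grid points on [lo, hi]."""
    v = np.linspace(lo, hi, n)
    return float(np.max(np.abs(np.diff(f(v))) / np.diff(v)))


print("h(0) =", h(0.0))
print("estimated Lipschitz constant:", lipschitz_estimate(h))
```

The function is the identity on \([-1,1]\) and constant outside it, so the slope never exceeds 1 in absolute value, which is consistent with the grid estimate.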

Figure 1: Plot of \(z_{1}\) versus \(t\) for (3.1)

Figure 2: Plot of \(z_{2}\) versus \(t\) for (3.1)
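A simulation of this kind can be sketched with a forward-Euler scheme and a stored history for the delayed terms. The sketch below is not the computation behind Fig. 1 and Fig. 2: the coefficients of (3.1) are not reproduced in this text, so every numerical value here is hypothetical. It also assumes a model of the form \(z_{i}'(t)=-\alpha_{i}z_{i}(t-\delta_{i})+\sum_{j}A_{ij}h_{j}(z_{j}(t))+\bigwedge_{j}C_{ij}(t)h_{j}(z_{j}(\theta t))+\bigvee_{j}D_{ij}(t)h_{j}(z_{j}(\theta t))\) with zero input and bias, with \(\delta_{i}\) acting as the delay in the leakage term and a single proportional factor \(\theta\), as suggested by the description of (1.1) and by (Q3).

```python
import numpy as np


def h(v):
    """Activation from the example: 0.5 * (|v + 1| - |v - 1|)."""
    return 0.5 * (np.abs(v + 1.0) - np.abs(v - 1.0))


def simulate(t_bar=1.0, t_end=40.0, dt=2e-3, theta=0.5, delta=0.05,
             alpha=0.5, z_init=(0.5, -0.3)):
    """Forward-Euler integration of an assumed two-neuron FCNN (zero input and bias)."""
    A = np.array([[0.05, -0.05], [0.05, 0.05]])   # hypothetical feedback template
    C0 = np.array([[0.05, 0.02], [0.03, 0.05]])   # hypothetical fuzzy MIN template scale
    D0 = np.array([[0.04, 0.05], [0.05, 0.02]])   # hypothetical fuzzy MAX template scale

    t_start = theta * t_bar                        # history interval is [theta*t_bar, t_bar]
    n_steps = int(round((t_end - t_start) / dt))
    ts = t_start + dt * np.arange(n_steps + 1)
    k_bar = int(round((t_bar - t_start) / dt))     # grid index of t_bar
    lag = int(round(delta / dt))                   # leakage delay in grid steps

    z = np.zeros((n_steps + 1, 2))
    z[: k_bar + 1] = z_init                        # constant initial function psi

    for k in range(k_bar, n_steps):
        t = ts[k]
        k_prop = int(round((theta * t - t_start) / dt))   # grid index of time theta*t
        hz_now = h(z[k])
        hz_prop = h(z[k_prop])
        decay = np.exp(-t)                         # fuzzy templates decay like e^{-t}
        fuzzy_and = np.min(C0 * decay * hz_prop, axis=1)  # min over j of C_ij h_j(z_j(theta t))
        fuzzy_or = np.max(D0 * decay * hz_prop, axis=1)   # max over j of D_ij h_j(z_j(theta t))
        dz = -alpha * z[k - lag] + A @ hz_now + fuzzy_and + fuzzy_or
        z[k + 1] = z[k] + dt * dz
    return ts, z


ts, z = simulate()
print("sup-norm of the initial function:", np.max(np.abs(z[0])))
print("sup-norm at t_end:", np.max(np.abs(z[-1])))
```

Plotting the columns of `z` against `ts` gives curves of the same type as the two figures. Forward Euler with nearest-grid lookup of the delayed states is the simplest choice and keeps the history handling visible; a dedicated delay-differential-equation solver would be more accurate.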

4 Conclusions

In this article, we have discussed fuzzy cellular neural networks with leakage delays and proportional delays. With the aid of inequality strategies and a fuzzy differential equation approach, a sufficient criterion ensuring the global exponential convergence of neural networks with leakage delays and proportional delays is derived. The sufficient condition can be checked by simple algebraic manipulation. The derived results complement parts of earlier work (for instance, [39–41]). In addition, the method of this article can be used to study other similar network models.

References

Ratnavelu, K., Kalpana, M., Balasubramaniam, P., Wong, K., Raveendran, P.: Image encryption method based on chaotic fuzzy cellular neural networks. Signal Process. 140, 87–96 (2017)

Kimura, M., Morita, R., Sugisaki, S., Matsuda, T., Nakashima, Y.: Cellular neural network formed by simplified processing elements composed of thin-film transistors. Neurocomputing 248, 112–119 (2017)

Marco, M.D., Forti, M., Pancioni, L.: Memristor standard cellular neural networks computing in the flux–charge domain. Neural Netw. 93, 152–164 (2017)

Starkov, S.O., Lavrenkov, Y.N.: Prediction of the moderator temperature field in a heavy water reactor based on a cellular neural network. Nucl. Energy Technol. 3(2), 133–140 (2017)

Ratnavelu, K., Manikandan, M., Balasubramaniam, P.: Design of state estimator for BAM fuzzy cellular neural networks with leakage and unbounded distributed delays. Inf. Sci. 397–398, 91–109 (2017)

Ban, J.C., Chang, C.H.: When are two multi-layer cellular neural networks the same? Neural Netw. 79, 12–19 (2016)

Abbas, S., Xia, Y.H.: Existence and attractivity of k-almost automorphic sequence solution of a model of cellular neural networks with delay. Acta Math. Sci. 33(1), 290–302 (2013)

Balasubramaniam, P., Kalpana, M., Rakkiyappan, R.: Global asymptotic stability of BAM fuzzy cellular neural networks with time delay in the leakage term, discrete and unbounded distributed delays. Math. Comput. Model. 53(5–6), 839–853 (2011)

Qin, S.T., Wang, J., Xue, X.P.: Convergence and attractivity of memristor-based cellular neural networks with time delays. Neural Netw. 63, 223–233 (2015)

Xu, C.J., Li, P.L.: On anti-periodic solutions for neutral shunting inhibitory cellular neural networks with time-varying delays and D operator. Neurocomputing 275, 377–382 (2018)

Jia, R.W.: Finite-time stability of a class of fuzzy cellular neural networks with multi-proportional delays. Fuzzy Sets Syst. 319, 70–80 (2017)

Wu, S.L., Niu, T.C.: Qualitative properties of traveling waves for nonlinear cellular neural networks with distributed delays. J. Math. Anal. Appl. 434(1), 617–632 (2016)

Lin, Y.L., Hsieh, J.G., Jeng, J.H.: Robust decomposition with guaranteed robustness for cellular neural networks implementing an arbitrary Boolean function. Neurocomputing 143, 339–346 (2014)

Zhou, L.Q., Chen, X.B., Yang, Y.X.: Asymptotic stability of cellular neural networks with multiple proportional delays. Appl. Math. Comput. 229, 457–466 (2014)

Jiang, A.: Exponential convergence for shunting inhibitory cellular neural networks with oscillating coefficients in leakage terms. Neurocomputing 165, 159–162 (2015)

Ding, W., Han, M.A.: Synchronization of delayed fuzzy cellular neural networks based on adaptive control. Phys. Lett. A 372(26), 4674–4681 (2008)

Wang, F., Liu, M.C.: Global exponential stability of high-order bidirectional associative memory (BAM) neural networks with time delays in leakage terms. Neurocomputing 177, 515–528 (2016)

Li, Y.K., Yang, L.: Almost automorphic solution for neutral type high-order Hopfield neural networks with delays in leakage terms on time scales. Appl. Math. Comput. 242, 679–693 (2014)

Xie, W.J., Zhu, Q.X., Jiang, F.: Exponential stability of stochastic neural networks with leakage delays and expectations in the coefficients. Neurocomputing 173, 1268–1275 (2016)

Rakkiyappan, R., Lakshmanan, S., Sivasamy, R., Lim, C.P.: Leakage-delay-dependent stability analysis of Markovian jumping linear systems with time-varying delays and nonlinear perturbations. Appl. Math. Model. 40(7–8), 5026–5043 (2016)

Sakthivel, R., Vadivel, P., Mathiyalagan, K., Arunkumar, A., Sivachitra, M.: Design of state estimator for bidirectional associative memory neural networks with leakage delays. Inf. Sci. 296, 263–274 (2015)

Zhang, H., Shao, J.Y.: Almost periodic solutions for cellular neural networks with time-varying delays in leakage terms. Appl. Math. Comput. 219(24), 11471–11482 (2013)

Chen, Y.G., Fu, Z.M., Liu, Y.R., Alsaadi, F.E.: Further results on passivity analysis of delayed neural networks with leakage delay. Neurocomputing 224, 135–141 (2017)

Li, L., Yang, Y.Q.: On sampled-data control for stabilization of genetic regulatory networks with leakage delays. Neurocomputing 149, 1225–1231 (2015)

Wu, A.L., Zeng, Z.G., Zhang, J.: Global exponential convergence of periodic neural networks with time-varying delays. Neurocomputing 78(1), 149–154 (2012)

Wan, P., Jian, J.G.: Global convergence analysis of impulsive inertial neural networks with time-varying delays. Neurocomputing 245, 68–76 (2017)

Yi, X.J., Gong, S.H., Wang, L.J.: Global exponential convergence of an epidemic model with time-varying delays. Nonlinear Anal., Real World Appl. 12(1), 450–454 (2011)

Zhang, Y.N., Shi, Y.Y., Chen, K., Wang, C.L.: Global exponential convergence and stability of gradient-based neural network for online matrix inversion. Appl. Math. Comput. 215(3), 1301–1306 (2009)

Kong, S.L., Zhang, Z.S.: Optimal control of stochastic system with Markovian jumping and multiplicative noises. Acta Autom. Sin. 38(7), 1113–1118 (2012)

Kong, S.L., Saif, M., Zhang, H.S.: Optimal filtering for Itô-stochastic continuous-time systems with multiple delayed measurements. IEEE Trans. Autom. Control 58(7), 1872–1876 (2013)

Tang, X.H., Chen, S.T.: Ground state solutions of Nehari–Pohozaev type for Schrödinger–Poisson problems with general potentials. Discrete Contin. Dyn. Syst., Ser. A 37(9), 4973–5002 (2017)

Tang, X.H., Chen, S.T.: Ground state solutions of Nehari–Pohozaev type for Kirchhoff-type problems with general potentials. Calc. Var. Partial Differ. Equ. 56, 110 (2017)

Chen, S.T., Tang, X.H.: Ground state sign-changing solutions for a class of Schrödinger–Poisson type problems in \(R^{3}\). Z. Angew. Math. Phys. 67(2), 1–18 (2016)

Li, X.D., Song, S.J.: Stabilization of delay systems: delay-dependent impulsive control. IEEE Trans. Autom. Control 62(1), 406–411 (2017)

Li, X.D., Cao, J.D.: An impulsive delay inequality involving unbounded time-varying delay and applications. IEEE Trans. Autom. Control 62(7), 3618–3625 (2017)

Xu, C.J., Li, P.L.: Global exponential stability of periodic solution for fuzzy cellular neural networks with distributed delays and variable coefficients. J. Intell. Fuzzy Syst. 32(2), 2603–2615 (2017)

Xu, C.J., Li, P.L.: pth moment exponential stability of stochastic fuzzy Cohen–Grossberg neural networks with discrete and distributed delays. Nonlinear Anal., Model. Control 22(4), 531–544 (2017)

Yang, T., Yang, L.B.: The global stability of fuzzy cellular neural networks. IEEE Trans. Circuits Syst. I 43(10), 880–883 (1996)

Abdurahman, A., Jiang, H.J., Teng, Z.D.: Finite-time synchronization for fuzzy cellular neural networks with time-varying delays. Fuzzy Sets Syst. 297, 96–111 (2016)

Wang, W.T.: Finite-time synchronization for a class of fuzzy cellular neural networks with time-varying coefficients and proportional delays. In: Fuzzy Sets and Systems (2018) in press

Huang, T.W.: Exponential stability of fuzzy cellular neural networks with distributed delay. Phys. Lett. A 351(1–2), 48–52 (2006)

Acknowledgements

The first author was supported by National Natural Science Foundation of China (No. 61673008), Project of High-level Innovative Talents of Guizhou Province ([2016]5651), Major Research Project of The Innovation Group of The Education Department of Guizhou Province ([2017]039), Project of Key Laboratory of Guizhou Province with Financial and Physical Features ([2017]004) and the Foundation of Science and Technology of Guizhou Province (2018).

Author information


Contributions

All authors have read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Xu, C., Chen, L., Guo, T. et al. Dynamics of FCNNs with proportional delays and leakage delays. Adv Differ Equ 2018, 72 (2018). https://doi.org/10.1186/s13662-018-1525-y
