Abstract

In this paper, we consider a stochastic SIR epidemic model with regime switching. Markov semigroup theory is employed to establish the existence of a unique stable stationary distribution. We prove that, if \(\mathcal{R}^{s}<0\), the disease becomes extinct exponentially; whereas if \(\mathcal{R}^{s}>0\) and \(\beta(i)>\alpha(i)(\varepsilon(i)+\gamma(i))\), \(i\in\mathbb{S}\), the densities of the distributions of the solution converge in \(L^{1}\) to an invariant density.

1 Introduction

The population dynamics of infectious diseases are often influenced by different types of environmental noise, of which white noise is the most typical. The effects of white noise on epidemic models have already been considered by many authors (e.g. [1–4]), who discussed the extinction and persistence of the disease. In reference [4], the authors assumed that the environmental white noise mainly influences the natural death rate μ of the populations. That is, \(\mu \to \mu +\sigma \dot{B}(t)\), where \(B(t)\) is a standard Brownian motion and \(\sigma^{2}\) represents the intensity of the white noise. By replacing \(\mu \,{\mathrm{d}}t\) by \(\mu \,{\mathrm{d}}t+ \sigma\, {\mathrm{d}}B(t)\) in the deterministic SIR model with saturated incidence, the authors obtained a stochastic SIR model, which takes the form of

In this model, \(S(t)\) and \(I(t)\) denote the numbers of susceptible and infected individuals at time t, respectively. The influx of individuals into the susceptible class is given by a constant Λ. The natural death rate is denoted by the constant μ, and individuals in \(I(t)\) suffer additional disease-induced death at the constant rate ε; β and γ represent the disease transmission coefficient and the rate of recovery from infection, respectively; α is the saturation coefficient. Since the dynamics of the compartment R have no effect on the disease transmission dynamics, it was omitted from the classical SIR model. In [4] the authors analyzed the long-time behavior of the densities of the distributions of the solution and proved that the densities converge in \(L^{1}\) to an invariant density.
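For reference, model (1.1) can be written out explicitly from the description above. The display below is a reconstruction, not a verbatim copy: it assumes the saturated incidence has the form \(\beta SI/(1+\alpha I)\) and applies the substitution \(\mu \,{\mathrm{d}}t\to \mu \,{\mathrm{d}}t+\sigma \,{\mathrm{d}}B(t)\) to both equations. It should be checked against [4] before being relied on.

\[
\begin{aligned}
{\mathrm{d}}S(t) &= \biggl[\Lambda -\mu S(t)-\frac{\beta S(t)I(t)}{1+\alpha I(t)}\biggr]\,{\mathrm{d}}t-\sigma S(t)\,{\mathrm{d}}B(t),\\
{\mathrm{d}}I(t) &= \biggl[\frac{\beta S(t)I(t)}{1+\alpha I(t)}-\bigl(\mu +\varepsilon +\gamma \bigr)I(t)\biggr]\,{\mathrm{d}}t-\sigma I(t)\,{\mathrm{d}}B(t).
\end{aligned}
\]

The sign in front of the noise terms does not affect the law of the solution, since \(-B(t)\) is again a standard Brownian motion.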

In reality, besides white noise there is another important type of environmental noise: color noise, which can cause the population system to switch from one environmental regime to another. Such switching is usually described by a continuous-time Markov chain \(r(t)\), \(t\geq 0\), with a finite state space \(\mathbb{S}=\{1,2,\ldots,N\}\). The generator \(\Gamma =(\gamma_{ij})_{N\times N}\) of \(r(t)\) is given by

where \(\gamma_{ij}\geq 0\) for \(i,j=1,\ldots,N\) with \(j\neq i\) and \(\gamma_{ii}=-\sum_{j\neq i}\gamma_{ij}\) for each \(i=1,\ldots,N\). Assume that the Markov chain \(r(t)\) is independent of the Brownian motion \(B(t)\). For convenience, throughout this paper we assume that \(\gamma_{ij}>0\) for all \(i\neq j\).

This assumption ensures that the Markov chain \(r(t)\) is irreducible. Consequently, there exists a unique stationary distribution \(\pi =\{\pi_{1},\pi_{2},\ldots,\pi_{N}\}\) of \(r(t)\) which satisfies \(\pi \Gamma =0\), \(\sum_{i=1}^{N}\pi_{i}=1\) and \(\pi_{i}>0\), \(\forall i \in \mathbb{S}\).
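The conditions \(\pi \Gamma =0\) and \(\sum_{i}\pi_{i}=1\) form a small linear system, so \(\pi\) can be computed directly. The following is a minimal sketch using exact rational arithmetic; the function name `stationary_distribution` is illustrative, and the generator is the two-state one that appears in the example of Section 4.

```python
from fractions import Fraction


def stationary_distribution(gamma):
    """Return pi with pi * Gamma = 0 and sum(pi) = 1 (Gamma irreducible)."""
    n = len(gamma)
    # Rows 0..n-2: balance equations sum_i pi_i * gamma[i][j] = 0 for column j.
    a = [[Fraction(gamma[i][j]) for i in range(n)] + [Fraction(0)]
         for j in range(n - 1)]
    # Row n-1: normalisation sum_i pi_i = 1, replacing one redundant equation.
    a.append([Fraction(1)] * n + [Fraction(1)])
    # Gauss-Jordan elimination on the augmented matrix.
    for col in range(n):
        pivot = next(r for r in range(col, n) if a[r][col] != 0)
        a[col], a[pivot] = a[pivot], a[col]
        a[col] = [v / a[col][col] for v in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col]
                a[r] = [vr - factor * vc for vr, vc in zip(a[r], a[col])]
    return [a[i][n] for i in range(n)]


GAMMA = [[-2, 2], [1, -1]]  # generator from the example in Section 4
pi = stationary_distribution(GAMMA)
print(pi)  # the entries are 1/3 and 2/3, in agreement with Section 4
```

Replacing one balance equation by the normalisation is legitimate because the columns of an irreducible generator satisfy exactly one linear relation (each row sums to zero).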

Incorporating color noise into system (1.1), we get a regime-switching diffusion model:

where \(\Lambda (i)\), \(\beta (i)\), \(\mu (i)\), \(\varepsilon (i)\), \(\gamma (i)\), \(\alpha (i)\) and \(\sigma (i)\), \(i\in \mathbb{S}\), are all positive constants.
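To get a feel for the sample paths of such a regime-switching model, one can simulate it numerically. The sketch below is not the authors' method: it uses a plain Euler–Maruyama scheme in the logarithmic variables \(\ln S\), \(\ln I\) (so that \(S\) and \(I\) stay positive), assumes the saturated incidence \(\beta SI/(1+\alpha I)\) with a common noise term \(-\sigma \,{\mathrm{d}}B(t)\) in both equations, and switches regimes with a first-order approximation of the Markov chain. All parameter values in `PARAMS` are hypothetical and chosen only for illustration; `PARAMS`, `RATES` and `simulate` are illustrative names.

```python
import math
import random

# Hypothetical parameters per regime; NOT the values used for the figures.
PARAMS = {
    1: dict(Lam=1.0, beta=0.8, mu=0.2, eps=0.1, gam=0.2, alpha=0.5, sigma=0.2),
    2: dict(Lam=1.0, beta=0.1, mu=0.3, eps=0.2, gam=0.3, alpha=0.5, sigma=0.3),
}
# Off-diagonal switching rates gamma_ij (here gamma_12 = 2, gamma_21 = 1).
RATES = {1: {2: 2.0}, 2: {1: 1.0}}


def simulate(s0, i0, r0, t_end, dt, rng):
    """Euler-Maruyama in log coordinates; returns a list of (t, S, I, regime)."""
    x, y, r = math.log(s0), math.log(i0), r0
    path = [(0.0, s0, i0, r)]
    for k in range(1, int(round(t_end / dt)) + 1):
        p = PARAMS[r]
        s, i = math.exp(x), math.exp(y)
        force = p["beta"] / (1.0 + p["alpha"] * i)   # saturated incidence factor
        ito = 0.5 * p["sigma"] ** 2                  # Ito correction for the log
        db = rng.gauss(0.0, math.sqrt(dt))           # one increment drives both
        x += (p["Lam"] / s - p["mu"] - ito - force * i) * dt - p["sigma"] * db
        y += (force * s - p["mu"] - p["eps"] - p["gam"] - ito) * dt - p["sigma"] * db
        # First-order switching: jump to `target` with probability rate * dt.
        for target, rate in RATES[r].items():
            if rng.random() < rate * dt:
                r = target
                break
        path.append((k * dt, math.exp(x), math.exp(y), r))
    return path


path = simulate(s0=2.0, i0=1.0, r0=1, t_end=10.0, dt=0.001, rng=random.Random(0))
t, s, i, r = path[-1]
print(f"t={t:.1f}  S={s:.4f}  I={i:.4f}  regime={r}")
```

Working in log coordinates is a design choice: an Euler step applied directly to \(S\) and \(I\) can overshoot below zero, whereas exponentiating the log variables cannot.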

In the earlier literature, regime switching was introduced into population models; see e.g. [5–8]. Owing to the important effect of color noise on disease transmission, many authors have considered deterministic epidemic models with Markovian switching [9, 10].

Recently, many papers have considered the asymptotic behavior of stochastic epidemic models under regime switching, e.g. [11–14]. In these works the uniform ellipticity condition is needed to prove the ergodicity of the stochastic system. For system (1.2), however, the diffusion matrix is given by \(A_{i}=\sigma^{2}(i) \begin{pmatrix} S^{2} & SI \\ SI & I^{2}\end{pmatrix}\), \(i\in \mathbb{S}\). Since \(A_{i}\) is degenerate, the uniform ellipticity condition is no longer satisfied. System (1.2) with \(\alpha =\varepsilon =\gamma =0\) has been considered by Liu [12], but the author ignored the fact that a degenerate diffusion matrix cannot ensure uniform ellipticity.
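The degeneracy of \(A_{i}\) can be verified in one line, using only the matrix given in the text:

\[
A_{i}=\sigma^{2}(i)\begin{pmatrix} S \\ I \end{pmatrix}\begin{pmatrix} S & I \end{pmatrix},
\qquad
\det A_{i}=\sigma^{4}(i)\bigl(S^{2}I^{2}-(SI)^{2}\bigr)=0 .
\]

Hence \(A_{i}\) has rank one at every point, and the quadratic form \(\xi 'A_{i}\xi =\sigma^{2}(i)(S\xi_{1}+I\xi_{2})^{2}\) vanishes for \(\xi =(I,-S)'\). No bound of the form \(\xi 'A_{i}\xi \geq c \vert \xi \vert ^{2}\) with \(c>0\) can therefore hold, which is exactly the failure of uniform ellipticity.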

Throughout this paper, if A is a vector or matrix, we use \(A'\) to denote its transpose; set \(\hat{g}=\min_{k\in \mathbb{S}}\{g(k)\}\) and \(\check{g}=\max_{k\in \mathbb{S}}\{g(k)\}\) for any vector \(g=(g(1),\ldots,g(N))\); set

where \(\boldsymbol{\nu} =(\nu_{1},\ldots,\nu_{N})'\) is the unique positive solution of the linear equation

Remark 1.1

The existence of the unique solution of (1.3) is given by Lemma 2.1 in [12].

By using arguments similar to those of Theorem 2.1 of [14], it follows that, for any \((S(0),I(0), r(0))\in \mathbb{R}^{2}_{+} \times \mathbb{S}\), system (1.2) has a unique global solution, which remains in \(\mathbb{R}^{2}_{+}\) with probability 1.

The aim of this paper is to consider the long-time behavior of system (1.2). We prove that the disease becomes extinct exponentially if \(\mathcal{R}^{s}<0\); whereas if \(\mathcal{R}^{s}>0\) and \(\beta (i)>\alpha (i)(\varepsilon (i)+ \gamma (i))\), \(i\in \mathbb{S}\), system (1.2) has a stable stationary distribution.

The rest of this paper is organized as follows. In Section 2, we present the sufficient condition for the extinction of the disease. In Section 3 the conditions for the existence of a stable stationary distribution are given. Finally, we draw a conclusion.

2 Extinction of the disease

In this section, we present the sufficient condition for the extinction of the disease.

Theorem 2.1

If \(\mathcal{R}^{s}<0\), the disease \(I(t)\) tends to zero exponentially.

Proof

Consider the following system:

By the stochastic comparison theorem, it follows that \(S(t)\leq X(t)\) a.s. if \(S(0)=X(0)>0\). According to Corollary 4.1 in [12], we have

By using the generalized Itô formula, it follows from (1.2) that

Taking \(t\to \infty \), in view of (2.1), it follows that

The proof is complete. □

3 Existence of stationary distribution and its stability

Let \(x(t)=\ln S(t)\) and \(y(t)=\ln I(t)\). Then system (1.2) becomes

where \(c_{1}(i):=\mu (i)+\frac{\sigma^{2}(i)}{2}\), \(c_{2}(i):=\mu {(i)}+\varepsilon (i)+\gamma (i)+\frac{\sigma^{2}(i)}{2}\).
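The constants \(c_{1}\) and \(c_{2}\) arise from the Itô correction in the logarithmic change of variables. As a sketch, assume that system (1.2) has the saturated incidence \(\beta SI/(1+\alpha I)\) and that both components carry the noise term \(-\sigma (r(t))\,{\mathrm{d}}B(t)\) (this form is an assumption, reconstructed from the description in Section 1). Applying Itô's formula to \(\ln S\) and \(\ln I\), where each logarithm contributes a drift of \(-\frac{1}{2}\sigma^{2}\), gives, with \(r=r(t)\),

\[
\begin{aligned}
{\mathrm{d}}x(t) &= \biggl[\Lambda (r)e^{-x(t)}-c_{1}(r)-\frac{\beta (r)e^{y(t)}}{1+\alpha (r)e^{y(t)}}\biggr]\,{\mathrm{d}}t-\sigma (r)\,{\mathrm{d}}B(t),\\
{\mathrm{d}}y(t) &= \biggl[\frac{\beta (r)e^{x(t)}}{1+\alpha (r)e^{y(t)}}-c_{2}(r)\biggr]\,{\mathrm{d}}t-\sigma (r)\,{\mathrm{d}}B(t).
\end{aligned}
\]

In these variables the noise is additive, and the term \(\frac{1}{2}\sigma^{2}(i)\) is what turns \(\mu (i)\) into \(c_{1}(i)\) and \(\mu (i)+\varepsilon (i)+\gamma (i)\) into \(c_{2}(i)\).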

In order to investigate the existence of stationary distribution of system (1.2) and its stability, it suffices to consider the corresponding property for system (3.1).

Theorem 3.1

Let \((x(t),y(t))\) be a solution of system (3.1) with initial value \((x(0),y(0),r(0))\in \mathbb{R}^{2}\times \mathbb{S}\). Then for every \(t>0\) the distribution of \((x(t),y(t),r(t))\) has a density \(u(t,x,y,i)\). If \(\mathcal{R}^{s}>0\) and \(\beta (i)>\alpha (i)(\varepsilon (i)+\gamma (i))\), \(i\in \mathbb{S}\), then there exists a unique density \(u_{*}(x,y,i)\) such that

Next, we will prove this theorem by Lemmas 3.1–3.2.

Let \((x^{(i)}(t),y^{(i)}(t))\) be a solution of system

Denote by \({\mathcal{A}}_{i}\) the differential operators

where \(\mathcal{B}(\mathbb{R}^{2})\) is the σ-algebra of Borel subsets of \(\mathbb{R}^{2}\), m is the Lebesgue measure on \((\mathbb{R}^{2},\mathcal{B}(\mathbb{R}^{2}))\) and

According to Lemma 3.3 and Lemma 3.5 in [4], we know that for any \(i\in \mathbb{S}\) the operator \({\mathcal{A}}_{i}\) generates an integral Markov semigroup \(\{{\mathcal{T}}_{i}(t)\}_{t \geq 0}\) on the space \(L^{1}(\mathbb{R}^{2},\mathcal{B}(\mathbb{R} ^{2}),m)\) and

Let \((x(t),y(t))\) be the unique solution of system (3.1) with \((x(0),y(0),r(0))\in \mathbb{R}^{2}\times \mathbb{S}\); then \((x(t),y(t),r(t))\) constitutes a Markov process on \(\mathbb{R}^{2} \times \mathbb{S}\). In view of Lemma 5.5 in [15], for \(t>0\) the distribution of the process \((x(t),y(t), r(t))\) is absolutely continuous and its density \(u=(u_{1},u_{2},\ldots,u_{N})\) (where \(u_{i}:=u(t,x,y,i)\)) satisfies the following master equation:

where \({\mathcal{A}}u=({\mathcal{A}}_{1}u_{1},{\mathcal{A}}_{2}u_{2},\ldots,{\mathcal{A}}_{N}u_{N})'\).

Let \(X=\mathbb{R}^{2}\times \mathbb{S}\), Σ be the σ-algebra of Borel subsets of X, and \(\hat{m}\) be the product measure on \((X,\Sigma )\) given by \(\hat{m}(B\times \{i\})=m(B)\) for each \(B\in \mathcal{B}(\mathbb{R}^{2})\) and \(i\in \mathbb{S}\). Obviously, \({\mathcal{A}}u\) generates a Markov semigroup \(\{{\mathcal{T}}(t)\} _{t\geq 0}\) on the space \(L^{1}(X,\Sigma ,\hat{m})\), which is given by

Let λ be a constant such that \(\lambda >\max_{1\leq i\leq N} \{-\gamma_{ii}\}\) and \(Q=\lambda^{-1}\Gamma '+I\). Then (3.4) becomes

Obviously, Q is also a Markov operator on \(L^{1}(X,\Sigma ,\hat{m})\).
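The Markov property of \(Q\) comes down to two elementary facts: its entries are nonnegative because \(\lambda >-\gamma_{ii}\), and each of its columns sums to 1 because each row of Γ sums to 0, so \(Q\) preserves the total mass of a density \(u=(u_{1},\ldots,u_{N})'\). The following minimal sketch checks this for the two-state generator from the example in Section 4; the helper name `q_matrix` and the choice \(\lambda =3\) are illustrative assumptions.

```python
from fractions import Fraction


def q_matrix(gamma, lam):
    """Return Q = Gamma' / lam + I for a generator Gamma, lam > max_i(-gamma_ii)."""
    n = len(gamma)
    if lam <= max(-gamma[i][i] for i in range(n)):
        raise ValueError("lam must exceed max_i(-gamma_ii)")
    # Entry (i, j) of the transpose Gamma' is gamma[j][i].
    return [[Fraction(gamma[j][i]) / lam + (1 if i == j else 0)
             for j in range(n)] for i in range(n)]


GAMMA = [[-2, 2], [1, -1]]   # generator from the example in Section 4
Q = q_matrix(GAMMA, lam=3)   # lam = 3 is an arbitrary admissible choice
column_sums = [sum(Q[i][j] for i in range(2)) for j in range(2)]
nonnegative = all(entry >= 0 for row in Q for entry in row)
print(Q, column_sums, nonnegative)
```

Column sums (rather than row sums) are the relevant quantity here because \(Q\) acts on densities from the left, through the transpose \(\Gamma '\).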

From the Phillips perturbation theorem [16], (3.5) with the initial condition \(u(0,x,y,k)=f(x,y,k)\) generates a Markov semigroup \(\{\mathcal{P}(t)\} _{t\geq 0}\) on the space \(L^{1}(X)\) given by

where \(S^{(0)}(t)={\mathcal{T}}(t)\) and

Lemma 3.1

If \(\beta (i)>\alpha (i)(\varepsilon (i)+\gamma (i))\), \(i\in \mathbb{S}\), then the semigroup \(\{\mathcal{P}(t)\}_{t\geq 0}\) is asymptotically stable or is sweeping with respect to compact sets.

Proof

Since \(\{\mathcal{T}(t)\}_{t\geq 0}\) is an integral Markov semigroup, \(\{\mathcal{P}(t)\}_{t\geq 0}\) is a partially integral Markov semigroup. In view of (3.3), (3.7) and \(Q_{ij}>0\) (\(i \neq j\)), we know that, for every nonnegative \(f\in L^{1}(X)\) with \(\Vert f \Vert =1\),

By using similar arguments to Corollary 1 in [17], it follows that \(\{\mathcal{P}(t)\}_{t\geq 0}\) is asymptotically stable or is sweeping with respect to compact sets. □

Remark 3.1

A density \(f_{*}\) is called invariant if \(\mathcal{{P}}(t)f_{*}=f_{*}\) for each \(t>0\). The Markov semigroup \(\{\mathcal{{P}}(t)\}_{t\geq 0}\) is called asymptotically stable if there is an invariant density \(f_{*}\) such that

where \(D=\{f\in L^{1}(X):f\geq 0, \Vert f \Vert =1\}\).

A Markov semigroup \(\{\mathcal{{P}}(t)\}_{t\geq 0}\) is called sweeping with respect to a set \(A\in \Sigma \) if for every \(f\in D\)

Lemma 3.2

If \({\mathcal{R}}^{s}>0\) and \(\beta (i)>\alpha (i)(\varepsilon (i)+ \gamma (i))\), \(i\in \mathbb{S}\), then the semigroup \(\{\mathcal{{P}}(t)\} _{t\geq 0}\) is asymptotically stable.

Proof

We will construct a nonnegative \(C^{2}\)-function V and a closed set \(U\in \mathcal{B}(\mathbb{R}^{2})\) (which lies entirely in \(\mathbb{R}^{2}\)) such that, for any \(i\in \mathbb{S}\),

where

and

In fact, \(\mathscr{A}^{*}\) is the adjoint operator of the infinitesimal generator of the semigroup \(\{\mathcal{{P}}(t)\}_{t\geq 0}\).

Since the matrix Γ is irreducible, there exists \(\varpi =( \varpi_{1},\varpi_{2},\ldots,\varpi_{N})\) that is a solution of the Poisson system (see [18], Lemma 2.3) such that

where \({\mathcal{R}}=({\mathcal{R}}_{1},{\mathcal{R}}_{2},\ldots, {\mathcal{R}}_{N})'\) and \(\mathbf{{1}}=(1,1,\ldots,1)'\). That is, for any \(i\in \mathbb{S}\),
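The solvability of a Poisson system rests on a standard fact for irreducible generators: \(\Gamma c=\eta\) has a solution exactly when \(\pi \eta =0\). The sketch below illustrates this for a centred right-hand side \(\eta =(\sum_{k}\pi_{k}{\mathcal{R}}_{k})\mathbf{1}-{\mathcal{R}}\), which satisfies \(\pi \eta =0\) automatically. Both this particular sign convention and the numerical values used for \({\mathcal{R}}\) are assumptions made for illustration, and the names `solve` and `poisson_solution` are hypothetical.

```python
from fractions import Fraction


def solve(a, b):
    """Gauss-Jordan elimination over exact fractions (nonsingular system)."""
    n = len(a)
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [v / m[col][col] for v in m[col]]
        for r in range(n):
            if r != col and m[r][col] != 0:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n] for row in m]


def poisson_solution(gamma, pi, rates):
    """Solve Gamma w = (pi . rates) 1 - rates, normalised by w[-1] = 0."""
    n = len(gamma)
    mean = sum(p * Fraction(r) for p, r in zip(pi, rates))
    eta = [mean - Fraction(r) for r in rates]
    # Solutions are unique up to adding a multiple of 1, so fix the last
    # unknown at 0 and drop the last equation, which is then redundant.
    head = solve([row[:n - 1] for row in gamma[:n - 1]], eta[:n - 1])
    return head + [Fraction(0)], eta


GAMMA = [[-2, 2], [1, -1]]                 # generator from Section 4
PI = [Fraction(1, 3), Fraction(2, 3)]      # its stationary distribution
RATES = [Fraction(1, 2), Fraction(-1, 10)] # hypothetical values of R_1, R_2
w, eta = poisson_solution(GAMMA, PI, RATES)
residual = [sum(g * x for g, x in zip(row, w)) - e for row, e in zip(GAMMA, eta)]
print(w, residual)
```

The dropped equation is satisfied automatically, which is the content of the compatibility condition \(\pi \eta =0\); the zero residual in every row confirms it for this example.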

Take \(\theta \in (0,1)\) and \(r>0\) such that

where the function \(f(x)\) is given in (3.11).

Define a \(C^{2}\)-function V as follows:

Next we will find a closed set \(U\subset \mathbb{R}^{2}\) such that \(\mathscr{A}^{*}V(x,y,i)\leq -1\), \((x,y)\in \mathbb{R}^{2}-U\).

Denote

Then

Direct calculation implies that

and

where equations (1.3) and (3.9) are used. Hence,

where

In view of (3.10), we can obtain

Take \(\kappa \in (0,\infty )\) large enough such that

where \(U_{1}=\{(x,y)\in \mathbb{R}^{2}:x\in [-\kappa ,\kappa ]\}\).

For \((x,y)\in U_{1}\), according to (3.10) we have

and

Take \(\rho \in (0,\infty )\) large enough such that

where \(U_{2}=\{(x,y)\in \mathbb{R}^{2}:x\in [-\kappa ,\kappa ], y \in [-\rho ,\rho ]\}\). Noting \(U_{2}\subset U_{1}\), we obtain \((\mathbb{R}^{2}-U_{1})\cup (U_{1}-U_{2})=\mathbb{R}^{2}-U_{2}\). Combining (3.12) and (3.13), it follows that, for any \(i\in \mathbb{S}\),

Such a function V is called a Khasminskiĭ function. By using similar arguments to those in [19], the existence of a Khasminskiĭ function implies that the semigroup is not sweeping from the set \(U_{2}\). According to Lemma 3.1, the semigroup \(\{\mathcal{{P}}(t)\}_{t\geq 0}\) is asymptotically stable, which completes the proof. □

4 Conclusion

In this paper, we consider the long-time behavior of a regime-switching SIR epidemic model. Since the diffusion is degenerate, we employ Markov semigroup theory to study the long-time behavior of system (1.2). We prove that if \(\mathcal{R}^{s}<0\), the disease becomes extinct exponentially; whereas if \(\mathcal{R}^{s}>0\) and \(\beta (i)>\alpha (i)(\varepsilon (i)+ \gamma (i))\), \(i\in \mathbb{S}\), the densities of the distributions of the solution converge in \(L^{1}\) to an invariant density. In this sense, \(\mathcal{R}^{s}\) is the threshold that determines whether the disease persists or dies out.

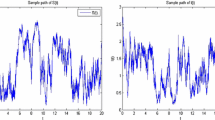

Let \(\mathbb{S}=\{1,2\}\), \(\Gamma = \begin{bmatrix} -2 & 2 \\ 1 & -1 \end{bmatrix}\). Obviously, \(\pi =(1/3, 2/3)\). Take \(S(0)=2\), \(I(0)=1\) and

It is easy to check that the disease persists and becomes extinct in fixed environments 1 and 2, respectively. However, in the regime-switching case, we get \(\mathcal{R}^{s}=0.1042>0\). According to Theorem 3.1, the disease is persistent (see Figure 1). In fact, the condition \(\beta (i)>\alpha (i)(\varepsilon (i)+\gamma (i))\), \(i\in \mathbb{S}\) is not necessary (see Figure 2). In the proof of Theorem 3.1, such a condition is mainly used to ensure that the support of the invariant measure (if it exists) in each fixed environment is \(\mathbb{R}^{2}_{+}\), which can make the proof of the main result easier.

References [20, 21] provide another framework for proving the ergodicity of stochastic population systems, in which rates of convergence can also be estimated. In the future, we may continue our research in this direction. In addition, delay is a common phenomenon in the natural world, so it is interesting to consider stochastic models with delay (see e.g. [22, 23]), which can lead to further investigation along the lines of the present paper.

References

[1] Tornatore, E., Buccellato, S., Vetro, P.: Stability of a stochastic SIR system. Physica A 354, 111–126 (2005)

[2] Gray, A., Greenhalgh, D., Hu, L., et al.: A stochastic differential equation SIS epidemic model. SIAM J. Appl. Math. 71, 876–902 (2011)

[3] Ji, C., Jiang, D., Yang, Q., Shi, N.: Dynamics of a multigroup SIR epidemic model with stochastic perturbation. Automatica 48, 121–131 (2012)

[4] Lin, Y., Jiang, D., Jin, M.: Stationary distribution of a stochastic SIR model with saturated incidence and its asymptotic stability. Acta Math. Sci. 35B, 619–629 (2015)

[5] Luo, Q., Mao, X.: Stochastic population dynamics under regime switching. J. Math. Anal. Appl. 334, 69–84 (2007)

[6] Li, X., Jiang, D., Mao, X.: Population dynamical behavior of Lotka–Volterra system under regime switching. J. Comput. Appl. Math. 232, 427–448 (2009)

[7] Zhu, C., Yin, G.: On hybrid competitive Lotka–Volterra ecosystems. Nonlinear Anal. 71, e1370–e1379 (2009)

[8] Liu, M., He, X., Yu, J.: Dynamics of a stochastic regime-switching predator–prey model with harvesting and distributed delays. Nonlinear Anal. Hybrid Syst. 28, 87–104 (2018)

[9] Greenhalgh, D., Liang, Y., Mao, X.: Modelling the effect of telegraph noise in the SIRS epidemic model using Markovian switching. Physica A 462, 684–704 (2016)

[10] Li, D., Liu, S., Cui, J.: Threshold dynamics and ergodicity of an SIRS epidemic model with Markovian switching. J. Differ. Equ. 263, 8873–8915 (2017)

[11] Zhang, X., Jiang, D., Alsaedi, A., et al.: Stationary distribution of stochastic SIS epidemic model with vaccination under regime switching. Appl. Math. Lett. 59, 87–93 (2016)

[12] Liu, Q.: The threshold of a stochastic susceptible-infective epidemic model under regime switching. Nonlinear Anal. Hybrid Syst. 21, 49–58 (2016)

[13] Settati, A., Lahrouz, A.: Asymptotic properties of switching diffusion epidemic model with varying population size. Appl. Math. Comput. 219, 11134–11148 (2013)

[14] Han, Z., Zhao, J.: Stochastic SIRS model under regime switching. Nonlinear Anal., Real World Appl. 14, 352–364 (2013)

[15] Xi, F.: On the stability of jump-diffusions with Markovian switching. J. Math. Anal. Appl. 341, 588–600 (2008)

[16] Lasota, A., Mackey, M.C.: Chaos, Fractals and Noise. Stochastic Aspects of Dynamics. Springer Applied Mathematical Sciences, vol. 97. New York (1994)

[17] Rudnicki, R.: Long-time behaviour of a stochastic prey–predator model. Stoch. Process. Appl. 108, 93–107 (2003)

[18] Khasminskii, R.Z., Zhu, C., Yin, G.: Stability of regime-switching diffusions. Stoch. Process. Appl. 117, 1037–1051 (2007)

[19] Pichór, K., Rudnicki, R.: Stability of Markov semigroups and applications to parabolic systems. J. Math. Anal. Appl. 215, 56–74 (1997)

[20] Dieu, N.T., Nguyen, D.H., Du, N.H., Yin, G.: Classification of asymptotic behavior in a stochastic SIR model. SIAM J. Appl. Dyn. Syst. 15(2), 1062–1084 (2016)

[21] Hening, A., Nguyen, D.H.: Coexistence and extinction for stochastic Kolmogorov systems. Ann. Appl. Probab. To appear (2017). https://arxiv.org/abs/1704.06984

[22] Liu, M., Fan, M.: Stability in distribution of a three-species stochastic cascade predator–prey system with time delays. IMA J. Appl. Math. 82, 396–423 (2017)

[23] Liu, M., Bai, C., Jin, Y.: Population dynamical behavior of a two-predator one-prey stochastic model with time delay. Discrete Contin. Dyn. Syst. 37, 2513–2538 (2017)

Acknowledgements

The authors thank the anonymous referees for valuable suggestions, which led to improvement of the original manuscript. The work was supported by the Education Department of Jilin Province (Grant No: [2016]47).

Contributions

All authors contributed equally to this article. They read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Cite this article

Jin, M., Lin, Y. & Pei, M. Asymptotic behavior of a regime-switching SIR epidemic model with degenerate diffusion. Adv Differ Equ 2018, 84 (2018). https://doi.org/10.1186/s13662-018-1505-2
