Abstract

This paper is concerned with the Mittag-Leffler stability of fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument. Applying the Lyapunov method, the generalized Gronwall-Bellman inequality, and the theory of fractional-order differential equations, sufficient conditions are presented to guarantee the existence and uniqueness of solution. Besides, the global Mittag-Leffler stability is investigated. The obtained criteria are useful in the analysis and design of fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument. A numerical example is given to substantiate the validity of the theoretical results.

1 Introduction

Recently, neural networks have attracted much attention due to their great potential in various areas, such as signal processing, associative memory, and pattern recognition [1–18]. As a general class of recurrent neural networks, Cohen-Grossberg neural networks, which include Hopfield neural networks and cellular neural networks as two special cases, were proposed by Cohen and Grossberg in 1983. Since then, many researchers have investigated this type of neural network extensively [19–24]. In [19], the robust stability of integer-order Cohen-Grossberg neural networks is explored based on the comparison principle. Liu et al. [20] investigate the multistability of Cohen-Grossberg neural networks with nonlinear activation functions in any open interval. In addition, the dynamic properties of Cohen-Grossberg neural networks can describe the evolution of the competition between species in living nature, where the equilibrium points stand for the survival or extinction of the species.

From the viewpoint of mathematics, fractional calculus generalizes integer-order calculus. Meanwhile, fractional derivatives can depict real situations more accurately than integer-order derivatives, especially when the situations possess hereditary properties or have memory. Due to these facts, fractional-order systems play an important role in scientific modeling and practical applications [25–35]. In [25], the Mittag-Leffler stability of fractional-order memristive neural networks is investigated by utilizing the Lyapunov method. In [29], global Mittag-Leffler stability and asymptotic ω-periodicity of fractional-order fuzzy neural networks with time-varying input are considered. The Mittag-Leffler stability for a general class of Hopfield neural networks is explored in [33] by using the generalized second Lyapunov method. Because of the difference between fractional-order systems and integer-order systems, the analysis methods for integer-order systems cannot be applied directly to fractional-order systems. The investigation of fractional-order systems is still at an early stage. Furthermore, the study of fractional-order systems is complex due to the absence of general approaches. Hence, the investigation of the dynamics of fractional-order systems is a valuable and challenging problem.

Fuzzy logic can represent fuzzy uncertainty and has the advantage of simulating the human way of thinking. Traditional neural networks equipped with fuzzy logic are called fuzzy neural networks; they broaden the range of application of traditional neural networks. Studies have shown that fuzzy neural networks are useful models for exploring human cognitive activities. There are many profound reports about fuzzy neural networks (see, for example, [36–40]).

Neural networks with deviating argument, which were introduced into the model of recurrent neural networks by Akhmet et al. [41], are suitable for modeling situations in physics, economy, and biology. In these situations, not only past but also future events are critical for the current properties. The deviating argument changes its type from advanced to retarded alternately and it can link past and future events [42–49]. Neural networks with deviating argument combine continuous neural networks and discrete neural networks. Hence, this type of neural network has the properties of both. From a mathematical perspective, these neural networks are of a mixed type: as the process evolves, the deviating state is alternately advanced and retarded. The dynamic behavior of this type of neural network has been studied extensively [50–52] and deserves further investigation.

Inspired by the above discussions, this paper investigates the global Mittag-Leffler stability of fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument. Roughly stated, the contributions of this paper are threefold:

- The existence and uniqueness of solution for fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument are addressed.

- Sufficient conditions are derived to guarantee the global Mittag-Leffler stability of fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument.

- The existing approaches for the stability of neural networks cannot be applied straightforwardly to fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument. In accordance with the theory of differential equations with deviating argument and in conjunction with the properties of fractional-order calculus, the global Mittag-Leffler stability of such type of neural networks is explored in detail.

The rest of the paper is arranged as follows. Some preliminaries and model descriptions are presented in Section 2. The main results are stated in Section 3. The validity of the obtained results is substantiated in Section 4. Concluding remarks are given in Section 5.

2 Preliminaries and model description

2.1 Preliminaries about fractional-order calculus

Let us give a brief introduction to fractional calculus with some concepts, definitions, and useful lemmas.

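The Caputo derivative of the function \(\mathcal{F}(\cdot) \in C^{n+1}([t_{0}, +\infty),R)\) with order q is defined by

$$ {}_{t_{0}}D_{t}^{q}\mathcal{F}(t)=\frac{1}{\Gamma(n-q)} \int_{t_{0}}^{t}\frac{\mathcal{F}^{(n)}(s)}{(t-s)^{q-n+1}}\,\mathrm{d}s. $$

Correspondingly, the fractional integral of the function \(\mathcal{F}(\cdot)\) with order q is defined by

$$ {}_{t_{0}}I_{t}^{q}\mathcal{F}(t)=\frac{1}{\Gamma(q)} \int_{t_{0}}^{t}(t-s)^{q-1}\mathcal{F}(s)\,\mathrm{d}s, $$

where \(t\geq t_{0}\), n is a positive integer such that \(n-1< q< n\), and \(\Gamma(q){=\int_{0}^{+\infty}r^{q-1}\exp(-r)\,\mathrm {d}r}\) is the Gamma function.

The one-parameter Mittag-Leffler function is defined as

$$ E_{q}(s)=\sum_{k=0}^{+\infty}\frac{s^{k}}{\Gamma(kq+1)}, $$

while the two-parameter Mittag-Leffler function is defined as

$$ E_{q,p}(s)=\sum_{k=0}^{+\infty}\frac{s^{k}}{\Gamma(kq+p)}, $$

where \(q>0\), \(p>0\), and s is a complex number.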
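As a numerical companion to these definitions, the following is a minimal sketch that evaluates the two-parameter Mittag-Leffler function for real arguments by truncating its power series \(\sum_{k\geq0}s^{k}/\Gamma(kq+p)\). The function name `mittag_leffler`, the tolerance, and the term limit are illustrative choices and not part of the paper; the truncated series should only be trusted for moderate \(|s|\).

```python
import math

def mittag_leffler(s, q, p=1.0, tol=1e-16, max_terms=2000):
    """Truncated series for E_{q,p}(s) = sum_k s**k / Gamma(k*q + p), real s.

    Illustrative sketch only: accurate for moderate |s|; for large negative s
    the alternating series loses digits to cancellation.
    """
    if q <= 0 or p <= 0:
        raise ValueError("q and p must be positive")
    if s == 0.0:
        return 1.0 / math.gamma(p)
    log_abs_s = math.log(abs(s))
    total = 0.0
    prev = math.inf
    for k in range(max_terms):
        # Work with logarithms so s**k and Gamma(k*q + p) cannot overflow separately.
        magnitude = math.exp(k * log_abs_s - math.lgamma(k * q + p))
        sign = -1.0 if (s < 0 and k % 2 == 1) else 1.0
        total += sign * magnitude
        # Stop once the terms are decreasing and negligible.
        if magnitude < prev and magnitude < tol * max(1.0, abs(total)):
            break
        prev = magnitude
    return total

# Sanity checks against classical special cases.
print(mittag_leffler(1.0, 1.0), math.e)            # E_1(1) = e
print(mittag_leffler(-0.49, 2.0), math.cos(0.7))   # E_2(-x^2) = cos(x)
```

The special cases \(E_{1}(s)=\exp(s)\) and \(E_{2}(-x^{2})=\cos x\) give a quick way to confirm that an implementation of the series is wired correctly.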

2.2 Model

Let \(\mathcal{N}\) denote the set of natural numbers and let \(\mathcal{R}^{+}\) and \(\mathcal{R}^{n}\) stand for the set of nonnegative real numbers and the n-dimensional Euclidean space, respectively. For a vector \(x \in\mathcal{R}^{n}\), its norm is defined as \(\|x\|=\sum_{i=1}^{n}|x_{i}|\). Take two real-valued sequences \(\{\eta_{k}\}\) and \(\{\xi_{k}\}\), \(k \in\mathcal{N}\), satisfying \(\eta_{k}<\eta_{k+1}\), \(\eta_{k}\leq\xi_{k}\leq\eta_{k+1}\) for any \(k \in\mathcal{N}\), and \(\lim_{k\rightarrow+\infty}\eta_{k}=+\infty\).

In this paper, we consider a general class of fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument described by the following fractional-order differential equations:

where the fractional order q satisfies \(0< q<1\), \(x_{i}(t)\) is the state of the ith neuron, \(\omega_{i}(\cdot)\) and \(\alpha_{i}(\cdot)\) are continuous functions, \(b_{ij}\) and \(c_{ij}\) are synaptic weights from the ith neuron to the jth neuron at time t and \(\gamma(t)\), respectively, \(d_{ij}\) denotes the synaptic weight for the bias of the ith neuron, \(h_{ij}\), \(l_{ij}\), \(p_{ij}\), and \(r_{ij}\) signify the synaptic weights of fuzzy local operations, and ⋀ and ⋁ stand for the fuzzy AND and fuzzy OR operation, respectively. The piecewise constant function \(\gamma(t)=\xi_{k}\), for \(t \in[\eta_{k},\eta_{k+1})\), \(k \in\mathcal{N}\), \(t \in \mathcal{R}^{+}\), is called a deviating argument. \(f_{j}(\cdot)\) and \(g_{j}(\cdot)\) are activation functions, while \(u_{j}\) and \(I_{i}\) represent the bias and the input, respectively.

It is clear that (1) is of the mixed type. For \(t \in[\eta_{k},\xi_{k})\), we have \(\gamma(t)=\xi_{k}>t\), so (1) is an advanced system. For \(t \in(\xi_{k},\eta_{k+1})\), we have \(\gamma(t)=\xi_{k}< t\), so (1) is a retarded system. Hence, (1) changes its deviation type during the process.
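The switching between advanced and retarded behavior can be sketched in a few lines. The helper `deviating_argument` below is a hypothetical name introduced for illustration; it looks up \(\gamma(t)=\xi_{k}\) on \([\eta_{k},\eta_{k+1})\) and reports the deviation type. The sample sequences are the ones used in Example 1 of Section 4, \(\eta_{k}=k/20\) and \(\xi_{k}=(2k+1)/40\), truncated to a finite table.

```python
from bisect import bisect_right

def deviating_argument(t, eta, xi):
    """Return (gamma(t), kind), where gamma(t) = xi[k] for t in [eta[k], eta[k+1])."""
    k = bisect_right(eta, t) - 1
    if k < 0 or k + 1 >= len(eta):
        raise ValueError("t lies outside the tabulated switching times")
    gamma_t = xi[k]
    if gamma_t > t:
        kind = "advanced"   # the system looks ahead to the state at xi[k]
    elif gamma_t < t:
        kind = "retarded"   # the system looks back to the state at xi[k]
    else:
        kind = "none"       # t coincides with xi[k]
    return gamma_t, kind

# Sequences from Example 1: eta_k = k/20, xi_k = (2k+1)/40 (finite table).
eta = [k / 20 for k in range(41)]
xi = [(2 * k + 1) / 40 for k in range(40)]

for t in (0.06, 0.09):
    print(t, deviating_argument(t, eta, xi))
```

Both sample times fall in \([\eta_{1},\eta_{2})=[0.05,0.1)\), where \(\gamma(t)=\xi_{1}=0.075\); the first lies before \(\xi_{1}\) and the second after it.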

2.3 Definitions and lemmas

In this subsection, some useful definitions and lemmas are given as follows.

Definition 2.1

See [19]

An equilibrium point of (1) is a constant vector \(x^{*}= (x_{1}^{*},x_{2}^{*},\ldots,x_{n}^{*})^{T}\), such that

Definition 2.2

See [25]

The equilibrium point \(x^{*}=(x_{1}^{*},x_{2}^{*}, \ldots,x_{n}^{*})^{T}\) of (1) is globally Mittag-Leffler stable if, for any solution \(x(t)\) of (1) with initial condition \(x_{0}\), there exist two positive constants κ and ε such that

Remark 2.1

Just as the exponential function is broadly used in integer-order neural networks, the Mittag-Leffler function is widely used in fractional-order neural networks. From Definition 2.2, Mittag-Leffler stability possesses the power-law property \((t-t_{0})^{-q}\), which is entirely different from exponential stability.
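The power-law decay can be illustrated numerically. The sketch below assumes the particular choice \(q=1/2\), \(t_{0}=0\), and unit decay rate, for which the closed form \(E_{1/2}(-\sqrt{t})=\exp(t)\operatorname{erfc}(\sqrt{t})\) is available; the function names are hypothetical. It compares the Mittag-Leffler decay with its leading asymptotic term \(t^{-q}/\Gamma(1-q)\) and with \(\exp(-t)\).

```python
import math

def ml_half(t):
    """E_{1/2}(-sqrt(t)) via the closed form exp(t) * erfc(sqrt(t)), for t >= 0."""
    return math.exp(t) * math.erfc(math.sqrt(t))

def power_law_tail(t, q=0.5):
    """Leading asymptotic term t**(-q) / Gamma(1 - q) of E_q(-t**q) for large t."""
    return t ** (-q) / math.gamma(1.0 - q)

for t in (1.0, 10.0, 100.0):
    print(f"t={t:6.1f}  E_q(-t^q)={ml_half(t):.6f}  "
          f"t^-q/Gamma(1-q)={power_law_tail(t):.6f}  exp(-t)={math.exp(-t):.3e}")
```

For large t the first two columns approach each other, while the exponential is smaller by many orders of magnitude, which is the sense in which Mittag-Leffler decay is algebraic rather than exponential.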

Lemma 2.1

Let \(q>0\), let \(\mathcal{X}\) and \(\mathcal{G}\) be non-negative constants, and suppose \(\mathcal{Y}(t)\) is non-negative and locally integrable on \([t_{0},\bar{t} )\) with

or

Then

or

The proof of Lemma 2.1 is almost identical to that of Theorem 1 and Corollary 2 in [30] and is omitted here for brevity.
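For orientation, the generalized Gronwall inequality of [30] can be specialized to constant coefficients as sketched below. This is a reading of Corollary 2 in [30] with the lower limit shifted to \(t_{0}\) and written in the symbols of Lemma 2.1; the exact normalization used in the lemma is an assumption here.

```latex
% Assumed form (constants X, G >= 0, order q > 0), for t in [t_0, \bar{t}):
\mathcal{Y}(t)\leq\mathcal{X}+\mathcal{G}\int_{t_{0}}^{t}(t-s)^{q-1}\mathcal{Y}(s)\,\mathrm{d}s
\quad\Longrightarrow\quad
\mathcal{Y}(t)\leq\mathcal{X}E_{q}\bigl(\mathcal{G}\Gamma(q)(t-t_{0})^{q}\bigr),
% and, replacing G by G / \Gamma(q),
\mathcal{Y}(t)\leq\mathcal{X}+\frac{\mathcal{G}}{\Gamma(q)}\int_{t_{0}}^{t}(t-s)^{q-1}\mathcal{Y}(s)\,\mathrm{d}s
\quad\Longrightarrow\quad
\mathcal{Y}(t)\leq\mathcal{X}E_{q}\bigl(\mathcal{G}(t-t_{0})^{q}\bigr).
```

The second implication follows from the first by rescaling the constant, which is why the two hypotheses lead to bounds that differ only by the factor \(\Gamma(q)\) inside the Mittag-Leffler function.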

Lemma 2.2

See [34]

Let \(\mathcal{F}(t) \in R^{n}\) be a continuous and differentiable function. Then

for all \(t\geq t_{0}\) and \(0<\alpha<1\).

Lemma 2.3

See [40]

Suppose \(x=(x_{1},x_{2},\ldots ,x_{n})^{T}\) and \(y=(y_{1},y_{2}, \ldots,y_{n})^{T}\) are two states of (1). Then

Lemma 2.4

See [29]

Let \(0< q<1\) and let \(\mathcal{F}(t)\) be a continuous function on \([t_{0},+\infty)\). Suppose there exist two constants \(\mu_{1}>0\) and \(\mu_{2}\geq0\) such that

Then

Remark 2.2

There are distinct differences between fractional-order differential equations and integer-order differential equations. Properties in integer-order differential equations cannot be simply extended to fractional-order differential equations. Lemma 2.1, Lemma 2.2, and Lemma 2.4 provide powerful tools for exploring fractional-order differential equations.

2.4 Notations and assumptions

For convenience, the following notations are used throughout the paper:

Throughout this paper, the parameters and activation functions of (1) are supposed to satisfy the following assumptions:

- (A1) for functions \(\omega_{i}(\cdot)\) and \(\alpha_{i}(\cdot)\), there exist positive constants \(\underline{\omega}_{i}\), \(\overline{\omega}_{i}\), \(\underline{\alpha}_{i}\), and \(\overline{\alpha}_{i}\) and Lipschitz constants \(\widetilde{\omega}_{i}\) such that

$$ \underline{\omega}_{i}\leq\omega_{i}(x_{i})\leq \overline{\omega}_{i}, \qquad\big|\omega_{i}(x_{i})- \omega_{i}(y_{i})\big|\leq\widetilde{\omega}_{i}|x_{i}-y_{i}|, \qquad \underline{\alpha}_{i}\leq\frac{\alpha_{i}(x_{i}) -\alpha_{i}(y_{i})}{x_{i}-y_{i}}\leq\overline{ \alpha}_{i}, $$

and \(\alpha_{i}(0)=0\), for any \(x_{i},y_{i} \in \mathcal{R}\), \(x_{i}\neq y_{i}\), \(i=1,2,\ldots,n\);

- (A2) for activation functions \(f_{i}(\cdot)\) and \(g_{i}(\cdot)\), there exist Lipschitz constants \(\tilde{c}_{i}\) and \(\hat{c}_{i}\) such that

$$ \big|f_{i}(x_{i})-f_{i}(y_{i})\big|\leq \tilde{c}_{i}|x_{i}-y_{i}|, \qquad \big|g_{i}(x_{i})-g_{i}(y_{i})\big|\leq \hat{c}_{i}|x_{i}-y_{i}|, $$

while \(f_{i}(0)=g_{i}(0)=0\), for any \(x_{i},y_{i} \in\mathcal{R}\), \(i=1,2,\ldots,n\);

- (A3) there exists a positive constant \(\eta>0\) such that

$$ \eta_{k+1}-\eta_{k}\leq\eta, \quad\mbox{for } k \in \mathcal{N}; $$

- (A4) \(\frac{\eta^{q}(\tilde{A}+A_{2})}{\Gamma(q+1)}<1\);

- (A5) \(\theta_{2}(\theta_{1}+A_{2})<1\), \(\frac{\theta_{2}(\theta_{1}+A_{2})E_{q}(\theta_{1} \eta^{q})}{1-A_{2}\theta_{2}E_{q}(\theta_{1}\eta^{q})}<1\);

- (A6) \(\theta_{2}(A_{1}\tau+A_{2})<1\).
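The Lipschitz and sector bounds in (A1) and (A2) can be sanity-checked numerically for a candidate function before any stability condition is evaluated. The following is a minimal sketch, assuming a uniform sampling grid; `lipschitz_estimate` is a hypothetical helper, and `math.tanh` and the linear map \(x\mapsto4x\) are sample functions chosen for illustration rather than taken from the model. The estimate is a lower bound on the true Lipschitz constant, so it can refute a claimed constant but cannot prove one.

```python
import math

def lipschitz_estimate(func, lo=-5.0, hi=5.0, samples=2001):
    """Largest difference quotient of func between neighboring grid points.

    On a fixed grid the quotient over any pair of points is a weighted average
    of neighboring quotients, so scanning adjacent points is sufficient.
    """
    xs = [lo + (hi - lo) * i / (samples - 1) for i in range(samples)]
    best = 0.0
    for a, b in zip(xs, xs[1:]):
        best = max(best, abs(func(b) - func(a)) / (b - a))
    return best

# Candidate activation: tanh vanishes at 0 and is Lipschitz with constant 1.
print(lipschitz_estimate(math.tanh))
# Candidate behaved function: a linear map with slope 4 has equal sector bounds.
print(lipschitz_estimate(lambda x: 4.0 * x))
```

If the printed estimate exceeds the constant one intends to use for \(\tilde{c}_{i}\), \(\hat{c}_{i}\), or \(\overline{\alpha}_{i}\), the corresponding assumption fails on the sampled interval.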

Fix \(k \in\mathcal{N}\) and take any \((t_{0},x^{0}) \in[\eta_{k},\eta_{k+1}]{\times\mathcal{R}^{n}}\). Without loss of generality, assume \(\eta_{k}\leq t_{0} <\xi_{k}\leq\eta_{k+1}\). Construct a sequence \(\{z_{i}^{m}(t)\}\), \(i=1,2,\ldots,n\), such that

for \(m \in\mathcal{N}\) and \(z_{i}^{0}(t)=x_{i}^{0}\), where \(F_{i}^{m}(s)\) and \(C_{i}\) are defined in (2). We obtain

Define a norm \(\|z(t)\|_{M}=\max_{t_{0}\leq t \leq\xi_{k}}\|z(t)\|\). Then

where B and \(\theta_{2}\) are defined in (2).

Hence

Under (A4),

for \(m \in\mathcal{N}\).

In combination with the continuity of the functions \(\alpha(\cdot)\), \(f(\cdot)\), and \(g(\cdot)\), we conclude that

for \(m\in\mathcal{N}\) with initial condition \(x^{0}\), where \(M_{i}\) is defined in (2), \(i=1,2,\ldots, n\).

3 Main results

3.1 Existence and uniqueness of solution

From the viewpoint of theory and application, the existence and uniqueness of solution for differential equations is a precondition, so we begin with the theorem for the existence and uniqueness of solution for (1).

Theorem 3.1

Let (A1)-(A5) hold. Then, for each pair \((t_{0},x^{0}) \in\mathcal{R}^{+} \times\mathcal{R}^{n}\), (1) has a unique solution \(x(t)=x(t,t_{0},x^{0})\), \(t\geq t_{0}\), with the initial condition \(x(t_{0})=x^{0}\).

Proof

First, we prove the existence of solutions.

Take \(k \in\mathcal{N}\). Without loss of generality, assume \(\eta_{k}\leq t_{0} <\xi_{k} \leq\eta_{k+1}\). We first prove that (1) has a unique solution \(x(t,t_{0},x^{0})\) for every \((t_{0},x^{0}) \in[\eta_{k},\eta_{k+1}]\times\mathcal{R}^{n}\).

Denote \(z_{i}(t)=x_{i}(t,t_{0},x^{0})\) for simplicity and construct the following equivalent integral equation:

From (3), we have

Combining this with

and

we get

where ω̃, \(M_{i}\), M, \(A_{1}\), \(A_{2}\), \(\theta_{1}\), and \(\theta_{2}\) are defined in (2).

From the definition of the norm \(\|z(t)\|_{M}=\max_{t_{0}\leq t\leq\xi_{k}}(\|z(t)\|)\), we have

where \(\mathcal{H}=\theta_{2}(\lambda_{1}\|x^{0}\|+ \lambda_{2}\sum_{i=1}^{n}|u_{i}|+\sum_{i=1}^{n}|I_{i}|)\), \(\lambda_{1}=\max_{1\leq i\leq n} [\overline{\omega}_{i} (\overline{\alpha}_{i} +\sum_{j=1}^{n}(|b_{ji}|\tilde{c}_{i}+|c_{ji}|\hat{c}_{i} +|h_{ji}|\tilde{c}_{i}+|l_{ji}|\tilde{c}_{i}) ) ]\), and \(\lambda_{2}=\max_{1\leq i\leq n} (\sum_{j=1}^{n} \overline{\omega}_{i}(|d_{ji}|+|p_{ji}|+|r_{ji}|) )\). Hence, there exists a unique solution \(z(t)=x(t,t_{0},x^{0})\) for (1) on \([t_{0},\xi_{k}]\). Assumptions (A1) and (A2) imply that \(z(t)=x(t,t_{0},x^{0})\) can be continued to \(\eta_{k+1}\). In a similar way, \(z(t)=x(t,t_{0},x^{0})\) can be continued from \(\eta_{k+1}\) to \(\xi_{k+1}\) and then to \(\eta_{k+2}\). Hence, by mathematical induction, we conclude that (1) has a solution \(x(t)=x(t,t_{0},x^{0})\), \(t\geq t_{0}\).

Now, we prove the uniqueness of the solution.

Let

for \(l=1,2\) and \(i=1,2,\ldots,n\).

Denote by \(x^{1}(t)\) and \(x^{2}(t)\) two different solutions for (1) with initial conditions \((t_{0},x^{1})\) and \((t_{0},x^{2})\), respectively, where \(t_{0} \in[\eta_{k},\eta_{k+1}]\). To prove the uniqueness, it is sufficient to check that \(x^{1}\neq x^{2}\) implies \(x^{1}(t)\neq x^{2}(t)\) for every \(t \in[\eta_{k},\eta_{k+1}]\). Then we get

Applying Lemma 2.1, we get

Particularly,

and

Substituting (5) into (4), we obtain

Suppose that there exists some \(\bar{t} \in[\eta_{k}, \eta_{k+1}]\) such that \(x^{1}(\bar{t})=x^{2}(\bar{t})\). Then

Combining this with (5), (6), and (7), we get

Applying (A5), it follows that

This is a contradiction, which establishes the uniqueness of the solution of (1). Hence, (1) has a unique solution \(x(t)\) for every initial condition \((t_{0},x^{0}) \in\mathcal{R}^{+} \times\mathcal{R}^{n}\). This completes the proof. □

3.2 Estimation of deviating argument

In this subsection, we give the estimation of the norm of the deviating state.

From Schauder’s fixed point theorem and assumptions (A1) and (A2), the existence of the equilibrium point of (1) can be guaranteed. Denote the equilibrium point of (1) by \(x^{*}=(x_{1}^{*}, x_{2}^{*},\ldots, x_{n}^{*})^{T}\). Substitution of \(v(t)=x(t)-x^{*}\) into (1) leads to

where \(\widetilde{\alpha}_{i}(v_{i}(t))=\alpha_{i}(v_{i}(t) +x_{i}^{*})-\alpha_{i}(x_{i}^{*})\), \(F_{j}(v_{j}(t)) =f_{j}(v_{j}(t)+x_{j}^{*})-f_{j}(x_{j}^{*})\) and \(G_{j}(v_{j}(t))= g_{j}(v_{j}(t)+x_{j}^{*})-g_{j}(x_{j}^{*})\) for \(i,j=1,2,\ldots,n\).

Theorem 3.2

Let (A1)-(A6) hold and let \(v(t)=(v_{1}(t),v_{2}(t),\ldots, v_{n}(t))^{T}\) be a solution of (8). Then

for any \(t \in\mathcal{R}^{+}\), where ϱ is defined in (2).

Proof

For any \(t \in\mathcal{R}^{+}\), there exists a unique \(k \in\mathcal{N}\) such that \(t \in[\eta_{k}, \eta_{k+1})\). It follows that

for \(t \in[\xi_{k},\eta_{k+1})\), and

for \(t \in[\eta_{k}, \xi_{k})\).

Without loss of generality, we only consider the case of \(t \in[\xi_{k},\eta_{k+1})\). The other case can be considered in a similar manner.

We have

where \(A_{1}\), \(A_{2}\), and \(\theta_{2}\) are defined in (2).

Applying Lemma 2.1, we have

In a similar way, by exchanging the locations of \(v_{i}(t)\) and \(v_{i}(\xi_{k})\) in (9), we get

Hence

where τ and ϱ are defined in (2).

Therefore, Theorem 3.2 is valid for any \(t \in\mathcal{R}^{+}\). □

3.3 Global Mittag-Leffler stability

Theorem 3.3

Let (A1)-(A6) hold. Then (1) is globally Mittag-Leffler stable if the following inequality is satisfied:

where \(A_{3}\), \(A_{4}\), and ϱ are defined in (2).

Proof

Define a Lyapunov function by

From Lemma 2.2 and Lemma 2.3, we derive

Applying Theorem 3.2, it follows that

From the definition of \(W(v(t))\), it is clear that

and

Hence

where \(\Delta=(A_{3}-A_{4}\varrho^{2})>0\).

Based on Lemma 2.4, it follows that

Therefore,

where \(\mathbf{M}=n\).

Hence, (1) is globally Mittag-Leffler stable. This completes the proof. □

Remark 3.1

Fractional-order neural networks have plenty of favorable characteristics, such as infinite memory and hereditary features, in contrast with integer-order ones, whereas the approaches developed for integer-order neural networks cannot be applied straightforwardly to fractional-order ones.

Remark 3.2

In recent years, fractional-order neural networks have been under intensive investigation. A lot of results are reported about Mittag-Leffler stability and asymptotical ω-periodicity on fractional-order neural networks with or without deviating argument. Very few of them are about the stability of fractional-order Cohen-Grossberg neural networks with deviating argument and the existing results cannot be applied straightforwardly to fractional-order Cohen-Grossberg neural networks with deviating argument. From this point of view, the result derived in this paper can be viewed as an extension to the existing literature.

4 Illustrative examples

In this section, one example is given to demonstrate the validity of the results.

Example 1

Consider the following fractional-order neural network in the presence of deviating argument:

where \(\eta_{k}=\frac{k}{20}\), \(\xi_{k}=\frac{2k+1}{40}\), and \(\gamma(t)=\xi_{k}\) for \(t \in[\eta_{k},\eta_{k+1})\), \(k \in\mathcal{N}\).

It can be seen that \(\underline{\omega}_{1}=\frac{5}{3}\), \(\overline{\omega}_{1}= \frac{7}{3}\),\(\underline{\omega}_{2} =\frac{2}{3}\), \(\overline{\omega}_{2}=\frac{4}{3}\), \(\widetilde{\omega}_{1}=\widetilde{\omega}_{2}=\frac{1}{3}\), \(\underline{\alpha}_{1}=\overline{\alpha}_{1}=4\), \(\underline{\alpha}_{2}=\overline{\alpha}_{2}=3\), \(\tilde{c}_{1}=\tilde{c}_{2}=1\), \(\hat{c}_{1}=\hat{c}_{2}= \frac{1}{3}\), \(\eta=\frac{1}{20}\), \(b_{11}=0.04\), \(b_{12}=0.01\), \(b_{21}=0.02\), \(b_{22}=0.02\), \(c_{11}=0.01\), \(c_{12}=0.02\), \(c_{21}=0.03\), \(c_{22}=0.01\), \(d_{11}=d_{12}=d_{21}=d_{22}=0\), \(p_{11}=p_{12}=p_{21}=p_{22}=0\), \(r_{11}=r_{12}=r_{21}=r_{22}=0\), \(h_{11}=0.01\), \(h_{12}=0.02\), \(h_{21}=0.03\), \(h_{22}=0.02\), and \(l_{11}=0.02\), \(l_{12}=0.02\), \(l_{21}=0.02\), \(l_{22}=0.01\), \(I_{1}=I_{2}=0.01\).

Choose the initial value \(x^{0}\) satisfying \(|x_{1}^{0}|\leq0.5\) and \(|x_{2}^{0}|\leq0.5\). By calculation, we have

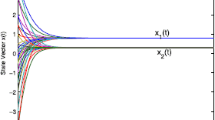

Based on Theorem 3.3, (10) is globally Mittag-Leffler stable. Simulation results from several initial values are depicted in Figures 1 and 2, which agree with the theoretical predictions.

Figure 1 Transient behavior of \(x_{1}(t)\) and \(x_{2}(t)\) in (10).

Figure 2 The phase plot of \(x(t)\) in (10).
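The numerical scheme behind the simulations is not stated in the text. As a rough illustration of how a Caputo system of order \(0<q<1\) can be integrated, the sketch below uses the explicit product-rectangle rule (a fractional forward Euler method); this is an assumed, swapped-in technique and not necessarily the one used for Figures 1 and 2. It is applied to the scalar test problem \(D^{q}x=-x\), \(x(0)=1\), whose exact solution is \(E_{q}(-t^{q})\), and it does not reproduce system (10), whose advanced segments require the state at \(\xi_{k}\) ahead of time. The name `fractional_euler` and the step count are hypothetical.

```python
import math

def fractional_euler(f, x0, q, t_end, steps):
    """Explicit product-rectangle rule for the Caputo problem D^q x = f(t, x), x(0) = x0.

    Uses x_{n+1} = x0 + h**q / Gamma(q+1) * sum_j ((n+1-j)**q - (n-j)**q) * f(t_j, x_j),
    so every step revisits the whole history (the memory of the fractional operator).
    """
    h = t_end / steps
    scale = h ** q / math.gamma(q + 1.0)
    xs = [x0]
    fs = []  # history of right-hand-side values f(t_j, x_j)
    for n in range(steps):
        fs.append(f(n * h, xs[n]))
        memory = sum(((n + 1 - j) ** q - (n - j) ** q) * fs[j] for j in range(n + 1))
        xs.append(x0 + scale * memory)
    return xs

# Scalar test problem D^q x = -x, x(0) = 1, with q = 1/2.
q = 0.5
xs = fractional_euler(lambda t, x: -x, 1.0, q, t_end=1.0, steps=400)
exact = math.exp(1.0) * math.erfc(1.0)  # closed form of E_{1/2}(-1)
print(f"numerical x(1) = {xs[-1]:.5f}, exact = {exact:.5f}")
```

The cost grows quadratically with the number of steps because of the history sum; for \(q=1\) the weights collapse and the scheme reduces to the classical forward Euler method.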

Remark 4.1

Example 1 shows that the derived criteria are applicable to the Mittag-Leffler stability of fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument. As a special case of the obtained criteria, let \(\widetilde{\omega}=0\), that is, \(\omega_{i}(\cdot)\) degenerates into a constant, and \(d_{ij}=0\), \(h_{ij}=0\), \(l_{ij}=0\), \(p_{ij}=0\), and \(r_{ij}=0\), for \(i,j=1,2, \ldots,n\). It is obvious that the criteria are still valid, which is exactly the main theorem in [52]. Hence, the criteria proposed in this paper can be deemed a generalization of the existing literature.

5 Concluding remarks

In this paper, the global Mittag-Leffler stability of fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument is considered. Sufficient conditions are obtained to ensure the existence and uniqueness of the solution. Furthermore, the global Mittag-Leffler stability of fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument is investigated. A numerical example and the corresponding simulations show that global Mittag-Leffler stability can be guaranteed under the derived criteria. The results obtained in this paper supplement the existing literature. Future work may aim at exploring the multistability and multiperiodicity for fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument.

References

Bao, H, Cao, J: Projective synchronization of fractional-order memristor-based neural networks. Neural Netw. 63, 1-9 (2015)

Chen, L, Chai, Y, Wu, R, Ma, T, Zhai, H: Dynamic analysis of a class of fractional-order neural networks with delay. Neurocomputing 111, 190-194 (2013)

Chen, L, Qu, J, Chai, Y, Wu, R, Qi, G: Synchronization of a class of fractional-order chaotic neural networks. Entropy 15, 3265-3276 (2013)

Chen, W, Zheng, W: An improved stabilization method for sampled-data control systems with control packet loss. IEEE Trans. Autom. Control 57(9), 2378-2384 (2012)

Chen, W, Zheng, W: Robust stability analysis for stochastic neural networks with time-varying delay. IEEE Trans. Neural Netw. 21(3), 508-514 (2010)

Di Marco, M, Forti, M, Grazzini, M, Pancioni, L: Convergence of a class of cooperative standard cellular neural network arrays. IEEE Trans. Circuits Syst. I, Regul. Pap. 59(4), 772-783 (2012)

Di Marco, M, Forti, M, Grazzini, M, Pancioni, L: Limit set dichotomy and multistability for a class of cooperative neural networks with delays. IEEE Trans. Neural Netw. Learn. Syst. 23(9), 1473-1485 (2012)

Han, Q: A discrete delay decomposition approach to stability of linear retarded and neutral systems. Automatica 45(2), 517-524 (2009)

Han, Q: Improved stability criteria and controller design for linear neutral systems. Automatica 45(8), 1948-1952 (2009)

Huang, H, Huang, T, Chen, X: Global exponential estimates of delayed stochastic neural networks with Markovian switching. Neural Netw. 36, 136-145 (2012)

Huang, H, Huang, T, Chen, X: A mode-dependent approach to state estimation of recurrent neural networks with Markovian jumping parameters and mixed delays. Neural Netw. 46, 50-61 (2013)

Song, Q, Yan, H, Zhao, Z, Liu, Y: Global exponential stability of complex-valued neural networks with both time-varying delays and impulsive effects. Neural Netw. 79, 108-116 (2016)

Song, Q, Yan, H, Zhao, Z, Liu, Y: Global exponential stability of impulsive complex-valued neural networks with both asynchronous time-varying and continuously distributed delays. Neural Netw. 81, 1-10 (2016)

Wang, L, Lu, W, Chen, T: Multistability and new attraction basins of almost-periodic solutions of delayed neural networks. IEEE Trans. Neural Netw. 20(10), 1581-1593 (2009)

Wang, L, Lu, W, Chen, T: Coexistence and local stability of multiple equilibria in neural networks with piecewise linear nondecreasing activation functions. Neural Netw. 23(2), 189-200 (2010)

Wang, Z, Shen, B, Shu, H, Wei, G: Quantized \(H_{\infty}\) control for nonlinear stochastic time-delay systems with missing measurements. IEEE Trans. Autom. Control 57(6), 1431-1444 (2012)

Wang, Z, Zhang, H, Jiang, B: LMI-based approach for global asymptotic stability analysis of recurrent neural networks with various delays and structures. IEEE Trans. Neural Netw. 22(7), 1032-1045 (2011)

Zhang, H, Liu, Z, Huang, G, Wang, Z: Novel weighting-delay-based stability criteria for recurrent neural networks with time-varying delay. IEEE Trans. Neural Netw. 21(1), 91-106 (2010)

Bao, G, Wen, S, Zeng, Z: Robust stability analysis of interval fuzzy Cohen-Grossberg neural networks with piecewise constant argument of generalized type. Neural Netw. 33, 32-41 (2012)

Liu, P, Zeng, Z, Wang, J: Multistability analysis of a general class of recurrent neural networks with non-monotonic activation functions and time-varying delays. Neural Netw. 79, 117-127 (2016)

Mathiyalagan, K, Park, J, Sakthivel, R, Anthoni, S: Delay fractioning approach to robust exponential stability of fuzzy Cohen-Grossberg neural networks. Appl. Math. Comput. 230, 451-463 (2014)

Muralisankar, S, Gopalakrishnan, N: Robust stability criteria for Takagi-Sugeno fuzzy Cohen-Grossberg neural networks of neutral type. Neurocomputing 144, 516-525 (2014)

Li, R, Cao, J, Alsaedi, A, Ahmad, B, Alsaadi, F, Hayat, T: Nonlinear measure approach for the robust exponential stability analysis of interval inertial Cohen-Grossberg neural networks. Complexity 21(S2), 459-469 (2016)

Zhang, Z, Cao, J, Zhou, D: Novel LMI-based condition on global asymptotic stability for a class of Cohen-Grossberg BAM networks with extended activation functions. IEEE Trans. Neural Netw. Learn. Syst. 25(6), 1161-1172 (2014)

Chen, J, Zeng, Z, Jiang, P: Global Mittag-Leffler stability and synchronization of memristor-based fractional-order neural networks. Neural Netw. 51, 1-8 (2014)

Song, C, Cao, J: Dynamics in fractional-order neural networks. Neurocomputing 142, 494-498 (2014)

Wang, F, Yang, Y, Hu, M: Asymptotic stability of delayed fractional-order neural networks with impulsive effects. Neurocomputing 154, 239-244 (2015)

Wang, H, Yu, Y, Wen, G: Stability analysis of fractional-order Hopfield neural networks with time delays. Neural Netw. 55, 98-109 (2014)

Wu, A, Zeng, Z: Boundedness, Mittag-Leffler stability and asymptotical ω-periodicity of fractional-order fuzzy neural networks. Neural Netw. 74, 73-84 (2016)

Ye, H, Gao, J, Ding, Y: A generalized Gronwall inequality and its application to a fractional differential equation. J. Math. Anal. Appl. 328(2), 1075-1081 (2007)

Yu, J, Hu, C, Jiang, H: α-stability and α-synchronization for fractional-order neural networks. Neural Netw. 35, 82-87 (2012)

Yu, J, Hu, C, Jiang, H, Fan, X: Projective synchronization for fractional neural networks. Neural Netw. 49, 87-95 (2014)

Zhang, S, Yu, Y, Wang, H: Mittag-Leffler stability of fractional-order Hopfield neural networks. Nonlinear Anal. Hybrid Syst. 16, 104-121 (2015)

Aguila-Camacho, N, Duarte-Mermoud, M, Gallegos, J: Lyapunov functions for fractional order systems. Commun. Nonlinear Sci. Numer. Simul. 19(9), 2951-2957 (2014)

Ding, X, Cao, J, Zhao, X, Alsaadi, F: Finite-time stability of fractional-order complex-valued neural networks with time delays. Neural Process. Lett. (2017). doi:10.1007/s11063-017-9604-8

Liu, Z, Zhang, H, Wang, Z: Novel stability criterions of a new fuzzy cellular neural networks with time-varying delays. Neurocomputing 72(4-6), 1056-1064 (2009)

Sheng, L, Gao, M, Yang, H: Delay-dependent robust stability for uncertain stochastic fuzzy Hopfield neural networks with time-varying delays. Fuzzy Sets Syst. 160(24), 3503-3517 (2009)

Xing, X, Pan, Y, Lu, Q, Cui, H: New mean square exponential stability condition of stochastic fuzzy neural networks. Neurocomputing 156, 129-133 (2015)

Zhu, Q, Li, X: Exponential and almost sure exponential stability of stochastic fuzzy delayed Cohen-Grossberg neural networks. Fuzzy Sets Syst. 203, 74-94 (2012)

Yang, T, Yang, L: The global stability of fuzzy cellular neural network. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 43(10), 880-883 (1996)

Akhmet, M, Aruğaslan, D, Yılmaz, E: Stability analysis of recurrent neural networks with piecewise constant argument of generalized type. Neural Netw. 23, 805-811 (2010)

Akhmet, M: On the integral manifolds of the differential equations with piecewise constant argument of generalized type. In: Proceedings of the Conference on Differential & Difference Equations and Applications, pp. 11-20 (2006)

Akhmet, M: Integral manifolds of the differential equations with piecewise constant argument of generalized type. Nonlinear Anal., Theory Methods Appl. 66(2), 367-383 (2007)

Akhmet, M: On the reduction principle for differential equations with piecewise constant argument of generalized type. J. Math. Anal. Appl. 336(1), 646-663 (2007)

Akhmet, M: Stability of differential equations with piecewise constant argument of generalized type. Nonlinear Anal., Theory Methods Appl. 68(4), 794-803 (2008)

Akhmet, M: Almost periodic solutions of differential equations with piecewise constant argument of generalized type. Nonlinear Anal. Hybrid Syst. 2(2), 456-467 (2008)

Akhmet, M, Aruğaslan, D, Yılmaz, E: Stability in cellular neural networks with piecewise constant argument. J. Comput. Appl. Math. 233(9), 2365-2373 (2010)

Chiu, K, Pinto, M, Jeng, J: Existence and global convergence of periodic solutions in recurrent neural network models with a general piecewise alternately advanced and retarded argument. Acta Appl. Math. 133(1), 133-152 (2014)

Wu, A, Zeng, Z: Output convergence of fuzzy neurodynamic system with piecewise constant argument of generalized type. IEEE Trans. Syst. Man Cybern. Syst. 46(12), 1689-1702 (2016)

Akhmet, M, Yılmaz, E: Impulsive Hopfield-type neural network system with piecewise constant argument. Nonlinear Anal., Real World Appl. 11(4), 2584-2593 (2010)

Wan, L, Wu, A: Stabilization control of generalized type neural networks with piecewise constant argument. J. Nonlinear Sci. Appl. 9(6), 3580-3599 (2016)

Wu, A, Liu, L, Huang, T, Zeng, Z: Mittag-Leffler stability of fractional-order neural networks in the presence of generalized piecewise constant arguments. Neural Netw. 85, 118-127 (2017)

Acknowledgements

The work is supported by the Natural Science Foundation of China under Grants 61640309 and 61773152, the Natural Science Fund of Hubei Province of China under Grant 2016CFC735, the Research Project of Hubei Provincial Department of Education of China under Grant T201710, and the Engineering Laboratory Foundation of Hubei Province of China under Grant 201611.

Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors claim that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wan, L., Wu, A. Mittag-Leffler stability analysis of fractional-order fuzzy Cohen-Grossberg neural networks with deviating argument. Adv Differ Equ 2017, 308 (2017). https://doi.org/10.1186/s13662-017-1368-y

DOI: https://doi.org/10.1186/s13662-017-1368-y