Abstract

Using the theory of the fixed point index in a Banach space and applying a new method to deal with the impulsive term, we prove that there exists an interval of the positive parameter λ in which the second order impulsive singular equation has two infinite families of positive solutions. Moreover, we also establish a new expression of Green’s function for the above equation. Since \(\lambda>0\) and \(c_{k}\neq0\) (\(k=1,2,\ldots,n\)), our main results improve many previous results. This is probably the first time that the existence of two infinite families of positive solutions for second order impulsive singular parametric equations has been studied.

1 Introduction

In this paper, we consider the existence of two infinite families of positive solutions for the second order impulsive singular parametric differential equation

where \(\lambda>0\) is a positive parameter, \(J=[0,1]\), \(t_{k} \in\mathrm{R}\), \(k =1,2,\ldots,n\), \(n \in\mathrm{N}\) satisfy \(0< t_{1}< t_{2}<\cdots <t_{k}<\cdots<t_{n}<1\), \(a, b>0\), \(\{c_{k}\}\) is a real sequence with \(c_{k}>-1\), \(k=1,2,\ldots,n\), \(x(t_{k}^{+})\) (\(k=1,2,\ldots,n\)) represents the right-hand limit of \(x(t)\) at \(t_{k}\), and \(\omega\in L^{p}[0,1]\) for some \(p\geq1\) has infinitely many singularities in \([0,\frac{1}{2})\).

In addition, ω, f, h and \(c_{k}\) satisfy the following conditions:

- (H1): \(\omega(t)\in L^{p}[0,1]\) for some \(p\in[1,+\infty)\), and there exists \(\xi>0\) such that \(\omega(t)\geq\xi\) a.e. on J;

- (H2): There exists a sequence \(\{t_{i}'\}_{i=1}^{\infty} \) such that \(t_{1}'<\frac{1}{2}\), \(t_{i}' \downarrow t^{*}\geq0 \) and \(\lim_{t\rightarrow t_{i}'} \omega(t) =+\infty\) for all \(i=1, 2,\ldots \) ;

- (H3): \(f(t,u): J\times[0,+\infty)\rightarrow[0,+\infty)\) is continuous, \(\{c_{k}\}\) is a real sequence with \(c_{k}>-1\), \(k=1, 2, \ldots, n\), \(c(t):=\Pi_{0< t_{k}< t}(1+c_{k})\);

- (H4): \(h\in C[0,1]\) is nonnegative with \(\mu\in[0,1)\), where

$$\mu= \int_{0}^{1}A(t)h(t)c(t)\,dt $$

and

$$ A(t)=\frac{(a+b-at)c(1)+a+b}{a(a+2b)c(1)}. \tag{1.2}$$

Remark 1.1

Throughout this paper, we always assume that a product \(c(t):=\Pi_{0< t_{k}< t}(1+c_{k})\) equals unity if the number of factors is equal to zero, and let

Remark 1.2

Combining (H2), Remark 1.1 and the definition of \(c(t)\), we know that \(c(t)\) is a step function bounded on J, and

Remark 1.3

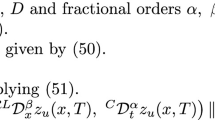

To make clear what \(c(t)\) is, we give a special example. Letting \(n=3\), \(t_{1}=\frac{1}{4}\), \(t_{2}=\frac{1}{2}\), \(t_{3}=\frac {3}{4}\), \(c_{1}=-\frac{1}{2}\), \(c_{2}=-\frac{1}{3}\), \(c_{3}=-\frac {1}{4}\), we obtain the graph of \(c(t)\). For details, see Figure 1.
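As a hedged illustration of this example, the step function can be evaluated directly from the definition \(c(t):=\Pi_{0< t_{k}< t}(1+c_{k})\) given in (H3). The helper name `c`, the sample points and the use of exact fractions are choices made here for the sketch and are not part of the original text.

```python
from fractions import Fraction as F

# Impulse points t_k and coefficients c_k from the example in Remark 1.3.
T_K = [F(1, 4), F(1, 2), F(3, 4)]
C_K = [F(-1, 2), F(-1, 3), F(-1, 4)]


def c(t):
    """Return c(t) = product of (1 + c_k) over all k with 0 < t_k < t.

    An empty product equals 1, as stated in Remark 1.1.
    """
    value = F(1)
    for t_k, c_k in zip(T_K, C_K):
        if 0 < t_k < t:
            value *= 1 + c_k
    return value


# One sample point from each of the four steps of c on [0, 1].
samples = [F(1, 8), F(3, 8), F(5, 8), F(7, 8)]
values = [c(t) for t in samples]
print(values)
```

With these data the four steps take the values 1, 1/2, 1/3 and 1/4. The strict inequality \(t_{k}<t\) in the product means that the sketch returns the left-hand value at each impulse point \(t_{k}\).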

Such problems were first studied by Zhang and Feng [1]. Using a transformation technique to deal with the impulsive term of second order impulsive differential equations, the authors obtained existence results for positive solutions by means of fixed point theorems in a cone. However, they only gave sufficient conditions for the existence of finitely many positive solutions. In fact, almost no paper considers the existence of infinitely many positive solutions for second order singular impulsive parametric equations; for details, see [2–7].

For the case \(\lambda=1\), \(a=1\), \(b=0\), \(h(t)\equiv0\) on \(t\in J\) and \(c_{k}=0\) (\(k=1,2,\ldots,n\)), problem (1.1) reduces to the problem studied by Kaufmann and Kosmatov in [8]. By using Krasnosel’skiĭ’s fixed point theorem and Hölder’s inequality, the authors showed the existence of countably many positive solutions. The other related results can be found in [9–14]. However, there are almost no papers considering a second order impulsive parametric equation with infinitely many singularities. To identify a few, we refer the reader to [8–14] and the references therein.

The main reasons are that \(\lambda\neq0\) and \(c_{k}\neq0\) (\(k=1,2,\ldots ,n\)) in problem (1.1). If \(\lambda\neq0\), then it is difficult to determine the values of λ for which there exist infinitely many positive solutions. On the other hand, if \(c_{k}\neq0\) (\(k=1,2,\ldots,n\)), then singular points and impulsive points occur in the same problem, which makes it difficult to define the interval \([\tau _{i},1-\tau_{i}]\), where \(t'_{i+1}\leq\tau_{i}\leq t'_{i}\). The goal of this paper is to develop new methods that overcome these difficulties and to give new sufficient conditions guaranteeing that problem (1.1) has two infinite families of positive solutions.

2 Preliminaries

In this section, we collect some definitions and lemmas for the convenience of later use and reference.

Definition 2.1

A function \(x(t)\) is said to be a solution of problem (1.1) on J if:

- (i) \(x(t)\) is absolutely continuous on each interval \((0,t_{1}]\) and \((t_{k},t_{k+1}]\), \(k=1,2,\ldots,n\);

- (ii) for any \(k=1,2,\ldots,n\), \(x(t_{k}^{+})\), \(x(t_{k}^{-})\) exist and \(x(t_{k}^{-})=x(t_{k})\);

- (iii) \(x(t)\) satisfies (1.1).

We shall reduce problem (1.1) to a system without impulse. To this goal, firstly by means of the transformation

we convert problem (1.1) into

It follows from (1.1), (2.1) and (2.2) that we can obtain the following lemma.

Lemma 2.1

Assume that (H1)-(H4) hold. Then

- (i) if \(y(t)\) is a solution of problem (2.2) on J, then \(x(t)=c(t)y(t)\) is a solution of problem (1.1) on J;

- (ii) if \(x(t)\) is a solution of problem (1.1) on J, then \(y(t)=c^{-1}(t)x(t)\) is a solution of problem (2.2) on J, where \(c^{-1}(t)\) is defined in Remark 1.1.
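The effect of the transformation \(x(t)=c(t)y(t)\) can be sketched numerically. By the definition of the product, \(c(t_{k}^{+})=(1+c_{k})c(t_{k})\), so a continuous \(y\) produces an \(x\) whose right-hand limit at \(t_{k}\) is \((1+c_{k})x(t_{k})\). The impulse data and the function `y` below are hypothetical and chosen only for illustration, and the `right_limit` flag is a device of this sketch.

```python
from fractions import Fraction as F

# Hypothetical data: two impulse points with c_k > -1.
T_K = [F(1, 3), F(2, 3)]
C_K = [F(1, 2), F(-1, 4)]


def c(t, right_limit=False):
    """c(t) = product of (1 + c_k) over 0 < t_k < t.

    With right_limit=True the factor at t_k == t is included as well,
    which gives the right-hand limit c(t^+).
    """
    value = F(1)
    for t_k, c_k in zip(T_K, C_K):
        if t_k < t or (right_limit and t_k == t):
            value *= 1 + c_k
    return value


def y(t):
    # Any continuous positive function works; this one is illustrative.
    return 1 + t * (1 - t)


def x(t, right_limit=False):
    # Transformation of Lemma 2.1(i): x = c * y.
    return c(t, right_limit) * y(t)


# Ratio x(t_k^+) / x(t_k); y is continuous, so only c contributes a jump.
jump_ratios = [x(t_k, right_limit=True) / x(t_k) for t_k in T_K]
print(jump_ratios)
```

Dividing by \(c(t)\) recovers \(y\) exactly, which is the direction used in part (ii) of the lemma. This works because \(c_{k}>-1\) keeps every factor \(1+c_{k}\) positive.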

Lemma 2.2

If (H1)-(H4) hold, then problem (2.2) has a solution y, and y can be expressed in the form

where

Proof

First suppose that y is a solution of problem (2.2). It is easy to see by integration of problem (2.2) that

Letting \(t=1\) in (2.6), (2.7), we find

Combining the boundary condition \(ay(0)-by'(0)=\int _{0}^{1}h(s)c(s)y(s)\,ds\), \(ac(1)y(1)+bc(1) y'(1)=\int_{0}^{1}h(s)c(s)y(s)\,ds\) and (2.8), we obtain

Substituting (2.9), (2.10) into (2.7) and letting

we have

Therefore, we have

Let

then

The proof of the lemma is complete. □

Lemma 2.3

Let \(\theta\in(0,\frac{1}{2})\) and \(\theta_{i}\) be defined in (3.4). Noticing that \(a, b>0\), it follows from (2.4) and (2.5) that

where

and

Proof

It is obvious that (2.15) and (2.16) hold by the definition of \(G(t,s)\) and \(H(t,s)\).

Next, we show that (2.17) holds for \(t\in[\theta_{i},1-\theta_{i}]\), \(s\in J\). In fact, if \(s\le t\), it follows from (2.5) that

Similarly, we can prove that \(G(t,s)\ge\frac{b(b+a\theta_{i})}{d}\), \(\forall 0\le t\le s\le1\).

Therefore,

And then, by (2.4), for \(t\in[\theta_{i},1-\theta_{i}]\), \(s\in J\), we have

So,

The proof is complete. □

Lemma 2.4 (see [15])

Let E be a real Banach space and K be a cone in E. For \(r>0\), define \(K_{r}=\{x\in K: \|x\|< r \}\). Assume that \(T: \bar{K}_{r}\rightarrow K\) is completely continuous such that \(Tx\neq x\) for \(x\in\partial K_{r}=\{x\in K:\|x\|=r\}\).

- (i) If \(\|Tx\|\geq\|x\|\) for \(x\in\partial K_{r}\), then \(i(T, K_{r}, K)=0\).

- (ii) If \(\|Tx\|\leq\|x\|\) for \(x\in\partial K_{r}\), then \(i(T, K_{r}, K)=1\).

Lemma 2.5 (Hölder)

Let \(e\in L^{p}[a,b]\) with \(p>1\), \(h\in L^{q}[a,b]\) with \(q>1\), and \(\frac{1}{p}+\frac{1}{q}=1\). Then \(eh\in L^{1}[a,b]\) and

$$\|eh\|_{1}\leq\|e\|_{p}\|h\|_{q}. $$

Let \(e\in L^{1}[a,b]\), \(h\in L^{\infty}[a,b]\). Then \(eh\in L^{1}[a,b]\) and

$$\|eh\|_{1}\leq\|e\|_{1}\|h\|_{\infty}. $$
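Hölder's inequality bounds \(\|eh\|_{1}\) by \(\|e\|_{p}\|h\|_{q}\) even when \(e\) is unbounded, which is the situation of a singular weight. A minimal numerical sketch follows. The functions \(e(t)=t^{-1/4}\) and \(h(t)=t\) on \([0,1]\) with \(p=q=2\) are illustrative choices made here and do not come from the paper, and `midpoint_integral` is a hypothetical helper.

```python
import math

# Illustrative choice: e(t) = t**(-1/4), h(t) = t on [0, 1], p = q = 2.
# All three integrals have closed forms.
norm_e_2 = math.sqrt(2.0)        # (integral of t**(-1/2))**(1/2) = sqrt(2)
norm_h_2 = math.sqrt(1.0 / 3.0)  # (integral of t**2)**(1/2) = sqrt(1/3)
norm_eh_1 = 4.0 / 7.0            # integral of t**(3/4) = 4/7


def midpoint_integral(func, n=200_000):
    """Midpoint rule on [0, 1]; it never evaluates func at the endpoint 0."""
    step = 1.0 / n
    return step * sum(func((k + 0.5) * step) for k in range(n))


# Cross-check the closed form of ||e h||_1 numerically.
numeric_eh_1 = midpoint_integral(lambda t: t ** 0.75)
holder_bound = norm_e_2 * norm_h_2

print(norm_eh_1, numeric_eh_1, holder_bound)
```

Here \(e\) is singular at \(t=0\) but still belongs to \(L^{2}[0,1]\), so the product \(eh\) is integrable and its \(L^{1}\) norm stays below the Hölder bound.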

3 The existence of two infinite families of positive solutions

In this part, applying the well-known fixed point index theory in a cone, we get the optimal interval of parameter λ in which problem (1.1) has two infinite families of positive solutions. We remark that our methods are entirely different from those used in [8–14].

Let \(E=C[0,1]\). Then E is a real Banach space with the norm \(\| \cdot\|\) defined by \(\|y\|=\max_{t\in J}|y(t)|\).

Define a cone K in E by

where \(\delta_{D}=\frac{\delta}{D}\).

Remark 3.1

It follows from the definition of δ and D that \(0<\delta_{D}<1\).

Define \(T_{\lambda}:K\rightarrow K\) by

Theorem 3.1

Assume that (H1)-(H4) hold. Then \(T_{\lambda}(K)\subset K\) and \(T_{\lambda}:K\rightarrow K\) is completely continuous.

Proof

For \(y\in K\), it follows from (2.15) and (3.2) that

It follows from (2.16), (3.2) and (3.3) that

Next, by arguments similar to those of Theorem 1 in [16], one can prove that \(T_{\lambda}:K\rightarrow K\) is completely continuous, so the details are omitted. The theorem is proved. □

Remark 3.2

From (3.2), we know that \(y\in E\) is a solution of problem (2.2) if and only if y is a fixed point of operator \(T_{\lambda}\).

Let \(\{\theta_{i}\}_{i=1}^{\infty}\) be such that \(t_{i+1}'<\theta _{i}<t_{i}'\), \(i=1,2,\ldots\) . Then, for any \(i\in\mathrm{N}\), we define the cone \(K_{\theta_{i}}\) by

where

Remark 3.3

Assume that (H1)-(H4) hold. Then \(T_{\lambda}(K_{\theta_{i}})\subset K_{\theta_{i}}\) and \(T_{\lambda}: K_{\theta_{i}}\rightarrow K_{\theta_{i}}\) is completely continuous.

Next, using Lemmas 2.1-2.5, we give our main results for \(\omega\in L^{p}[0,1]\) in the three cases \(p>1\), \(p=1\) and \(p=\infty\).

For convenience, we write

Firstly, we consider the case \(p>1\).

Theorem 3.2

Assume that (H1)-(H4) hold. Let \(\{r_{i}\} _{i=1}^{\infty}\), \(\{\gamma_{i}\}_{i=1}^{\infty}\) and \(\{R_{i}\} _{i=1}^{\infty}\) be such that

For each natural number i, let f satisfy the following conditions:

- (H5): \(f_{0}^{c_{M}r_{i}}\leq l\) and \(f_{0}^{c_{M}R_{i}}\leq l\), where

$$ 0< l\leq\max \biggl\{ \frac{2\lambda c_{m}}{c_{M}\|G\|_{q}\|\omega\| _{p}},\frac{2\lambda c_{m}}{c_{M}\|G\|_{1}\|\omega\|_{\infty}}, \frac{2\lambda c_{m}}{c_{M}\beta\|\omega\|_{1}} \biggr\} ; \tag{3.6}$$

- (H6): \(f_{c_{m}\delta_{iD}\gamma_{i}}^{c_{M}\gamma_{i}}\geq \eta\), where \(\eta>0\).

Then there exists \(\tau>0\) such that, for \(0<\lambda<\tau\), problem (1.1) has two infinite families of positive solutions \(x_{i\lambda}^{(1)}(t)\), \(x_{i\lambda}^{(2)}(t)\) and \(\max_{t\in J}x_{i\lambda}^{(1)}(t)>c_{m}\delta_{iD}\gamma _{i}\), \(i=1,2,\ldots \) .

Proof

Let \(\tau=\inf\{\tau_{i}\}\), \(\tau_{i}=\alpha _{i}c_{m}^{-1} \xi\eta(1-2\theta_{i})\gamma_{i}^{-1}\), \(i=1,2,\ldots \) . Then, for \(0<\lambda<\tau\), (3.1) and Theorem 3.1 imply that \(T_{\lambda }:K\rightarrow K\) is completely continuous.

Let \(t\in J\), \(y\in\partial K_{r_{i}\theta_{i}}\). Then \(0\leq c(t)y(t)\leq c_{M}r_{i}\). Therefore, for \(t\in J\), \(y\in\partial K_{r_{i}\theta_{i}}\), it follows from \(f_{0}^{c_{M}r_{i}}\leq l\) that

Consequently, for \(y \in\partial K_{r_{i}\theta_{i}}\), we have \(\|T_{\lambda}y\|<\|y\|\), i.e., by Lemma 2.4,

Similarly, for \(y \in\partial K_{R_{i}\theta_{i}}\), we have \(\|T_{\lambda}y\|<\|y\|\), and then it follows from Lemma 2.4 that

On the other hand, let

then \(0\leq c(t)y(t)\leq c_{M}\|y\|\leq c_{M}\gamma_{i}\). And hence, it follows from (2.15) and (3.2) that

Furthermore, for \(y \in\bar{K}_{\delta_{iD}\gamma_{i}\theta_{i}}^{\gamma_{i}}\), we have \(c(t)y(t)\leq c_{M}\gamma_{i}\), \(t\in J\), \(\min_{t\in[\theta _{i},1-\theta_{i}]}c(t)y(t)\geq c_{m}\delta_{iD}\gamma_{i}\), and then

Let \(y_{0}\equiv\frac{\delta_{iD}\gamma_{i}+\gamma_{i}}{2}\) and \(F(t,y)=(1-t)T_{\lambda}y+ty_{0}\), then \(F:J\times\bar{K}_{\delta_{iD}\gamma_{i}\theta_{i}}^{\gamma_{i}}\rightarrow K_{\theta_{i}}\) is completely continuous. From the analysis above, we obtain for \((t,y)\in J\times\bar{K}_{\delta_{iD}\gamma_{i}\theta_{i}}^{\gamma_{i}}\),

Therefore, for \(t\in J\), \(y\in\partial K_{\delta_{iD}\gamma_{i}\theta _{i}}^{\gamma_{i}}\), we have \(F(t,y)\neq y\). Hence, by the normality property and the homotopy invariance property of the fixed point index, we obtain

Consequently, by the solution property of the fixed point index, \(T_{\lambda}\) has a fixed point \(y_{i\lambda}^{(1)}\) and \(y_{i\lambda}^{(1)} \in K_{\delta_{iD}\gamma_{i}\theta_{i}}^{\gamma _{i}}\). By Lemma 2.2 and Remark 3.1, it follows that \(y_{i\lambda}^{(1)}\) is a solution to problem (2.2), and

Therefore, it follows from Lemma 2.1 that problem (1.1) has a solution \(x_{i\lambda}^{(1)}(t)=c(t)y_{i\lambda}^{(1)}(t)\) with

On the other hand, from (3.8), (3.9) and (3.13) together with the additivity of the fixed point index, we get

Hence, by the solution property of the fixed point index, \(T_{\lambda}\) has a fixed point \(y_{i\lambda}^{(2)}\) and \(y_{i\lambda}^{(2)} \in K_{R_{i}}\backslash(\bar{K}_{r_{i}}\cup \bar{K}_{\delta_{iD}\gamma_{i}\theta_{i}}^{\gamma_{i}})\). By Lemma 2.2 and Remark 3.1, it follows that \(y_{i\lambda}^{(2)}\) is also a solution to problem (2.2), and \(y_{i\lambda}^{(1)}\neq y_{i\lambda}^{(2)}\). Then, by Lemma 2.1, problem (1.1) has another solution \(x_{i\lambda }^{(2)}(t)=c(t)y_{i\lambda}^{(2)}(t)\). Since \(i\in\mathrm{N}\) was arbitrary, the proof is complete. □

The following results deal with the case \(p=\infty\).

Theorem 3.3

Assume that (H1)-(H4) hold. Let \(\{r_{i}\} _{i=1}^{\infty}\), \(\{\gamma_{i}\}_{i=1}^{\infty}\) and \(\{R_{i}\} _{i=1}^{\infty}\) be such that

For each natural number i, let f satisfy (H5) and (H6). Then there exists \(\tau>0\) such that, for \(0<\lambda<\tau\), problem (1.1) has two infinite families of positive solutions \(x_{i\lambda}^{(1)}(t)\), \(x_{i\lambda}^{(2)}(t)\) and \(\max_{t\in J}x_{i\lambda}^{(1)}(t)>c_{m}\delta_{iD}\gamma _{i}\), \(i=1,2,\ldots \) .

Proof

Let \(\|G\|_{1}\|\omega\|_{\infty}\) replace \(\|G\|_{q}\| \omega\|_{p}\) and repeat the previous argument. □

Finally, we consider the case of \(p=1\).

Theorem 3.4

Assume that (H1)-(H4) hold. Let \(\{r_{i}\} _{i=1}^{\infty}\), \(\{\gamma_{i}\}_{i=1}^{\infty}\) and \(\{R_{i}\} _{i=1}^{\infty}\) be such that

For each natural number i, let f satisfy (H5) and (H6). Then there exists \(\tau>0\) such that, for \(0<\lambda<\tau\), problem (1.1) has two infinite families of positive solutions \(x_{i\lambda}^{(1)}(t)\), \(x_{i\lambda}^{(2)}(t)\) and \(\max_{t\in J}x_{i\lambda}^{(1)}(t)>c_{m}\delta_{iD}\gamma _{i}\), \(i=1,2,\ldots \) .

Proof

Let \(\beta\|\omega\|_{1}\) replace \(\|G\|_{q}\|\omega\| _{p}\) and repeat the previous argument. □

Remark 3.4

Compared with Kaufmann and Kosmatov [8], the main features of this paper are as follows.

- (i) The solvable intervals of the positive parameter λ are available.

- (ii) Two infinite families of positive solutions are obtained.

- (iii) The impulse coefficients satisfy \(c_{k}>-1\), \(k=1, 2, \ldots, n\), rather than only \(c_{k}\equiv0\).

4 Examples

From Section 3, it is not difficult to see that (H1) and (H2) play an important role in the proof that problem (1.1) has two infinite families of positive solutions. So, we firstly provide an example of families of functions \(\omega(t)\) satisfying conditions (H1) and (H2). And then we consider a boundary value problem associated with problem (1.1).

Example 4.1

We will check that there exists a function \(\omega(t)\) satisfying conditions (H1) and (H2).

Let

It is easy to see

and from \(\sum_{n=1}^{\infty}\frac{1}{n^{4}}=\frac{\pi ^{4}}{90}\), we obtain

Consider the function

where

From \(\sum_{n=1}^{\infty}\frac{1}{(5n-1)(5n+4)}=\frac{1}{20}\) and \(\sum_{n=1}^{\infty}\frac{1}{n^{2}}=\frac{\pi^{2}}{6}\), we have

Thus, it is easy to see

which shows that \(\omega(t)\in L^{1}[0,1]\).

On the other hand, a simple calculation shows that \(\omega(t)=\sum_{n=1}^{\infty}\omega_{n}(t)\geq\xi=\frac{21}{38}\times\frac {1}{20}\). So ω satisfies conditions (H2) and (H1).
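The three series identities quoted in this example can be checked with a short sketch. The truncation level `N` and the helper `partial_sum` are assumptions of this illustration. The telescoping sum is handled in exact arithmetic, while the other two are compared with their limits in floating point.

```python
import math
from fractions import Fraction as F


def partial_sum(term, n_terms):
    """Sum term(n) for n = 1, ..., n_terms."""
    return sum(term(n) for n in range(1, n_terms + 1))


N = 2000

# sum 1/n^4 -> pi^4/90; the tail after N terms is below 1/(3 N^3).
s4 = partial_sum(lambda n: 1.0 / n**4, N)

# sum 1/n^2 -> pi^2/6; the tail after N terms is below 1/N.
s2 = partial_sum(lambda n: 1.0 / n**2, N)

# sum 1/((5n-1)(5n+4)) telescopes: each term equals
# (1/5) * (1/(5n-1) - 1/(5n+4)), so the partial sum is
# (1/5) * (1/4 - 1/(5N+4)), which tends to 1/20.
s_tel = partial_sum(lambda n: F(1, (5 * n - 1) * (5 * n + 4)), N)
s_tel_closed = F(1, 5) * (F(1, 4) - F(1, 5 * N + 4))

print(s4 - math.pi**4 / 90, math.pi**2 / 6 - s2, F(1, 20) - s_tel)
```

The exact partial sum shows that the gap to \(\frac{1}{20}\) after N terms is \(\frac{1}{5(5N+4)}\), so the stated limit follows at once.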

Example 4.2

Let \(\omega(t)\) be defined as in Example 4.1. Consider the following boundary value problem:

Let \(c_{1}=t_{1}=\frac{1}{2}\), \(h(t)=\frac{6}{119}t\), \(a=\frac{1}{2}\), \(b=\frac{1}{4}\). Then \(d=\frac{1}{2}\), and

and then \(c_{M}=\frac{3}{2}\), \(c_{m}=1\), \(c(1)=\frac{3}{2}\).

Similarly, a simple calculation shows that \(A(t)=\frac{5}{2}-t\), \(A_{M}=\frac{5}{2}\), \(D=\frac{130}{119}\), \(\beta=\frac{65}{119}\), \(\delta _{i}=\frac{1+2\theta_{i}}{3}\), \(\delta_{iD}=\frac{119(1+2\theta _{i})}{390}\), \(i=1,2,\ldots \) , and

Now we consider the multiplicity of positive solutions for problem (4.1).

Let \(f(t,x)=\frac{1}{27\|\omega\|_{1}\beta}(t+1)x\). It follows from the definitions of \(\omega(t)\), \(f(t,x)\), \(c(t)\) and \(h(t)\) that conditions (H1)-(H4) hold. Hence, we only need to verify the other conditions of our main results.
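Condition (H4) for these data can be checked in exact arithmetic. The sketch below evaluates \(A(t)\) from (1.2) and \(\mu=\int_{0}^{1}A(t)h(t)c(t)\,dt\), assuming that \(c(t)=1\) on \([0,\frac{1}{2}]\) and \(c(t)=\frac{3}{2}\) on \((\frac{1}{2},1]\), which is how we read \(c_{1}=t_{1}=\frac{1}{2}\). The variable names are hypothetical.

```python
from fractions import Fraction as F

# Data of Example 4.2.
a, b = F(1, 2), F(1, 4)
t1, c1 = F(1, 2), F(1, 2)
kappa = F(6, 119)        # h(t) = kappa * t
c_end = 1 + c1           # c(1) = 3/2

# A(t) from (1.2) is linear in t: A(t) = A0 + A_slope * t.
denom = a * (a + 2 * b) * c_end
A0 = ((a + b) * c_end + a + b) / denom
A_slope = -a * c_end / denom


def antiderivative(t):
    """Antiderivative of A(s) h(s) = kappa * (A0 * s + A_slope * s**2)."""
    return kappa * (A0 * t**2 / 2 + A_slope * t**3 / 3)


# c(t) is piecewise constant, so mu splits at the impulse point t1.
mu = (antiderivative(t1) - antiderivative(0)) * 1 \
    + (antiderivative(1) - antiderivative(t1)) * c_end

print(A0, A_slope, mu)
```

The computation reproduces \(A(t)=\frac{5}{2}-t\) as stated in the example, and the resulting value of \(\mu\) lies in \([0,1)\), as (H4) requires.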

Let \(\theta_{i}=\frac{3}{7}-\frac{1}{4(i+2)^{4}}\). Then \(\theta_{i}\in (0,\frac{3}{7})\). For \(R_{i}=\frac{1}{100^{i}}\), \(\gamma_{i}=\frac {1}{10\times100^{i}}\) and \(r_{i}=\frac{1}{30\times100^{i}}\), \(i=1,2,\ldots \) , we have

By a direct calculation, we have

Similarly, for any \(t\in J\), \(x\in[0,\frac{3}{2}R_{i}]\), we have \(f(t,x)\leq\frac{1}{9\|\omega\|_{1}\beta\times100^{i}}< l\), and

Hence, by Theorem 3.2, problem (4.1) has two infinite families of positive solutions \(x_{i\lambda}^{(1)}(t)\) and \(x_{i\lambda }^{(2)}(t)\) for \(0< \lambda<\tau= \inf\{\alpha_{i}\xi\eta(1-2\theta _{i})\gamma_{i}^{-1}\}\), \(i=1,2,\ldots \) .
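The numerical choices of this example can be tabulated in a short sketch. The ordering checked below, \(R_{i+1}<r_{i}<\delta_{iD}\gamma_{i}<\gamma_{i}<R_{i}\), is our reading of what the sequences need to satisfy and is not quoted from the text. The bound on \(f\) is checked after multiplying by the common factor \(27\|\omega\|_{1}\beta\), so no value of \(\|\omega\|_{1}\) or \(\beta\) is needed.

```python
from fractions import Fraction as F


def theta(i):
    return F(3, 7) - F(1, 4 * (i + 2) ** 4)


def delta_iD(i):
    # Value reported in Example 4.2: delta_iD = 119 (1 + 2 theta_i) / 390.
    return F(119, 390) * (1 + 2 * theta(i))


def R(i):
    return F(1, 100**i)


def gamma(i):
    return F(1, 10 * 100**i)


def r(i):
    return F(1, 30 * 100**i)


def sup_scaled_f(i):
    """Supremum of (t + 1) x over t in [0, 1], x in [0, (3/2) R_i].

    This is f(t, x) times 27 * ||omega||_1 * beta. The product (t + 1) x
    increases in both arguments, so the supremum sits at the corner.
    """
    return (1 + 1) * F(3, 2) * R(i)


indices = range(1, 51)

# Assumed ordering (our reading, not quoted from the text).
ordering_ok = all(
    R(i + 1) < r(i) < delta_iD(i) * gamma(i) < gamma(i) < R(i)
    for i in indices
)
# f <= 1 / (9 ||omega||_1 beta 100^i) means sup_scaled_f / 27 == R_i / 9.
bound_ok = all(sup_scaled_f(i) / 27 == R(i) / 9 for i in indices)
theta_ok = all(0 < theta(i) < F(3, 7) for i in indices)

print(ordering_ok, bound_ok, theta_ok)
```

Only the first fifty indices are checked here. Since \(\theta_{i}\) increases towards \(\frac{3}{7}\) and all three sequences scale by the same factor \(100^{-i}\), the same comparison holds for larger \(i\).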

References

1. Zhang, X, Feng, M: Transformation techniques and fixed point theories to establish the positive solutions of second-order impulsive differential equations. J. Comput. Appl. Math. 271, 117-129 (2014)

2. Liu, L, Hu, L, Wu, Y: Positive solutions of two-point boundary value problems for systems of nonlinear second order singular and impulsive differential equations. Nonlinear Anal. 69, 3774-3789 (2008)

3. Agarwal, RP, Franco, D, O’Regan, D: Singular boundary value problems for first and second order impulsive differential equations. Aequ. Math. 69, 83-96 (2005)

4. Lin, X, Jiang, D: Multiple solutions of Dirichlet boundary value problems for second order impulsive differential equations. J. Math. Anal. Appl. 321, 501-514 (2006)

5. Liu, Y, O’Regan, D: Multiplicity results using bifurcation techniques for a class of boundary value problems of impulsive differential equations. Commun. Nonlinear Sci. Numer. Simul. 16, 1769-1775 (2011)

6. Xu, J, Wei, Z, Ding, Y: Existence of positive solutions for a second order periodic boundary value problem with impulsive effects. Topol. Methods Nonlinear Anal. 43, 11-21 (2014)

7. Hao, X, Liu, L, Wu, Y: Positive solutions for second order impulsive differential equations with integral boundary conditions. Commun. Nonlinear Sci. Numer. Simul. 16, 101-111 (2011)

8. Kaufmann, ER, Kosmatov, N: A multiplicity result for a boundary value problem with infinitely many singularities. J. Math. Anal. Appl. 269, 444-453 (2002)

9. Liang, SH, Zhang, JH: The existence of countably many positive solutions for nonlinear singular m-point boundary value problems. J. Comput. Appl. Math. 214, 78-89 (2008)

10. Ji, D, Bai, Z, Ge, W: The existence of countably many positive solutions for singular multipoint boundary value problems. Nonlinear Anal. 72, 955-964 (2010)

11. Kosmatov, N: Countably many solutions of a fourth order boundary value problem. Electron. J. Qual. Theory Differ. Equ. 2004, 12 (2004)

12. Bonanno, G, Bella, BD, Henderson, J: Infinitely many solutions for a boundary value problem with impulsive effects. Bound. Value Probl. 2013, 278 (2013)

13. Graef, JR, Heidarkhani, S, Kong, L: Infinitely many solutions for systems of multi-point boundary value problems using variational methods. Topol. Methods Nonlinear Anal. 42, 105-118 (2013)

14. Afrouzi, GA, Hadjian, A, Shokooh, S: Infinitely many solutions for a Dirichlet boundary value problem with impulsive condition. UPB Sci. Bull., Ser. A 77, 1-22 (2015)

15. Guo, D, Lakshmikantham, V: Nonlinear Problems in Abstract Cones. Academic Press, New York (1988)

16. Zhao, A, Yan, W, Yan, J: Existence of positive periodic solution for an impulsive delay differential equation. In: Topological Methods, Variational Methods and Their Applications, pp. 269-274. World Scientific, River Edge (2002)

Acknowledgements

This work is sponsored by the National Natural Science Foundation of China (11301178), the Beijing Natural Science Foundation (1163007), the Scientific Research Project of Construction for Scientific and Technological Innovation Service Capacity (71E1610973) and the teaching reform project of Beijing Information Science & Technology University (2015JGYB41). The authors are grateful to the anonymous referees for their constructive comments and suggestions, which have greatly improved this paper.

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All results belong to MW and MF. All authors read and approved the final manuscript.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wang, M., Feng, M. New Green’s function and two infinite families of positive solutions for a second order impulsive singular parametric equation. Adv Differ Equ 2017, 154 (2017). https://doi.org/10.1186/s13662-017-1211-5

DOI: https://doi.org/10.1186/s13662-017-1211-5