Abstract

In this paper, we study the finite time stability of delay differential equations via a delayed matrix cosine and sine of polynomial degrees. Firstly, we give two alternative formulas of the solutions for a delay linear differential equation. Secondly, we obtain a norm estimation of the delayed matrix sine and cosine of polynomial degrees, which are used to establish sufficient conditions to guarantee our finite time stability results. Meanwhile, a numerical example is presented demonstrating the validity of our theoretical results. Finally, we extend our study to the same issue of a delay differential equation with nonlinearity by virtue of the Gronwall inequality approach.

1 Introduction

Generally speaking, it is not easy to find the fundamental matrix of a linear differential delay system, because the time-delay term introduces memory with long-tail effects. In the theory of linear systems, an explicit form of the fundamental matrix is needed for stability analysis; in fact, many easily applied stability criteria involve the fundamental matrix. In the past decade, there has been rapid development in the representation of solutions, which has led to results on asymptotic stability, finite time stability and control problems for linear and nonlinear continuous delay systems, discrete delay systems and fractional order delay systems. For more results on matrix representations of solutions to delay differential and discrete systems, and on their stability analysis and control problems, one can refer to [1–21] and the references therein.

The concept of finite time stability of delay differential equations arises from the fields of multibody mechanics, automatic engines and physiological systems, as introduced by Dorato [22]. It requires that the system state does not exceed a certain bound over a given finite time interval, which seems more appropriate from practical considerations. Concerning finite time stability, Ulam’s stability and stable manifolds of linear systems, impulsive systems and fractional systems, the methods of the fundamental matrix, linear matrix inequalities, algebraic inequalities and integral inequalities are often used. For more recent contributions, one can see [23–37].

A review of the previous literature shows that the criteria for linear delay differential systems are established by characterizing the eigenpolynomial distribution; the proofs are complicated and the threshold for the desired delay system is not easy to determine in practical problems. Thus it is better to analyze the stability of delay differential systems by using the representation of the solutions directly.

In this paper, we study the finite time stability of the following second order linear differential equations with a pure delay term:

where \(x\in \mathbb {R}^{n}\), τ is the time delay, φ is an arbitrary twice continuously differentiable vector function, T is a pre-fixed positive number and Ω is an \(n\times n\) nonsingular matrix.

Recently, Khusainov et al. [2] gave a new representation of the solution for (1) as follows:

where \(\cos_{\tau}\Omega t\) is called the delayed matrix cosine of polynomial degree 2k (see [2], Definition 1) on the intervals \((k-1)\tau\leq t< k\tau\) formulated by

and \(\sin_{\tau}\Omega t\) is called a delayed matrix sine of polynomial degree \(2k+1\) (see [2], Definition 2) on the intervals \((k-1)\tau\leq t< k\tau\) formulated by

respectively, where Θ and I are the zero and identity matrices. The delayed matrix cosine and sine of polynomial degrees play an important role in studying second order delay differential equations, since they can act as the fundamental matrix when seeking a representation of solutions via a variation of constants formula. For more properties of the delayed matrix cosine and sine of polynomial degrees, see [2], Lemmas 1-6.
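To make the piecewise definition concrete, the following is a minimal scalar sketch. The displays (3) and (4) are not reproduced in this text, so the code assumes the form used in [2]: on \((k-1)\tau\leq t<k\tau\), \(\cos_{\tau}\Omega t=\sum_{j=0}^{k}(-1)^{j}\Omega^{2j}(t-(j-1)\tau)^{2j}/(2j)!\) and \(\sin_{\tau}\Omega t=\sum_{j=0}^{k}(-1)^{j}\Omega^{2j+1}(t-(j-1)\tau)^{2j+1}/(2j+1)!\), with both equal to zero for \(t<-\tau\). This form agrees with the stated polynomial degrees 2k and 2k+1. The function names are illustrative only.

```python
import math


def delayed_cos(omega: float, tau: float, t: float) -> float:
    """Scalar delayed cosine cos_tau(omega*t), in the assumed piecewise form."""
    if t < -tau:
        return 0.0
    k = math.floor(t / tau) + 1  # t lies in [(k-1)*tau, k*tau)
    return sum(
        (-1) ** j * (omega * (t - (j - 1) * tau)) ** (2 * j) / math.factorial(2 * j)
        for j in range(k + 1)
    )


def delayed_sin(omega: float, tau: float, t: float) -> float:
    """Scalar delayed sine sin_tau(omega*t), in the assumed piecewise form."""
    if t < -tau:
        return 0.0
    k = math.floor(t / tau) + 1  # same interval index as for the cosine
    return sum(
        (-1) ** j
        * (omega * (t - (j - 1) * tau)) ** (2 * j + 1)
        / math.factorial(2 * j + 1)
        for j in range(k + 1)
    )


# On [-tau, 0) the cosine equals 1 and the sine equals omega*(t + tau).
c_initial = delayed_cos(2.0, 0.4, -0.2)
s_initial = delayed_sin(2.0, 0.4, -0.2)
# On [0, tau) the cosine equals 1 - omega^2 t^2 / 2.
c_first = delayed_cos(2.0, 0.4, 0.2)
```

Each new term vanishes at the left end of the interval where it first appears, so the functions are continuous at the nodes \(t=k\tau\) and a rounding error in the interval index near a node is harmless.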

Obviously, when \(\tau=0\), \(\cos_{\tau}\Omega t\) and \(\sin_{\tau}\Omega t\) reduce to the matrix cosine function \(\cos\Omega t\) and matrix sine function \(\sin\Omega t\), respectively, which are given by the formal matrix series

and

By Gantmakher [38], p.123,

is a solution of a second order differential system \(\ddot{x}(t)+\Omega^{2}x(t)=0, t\geq0, x(0)=x_{0}\in \mathbb {R}^{n}, \dot {x}(0)=\dot{x}_{0}\in \mathbb {R}^{n}\).
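For reference, the classical objects mentioned here can be written out as follows. The displays are not reproduced in this text; the block gives the standard power series and the standard solution formula, together with the one-line check that it solves the system (Ω commutes with \(\cos\Omega t\) and \(\sin\Omega t\), and Ω is assumed nonsingular).

```latex
\cos\Omega t=\sum_{k=0}^{\infty}(-1)^{k}\frac{\Omega^{2k}t^{2k}}{(2k)!},
\qquad
\sin\Omega t=\sum_{k=0}^{\infty}(-1)^{k}\frac{\Omega^{2k+1}t^{2k+1}}{(2k+1)!},
\\[4pt]
x(t)=(\cos\Omega t)\,x_{0}+\Omega^{-1}(\sin\Omega t)\,\dot{x}_{0},
\\[4pt]
\ddot{x}(t)=-\Omega^{2}(\cos\Omega t)\,x_{0}-\Omega(\sin\Omega t)\,\dot{x}_{0}
=-\Omega^{2}x(t),
\qquad x(0)=x_{0},\quad \dot{x}(0)=\dot{x}_{0}.
```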

Motivated by [2], we adopt the method of the delayed matrix cosine and sine of polynomial degrees to study the stability of the second order delay differential system (1). Compared with the method of the eigenpolynomial distribution, we do not need to solve an equation of the fourth degree. We give stability criteria by establishing the desired inequalities using norm estimates of the delayed matrix cosine and sine of polynomial degrees.

The rest of this paper is organized as follows. In Section 2, we give two other possible formulas of solutions for the current system by using integration by parts. Two very important lemmas, which present estimates of the delayed matrix sine and cosine of polynomial degrees, are also given. In Section 3, we present three sufficient conditions to guarantee the finite time stability results. In Section 4, an example is given to demonstrate the applicability of our main results for the linear case. In the final section, we extend the study of finite time stability to the delay differential equation with nonlinearity by using a Gronwall inequality under a linear growth condition.

2 Preliminaries

Denote by \(C(J, \mathbb{R}^{n})\) the space of vector-valued continuous functions from J to \(\mathbb{R}^{n}\), where \(\mathbb{R}^{n}\) is endowed with the norm \(\|x(t)\| =\sum^{n}_{i=1}|x_{i}(t)|\), and equip it with the norm \(\|x\|_{C}=\max_{t\in J}\| x(t)\|\). We introduce the space \(C^{1}(J, \mathbb {R}^{n})=\{x\in C(J, \mathbb {R}^{n}): \dot{x}\in C(J, \mathbb {R}^{n}) \}\). For a matrix \(A: \mathbb {R}^{n}\to \mathbb {R}^{n}\), we consider the matrix norm \(\|A\|=\max_{\|z\|=1}\|Az\|\) generated by \(\|\cdot\|\). In addition, we write \(\|\varphi\|_{C}=\max_{s\in[-\tau, 0]}\|\varphi(s)\|\).

We need the following rules of differentiation for the delayed matrix cosine of polynomial degree 2k on the interval \([(k-1)\tau,k\tau)\) and sine of polynomial degree \(2k+1\) on the interval \([(k-1)\tau,k\tau)\) defined in (3) and (4), respectively.
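As a numerical sanity check, the sketch below compares finite-difference derivatives with the rules \(\frac{d}{dt}\cos_{\tau}\Omega t=-\Omega\sin_{\tau}\Omega(t-\tau)\) and \(\frac{d}{dt}\sin_{\tau}\Omega t=\Omega\cos_{\tau}\Omega t\). These rules and the piecewise form of (3) and (4) are assumptions taken from [2], since the displays are not reproduced in this text. The check is scalar, the parameter values are illustrative, and the function names are hypothetical.

```python
import math

OMEGA, TAU = 2.0, 0.4  # illustrative scalar data


def delayed_cos(t: float) -> float:
    """Scalar delayed cosine in the assumed piecewise-polynomial form."""
    if t < -TAU:
        return 0.0
    k = math.floor(t / TAU) + 1
    return sum(
        (-1) ** j * (OMEGA * (t - (j - 1) * TAU)) ** (2 * j) / math.factorial(2 * j)
        for j in range(k + 1)
    )


def delayed_sin(t: float) -> float:
    """Scalar delayed sine in the assumed piecewise-polynomial form."""
    if t < -TAU:
        return 0.0
    k = math.floor(t / TAU) + 1
    return sum(
        (-1) ** j
        * (OMEGA * (t - (j - 1) * TAU)) ** (2 * j + 1)
        / math.factorial(2 * j + 1)
        for j in range(k + 1)
    )


def central_diff(f, t: float, h: float = 1e-5) -> float:
    """Second-order central difference approximation of f'(t)."""
    return (f(t + h) - f(t - h)) / (2 * h)


max_err = 0.0
for t in (0.1, 0.3, 0.5, 0.7, 1.0):  # sample points away from the nodes k*TAU
    err_cos = abs(central_diff(delayed_cos, t) + OMEGA * delayed_sin(t - TAU))
    err_sin = abs(central_diff(delayed_sin, t) - OMEGA * delayed_cos(t))
    max_err = max(max_err, err_cos, err_sin)
```

The shift by τ in the derivative of the delayed cosine is what later produces the arguments \(t-\tau-s\) and \(t-2\tau-s\) when integrating by parts.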

Lemma 2.1

see [2], Lemmas 1 and 2

The following rules of differentiation are true for the matrix functions (3) and (4):

Remark 2.2

For simplification of the next computation, one can divide the term \(\int^{0}_{-\tau}\sin_{\tau}\Omega(t-\tau-s)\ddot{\varphi}(s)\,ds\) in (2) into the following form according to the subintervals \([(k-1)\tau,k\tau)\):

Obviously, \(\sin_{\tau}\Omega(t-\tau-s)\) has different formulas in different subintervals \([(k-1)\tau,k\tau)\) by (4).

By Remark 2.2, the solution (2) of system (1) can be expressed in the following form:

for \((k-1)\tau\leq t\leq k\tau\).
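The representation can be checked numerically in a simple scalar case. The sketch below assumes that (1) reads \(\ddot{x}(t)+\Omega^{2}x(t-\tau)=0\) and that (2) reads \(x(t)=(\cos_{\tau}\Omega t)\varphi(-\tau)+\Omega^{-1}(\sin_{\tau}\Omega t)\dot{\varphi}(-\tau)+\Omega^{-1}\int_{-\tau}^{0}\sin_{\tau}\Omega(t-\tau-s)\ddot{\varphi}(s)\,ds\); neither display is reproduced in this text, so both are reconstructions from [2] and from the terms quoted in Remark 2.2 and Remark 3.5. The initial function \(\varphi(s)=s^{2}\) and all names are hypothetical. On \([0,\tau]\) the equation can be integrated twice by hand (method of steps), which gives an independent reference value.

```python
import math

OMEGA, TAU = 2.0, 0.4  # illustrative scalar data


def delayed_cos(t: float) -> float:
    if t < -TAU:
        return 0.0
    k = math.floor(t / TAU) + 1
    return sum(
        (-1) ** j * (OMEGA * (t - (j - 1) * TAU)) ** (2 * j) / math.factorial(2 * j)
        for j in range(k + 1)
    )


def delayed_sin(t: float) -> float:
    if t < -TAU:
        return 0.0
    k = math.floor(t / TAU) + 1
    return sum(
        (-1) ** j
        * (OMEGA * (t - (j - 1) * TAU)) ** (2 * j + 1)
        / math.factorial(2 * j + 1)
        for j in range(k + 1)
    )


def simpson(f, a: float, b: float, n: int = 400) -> float:
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


# Hypothetical initial function phi(s) = s^2 on [-TAU, 0].
def phi(s: float) -> float:
    return s * s


def dphi(s: float) -> float:
    return 2.0 * s


def ddphi(s: float) -> float:
    return 2.0


def solution_formula(t: float) -> float:
    """Assumed representation (2), with the integral evaluated numerically."""
    integral = simpson(lambda s: delayed_sin(t - TAU - s) * ddphi(s), -TAU, 0.0)
    return (
        delayed_cos(t) * phi(-TAU)
        + delayed_sin(t) * dphi(-TAU) / OMEGA
        + integral / OMEGA
    )


def method_of_steps(t: float) -> float:
    """Exact solution on [0, TAU]: integrate x'' = -OMEGA^2 * phi(t - TAU) twice
    with x(0) = phi(0) = 0 and x'(0) = phi'(0) = 0."""
    return -(OMEGA**2) * ((t - TAU) ** 4 / 12 - TAU**4 / 12 + TAU**3 * t / 3)


max_gap = max(
    abs(solution_formula(t) - method_of_steps(t)) for t in (0.0, 0.1, 0.2, 0.3, 0.4)
)
```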

Observe that the solution (2) involves \(\ddot{\varphi}\), which imposes a somewhat strong requirement on the initial function.

Remark 2.3

In order to obtain some alternative formulas, one can apply integration by parts via Lemma 2.1 to derive that

then the solution (2) can be expressed as

If we take integration by parts again for the integral part of (6), then we have

which implies that (6) can be expressed as

Definition 2.4

see [24], Definition 2.1

The system (1) satisfying the initial conditions \(x(t)\equiv \varphi(t)\) and \(\dot{x}(t)\equiv\dot{\varphi}(t)\) for \(-\tau\leq t\leq 0\) is finite time stable with respect to \(\{0,J,\delta,\epsilon,\tau\} \), if and only if

implies

where \(\gamma=\max\{\|\varphi\|_{C},\|\dot{\varphi}\|_{C},\|\ddot {\varphi}\|_{C}\}\), and 0 denotes the initial time of observation of the system. In addition, δ and ϵ are positive real numbers.

Using the form of \(\cos_{\tau}\Omega t\) and \(\sin_{\tau}\Omega t\) one can prove the following two lemmas, which will be widely used in the sequel.

Lemma 2.5

For any \(t\in[(k-1)\tau,k\tau)\), \(k=0,1,\ldots,n\), the following formula is true:

Proof

Using the form of (3), one can calculate that

The proof is completed. □

Lemma 2.6

For any \(t\in[(k-1)\tau,k\tau)\), \(k=0,1,\ldots,n\), the following formula is true:

Proof

Using the form of (4), we get

The proof is finished. □

Remark 2.7

When \(t\in(-\infty,-\tau)\), we can get \(\|\cos_{\tau }\Omega t\|=\|\sin_{\tau}\Omega t\|=0\) by the form (3) and (4).

As is well known, the hyperbolic functions \(\cosh t\) and \(\sinh t\) are defined in exponential form as follows:

Then \(\sinh t<\cosh t\) for all \(t\in\mathbb{R}\), and the larger the value of t, the closer \(\cosh t\) and \(\sinh t\) become: \(\cosh t-\sinh t=e^{-t}\rightarrow0\) as \(t\rightarrow+\infty\). In addition, both \(\cosh t\) and \(\sinh t\) are nonnegative, monotone increasing functions for \(t\geq0\).

Obviously, the derivatives of \(\cosh(\cdot)\) and \(\sinh(\cdot)\) are

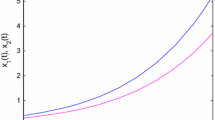

Next, we give an example to verify the results of Lemmas 2.5 and 2.6 and also show the images of a delayed cosine function and delayed sine function.

Example 2.8

Set \(\tau=0.4\), \(\Omega=2\), \(\Omega\in \mathbb {R}^{1}\). By (3) and (4) we derive that \(\cos_{0.4}2t\) and \(\sin_{0.4}2t\) are as follows:

and

It follows from Figure 1 and Figure 2 that the inequalities in Lemma 2.5 and Lemma 2.6 hold.
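The same comparison can be made numerically. The sketch below assumes that Lemma 2.5 and Lemma 2.6 state \(\|\cos_{\tau}\Omega t\|\leq\cosh(\|\Omega\|t)\) and \(\|\sin_{\tau}\Omega t\|\leq\sinh[\|\Omega\|(t+\tau)]\), which are the forms in which the lemmas are used in Remark 3.5. It also assumes the piecewise-polynomial definition from [2] for (3) and (4). It samples the data of Example 2.8 on a grid; the grid range and function names are illustrative.

```python
import math

OMEGA, TAU = 2.0, 0.4  # data of Example 2.8


def delayed_cos(t: float) -> float:
    if t < -TAU:
        return 0.0
    k = math.floor(t / TAU) + 1
    return sum(
        (-1) ** j * (OMEGA * (t - (j - 1) * TAU)) ** (2 * j) / math.factorial(2 * j)
        for j in range(k + 1)
    )


def delayed_sin(t: float) -> float:
    if t < -TAU:
        return 0.0
    k = math.floor(t / TAU) + 1
    return sum(
        (-1) ** j
        * (OMEGA * (t - (j - 1) * TAU)) ** (2 * j + 1)
        / math.factorial(2 * j + 1)
        for j in range(k + 1)
    )


EPS = 1e-12  # slack for floating-point rounding
violations = 0
for i in range(241):  # grid on [-TAU, 2.0] with step 0.01
    t = -TAU + 0.01 * i
    if abs(delayed_cos(t)) > math.cosh(abs(OMEGA) * t) + EPS:
        violations += 1
    if abs(delayed_sin(t)) > math.sinh(abs(OMEGA) * (t + TAU)) + EPS:
        violations += 1
```

A grid check of this kind illustrates the estimates but does not replace the proofs of the lemmas.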

The graphs of the delayed cosine \(\cos_{0.4}2t\) and the delayed sine \(\sin_{0.4}2t\) are shown in Figure 3 and Figure 4, respectively. We can see that the delayed cosine and delayed sine share some properties with the classical cosine and sine functions, such as a wave-like graph, monotonicity and periodicity.

However, unlike the classical cosine and sine, the delayed cosine and delayed sine are nonzero only from the interval \([-\tau,0]\) onward, and as t increases their upper and lower bounds also increase. When the delay \(\tau=0\), by (3) and (4), the delayed cosine and delayed sine coincide with the cosine and sine functions.

3 Finite time stability results for linear case

In this section, we present some sufficient conditions for the finite time stability of system (1) by using three possible formulas of solutions, which enriches the design methods available in practical problems.

Now we are ready to present the first theorem by using the classical representation of solution (2) derived by Khusainov et al. [2].

Theorem 3.1

The system (1) is finite time stable with respect to \(\{ 0,J,\delta,\epsilon,\tau\}\), if

where δ and ϵ are defined in Definition 2.4.

Proof

By using equation (2) via some fundamental computations, one can get

Next, according to Lemmas 2.5 and 2.6, we obtain

where we use the fact

Since \(\sinh t\) and \(\cosh t\) are both monotonically increasing functions for \(t\geq0\), combining (12) and (14) yields \(\|x(t)\|<\epsilon\) for all \(t\in J\).

Thus, the system (1) is finite time stable by Definition 2.4. □

Next, we use a new alternative representation of solution (6) to derive the following result.

Theorem 3.2

The system (1) is finite time stable with respect to \(\{ 0,J,\delta,\epsilon,\tau\}\), if

where \(\lambda:=\max\{\cosh(\|\Omega\|\tau), \cosh(\| \Omega\|T)\}\).

Proof

Similar to Theorem 3.1, we estimate the norm \(\|\cdot\|\) of the solution formula (6),

By (8), the inequality (17) implies

Then, according to Lemmas 2.5 and 2.6, one can get

where we use the fact

Linking (16) and (18), we obtain \(\|x(t)\|<\epsilon , \forall t\in J\). Thus, the system (1) is finite time stable. □

Finally, we adopt another representation of solution (7) to derive another new result.

Theorem 3.3

The system (1) is finite time stable with respect to \(\{ 0,J,\delta,\epsilon,\tau\}\), if

where \(\theta:=\max\{\cosh( \Vert \Omega \Vert \tau), \cosh( \Vert \Omega \Vert (T-\tau))\}\).

Proof

By Lemmas 2.5, 2.6 and taking the norm on both sides of (7) via (8), we have

Note that \(\sin_{\tau}\Omega t=\Theta\) if \(t\in(-\infty ,-\tau)\). Hence, for \(-\tau\leq s\leq0\), we get \(\|\sin_{\tau}\Omega(t-2\tau -s)\|=0\) when \(t-2\tau-s<-\tau\), while by Lemma 2.6 we get \(\|\sin _{\tau}\Omega(t-2\tau-s)\|\leq\sinh[\|\Omega\|(t-\tau-s)]\leq\sinh(\| \Omega\|t)\) when \(t-2\tau-s\geq-\tau\). In the end, we can obtain

From (8), (20), (21), Lemmas 2.5 and 2.6, we can get

Substituting (19) into (22), we can finally obtain \(\Vert x(t) \Vert <\epsilon, \forall t\in J\). Thus, the system (1) is finite time stable. □

Remark 3.4

By the results in Theorems 3.1-3.3, we see that when \(\alpha<\beta\) and \(\alpha<\rho\), the result of Theorem 3.1 is optimal. When \(\beta<\alpha\) and \(\beta<\rho\), the result of Theorem 3.2 is optimal. When \(\rho<\alpha\) and \(\rho<\beta\), the result of Theorem 3.3 is optimal. And

Remark 3.5

We have studied the finite time stability of system (1) in Theorems 3.1-3.3. Now we analyze the stability of solution (2) to system (1) when \(t\rightarrow\infty\). In fact,

which implies that it is impossible to guarantee that \(\Vert x(t) \Vert \rightarrow0\) when \(t\rightarrow\infty\) since the first term \(\Vert \cos _{\tau}\Omega t \Vert \Vert \varphi(-\tau) \Vert \leq\cosh( \Vert \Omega \Vert t) \Vert \varphi (-\tau) \Vert =\frac{e^{ \Vert \Omega \Vert t}+e^{- \Vert \Omega \Vert t}}{2} \Vert \varphi(-\tau) \Vert \rightarrow\infty\) when \(t\rightarrow\infty\), even if we impose the strong condition \(\Vert \Omega^{-1} \Vert \leq e^{-\nu(t+\tau)},\nu> \Vert \Omega \Vert \), to guarantee that the second and third terms tend to zero due to \(\Vert \sin _{\tau}\Omega t \Vert \leq\sinh[ \Vert \Omega \Vert (t+\tau)]\leq e^{ \Vert \Omega \Vert (t+\tau)}\).

In the next section, we give an example of the stability of system (1) to verify Theorems 3.1-3.3.

4 A numerical example

Example 4.1

In this part, we consider the finite time stability of the following second order differential equations:

where \(\tau=0.5\), \(T=1\), \(n=2\),

By (5), we get the solution of system (23) as follows:

where \(0\leq t\leq0.5 \) and

where \(0.5\leq t\leq1\).

Next, we get

and

When \(0\leq t\leq0.5\), by (24) we get

Through a basic calculation one can obtain

and

When \(0.5\leq t\leq1\), by (25) in the same way we get

then one can obtain

and

By calculating we obtain \(\gamma=\max\{\|\varphi\|_{C},\|\dot{\varphi}\| _{C},\|\ddot{\varphi}\|_{C}\}=0.3\), \(\|\Omega\|=3\), \(\|\Omega^{-1}\| =0.75\), then we set \(\delta=0.31>0.3=\gamma\).

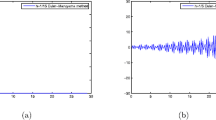

Figure 5 shows the state response \(x(t)\) of (23) and Figure 6 shows the norm \(\|x(t)\| \) of (23). By Theorems 3.1-3.3, we can calculate that \(\|x(t)\|\leq18.8158\), \(\|x(t)\|\leq7.0106\) and \(\|x(t)\|\leq 7.7167\), so we take \(\epsilon=18.82, 7.02, 7.72\), respectively. The data are shown in Table 1.

Figure 5 The state response \(x(t)\) of (23).

Figure 6 The norm \(\|x(t)\|\) of (23).

From Figure 6 and Table 1 we can see that \(\|x(t)\|<\epsilon\) for all \(t\in J\), so the system (23) is finite time stable with respect to \(\{0,J,\delta,\epsilon,\tau\}\) under Theorems 3.1-3.3. We can also obtain \(\alpha=18.8158, \beta=7.0106,\rho=7.7167\). The result of Theorem 3.2 is optimal in this example.
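The three reported values can be reproduced from the data of this example. The conditions of Theorems 3.1-3.3 are not displayed in this text, so the expressions below are reconstructions and should be read as assumptions: they follow from bounding each term of the solution formulas with the estimates \(\|\cos_{\tau}\Omega t\|\leq\cosh(\|\Omega\|t)\) and \(\|\sin_{\tau}\Omega t\|\leq\sinh[\|\Omega\|(t+\tau)]\). In particular, θ is taken as \(\max\{\cosh(\|\Omega\|\tau),\cosh(\|\Omega\|(T-\tau))\}\). With these choices the numbers 18.8158, 7.0106 and 7.7167 are recovered to four decimals.

```python
import math

# Data reported in Example 4.1.
delta, tau, T = 0.31, 0.5, 1.0
norm_omega, norm_omega_inv = 3.0, 0.75

# Assumed bound behind Theorem 3.1 (solution formula (2)).
alpha = delta * (
    math.cosh(norm_omega * T)
    + (1 + tau) * norm_omega_inv * math.sinh(norm_omega * (T + tau))
)

# Assumed bound behind Theorem 3.2 (solution formula (6)).
lam = max(math.cosh(norm_omega * tau), math.cosh(norm_omega * T))
beta = delta * (
    math.cosh(norm_omega * T)
    + norm_omega_inv * math.sinh(norm_omega * T)
    + tau * lam
)

# Assumed bound behind Theorem 3.3 (solution formula (7)).
theta = max(math.cosh(norm_omega * tau), math.cosh(norm_omega * (T - tau)))
rho = delta * (
    theta
    + norm_omega_inv * math.sinh(norm_omega * T)
    + norm_omega * tau * math.sinh(norm_omega * T)
)

print(f"alpha = {alpha:.4f}, beta = {beta:.4f}, rho = {rho:.4f}")
```

The gap between α and the other two values comes mainly from the factor \(\sinh[\|\Omega\|(T+\tau)]\): formula (2) evaluates the delayed sine at arguments up to T, while formulas (6) and (7) only need it at arguments up to \(T-\tau\).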

5 Extension to delay system with nonlinear term

In this section, we consider the following delay differential equations with nonlinear term:

where \(f\in C(\mathbb {R}^{n},\mathbb {R}^{n})\).

Definition 5.1

see [39], Definition 2

The system (26) satisfying the initial conditions \(x(t)\equiv \varphi(t)\) and \(\dot{x}(t)\equiv\dot{\varphi}(t)\) for \(-\tau\leq t\leq 0\) is finite time stable with respect to \(\{0,J,\delta,\epsilon,\tau\} \), if and only if

implies

where \(\gamma=\max\{\|\varphi\|_{C},\|\dot{\varphi}\|_{C},\|\ddot {\varphi}\|_{C}\}\), and 0 denotes the initial time of observation of the system. In addition, δ and ϵ are positive real numbers.

The following Gronwall inequality will be used to derive the finite time stability for our problem.

Lemma 5.2

see [40], p.12

Let \(u(t)\), \(k(t,s)\) and its partial derivative \(k_{t}(t,s)\) be nonnegative continuous functions for \(t_{0}< s< t\), and suppose

where \(a\geq0\) is a constant. Then

Now we are ready to state our main result in the section.

Theorem 5.3

Suppose that \(f\in C(\mathbb {R}^{n},\mathbb {R}^{n})\) and there exists \(P>0\) such that \(\| f(x)\|\leq P\|x\|\) for all \(x\in \mathbb {R}^{n}\). The system (26) is finite time stable with respect to \(\{ 0,J,\delta,\epsilon,\tau\}\) provided that

where

Proof

By [2], Theorem 2, equation (14), the solution of (26) has the form

where the matrix Ω is nonsingular. Taking the norm for (30), we obtain

Next, according to Lemmas 2.5, 2.6 and (15), we obtain

Note that \(\|f(x)\|\leq P\|x\|\) for all \(x\in \mathbb {R}^{n}\), so the inequality (32) becomes

where a is defined in (29) and \(k(t,s)=P \Vert \Omega^{-1} \Vert \sinh [ \Vert \Omega \Vert (t-s) ]\).

Calculating the partial derivative \(k_{t}(t,s)\) via (9), we obtain

Note that \(\Vert x(t) \Vert \), \(k(t,s)\) and its partial derivative \(k_{t}(t,s)\) are all nonnegative continuous functions and a is a constant. Thus, the conditions in Lemma 5.2 are satisfied, then by Lemma 5.2 we get

Next,

Substituting (35) into (34) and using (28), we obtain

which implies that \(\Vert x(t) \Vert <\epsilon, t\in J\). The proof is finished. □
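The Gronwall step can be illustrated numerically for the kernel \(k(t,s)=P\Vert\Omega^{-1}\Vert\sinh[\Vert\Omega\Vert(t-s)]\) used in the proof. The statement of Lemma 5.2 is not displayed in this text, so the sketch assumes the standard conclusion \(u(t)\leq a\exp (\int_{t_{0}}^{t} [k(s,s)+\int_{t_{0}}^{s}k_{s}(s,r)\,dr ]\,ds )\), which for this kernel and \(t_{0}=0\) reduces to \(a\exp (P\Vert\Omega^{-1}\Vert(\cosh(\Vert\Omega\Vert t)-1)/\Vert\Omega\Vert )\). The values of a and P are hypothetical, and the norms are borrowed from Example 4.1. The bound is compared with the equality case of the integral inequality, which has a closed form.

```python
import math

a, P = 1.0, 0.5                    # hypothetical constant and growth rate
w, norm_omega_inv = 3.0, 0.75      # ||Omega|| and ||Omega^{-1}|| from Example 4.1
c = P * norm_omega_inv             # kernel k(t, s) = c * sinh(w * (t - s))


def extremal_u(t: float) -> float:
    """Solution of u(t) = a + int_0^t c*sinh(w*(t-s))*u(s) ds (equality case).

    Differentiating twice gives u'' = (w^2 + c*w)*u - w^2*a with u(0) = a and
    u'(0) = 0, hence u = a*(w^2 + c*w*cosh(mu*t))/mu^2 with mu^2 = w^2 + c*w.
    """
    mu2 = w * w + c * w
    return a * (w * w + c * w * math.cosh(math.sqrt(mu2) * t)) / mu2


def gronwall_bound(t: float) -> float:
    """Assumed Lemma 5.2 estimate; here k(s, s) = 0 and
    int_0^s k_s(s, r) dr = c * sinh(w * s)."""
    return a * math.exp(c * (math.cosh(w * t) - 1.0) / w)


grid = [0.01 * i for i in range(101)]  # t in [0, 1]
bound_holds = all(extremal_u(t) <= gronwall_bound(t) + 1e-12 for t in grid)
```

Since any u satisfying the integral inequality is dominated by the equality case, checking the equality case against the exponential bound covers the worst case for this kernel.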

References

Khusainov, DY, Shuklin, GV: Linear autonomous time-delay system with permutation matrices solving. Stud. Univ. Žilina Math. Ser. 17, 101-108 (2003)

Khusainov, DY, Diblík, J, Růžičková, M, Lukáčová, J: Representation of a solution of the Cauchy problem for an oscillating system with pure delay. Nonlinear Oscil. 11, 261-270 (2008)

Khusainov, DY, Shuklin, GV: Relative controllability in systems with pure delay. Int. J. Appl. Math. 2, 210-221 (2005)

Medveď, M, Pospišil, M, Škripková, L: Stability and the nonexistence of blowing-up solutions of nonlinear delay systems with linear parts defined by permutable matrices. Nonlinear Anal. 74, 3903-3911 (2011)

Medveď, M, Pospišil, M: Sufficient conditions for the asymptotic stability of nonlinear multidelay differential equations with linear parts defined by pairwise permutable matrices. Nonlinear Anal. 75, 3348-3363 (2012)

Diblík, J, Fečkan, M, Pospišil, M: Representation of a solution of the Cauchy problem for an oscillating system with two delays and permutable matrices. Ukr. Math. J. 65, 58-69 (2013)

Diblík, J, Khusainov, DY, Baštinec, J, Sirenko, AS: Exponential stability of linear discrete systems with constant coefficients and single delay. Appl. Math. Lett. 51, 68-73 (2016)

Diblík, J, Khusainov, DY: Representation of solutions of linear discrete systems with constant coefficients and pure delay. Adv. Differ. Equ. 2006, 080825 (2006)

Diblík, J, Khusainov, DY: Representation of solutions of discrete delayed system \(x(k+1)=Ax(k)+Bx(k-m)+f(k)\) with commutative matrices. J. Math. Anal. Appl. 318, 63-76 (2006)

Diblík, J, Khusainov, DY, Růžičková, M: Controllability of linear discrete systems with constant coefficients and pure delay. SIAM J. Control Optim. 47, 1140-1149 (2008)

Diblík, J, Fečkan, M, Pospišil, M: On the new control functions for linear discrete delay systems. SIAM J. Control Optim. 52, 1745-1760 (2014)

Diblík, J, Morávková, B: Discrete matrix delayed exponential for two delays and its property. Adv. Differ. Equ. 2013, 139 (2013)

Diblík, J, Morávková, B: Representation of the solutions of linear discrete systems with constant coefficients and two delays. Abstr. Appl. Anal. 2014, Article ID 320476 (2014)

Boichuk, A, Diblík, J, Khusainov, D, Růžičková, M: Fredholm’s boundary-value problems for differential systems with a single delay. Nonlinear Anal. 72, 2251-2258 (2010)

Pospišil, M: Representation and stability of solutions of systems of functional differential equations with multiple delays. Electron. J. Qual. Theory Differ. Equ. 2012, 54 (2012)

Luo, Z, Wang, J: Finite time stability analysis of systems based on delayed exponential matrix. J. Appl. Math. Comput. (2016). doi:10.1007/s12190-016-1039-2

Li, M, Wang, J: Finite time stability of fractional delay differential equations. Appl. Math. Lett. 64, 170-176 (2017)

You, Z, Wang, J: On the exponential stability of nonlinear delay systems with impulses. IMA J. Math. Control Inf. 1-31 (2017). doi:10.1093/imamci/dnw077

Liang, C, Wang, J: Analysis of iterative learning control for an oscillating control system with two delays. Trans. Inst. Meas. Control (2017). doi:10.1177/0142331217690581

Pospíšil, M, Diblík, J, Fečkan, M: On the controllability of delayed difference equations with multiple control functions. AIP Conf. Proc. 1648, 58-69 (2015)

Pospíšil, M: Representation of solutions of delayed difference equations with linear parts given by pairwise permutable matrices via Z-transform. Appl. Math. Comput. 294, 180-194 (2017)

Dorato, P: Short time stability in linear time-varying systems. In: Proc. IRE Int. Convention Record, Part 4, pp. 83-87 (1961)

Amato, F, Ariola, M, Cosentino, C: Robust finite-time stabilisation of uncertain linear systems. Int. J. Control 84, 2117-2127 (2011)

Lazarević, MP, Spasić, AM: Finite-time stability analysis of fractional order time-delay system: Gronwall’s approach. Math. Comput. Model. 49, 475-481 (2009)

Yang, X, Cao, J: Finite-time stochastic synchronization of complex networks. Appl. Math. Model. 34, 3631-3641 (2010)

Wang, Q, Lu, DC, Fang, YY: Stability analysis of impulsive fractional differential systems with delay. Appl. Math. Lett. 40, 1-6 (2015)

Zhu, Q, Cao, J, Rakkiyappan, R: Exponential input-to-state stability of stochastic Cohen-Grossberg neural networks with mixed delays. Nonlinear Dyn. 79, 1085-1098 (2015)

Wu, R, Lu, Y, Chen, L: Finite-time stability of fractional delayed neural networks. Neurocomputing 149, 700-707 (2015)

Wang, L, Shen, Y, Ding, Z: Finite time stabilization of delayed neural networks. Neural Netw. 70, 74-80 (2015)

Phat, VN, Muoi, NH, Bulatov, MV: Robust finite-time stability of linear differential-algebraic delay equations. Linear Algebra Appl. 487, 146-157 (2015)

Wang, J, Fečkan, M, Zhou, Y: Ulam’s type stability of impulsive ordinary differential equations. J. Math. Anal. Appl. 395, 258-264 (2012)

Wang, J, Zhou, Y, Fečkan, M: Nonlinear impulsive problems for fractional differential equations and Ulam stability. Comput. Appl. Math. 64, 3389-3405 (2012)

Wang, J, Li, X, Fečkan, M, Zhou, Y: Hermite-Hadamard type inequalities for Riemann-Liouville fractional integrals via two kinds of convexity. Appl. Anal. 92, 2241-2253 (2013)

Wang, J, Fečkan, M: A general class of impulsive evolution equations. Topol. Methods Nonlinear Anal. 46, 915-934 (2015)

Wang, J, Fečkan, M, Zhou, Y: A survey on impulsive fractional differential equations. Fract. Calc. Appl. Anal. 19, 806-831 (2016)

Wang, J, Fečkan, M, Zhou, Y: Center stable manifold for planar fractional damped equations. Appl. Math. Comput. 296, 257-269 (2017)

Wang, J, Fečkan, M, Tian, Y: Stability analysis for a general class of non-instantaneous impulsive differential equations. Mediterr. J. Math. 14, 1-21 (2017)

Gantmakher, FR: Theory of Matrices. Nauka, Moscow (1959) (in Russian)

Lazarević, MP, Debeljković, D, Nenadić, Z: Finite-time stability of delayed systems. IMA J. Math. Control Inf. 17, 101-109 (2000)

Bainov, DD, Simeonov, PS: Integral Inequalities and Applications. Springer, Berlin (1992)

Acknowledgements

The authors are grateful to the referees for their careful reading of the manuscript and valuable comments. The authors also are grateful for the help from the editor.

This work is partially supported by NNSFC (No. 11661016; 11261011) and Training Object of High Level and Innovative Talents of Guizhou Province ((2016)4006), Unite Foundation of Guizhou Province ([2015]7640), and Graduate ZDKC ([2015]003).

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Liang, C., Wei, W. & Wang, J. Stability of delay differential equations via delayed matrix sine and cosine of polynomial degrees. Adv Differ Equ 2017, 131 (2017). https://doi.org/10.1186/s13662-017-1188-0

DOI: https://doi.org/10.1186/s13662-017-1188-0