Abstract

This paper proposes a robust iterative learning control (ILC) design for uncertain linear systems with time-varying delays and random packet dropouts. The packet dropout is modeled by an arbitrary stochastic sequence satisfying the Bernoulli binary distribution, which makes the ILC system stochastic rather than deterministic. The main idea is to transform the ILC design into a robust stability problem for a two-dimensional (2D) stochastic system described by the Roesser model with a delay varying in a range. A delay-dependent stability condition, which guarantees mean-square asymptotic stability of such a 2D stochastic system, is derived in terms of linear matrix inequalities (LMIs), and formulas are given for the ILC law design. An injection molding example is given to demonstrate the effectiveness of the proposed ILC method.

1 Introduction

Iterative learning control (ILC) is an effective technique for systems that perform the same task repetitively over a finite time interval. It updates the control input signal using only the input/output data of previous iterations, so that the tracking performance improves from one iteration to the next. Due to its simplicity and effectiveness, ILC has been widely applied in many practical systems such as robotics, chemical batch processes, hard disk drives and urban traffic systems [1–8].

Time delay, which is a source of instability and poor performance, often appears in practical systems due to the finite speed of signal transmission and information processing. As a result, studies on batch process control have attracted considerable attention [9–13]. Recently, ILC has been introduced to systems with time delays to improve the tracking performance. In [14], a new ILC method is proposed for a class of linear systems with time delay using a holding mechanism. In [15], an ILC algorithm integrated with a Smith predictor for batch processes with fixed time delay is proposed and analyzed in the frequency domain; it can obtain perfect tracking performance under certain conditions. In [16], an ILC scheme is proposed for systems with time delay and model uncertainties based on the internal model control principle. In [17], a two-dimensional (2D) model-based ILC method for systems with both state delays and input delays is presented; necessary and sufficient conditions for the stability of ILC are also provided. In [18], the problem of ILC design for time-delay systems in the presence of initial shifts is considered, and 2D system theory is employed to develop a convergence condition for both asymptotic stability and monotonic convergence of ILC.

In these existing studies [14–18], the time delays considered are known, fixed constants. In practice, however, many systems suffer from time-varying delays, and a design that allows the delay to vary is less conservative than one that assumes a constant delay. Designing ILC for systems with time-varying delays is a challenge, and only a few results on this issue are available. In [19], a robust state feedback scheme integrated with ILC is proposed for batch processes with interval time-varying delay. The design is considered using a 2D Roesser model based on the general 2D system theory [20]. In [21], a robust closed-loop ILC scheme is proposed for batch processes with state delay and time-varying uncertainties, and the batch processes are described as a 2D Fornasini-Marchesini (FM) model. In [22], a robust output feedback scheme incorporated with ILC is proposed for a kind of batch process with uncertainties and interval time-varying delay. The batch process is transformed into a 2D FM model with a delay varying in a range, and the design is cast as a robust H∞ control problem for uncertain 2D systems. Note that almost all available results on ILC systems with time delays rest on the implicit assumption that sensor output measurement is perfect. However, this assumption often fails in practice, mainly because sensors may suffer from probabilistic signal loss, especially in a networked environment [23–25].

The problem of ILC for networked control systems (NCSs) with packet dropouts has already received some attention. The stability of ILC for linear and nonlinear systems with intermittent measurement is investigated in [26, 27]. Some robust ILC designs are proposed for NCSs to suppress the effect of data dropouts in [28–33]. However, to the best of our knowledge, no work has so far considered ILC systems with data dropouts and time delay simultaneously.

This paper proposes a robust ILC design scheme for uncertain linear systems with time-varying delays and random packet dropouts. The considered systems are implemented in a network environment, where data packets may be lost during transmission. For convenience, only the measurement packet dropout is taken into account. The packet dropout is modeled by an arbitrary stochastic sequence satisfying the Bernoulli binary distribution, which makes the ILC system stochastic rather than deterministic. Then, a 2D stochastic Roesser model with a delay varying in a range is established to describe the entire dynamics. Based on 2D system theory, the ILC law is designed to guarantee mean-square asymptotic stability of the considered 2D stochastic system. Afterwards, a delay-dependent stability condition is derived in terms of linear matrix inequalities (LMIs), and formulas are given for the ILC law design. Finally, an injection molding example is given to demonstrate the effectiveness of the proposed ILC method.

Throughout the present paper, the following notations are used. The superscript ‘T’ denotes the matrix transposition, I denotes the identity matrix, 0 denotes the zero vector or matrix with the required dimensions. \(\operatorname{diag} \{ \bullet \}\) denotes the standard (block) diagonal matrix whose off-diagonal elements are zero. In symmetric block matrices, an asterisk ∗ is used to denote the term that is induced by symmetry. The notation \(\Vert \bullet \Vert \) refers to the Euclidean vector norm, \(E \{ x \},E \{ x|y \}\) mean the expectation of x and the expectation of x conditional on y, respectively. Matrices, if the dimensions are not explicitly stated, are assumed to be compatible for algebraic operations.

2 Problem formulation and 2D system representation

Consider the following linear discrete time system with time-varying delay:

where k denotes iteration, t denotes discrete time. \(x(t,k),u(t,k),y(t,k)\) are state, input and output variables, \(A,A_{d},B,C\) are the matrices with appropriate dimensions describing the system in the state space. \(x_{0k}\) stands for the initial condition of the process in the kth iteration. \(d(t)\) is time-varying delay satisfying

where \(d_{m},d_{M}\) denote the lower and upper delay bounds. \(\Delta A,\Delta A_{d}\) denote admissible uncertain perturbations of matrices A and \(A_{d}\), which can be represented as

where \(E,F_{1},F_{2}\) are known real constant matrices characterizing the structures of uncertain perturbations, and Σ is an uncertain perturbation of the system that satisfies \(\Sigma^{T}\Sigma \le I\).
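For orientation, a model consistent with the symbols defined above can be written as follows. This is a sketch assembled from those definitions (state delay acting through \(A_{d}\), norm-bounded uncertainty sharing the factor \(E\)), not a verbatim restatement of system (1); the exact form of the initial condition over the delay window is not specified here.

```latex
\begin{aligned}
x(t+1,k) &= (A + \Delta A)\,x(t,k) + (A_{d} + \Delta A_{d})\,x\bigl(t - d(t),k\bigr) + B\,u(t,k),\\
y(t,k)   &= C\,x(t,k),\\
d_{m}    &\le d(t) \le d_{M},\\
[\,\Delta A \quad \Delta A_{d}\,] &= E\,\Sigma\,[\,F_{1} \quad F_{2}\,], \qquad \Sigma^{T}\Sigma \le I .
\end{aligned}
```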

For system (1), design the following ILC update law:

where \(\Delta u(t,k)\) is the ILC update law to be designed.

Denote \(e(t,k) = y_{d}(t) - y(t,k)\), \(\eta (t,k) = x(t - 1,k + 1) - x(t - 1,k)\). From (1) and (2), we can obtain

where

\(h(k)\) is a new delay variable satisfying \(h_{m} \le h(k) \le h_{M}\) with \(h_{m},h_{M}\) denoting the lower and upper delay bounds. The new vector \(e(t,k - h(k))\) is introduced here as is also done in [14] so that system (1) can be modeled as a normal 2D system with interval time delay.

Consider the following ILC update laws:

where \(K = [K_{1},K_{2}]\) is a gain matrix to be designed. ILC law (4) contains two parts: a P-type ILC law and a state feedback control law. This control scheme has the advantages of feedback loop such as robustness and meanwhile enjoys the extra performance improvement from ILC.

It is assumed that the ILC law is implemented in a networked control system as shown in Figure 1, where the network only exists on the side from plant to controller for convenience. The data \(x(t,k + 1),x(t,k),e(t,k)\) are transferred as one whole packet from the plant to the controller. It is further supposed that the controller can detect whether the packet is dropped or not. If the data packet is missed, then \(\Delta u(t,k) = 0\), that is, \(u(t,k + 1) = u(t,k)\). If the data packet is not missed, the term \(\Delta u(t,k)\) is calculated as (4). In this case, the ILC update law (4) can be described as

where the stochastic parameter \(\alpha (t,k)\) is a random Bernoulli variable taking the values of 0 and 1 with

in which α satisfying \(0 \le \alpha \le 1\) is a known constant.
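The gating effect of the Bernoulli variable can be illustrated numerically. The following is a minimal sketch, assuming that \(\alpha (t,k) = 1\) marks a received packet with probability α and that the update takes the form \(u(t,k + 1) = u(t,k) + \alpha (t,k)\Delta u(t,k)\); the function name `gated_update`, the value 0.75 and the sample size are illustrative choices only.

```python
import numpy as np

def gated_update(u_prev, delta_u, alpha_mask):
    """Apply u(t,k+1) = u(t,k) + alpha(t,k) * delta_u(t,k) elementwise in t.

    alpha_mask holds 1.0 where the packet arrived and 0.0 where it was dropped.
    """
    return u_prev + alpha_mask * delta_u

rng = np.random.default_rng(0)
alpha_bar = 0.75          # assumed probability that a packet is received
num_samples = 200_000     # illustrative sample size

# Bernoulli sequence: 1 with probability alpha_bar, 0 otherwise.
alpha_mask = (rng.random(num_samples) < alpha_bar).astype(float)

u_prev = np.zeros(num_samples)
delta_u = np.ones(num_samples)   # placeholder correction term
u_next = gated_update(u_prev, delta_u, alpha_mask)

# Centered variable alpha(t,k) - alpha_bar: zero mean, variance alpha_bar*(1-alpha_bar).
centered = alpha_mask - alpha_bar
sample_mean = centered.mean()
sample_second_moment = (centered ** 2).mean()
```

Whenever a packet is dropped the input is simply held, so the sample mean of the centered variable should be close to zero and its second moment close to \(\alpha (1 - \alpha )\).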

From (3) and (4), it can be derived

Denoting \(\eta (t,k) = x^{h}(i,j)\) and \(e(t,k) = x^{v}(i,j)\), system (7) can be rewritten as the following typical 2D Roesser system:

where the boundary conditions are defined by

where \(r_{1} < \infty\) and \(r_{2} < \infty\) are positive integers, \(\rho_{ij}\) and \(\sigma_{ij}\) are given vectors.
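The way a Roesser recursion propagates a horizontal state \(x^{h}\) and a vertical state \(x^{v}\) from finite-support boundary data can be sketched in a few lines. The example below is deliberately simplified: it is deterministic and delay-free, so it does not reproduce system (8), and the block names `A11`, `A12`, `A21`, `A22` and the function `simulate_roesser` are hypothetical.

```python
import numpy as np

def simulate_roesser(A11, A12, A21, A22, xh_boundary, xv_boundary):
    """Simulate a delay-free, deterministic 2D Roesser model.

    xh(i+1, j) = A11 xh(i, j) + A12 xv(i, j)
    xv(i, j+1) = A21 xh(i, j) + A22 xv(i, j)

    xh_boundary[j] = xh(0, j) and xv_boundary[i] = xv(i, 0).
    """
    num_j, num_i = len(xh_boundary), len(xv_boundary)
    nh, nv = A11.shape[0], A22.shape[0]
    xh = np.zeros((num_i + 1, num_j, nh))
    xv = np.zeros((num_i, num_j + 1, nv))
    xh[0, :, :] = xh_boundary
    xv[:, 0, :] = xv_boundary
    for i in range(num_i):
        for j in range(num_j):
            xh[i + 1, j] = A11 @ xh[i, j] + A12 @ xv[i, j]
            xv[i, j + 1] = A21 @ xh[i, j] + A22 @ xv[i, j]
    return xh, xv

# Scalar blocks with small gains; boundary data vanish after three samples.
gain = np.array([[0.3]])
size = 20
xh0 = np.zeros((size, 1)); xh0[:3] = 1.0
xv0 = np.zeros((size, 1)); xv0[:3] = 1.0
xh, xv = simulate_roesser(gain, gain, gain, gain, xh0, xv0)
far_value = abs(xh[size, size - 1, 0])
```

With these contractive blocks and boundary values that are zero beyond a finite index, the state far from the boundary becomes negligibly small, which is the deterministic analogue of the stability notion used for the stochastic system.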

Remark 1

It is worth pointing out that 2D system (8) is a stochastic system due to the introduction of the stochastic variable \(\alpha (i,j)\). It differs from the deterministic 2D or ILC systems with time delay in recent works such as [17–22]. Here, the ILC design should be discussed under the framework of stochastic stability. To this end, we need to give the following definition of stochastic stability for 2D systems.

Definition 1

[32]

For all initial boundary conditions in (9), 2D system (8) is said to be mean-square asymptotically stable if the following is satisfied:
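Mean-square asymptotic stability of a 2D system is commonly expressed as the vanishing of the second moment of the full state as \(i + j\) grows. The condition below is written under that assumption, with \(x(i,j)\) taken to stack \(x^{h}(i,j)\) and \(x^{v}(i,j)\); it is a standard form and may differ in notation from the definition in [32].

```latex
\lim_{i + j \to \infty} E \bigl\{ \Vert x(i,j) \Vert^{2} \bigr\} = 0,
\qquad
x(i,j) = \begin{bmatrix} x^{h}(i,j) \\ x^{v}(i,j) \end{bmatrix}.
```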

3 Stability analysis and controller design

3.1 Stability analysis

In this section, we focus on the problem of robust stability and robust stabilization for 2D stochastic system (8) using an LMI technique. The following lemma is needed in the proof of our main result.

Lemma 1

[34]

For any vector \(\delta (t) \in \mathbb{R}^{n}\), two positive integers \(\kappa_{0},\kappa_{1}\) and matrix \(0 < R \in \mathbb{R}^{n \times n}\), the following inequality holds:
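Summation bounds of this kind are usually of Jensen type. As a sanity check, the sketch below evaluates the standard discrete Jensen inequality \((\kappa_{1} - \kappa_{0} + 1)\sum_{t = \kappa_{0}}^{\kappa_{1}} \delta^{T}(t)R\delta (t) \ge (\sum_{t = \kappa_{0}}^{\kappa_{1}} \delta (t) )^{T}R (\sum_{t = \kappa_{0}}^{\kappa_{1}} \delta (t) )\) on random data. Treating this as the form of Lemma 1 is an assumption, and the helper name `jensen_gap` is hypothetical.

```python
import numpy as np

def jensen_gap(deltas, R):
    """Return N * sum_t d_t^T R d_t - (sum_t d_t)^T R (sum_t d_t).

    deltas has shape (N, n), one row per time index; R is symmetric positive
    definite. The returned gap is nonnegative by the discrete Jensen inequality.
    """
    num_terms = deltas.shape[0]
    weighted_sum = num_terms * np.einsum("ti,ij,tj->", deltas, R, deltas)
    total = deltas.sum(axis=0)
    return weighted_sum - total @ R @ total

rng = np.random.default_rng(1)
n = 3
M = rng.standard_normal((n, n))
R = M @ M.T + np.eye(n)                     # symmetric positive definite

random_gap = jensen_gap(rng.standard_normal((7, n)), R)
constant_gap = jensen_gap(np.tile(rng.standard_normal(n), (7, 1)), R)
```

The gap is strictly positive for generic sequences and vanishes when \(\delta (t)\) is constant over the summation window, which is the case where the bound is tight.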

Now, we can give our main result.

Theorem 1

Given positive integers \(d_{m},d_{M},h_{m},h_{M}\), 2D system (8) is mean-square asymptotically stable if there exist positive definite symmetric matrices \(P = \bigl [{\scriptsize\begin{matrix}{}P^{h} & \cr & P^{v} \end{matrix}} \bigr ]\), \(Q = \bigl [{\scriptsize\begin{matrix}{} Q^{h} & \cr & Q^{v} \end{matrix}} \bigr ]\), \(M = \bigl [{\scriptsize\begin{matrix}{} M^{h} & \cr & M^{v} \end{matrix}} \bigr ]\), and \(G = \bigl [{\scriptsize\begin{matrix}{} G^{h} & \cr & G^{v} \end{matrix}} \bigr ]\) such that the following matrix inequality holds:

where

Proof

Define \(\tilde{\alpha}_{i,j} = \alpha (i,j) - \alpha\). It is obvious that

Denote

Consider the following Lyapunov function candidate:

where

and \(P^{h},P^{v},Q^{h},Q^{v},M^{h},M^{v},G^{h},G^{v}\) are positive definite matrices to be determined.

Define the following index:

Calculating (12) along the solutions of system (8), we can obtain

and

For (17) and (22), using Lemma 1 yields

and

Since \(\tilde{\alpha}_{i,j}\) is independent of \(x(i,j)\) and \(x_{d}(i,j)\), we have

From (17)-(24) and the result in (25), we can obtain

where

and

By using Schur complements, it is obvious that (10) is equivalent to (26). Since \(\psi < 0\), it is obvious that

Taking mathematical expectation on both sides of (27) leads to

Summing up both sides of (28) from N to 0 with respect to i and 0 to N with respect to j, for any nonnegative integer N and considering boundary conditions (9), we have

It is clear that the sum of the Lyapunov functional value decreases along the state trajectories. Then, from [15], we can conclude that

which implies that system (8) is mean-square asymptotically stable.

This completes the proof of Theorem 1. □

Remark 2

Theorem 1 provides a sufficient stability condition for 2D uncertain systems with a delay varying in a range and random packet dropouts. If the communication link between the plant and the controller is perfect, that is, no packet is dropped during transmission, then \(\alpha = 1\) and \(\theta = 0\). In this case, the condition in Theorem 1 reduces to the condition obtained in [19, 20] for a 2D deterministic system with time delay. From this point of view, Theorem 1 can be seen as an extension to 2D time-delay systems with packet dropout.

3.2 Controller design

Theorem 1 gives a mean-square asymptotic stability condition for the case where the controller gain matrix K is known. However, our eventual purpose is to determine a suitable K from the system matrices \(A,A_{d},B,E,F_{1},F_{2}\) and the parameter α.

Lemma 2

[35]

Let \(U,V,\Sigma\) and W be real matrices of appropriate dimensions with W satisfying \(W = W^{T}\), then for all \(\Sigma^{T}\Sigma \le I\),

if and only if there exists \(\varepsilon > 0\) such that
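Lemmas of this type rest on the completion-of-squares bound \(U\Sigma V + V^{T}\Sigma^{T}U^{T} \le \varepsilon UU^{T} + \varepsilon^{ - 1}V^{T}V\), valid for every \(\varepsilon > 0\) and every \(\Sigma\) with \(\Sigma^{T}\Sigma \le I\). The sketch below checks this bound numerically on random data. It illustrates only the bounding step, under the assumption that Lemma 2 has this standard form; the helper name `bound_margin` is hypothetical.

```python
import numpy as np

def bound_margin(U, V, Sigma, eps):
    """Smallest eigenvalue of eps*U U^T + V^T V / eps - (U Sigma V + V^T Sigma^T U^T).

    A nonnegative value confirms the completion-of-squares bound for this sample.
    """
    cross = U @ Sigma @ V
    gap = eps * (U @ U.T) + (V.T @ V) / eps - (cross + cross.T)
    return np.linalg.eigvalsh(gap).min()

rng = np.random.default_rng(2)
n, p, q = 4, 2, 3
margins = []
for _ in range(50):
    U = rng.standard_normal((n, p))
    V = rng.standard_normal((q, n))
    raw = rng.standard_normal((p, q))
    # Scale so that the spectral norm is at most one, i.e. Sigma^T Sigma <= I.
    Sigma = raw / max(1.0, np.linalg.norm(raw, 2))
    eps = rng.uniform(0.1, 10.0)
    margins.append(bound_margin(U, V, Sigma, eps))
worst_margin = min(margins)
```

Because the bound holds for any positive ε, the scalar becomes a free decision variable in the design condition and can be chosen to make the resulting inequality as easy to satisfy as possible.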

Now, we can give the following result.

Theorem 2

Given positive integers \(d_{m},d_{M},h_{m},h_{M}\), 2D system (8) is mean-square asymptotically stable if there exist positive definite symmetric matrices \(L = \bigl [{\scriptsize\begin{matrix}{} L^{h} & \cr & L^{v} \end{matrix}} \bigr ]\), \(S = \bigl [{\scriptsize\begin{matrix}{} S^{h} & \cr & S^{v} \end{matrix}} \bigr ]\), \(M_{1} = \bigl [{\scriptsize\begin{matrix}{} M_{1}^{h} & \cr & M_{1}^{v} \end{matrix}} \bigr ]\), \(M_{2} = \bigl [{\scriptsize\begin{matrix}{} M_{2}^{h} & \cr & M_{2}^{v} \end{matrix}} \bigr ]\), \(X = \bigl [{\scriptsize\begin{matrix}{} X^{h} & \cr & X^{v} \end{matrix}} \bigr ]\), a matrix Y and a positive scalar ε such that the following matrix inequality holds:

where

In this case, a suitable ILC law can be selected as \(K = YL^{ - 1}\).

Proof

Pre- and post-multiplying inequality (10) by \(\operatorname{diag} \{ L,L,G^{ - 1},L,L,G^{ - 1},G^{ - 1} \}\), using Schur complements and letting \(L = P^{ - 1}\), the following inequality can be obtained:

that is,

By Lemma 2, (31) holds if and only if there exists a scalar \(\varepsilon > 0\) such that

Using Schur complements, condition (32) implies that the condition in Theorem 2 holds. □

Remark 3

Note that the condition in Theorem 2 is no longer an LMI owing to the term \(LX^{ - 1}L\) in \(\tilde{\psi}_{1}\). The available LMI tools cannot be used directly to obtain a feasible solution. However, an iterative algorithm can be combined with LMI convex optimization problems, as is done in [36, 37], to obtain a feasible control law.

Remark 4

It is noted that data dropouts may occur on both the system output and the control input sides in NCSs. In this paper, we only consider output measurement missing for the sake of convenience, as is also done in most existing works. However, the result in this paper can be extended to control input signal dropouts.

Remark 5

Since the system performs the same task repetitively, computational complexity is an important issue for ILC systems. A large number of iterations leads to high accuracy but also to a heavy computational burden. How to balance the number of iterations against tracking accuracy is an important problem for practical ILC systems. Some efforts can be made to address this issue. Firstly, we can specify in advance an acceptable tracking error that satisfies the accuracy requirement of the control objective. Once the tracking error reaches the given value, the iteration process is stopped. Secondly, we can design an optimal ILC law with monotonic and fast convergence such that the learning transient is shortened.
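The first suggestion, stopping once a pre-specified error level is reached, can be sketched as a simple loop. The example below is illustrative only: it uses a scalar static plant \(y = gu\) with a P-type correction rather than the delayed system of this paper, and the names `run_ilc`, `plant_gain`, `learning_gain` and the numerical values are assumptions.

```python
def run_ilc(update, error_norm, u0, tol, max_iter):
    """Iterate an ILC update until the tracking error is within tol.

    update(u) returns the input for the next iteration; error_norm(u) returns
    the size of the tracking error produced by input u.
    """
    u = u0
    history = []
    for _ in range(max_iter):
        err = error_norm(u)
        history.append(err)
        if err <= tol:          # accuracy requirement met: stop learning
            break
        u = update(u)
    return u, history

plant_gain = 2.0        # hypothetical static plant y = plant_gain * u
learning_gain = 0.4     # hypothetical P-type learning gain
target = 1.0

def error_norm(u):
    return abs(target - plant_gain * u)

def update(u):
    return u + learning_gain * (target - plant_gain * u)

u_final, history = run_ilc(update, error_norm, u0=0.0, tol=1e-3, max_iter=100)
```

In this toy case the error contracts by the factor \(|1 - 0.4 \times 2| = 0.2\) per iteration, so the loop ends well before `max_iter`; a tighter tolerance buys accuracy at the price of more iterations.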

4 Illustrative example

In this section, the result is applied to an injection molding process to demonstrate the effectiveness of the proposed ILC design approach. The injection molding process is a typical repetitive process, where key process variables are controlled to follow certain profiles repetitively to ensure the product quality [22, 38]. Injection velocity is a key variable in the filling stage, which is controlled by manipulating the opening of a hydraulic valve. A state-space representation of injection molding velocity control can be described as follows:

where matrices \(A = [{\scriptsize\begin{matrix}{}1.58 & - 0.59 \cr 1 & 0 \end{matrix}} ]\), \(B = [{\scriptsize\begin{matrix}{}1 \cr 0 \end{matrix}} ]\), \(C = [ 1.69 \quad {-}1.42 ]\). \(\Delta A = E\Sigma F_{1},\Delta A_{d} = E\Sigma F_{2}\) denote the uncertain parameter perturbations with

where \(\xi_{1,2}\) are unknown variables. The ranges of the time-varying delays are chosen as \(0.5 \le d(t) \le 4\) and \(0.1 \le h(k) \le 2\), which are taken from [22].
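The nominal matrices quoted above can be entered directly for numerical experiments. The sketch below only inspects the nominal, delay-free part of the model: it ignores \(A_{d}\), \(E\), \(F_{1}\), \(F_{2}\) and the delay, so it says nothing about the robust stability result itself; the variable names are illustrative.

```python
import numpy as np

# Nominal matrices of the injection velocity model as given in the text.
A = np.array([[1.58, -0.59],
              [1.00,  0.00]])
B = np.array([[1.0],
              [0.0]])
C = np.array([[1.69, -1.42]])

# Spectral radius of the nominal state matrix (delay and uncertainty ignored).
spectral_radius = np.max(np.abs(np.linalg.eigvals(A)))

# First Markov parameter CB; a nonzero value means the input affects the
# output after one time step.
first_markov = (C @ B).item()
```

Both eigenvalues of the nominal \(A\) lie inside the unit circle, and \(CB = 1.69\) is nonzero, which is the usual prerequisite for a P-type learning term acting on the one-step-ahead error.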

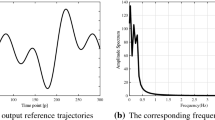

The desired trajectory is given as follows:

In simulation, the initial states are given as \(x_{1}(0,k) = x_{2}(0,k) = 0\) for all k, and the control input is selected as \(u(t,0) = 0\) for all t. The time-varying delay \(d(t)\) changes randomly with \(d(t) \in [0.5\ 4]\). The uncertain parameters \(\xi_{1,2}\) are assumed to vary randomly within \([0\ 1]\) along with both time and iteration direction.

To perform the simulation, we consider the following two cases: (1) \(\bar{\alpha} = 1\), (2) \(\bar{\alpha} = 0.75\). Case 1 means that there is no packet dropout, and Case 2 means that the dropout rate is 25 percent. Using Theorem 2, we obtain the controller \(K = [- 1.95 \ 0.63 \ 0.06]\) for Case 1 and \(K = [- 2.05 \ 0.73 \ 0.08]\) for Case 2. Figure 2 shows the tracking error in the iteration domain for Case 1. Without packet dropouts, the tracking error converges to a small steady-state value, where the residual error is caused by unpredictable random parameter disturbances. To show the convergence more clearly, the system outputs at the 1st, 10th and 50th iterations are plotted in Figure 3.

For Case 2, the tracking error in the iteration domain is plotted in Figure 4, and the system outputs at the three different iterations are plotted in Figure 5. Although the tracking performance is degraded and significant tracking errors exist in the initial iterations due to packet dropouts, good tracking is still achieved after some iterations. This demonstrates that the proposed method can be used for robust tracking against non-repeatable uncertainties along the iteration direction, random packet dropouts and time-varying delay. Besides, the convergence of Case 2 is slower than that of Case 1, as shown in Figures 2 and 4, which illustrates that convergence slows down as the dropout rate increases.

5 Conclusions

A robust ILC design has been considered for uncertain linear systems with time-varying delays and random packet dropouts. By modeling the packet dropout as an arbitrary stochastic sequence satisfying the Bernoulli binary distribution, the considered system is transformed into a 2D stochastic system described by the Roesser model with a delay varying in a range. Then, a delay-dependent stability condition is derived in terms of linear matrix inequalities (LMIs), and formulas are given for the ILC law design. The results on injection velocity control illustrate the feasibility and effectiveness of the proposed design.

References

[1] Arimoto, S, Kawamura, S, Miyazaki, F: Bettering operation of robots by learning. J. Robot. Syst. 1(2), 123-140 (1984)

[2] Bristow, DA, Tharayil, M, Alleyne, AG: A survey of iterative learning control. IEEE Control Syst. Mag. 26(3), 96-114 (2006)

[3] Ahn, HS, Chen, Y, Moore, KL: Iterative learning control: brief survey and categorization. IEEE Trans. Syst. Man Cybern., Part C, Appl. Rev. 37(6), 1099-1121 (2007)

[4] Li, Y, Jiang, W: Fractional order nonlinear systems with delay in iterative learning control. Appl. Math. Comput. 257, 546-552 (2015)

[5] Meng, DY, Jia, YM: Iterative learning approaches to design finite-time consensus protocols for multi-agent systems. Syst. Control Lett. 61(1), 187-194 (2012)

[6] Chi, RH, Hou, ZS, Xu, JX: A discrete-time adaptive ILC for systems with iteration-varying trajectory and random initial condition. Automatica 44(8), 2207-2213 (2008)

[7] Xu, JX: A survey on iterative learning control for nonlinear systems. Int. J. Control 84(7), 1275-1294 (2011)

[8] Li, JS, Li, JM: Iterative learning control approach for a kind of heterogeneous multi-agent systems with distributed initial state learning. Appl. Math. Comput. 257, 1044-1057 (2015)

[9] Nam, PT, Trinh, H, Pathirana, PN: Discrete inequalities based on multiple auxiliary functions and their applications to stability analysis of time-delay systems. J. Franklin Inst. 352(12), 5810-5831 (2015)

[10] Seuret, A, Gouaisbaut, F, Fridman, E: Stability of discrete-time systems with time-varying delays via a novel summation inequality. IEEE Trans. Autom. Control 60(10), 2740-2745 (2015)

[11] Zhang, XM, Han, QL: Abel lemma-based finite-sum inequality and its application to stability analysis for linear discrete time-delay system. Automatica 57, 199-202 (2015)

[12] Zhang, CK, He, Y, Jiang, L, Wu, M: An improved summation inequality to discrete-time systems with time-varying delay. Automatica 74, 10-15 (2016)

[13] Zhang, CK, He, Y, Jiang, L, Wu, M, Zeng, HB: Delay-variation-dependent stability of delayed discrete-time systems. IEEE Trans. Autom. Control 61(9), 2663-2669 (2016)

[14] Park, KH, Bien, Z, Hwang, DH: Design of an iterative learning controller for a class of linear dynamic systems with time delay. IEE Proc., Control Theory Appl. 145(6), 507-512 (1998)

[15] Xu, JX, Hu, Q, Lee, TH, Yamamoto, S: Iterative learning control with Smith time delay compensator for batch processes. J. Process Control 11(3), 321-328 (2001)

[16] Liu, T, Gao, FR, Wang, YQ: IMC-based iterative learning control for batch processes with uncertain time delay. J. Process Control 20(2), 173-180 (2010)

[17] Li, XD, Chow, TWS, Ho, JKL: 2D system theory based iterative learning control for linear continuous systems with time delays. IEEE Trans. Circuits Syst. I, Regul. Pap. 52(7), 1421-1430 (2005)

[18] Meng, DY, Jia, YM, Du, JP, Yu, FS: Robust design of a class of time-delay iterative learning control systems with initial shifts. IEEE Trans. Circuits Syst. I, Regul. Pap. 56(8), 1744-1757 (2009)

[19] Wang, LM, Mo, SY, Zhou, DH, Gao, FR: Robust design of feedback integrated with iterative learning control for batch processes with uncertainties and interval time-varying delays. J. Process Control 21(7), 987-996 (2011)

[20] Chen, SF: Delay-dependent stability for 2D systems with time-varying delay subject to state saturation in the Roesser model. Appl. Math. Comput. 216(9), 2613-2622 (2010)

[21] Liu, T, Gao, F: Robust two-dimensional iterative learning control for batch processes with state delay and time-varying uncertainties. Chem. Eng. Sci. 65(23), 6134-6144 (2010)

[22] Wang, LM, Mo, SY, Zhou, DH, Gao, FR: H∞ design of 2D controller for batch processes with uncertainties and interval time-varying delays. Control Eng. Pract. 21(10), 1321-1333 (2013)

[23] Fang, H, Ye, H, Zhong, M: Fault diagnosis of networked control systems. Annu. Rev. Control 31(1), 55-68 (2007)

[24] Jiang, S, Fang, H: H∞ static output feedback control for nonlinear networked control systems with time delays and packet dropouts. ISA Trans. 52(2), 215-222 (2013)

[25] Zheng, X, Fang, H: Recursive state estimation for discrete-time nonlinear systems with event-triggered data transmission norm-bounded uncertainties and multiple missing measurements. Int. J. Robust Nonlinear Control 26(17), 3673-3695 (2016)

[26] Bu, XH, Hou, ZS: Stability of iterative learning control with data dropouts via asynchronous dynamical system. Int. J. Autom. Comput. 8(1), 29-36 (2011)

[27] Bu, XH, Yu, FS, Hou, ZS, Wang, FZ: Iterative learning control for a class of nonlinear systems with random packet losses. Nonlinear Anal., Real World Appl. 14(1), 567-580 (2013)

[28] Ahn, HS, Chen, Y, Moore, KL: Intermittent iterative learning control. In: Proc. of the IEEE Int. Symposium on Intelligent Control, Germany, pp. 832-837 (2006)

[29] Ahn, HS, Chen, YQ, Moore, KL: Discrete-time intermittent iterative learning control with independent data dropouts. In: Proceedings of 17th IFAC World Congress, Korea, pp. 12442-12447 (2008)

[30] Liu, CP, Xu, JX, Wu, J: Iterative learning control for network systems with communication delay or data dropout. In: Proceedings of 48th IEEE Conference on Decision and Control, China, pp. 4858-4863 (2009)

[31] Bu, XH, Hou, ZS, Yu, FS, Wang, FZ: H∞ iterative learning controller design for a class of discrete-time systems with data dropouts. Int. J. Syst. Sci. 45(9), 1902-1912 (2014)

[32] Bu, XH, Hou, ZS, Jin, ST, Chi, RH: An iterative learning control design approach for networked control systems with data dropouts. Int. J. Robust Nonlinear Control 26(1), 91-109 (2016)

[33] Shen, D, Wang, YQ: ILC for networked nonlinear systems with unknown control direction through random lossy channel. Syst. Control Lett. 77, 30-39 (2015)

[34] Qiu, J, Xia, Y, Yang, H, Zhang, J: Robust stabilisation for a class of discrete-time systems with time-varying delays via delta operators. IET Control Theory Appl. 2(1), 87-93 (2008)

[35] Boyd, S, El Ghaoui, L, Feron, E, Balakrishnan, V: Linear Matrix Inequalities in System and Control Theory. SIAM, Philadelphia (1994)

[36] El Ghaoui, L, Oustry, F, Aitrami, M: A cone complementarity linearization algorithm for static output feedback and related problems. IEEE Trans. Autom. Control 42(8), 1171-1176 (1997)

[37] Moon, YS, Park, P, Kwon, WH, Lee, YS: Delay-dependent robust stabilisation of uncertain state delayed systems. Int. J. Control 74(14), 1447-1455 (2001)

[38] Shi, J, Gao, F, Wu, TJ: Robust design of integrated feedback and iterative learning control of a batch process based on a 2D Roesser system. J. Process Control 15(8), 907-924 (2005)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 61573129, 61433002, 61573130), the Program for Science & Technology Innovation Talents in Universities of Henan Province (16HASTIT046), the Fundamental Research Funds for the Universities of Henan Province, the program of Key Young Teacher of Higher Education of Henan Province (2014GGJS-041), the program of Key Young Teacher of Henan Polytechnic University, the Science and Technology Innovation Talents Project of Henan Province (164100510004) and the Innovation Scientists and Technicians Troop Construction Projects of Henan Province (CXTD2016054).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

BXH and HZW carried out the main part of this article. HZS and YJQ corrected and revised the manuscript. All authors have read and approved the final manuscript.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Xuhui, B., Zhanwei, H., Zhongsheng, H. et al. Robust iterative learning control design for linear systems with time-varying delays and packet dropouts. Adv Differ Equ 2017, 84 (2017). https://doi.org/10.1186/s13662-017-1140-3
