Abstract

This paper is concerned with controlled nonlinear impulsive evolution equations with nonlocal conditions. The existence of PC-mild solutions is proved, but the uniqueness cannot be obtained. By constructing approximating minimizing sequences of functions, the existence of optimal controls of systems governed by nonlinear impulsive evolution equations is also presented.

1 Introduction

Since the end of the last century, impulsive evolution equations and impulsive optimal controls have attracted much attention because many evolutionary processes are subject to abrupt changes in the form of impulses (see [1–11]). Indeed, there are numerous applications in demography, control, mechanics, electrical engineering, and other fields. Recently, there has been significant development in impulsive differential equations and optimal controls. For more details, we refer to the monographs of Ahmed [2, 3, 12], Xiang [7, 12, 13], Ashyralyev [8, 9], Sharifov [8–11, 14], Mardanov [14], and the references therein. Many papers discuss impulsive differential equations and impulsive optimal controls with the classical initial condition \(x(0)=x_{0}\) (see [2, 4, 7, 12, 13]). How does one look for suitable optimal controls when the uniqueness of solutions of controlled impulsive equations cannot be obtained? In spite of some attempts and efforts, the previous methods are not entirely satisfactory, and new techniques are needed. In particular, nonlocal differential equations have been studied extensively in recent years [10, 11, 15, 16]. However, the existence of optimal controls of systems governed by nonlocal impulsive evolution equations with the initial condition \(x(0)+g(x)=x_{0}\) has rarely been addressed. In this paper, we consider the following controlled nonlocal impulsive equation:

$$\begin{cases} x'(t)=Ax(t)+f\bigl(t,x(t)\bigr)+B(t)u(t), & t\in(0,T], t\neq t_{i}, \\ \bigtriangleup x(t_{i})=J_{i}\bigl(x(t_{i})\bigr), & i=1,2,\ldots,q, \\ x(0)+g(x)=x_{0}, \end{cases} \tag{1.1}$$

where \(A: D(A)\subseteq X\rightarrow X\) is the infinitesimal generator of a strongly continuous semigroup \(\{S(t): t\geq0\}\) in a real Banach space X, f is a nonlinear perturbation, \(J_{i}\) (\(i=1,2,\ldots,q\)) is a nonlinear map, \(\bigtriangleup x(t_{i})=x(t_{i}^{+})-x(t_{i}^{-})\), g is a given X-valued function, and the control u belongs to the admissible control set \(U_{ad}\), which is introduced in Section 2.

By introducing a suitable notion of mild solution for (1.1), represented by an integral equation, we show the existence of feasible pairs. Moreover, a limited Lagrange problem for the system governed by (1.1) is investigated. Owing to the lack of uniqueness of feasible pairs, we apply the idea of constructing approximating minimizing sequences of functions twice to derive the existence of optimal controls. This differs from the usual approach, in which the Banach contraction mapping principle is applied directly and which is not available when the uniqueness of feasible pairs cannot be obtained. Our results therefore generalize and develop many previous ones in this field.

This paper is organized as follows. In Section 2, we give some associated notations and recall some concepts and facts about the measure of noncompactness, fixed point theorem and impulsive semilinear differential equations. In Section 3, the existence results of controlled nonlocal evolution equations are obtained. In Section 4, the existence of optimal controls for a Lagrange problem is established. Finally, an example is presented to illustrate our results in the last section.

2 Preliminaries

Let X be a real Banach space. \(\mathcal {L}(X)\) is the class of (not necessarily bounded) linear operators in X. We denote by \(C([0,T];X)\) the Banach space of all continuous functions from \([0,T]\) to X with the norm \(\|u\|= \sup \{ \|u(t)\|,t\in [0,T]\}\) and by \(L^{1}([0,T];X)\) the Banach space of all X-valued Bochner integrable functions defined on \([0,T]\) with the norm \(\|u\|_{1}=\int ^{T}_{0}\|u(t)\|\,dt\). Let \(PC([0,T];X)=\{u: [0,T]\rightarrow X: u(t)\mbox{ is continuous at } t\neq t_{i}\mbox{ and left continuous at }t=t_{i}\mbox{ and the right limit } u(t_{i}^{+})\mbox{ exists for }i=1,2,\ldots,q\}\). It is easy to check that \(PC([0,T];X)\) is a Banach space with the norm \(\|u\|_{PC}=\sup\{\|u(t)\|, t\in[0,T]\}\) and \(C([0,T];X)\subseteq PC([0,T];X)\subseteq L^{1}([0,T];X)\).

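We introduce the Hausdorff measure of noncompactness α defined on each bounded subset Ω of a Banach space E by

$$\alpha(\Omega)=\inf \bigl\{ \varepsilon>0: \Omega\mbox{ has a finite }\varepsilon\mbox{-net in } E \bigr\} . $$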

The map \(Q:D\subset E\rightarrow E\) is said to be an α-contraction if there exists a positive constant \(k < 1\) such that \(\alpha(Q\Omega) \leq k\alpha(\Omega)\) for any bounded closed subset \(\Omega\subseteq D\), where E is a Banach space.
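For later reference, the properties of α on which the proofs in Sections 3 and 4 rely can be collected as follows. These are standard facts about the Hausdorff measure of noncompactness (see [17]); the symbols \(\Omega\), \(\Omega_{1}\), \(\Omega_{2}\) (bounded subsets of E) and the Lipschitz map Q with constant k are notation used only in this sketch.

$$\begin{aligned} &\alpha(\Omega)=0 \quad\mbox{if and only if}\quad \Omega\mbox{ is relatively compact in } E, \\ &\Omega_{1}\subseteq\Omega_{2} \quad\mbox{implies}\quad \alpha(\Omega_{1})\leq\alpha(\Omega_{2}), \\ &\alpha(\Omega_{1}+\Omega_{2})\leq\alpha(\Omega_{1})+\alpha(\Omega_{2}), \\ &\alpha(Q\Omega)\leq k\alpha(\Omega) \quad\mbox{if } Q:E\rightarrow E \mbox{ is Lipschitz continuous with constant } k. \end{aligned}$$

In particular, a compact map contributes nothing to the measure of noncompactness of an image set, while a Lipschitz map scales it by at most its Lipschitz constant.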

The following fixed point theorem plays a key role in the proof of our main results.

Lemma 2.1 (Darbo-Sadovskii [17])

If \(D\subset E\) is bounded, closed, and convex, and the continuous map \(Q:D\rightarrow D\) is an α-contraction, then the map Q has at least one fixed point in D.

Let Y be another separable reflexive Banach space in which the controls u take values. Denote by \(P_{f}(Y)\) the class of nonempty closed and convex subsets of Y. We suppose that the multivalued map \(w:[0,T]\rightarrow P_{f}(Y)\) is measurable and \(w(\cdot)\subset E\), where E is a bounded set of Y, and we define the admissible control set \(U_{ad}=S_{w}^{p}=\{u\in L^{p}(E)|u(t)\in w(t), \mbox{ a.e.}\}\), \(p>1\). Then \(U_{ad}\neq \emptyset\) (see [1]).

Throughout this work, we suppose that

- (A) The linear operator \(A: D(A)\subseteq X\rightarrow X\) generates a compact \(C_{0}\)-semigroup \(\{S(t):t\geq0\}\). Hence, there exists a positive number M such that \(M=\sup_{0\leq t\leq T}\|S(t)\|\).

For use in the sequel, we introduce the following definition.

Definition 2.1

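A function \(x\in PC([0,T]; X)\) is said to be a PC-mild solution of the nonlocal problem (1.1) if it satisfies the integral equation

$$x(t)=S(t) \bigl[x_{0}-g(x) \bigr]+\sum_{0< t_{i}< t}S(t-t_{i})J_{i} \bigl(x(t_{i}) \bigr)+ \int_{0}^{t}S(t-s) \bigl[f \bigl(s,x(s) \bigr)+B(s)u(s) \bigr]\,ds,\quad t\in[0,T]. $$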

In addition, let r be a finite positive constant, and set \(B_{r} :=\{x\in X: \|x\|\leq r\}\) and \(W_{r} :=\{y\in PC([0,T]; X): y(t)\in B_{r}, \forall t\in[0,T]\}\).

3 Controlled impulsive differential equations

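In this section, we consider the following controlled nonlocal impulsive problem:

$$\begin{cases} x'(t)=Ax(t)+f\bigl(t,x(t)\bigr)+B(t)u(t), & t\in(0,T], t\neq t_{i}, u\in U_{ad}, \\ \bigtriangleup x(t_{i})=J_{i}\bigl(x(t_{i})\bigr), & i=1,2,\ldots,q, \\ x(0)+g(x)=x_{0}. \end{cases} \tag{3.1}$$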

First, we give the following hypotheses:

- (F)

  - (1) \(f:[0,T]\times X\rightarrow X\) is a Carathéodory function, i.e., for all \(x\in X\), \(f(\cdot,x): [0,T]\rightarrow X\) is measurable and for a.e. \(t\in[0,T]\), \(f(t,\cdot):X\rightarrow X\) is continuous.

  - (2) For each finite constant \(r>0\), there exists a function \(\varphi_{r}\in L^{1}(0,T;R)\) such that

    $$\bigl\| f(t,x)\bigr\| \leq\varphi_{r}(t) $$

    for a.e. \(t\in[0, T]\) and all \(x\in B_{r}\).

- (B) \(B:[0,T]\rightarrow \mathcal {L}(Y,X)\) is essentially bounded, i.e., \(B\in L^{\infty}([0,T],\mathcal {L}(Y,X))\).

- (G) \(g: PC([0,T]; X)\rightarrow X\) is a continuous and compact operator.

- (J) \(J_{i}:X\rightarrow X\) satisfies the following Lipschitz condition:

  $$\bigl\| J_{i}(x_{1})-J_{i}(x_{2})\bigr\| \leq h_{i}\|x_{1}-x_{2}\|,\quad i=1,2,\ldots,q, $$

  for some constants \(h_{i}>0\) and all \(x_{1},x_{2}\in X\).

- (R) \(M\{\|x_{0}\|+\sup_{x\in W_{r}}\|g(x)\|+\|Bu\|_{L^{1}}+\sum_{1\leq i\leq q}[\|J_{i}(0)\|+h_{i}r]+\|\varphi_{r}\|_{L^{1}}\}\leq r\).

Remark 3.1

From the assumption (B) and the definition of \(U_{ad}\), it is also easy to verify that \(Bu\in L^{p}([0,T];X)\) with \(p>1\) for all \(u\in U_{ad}\). Therefore, \(Bu\in L^{1}([0,T];X)\) and \(\|Bu\|_{L^{1}}<+\infty\).
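The estimate behind Remark 3.1 can be written out as follows. This is a sketch in which \(\|B\|_{\infty}\) denotes the essential supremum of \(\|B(t)\|_{\mathcal{L}(Y,X)}\) over \([0,T]\), a shorthand introduced here only for illustration; the last step is Hölder's inequality with exponents p and \(p/(p-1)\).

$$\|Bu\|_{L^{1}}= \int_{0}^{T} \bigl\| B(t)u(t) \bigr\| \,dt\leq\|B\|_{\infty} \int_{0}^{T} \bigl\| u(t) \bigr\| _{Y}\,dt\leq\|B\|_{\infty}T^{1-1/p}\|u\|_{L^{p}([0,T];Y)}< +\infty. $$

Since the values of every \(u\in U_{ad}\) lie in the bounded set E, the right-hand side is in fact bounded uniformly with respect to \(u\in U_{ad}\).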

Remark 3.2

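Under the assumption (G), \(\sup_{x\in W_{r}}\|g(x)\|<+\infty\). Indeed, \(W_{r}\) is bounded in \(PC([0,T];X)\), and a compact operator maps bounded sets into relatively compact, hence bounded, sets.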

Theorem 3.1

Assume that there exists a constant \(r > 0\) such that the conditions (A), (F), (B), (G), (J), and (R) are satisfied. Then the nonlocal problem (3.1) has at least one PC-mild solution on \([0,T]\).

In order to obtain the existence of solutions for controlled impulsive evolution equations and optimal controls, we need the following important lemma, which can easily be proved (refer to Lemma 3.2 and Corollary 3.3 in Chapter 3 of [4]).

Lemma 3.1

Suppose \(S(t)\) is a compact \(C_{0}\)-semigroup on the Banach space X and the operator B satisfies the condition (B). For \(p>1\), define

$$(\Im u) (t)= \int_{0}^{t}S(t-s)B(s)u(s)\,ds,\quad t\in[0,T]. $$

Then \(\Im: U_{ad}\subset L^{p}([0,T];Y)\rightarrow PC([0,T];X)\) is compact. Moreover, if \(u_{k}(\cdot)\in U_{ad}\subset L^{p}([0,T];Y)\) satisfies \(u_{k}(\cdot)\rightarrow u(\cdot)\) weakly in \(L^{p}([0,T];Y)\) as \(k\rightarrow\infty\), then \(\Im u_{k} \rightarrow\Im u\) as \(k\rightarrow \infty\).

Proof of Theorem 3.1

The proof is divided into the following four steps.

Step 1. Let \(x_{0}\in X\) be fixed. We define a mapping H on \(PC([0,T]; X)\) by

$$(Hx) (t)=S(t) \bigl[x_{0}-g(x) \bigr]+\sum_{0< t_{i}< t}S(t-t_{i})J_{i} \bigl(x(t_{i}) \bigr)+ \int_{0}^{t}S(t-s) \bigl[f \bigl(s,x(s) \bigr)+B(s)u(s) \bigr]\,ds, $$

\(0\leq t\leq T\). It follows from the properties of the \(C_{0}\)-semigroup and all assumptions in Theorem 3.1 that H is well defined and maps \(PC([0,T]; X)\) into itself. Furthermore, one can also easily see that \(x\in PC([0,T]; X)\) is a PC-mild solution of the controlled nonlocal impulsive differential equation (3.1) if and only if x is a fixed point of H. Therefore, we shall prove that H has a fixed point in \(PC([0,T]; X)\).

Step 2. We show that H maps \(W_{r}\) into itself. By conditions (F), (B), (G), (J), one obtains, for each \(x\in W_{r}\),

By (R) we have \(Hx\in W_{r}\).
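The estimate behind Step 2 can be sketched as follows. It assumes that H has the variation-of-constants form \((Hx)(t)=S(t)[x_{0}-g(x)]+\sum_{0<t_{i}<t}S(t-t_{i})J_{i}(x(t_{i}))+\int_{0}^{t}S(t-s)[f(s,x(s))+B(s)u(s)]\,ds\), which is the form consistent with condition (R), and it uses \(\|J_{i}(x(t_{i}))\|\leq\|J_{i}(0)\|+h_{i}\|x(t_{i})\|\leq\|J_{i}(0)\|+h_{i}r\) for \(x\in W_{r}\).

$$\begin{aligned} \bigl\| (Hx) (t) \bigr\| \leq{}& \bigl\| S(t) \bigr\| \bigl(\|x_{0}\|+ \bigl\| g(x) \bigr\| \bigr)+\sum_{0< t_{i}< t} \bigl\| S(t-t_{i}) \bigr\| \bigl\| J_{i} \bigl(x(t_{i}) \bigr) \bigr\| \\ &{}+ \int_{0}^{t} \bigl\| S(t-s) \bigr\| \bigl( \bigl\| f \bigl(s,x(s) \bigr) \bigr\| + \bigl\| B(s)u(s) \bigr\| \bigr)\,ds \\ \leq{}& M \Biggl\{ \|x_{0}\|+\sup_{x\in W_{r}} \bigl\| g(x) \bigr\| +\sum_{i=1}^{q} \bigl[ \bigl\| J_{i}(0) \bigr\| +h_{i}r \bigr]+\|\varphi_{r}\|_{L^{1}}+\|Bu\|_{L^{1}} \Biggr\} \leq r. \end{aligned}$$

Taking the supremum over \(t\in[0,T]\) gives \(\|Hx\|_{PC}\leq r\).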

Step 3. In the sequel, we show that H is continuous in \(W_{r}\). Letting \(x_{1},x_{2}\in W_{r}\), we have

This, together with (F)(2), (G), (J), shows that H is continuous.
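One way to write the estimate used in Step 3 is the following sketch, again under the assumption that H has the variation-of-constants form with the nonlocal term \(S(t)[x_{0}-g(x)]\), the impulse sum, and the integral term.

$$\|Hx_{1}-Hx_{2}\|_{PC}\leq M \bigl\| g(x_{1})-g(x_{2}) \bigr\| +M\sum_{i=1}^{q}h_{i}\|x_{1}-x_{2}\|_{PC}+M \int_{0}^{T} \bigl\| f \bigl(s,x_{1}(s) \bigr)-f \bigl(s,x_{2}(s) \bigr) \bigr\| \,ds. $$

As \(x_{2}\rightarrow x_{1}\) in \(W_{r}\), the first term tends to zero by (G) and the second by (J). In the third, the integrand tends to zero for a.e. s by (F)(1) and is dominated by \(2\varphi_{r}(s)\) by (F)(2), so the dominated convergence theorem applies.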

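Step 4. Now we prove that H has at least one fixed point. Set

$$\begin{aligned} &(\Lambda_{1}x) (t)=S(t) \bigl[x_{0}-g(x) \bigr], \\ &(\Lambda_{2}x) (t)= \int_{0}^{t}S(t-s) \bigl[f \bigl(s,x(s) \bigr)+B(s)u(s) \bigr]\,ds, \\ &(\Lambda_{3}x) (t)=\sum_{0< t_{i}< t}S(t-t_{i})J_{i} \bigl(x(t_{i}) \bigr) \end{aligned}$$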

for any \(x\in W_{r}\). By (G), one obtains that the mapping \(\Lambda_{1}\) is compact in \(W_{r}\), and hence \(\alpha(\Lambda_{1} W_{r})=0\). Similarly, it follows from (A), Arzela-Ascoli’s theorem, and Lemma 3.1 that the mapping \(\Lambda_{2}\) is also compact in \(W_{r}\). Therefore, \(\alpha(\Lambda_{2} W_{r})=0\).

On the other hand, noticing that \(\{J_{i}\}_{i=1}^{q}\) are Lipschitz continuous, for \(x_{1},x_{2}\in W_{r}\), one has

Consequently,

By condition (R) we have

This shows that H is an α-contraction. Now by Lemma 2.1 we immediately deduce that the mapping H has a fixed point in \(W_{r}\), i.e., (3.1) has at least one mild solution. This completes the proof. □
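The chain of inequalities behind Step 4 can be sketched as follows, assuming the splitting \(H=\Lambda_{1}+\Lambda_{2}+\Lambda_{3}\) in which \(\Lambda_{3}\) collects the impulse terms \(\sum_{0<t_{i}<t}S(t-t_{i})J_{i}(x(t_{i}))\) and \(\Lambda_{1}\), \(\Lambda_{2}\) are the compact parts.

$$\begin{aligned} &\|\Lambda_{3}x_{1}-\Lambda_{3}x_{2}\|_{PC}\leq M\sum_{i=1}^{q}h_{i}\|x_{1}-x_{2}\|_{PC}, \\ &\alpha(HW_{r})\leq\alpha(\Lambda_{1}W_{r})+\alpha(\Lambda_{2}W_{r})+\alpha(\Lambda_{3}W_{r})\leq M\sum_{i=1}^{q}h_{i}\alpha(W_{r}). \end{aligned}$$

The same argument applies to any bounded closed subset of \(W_{r}\). Dividing (R) by r gives

$$M\sum_{i=1}^{q}h_{i}\leq1-\frac{M}{r} \Biggl\{ \|x_{0}\|+\sup_{x\in W_{r}} \bigl\| g(x) \bigr\| +\|Bu\|_{L^{1}}+\sum_{i=1}^{q} \bigl\| J_{i}(0) \bigr\| +\|\varphi_{r}\|_{L^{1}} \Biggr\} , $$

so the contraction constant is strictly less than 1 as soon as the bracketed quantity is positive.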

Remark 3.3

In [7], the authors obtain the solvability of impulsive integro-differential equations of mixed type with a given initial value on an infinite dimensional Banach space. However, they need the local Lipschitz continuity of f. Here, without a Lipschitz assumption on f, we exploit the compactness of the solution operator to obtain the solvability of the controlled nonlocal impulsive equation (3.1). Therefore, our results improve those in [7, 13] and the references therein, and they have broader applications.

Remark 3.4

The uniqueness of solutions of the controlled impulsive differential equation (3.1) cannot be obtained. Therefore, for any \(u\in U_{ad}\), we denote by \(\operatorname{Sol}(u)\) the set of all solutions of system (3.1) in \(W_{r}\).

Assume that

- (G′) \(g(x)=g(s_{1},\ldots,s_{m},x(s_{1}),\ldots,x(s_{m}))=\sum_{j=1}^{m}c_{j} x(s_{j})\), where \(c_{j}\), \(j=1,2,\ldots,m\), are given constants, and \(0< s_{1}< s_{2}<\cdots< s_{m}<T\).

Corollary 3.1

Let conditions (A), (F), (B), (G′), and (J) be satisfied. Then the nonlocal impulsive equation (3.1) has at least one PC-mild solution on \([0,T]\) provided that

Proof

It is easy to see that if \(g(x)=g(s_{1},\ldots,s_{m},x(s_{1}),\ldots,x(s_{m}))=\sum_{j=1}^{m}c_{j} x(s_{j})\), then the condition (G) holds. Thus all the conditions in Theorem 3.1 are satisfied. Then the nonlocal impulsive equation (3.1) has at least one PC-mild solution on \([0,T]\). This completes the proof. □

4 Existence of optimal controls

In this section, we establish the existence of optimal controls for system (3.1).

Let \(x^{u}\in W_{r}\) denote a PC-mild solution of system (3.1) corresponding to the control \(u\in U_{ad}\). We consider the following limited Lagrange problem (P):

Find \(x^{0}\in W_{r}\subseteq PC([0,T]; X)\) and \(u^{0}\in U_{ad}\) such that

$$J \bigl(x^{0},u^{0} \bigr)\leq J \bigl(x^{u},u \bigr)\quad\mbox{for all } u\in U_{ad}, $$

where

$$J \bigl(x^{u},u \bigr)= \int_{0}^{T}l \bigl(t,x^{u}(t),u(t) \bigr)\,dt, $$

and \(x^{0}\in W_{r}\) denotes a PC-mild solution of system (3.1) corresponding to the control \(u^{0}\in U_{ad}\).

We make the following assumption:

- (L)

  - (1) The function \(l:[0,T]\times X\times Y\rightarrow R\cup \{\infty\}\) is Borel measurable;

  - (2) \(l(t,\cdot,\cdot)\) is sequentially lower semicontinuous on \(X\times Y\) for a.e. \(t\in[0,T]\);

  - (3) \(l(t,x,\cdot)\) is convex on Y for each \(x\in X\) and a.e. \(t\in[0,T]\);

  - (4) there are two constants \(c\geq0\), \(d>0\) and \(\phi\in L^{1}([0,T];R)\) such that

    $$l(t,x,u)\geq\phi(t)+c\|x\|+d\|u\|_{Y}^{p}. $$

Remark 4.1

A pair \((x(\cdot),u(\cdot))\) is said to be feasible if it satisfies system (3.1) with \(x(\cdot)\in W_{r}\).

Remark 4.2

If \((x^{u},u)\) is a feasible pair, then \(x^{u}\in \operatorname{Sol}(u)\subset W_{r}\).

Theorem 4.1

Assume that condition (L) is satisfied. Under the conditions of Theorem 3.1, the problem (P) has at least one optimal feasible pair.

Proof

The proof is divided into the following four steps.

Step 1. For any \(u\in U_{ad}\), set

$$J(u)=\inf_{x^{u}\in \operatorname{Sol}(u)}J \bigl(x^{u},u \bigr). $$

If \(\operatorname{Sol}(u)\) contains only finitely many elements, there exists some \(\overline{x}^{u}\in \operatorname{Sol}(u)\) such that \(J(\overline{x}^{u},u)=\inf_{x^{u}\in \operatorname{Sol}(u)} J(x^{u},u)=J(u)\).

If \(\operatorname{Sol}(u)\) contains infinitely many elements, there is nothing to prove in the case \(J(u)=\inf_{x^{u}\in \operatorname{Sol}(u)} J(x^{u},u)=+\infty\).

We assume that \(J(u)=\inf_{x^{u}\in \operatorname{Sol}(u)} J(x^{u},u)<+\infty\). By assumption (L), one has \(J(u)>-\infty\).
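The lower bound \(J(u)>-\infty\) can be made explicit as follows. This is a sketch assuming that the cost has the integral form \(J(x^{u},u)=\int_{0}^{T}l(t,x^{u}(t),u(t))\,dt\); it uses only the growth condition (L)(4) with \(c\geq0\) and \(d>0\).

$$J \bigl(x^{u},u \bigr)\geq \int_{0}^{T}\phi(t)\,dt+c \int_{0}^{T} \bigl\| x^{u}(t) \bigr\| \,dt+d \int_{0}^{T} \bigl\| u(t) \bigr\| _{Y}^{p}\,dt\geq-\|\phi\|_{L^{1}}>-\infty. $$

Since the right-hand side does not depend on the particular solution \(x^{u}\in \operatorname{Sol}(u)\), the same bound holds for the infimum \(J(u)\).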

By the definition of the infimum there exists a sequence \(\{x^{u}_{n}\} _{n=1}^{\infty}\subseteq \operatorname{Sol}(u)\), such that \(J(x^{u}_{n},u)\rightarrow J(u)\) as \(n\rightarrow \infty\).

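Since \(\{(x^{u}_{n},u)\}_{n=1}^{\infty}\) is a sequence of feasible pairs, we have

$$x^{u}_{n}(t)=S(t) \bigl[x_{0}-g \bigl(x^{u}_{n} \bigr) \bigr]+\sum_{0< t_{i}< t}S(t-t_{i})J_{i} \bigl(x^{u}_{n}(t_{i}) \bigr)+ \int_{0}^{t}S(t-s) \bigl[f \bigl(s,x^{u}_{n}(s) \bigr)+B(s)u(s) \bigr]\,ds. \tag{4.1}$$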

Step 2. Now we will prove that there exists some \(\overline{x}^{u}\in \operatorname{Sol}(u)\) such that \(J(\overline{x}^{u},u)= \inf_{x^{u}\in \operatorname{Sol}(u)} J(x^{u},u)=J(u)\). To show it, we first prove that \(\{x^{u}_{n}\}_{n=1}^{\infty}\) is precompact in \(PC([0,T]; X)\) for each \(u\in U_{ad}\). For this purpose, note that

From Step 4 in the proof of Theorem 3.1, we know that \(\{\Lambda_{1} x^{u}_{n}\}_{n=1}^{\infty}\) and \(\{\Lambda_{2} x^{u}_{n}\}_{n=1}^{\infty}\) are both precompact subsets of \(PC([0,T]; X)\). Moreover, by condition (J), we see that \(\Lambda_{3}\) is Lipschitz continuous in \(PC([0,T]; X)\) with Lipschitz constant \(M(\sum_{i=1}^{q} h_{i})\). Thus, according to the properties of the Hausdorff measure of noncompactness, we conclude that

Since condition (R) holds, \(M(\sum_{i=1}^{q} h_{i})<1\). Thus the above inequality implies that \(\beta( \{x^{u}_{n}\}_{n=1}^{\infty})=0\). Consequently, the set \(\{x^{u}_{n}\}_{n=1}^{\infty}\) is precompact in \(PC([0,T]; X)\) for \(u\in U_{ad}\). Without loss of generality, we may suppose that \(x^{u}_{n}\rightarrow\overline{x}^{u}\) in \(PC([0,T]; X)\) as \(n\rightarrow\infty\). Taking the limit \(n\rightarrow\infty \) on both sides of (4.1), using the continuity of \(S(t)\), g, \(J_{i}\), and of f with respect to its second argument, together with the dominated convergence theorem, we deduce that

which implies that \(\overline{x}^{u}\in \operatorname{Sol}(u)\).
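The measure-of-noncompactness argument in Step 2 can be sketched as follows. It assumes that β denotes the Hausdorff measure of noncompactness on \(PC([0,T];X)\) (written α in Section 2) and that each \(x^{u}_{n}\) decomposes as \(\Lambda_{1}x^{u}_{n}+\Lambda_{2}x^{u}_{n}+\Lambda_{3}x^{u}_{n}\), with \(\Lambda_{1}\), \(\Lambda_{2}\) compact and \(\Lambda_{3}\) Lipschitz.

$$\beta \bigl( \bigl\{ x^{u}_{n} \bigr\} \bigr)\leq\beta \bigl( \bigl\{ \Lambda_{1}x^{u}_{n} \bigr\} \bigr)+\beta \bigl( \bigl\{ \Lambda_{2}x^{u}_{n} \bigr\} \bigr)+\beta \bigl( \bigl\{ \Lambda_{3}x^{u}_{n} \bigr\} \bigr)\leq M \Biggl(\sum_{i=1}^{q}h_{i} \Biggr)\beta \bigl( \bigl\{ x^{u}_{n} \bigr\} \bigr). $$

Hence \((1-M\sum_{i=1}^{q}h_{i})\beta(\{x^{u}_{n}\})\leq0\), which forces \(\beta(\{x^{u}_{n}\})=0\) whenever \(M\sum_{i=1}^{q}h_{i}<1\).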

Step 3. We claim that \(J(\overline{x}^{u},u)= \inf_{x^{u}\in \operatorname{Sol}(u)}J(x^{u},u)=J(u)\) for all \(u\in U_{ad}\). In fact, since \(PC([0,T]; X)\) is continuously embedded in \(L^{1}([0,T];X)\), by the definition of a feasible pair, and using the assumption (L) and Balder’s theorem, we have

that is, \(J(\overline{x}^{u},u)= J(u)\). This shows that \(J(u)\) attains its minimum at \(\overline{x}^{u}\in PC([0,T]; X)\) for each \(u\in U_{ad}\).
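The inequality chain of Step 3 can be sketched as follows, assuming the integral form \(J(x,u)=\int_{0}^{T}l(t,x(t),u(t))\,dt\) of the cost and that \(x^{u}_{n}\rightarrow\overline{x}^{u}\) in \(PC([0,T];X)\) with \(\overline{x}^{u}\in \operatorname{Sol}(u)\). The middle inequality is the lower semicontinuity supplied by Balder's theorem.

$$J(u)\leq J \bigl(\overline{x}^{u},u \bigr)= \int_{0}^{T}l \bigl(t,\overline{x}^{u}(t),u(t) \bigr)\,dt\leq\liminf_{n\rightarrow\infty} \int_{0}^{T}l \bigl(t,x^{u}_{n}(t),u(t) \bigr)\,dt=\lim_{n\rightarrow\infty}J \bigl(x^{u}_{n},u \bigr)=J(u). $$

The first inequality holds because \(J(u)\) is the infimum over \(\operatorname{Sol}(u)\), so every inequality in the chain is an equality.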

Step 4. Find \(u_{0}\in U_{ad}\) such that \(J(u_{0})\leq J(u)\), for all \(u\in U_{ad}\).

If \(\inf_{u\in U_{ad}}J(u)=+\infty\), there is nothing to prove.

Assume that \(\inf_{u\in U_{ad}}J(u)<+\infty\). Similar to Step 1, we can prove that \(\inf_{u\in U_{ad}}J(u)>-\infty\), and there exists a sequence \(\{u_{n}\} _{n=1}^{\infty}\subseteq U_{ad}\) such that \(J(u_{n})\rightarrow\inf_{u\in U_{ad}}J(u)\) as \(n\rightarrow \infty\). Since \(\{u_{n}\}_{n=1}^{\infty}\subseteq U_{ad}\), the sequence \(\{u_{n}\} _{n=1}^{\infty}\) is bounded in \(L^{p}([0,T]; Y)\). Moreover, \(L^{p}([0,T]; Y)\) is a reflexive Banach space. Thus, passing to a subsequence if necessary, we may suppose that \(\{u_{n}\}_{n=1}^{\infty}\) converges weakly to some \(u_{0}\in L^{p}([0,T]; Y)\) as \(n\rightarrow\infty\).

Note that \(U_{ad}\) is closed and convex, so it follows from the Mazur lemma that \(u_{0}\in U_{ad}\). For \(n\geq1\), \(\overline{x}^{u_{n}}\) is the mild solution for (3.1) corresponding to \(u_{n}\), where \(J(u_{n})\) attains its minimum. Then \((\overline{x}^{u_{n}},u_{n})\) is a feasible pair and satisfies the following integral equation:

Set

Then

We know that \(\{\Lambda_{1} \overline{x}^{u_{n}}\}_{n=1}^{\infty}\) is precompact in \(PC([0,T]; X)\) and \(\Lambda_{3}\) is Lipschitz continuous in \(PC([0,T]; X)\) with Lipschitz constant \(M(\sum_{i=1}^{q} h_{i})<1\). Furthermore, by the compactness of the semigroup \(\{S(t),t>0\}\) and Lemma 3.1, it is easy to prove that \(\{\Lambda'_{2}\overline{x}^{u_{n}}\}_{n=1}^{\infty}\subseteq PC([0,T]; X)\) is precompact, and \(\Im u_{n}\rightarrow\Im u_{0}\) in \(C([0,T]; X)\) as \(n\rightarrow\infty\).

Similar to Step 2, we deduce that \(\beta( \{\overline{x}^{u_{n}}\}_{n=1}^{\infty})=0\), i.e., \(\{\overline {x}^{u_{n}}\}_{n=1}^{\infty}\subseteq PC([0,T]; X)\) is precompact. Thus there exist a subsequence, relabeled as \(\{\overline{x}^{u_{n}}\} _{n=1}^{\infty}\), and \(\overline{x}^{u_{0}}\in PC([0,T]; X)\) such that \(\overline{x}^{u_{n}}\rightarrow\overline{x}^{u_{0}}\) in \(PC([0,T]; X)\) as \(n\rightarrow \infty\). Taking the limit \(n\rightarrow\infty\) on both sides of (4.2), we have

then \((\overline{x}^{u_{0}},u_{0})\) is a feasible pair.

Since \(PC([0,T]; X)\hookrightarrow L^{1}([0,T]; X)\), by the condition (L) and Balder’s theorem, we obtain

Therefore,

Moreover,

i.e., J attains its minimum at \(u_{0}\in U_{ad}\). □
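The concluding argument of Step 4 can be summarized in a single chain. This is a sketch assuming the integral form of the cost, the convergences \(\overline{x}^{u_{n}}\rightarrow\overline{x}^{u_{0}}\) in \(PC([0,T];X)\) and \(u_{n}\rightarrow u_{0}\) weakly in \(L^{p}([0,T];Y)\), and the identity \(J(\overline{x}^{u_{n}},u_{n})=J(u_{n})\) from Step 3.

$$\begin{aligned} J(u_{0})&\leq J \bigl(\overline{x}^{u_{0}},u_{0} \bigr)= \int_{0}^{T}l \bigl(t,\overline{x}^{u_{0}}(t),u_{0}(t) \bigr)\,dt \\ &\leq\liminf_{n\rightarrow\infty} \int_{0}^{T}l \bigl(t,\overline{x}^{u_{n}}(t),u_{n}(t) \bigr)\,dt=\lim_{n\rightarrow\infty}J(u_{n})=\inf_{u\in U_{ad}}J(u)\leq J(u_{0}). \end{aligned}$$

All inequalities are therefore equalities, so \((\overline{x}^{u_{0}},u_{0})\) is an optimal feasible pair.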

Remark 4.3

Constructing approximating minimizing sequences of functions twice plays a key role in establishing the existence of optimal controls, since it enables us to deal with the nonuniqueness of feasible pairs. More importantly, this approach allows us to study more general and complex evolution equations and optimal control problems. Moreover, we have the following consequences.

Corollary 4.1

Assume that conditions (A), (F), (B), (G′), (J), and (L) are satisfied. Then the problem (P) has at least one optimal feasible pair on \([0,T]\) provided that

Proof

If condition (G′) holds, then the existence of feasible pairs on \([0,T]\) follows from Corollary 3.1. Similar to the proof of Theorem 4.1, we obtain an optimal feasible pair on \([0,T]\). This completes the proof. □

In particular, if \(g(x)=x_{0}\), we have the following result.

Corollary 4.2

Assume that conditions (A), (F), (B), (J), and (L) are satisfied and \(g(x)=x_{0}\). Then the problem (P) has at least one optimal control on \([0,T]\) provided that

We use the following assumptions instead of (J) and (R):

- (J′) \(J_{i}:X\rightarrow X\), \(i=1,2,\ldots,q\), are continuous and compact mappings.

- (R′) \(M\{\|x_{0}\|+\sup_{x\in W_{r}}\|g(x)\|+\|Bu\|_{L^{1}}+\sup_{x\in W_{r}}\sum_{i=1}^{q}\|J_{i}(x(t_{i}))\|+\|\varphi_{r}\|_{L^{1}}\}\leq r\).

We may apply Schauder’s second fixed point theorem to obtain the existence of PC-mild solutions. Note that H is a continuous mapping from \(W_{r}\) to \(W_{r}\). We need to prove that H is a compact mapping. In fact, we already proved that \(\Lambda_{1}\) and \(\Lambda_{2}\) are both compact operators in Theorem 3.1. The same idea can be used to prove the compactness of \(\Lambda_{3}\) due to the assumption (J′) and the Ascoli-Arzela theorem. The rest of the proof is similar to that of Theorem 4.1. So we can obtain the following result.

Corollary 4.3

Let (A), (F), (B), (G), (J′), (R′), and (L) be satisfied. Then the problem (P) has at least one optimal control on \([0,T] \).

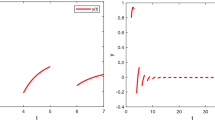

5 An example

In this section, we shall give one example to illustrate our theory.

Example 5.1

Consider the following semilinear partial differential system:

where \(\omega:[0,T]\times[0,1]\times[0,1]\times R\rightarrow R\) and \(F:[0,T]\times R\rightarrow R\).

Take \(X=L^{2}[0,1]\) with the norm \(\|\cdot\|_{2}\) and consider the operator \(A:D(A)\subseteq X\rightarrow X\) defined by \(A=\frac{\partial^{2}}{\partial y^{2}}\) with the domain \(D(A)=\{ z\in X: z,z^{\prime} \mbox{ are absolutely continuous and }z^{\prime\prime}\in X,z(0)=z(1)=0\}\). It is well known that the operator A generates a compact semigroup \(S(t)\) on X (see [18]). Define \(x(\cdot)(y)=x(\cdot,y)\), \(B(\cdot )u(\cdot)(y)=u(\cdot,y)\). Let

Suppose that \(\omega:[0,T]\times[0,1]\times[0,1]\times R\rightarrow R\) satisfies the Carathéodory condition, that is, \(\omega (t,y,\xi,r)\) is continuous in r for a.e. \((t,y,\xi)\in [0,T]\times[0,1]\times[0,1]\), and \(\omega(t,y,\xi,r)\) is measurable in \((t,y,\xi)\) for each fixed \(r\in R\).

Further, we assume that ω satisfies:

- (i) \(|\omega(t,y,\xi,r)-\omega(t,y',\xi,r)|\leq g_{k}(t,y,y',\xi)\) for all \((t,y,\xi,r), (t,y',\xi,r)\in[0,T]\times [0,1]\times [0,1]\times R\) with \(|r|\leq k\), where \(g_{k}\in L^{1}([0,T]\times[0,1]\times[0,1]\times [0,1];R^{+})\) satisfies \(\lim_{y\rightarrow y'}\int_{0}^{1}\int_{0}^{T} g_{k}(t,y,y',\xi)\,dt\,d\xi=0\), uniformly in \(y'\in[0,1]\).

- (ii) \(|\omega(t,y,\xi,r)|\leq \frac{\delta}{T}|r|+\zeta(t,y,\xi)\) for all \(r\in R\), where \(\zeta\in L^{2}([0,T]\times[0,1]\times[0,1]; R^{+})\) and \(\delta>0\).

Now we assume that:

- (1) \(f:[0,T]\times X\rightarrow X\) is a continuous function defined by

  $$f(t,z) (y)=F\bigl(t,z(y)\bigr), \quad 0\leq t\leq T, 0\leq y\leq1. $$

  Moreover, for given \(r>0\), there exists an integrable function \(\phi_{r}: [0,T]\rightarrow R\) such that \(\|f(t,z)\|\leq \phi_{r}(t)\) for \(t\in[0, T]\), \(z\in B_{r}\).

- (2) \(g:PC([0,T];X)\rightarrow X\) is defined by

  $$g(x) (y)= \int_{0}^{1} \int_{0}^{T} \omega\bigl(t,y,\xi,x(t,\xi)\bigr)\,dt\,d\xi,\quad y\in[0,1]. $$

  From Theorem 4.2 in [19], we directly see that g is well defined and that it is a continuous and compact map by the above conditions (i) and (ii) on the function ω.

- (3) \(J_{i}:X\rightarrow X\) is a continuous function for each \(i=1,2,\ldots,q\), defined by

  $$J_{i}(x) (y)=J_{i}\bigl(x(y)\bigr). $$

  Here we take \(J_{i}(x(y))=(\alpha_{i}|x(y)|+t_{i})^{-1}\), \(\alpha _{i}>0\), \(i=1,2,\ldots,q\), \(0< t_{1}< t_{2}<\cdots<t_{q}<T\), \(y\in[0,1]\). Then \(J_{i}\) is Lipschitz continuous with constant \(h_{i}=\alpha_{i}/t_{i}^{2}\), \(i=1,2,\ldots,q\), i.e., the assumption (J) is satisfied.
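The Lipschitz constant \(h_{i}=\alpha_{i}/t_{i}^{2}\) stated in item (3) can be checked directly. In the following sketch, a and b are real numbers standing for the values \(x_{1}(y)\) and \(x_{2}(y)\); they are notation introduced only for this computation.

$$\biggl\vert \frac{1}{\alpha_{i}|a|+t_{i}}-\frac{1}{\alpha_{i}|b|+t_{i}} \biggr\vert =\frac{\alpha_{i} \vert |b|-|a| \vert }{(\alpha_{i}|a|+t_{i})(\alpha_{i}|b|+t_{i})}\leq\frac{\alpha_{i}}{t_{i}^{2}}|a-b|. $$

Applying this pointwise in \(y\in[0,1]\) and taking the \(L^{2}[0,1]\) norm gives \(\|J_{i}(x_{1})-J_{i}(x_{2})\|_{2}\leq(\alpha_{i}/t_{i}^{2})\|x_{1}-x_{2}\|_{2}\).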

Let us observe that the problem (5.1) may be reformulated as

with the cost function

where \((x^{u},u)\) is a feasible pair. If the inequality

holds, (5.2) satisfies all the assumptions given in our former theorems. Therefore our results can be used to deal with (5.2).

References

[1] Lakshmikantham, V, Bainov, DD, Simeonov, PS: Theory of Impulsive Differential Equations. World Scientific, Singapore (1989)

[2] Ahmed, NU, Teo, KL, Hou, SH: Nonlinear impulsive systems on infinite dimensional spaces. Nonlinear Anal. 54, 907-925 (2003)

[3] Ahmed, NU: Optimal feedback control for impulsive systems on the space of finitely additive measures. Publ. Math. (Debr.) 70, 371-393 (2007)

[4] Li, X, Yong, J: Optimal Control Theory for Infinite Dimensional Systems. Birkhäuser, Basel (1995)

[5] Zavalishchion, A: Impulsive dynamic systems and applications to mathematical economics. Dyn. Syst. Appl. 3, 443-449 (1994)

[6] Chang, YK, Li, WS: Solvability for impulsive neutral integro-differential equations with state-dependent delay via fractional operators. J. Optim. Theory Appl. 144, 445-459 (2010)

[7] Wei, W, Xiang, X, Peng, Y: Nonlinear impulsive integro-differential equations of mixed type and optimal controls. Optimization 55, 141-156 (2006)

[8] Ashyralyev, A, Sharifov, YA: Existence and uniqueness of solutions for nonlinear impulsive differential equations with two-point and integral boundary conditions. Adv. Differ. Equ. 2013, 173 (2013)

[9] Ashyralyev, A, Sharifov, YA: Optimal control problems for impulsive systems with integral boundary conditions. Electron. J. Differ. Equ. 2013, 80 (2013)

[10] Sharifov, YA, Mamedova, NB: Optimal control problem described by impulsive differential equations with nonlocal boundary conditions. Differ. Equ. 50, 401-409 (2014)

[11] Sharifov, YA: Optimality conditions in problems of control over systems of impulsive differential equations with nonlocal boundary conditions. Ukr. Math. J. 64, 958-970 (2012)

[12] Ahmed, NU, Xiang, X: Nonlinear uncertain systems and necessary conditions of optimality. SIAM J. Control Optim. 35, 1755-1772 (1997)

[13] Pongchalee, P, Sattayatham, P, Xiang, X: Relaxation of nonlinear impulsive controlled systems on Banach spaces. Nonlinear Anal. 68, 1570-1580 (2008)

[14] Mardanov, MJ, Sharifov, YA, Molaei, HH: Existence and uniqueness of solutions for first-order nonlinear differential equations with two-point and integral boundary conditions. Electron. J. Differ. Equ. 2014, 259 (2014)

[15] Xue, X: Semilinear nonlocal problems without the assumptions of compactness in Banach spaces. Anal. Appl. 8, 211-225 (2010)

[16] Zhu, L, Huang, Q, Li, G: Existence and asymptotic properties of solutions of nonlinear multivalued differential inclusions with nonlocal conditions. J. Math. Anal. Appl. 390, 523-534 (2012)

[17] Banas, J, Goebel, K: Measure of Noncompactness in Banach Spaces. Lecture Notes in Pure and Applied Mathematics, vol. 60. Dekker, New York (1980)

[18] Pazy, A: Semigroups of Linear Operators and Applications to Partial Differential Equations. Springer, Berlin (1983)

[19] Martin, RH: Nonlinear Operators and Differential Equations in Banach Spaces. Wiley, New York (1976)

Acknowledgements

The authors are grateful to the editor and anonymous reviewers for their valuable comments and suggestions. Moreover, this research was supported by the Natural Science Foundation of China (11201410) and the Natural Science Foundation of Jiangsu Province (BK2012260 and BK20141271).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zhu, L., Huang, Q. Nonlinear impulsive evolution equations with nonlocal conditions and optimal controls. Adv Differ Equ 2015, 378 (2015). https://doi.org/10.1186/s13662-015-0715-0

DOI: https://doi.org/10.1186/s13662-015-0715-0