Abstract

In this paper, we propose a hybrid type method which consists of a resolvent operator technique and a generalized projection onto a moving half-space for approximating a zero of a maximal monotone mapping in Banach spaces. The weak convergence of the iterative sequence generated by the algorithm is also proved. Our results extend and improve the recent ones announced by Zhang (Oper. Res. Lett. 40:564-567, 2012) and Wei and Zhou (Nonlinear Anal. 71:341-346, 2009).


1 Introduction

Let E be a Banach space with norm \(\|\cdot\|\), and \(E^{*}\) be the dual space of E. \(\langle\cdot,\cdot\rangle\) denotes the duality pairing of E and \(E^{*}\). Let \(M:E\rightarrow2^{E^{*}}\) be a maximal monotone mapping. We consider the following problem: Find \(x\in E\) such that

\[0\in M(x).\tag{1.1}\]

This is the zero point problem of a maximal monotone mapping. We denote the set of solutions of Problem (1.1) by \(VI(E,M)\) and suppose \(VI(E,M)\neq\emptyset\).

Problem (1.1) plays an important role in optimization, since convex minimization problems and variational inequality problems can be reduced to it. Many iterative algorithms for approximating a solution of Problem (1.1) in various settings have been constructed (see [1–11] and the references therein).
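A minimal illustration of this reduction, under our own choice of data: take E = ℝ (a Hilbert space, so J is the identity) and \(M=\partial f\), the subdifferential of the convex function \(f(x)=|x|\). Then \(0\in M(x)\) holds exactly at the minimizer \(x=0\), and the resolvent \((I+\lambda M)^{-1}\) is the soft-thresholding map. The sketch below runs the classical proximal point iteration \(x_{k+1}=(I+\lambda M)^{-1}x_{k}\) of [1] in this setting; the function names are hypothetical.

```python
def soft_threshold(x: float, lam: float) -> float:
    """Resolvent (I + lam * d|.|)^{-1} of the subdifferential of f(x) = |x| on R."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


def proximal_point(x0: float, lam: float, n_steps: int) -> list[float]:
    """Iterate x_{k+1} = (I + lam * M)^{-1} x_k with M = d|.| (a zero of M minimizes |x|)."""
    xs = [x0]
    for _ in range(n_steps):
        xs.append(soft_threshold(xs[-1], lam))
    return xs


iterates = proximal_point(x0=2.5, lam=1.0, n_steps=4)
print(iterates)  # [2.5, 1.5, 0.5, 0.0, 0.0]
```

The iterates reach the unique zero of M, which is the minimizer of f.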

Recently, in [9], Wei and Zhou proposed the following iterative algorithm.

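Algorithm 1.1

\[
\begin{cases}
x_{0}\in E,\\
y_{n}=(J+r_{n}M)^{-1}J(x_{n}+e_{n}),\\
z_{n}=J^{-1}(\alpha_{n}Jx_{n}+(1-\alpha_{n})Jy_{n}),\\
H_{n}=\{v\in E:\phi(v,z_{n})\leq\alpha_{n}\phi(v,x_{n})+(1-\alpha_{n})\phi(v,x_{n}+e_{n})\},\\
W_{n}=\{v\in E:\langle v-x_{n},Jx_{0}-Jx_{n}\rangle\leq0\},\\
x_{n+1}=\Pi_{H_{n}\cap W_{n}}(x_{0}),\quad n\geq0,
\end{cases}
\tag{1.2}
\]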

where \(\{\alpha_{n}\}\subset[0,1]\) with \(\alpha_{n}\leq1-\delta\) for some \(\delta\in(0,1)\), \(\{r_{n}\}\subset(0,+\infty)\) with \(\inf_{n\geq 0}r_{n}>0\), and the error sequence \(\{e_{n}\}\subset E\) satisfies \(\|e_{n}\|\rightarrow0\) as \(n\rightarrow\infty\). They proved that the sequence \(\{x_{n}\}\) generated by (1.2) converges strongly to \(\Pi_{VI(E,M)}x_{0}\).

We note that each iteration of Algorithm 1.1 requires the generalized projection of \(x_{0}\) onto \(H_{n}\cap W_{n}\). If \(H_{n}\cap W_{n}\) is a half-space, then this projection is easily computed. However, \(H_{n}\cap W_{n}\) need not be a half-space, even though \(H_{n}\) and \(W_{n}\) both are. If \(H_{n}\cap W_{n}\) is a general closed convex set, then computing the point of minimal generalized distance to \(x_{0}\) is not easy, which might seriously affect the efficiency of Algorithm 1.1.
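To make the contrast concrete in the simplest case, consider the Hilbert space ℝ^n, where the generalized projection coincides with the metric projection. The projection onto a single half-space \(\{v:\langle a,v\rangle\leq b\}\) has a closed form, whereas the projection onto the intersection of two half-spaces generally needs a case analysis or an inner iterative method. The sketch below uses Dykstra's alternating projection algorithm for the intersection; this is our illustrative choice and not part of Algorithm 1.1, and the helper names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def project_halfspace(x, a, b):
    """Closed-form metric projection of x onto {v : <a, v> <= b} in R^n."""
    excess = dot(a, x) - b
    if excess <= 0:
        return list(x)
    scale = excess / dot(a, a)
    return [xi - scale * ai for xi, ai in zip(x, a)]


def project_two_halfspaces(x, h1, h2, n_iter=200):
    """Approximate projection of x onto h1 cap h2 by Dykstra's algorithm.

    Each h is a pair (a, b) describing {v : <a, v> <= b}. Unlike the single
    half-space case, no closed form is used: an inner loop is needed.
    """
    p = [0.0] * len(x)  # Dykstra correction term for h1
    q = [0.0] * len(x)  # Dykstra correction term for h2
    z = list(x)
    for _ in range(n_iter):
        y = project_halfspace([zi + pi for zi, pi in zip(z, p)], *h1)
        p = [zi + pi - yi for zi, pi, yi in zip(z, p, y)]
        z = project_halfspace([yi + qi for yi, qi in zip(y, q)], *h2)
        q = [yi + qi - zi for yi, qi, zi in zip(y, q, z)]
    return z


A = ([1.0, 0.0], 0.0)  # {v : v_1 <= 0}
B = ([1.0, 1.0], 0.0)  # {v : v_1 + v_2 <= 0}
print(project_halfspace([2.0, 1.0], *A))  # [0.0, 1.0]
print(project_two_halfspaces([1.0, 1.0], A, B))
```

For the point (1, 1), the exact projection onto the intersection is (0, 0), which the Dykstra iterates approach. Plain alternating projections without the correction terms would stop at (−0.5, 0.5), a point of the intersection that is not the nearest one.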

To remedy this drawback, we construct in this paper a new iterative algorithm based on the idea of [5], as follows.

In [5], Zhang proposed a modified proximal point algorithm with errors for approximating a solution of Problem (1.1) in Hilbert spaces. More precisely, he proposed Algorithm 1.2 and proved Theorems 1.1 and 1.2 as follows.

Algorithm 1.2

(i.e. Algorithm 2.1 of [5])

Step 0. Select an initial \(x_{0}\in\mathscr{H}\) (a Hilbert space) and set \(k=0\).

Step 1. Find \(y_{k}\in\mathscr{H}\) such that

\[x_{k}+e_{k}\in y_{k}+\lambda_{k}M(y_{k}),\quad\text{i.e.,}\quad y_{k}=J_{k}(x_{k}+e_{k}),\]

where \(J_{k}=(I+\lambda_{k}M)^{-1}\) is the resolvent operator, the positive sequence \(\{\lambda_{k}\}\) satisfies \(\alpha:=\inf_{k\geq0}\lambda_{k}>0\) and \(\{e_{k}\}\) is an error sequence.

Step 2. Set \(K=\{z\in\mathscr{H}:\langle x_{k}-y_{k}+e_{k},z-y_{k}\rangle\leq0 \}\) and

where \(P_{K}\) denotes the metric projection from \(\mathscr{H}\) onto K and \(\{\beta_{k}\}_{k=0}^{+\infty}\subset(0,1]\) and \(\{\rho_{k}\}_{k=0}^{+\infty}\subset[0,2)\) are real sequences.

Theorem 1.1

(i.e. Theorem 2.1 of [5])

Let \(\{ x_{k}\}\) be the sequence generated by Algorithm 1.2. If

(i) \(\|e_{k}\|\leq\eta_{k}\|x_{k}-y_{k}\|\) for \(\eta_{k}\geq0\) with \(\sum_{k=0}^{\infty}\eta_{k}^{2}<+\infty\),

(ii) \(\{\beta_{k}\}_{k=0}^{+\infty}\subset[c,d]\) for some \(c,d\in(0,1)\),

(iii) \(0<\inf_{k\geq0}\rho_{k}\leq\sup_{k\geq0}\rho_{k}<2\),

then the sequence \(\{x_{k}\}\) converges weakly to a solution of Problem (1.1) in Hilbert spaces.

Theorem 1.2

(i.e. Theorem 2.3 of [5])

Let \(\{ x_{k}\}\) be the sequence generated by Algorithm 1.2 for \(\rho_{k}=0\). If

(i) \(\lim_{k\rightarrow\infty}\|e_{k}\|=0\),

(ii) \(\{\beta_{k}\}_{k=0}^{+\infty}\subset[c,1]\) for some \(c>0\),

then the sequence \(\{x_{k}\}\) converges weakly to a solution of Problem (1.1) in Hilbert spaces.

We note that the set K is a half-space, and hence Algorithm 1.2 is easier to execute than Algorithm 1.1. However, since the metric projection relies on the inner product structure of Hilbert spaces, Algorithm 1.2 cannot be applied to Problem (1.1) in Banach spaces.

However, many important problems arising in applications are naturally posed in Banach spaces. For example, the maximal monotone operator associated with an elliptic boundary value problem has a Sobolev space \(W^{m,p}(\Omega)\) as its natural domain of definition [12]. Therefore, it is meaningful to consider Problem (1.1) in Banach spaces. Motivated and inspired by Algorithms 1.1 and 1.2, the purpose of this paper is to construct a new iterative algorithm for approximating a solution of Problem (1.1) in Banach spaces. In this algorithm, following the idea of Algorithm 1.2, we replace the generalized projection onto \(H_{n}\cap W_{n}\) used in Algorithm 1.1 by a generalized projection onto an explicitly constructed half-space. This remedies the drawback of Algorithm 1.1 mentioned above.

2 Preliminaries

In the sequel, we use \(x_{n}\rightarrow x\) and \(x_{n}\rightharpoonup x\) to denote the strong convergence and weak convergence of the sequence \(\{x_{n}\}\) in E to x, respectively.

Let \(J:E\rightarrow2^{E^{*}}\) be the normalized duality mapping defined by

\[J(x)=\{f\in E^{*}:\langle x,f\rangle=\|x\|^{2}=\|f\|^{2}\},\quad x\in E.\]

It is well known that if E is smooth, then J is single-valued, and if E is uniformly smooth, then J is uniformly norm-to-norm continuous on bounded subsets of E. We still denote the single-valued duality mapping by J. Recall that if E is smooth, strictly convex, and reflexive, then the duality mapping J is strictly monotone, single-valued, one-to-one, and onto; for more details, see [7].
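For a concrete non-Hilbert example (our choice, not from the text), take E = ℝ^n with the p-norm, \(1<p<\infty\), which is uniformly convex and uniformly smooth. Its normalized duality mapping is single-valued and given componentwise by \((Jx)_{i}=\|x\|_{p}^{2-p}|x_{i}|^{p-1}\operatorname{sign}(x_{i})\), an element of the dual space \((\mathbb{R}^{n},\|\cdot\|_{q})\) with \(1/p+1/q=1\). The sketch evaluates this formula and checks the two defining identities numerically; the function names are hypothetical.

```python
import math


def p_norm(x, p):
    return sum(abs(t) ** p for t in x) ** (1.0 / p)


def duality_map(x, p):
    """Normalized duality mapping J of (R^n, ||.||_p), 1 < p < inf.

    (Jx)_i = ||x||_p^(2-p) * |x_i|^(p-1) * sign(x_i); the result lives in the
    dual space (R^n, ||.||_q) with 1/p + 1/q = 1.
    """
    nrm = p_norm(x, p)
    if nrm == 0.0:
        return [0.0] * len(x)
    return [nrm ** (2 - p) * abs(t) ** (p - 1) * math.copysign(1.0, t) for t in x]


p = 3.0
q = p / (p - 1)
x = [1.0, -2.0, 0.5]
jx = duality_map(x, p)
pairing = sum(a * b for a, b in zip(x, jx))
print(math.isclose(pairing, p_norm(x, p) ** 2))   # True: <x, Jx> = ||x||^2
print(math.isclose(p_norm(jx, q), p_norm(x, p)))  # True: ||Jx||_q = ||x||_p
```

For p = 2 the formula reduces to the identity, matching the Hilbert-space case.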

The duality mapping J from a smooth Banach space E into \(E^{*}\) is said to be weakly sequentially continuous if \(x_{n}\rightharpoonup x\) implies \(Jx_{n}\rightharpoonup Jx\); see [6] and the references therein.

Definition 2.1

([13])

A multi-valued operator \(M:E\rightarrow2^{E^{*}}\) with domain \(D(M)=\{z\in E:Mz\neq\emptyset\}\) and range \(R(M)=\bigcup\{Mz:z\in D(M)\}\) is said to be

(i) monotone if \(\langle x_{1}-x_{2},u_{1}-u_{2}\rangle\geq0\) for each \(x_{i}\in D(M)\) and \(u_{i}\in M(x_{i})\), \(i = 1,2\);

(ii) maximal monotone if M is monotone and its graph \(G(M)=\{(x,u):u\in Mx\}\) is not properly contained in the graph of any other monotone operator. It is well known that a monotone mapping M is maximal if and only if, for \((x,u)\in E\times E^{*}\), \(\langle x-y,u-v\rangle\geq0\) for every \((y,v)\in G(M)\) implies \(u\in Mx\).

Let E be a smooth Banach space. Define

\[\phi(x,y)=\|x\|^{2}-2\langle x,Jy\rangle+\|y\|^{2},\quad x,y\in E.\]

Clearly, from the definition of \(\phi\), we have

(A1) \((\|x\|-\|y\|)^{2}\leq\phi(y,x)\leq(\|x\|+\|y\|)^{2}\),

(A2) \(\phi(x,y)=\phi(x,z)+\phi(z,y)+2\langle x-z,Jz-Jy\rangle\),

(A3) \(\phi(x,y)=\langle x,Jx-Jy\rangle+\langle y-x,Jy\rangle\leq\|x\|\|Jx-Jy\|+\|y-x\|\|y\|\).
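Properties (A1)–(A3) can be checked numerically in a hypothetical non-Hilbert setting, ℝ^4 with the p-norm for p = 1.5, where J is given by the componentwise formula \((Jx)_{i}=\|x\|_{p}^{2-p}|x_{i}|^{p-1}\operatorname{sign}(x_{i})\). This sketch is self-contained (it re-implements J) and tests the identities and bounds at random points; the choice of p, dimension, and sampling range are assumptions.

```python
import math
import random

P = 1.5              # hypothetical choice of p; any 1 < p < inf works
Q = P / (P - 1)      # conjugate exponent, 1/P + 1/Q = 1


def norm(x, r):
    return sum(abs(t) ** r for t in x) ** (1.0 / r)


def J(x):
    """Normalized duality mapping of (R^n, ||.||_P) into (R^n, ||.||_Q)."""
    n = norm(x, P)
    if n == 0.0:
        return [0.0] * len(x)
    return [n ** (2 - P) * abs(t) ** (P - 1) * math.copysign(1.0, t) for t in x]


def pair(x, f):
    """Duality pairing <x, f>."""
    return sum(a * b for a, b in zip(x, f))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def phi(x, y):
    """phi(x, y) = ||x||^2 - 2<x, Jy> + ||y||^2."""
    return norm(x, P) ** 2 - 2 * pair(x, J(y)) + norm(y, P) ** 2


def rand_vec(n=4):
    return [random.uniform(-3.0, 3.0) for _ in range(n)]


random.seed(0)
TOL = 1e-9
for _ in range(1000):
    x, y, z = rand_vec(), rand_vec(), rand_vec()
    nx, ny = norm(x, P), norm(y, P)
    # (A1): (||x|| - ||y||)^2 <= phi(y, x) <= (||x|| + ||y||)^2
    assert (nx - ny) ** 2 - TOL <= phi(y, x) <= (nx + ny) ** 2 + TOL
    # (A2): phi(x, y) = phi(x, z) + phi(z, y) + 2<x - z, Jz - Jy>
    a2 = phi(x, z) + phi(z, y) + 2 * pair(sub(x, z), sub(J(z), J(y)))
    assert math.isclose(phi(x, y), a2, rel_tol=TOL, abs_tol=TOL)
    # (A3): identity, then the Hoelder-type upper bound
    a3 = pair(x, sub(J(x), J(y))) + pair(sub(y, x), J(y))
    assert math.isclose(phi(x, y), a3, rel_tol=TOL, abs_tol=TOL)
    assert a3 <= nx * norm(sub(J(x), J(y)), Q) + norm(sub(y, x), P) * ny + TOL
print("(A1)-(A3) hold at all sampled points")
```

Note that \(\|Jx-Jy\|\) is measured in the dual norm \(\|\cdot\|_{q}\), which is what makes the bound in (A3) a consequence of Hölder's inequality.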

Let E be a reflexive, strictly convex, and smooth Banach space. K denotes a nonempty, closed, and convex subset of E. By Alber [14], for each \(x\in E\), there exists a unique element \(x_{0}\in K\) (denoted by \(\Pi_{K}(x)\)) such that

\[\phi(x_{0},x)=\min_{y\in K}\phi(y,x).\]

The mapping \(\Pi_{K}:E\rightarrow K\) defined by \(\Pi_{K}(x)=x_{0}\) is called the generalized projection operator from E onto K, and \(x_{0}\) is called the generalized projection of x. See [15] for some properties of \(\Pi_{K}\). If E is a Hilbert space, then \(\Pi_{K}\) coincides with the metric projection \(P_{K}\) from E onto K.

Lemma 2.1

([7])

Let E be a reflexive, strictly convex, and smooth Banach space, let C be a nonempty, closed and convex subset of E and let \(x\in E\). Then

\[\phi(y,\Pi_{C}x)+\phi(\Pi_{C}x,x)\leq\phi(y,x)\quad\text{for all }y\in C.\]

Lemma 2.2

([16])

Let C be a nonempty, closed, and convex subset of a smooth Banach space E, and let \(x\in E\). Then \(x_{0}=\Pi_{C}(x)\) if and only if

\[\langle x_{0}-y,Jx-Jx_{0}\rangle\geq0\quad\text{for all }y\in C.\]
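In a Hilbert space, J is the identity, \(\phi(x,y)=\|x-y\|^{2}\), and \(\Pi_{C}=P_{C}\), so Lemmas 2.1 and 2.2 reduce to familiar properties of the metric projection. The sketch below checks both inequalities numerically in ℝ^3 for a half-space \(C=\{v:\langle a,v\rangle\leq b\}\), the kind of set used in the projection steps of Algorithms 1.2 and 3.1; the particular a, b, and sample points are arbitrary assumptions.

```python
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def phi(x, y):
    """Hilbert-space case (J = identity): phi(x, y) = ||x - y||^2."""
    d = sub(x, y)
    return dot(d, d)


def project_halfspace(x, a, b):
    """Metric projection P_C onto the half-space C = {v : <a, v> <= b}."""
    excess = dot(a, x) - b
    if excess <= 0:
        return list(x)
    return [xi - excess / dot(a, a) * ai for xi, ai in zip(x, a)]


random.seed(1)
a, b = [1.0, -2.0, 0.5], 1.0
violations = 0
for _ in range(1000):
    x = [random.uniform(-5.0, 5.0) for _ in range(3)]
    y = project_halfspace([random.uniform(-5.0, 5.0) for _ in range(3)], a, b)  # y in C
    x0 = project_halfspace(x, a, b)                                            # x0 = P_C(x)
    if phi(y, x0) + phi(x0, x) > phi(y, x) + 1e-9:   # Lemma 2.1
        violations += 1
    if dot(sub(x0, y), sub(x, x0)) < -1e-9:          # Lemma 2.2 with J = I
        violations += 1
print(violations)  # 0
```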

Lemma 2.3

([16])

Let E be a uniformly convex and smooth Banach space. Let \(\{y_{n}\}\), \(\{z_{n}\}\) be two sequences of E. If \(\phi(y_{n},z_{n})\rightarrow0\) and either \(\{y_{n}\}\), or \(\{z_{n}\}\) is bounded, then \(y_{n}-z_{n}\rightarrow0\).

Lemma 2.4

([13])

Let E be a reflexive Banach space and λ be a positive number. If \(T:E\rightarrow2^{E^{*}}\) is a maximal monotone mapping, then \(R(J+\lambda T)=E^{*}\) and \((J+\lambda T)^{-1}:E^{*}\rightarrow E\) is a demi-continuous single-valued maximal monotone mapping.

Lemma 2.5

([9])

Let E be a real reflexive, strictly convex, and smooth Banach space, and let \(T:E\rightarrow2^{E^{*}}\) be a maximal monotone operator with \(T^{-1}0\neq\emptyset\). Then for all \(x\in E\), \(y\in T^{-1}0\), and \(r>0\), we have

\[\phi(y,Q_{r}^{T}x)+\phi(Q_{r}^{T}x,x)\leq\phi(y,x),\]

where \(Q_{r}^{T}x=(J+rT)^{-1}Jx\).

Lemma 2.6

([17])

Let \(\{a_{n}\}\) and \(\{t_{n}\}\) be two sequences of nonnegative real numbers satisfying the inequality

\[a_{n+1}\leq a_{n}+t_{n}\quad\text{for all }n\geq1.\]

If \(\sum_{n=1}^{\infty}t_{n}<\infty\), then \(\lim_{n\rightarrow\infty}a_{n}\) exists.

Lemma 2.7

Let S be a nonempty, closed, and convex subset of a uniformly convex, smooth Banach space E. Let \(\{x_{n}\}\) be a bounded sequence in E. Suppose that, for all \(u\in S\),

\[\phi(u,x_{n+1})\leq(1+\theta_{n})\phi(u,x_{n})\tag{2.2}\]

for every \(n = 1, 2,\ldots\) and \(\sum_{n=1}^{\infty}\theta_{n}<\infty\). Then \(\{\Pi_{S}(x_{n})\}\) is a Cauchy sequence.

Proof

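Put \(u_{n}:=\Pi_{S}x_{n}\) for all \(n\geq1\). For \(\omega\in S\), we have \(\phi(u_{n},x_{n})\leq\phi(\omega,x_{n})\). Thus, \(\{u_{n}\}\) is bounded. By \(u_{n+1}=\Pi_{S}{x_{n+1}}\) and \(u_{n}=\Pi_{S}{x_{n}}\in S\), we infer that

\[\phi(u_{n+1},x_{n+1})\leq\phi(u_{n},x_{n+1})\leq(1+\theta_{n})\phi(u_{n},x_{n})\leq\phi(u_{n},x_{n})+\theta_{n}M^{*},\]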

where \(M^{*}=\sup\{\phi(u_{n},x_{n}),n\geq1\}\). Since \(\sum_{n=1}^{\infty}\theta_{n}<\infty\), it follows from Lemma 2.6 that \(\lim_{n\rightarrow\infty}\phi(u_{n},x_{n})\) exists. Using (2.2), for all \(m\geq1\), we have \(\phi(u_{n},x_{n+m})\leq\prod_{i=0}^{m-1}(1+\theta_{n+i})\phi(u_{n},x_{n})\). Then we infer from \(u_{n+m}=\Pi_{S}x_{n+m}\) and \(u_{n}=\Pi_{S}x_{n}\in S\) that

\[\phi(u_{n},u_{n+m})\leq\phi(u_{n},x_{n+m})-\phi(u_{n+m},x_{n+m})\leq\prod_{i=0}^{m-1}(1+\theta_{n+i})\phi(u_{n},x_{n})-\phi(u_{n+m},x_{n+m}).\]

Therefore, \(\lim_{n\rightarrow\infty}\phi(u_{n},u_{n+m})=0\), and hence we have, from Lemma 2.3, \(\lim_{n\rightarrow\infty}\|u_{n}-u_{n+m}\|=0\), for all \(m\geq1\). Consequently, \(\{u_{n}\}\) is a Cauchy sequence. □

3 Main results

In this section, we construct a new iterative algorithm and prove two convergence theorems for two different iterative sequences generated by the new iterative algorithm for solving Problem (1.1) in Banach spaces.

Algorithm 3.1

Step 0. (Initiation) Select an arbitrary initial point \(x_{0}\in E\) and set \(k=0\), where E is a reflexive, strictly convex, and smooth Banach space.

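Step 1. (Resolvent step) Find \(y_{k}\in E\) such that

\[J(x_{k}+e_{k})\in Jy_{k}+\lambda_{k}M(y_{k}),\quad\text{i.e.,}\quad y_{k}=Q_{\lambda_{k}}^{M}(x_{k}+e_{k}),\tag{3.1}\]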

where \(Q_{\lambda_{k}}^{M}=(J+\lambda_{k}M)^{-1}J\), the positive sequence \(\{\lambda_{k}\}\) satisfies \(\alpha_{1}:=\inf_{k\geq0}\lambda_{k}>0 \) and \(\{e_{k}\}\) is an error sequence.

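Step 2. (Projection step) Set \(C_{k}=\{z\in E:\langle z-y_{k},J(x_{k}+e_{k})-J(y_{k})\rangle\leq0\}\) and

\[x_{k+1}=J^{-1}\bigl((1-\beta_{k})Jx_{k}+\beta_{k}J\Pi_{C_{k}}J^{-1}(Jx_{k}-\rho_{k}(Jx_{k}-Jy_{k}))\bigr),\tag{3.2}\]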

where \(\{\beta_{k}\}\subset(0,1]\) and \(\{\rho_{k}\}\subset[0,1]\).

Step 3. Let \(k=k+1\) and return to Step 1.
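The steps of Algorithm 3.1 can be sketched in the simplest setting, the Hilbert space ℝ^2, where J = I and \(\Pi_{C_{k}}=P_{C_{k}}\), with the hypothetical linear monotone operator \(M(x)=Ax\), \(A=\begin{pmatrix}1&-1\\1&1\end{pmatrix}\) (so \(\langle Ax,x\rangle=\|x\|^{2}\) and \(VI(E,M)=\{0\}\)). Two points are our reading rather than statements confirmed by the text: Step 1 is taken as \(y_{k}=(I+\lambda_{k}M)^{-1}(x_{k}+e_{k})\), and the update is taken as \(x_{k+1}=(1-\beta_{k})x_{k}+\beta_{k}t_{k}\) with \(t_{k}=P_{C_{k}}(x_{k}-\rho_{k}(x_{k}-y_{k}))\), the Hilbert-space form of the point \(t_{k}\) used in the proof of Theorem 3.1. All function names and parameter choices are assumptions.

```python
def resolvent(x, lam):
    """y = (I + lam*A)^{-1} x for the hypothetical monotone A = [[1, -1], [1, 1]]."""
    m11, m12, m21, m22 = 1 + lam, -lam, lam, 1 + lam  # entries of I + lam*A
    det = m11 * m22 - m12 * m21                        # (1 + lam)^2 + lam^2 > 0
    return [(m22 * x[0] - m12 * x[1]) / det,           # Cramer's rule
            (m11 * x[1] - m21 * x[0]) / det]


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def project_halfspace(w, normal, point):
    """Metric projection of w onto {z : <z - point, normal> <= 0}."""
    excess = dot([w[0] - point[0], w[1] - point[1]], normal)
    nn = dot(normal, normal)
    if excess <= 0 or nn == 0:  # w already in the set, or the set is all of R^2
        return list(w)
    return [w[i] - excess / nn * normal[i] for i in range(2)]


def algorithm_3_1_hilbert(x0, n_steps, lam, beta, rho, err=lambda k: [0.0, 0.0]):
    """Sketch of Algorithm 3.1 in R^2 (J = I); lam, beta, rho, err map k to parameters."""
    x = list(x0)
    for k in range(n_steps):
        e = err(k)
        xe = [x[0] + e[0], x[1] + e[1]]
        y = resolvent(xe, lam(k))                              # Step 1
        normal = [xe[0] - y[0], xe[1] - y[1]]                  # J(x_k + e_k) - J(y_k)
        w = [x[i] - rho(k) * (x[i] - y[i]) for i in range(2)]  # x_k - rho_k (x_k - y_k)
        t = project_halfspace(w, normal, y)                    # Step 2: t_k = P_{C_k}(w)
        x = [(1 - beta(k)) * x[i] + beta(k) * t[i] for i in range(2)]
    return x


x_final = algorithm_3_1_hilbert(
    [3.0, -4.0], 60,
    lam=lambda k: 1.0 + 1.0 / (k + 2),
    beta=lambda k: 0.8,
    rho=lambda k: 0.5,
    err=lambda k: [1.0 / (k + 1) ** 2, 0.0],
)
print(x_final)
```

With summable errors the iterates approach the unique zero (0, 0) of M; in this toy example the half-space \(C_{k}\) makes each projection a single closed-form step.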

Now we show the convergence of the iterative sequence generated by Algorithm 3.1 in the Banach space E.

Theorem 3.1

Let E be a uniformly convex, uniformly smooth Banach space whose duality mapping J is weakly sequentially continuous and \(M:E\rightarrow2^{E^{*}}\) be a maximal monotone mapping such that \(VI(E,M)\neq\emptyset\). If

and \(\liminf_{k\rightarrow\infty}\beta_{k}\rho_{k}>0\), then the iterative sequence \(\{x_{k}\}\) generated by Algorithm 3.1 converges weakly to an element \(\hat{x}\in VI(E,M)\). Further, \(\hat{x}=\lim_{k\rightarrow\infty}\Pi_{VI(E,M)}(x_{k})\).

Proof

We split the proof into four steps.

Step 1. Show that \(\{x_{k}\}\) is bounded.

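Suppose \(x^{*}\in VI(E,M)\), then we have \(0\in M(x^{*})\). From (3.1), it follows that

\[\frac{J(x_{k}+e_{k})-Jy_{k}}{\lambda_{k}}\in M(y_{k}).\]

By the monotonicity of M, we deduce that

\[\Bigl\langle y_{k}-x^{*},\frac{J(x_{k}+e_{k})-Jy_{k}}{\lambda_{k}}-0\Bigr\rangle\geq0,\]

which leads to

\[\langle x^{*}-y_{k},J(x_{k}+e_{k})-Jy_{k}\rangle\leq0,\quad\text{i.e.,}\quad x^{*}\in C_{k}.\]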

Let \(t_{k}=\Pi_{C_{k}}J^{-1}(Jx_{k}-\rho_{k}(Jx_{k}-Jy_{k}))\). It follows from (3.2) that

By Lemmas 2.1 and 2.5, we deduce that

where \(M=\sup_{k\geq0}(\beta_{k}\rho_{k})(2\|x^{*}\|+1)\). Since \(\sum_{k=0}^{\infty}\eta_{k} <\infty\), (3.7) implies that \(\lim_{k\rightarrow\infty}\phi(x^{*},x_{k})\) exists by Lemma 2.6. Hence, \(\{x_{k}\}\) is bounded. From (3.3), we have \(\|x_{k}+e_{k}\|^{2}\leq\|x_{k}\|^{2}+\eta_{k}\), and hence \(\{x_{k}+e_{k}\}\) is also bounded.

Step 2. Show that \(\{x_{k}\}\) and \(\{y_{k}\}\) have the same weak accumulation points.

It follows from (3.7) that

and hence

Since \(\liminf_{k\rightarrow\infty}\beta_{k}\rho_{k}>0\), \(\lim_{k\rightarrow\infty}\phi(x^{*},x_{k})\) exists and \(\sum_{k=0}^{\infty}\eta_{k} <\infty\), we have

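By Lemma 2.3, we deduce that

\[\lim_{k\rightarrow\infty}\|(x_{k}+e_{k})-y_{k}\|=0.\]

Since J is uniformly norm-to-norm continuous on bounded sets, we have

\[\lim_{k\rightarrow\infty}\|J(x_{k}+e_{k})-Jy_{k}\|=0.\tag{3.10}\]

It follows from (3.3) that

\[\lim_{k\rightarrow\infty}\|J(x_{k}+e_{k})-Jx_{k}\|=0.\tag{3.11}\]

Since \(\|Jy_{k}-Jx_{k}\|\leq\|Jy_{k}-J(x_{k}+e_{k})\|+\|J(x_{k}+e_{k})-Jx_{k}\|\), it follows from (3.10) and (3.11) that

\[\lim_{k\rightarrow\infty}\|Jy_{k}-Jx_{k}\|=0.\tag{3.12}\]

Since \(J^{-1}\) is also uniformly norm-to-norm continuous on bounded sets, we have

\[\lim_{k\rightarrow\infty}\|y_{k}-x_{k}\|=0.\tag{3.13}\]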

Consequently, we see that \(\{x_{k}\}\) and \(\{y_{k}\}\) have the same weak accumulation points.

Step 3. Show that each weak accumulation point of the sequence \(\{x_{k}\}\) is a solution of Problem (1.1).

Since \(\{x_{k}\}\) is bounded, let us suppose x̂ is a weak accumulation point of \(\{x_{k}\}\). Hence, we can extract a subsequence that weakly converges to x̂. Without loss of generality, let us suppose that \(x_{k}\rightharpoonup\hat{x}\) as \(k\rightarrow\infty\). Then from (3.13), we have \(y_{k}\rightharpoonup\hat{x}\) as \(k\rightarrow\infty\).

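For any \((v,u)\in G(M)\), it follows from the monotonicity of M and (3.1) that

\[\Bigl\langle y_{k}-v,\frac{J(x_{k}+e_{k})-Jy_{k}}{\lambda_{k}}-u\Bigr\rangle\geq0,\]

which implies that

\[\langle y_{k}-v,u\rangle\leq\frac{1}{\lambda_{k}}\langle y_{k}-v,J(x_{k}+e_{k})-Jy_{k}\rangle\leq\frac{1}{\alpha_{1}}\|y_{k}-v\|\,\|J(x_{k}+e_{k})-Jy_{k}\|.\tag{3.14}\]

Taking the limits in (3.14), by (3.10), (3.13), and the boundedness of \(\{y_{k}\}\), we have

\[\langle\hat{x}-v,u\rangle\leq0,\quad\text{i.e.,}\quad\langle\hat{x}-v,0-u\rangle\geq0.\]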

Since M is maximal monotone, by the arbitrariness of \((v,u)\in G(M)\), we conclude that \((\hat{x},0)\in G(M)\) and hence x̂ is a solution of Problem (1.1), i.e., \(\hat{x}\in VI(E,M)\).

Step 4. Show that \(x_{k}\rightharpoonup\hat{x}\), as \(k\rightarrow\infty\) and \(\hat{x}=\lim_{k\rightarrow\infty}\Pi_{VI(E,M)}(x_{k})\).

Put \(u_{k}=\Pi_{VI(E,M)}(x_{k})\). Since \(\hat{x}\in VI(E,M)\), we have \(\phi(u_{k},x_{k})\leq\phi(\hat{x},x_{k})\), which implies that \(\{u_{k}\}\) is bounded. Since \(u_{k}\in VI(E,M)\), we have from (3.7)

where \(M^{*}=\sup_{k\geq0}(\frac{M}{\phi(u_{k},x_{k})})\). Since \(\sum_{k=0}^{\infty}\eta_{k} <\infty\), it follows from Lemma 2.7 that \(\{u_{k}\}\) is a Cauchy sequence. Since \(VI(E,M)\) is closed, we see that \(\{u_{k}\}\) converges strongly to \(z\in VI(E,M)\). By the uniform smoothness of E, we also have

\[\lim_{k\rightarrow\infty}\|Ju_{k}-Jz\|=0.\tag{3.16}\]

On the other hand, it follows from \(\hat{x}\in VI(E,M)\), \(u_{k}=\Pi_{VI(E,M)}x_{k}\), and Lemma 2.2 that

\[\langle u_{k}-\hat{x},Jx_{k}-Ju_{k}\rangle\geq0.\]

Letting \(k\rightarrow\infty\), it follows from the weak sequential continuity of J and (3.16) that \(\langle z-\hat{x},J\hat{x}-Jz\rangle\geq0\), that is, \(\langle z-\hat{x},Jz-J\hat{x}\rangle\leq0\). Since E is strictly convex, J is strictly monotone, and hence \(z=\hat{x}\). Therefore, \(\{x_{k}\}\) converges weakly to \(\hat{x}\in VI(E,M)\), where \(\hat{x}=\lim_{k\rightarrow\infty}\Pi_{VI(E,M)}x_{k}\). □

Next, we show the convergence of the iterative sequence when \(\rho_{k}=0\).

Theorem 3.2

Let E be a uniformly convex, uniformly smooth Banach space whose duality mapping J is weakly sequentially continuous and \(M:E\rightarrow2^{E^{*}}\) be a maximal monotone mapping such that \(VI(E,M)\neq\emptyset\). Let \(\{x_{k}\}\) be the sequence generated by Algorithm 3.1 for \(\rho_{k}=0\).

If \(\{\lambda_{k}\}\), \(\{\beta_{k}\}\), and \(\{e_{k}\}\) satisfy \(\inf_{k\geq0}\lambda_{k}=\alpha_{1}>0\), \(0<\beta_{k}\leq 1\), \(\liminf_{k\rightarrow\infty}\beta_{k}>0\), and \(\lim_{k\rightarrow\infty}\|e_{k}\|=0\), then the iterative sequence \(\{x_{k}\}\) converges weakly to an element \(\hat{x}\in VI(E,M)\). Further, \(\hat{x}=\lim_{k\rightarrow\infty}\Pi_{VI(E,M)}(x_{k})\).

Proof

Let \(x^{*}\in VI(E,M)\); then \(0\in M(x^{*})\). As in the proof of Theorem 3.1, we have

\[x^{*}\in C_{k}\quad\text{for all }k\geq0.\]

Let \(t_{k}=\Pi_{C_{k}}(x_{k})\). It follows from (3.2) for \(\rho_{k}=0\) that

By Lemma 2.1, we deduce that

\[\phi(x^{*},t_{k})\leq\phi(x^{*},x_{k})-\phi(t_{k},x_{k}).\tag{3.18}\]

From (3.17) and (3.18), we have

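which implies that \(\lim_{k\rightarrow\infty}\phi(x^{*},x_{k})\) exists and hence \(\{x_{k}\}\) is bounded. Consequently, \(\{x_{k}+e_{k}\}\) is also bounded. Since \(\liminf_{k\rightarrow\infty}\beta_{k}>0\), from (3.19), we have

\[\lim_{k\rightarrow\infty}\phi(t_{k},x_{k})=0.\tag{3.20}\]

Thus, it follows from Lemma 2.3 that

\[\lim_{k\rightarrow\infty}\|x_{k}-t_{k}\|=0,\tag{3.21}\]

and hence \(\{t_{k}\}\) is bounded. Since \(\|(x_{k}+e_{k})-t_{k}\|\leq\|x_{k}-t_{k}\|+\|e_{k}\|\), we have from \(\lim_{k\rightarrow\infty}\|e_{k}\|=0\) and (3.21)

\[\lim_{k\rightarrow\infty}\|(x_{k}+e_{k})-t_{k}\|=0.\]

Hence, since J is uniformly norm-to-norm continuous on bounded sets, it follows from (A3) that

\[\lim_{k\rightarrow\infty}\phi(t_{k},x_{k}+e_{k})=0.\tag{3.22}\]

From the definition of \(C_{k}\) and Lemma 2.2, we have \(y_{k}=\Pi_{C_{k}}(x_{k}+e_{k})\). Hence, we obtain from Lemma 2.1 and \(t_{k}=\Pi_{C_{k}}(x_{k})\in C_{k}\)

\[\phi(t_{k},y_{k})+\phi(y_{k},x_{k}+e_{k})\leq\phi(t_{k},x_{k}+e_{k}).\tag{3.23}\]

It follows from (3.22) and (3.23) that

\[\lim_{k\rightarrow\infty}\phi(t_{k},y_{k})=0.\tag{3.24}\]

From Lemma 2.3, we obtain

\[\lim_{k\rightarrow\infty}\|t_{k}-y_{k}\|=0.\tag{3.25}\]

Since \(\|y_{k}-x_{k}\|\leq\|y_{k}-t_{k}\|+\|t_{k}-x_{k}\|\), we have from (3.25) and (3.21)

\[\lim_{k\rightarrow\infty}\|y_{k}-x_{k}\|=0.\tag{3.26}\]

Since \(\|x_{k}+e_{k}-y_{k}\|\leq\|x_{k}+e_{k}-x_{k}\|+\|x_{k}-y_{k}\|\), it follows from \(\lim_{k\rightarrow\infty}\|e_{k}\|=0\) and (3.26) that

\[\lim_{k\rightarrow\infty}\|x_{k}+e_{k}-y_{k}\|=0.\tag{3.27}\]

Since J is uniformly norm-to-norm continuous on bounded sets, we have

\[\lim_{k\rightarrow\infty}\|J(x_{k}+e_{k})-Jy_{k}\|=0.\tag{3.28}\]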

Arguing as in Steps 3 and 4 of the proof of Theorem 3.1, we obtain the desired conclusion; we omit the details. □

Remark 3.1

Theorem 3.2 differs from the recent results of [5, 9] and [18] in the following respects:

(i) When \(E=\mathscr{H}\) (a Hilbert space), Theorem 3.2 reduces to Theorem 2.3 of Zhang [5] (i.e., Theorem 1.2 of this paper). That is, Theorem 3.2 extends Theorem 2.3 of Zhang [5] from Hilbert spaces to more general Banach spaces. Furthermore, the weak limit of \(\{x_{k}\}\) is identified as \(\lim_{k\rightarrow\infty}\Pi_{VI(E,M)}(x_{k})\), which is more specific than the conclusion of Theorem 2.3 of Zhang [5].

(ii) In Algorithm 3.1, the set \(C_{k}\) is a half-space, so the generalized projection onto it is easier to compute than the generalized projection onto a general closed convex set such as \(H_{n}\cap W_{n}\) or \(H_{n}\) constructed in [9, 18] to obtain the next iterate. Hence, Algorithm 3.1 improves Algorithm 1.1 and the algorithms in [18] from a numerical point of view.

In the following, we give a simple example to compare Algorithm 1.1 constructed in [9] with Algorithm 3.1 for \(\rho_{k}=0\).

Example 3.1

Let \(E=\mathbb{R}\), \(M:\mathbb{R}\rightarrow\mathbb{R}\) and \(M(x)=x\). It is obvious that M is maximal monotone and \(VI(E,M)=\{0\}\neq\emptyset\).

The numerical experiment result of Algorithm 1.1

Take \(r_{k}=1+\frac{1}{k+2}\), \(\alpha_{k}=\frac{1}{2}-\frac{1}{k+2}\), \(e_{k}=0\), for all \(k\geq0\), and initial point \(x_{0}=-\frac{1}{3}\in \mathbb{R}\). Then the sequence \(\{x_{k}\}\) generated by Algorithm 1.1 is given by

\[x_{k+1}=\frac{7k^{2}+29k+28}{8k^{2}+36k+40}x_{k},\quad k\geq0,\tag{3.29}\]

and \(x_{k}\rightarrow0\) as \(k\rightarrow\infty\), where \(0\in VI(E,M)\).

Proof

By Algorithm 1.1, we have \(y_{0}=\frac{1}{1+r_{0}}x_{0}=-\frac{2}{15}\), \(z_{0}=-\frac{2}{15}>x_{0}\), \(H_{0}=\{v\in \mathbb{R}:\|v-z_{0}\|\leq\|v-x_{0}\|\}=[z_{0}-(\frac{z_{0}-x_{0}}{2}),+\infty )=[-\frac{7}{30},+\infty)\), \(W_{0}=\{v\in\mathbb{R}:\langle v-x_{0},x_{0}-x_{0}\rangle\leq0\}=\mathbb{R}\). Therefore, \(H_{0}\cap W_{0}=H_{0}=[-\frac{7}{30},+\infty)\) and \(x_{1}=P_{[-\frac{7}{30},+\infty)}(-\frac{1}{3})=-\frac{7}{30}=\frac {7\cdot0^{2}+29\cdot0+28}{8\cdot0^{2}+36\cdot0+40}x_{0}\). Suppose that \(x_{k+1}=\frac{7k^{2}+29k+28}{8k^{2}+36k+40}x_{k}\). By Algorithm 1.1, \(y_{k+1}=\frac{k+3}{2k+7}x_{k+1}\), and hence

\[z_{k+1}=\alpha_{k+1}x_{k+1}+(1-\alpha_{k+1})y_{k+1}=\frac{3k^{2}+17k+22}{4k^{2}+26k+42}x_{k+1}>x_{k+1}.\tag{3.30}\]

\(H_{k+1}=\{v\in \mathbb{R}:\|v-z_{k+1}\|\leq\|v-x_{k+1}\|\}=[z_{k+1}-\frac {z_{k+1}-x_{k+1}}{2},+\infty)\subset[x_{k+1},+\infty)\), \(W_{k+1}=\{v\in\mathbb{R}:\langle v-x_{k+1},x_{0}-x_{k+1}\rangle\leq0\}=[x_{k+1},+\infty)\). Therefore, \(H_{k+1}\cap W_{k+1}=H_{k+1}=[z_{k+1}-\frac{z_{k+1}-x_{k+1}}{2},+\infty)\) and

\[x_{k+2}=P_{H_{k+1}\cap W_{k+1}}(x_{0})=z_{k+1}-\frac{z_{k+1}-x_{k+1}}{2}=\frac{z_{k+1}+x_{k+1}}{2}.\tag{3.31}\]

Combining (3.30) with (3.31), we obtain \(x_{k+2}=\frac{7(k+1)^{2}+29(k+1)+28}{8(k+1)^{2}+36(k+1)+40}x_{k+1}\). By induction, (3.29) holds. □
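The closed form \(x_{k+1}=\frac{7k^{2}+29k+28}{8k^{2}+36k+40}x_{k}\) can be double-checked with exact rational arithmetic. The sketch below runs Algorithm 1.1 on E = ℝ with M(x) = x and the parameters of Example 3.1, using the real-line forms \(y_{n}=x_{n}/(1+r_{n})\) and \(z_{n}=\alpha_{n}x_{n}+(1-\alpha_{n})y_{n}\) (our reading of the Hilbert-space case of Algorithm 1.1, consistent with the values of \(y_{0}\) and \(z_{0}\) in the proof), and represents the half-spaces \(H_{n}\) and \(W_{n}\) of ℝ as intervals. The helper names are hypothetical.

```python
from fractions import Fraction


def half_line(a, b):
    """The set {v in R : a*v <= b} as an interval (lo, hi); None means unbounded."""
    if a > 0:
        return (None, b / a)
    if a < 0:
        return (b / a, None)
    return (None, None)  # a == 0 (here also b == 0), so the set is all of R


def intersect(i1, i2):
    los = [v for v in (i1[0], i2[0]) if v is not None]
    his = [v for v in (i1[1], i2[1]) if v is not None]
    return (max(los) if los else None, min(his) if his else None)


def project(p, interval):
    """Metric projection of the real number p onto a closed interval."""
    lo, hi = interval
    if lo is not None and p < lo:
        return lo
    if hi is not None and p > hi:
        return hi
    return p


def algorithm_1_1_example(x0, n_steps):
    """Algorithm 1.1 on E = R with M(x) = x and the parameters of Example 3.1."""
    xs = [x0]
    x = x0
    for n in range(n_steps):
        r = 1 + Fraction(1, n + 2)
        alpha = Fraction(1, 2) - Fraction(1, n + 2)
        y = x / (1 + r)                               # resolvent of M(x) = x, e_n = 0
        z = alpha * x + (1 - alpha) * y
        H = half_line(2 * (x - z), x * x - z * z)     # |v - z| <= |v - x|
        W = half_line(x0 - x, (x0 - x) * x)           # (v - x)(x0 - x) <= 0
        x = project(x0, intersect(H, W))              # x_{n+1} = P onto H_n cap W_n of x0
        xs.append(x)
    return xs


xs = algorithm_1_1_example(Fraction(-1, 3), 20)
print([str(v) for v in xs[:4]])  # ['-1/3', '-7/30', '-8/45', '-19/135']
```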

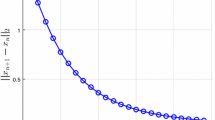

Next, Table 1 reports the iterates of the sequence \(\{x_{k}\}\) generated from the initial point \(x_{0}=-\frac{1}{3}\). From these results, we can see that \(\{x_{k}\}\) converges to 0.

The numerical experiment result of Algorithm 3.1 for \(\rho _{k}=0\)

Take \(\lambda_{k}=1+\frac{1}{k+2}\), \(\beta_{k}=1\), \(e_{k}=0\) for all \(k\geq0\), and initial point \(x_{0}=-\frac{1}{3}\in \mathbb{R}\). Then the sequence \(\{x_{k}\}\) generated by Algorithm 3.1 is given by

\[x_{k+1}=\frac{k+2}{2k+5}x_{k},\quad k\geq0,\tag{3.32}\]

and \(x_{k}\rightarrow0\) as \(k\rightarrow\infty\), where \(0\in VI(E,M)\).

Proof

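By (3.1),

\[y_{0}=\frac{x_{0}}{1+\lambda_{0}}=\frac{2}{5}x_{0}=-\frac{2}{15}.\]

By Algorithm 3.1, we have \(C_{0}=\{z\in\mathbb{R}:\langle z-y_{0},x_{0}-y_{0}\rangle\leq0\}=[y_{0},+\infty)\). By (3.2), \(x_{1}=P_{C_{0}}(x_{0})=y_{0}=-\frac{2}{15}>x_{0}\). That is,

\[x_{1}=\frac{0+2}{2\cdot0+5}x_{0}.\]

Suppose that

\[x_{k+1}=\frac{k+2}{2k+5}x_{k}.\]

By (3.1),

\[y_{k+1}=\frac{x_{k+1}}{1+\lambda_{k+1}}=\frac{k+3}{2k+7}x_{k+1}>x_{k+1}.\tag{3.34}\]

It follows from Algorithm 3.1 and (3.34) that \(C_{k+1}=\{z\in E:\langle z-y_{k+1},x_{k+1}-y_{k+1}\rangle\leq0\}=[y_{k+1},+\infty)\), and hence

\[x_{k+2}=P_{C_{k+1}}(x_{k+1})=y_{k+1}=\frac{(k+1)+2}{2(k+1)+5}x_{k+1}.\]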

By induction, (3.32) holds. □
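A short exact computation reproduces this sequence as well. The sketch takes \(\rho_{k}=0\), \(e_{k}=0\), and \(\beta_{k}=1\), so that \(x_{k+1}=P_{C_{k}}(x_{k})\) as in the proof above (the value \(\beta_{k}=1\) is our reading of the parameter choice), and checks \(x_{k+1}=\frac{k+2}{2k+5}x_{k}\), which follows from \(y_{k}=x_{k}/(1+\lambda_{k})\) with \(\lambda_{k}=1+\frac{1}{k+2}\). The function name is hypothetical.

```python
from fractions import Fraction


def algorithm_3_1_example(x0, n_steps):
    """Algorithm 3.1 on E = R with M(x) = x, rho_k = 0, e_k = 0, beta_k = 1."""
    xs = [x0]
    x = x0
    for k in range(n_steps):
        lam = 1 + Fraction(1, k + 2)
        y = x / (1 + lam)      # (3.1): resolvent of M(x) = x
        d = x - y              # C_k = {z : (z - y) * d <= 0}
        if d < 0:              # C_k = [y, +inf)
            x = max(x, y)
        elif d > 0:            # C_k = (-inf, y]
            x = min(x, y)
        # d == 0: C_k = R, so the projection leaves x unchanged
        xs.append(x)
    return xs


xs = algorithm_3_1_example(Fraction(-1, 3), 20)
print([str(v) for v in xs[:4]])  # ['-1/3', '-2/15', '-2/35', '-8/315']
```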

Next, Table 2 reports the iterates of the sequence \(\{x_{k}\}\) generated from the initial point \(x_{0}=-\frac{1}{3}\). From these results, we can see that \(\{x_{k}\}\) converges to 0.

Remark 3.2

Comparing Table 1 with Table 2, we see that, in this example, Algorithm 3.1 with \(\rho_{k}=0\) converges faster than Algorithm 1.1 constructed in [9].
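The comparison can also be read off from the ratios of consecutive iterates in Example 3.1. For Algorithm 1.1 the ratio \(\frac{7k^{2}+29k+28}{8k^{2}+36k+40}\) tends to 7/8, while for Algorithm 3.1 (with \(\rho_{k}=0\), \(\beta_{k}=1\), \(e_{k}=0\)) the ratio \(\frac{k+2}{2k+5}\), obtained from \(y_{k}=x_{k}/(1+\lambda_{k})\), tends to 1/2. The sketch below tabulates \(|x_{k}|\) for both sequences from these ratios; it illustrates this single example only, and the function names are hypothetical.

```python
from fractions import Fraction


def iterates(ratio, x0, n):
    """Generate x_0, ..., x_n from x_{k+1} = ratio(k) * x_k."""
    xs = [x0]
    for k in range(n):
        xs.append(ratio(k) * xs[-1])
    return xs


def ratio_alg_1_1(k):
    return Fraction(7 * k * k + 29 * k + 28, 8 * k * k + 36 * k + 40)


def ratio_alg_3_1(k):
    return Fraction(k + 2, 2 * k + 5)


x0 = Fraction(-1, 3)
a = iterates(ratio_alg_1_1, x0, 20)
b = iterates(ratio_alg_3_1, x0, 20)
for k in (0, 5, 10, 20):
    print(f"k={k:2d}  |x_k| Alg 1.1 = {float(abs(a[k])):.3e}   Alg 3.1 = {float(abs(b[k])):.3e}")
```

Since \((7k^{2}+29k+28)(2k+5)>(k+2)(8k^{2}+36k+40)\) for every \(k\geq0\), the second sequence is smaller in absolute value at every step \(k\geq1\).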

Remark 3.3

In [19, 20], the authors proposed several different iterative algorithms for approximating zeros of m-accretive operators in Banach spaces. These algorithms rely on the nonexpansiveness of the resolvent operator of the m-accretive operator. Since the resolvent operator of a maximal monotone operator is not nonexpansive in general Banach spaces, these algorithms cannot be applied to Problem (1.1).

Remark 3.4

In [21], the authors established viscosity iterative algorithms for approximating a common element of the set of fixed points of a nonexpansive mapping and the set of solutions of the variational inequality for an inverse-strongly monotone mapping in Hilbert spaces by using the nonexpansiveness of the metric projection operator. However, the metric projection operator is not nonexpansive in Banach spaces. Therefore, the algorithms of [21] cannot be applied to Problem (1.1) of this paper in Banach spaces.

References

[1] Rockafellar, RT: Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 14, 877-898 (1976)

[2] Eckstein, J, Bertsekas, DP: On the Douglas-Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math. Program. 55, 293-318 (1992)

[3] He, BS, Liao, L, Yang, Z: A new approximate proximal point algorithm for maximal monotone operator. Sci. China Ser. A 46, 200-206 (2003)

[4] Yang, Z, He, BS: A relaxed approximate proximal point algorithm. Ann. Oper. Res. 133, 119-125 (2005)

[5] Zhang, QB: A modified proximal point algorithm with errors for approximating solution of the general variational inclusion. Oper. Res. Lett. 40, 564-567 (2012)

[6] Iiduka, H, Takahashi, W: Strong convergence studied by a hybrid type method for monotone operators in a Banach space. Nonlinear Anal. 68, 3679-3688 (2008)

[7] Alber, YI, Reich, S: An iterative method for solving a class of nonlinear operator equations in Banach spaces. Panam. Math. J. 4, 39-54 (1994)

[8] Kamimura, S, Takahashi, W: Strong convergence of a proximal-type algorithm in a Banach space. SIAM J. Optim. 13, 938-945 (2002)

[9] Wei, L, Zhou, HY: Strong convergence of projection scheme for zeros of maximal monotone operators. Nonlinear Anal. 71, 341-346 (2009)

[10] Solodov, MV, Svaiter, BF: Forcing strong convergence of proximal point iterations in a Hilbert space. Math. Program. 87, 189-202 (2000)

[11] Martinez-Yanes, C, Xu, HK: Strong convergence of the CQ method for fixed point iteration processes. Nonlinear Anal. 64, 2400-2411 (2006)

[12] Mosco, U: Perturbation of variational inequalities. In: Nonlinear Functional Analysis. Proc. Sympos. Pure Math., vol. XVIII, Part 1, Chicago, IL, 1968, pp. 182-194. Am. Math. Soc., Providence (1970)

[13] Pascali, D: Nonlinear Mappings of Monotone Type. Sijthoff & Noordhoff, Alphen aan den Rijn (1978)

[14] Alber, YI: Metric and generalized projection operators in Banach spaces: properties and applications. In: Kartsatos, A (ed.) Theory and Applications of Nonlinear Operators of Monotonic and Accretive Type, pp. 15-50. Dekker, New York (1996)

[15] Alber, YI, Guerre-Delabriere, S: On the projection methods for fixed point problems. Analysis 21, 17-39 (2001)

[16] Matsushita, S, Takahashi, W: A strong convergence theorem for relatively nonexpansive mappings in a Banach space. J. Approx. Theory 134, 257-266 (2005)

[17] Tan, KK, Xu, HK: Approximating fixed points of nonexpansive mapping by the Ishikawa iteration process. J. Math. Anal. Appl. 178, 301-308 (1993)

[18] Ceng, LC, Ansari, QH, Yao, JC: Hybrid proximal-type and hybrid shrinking projection algorithms for equilibrium problems, maximal monotone operators, and relatively nonexpansive mappings. Numer. Funct. Anal. Optim. 31, 763-797 (2010)

[19] Ceng, LC, Khan, AR, Ansari, QH, Yao, JC: Strong convergence of composite iterative schemes for zeros of m-accretive operators in Banach spaces. Nonlinear Anal. 70, 1830-1840 (2009)

[20] Ceng, LC, Ansari, QH, Schaible, S, Yao, JC: Hybrid viscosity approximation method for zeros of m-accretive operators in Banach spaces. Numer. Funct. Anal. Optim. 33, 142-165 (2012)

[21] Ceng, LC, Khan, AR, Ansari, QH, Yao, JC: Viscosity approximation methods for strongly positive and monotone operators. Fixed Point Theory 10, 35-71 (2009)

Acknowledgements

This research is financially supported by the National Natural Science Foundation of China (11401157). The author is grateful to the referees for their valuable comments and suggestions, which improved the contents of the article.

Additional information

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Liu, Y. Weak convergence of a hybrid type method with errors for a maximal monotone mapping in Banach spaces. J Inequal Appl 2015, 260 (2015). https://doi.org/10.1186/s13660-015-0772-7
