Abstract

This paper is concerned with asynchronous dissipative control for a class of networked time-delay Markov jump systems with event-triggered scheme and packet dropouts. To reduce communication consumption, an event-triggered transmission scheme is introduced. The phenomenon of packet dropout during the communication between the controller and the actuator is described by the Bernoulli model. The designed dissipative controller is asynchronous with the physical plant, which is described by a hidden Markov model. Based on a mode-dependent Lyapunov–Krasovskii function, a sufficient condition for the closed-loop control system to be stochastically stable and strictly \((\mu ,\vartheta ,\upsilon ){-}\gamma\)-dissipative is obtained. Furthermore, the design of dissipative controller is simplified by using matrix scaling and slack matrix techniques. Finally, a robotic arm system is used as an example to verify the effectiveness of our proposed design method.


1 Introduction

As practical engineering systems become increasingly large and complex, abrupt variations in parameters or structures often occur due to factors such as component failures, network constraints and changes in the external environment [1]. Traditional linear systems cannot describe such systems accurately. Markov jump systems (MJSs), an important class of stochastic hybrid systems, have emerged to model such behavior. Owing to their powerful modeling capability, MJSs have attracted growing attention [2, 3] and have been widely applied in many areas. For example, MJSs were applied to the highway traffic system [4]. Transient faults on power lines were modeled as a Markov chain, and the power systems were described as MJSs [5]. Furthermore, MJSs have also been widely used in economics, communication, aerospace and other fields [6,7,8].

With the combination of computer technology, communication technology and control theory, networked control systems (NCSs) are developing rapidly due to the advantages of less wiring, high reliability and information sharing. However, the introduction of a network also produces some new issues, e.g., time delay and packet dropouts. To solve these problems, a variety of approaches have been proposed [9,10,11,12,13,14,15,16,17]. In the case of packet dropouts, the works [9,10,11] investigated the fuzzy control of nonlinear MJSs, the \({H_\infty }\) filtering of networked nonlinear discrete-time systems and the output feedback control of NCSs, respectively. As for the study of networked MJSs with time delay [12,13,14,15,16], the issue of state estimation based on a sliding mode observer was addressed [13]. The stability of delayed MJSs with infinite states was analyzed, and a sufficient condition for the mean square stability of the system was given in the form of linear matrix inequalities (LMIs) [15].

On the other hand, issues like time delay and packet dropouts lead to inadequate information transmission between different nodes. In MJSs, inadequate information transmission inevitably causes asynchronous phenomena. However, in most of the available works [18,19,20,21,22], it is assumed that the controller is mode-independent or perfectly synchronous with the physical plant. The former ignores mode information; it is simple in structure and easy to implement, but introduces a great deal of conservatism. The latter requires the controller to obtain the mode information accurately and in time, which is difficult to achieve in practical systems. Recently, the asynchronous problem of MJSs has attracted more and more attention. The main asynchronous modeling approaches include the time-delay model [23], the piecewise homogeneous Markov chain model [24] and the hidden Markov model (HMM) [25,26,27,28,29]. Based on the HMM, an asynchronous state feedback \({H_\infty }\) controller for time-delay MJSs was designed [26], and the asynchronous output tracking control with incomplete premise matching for T-S fuzzy MJSs was investigated [29]. In addition, dissipative theory has been studied for a long time. Dissipativity means that the energy stored in a system is less than the energy supplied to it; that is, energy is dissipated in the system. In recent years, scholars have conducted a lot of research on dissipative control [12, 30,31,32]. Based on dissipative theory, the stability of sampled-data MJSs was studied [30], and the problem of sliding mode control for nonlinear MJSs with external disturbances was investigated [31]. For incremental dissipative control of nonlinear stochastic MJSs, sufficient conditions for the random incremental dissipative property were given by using incremental Hamilton–Jacobi inequalities [32]. However, the current research on asynchronous dissipative control of MJSs is still insufficient, which is one of the motives of this work.

It is noted that the data transmission in the above works is based on periodic-triggered scheme, and the sampling and transmission of system signals are in a fixed period, which can easily lead to network congestion, packet dropouts and other problems. However, the event-triggered scheme has been considered as an efficient method to overcome these obstacles [33,34,35]. The event-triggered scheme indicates that the system signals determine whether to transmit or not depending on the event-triggered criterion, which can reduce the communication consumption effectively. The event-triggered risk-sensitive state estimation problem for HMMs was studied; by using the reference probability measure method, the estimation problem is transformed into an equivalent estimation problem and solved [36]. In order to solve the issue of the event-triggered self-adaptive control for random nonlinear MJSs, two self-adaptive controllers with fixed threshold scheme and relative threshold scheme were proposed [37]. Moreover, an event-triggered output feedback control strategy was proposed for networked MJSs with partially unknown transition probabilities [38], and an event-triggered sliding mode control strategy was considered for discrete-time MJSs [39]. Nevertheless, there are few works about dissipative asynchronous control of time-delay MJSs with event-triggered scheme and packet dropouts, which is another motive of this work.

This paper focuses on the design of asynchronous dissipative controller for networked time-delay MJSs with event-triggered scheme and packet dropouts. The main contributions of this paper are as follows:

1. Compared with the literature [26], this paper considers not only the varying delay of the physical plant, but also the network-induced communication constraints such as limited bandwidth and packet dropouts, which is more practical.

2. The asynchronous dissipative control scheme proposed in this paper provides a unified framework, in which the asynchronous strategy based on HMM contains two special cases: mode independence and synchronization, which are studied most in the existing literature; at the same time, the dissipative control problem also includes \({H_\infty }\) control and passive control.

The remaining structure of this paper is as follows: Sect. 2.1 gives the problem description and system modeling. In Sect. 2.2, a sufficient condition for the closed-loop control system to be stochastically stable and strictly dissipative is obtained. A controller design method is given in Sect. 2.3. A simulation example and results and discussion are provided in Sect. 3. Section 4 draws the conclusion.

2 Methods

2.1 Problem description

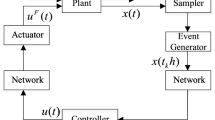

This work focuses on the design of the asynchronous dissipative controller for networked time-delay MJSs with packet dropouts under the event-triggered scheme, as shown in Fig. 1. Consider the networked time-delay MJSs as follows:

where \({x_k} \in {{\mathbb {R}}^{{n_x}}}\), \({\chi _{{k_0}}}\), \({y_k} \in {{\mathbb {R}}^{{n_y}}}\), \({u_k} \in {{\mathbb {R}}^{{n_u}}}\), \({w_k} \in {{\mathbb {R}}^{{n_w}}}\) (\({w_k} \in l[0,\infty )\)) denote the system state, the system initial state, the controlled output, the control input and the disturbance input, respectively. There exists a time delay \({d_k} \in {{\mathbb {N}}^ + }\) in the system (1) with the lower bound \({d_1}\) and the upper bound \({d_2}\), \({d_1} < {d_2}\). (\({A_{{\partial _k}}}\), \({A_{d{\partial _k}}}\), \({B_{1{\partial _k}}}\), \({B_{2{\partial _k}}}\), \({C_{{\partial _k}}}\), \({C_{d{\partial _k}}}\), \({D_{1{\partial _k}}}\), \({D_{2{\partial _k}}}\)) are given real matrices with appropriate dimensions. The Markov jump process of system (1) is governed by the mode parameter \({\partial _k}\) (\({\partial _k} \in \mathrm{{S}}\), \(\mathrm {S} = \{ 1,2, \cdots ,s\}\)) and complies with the transition probability matrix (TPM) \(\Upsilon = [{\pi _{pq}}]\), in which the transition probability (TP) \({\pi _{pq}}\) is defined as follows:

obviously, \({\pi _{pq}} \in [0,1]\) and \(\sum \nolimits _{q = 1}^s {{\pi _{pq}} = 1}\) for \(\forall p,q \in \mathrm{{S}}\).
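For reference, the display equations (1) and (2) are not reproduced in this copy. A form consistent with the matrices listed above and with the closed-loop matrices \(\bar{A}_{pjk}\) and \(\bar{C}_{pjk}\) used later would be the following. This is a reconstruction under that assumption, not a quotation of the original, and the indexing of the initial condition in particular is assumed:

\[ \left\{ \begin{array}{l} x_{k+1} = A_{\partial_k} x_k + A_{d\partial_k} x_{k-d_k} + B_{1\partial_k} u_k + D_{1\partial_k} w_k, \\ y_k = C_{\partial_k} x_k + C_{d\partial_k} x_{k-d_k} + B_{2\partial_k} u_k + D_{2\partial_k} w_k, \\ x_{k_0} = \chi_{k_0}, \quad k_0 = -d_2, -d_2+1, \ldots, 0, \end{array} \right. \]

\[ \pi_{pq} = \Pr\{ \partial_{k+1} = q \mid \partial_k = p \}. \]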

Considering the limited bandwidth and energy in the system, we will introduce an event-trigger to reduce the transmission rate of sampling signals and relieve the communication pressure. The event-triggered mechanism is as follows:

where \({\delta _i} \in \left[ {0,1} \right]\) is the error threshold. Denote \({H_k} = diag\{ {\Delta _{1k}},{\Delta _{2k}}, \cdots ,{\Delta _{{n_x}k}}\}\), \({\Delta _{ik}} \in [ - {\delta _i},{\delta _i}],i = 1,2, \cdots ,{n_x}\). The sampling signal is transmitted to the controller only when the event-triggered condition is satisfied. Then according to (3), we can obtain

Remark 1

Owing to the introduction of the event-triggered transmission scheme, the sampling signal does not need to be transmitted periodically, which reduces the data transmission frequency. In addition, we introduce a data transmission performance index \(DTP = {t_S}/{t_T} \times 100\%\) to represent the communication performance [40], in which \({t_S}\) and \({t_T}\) indicate the numbers of transmissions of sampled data with and without the event-triggered mechanism, respectively.
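The effect of the trigger on DTP can be illustrated with a short sketch. The exact condition (3) is not reproduced in this copy, so the code assumes a component-wise relative-error rule: a new sample is sent when, for some component, the gap between the last transmitted value and the current value exceeds \({\delta _i}\) times the magnitude of the current value. This assumption is consistent with the bound \({\Delta _{ik}} \in [ - {\delta _i},{\delta _i}]\), but the function names and the rule itself are illustrative, not the authors' implementation.

```python
def event_triggered_hold(samples, deltas):
    """Apply an assumed relative-error trigger to a list of state samples.

    samples: list of state vectors x_k (each a list of floats).
    deltas:  list of thresholds delta_i, one per state component.
    Returns the value held at the controller side at each step and a list
    of flags telling whether the sample at that step was transmitted.
    """
    held = None
    held_history, sent_flags = [], []
    for x in samples:
        violated = held is None or any(
            abs(h - xi) > d * abs(xi) for h, xi, d in zip(held, x, deltas)
        )
        if violated:
            held = list(x)  # transmit the current sample
        sent_flags.append(violated)
        held_history.append(list(held))
    return held_history, sent_flags


def dtp_percent(sent_flags):
    """DTP = t_S / t_T * 100, with t_T equal to the number of samples."""
    return 100.0 * sum(sent_flags) / len(sent_flags)


# Hypothetical scalar record, used only to exercise the functions.
demo_samples = [[1.0], [1.05], [1.2], [1.21]]
demo_held, demo_flags = event_triggered_hold(demo_samples, [0.1])
demo_dtp = dtp_percent(demo_flags)
```

In this toy record only the first and third samples are transmitted, so half of the periodic transmissions are saved. Between transmissions the held value stays within the relative bound of the true sample, which is what the matrix \({H_k}\) encodes.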

Based on the output of the trigger (4), we will adopt the following asynchronous controller:

where \({K_{{\sigma _k}}}\) represents the controller gain, and \({\sigma _k}\) is the mode of the controller. The mode \({\partial _k}\) of system (1) affects the mode \({\sigma _k}\) of the controller through the conditional probability matrix (CPM) \(\Psi = [{\theta _{pj}}]\), whose conditional probability (CP) \({\theta _{pj}}\) is described as follows [41]:

which denotes the probability that the controller works in mode j when the system (1) is in mode p, in which \({\theta _{pj}} \in [0,1]\) and \(\sum \nolimits _{j = 1}^s {{\theta _{pj}}} = 1\) for \(\forall p,j \in S\).

Remark 2

The controller and the physical plant (1) are mode asynchronous, and the asynchronous relationship is described by an HMM, which can well describe the asynchronization and connection between the controller and the plant through CPM. The controller’s mode is influenced by the plant’s mode, and their asynchronous level is reflected by the CPs. In addition, the asynchronous controller under the HMM scheme is more general, which covers both synchronous (i.e., \(\Psi \mathrm{{ = }}I\)) and mode-independent (i.e., \({\sigma _k} \in \{ 1\}\)) cases [42].
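The HMM relationship between the plant mode and the controller mode can be sketched as a two-level sampling procedure: the plant mode evolves according to the TPM, and at each step the controller mode is drawn from the CPM row selected by the current plant mode. The sketch below uses the 4-mode \(\Upsilon\) and \(\Psi\) from the numerical example; the function names, the zero-based mode indices and the fixed random seed are assumptions made for illustration.

```python
import random


def draw(row, rng):
    """Draw an index from one probability row by inverse-CDF sampling."""
    u = rng.random()
    acc = 0.0
    for idx, prob in enumerate(row):
        acc += prob
        if u < acc:
            return idx
    # Rounding guard: fall back to the last index with positive probability.
    return max(i for i, prob in enumerate(row) if prob > 0.0)


def sample_modes(tpm, cpm, initial_mode, steps, rng):
    """Return plant modes and controller modes (zero-based) over `steps` steps."""
    plant, controller = [], []
    p = initial_mode
    for _ in range(steps):
        plant.append(p)
        controller.append(draw(cpm[p], rng))  # sigma_k depends on the plant mode only
        p = draw(tpm[p], rng)                 # next plant mode from the TPM row
    return plant, controller


TPM = [[0.3, 0.2, 0.4, 0.1],
       [0.4, 0.2, 0.2, 0.2],
       [0.55, 0.15, 0.3, 0.0],
       [0.1, 0.2, 0.3, 0.4]]
CPM = [[0.2, 0.25, 0.4, 0.15],
       [0.1, 0.2, 0.3, 0.4],
       [0.3, 0.2, 0.4, 0.1],
       [0.4, 0.2, 0.2, 0.2]]
IDENTITY = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

plant_async, ctrl_async = sample_modes(TPM, CPM, 0, 200, random.Random(0))
plant_sync, ctrl_sync = sample_modes(TPM, IDENTITY, 0, 200, random.Random(0))
```

With the identity CPM the controller mode always equals the plant mode, which is the synchronous special case mentioned in the remark. A CPM whose rows are all identical would instead make the controller mode statistically independent of the plant mode.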

Considering that there exists network between the controller and the actuator, packet dropouts are inevitable. A Bernoulli stochastic process is used in this work to describe the packet dropout process:

where \({\beta _k}\) denotes the Bernoulli process with

and satisfies

Furthermore, to facilitate subsequent derivations, define \({{\bar{\beta }} _k} = {\beta _k} - \beta\); we then obtain

where \({\bar{\beta }} = \sqrt{\beta - {\beta ^2}}\).
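The value of \({\bar{\beta }}\) follows from the first two moments of a Bernoulli variable. As a short worked derivation, assume \(\Pr \{ {\beta _k} = 1\} = \beta\) and \(\Pr \{ {\beta _k} = 0\} = 1 - \beta\) (the display equations are not reproduced in this copy, so this parameterization is an assumption, chosen to agree with the later statement that \(\beta = 1\) means no packet dropout). Since \(\beta _k^2 = {\beta _k}\),

\[ \mathrm{E}\{ {\bar{\beta }}_k \} = \mathrm{E}\{ \beta_k \} - \beta = 0, \qquad \mathrm{E}\{ {\bar{\beta }}_k^2 \} = \mathrm{E}\{ \beta_k^2 \} - 2\beta \, \mathrm{E}\{ \beta_k \} + \beta^2 = \beta - \beta^2 = {\bar{\beta }}^2 . \]

For instance, \(\beta = 0.9\) gives \({\bar{\beta }} = \sqrt{0.09} = 0.3\).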

For the convenience of expression, let \({\partial _k} = p\), \({\partial _{k + 1}} = q\) and \({\sigma _k} = j\). According to (1), (4) and (7), we can obtain the following closed-loop dynamic system:

where \({\bar{A}_{pjk}} = {A_p} + {\beta _k}{B_{1p}}{K_j}(I+{H_k})\), \({\bar{C}_{pjk}} = {C_p} + {\beta _k}{B_{2p}}{K_j}(I+{H_k})\).
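To make the roles of \({\beta _k}\) and \({H_k}\) concrete, the following sketch evaluates one step of the closed loop for a fixed plant mode p and controller mode j. The closed-loop display is not reproduced in this copy, so the update assumes the form implied by \({\bar{A}_{pjk}}\) and \({\bar{C}_{pjk}}\) above, that is \(x_{k+1} = {\bar{A}_{pjk}}{x_k} + {A_{dp}}{x_{k - {d_k}}} + {D_{1p}}{w_k}\) and \({y_k} = {\bar{C}_{pjk}}{x_k} + {C_{dp}}{x_{k - {d_k}}} + {D_{2p}}{w_k}\). The helper names and the test values are hypothetical; the mode-1 matrices and gain are taken from the numerical example.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def vec_sum(*vectors):
    """Add several equally sized vectors element by element."""
    return [sum(parts) for parts in zip(*vectors)]


def closed_loop_step(x, x_delayed, w, beta_k, deltas, plant, gain):
    """One closed-loop step for a fixed plant mode p and controller mode j.

    plant:  dict with keys A, Ad, B1, D1, C, Cd, B2, D2 (matrices of mode p).
    gain:   controller gain K_j.
    deltas: diagonal entries of H_k, each within [-delta_i, delta_i].
    beta_k: 1 if the control packet arrives, 0 if it is dropped.
    """
    x_sent = [(1.0 + d) * xi for d, xi in zip(deltas, x)]  # (I + H_k) x_k
    u = [beta_k * ui for ui in matvec(gain, x_sent)]       # beta_k K_j (I + H_k) x_k
    x_next = vec_sum(matvec(plant["A"], x), matvec(plant["Ad"], x_delayed),
                     matvec(plant["B1"], u), matvec(plant["D1"], w))
    y = vec_sum(matvec(plant["C"], x), matvec(plant["Cd"], x_delayed),
                matvec(plant["B2"], u), matvec(plant["D2"], w))
    return x_next, y


# Mode-1 data of the robotic arm example.
MODE1 = {
    "A": [[1.0, 0.1], [-0.4905, 0.8]],
    "Ad": [[0.01, -0.02], [-0.01, 0.02]],
    "B1": [[0.0], [0.1]],
    "D1": [[0.0], [0.1]],
    "C": [[1.0, 0.0]],
    "Cd": [[0.1, 0.01]],
    "B2": [[0.0]],
    "D2": [[0.1]],
}
K1 = [[-24.4130, -4.4994]]

# Same state and disturbance, once with the packet delivered and once dropped.
x_ok, y_ok = closed_loop_step([0.3, 0.2], [0.3, 0.2], [1.0], 1, [0.0, 0.0], MODE1, K1)
x_drop, y_drop = closed_loop_step([0.3, 0.2], [0.3, 0.2], [1.0], 0, [0.0, 0.0], MODE1, K1)
```

When the packet is dropped, the actuator receives no control input in this model, so the state update reduces to the open-loop terms. Because \({B_{2p}} = 0\) in the example, the output is the same in both cases.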

Next, some important lemmas and definitions are introduced to facilitate the work of this paper.

Definition 2.1

[43] The system (11) is stochastically stable, if \({w_k} \equiv 0\) and for any initial condition \(({x_0},{\partial _0})\), satisfying

Definition 2.2

[43] Given a constant \(\gamma > 0\), matrices \(\mu \le 0\), \(\vartheta\) and symmetric \(\upsilon\), the closed-loop system (11) is considered to be strictly \((\mu ,\vartheta ,\upsilon ) - \gamma -\)dissipative, for any positive integer N, when \({w_k} \in l[0,\infty )\) and under 0 initial condition, satisfying

where \(F({w_k},{y_k}) = y_k^{\mathrm{{T}}}\mu {y_k} + 2y_k^{\mathrm{{T}}}\vartheta {w_k} + w_k^{\mathrm{{T}}}\upsilon {w_k}\), and \(\mu \buildrel \Delta \over = - U_1^{\mathrm{{T}}}{U_1}\) is negative semi-definite.
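The supply rate \(F({w_k},{y_k})\) is straightforward to evaluate numerically. The sketch below handles the scalar case (one output, one disturbance) and accumulates the quantity \(\sum F({w_k},{y_k}) - \gamma \sum w_k^{\mathrm{{T}}}{w_k}\) over a finite record. It assumes the dissipativity inequality has the common form \(\sum \mathrm{E}\{ F({w_k},{y_k})\} \ge \gamma \sum w_k^{\mathrm{{T}}}{w_k}\), which is not reproduced in this copy, and it evaluates a single sample path rather than an expectation. The function names and the data are hypothetical.

```python
def supply_rate(y, w, mu, theta, upsilon):
    """Scalar supply rate F(w, y) = y*mu*y + 2*y*theta*w + w*upsilon*w."""
    return y * mu * y + 2.0 * y * theta * w + w * upsilon * w


def dissipativity_margin(ys, ws, mu, theta, upsilon, gamma):
    """Sum of F(w_k, y_k) minus gamma times the sum of w_k^2 over one record."""
    supplied = sum(supply_rate(y, w, mu, theta, upsilon) for y, w in zip(ys, ws))
    return supplied - gamma * sum(w * w for w in ws)


# Dissipative parameters of the numerical example; the record is made up.
MU, THETA, UPSILON = -0.36, -2.0, 2.0
demo_ys = [0.1, -0.2]
demo_ws = [1.0, -0.5]
demo_rates = [supply_rate(y, w, MU, THETA, UPSILON) for y, w in zip(demo_ys, demo_ws)]
demo_margin = dissipativity_margin(demo_ys, demo_ws, MU, THETA, UPSILON, 1.0)
```

With \(\mu = - 0.36\), the factorization \(\mu = - U_1^{\mathrm{{T}}}{U_1}\) gives \({U_1} = 0.6\) in the scalar case. A nonnegative margin on one record does not establish dissipativity; it only shows how the two sides of the inequality are computed.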

Lemma 2.1

[44] Given the matrices A, B, C and \({A^{\mathrm{{T}}}} = A\), then

holds if there exists a matrix \(D > 0\), satisfying

2.2 Stability and dissipativity analysis of the system

This section will derive a sufficient condition to ensure that the system (11) is stochastically stable and strictly \((\mu ,\vartheta ,\upsilon ) - \gamma -\)dissipative.

Theorem 2.1

The system (11) is stochastically stable and strictly \((\mu ,\vartheta ,\upsilon ) - \gamma -\)dissipative, if there exist a matrix \({K_j} \in {{\mathbb {R}}^{{n_u} \times {n_x}}}\), positive matrices \({P_p} \in {{\mathbb {R}}^{{n_x} \times {n_x}}}\), \({Q} \in {{\mathbb {R}}^{{n_x} \times {n_x}}}\), \({R_{pj}} \in {{\mathbb {R}}^{{n_x} \times {n_x}}}\) and a positive diagonal matrix \({G_{pj}} \in {{\mathbb {R}}^{{n_u} \times {n_u}}}\), for \(\forall p,j \in \mathrm{{S}}\), satisfying

where

\({\Pi _{pj}} = \left[ {\begin{array}{*{20}{c}} { - \bar{P}_p^{ - 1}}&{}0&{}0&{}0&{}{\bar{A}_{pj}^*}&{}{{A_{dp}}}&{}{{D_{1p}}}\\ *&{}{ - \bar{P}_p^{ - 1}}&{}0&{}0&{}{{\bar{\beta }} {{\bar{B}}_{1pj}}}&{}0&{}0\\ *&{}*&{}{ - I}&{}0&{}{{U_1}\bar{C}_{pj}^*}&{}{{U_1}{C_{dp}}}&{}{{U_1}{D_{2p}}}\\ *&{}*&{}*&{}{ - I}&{}{{\bar{\beta }} {U_1}{{\bar{B}}_{2pj}}}&{}0&{}0\\ *&{}*&{}*&{}*&{}{dQ - {R_{pj}}}&{}0&{}{ - \bar{C}_{pj}^{*\mathrm{{T}}}\vartheta }\\ *&{}*&{}*&{}*&{}*&{}{ - Q}&{}{ - C_{dp}^{\mathrm{{T}}}\vartheta } \\ *&{}*&{}*&{}*&{}*&{}*&{}M_p \end{array}} \right]\),

\({N_{pj}} = {\left[ {\begin{array}{*{20}{c}} {\beta \bar{B}_{1pj}^{\mathrm{{T}}}}&{{\bar{\beta }} \bar{B}_{1pj}^{\mathrm{{T}}}}&{\beta \bar{B}_{2pj}^{\mathrm{{T}}}}&{{\bar{\beta }} \bar{B}_{2pj}^{\mathrm{{T}}}}&0&0&{\beta {{({\vartheta ^{\mathrm{{T}}}}{B_{2pj}}{K_j})}^{\mathrm{{T}}}}} \end{array}} \right] ^{\mathrm{{T}}}}\),

\({L} = \left[ {\begin{array}{*{20}{c}} 0&0&0&0&I&0&0 \end{array}} \right]\), \(\bar{A}_{pj}^* = {A_p} + \beta {B_{1p}}{K_j}\), \({\bar{B}_{1pj}} = {B_{1p}}{K_j}\),

\(\bar{C}_{pj}^* = {C_p} + \beta {B_{2p}}{K_j}\), \({\bar{B}_{2pj}} = {B_{2p}}{K_j}\), \(\Lambda = diag\{ {\delta _1},{\delta _2}, \cdots ,{\delta _{{n_x}}}\}\),

\(M_p = - D_{2p}^{\mathrm{{T}}}\vartheta - {\vartheta ^{\mathrm{{T}}}}{D_{2p}} + \gamma I - \upsilon\), \({\bar{P}_p} = \sum \limits _{q = 1}^s {{\pi _{pq}}{P_q}}\), \(d = {d_2} - {d_1} + 1\).

Proof

First, using Schur complement to (17), we obtain that

By Lemma 2.1, we have

and thus obtain

where

\({\tilde{A}_{pjk}} = {A_p} + \beta {B_{1p}}{K_j}(I + {H_k})\), \({\tilde{B}_{1pjk}} = {B_{1p}}{K_j}(I + {H_k})\),

\({\tilde{C}_{pjk}} = {C_p} + \beta {B_{2p}}{K_j}(I + {H_k})\), \({\tilde{B}_{2pjk}} = {B_{2p}}{K_j}(I + {H_k})\).

which implies

Then, using Schur complement to (21) and (20) again, we have

where

\({{\bar{\Gamma }}_p ^2} = diag\{ - \bar{P}_p, - \bar{P}_p, - I, - I\}\), \({\Gamma ^1} = \left[ {\begin{array}{*{20}{c}} {dQ}&{}0\\ *&{}{ - Q} \end{array}} \right]\), \({\phi _{pjk}} = \left[ {\begin{array}{*{20}{c}} {{{\tilde{A}}_{pjk}}}&{}{{A_{dp}}}\\ {{\bar{\beta }} {{\tilde{B}}_{1pjk}}}&{}0 \end{array}} \right]\),

\(\Gamma _{pjk}^2 = \left[ {\begin{array}{*{20}{c}} {dQ}&{}0&{}{ - \tilde{C}_{pjk}^{\mathrm{{T}}}\vartheta }\\ *&{}Q&{}{ - C_{dp}^{\mathrm{{T}}}\vartheta }\\ *&{}*&{}{{M_p}} \end{array}} \right]\), \({\varphi _{pjk}} = \left[ {\begin{array}{*{20}{c}} {{{\tilde{A}}_{pjk}}}&{}{{A_{dp}}}&{}{{D_{1p}}}\\ {{\bar{\beta }} {{\tilde{B}}_{1pjk}}}&{}0&{}0\\ {{U_1}{{\tilde{C}}_{pjk}}}&{}{{U_1}{C_{dp}}}&{}{{U_1}{D_{2p}}}\\ {{\bar{\beta }} {U_1}{{\tilde{B}}_{2pjk}}}&{}0&{}0 \end{array}} \right]\).

Next, choose the mode-dependent Lyapunov–Krasovskii function as follows:

where \({V_{1k}} = x_k^{\mathrm{{T}}}{P_{{\partial _k}}}{x_k}\), \({V_{2k}} = \sum \limits _{b = - {d_2} + 1}^{ - {d_1} + 1} {\sum \limits _{a = k - 1 + b}^{k - 1} {x_a^{\mathrm{{T}}}} } Q{x_a}\). We introduce \({\xi _{1k}} = {\left[ {\begin{array}{*{20}{c}} {x_k^{\mathrm{{T}}}}&{x_{k - d_k}^{\mathrm{{T}}}} \end{array}} \right] ^{\mathrm{{T}}}}\) and \({\xi _k} = {\left[ {\begin{array}{*{20}{c}} {\xi _{1k}^{\mathrm{{T}}}}&{w_k^{\mathrm{{T}}}} \end{array}} \right] ^{\mathrm{{T}}}}\). Denoting \(\nabla {V_k}\) as the forward differential of \({V_k}\),

Then, we compute \(\mathrm{E}\{ \nabla {V_{1k}}\}\) and \(\mathrm{E}\{ \nabla {V_{2k}}\}\):

where “\(\le\)” is obtained from (27) and (28).

Noting that \({w_k} \equiv 0\) in the definition of stochastic stability, and combining (24), (25) and (26), we can get

where “<” is obtained from (22), and \(\varpi = {\lambda _{\max }}(\sum \limits _{j = 1}^s {{\theta _{pj}}{R_{pj}} - {P_p}} )\).

Accordingly,

We know that \(\varpi < 0\) from (16); hence,

which conforms to Definition 2.1; namely, the stochastic stability of the system (11) is proved.

Next, we will show that the system (11) is strictly \((\mu ,\vartheta ,\upsilon ) - \gamma -\)dissipative. Define the performance index as

Then, calculate \(\mathrm{E}\{ \nabla {V_{1k}}\}\) and \(\mathrm{E}\{ \nabla {V_{2k}}\}\), respectively,

Combining (32), (33) and (34), we can obtain

where the two “<” are obtained from (22) and (16), respectively. By Definition 2.2, the system (11) is strictly \((\mu ,\vartheta ,\upsilon ) - \gamma -\)dissipative. Thus, the proof is completed. \(\square\)

Remark 3

A sufficient condition for stochastic stability and strict dissipativity is derived for the system (11) in Theorem 2.1. However, because of the nonlinear terms in Theorem 2.1, we cannot parameterize the controller gain directly from conditions (16) and (17); therefore, further linearization is required.

2.3 Design of the event-triggered asynchronous controller

This section will provide a design method for an event-triggered asynchronous controller and further determine the controller gains.

Theorem 2.2

The system (11) is stochastically stable and strictly \((\mu ,\vartheta ,\upsilon ) - \gamma -\)dissipative, if there exist matrices \({\bar{K}_j} \in {{\mathbb {R}}^{{n_u} \times {n_x}}}\), \(V \in {{\mathbb {R}}^{{n_x} \times {n_x}}}\), positive matrices \({\bar{P}_p} \in {{\mathbb {R}}^{{n_x} \times {n_x}}}\), \({\bar{R}_{pj}} \in {{\mathbb {R}}^{{n_x} \times {n_x}}}\), \(\bar{Q} \in {{\mathbb {R}}^{{n_x} \times {n_x}}}\) and a positive diagonal matrix \({\bar{G}_{pj}} \in {{\mathbb {R}}^{{n_u} \times {n_u}}}\), for \(\forall p,j \in \mathrm{{S}}\), satisfying

where

\({J_p} = [\sqrt{{\theta _{p1}}} {\bar{P}_p} \cdots \sqrt{{\theta _{pj}}} {\bar{P}_p} \cdots \sqrt{{\theta _{ps}}} {\bar{P}_p}]\), \({\hat{R}_p} = diag\{ - {\bar{R}_{p1}}, \cdots , - {\bar{R}_{pj}}, \cdots , - {\bar{R}_{ps}}\}\),

\({X_{pj}} = \left[ {\begin{array}{*{20}{c}} {d\bar{Q} + {{\bar{R}}_{pj}} - {V^{\mathrm{{T}}}} - V}&{}0&{}{ - \tilde{C}_{pj}^{*\mathrm{{T}}}\vartheta }&{}0&{}{\Lambda {{\bar{G}}_{pj}}}\\ *&{}{ - \bar{Q}}&{}{ - \bar{C}_{dp}^{\mathrm{{T}}}\vartheta }&{}0&{}0\\ *&{}*&{}M_p&{}{\beta {\vartheta ^{\mathrm{{T}}}}{B_{2p}}{{\bar{K}}_j}}&{}0\\ *&{}*&{}*&{}{ - {{\bar{G}}_{pj}}}&{}0\\ *&{}*&{}*&{}*&{}{ - {{\bar{G}}_{pj}}} \end{array}} \right]\),

\({Y_{pj}}={\left[ {\begin{array}{*{20}{c}} {{U_1}({C_p}V + \beta {B_{2p}}{{\bar{K}}_j})}&{}{{U_1}{C_{dp}}V}&{}{{U_1}{D_{2p}}}&{}{\beta {U_1}{B_{2p}}{{\bar{K}}_j}}&{}0\\ {{\bar{\beta }} {U_1}{B_{2p}}{{\bar{K}}_j}}&{}0&{}0&{}{{\bar{\beta }} {U_1}{B_{2p}}{{\bar{K}}_j}}&{}0 \end{array}} \right] ^{\mathrm{{T}}}}\),

\({Z_{pj}} = \left[ {\begin{array}{*{20}{c}} {\sqrt{{\pi _{p1}}} W_{pj}^{\mathrm{{T}}}}&{\sqrt{{\pi _{p2}}} W_{pj}^{\mathrm{{T}}}}&\cdots&{\sqrt{{\pi _{ps}}} W_{pj}^{\mathrm{{T}}}} \end{array}} \right]\),

\({W_{pj}} = {\left[ {\begin{array}{*{20}{c}} {{A_p}V + \beta {B_{1p}}{{\bar{K}}_j}}&{}{{A_{dp}}V}&{}{{D_{1p}}}&{}{\beta {B_{1p}}{{\bar{K}}_j}}&{}0\\ {{\bar{\beta }} {B_{1p}}{{\bar{K}}_j}}&{}0&{}0&{}{{\bar{\beta }} {B_{1p}}{{\bar{K}}_j}}&{}0 \end{array}} \right] }\),

\(\tilde{C}_{pj}^* = {C_p}V + \beta {B_{2p}}{\bar{K}_j}\), \({\bar{C}_{dp}} = {C_{dp}}V\), \({\hat{P}} = diag\{ - {\bar{P}_1}, - {\bar{P}_2}, \cdots , - {\bar{P}_s}\}\).

and the controller gain \({K_j}\) can be determined by

Proof

First, we define

where V is an invertible slack matrix. Applying a congruence conversion to (36) by \(diag\{ {P_p},I, \cdots ,I\}\), one has

where \({\bar{J}_p} = [\sqrt{{\theta _{p1}}} I \cdots \sqrt{{\theta _{pj}}} I \cdots \sqrt{{\theta _{ps}}} I]\). Then, (40) is equivalent to (16).

Moreover, due to the fact that

namely

Then, (37) implies

where

\({\bar{X}_{pj}} = \left[ {\begin{array}{*{20}{c}} {d\bar{Q} + {V^{\mathrm{{T}}}}{{\bar{R}}_{pj}}V}&{}0&{}{ - \tilde{C}_{pj}^{*\mathrm{{T}}}\vartheta }&{}0&{}{\Lambda {{\bar{G}}_{pj}}}\\ *&{}{ - \bar{Q}}&{}{ - \bar{C}_{dp}^{\mathrm{{T}}}\vartheta }&{}0&{}0\\ *&{}*&{}M_p&{}{\beta {\vartheta ^{\mathrm{{T}}}}{B_{2p}}{{\bar{K}}_j}}&{}0\\ *&{}*&{}*&{}{ - {{\bar{G}}_{pj}}}&{}0\\ *&{}*&{}*&{}*&{}{ - {{\bar{G}}_{pj}}} \end{array}} \right]\).

Denoting \(\Theta = diag\{ {({V^{\mathrm{{T}}}})^{ - 1}},{({V^{\mathrm{{T}}}})^{ - 1}},I,{({V^{\mathrm{{T}}}})^{ - 1}},{({V^{\mathrm{{T}}}})^{ - 1}},I, \cdots ,I\}\), and applying a congruence conversion to (43) by \(\Theta\) , we can get

where

\({X_{pj}} = \left[ {\begin{array}{*{20}{c}} {dQ - {R_{pj}}}&{}0&{}{ - \bar{C}_{pj}^{*\mathrm{{T}}}\vartheta }&{}0&{}{\Lambda {G_{pj}}}\\ *&{}{ - Q}&{}{ - C_{dp}^{\mathrm{{T}}}\vartheta }&{}0&{}0\\ *&{}*&{}M_p&{}{\beta {\vartheta ^{\mathrm{{T}}}}{B_{2p}}{{ K}_j}}&{}0\\ *&{}*&{}*&{}{ - {G_{pj}}}&{}0\\ *&{}*&{}*&{}*&{}{ - {G_{pj}}} \end{array}} \right]\),

\({\tilde{Y}_{pj}} = {\left[ {\begin{array}{*{20}{c}} {{U_1}({C_p} + \beta {B_{2p}}{K_j})}&{}{{U_1}{C_{dp}}}&{}{{U_1}{D_{2p}}}&{}{\beta {U_1}{B_{2p}}{K_j}}&{}0\\ {{\bar{\beta }} {U_1}{B_{2p}}{K_j}}&{}0&{}0&{}{{\bar{\beta }} {U_1}{B_{2p}}{K_j}}&{}0 \end{array}} \right] ^{\mathrm{{T}}}}\),

\({\tilde{Z}_{pj}} = \left[ {\begin{array}{*{20}{c}} {\sqrt{{\pi _{p1}}} \tilde{W}_{pj}^{\mathrm{{T}}}}&{\sqrt{{\pi _{p2}}} \tilde{W}_{pj}^{\mathrm{{T}}}}&\cdots&{\sqrt{{\pi _{ps}}} \tilde{W}_{pj}^{\mathrm{{T}}}} \end{array}} \right]\),

\({\tilde{W}_{pj}} = {\left[ {\begin{array}{*{20}{c}} {{A_p} + \beta {B_{1p}}{K_j}}&{}{{A_{dp}}}&{}{{D_{1p}}}&{}{\beta {B_{1p}}{K_j}}&{}0\\ {{\bar{\beta }} {B_{1p}}{K_j}}&{}0&{}0&{}{{\bar{\beta }} {B_{1p}}{K_j}}&{}0 \end{array}} \right] }\).

By employing Schur complement to (44), we get (17), and the proof is accomplished. \(\square\)

Remark 4

In Theorem 2.1, it is difficult to compute the controller gain due to the nonlinear term. Thus, we introduce the slack matrix V in Theorem 2.2 and transform the nonlinear problem into LMIs by using matrix scaling and slack matrix techniques.

Remark 5

Based on dissipative theory, a larger \(\gamma\) implies better dissipative performance. We can obtain the optimal performance \({\gamma ^*}\) by solving a convex optimization problem as follows:

Moreover, it is noted that the dissipative performance also includes two special performances:

(1) \({H_\infty }\): let \(\mu = - I,\vartheta = 0,\upsilon = ({\gamma ^2} + \gamma )I\) in (37).

(2) Passivity: when \({{\mathbb {R}}^{{n_y}}} = {{\mathbb {R}}^{{n_w}}}\), let \(\mu = 0,\vartheta = I,\upsilon = 2\gamma I\) in (37).
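The two special cases can be checked by substituting the parameters into the supply rate \(F({w_k},{y_k}) = y_k^{\mathrm{{T}}}\mu {y_k} + 2y_k^{\mathrm{{T}}}\vartheta {w_k} + w_k^{\mathrm{{T}}}\upsilon {w_k}\). The derivation below assumes that the dissipativity inequality of Definition 2.2 has the common form \(\sum \nolimits _{k = 0}^N \mathrm{E}\{ F({w_k},{y_k})\} \ge \gamma \sum \nolimits _{k = 0}^N w_k^{\mathrm{{T}}}{w_k}\); that display is not reproduced in this copy, so this is a sketch under that assumption.

\[ \text{(1)}\quad \sum_{k=0}^{N} \mathrm{E}\{ -y_k^{\mathrm{T}} y_k + (\gamma^2 + \gamma) w_k^{\mathrm{T}} w_k \} \ge \gamma \sum_{k=0}^{N} w_k^{\mathrm{T}} w_k \;\Longleftrightarrow\; \sum_{k=0}^{N} \mathrm{E}\{ y_k^{\mathrm{T}} y_k \} \le \gamma^2 \sum_{k=0}^{N} w_k^{\mathrm{T}} w_k , \]

\[ \text{(2)}\quad \sum_{k=0}^{N} \mathrm{E}\{ 2 y_k^{\mathrm{T}} w_k + 2\gamma w_k^{\mathrm{T}} w_k \} \ge \gamma \sum_{k=0}^{N} w_k^{\mathrm{T}} w_k \;\Longleftrightarrow\; 2 \sum_{k=0}^{N} \mathrm{E}\{ y_k^{\mathrm{T}} w_k \} \ge -\gamma \sum_{k=0}^{N} w_k^{\mathrm{T}} w_k . \]

The first line is the usual \({H_\infty }\) bound with attenuation level \(\gamma\), and the second is the usual passivity inequality. In both cases a smaller \(\gamma\) gives a tighter bound, in contrast to the dissipative case.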

Remark 6

Compared with the literature [26], although the same asynchronization method is introduced in this work, there are great differences in the issues of interest, the data transmission mechanism and the system performance. The work [26] mainly considered the \({H_\infty }\) control of MJSs with time delay and quantization. However, we consider not only the time delay, but also the packet dropouts between the controller and the actuator. Considering the limited communication resources, we also introduce an event-triggered mechanism to reduce communication consumption. In addition, the dissipative control we consider is more general, which covers both \({H_\infty }\) control and passive control. In particular, due to the introduction of event-triggered mechanism and packet dropouts, the technical derivation of the design method in this work is more complex.

3 Experimental results and discussion

In this section, a 4-mode robotic arm system [45] will be used to verify the validity of this design method, and the corresponding parameters are as follows:

\({A_1} = \left[ {\begin{array}{*{20}{c}} 1&{}{0.1}\\ { - 0.4905}&{}{0.8} \end{array}} \right]\), \({A_2} = \left[ {\begin{array}{*{20}{c}} 1&{}{0.1}\\ { - 0.4905}&{}{0.96} \end{array}} \right]\), \({A_3} = \left[ {\begin{array}{*{20}{c}} 1&{}{0.1}\\ { - 0.4905}&{}{0.98} \end{array}} \right]\),

\({A_4} = \left[ {\begin{array}{*{20}{c}} 1&{}{0.1}\\ { - 0.4905}&{}{0.9867} \end{array}} \right]\), \({A_{d1}} = \left[ {\begin{array}{*{20}{c}} {0.01}&{}{ - 0.02}\\ { - 0.01}&{}{0.02} \end{array}} \right]\), \({A_{d2}} = \left[ {\begin{array}{*{20}{c}} {0.01}&{}{ - 0.02}\\ {0.01}&{}{0.04} \end{array}} \right]\),

\({A_{d3}} = \left[ {\begin{array}{*{20}{c}} {0.01}&{}{ - 0.02}\\ {0.01}&{}{0.05} \end{array}} \right]\), \({A_{d4}} = \left[ {\begin{array}{*{20}{c}} {0.01}&{}{ - 0.02}\\ {0.01}&{}{0.08} \end{array}} \right]\), \({B_{11}} = \left[ {\begin{array}{*{20}{c}} 0\\ {0.1} \end{array}} \right]\), \({B_{12}} = \left[ {\begin{array}{*{20}{c}} 0\\ {0.02} \end{array}} \right]\),

\({B_{13}} = \left[ {\begin{array}{*{20}{c}} 0\\ {0.01} \end{array}} \right]\), \({B_{14}} = \left[ {\begin{array}{*{20}{c}} 0\\ {0.007} \end{array}} \right]\), \({B_{21}} = {B_{22}} = {B_{23}} = {B_{24}} = 0\),

\({C_1} = {C_2} = {C_3} = {C_4} = \left[ {\begin{array}{*{20}{c}} 1&0 \end{array}} \right]\), \({C_{d1}} = \left[ {\begin{array}{*{20}{c}} {0.1}&{0.01} \end{array}} \right]\), \({C_{d2}} = \left[ {\begin{array}{*{20}{c}} {0.1}&{0.02} \end{array}} \right]\),

\({C_{d3}} = \left[ {\begin{array}{*{20}{c}} {0.1}&{0.05} \end{array}} \right]\), \({C_{d4}} = \left[ {\begin{array}{*{20}{c}} {0.1}&{0.08} \end{array}} \right]\), \({D_{11}} = {D_{12}} = {D_{13}} = {D_{14}} = \left[ {\begin{array}{*{20}{c}} 0\\ {0.1} \end{array}} \right]\),

\({D_{21}} = {D_{22}} = {D_{23}} = {D_{24}} = 0.1\).

Let the TPM \(\Upsilon\) and the CPM \(\Psi\) be as follows:

\(\Upsilon = \left[ {\begin{array}{*{20}{c}} {0.3}&{}{0.2}&{}{0.4}&{}{0.1}\\ {0.4}&{}{0.2}&{}{0.2}&{}{0.2}\\ {0.55}&{}{0.15}&{}{0.3}&{}0\\ {0.1}&{}{0.2}&{}{0.3}&{}{0.4} \end{array}} \right]\), \(\Psi = \left[ {\begin{array}{*{20}{c}} {0.2}&{}{0.25}&{}{0.4}&{}{0.15}\\ {0.1}&{}{0.2}&{}{0.3}&{}{0.4}\\ {0.3}&{}{0.2}&{}{0.4}&{}{0.1}\\ {0.4}&{}{0.2}&{}{0.2}&{}{0.2} \end{array}} \right]\),

respectively. Choose the dissipative parameters \(\mu = - 0.36,\vartheta = - 2,\upsilon = 2\), the parameter \(\beta = 0.9\) and the error threshold \(\delta = 0.15\).

Via solving the LMIs in Theorem 2.2, we get the optimal performance \({\gamma ^*} = 1.4140\), and the controller gain:

\({K_1} = \left[ {\begin{array}{*{20}{c}} { - 24.4130}&{ - 4.4994} \end{array}} \right]\), \({K_2} = \left[ {\begin{array}{*{20}{c}} { - 24.5972}&{ - 4.5774} \end{array}} \right]\),

\({K_3} = \left[ {\begin{array}{*{20}{c}} {\mathrm{{ - 24}}\mathrm{{.5182}}}&{\mathrm{{ - 4}}\mathrm{{.5793}}} \end{array}} \right]\), \({K_4} = \left[ {\begin{array}{*{20}{c}} {\mathrm{{ - 28}}\mathrm{{.4887}}}&{ - \mathrm{{5}}\mathrm{{.7050}}} \end{array}} \right]\).

Based on the feasible solution obtained above, we assume that the initial state \({x_0} = {\left[ {\begin{array}{*{20}{c}} {0.3}&{0.2} \end{array}} \right] ^{\mathrm{{T}}}}\) and disturbance input \({w_k} = {0.9^k}\cos (k)\). Then, a simulation is carried out with the proposed event-triggered asynchronous controller. The simulation results are shown in Figs. 2, 3 and 4. It can be observed that the system state, output and control input tend to be stable gradually from Fig. 2; namely, the system is stochastically stable. Furthermore, we can find from Fig. 3 that the amount of data transmission has decreased significantly. Figure 4 shows the modes of the controller and the plant, which are asynchronous.

Then, we will examine the event-triggered performance by changing the threshold \(\delta\). Based on Theorem 2.2, the simulation results are obtained in Table 1. It is easy to observe that as \(\delta\) increases, the optimal control performance decreases slightly, while DTP is greatly improved. Therefore, we can choose a relatively appropriate \(\delta\) to effectively reduce the data transmission rate on the premise that the system has satisfying dissipative performance. Then, we will discuss the impact of different packet dropout rates on system performance. As shown in Table 2, we can find that when \(\beta\)=1 which stands for no packet dropout, the control performance of the system is the best. With the increase of packet dropout rate, the control performance becomes worse.

Next, we will investigate three control performances (i.e., dissipative performance, \({H_\infty }\) performance and passive performance) under different CPMs: synchronous, weakly asynchronous, strongly asynchronous and completely asynchronous cases. The corresponding CPMs are as follows:

Case 1: \(\Psi = \left[ {\begin{array}{*{20}{c}} 1&{}0&{}0&{}0\\ 0&{}1&{}0&{}0\\ 0&{}0&{}1&{}0\\ 0&{}0&{}0&{}1 \end{array}} \right]\), Case 2: \(\Psi = \left[ {\begin{array}{*{20}{c}} 1&{}0&{}0&{}0\\ 0&{}1&{}0&{}0\\ {0.3}&{}{0.2}&{}{0.4}&{}{0.1}\\ {0.4}&{}{0.2}&{}{0.2}&{}{0.2} \end{array}} \right]\),

Case 3: \(\Psi = \left[ {\begin{array}{*{20}{c}} 1&{}0&{}0&{}0\\ {0.1}&{}{0.2}&{}{0.3}&{}{0.4}\\ {0.3}&{}{0.2}&{}{0.4}&{}{0.1}\\ {0.4}&{}{0.2}&{}{0.2}&{}{0.2} \end{array}} \right]\), Case 4: \(\Psi = \left[ {\begin{array}{*{20}{c}} {0.2}&{}{0.25}&{}{0.4}&{}{0.15}\\ {0.1}&{}{0.2}&{}{0.3}&{}{0.4}\\ {0.3}&{}{0.2}&{}{0.4}&{}{0.1}\\ {0.4}&{}{0.2}&{}{0.2}&{}{0.2} \end{array}} \right]\).

Furthermore, the parameters \((\mu , \vartheta , \upsilon )\) for three different performances are presented in Table 3. The optimal performance \({\gamma ^*}\) obtained by solving LMIs in Theorem 2.2 is shown in Table 4. A smaller \({\gamma ^*}\) means worse dissipative performance but better \({H_\infty }\) performance and passive performance [46]. Evidently, the higher the asynchronous level between the physical plant and the controller is, the worse the control performance we obtain.

4 Conclusions

In this paper, the dissipative asynchronous control issue has been investigated for networked time-delay MJSs with event-triggered scheme and packet dropouts. An event-triggered strategy has been introduced to reduce the communication pressure, and an HMM has been used to describe the asynchronization between the controller and the physical plant. By using a Lyapunov–Krasovskii function and dissipative theory, and combining slack matrix and matrix scaling techniques, a controller design method has been obtained. Finally, a robotic arm system has been used as an example to illustrate the effectiveness of the proposed approach. The relationship between dissipative performance, data transmission performance and event-triggered threshold has also been discussed. In the future, asynchronous control for networked MJSs with partially unknown TPs and CPs deserves further investigation. Moreover, designing new event-triggered mechanisms and packet dropout strategies that save communication resources and further improve control performance is also a goal of our future work.

Availability of data and materials

The datasets used and analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- MJSs: Markov jump systems
- NCSs: Networked control systems
- LMIs: Linear matrix inequalities
- HMM: Hidden Markov model
- TPM: Transition probability matrix
- TP: Transition probability
- DTP: Data transmission performance
- CPM: Conditional probability matrix
- CP: Conditional probability

References

X. Xiong, H. Wang, A time-domain channel estimation method for MIMO-OFDM systems with low-precision quantization. Wireless Pers. Commun. 94(3), 1869–1879 (2017)

M. Zhang, J. Huang, Y. Zhang, Stochastic stability and stabilization for stochastic differential semi-Markov jump systems with incremental quadratic constraints. Int. J. Robust Nonlinear Control 13(14), 6788–6809 (2021)

H.-J. Sun, P. He, P. Shi, Stabilization of two kinds of nonhomogeneous Markovian jump systems via sliding mode control. Int. J. Robust Nonlinear Control 32(6), 3754–3770 (2022)

L. Zhang, C. Prieur, Stochastic stability of Markov jump hyperbolic systems with application to traffic flow control. Automatica 86, 29–37 (2017)

S. Kuppusamy, Y.H. Joo, S.K. Han, Asynchronous control for discrete-time hidden Markov jump power systems. IEEE Trans. Cybern. 52(9), 9943–9948 (2021)

S. Aberkane, V. Dragan, H∞ filtering of periodic Markovian jump systems: application to filtering with communication constraints. Automatica 48(12), 3151–3156 (2012)

H. He, W. Qi, Y. Kao, HMM-based adaptive attack-resilient control for Markov jump system and application to an aircraft model. Appl. Math. Comput. 392, 125668 (2021)

F.A. Fard, T.K. Siu, Pricing participating products with Markov-modulated jump-diffusion process: an efficient numerical PIDE approach. Insur. Math. Econ. 53(3), 712–721 (2013)

M. Zhang, P. Shi, L. Ma, J. Cai, H. Su, Network-based fuzzy control for nonlinear Markov jump systems subject to quantization and dropout compensations. Fuzzy Sets Syst. 371(15), 96–109 (2019)

C. Zhang, F. Gang, H. Gao, J. Qiu, H∞ filtering for nonlinear discrete-time systems subject to quantization and packet dropouts. IEEE Trans. Fuzzy Syst. 19(2), 353–365 (2011)

M. Yu, S. Bai, T. Yang, J. Zhang, Quantized output feedback control of networked control systems with packet dropout. Int. J. Control Autom. Syst. 16(5), 2559–2568 (2018)

X. Zhang, Y. Yin, H. Wang, S. He, Finite-time dissipative control for time-delay Markov jump systems with conic-type non-linearities under guaranteed cost controller and quantiser. IET Control Theory Appl. 15(4), 489–498 (2021)

H. Yang, S. Yin, Actuator and sensor fault estimation for time-delay Markov jump systems with application to wheeled mobile manipulators. IEEE Trans. Ind. Inf. 16(5), 3222–3232 (2019)

R. Tao, Y. Ma, C. Wang, Stochastic admissibility of singular Markov jump systems with time-delay via sliding mode approach. Appl. Math. Comput. 380, 125282 (2020)

T. Hou, Y. Liu, F. Deng, Stability for discrete-time uncertain systems with infinite Markov jump and time-delay. Sci. China Inform. Sci. 64(5), 152202 (2021)

H. Mukaidani, R. Saravanakumar, H. Xu, W. Zhuang, Stackelberg strategy for uncertain Markov jump delay stochastic systems. IEEE Control Syst. Lett. 4(4), 1006–1011 (2020)

L. Zhang, Z. Ning, P. Shi, Input-output approach to control for fuzzy Markov jump systems with time-varying delays and uncertain packet dropout rate. IEEE Trans. Cybern. 45(11), 2449–2760 (2015)

P. Shi, Y. Yin, F. Liu, J. Zhang, Robust control on saturated Markov jump systems with missing information. Inf. Sci. 256, 123–138 (2014)

M. Shen, J.H. Park, D. Ye, A separated approach to control of Markov jump nonlinear systems with general transition probabilities. IEEE Trans. Cybern. 46(9), 2010–2018 (2016)

M. Dolgov, U.D. Hanebeck, Static output-feedback control of Markov jump linear systems without mode observation. IEEE Trans. Autom. Control 62(10), 5401–5406 (2017)

H. Shen, F. Li, Z.G. Wu, J.H. Park, Finite-time l2−l∞ tracking control for Markov jump repeated scalar nonlinear systems with partly usable model information. Inf. Sci. 332, 153–166 (2016)

R. Oliveira, A.N. Vargas, J. Val, P. Peres, Mode-independent H2 control of a DC motor modeled as a Markov jump linear system. IEEE Trans. Control Syst. Technol. 22(5), 1915–1919 (2014)

L. Zhang, H. Gao, Asynchronously switched control of switched linear systems with average dwell time. Automatica 46(5), 953–958 (2010)

H. Yan, H. Zhang, F. Yang, X. Zhan, C. Peng, Event-triggered asynchronous guaranteed cost control for Markov jump discrete-time neural networks with distributed delay and channel fading. IEEE Trans. Neural Netw. Learn. Syst. 29(8), 3588–3598 (2018)

K. Yin, D. Yang, J. Liu, H. Li, Asynchronous control for positive Markov jump systems. Int. J. Control Autom. Syst. 19, 646–654 (2020)

Y. Shen, Z.G. Wu, S. Peng, S. Zhan, H.R. Karimi, H∞ control of Markov jump time-delay systems under asynchronous controller and quantizer. Automatica 99, 352–360 (2019)

H. Wang, Y. Wang, G. Zhuang, Asynchronous H∞ controller design for neutral singular Markov jump systems under dynamic event-triggered schemes. J. Frankl. Inst. 358(1), 494–515 (2020)

J. Wang, G. Zhuang, J. Xia, G. Chen, Generalized non-fragile asynchronous mixed H∞ and passive output tracking control for neutral Markov jump systems. Nonlinear Dyn. 106(1), 523–541 (2021)

M. Xue, H. Yan, H. Zhang, J. Sun, H.K. Lam, Hidden-Markov-model-based asynchronous H∞ tracking control of fuzzy Markov jump systems. IEEE Trans. Fuzzy Syst. 29(5), 1081–1092 (2020)

H. Shen, J.H. Park, L. Zhang, Z.G. Wu, Robust extended dissipative control for sampled-data Markov jump systems. Int. J. Control 87(8), 1549–1564 (2014)

S. Dong, K. Xie, G. Chen, M. Liu, Z.G. Wu, Extended dissipative sliding-mode control for discrete-time piecewise nonhomogeneous Markov jump nonlinear systems. IEEE Trans. Cybern. 52(9), 9219–9229 (2021)

Y. Ren, W. Wang, D. Hua, M. Shen, Incremental dissipative control for nonlinear stochastic Markovian jump systems. J. Frankl. Inst. 358(7), 3757–3778 (2021)

Y. Xu, Y. Wang, G. Zhuang, F. Chen, A dynamic event-triggered H∞ control for singular Markov jump systems with redundant channels. J. Frankl. Inst. 356(16), 10076–10101 (2019)

P. Zeng, F. Deng, X. Liu, X. Gao, Event-triggered H∞ control for network-based uncertain Markov jump systems under DoS attacks. J. Frankl. Inst. 358(6), 2895–2914 (2021)

M. Xing, Y. Wang, G. Zhuang, M. Zhang, Dynamic event-based dissipative asynchronous control for T-S fuzzy singular Markov jump LPV systems against deception attacks. Nonlinear Dyn. 103, 1709–1731 (2020)

J. Xu, D. Ho, F. Li, W. Yang, Y. Tang, Event-triggered risk-sensitive state estimation for hidden Markov models. IEEE Trans. Autom. Control 64(10), 4276–4283 (2019)

M. He, T. Rong, J. Li, C. He, Adaptive dynamic surface full state constraints control for stochastic Markov jump systems based on event-triggered strategy. Appl. Math. Comput. 392(1), 125563 (2021)

S. Pan, J. Zhou, Z. Ye, Event-triggered dynamic output feedback control for networked Markovian jump systems with partly unknown transition rates. Math. Comput. Simul. 181(3), 539–561 (2021)

D. Yao, B. Zhang, P. Li, H. Li, Event-triggered sliding mode control of discrete-time Markov jump systems. IEEE Trans. Syst. Man Cybern.: Syst. 49(10), 2016–2025 (2019)

H. Chen, R. Liu, W. Xia, Z. Li, Event-triggered filtering for delayed Markov jump nonlinear systems with unknown probabilities. Processes 10(4), 769 (2022)

J. Li, Y. Niu, Y. Yang, Output-feedback control under hidden Markov analog fading and redundant channels. IEEE Trans. Circuits Syst. II Express Briefs 68(8), 2922–2926 (2021)

S. Dong, C. Chen, M. Fang, Z.G. Wu, Dissipativity-based asynchronous fuzzy sliding mode control for T-S fuzzy hidden Markov jump systems. IEEE Trans. Cybern. 50(9), 4020–4030 (2020)

X. Li, J. Lam, H. Gao, X. Xiong, H∞ and H2 filtering for linear systems with uncertain Markov transitions. Automatica 67, 252–266 (2016)

H. Gao, T. Chen, J. Lam, A new delay system approach to network-based control. Automatica 44(1), 39–52 (2008)

J. Tao, R. Lu, P. Shi, H. Su, Z.G. Wu, Dissipativity-based reliable control for fuzzy Markov jump systems with actuator faults. IEEE Trans. Cybern. 47(9), 2377–2388 (2017)

Z.G. Wu, S. Dong, H. Su, C. Li, Asynchronous dissipative control for fuzzy Markov jump systems. IEEE Trans. Cybern. 48(8), 2426–2436 (2018)

Acknowledgements

Not applicable.

Funding

This study was funded partially by the National Natural Science Foundation of China (61603133), partially by the Zhejiang Provincial Public Welfare Technology Application Research Program of China (LGG21E020001), partially by the Sichuan Science and Technology Program of China (2020YFH0124) and partially by the Zigong Key Science and Technology Project of China (2020YGJC01).

Author information

Contributions

HC, RL and PH participated in methodology and investigation; HC and RL participated in simulation and writing; ZL participated in supervision. All authors have reviewed and approved the manuscript.

Ethics declarations

Competing interests

The authors declare that there exist no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, H., Liu, R., He, P. et al. Asynchronous dissipative control for networked time-delay Markov jump systems with event-triggered scheme and packet dropouts. J Wireless Com Network 2022, 82 (2022). https://doi.org/10.1186/s13638-022-02156-w

DOI: https://doi.org/10.1186/s13638-022-02156-w