Abstract

Altmetrics measure the digital attention received by a research output. They allow us to gauge the immediate social impact of an article by measuring, in real time, how it circulates on the Internet. While several companies offer attention scores, the most extensive are Altmetric.com (Altmetric Attention Score, AAS) and Plum X (Plum Print). As this is an emerging topic, many medical specialities have tried to establish whether there is a relationship between an article’s altmetric data and the citations it subsequently receives. The results have varied depending on the research field. In radiology, the most used social network is Twitter and the subspeciality with the highest AAS is neuroimaging. This article reviews the process from an article’s publication through to obtaining its altmetric score. It also addresses the relationship between altmetrics and more traditional, citation-based approaches in radiology and discusses the advantages and limitations of these new impact indicators.


Key points

- Altmetrics measure the digital attention received by an article using multiple online sources.

- Altmetrics should not be seen as alternatives, but rather as complements, to more traditional measurements.

- In radiology, articles with nonimaging content (for example, education, quality or safety) have more altmetric data than those with more radiology-specific content. Within subspecialities, articles on neuroimaging gain the most attention.

Background

Bibliometric indicators (BI) are numerical data linked to the production and consumption of scientific works [1]. BI are calculated objectively using a large volume of data available in international reference databases [2]. As research results are shared through publications, BI have traditionally been used to evaluate scientific production and its impact on the community [3]. In general, BI can be classified according to whether they apply to authors or research groups (for example, the h-index or collaboration indices) or to journals (for example, the Impact Factor, the Eigenfactor or the SCImago Journal Rank) [4].

Over recent years, the evolution of the Internet has enabled the creation of academic social networks, which has in turn brought about significant changes in the way science is disseminated [5]. This new way of distributing information is possible thanks to the development of “Web 2.0” or the “Social Web”, which enables users to interact and collaborate by contributing, sharing and commenting on content [6].

Given the social and communicative nature of science, many researchers have started using social networks, blogs, repositories (virtual spaces where articles are stored and can be downloaded) and other platforms to share and access scientific information [6]. It is in this context that the term altmetrics emerged, to describe new indicators that analyse the social impact and visibility of a scientific publication [7,8,9].

This article aims to help the reader interpret altmetrics, understand their relationship with traditional citations and weigh their advantages and limitations.

Sources, providers and attention scores

The process from the publication of a research output through to obtaining its attention score is complex and meticulous. Figure 1 summarises all the stages involved, which are explained in more detail below.

Process used to obtain the Attention Score. It starts with the publication of a research output that has an assigned digital identifier. Different actions are performed on the different attention sources (for example, saves, captures and mentions). These are all integrated by the altmetric provider (the most extensive are Altmetric.com and Plum Analytics), which, after applying its own formulas, determines the Attention Score (the Altmetric Attention Score in the case of Altmetric and the Plum Print in the case of Plum Analytics).

Research output

An Attention Score can be obtained for any scientific work (research output), such as books, book chapters, academic articles, presentations, theses, grey literature and clinical trials. The only requirement for recording the online attention of a given document is that it has at least one digital identifier (DI).

The type of digital identifier depends on the publication type [7]. For example, academic articles are identified by the Digital Object Identifier (DOI) or PubMed Identifier (PMID), a book by its International Standard Book Number (ISBN) and a clinical trial by its ClinicalTrials.gov Identifier (NCT Number) [10].

Depending on the repository, the research output may also be identified by a different ID. For example, the arXiv repository, which indexes articles in mathematics, physics and quantitative biology, uses arXiv IDs [11], and the Social Science Research Network (SSRN), owned by Elsevier, which hosts articles from disciplines such as Health Sciences and the Social Sciences and Humanities, assigns its own SSRN IDs [12].

There are also researcher identifiers, for example, the Open Researcher and Contributor ID (ORCID) [13].
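Because identifiers are what link online activity to a work or a person, tools usually validate them before matching. As a small illustration, ORCID iDs end in a check character computed with the ISO/IEC 7064 MOD 11-2 scheme described in ORCID's documentation. The sketch below implements that check; the function name is hypothetical and no ORCID service is contacted.

```python
import re

# Shape of an ORCID iD: four groups of four characters, last one may be "X".
ORCID_PATTERN = re.compile(r"^\d{4}-\d{4}-\d{4}-\d{3}[\dX]$")

def is_valid_orcid(orcid: str) -> bool:
    """Hypothetical helper: True if `orcid` has the expected shape and a
    correct ISO/IEC 7064 MOD 11-2 check character."""
    if not ORCID_PATTERN.match(orcid):
        return False
    digits = orcid.replace("-", "")
    total = 0
    for ch in digits[:-1]:  # the first 15 digits feed the checksum
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    expected = "X" if result == 10 else str(result)
    return digits[-1] == expected

print(is_valid_orcid("0000-0002-1825-0097"))  # True
print(is_valid_orcid("0000-0002-1825-0098"))  # False: last character altered
```

A mistyped iD is rejected before any attempt to attach publications or mentions to it.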

Only links that contain the work’s identifier are included in the altmetric calculations [6]. This is important to note: it is common to share an image showing an article’s title and authors, but although this is a means of dissemination, it does not count towards the altmetric calculation.
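The rule that only posts carrying the work’s identifier are counted can be illustrated with a simple pattern match. The sketch below looks for a DOI in the text of a post, using a broad pattern modelled on the one Crossref has suggested for modern DOIs. The function name and the example DOI are hypothetical; real providers also recognise publisher URLs, PMIDs and other identifiers, and their exact matching rules are not public.

```python
import re
from typing import Optional

# Broad pattern for modern DOIs: "10." + registrant code + "/" + suffix.
DOI_PATTERN = re.compile(r"10\.\d{4,9}/[-._;()/:A-Za-z0-9]+")

def find_doi(post_text: str) -> Optional[str]:
    """Return the first DOI found in a post, or None if there is none.

    Under this simplified rule, a post without an identifier (for example,
    a screenshot of the title page) cannot be attributed to the article.
    """
    match = DOI_PATTERN.search(post_text)
    if match is None:
        return None
    # Strip sentence punctuation caught at the end of the match.
    # Simplification: a few legacy DOIs genuinely end in ")" and would be cut.
    return match.group(0).rstrip(".,;:)")

print(find_doi("Great read: https://doi.org/10.1234/example.5678."))  # 10.1234/example.5678
print(find_doi("Screenshot of our new paper's title page"))           # None
```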

Attention sources

Altmetrics measure the digital attention that an article receives using data from different online resources. While sources vary widely, the main ones can be grouped into four categories [5, 7, 14,15,16].

- Social networks. Both general social networks (such as Twitter [17], Facebook [18] or YouTube [19]) and scholarly social networks (SlideShare [20], LinkedIn [21], ResearchGate [22] or Academia.edu [23]) can be used by researchers to disseminate publications and network with other professionals [24].

- Online reference managers or bookmarking sites. These allow users to save or insert citations online and share this information with other professionals [25]. Potential interest is measured by the number of users who save a given article. The best known are Zotero [26] and Mendeley [27].

- Blogs. All types of blog, both science blogs and more general ones, are included in altmetric calculations. For a blog to be classified as a science blog (for example, the Researchblogging website [28]), it must not only deal with scientific subject matter but also be produced by respected members of the scientific and academic community (a researcher, university professor, research fellow or even a science journalist) [29]. General blogs can be published by anyone.

- Open access repositories. These are digital platforms for academic research that can be accessed immediately, permanently and free of charge. Examples include Figshare [30] and the Directory of Open Access Journals (DOAJ) at Lund University [31].

In addition to these four groups, many other sources act as altmetric inputs [32], including Faculty of 1000 (F1000) [33], which brings together and evaluates the most important articles published in a given field (including medicine) based on recommendations from a team of scientists; collaborative encyclopaedias such as Wikipedia [34]; policy documents; and social news websites such as Reddit [35].

Type of actions

Published work is disseminated, and professionals often receive it without actively seeking it. If it looks interesting, we may take an active role and share it, download it or comment on it, thus increasing its visibility and dissemination [8]. This affects the article’s social impact.

Each source has an associated action of one type or another [9, 36,37,38]:

- Likes and shares: social networks.

- Viewed: HTML views, PDF downloads.

- Discussed or mentions: blog posts, comments, reviews, policy documents, Wikipedia.

- Saved or captures: bookmarks and saves in electronic reference managers.

Plum X [39] includes a fifth action, citations. This combines traditional citations from citation indices (such as Scopus or PubMed Central) with new types of citation, such as clinical citations (clinical guidelines), policy citations and patent citations, which help evaluate social impact.

Altmetric providers

Several companies offer altmetric services. Each tracks a combination of sources (many of which overlap) and uses its own formula to calculate the attention score [40]. Table 1 summarises the main sources used by each company. The most extensive are Altmetric and Plum X.

These platforms are key research tools with regard to altmetrics and are increasingly used to evaluate articles, authors and research [41].

Altmetric

Altmetric (www.altmetric.com) [42] was created in 2011 by Euan Adie with funding from Digital Science. It is important to distinguish the term altmetrics (the general term for these new “social impact” indicators) from “Altmetric” or the “Altmetric Attention Score” (AAS), which are specific to this company [42].

This company’s services are used by publishers such as Springer, Nature Publishing Group and BioMed Central [9], and it is included in repositories such as the University of Queensland institutional repository.

The altmetric score for this company is referred to as the Altmetric Attention Score (AAS).

Three factors influence the altmetric calculations [42]:

- Volume (how many times an article is mentioned)

- Sources (where the mentions come from)

- Authors (who made each mention, so that the times an author interacts with his or her own work are not counted)

The total AAS is calculated from the sources in which an article has been mentioned and how often [5, 9]. For example, a mention in a blog is worth more than a tweet (Table 2). Exactly how the score is calculated is not disclosed, which makes it impossible for an individual to reproduce it [43].
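Because the exact formula is undisclosed, any reimplementation can only approximate the idea described above: each mention is weighted by its source, and mentions by the article’s own authors are ignored. In the sketch below, the weights are illustrative placeholders chosen only so that a blog post outweighs a tweet; they are an assumption, not Altmetric’s values, and the real AAS applies further adjustments that are not public. The `Mention` type and `attention_score` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Set

# ILLUSTRATIVE placeholder weights (assumption, not the provider's values).
# Sources missing from this table contribute nothing to the score.
SOURCE_WEIGHTS = {"news": 8.0, "blog": 5.0, "wikipedia": 3.0,
                  "twitter": 1.0, "facebook": 0.25}

@dataclass(frozen=True)
class Mention:
    source: str   # where the mention appeared, e.g. "twitter" or "blog"
    account: str  # who posted it

def attention_score(mentions: Iterable[Mention], article_authors: Set[str]) -> float:
    """Source-weighted count of mentions, ignoring the authors' own posts."""
    score = 0.0
    for m in mentions:
        if m.account in article_authors:
            continue  # authors promoting their own work are not counted
        score += SOURCE_WEIGHTS.get(m.source, 0.0)
    return score

mentions = [Mention("blog", "@science_blog"),
            Mention("twitter", "@radiologist"),
            Mention("twitter", "@first_author")]
print(attention_score(mentions, article_authors={"@first_author"}))  # 6.0
```

Changing a single weight in the table changes every score, which is why two providers with different, unpublished weightings cannot be expected to agree.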

Plum X

Plum X was created in 2012 by Andrea Michalek and Michael Buschman of Plum Analytics [39, 44, 45]. In 2017, Plum Analytics was purchased by Elsevier, so Plum X can now track the online activity of any article indexed in the Scopus database.

Plum X divides the sources into five categories [9, 44, 45], each represented by a different colour: usage (green), captures (purple), social media (blue), mentions (yellow) and citations (red). The Plum Print is the graphic display of these data used by Plum X. It does not provide a total score but instead shows the count for each of the five categories, which makes it easier to interpret than the AAS.
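The contrast with a single composite score can be sketched as follows: raw events are grouped into the five Plum X categories and reported as separate counts, with no overall total. The event names and their assignment to categories are assumptions based on the actions listed earlier in this article, not Plum X’s own internal mapping.

```python
from collections import Counter

# Assumed mapping from event type to Plum X category (illustrative only).
CATEGORY_OF_EVENT = {
    "html_view": "usage", "pdf_download": "usage",
    "reference_manager_save": "captures", "bookmark": "captures",
    "like": "social media", "share": "social media", "tweet": "social media",
    "blog_post": "mentions", "comment": "mentions", "wikipedia": "mentions",
    "scopus_citation": "citations", "guideline_citation": "citations",
}
CATEGORIES = ("usage", "captures", "social media", "mentions", "citations")

def plum_style_summary(events):
    """Count events per category; deliberately returns no overall total."""
    counts = Counter(CATEGORY_OF_EVENT[e] for e in events if e in CATEGORY_OF_EVENT)
    return {category: counts[category] for category in CATEGORIES}

print(plum_style_summary(["pdf_download", "tweet", "tweet", "reference_manager_save"]))
# {'usage': 1, 'captures': 1, 'social media': 2, 'mentions': 0, 'citations': 0}
```

Keeping the categories apart avoids having to decide how many downloads a citation is worth, which is exactly the weighting question a single score has to answer.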

Lagotto (PLOS Article-Level Metrics)

Lagotto was the first altmetrics data provider, created in 2009 by the Public Library of Science (PLOS) [45]. It has only been adopted by three publishers (PLOS, Copernicus and Public Knowledge Project) [40].

ImpactStory

ImpactStory was developed by Jason Priem and Heather Piwowar in 2011 and is currently part of the Our Research website [46]. Unlike the providers already mentioned, it builds the altmetric profile of a researcher rather than of a piece of research. To do this, it uses the researcher’s ORCID and Twitter account to create a profile listing their publications that have been mentioned online [40]. Users create their curriculum vitae (CV) by uploading all their work (articles, slide presentations, posters, etc.). ImpactStory then tracks where each item has been cited (using Scopus), where it has been seen and read (Mendeley) and how it has been discussed (tweets and blog comments). For each item, it shows how to cite it, its DOI and its PubMed ID. It also allows users to download their CVs [9].

Crossref Event Data

Crossref Event Data (CED) [47] was created in 2016. It is a more limited tool because, for each event, it only records the information linked to the DOI. For example, it shows that an article has been mentioned on Twitter but not the number of tweets [40].

Altmetrics are not alternative metrics

Many studies have examined whether there is a relationship between altmetrics and the number of citations an article goes on to receive [48], with Twitter being the most studied source.

Several authors [38, 49,50,51,52,53,54] have found a relationship between the number of tweets and the number of citations an article goes on to receive, although this correlation is generally low. Robinson-García [38] found that only 19% of the articles indexed in the Web of Science (WOS) had altmetric data available, while Haustein [54] concluded that less than 10% of the articles indexed in PubMed are mentioned on Twitter. There may be a correlation between the number of tweets an article receives in its first 3 days and the number of citations it goes on to receive [50], but this cannot be confirmed, as only one study has investigated it.
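Because tweet and citation counts are highly skewed, such correlations are commonly reported as rank-based (Spearman) coefficients. A minimal, dependency-free sketch of Spearman’s rho with average ranks for ties is shown below; the counts in the example are invented for illustration and do not come from any study cited here.

```python
from typing import List, Sequence

def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho, computed as the Pearson correlation of the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sum((a - mean_x) ** 2 for a in rx) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in ry) ** 0.5
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sd_x * sd_y)

# Invented toy data: tweets and later citations for six articles.
tweets = [0, 0, 1, 3, 12, 40]
citations = [2, 5, 1, 4, 3, 9]
print(round(spearman_rho(tweets, citations), 2))  # 0.41
```

For real analyses, `scipy.stats.spearmanr` returns the same coefficient together with a p-value.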

Many medical specialities have also analysed the relationship between the AAS (obtained from Altmetric.com), the number of citations an article receives and the impact factor (IF) of the journal in which it is published [55,56,57,58,59,60,61,62,63,64]. Although the results vary considerably between specialities, this correlation is also generally weak.

However, altmetrics appear to be constantly evolving and expanding. Some studies comparing two time periods [55,56,57,58,59,60] have found a stronger correlation between the AAS, the number of citations and the IF for more recent articles.

Different factors can influence the level of social attention an article receives (and, in turn, its Attention Score), including the type of article and its topic. Editorials are the most popular type of article on Twitter (yet they receive fewer citations), whereas longer articles are shared less on social networks but cited more often [65]. Similarly, popular topics such as erectile dysfunction or sexual medicine have a higher Attention Score than other urology articles, even though they are not the most cited [66]. These findings support the idea that altmetrics measure social impact more than the academic or scientific impact measured by BI.

Another line of research has examined the correlation between the number of citations and the number of Mendeley readers (finding a moderate correlation in Nature and Science [67] and in PLoS [68]), and between citations and the appearance of articles in blogs, where the most discussed articles were those that went on to receive the most citations [69].

The strength of the correlation with citations also depends on the provider. Peters [70] compared data from Plum X, ImpactStory and Altmetric.com with citation counts and concluded that, although the correlation was generally low, it was highest for Plum X.

Therefore, as most studies find a low correlation between the AAS and the number of citations an article goes on to receive, altmetrics cannot currently be considered alternatives to traditional BI, because what they measure is a study’s social impact [9]. Popular topics and opinion articles usually have higher attention scores than original articles on specialised topics.

Altmetrics and radiology

Although Twitter is the social network most used by radiologists, its following remains low [71]. Only 14 of the 50 journals with the highest impact factors have a Twitter profile [72]. Instagram is used only to disseminate clinical cases and, in addition, is not included in Attention Score calculations [73].

Blogs and citations have also been studied with regard to radiology, demonstrating that sharing scientific material on a blog promoted on social networks significantly increases the study’s dissemination [74].

At present, there are few original studies in diagnostic imaging that research altmetric dynamics.

Rosenkrantz [75] analysed original articles published in Academic Radiology, American Journal of Roentgenology, Journal of the American College of Radiology and Radiology, comparing their AAS with the number of citations received. Of the 892 articles, almost all had at least one citation in WOS, whereas only 41.8% had an AAS. Mendeley, Twitter and Facebook were the most used sources. The most cited articles were those on topics strictly related to radiology, whereas those on topics that crossed into other fields (for example, educational aspects of radiology) achieved the highest AAS. This is consistent with the social nature of altmetrics: the more cross-disciplinary the topic, the more interest it generates [4, 9].

Neuroimaging is the subspeciality with the highest AAS [76], and Frontiers in Human Neuroscience is the journal with the highest concentration of AAS followed by Radiology and Neurology [77].

Differences according to the type of article, the topic or publication date are also observed in nuclear medicine [78].

In diagnostic imaging, there are many subspecialities and topics for which the workings of altmetrics, or their relationship with the number of citations received, have not been studied. Nor have any studies investigated whether there is a relationship between altmetrics and publication date, as has been done in other specialities. More original studies in our field are therefore needed to reach more solid conclusions.

Altmetrics in Insights into Imaging

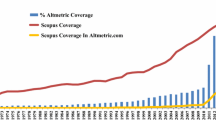

To characterise altmetrics in the journal Insights into Imaging, articles published from 1 July 2019 to 31 October 2020 were analysed. The Altmetric.com browser plug-in was used on each article’s web page to access its Altmetric Attention Score and detailed source information page. The overall score and the number of mentions the article received on each listed online platform were recorded.

Of the 168 articles, 119 (71%) had some altmetric data registered and therefore had an AAS assigned (range 1–112). This proportion is higher than that reported by Rosenkrantz [75], whose research, as discussed above, found that less than half of the articles analysed had any altmetric data, which is consistent with the growth of altmetrics in recent years. As in other specialities, the most used source was Twitter (112 articles received at least one tweet), followed by Facebook (49 articles), blogs (4), news outlets (3) and Wikipedia (1).
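The percentages in this paragraph follow directly from the reported counts. The short check below uses only the figures given above; it reproduces the coverage figure and expresses each source as a share of all 168 articles.

```python
# Counts reported above for Insights into Imaging articles
# published between 1 July 2019 and 31 October 2020.
TOTAL_ARTICLES = 168
WITH_ALTMETRIC_DATA = 119
ARTICLES_PER_SOURCE = {"Twitter": 112, "Facebook": 49, "blogs": 4,
                       "news outlets": 3, "Wikipedia": 1}

def percent(part: int, whole: int) -> float:
    """Share of `whole` represented by `part`, as a percentage to one decimal."""
    return round(100 * part / whole, 1)

# Prints "Articles with an AAS: 70.8%"
print(f"Articles with an AAS: {percent(WITH_ALTMETRIC_DATA, TOTAL_ARTICLES)}%")
for source, n in ARTICLES_PER_SOURCE.items():
    print(f"{source}: {n} articles ({percent(n, TOTAL_ARTICLES)}% of all articles)")
```

The 70.8% rounds to the 71% quoted, and the same counts show that Twitter alone reached two thirds (66.7%) of all the articles analysed.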

The articles with the highest AAS did not share a dominant topic, although articles on artificial intelligence sparked the most interest. As Rosenkrantz [75] observed, the articles with the highest AAS were also more cross-disciplinary (Table 3).

The emerging role of altmetrics and their main limitations

Altmetrics enable us to see the immediate social impact of an article [79], as they are measured in real time and show how the article is shared on the Internet [39]. They also give researchers immediate feedback on the social interest their work has generated [5, 7, 39]. This immediacy contrasts with traditional BI, which add up the citations an article receives over a period of 2 or 3 years [47, 80].

The growing number of scientific publications makes it difficult for professionals to keep up to date. This, together with our increasing use of social networks, means that sharing information on the Internet has become the most common way of keeping ourselves informed. The information we see on our phones can act as a first filter, showing which articles are catching our colleagues’ attention [32].

However, this sharing of scientific material must be interpreted with caution: the fact that an article is shared or discussed on social networks does not guarantee that it is of high quality [32]. On general social networks (such as Twitter or Facebook), any user can share and help disseminate research without being an expert in the subject [47, 81]. In fact, as noted previously, articles on erectile dysfunction have the highest AAS in urology, yet the correlation between their AAS and the number of citations they go on to receive is weak [66].

It should also be borne in mind that altmetrics are expressed as page visits or social network mentions. However, sharing or downloading a study does not necessarily mean that the user has read and understood it, or that it will be useful to the scientific community [79, 82]. Citing a scientific study involves reading the manuscript, analysing it critically, comparing the results and incorporating the citation into the article being drafted, which takes far more effort than retweeting a study [79]. Furthermore, traditional citations can be traced, whereas with altmetrics it is not always clear who is behind a share (users can act under a pseudonym or use several accounts at once) [37], which means that altmetrics can easily be manipulated.

Some authors suggest that altmetrics are useful for assessing early-career researchers who have not yet had the opportunity to accumulate many citations [79]. For this reason, some researchers have incorporated them into their curriculum vitae (CV), for example by stating which of their articles have been recommended by Faculty of 1000 or labelled “Highly Accessed” (that is, downloaded many times), or by including AAS or Plum Print data [42, 83]. However, this puts authors who do not use social networks at a clear disadvantage [32, 37]: a researcher with 2000 followers on Twitter is likely to have higher altmetric indices than one who does not use it.

Beyond their inclusion in CVs, some authors propose using altmetrics as criteria for distributing grant or project funding [32, 84], based on their immediacy. However, giving altmetrics a role in assessing a researcher or research group is problematic, because any researcher or research group can use multiple strategies to increase the social network dissemination of its publications, and in turn its Attention Scores: posting a link to the article on social networks, posting it in blogs, storing it in an institutional repository, adding it to professional profiles such as LinkedIn or Google Scholar, or emailing copies to colleagues and to influential authors in the field [44]. Added to the fact that any social network user can have several identities, this makes it impossible to gauge the real social impact of a study or research group. For this reason, distributing funds according to these indicators does not seem reliable at present.

Finally, another major limitation of altmetrics is that they cannot be reproduced and that the data are inconsistent across providers [79, 85]. We do not really know what formula is used to calculate the AAS or the Plum Print. Is a tweet from a blog that reviews an article worth more than a tweet from a journalist who comments on it? Details of this type are defined by each provider and are not explained, resulting in a lack of transparency [79]. Given the diversity of sources, collecting all the inputs takes a long time, which also contributes to the inconsistency between providers [84]. Table 4 shows the altmetric data for the article “What the radiologist should know about artificial intelligence—an ESR White paper”, published in Insights into Imaging. The data differ depending on whether the AAS or the Plum Print is analysed, even though both providers were consulted on the same day.
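One simple way to make such discrepancies visible is to line up the per-source counts reported by each provider and list where they disagree. The sketch below does this for two dictionaries of counts; the function name, source names and numbers in the example are hypothetical and are not the values in Table 4.

```python
from typing import Dict, List, Tuple

def compare_providers(counts_a: Dict[str, int],
                      counts_b: Dict[str, int]) -> List[Tuple[str, int, int]]:
    """List (source, count_a, count_b) for every source on which two
    providers disagree; a source missing from one provider counts as 0."""
    rows = []
    for source in sorted(set(counts_a) | set(counts_b)):
        a, b = counts_a.get(source, 0), counts_b.get(source, 0)
        if a != b:
            rows.append((source, a, b))
    return rows

# Hypothetical counts for one article, retrieved on the same day.
provider_a = {"twitter": 120, "facebook": 3, "news": 2}
provider_b = {"twitter": 95, "facebook": 3, "mendeley": 400}
for source, a, b in compare_providers(provider_a, provider_b):
    print(f"{source}: {a} vs {b}")
# mendeley: 0 vs 400
# news: 2 vs 0
# twitter: 120 vs 95
```

Treating an untracked source as zero makes coverage gaps show up alongside genuine counting differences.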

That altmetrics cannot be reproduced is due partly to publications existing in several versions (preprints, postprints) and partly to the repositories in which they are included [37], which can generate ambiguity. To improve altmetrics transparency (regarding the way data aggregators obtain and process the information) [9], the National Information Standards Organization (NISO) ran the NISO Altmetrics Initiative between 2013 and 2016 to establish definitions and calculation methods, improve data quality and promote the use of common identifiers to validate altmetric data [86].

Although there is generally a weak relationship between the AAS and the number of citations received, as noted above, the correlation is stronger for more recently published articles [55,56,57,58,59,60, 82]. A recently accepted article is assigned a digital identifier immediately. Editors can use this to identify which articles have gained the most social attention and place them in the earlier issues of the year, thus generating more citations for the journal’s IF [42].

Conclusion

Altmetrics measure the digital attention received by an article using multiple online sources. Because the data are harvested from a large number of sources and there are discrepancies between providers, altmetric data should be interpreted with caution. However, altmetrics are a constantly evolving and expanding concept, with an increasing correlation between the AAS, article citations and IF. The social impact generated by an article can be useful for discovering which articles are the most popular at any given moment and may even help journal editors identify which articles are likely to receive the most citations. However, altmetrics cannot be used to assess new researchers or distribute funds, because the way they are calculated is unknown, they are susceptible to manipulation and there is a high level of inconsistency between providers. For all these reasons, altmetrics are not an alternative to traditional BI, nor will they replace them. In the future, if the methodological limitations of altmetrics are resolved, combining both types of indicator could allow all facets of a researcher to be evaluated.

Availability of data and materials

This article is a narrative review (it does not include a data analysis). This item is not applicable.

Abbreviations

- AAS: Altmetric Attention Score

- BI: Bibliometric indicators

- IF: Impact factor

References

Durieux V, Gevenois PA (2010) Bibliometric indicators: quality measurements of scientific publication. Radiology 255:342–351. https://doi.org/10.1148/radiol.09090626

Alfonso F (2010) The long pilgrimage of Spanish biomedical journals toward excellence. Who helps? Quality, impact and research merit. Endocrinol Nutr 57:110–120. https://doi.org/10.1016/j.endonu.2010.02.003

Choudhri AF, Siddiqui A, Khan NR, Cohen HL (2015) Understanding bibliometric parameters and analysis. Radiographics 35:736–746. https://doi.org/10.1148/rg.2015140036

García Villar C, Santos G (2021) Bibliometric indicators to evaluate scientific activity. Radiologia. https://doi.org/10.1016/j.rx.2021.01.002

Akshai D, Baheti AD, Bhargava P (2017) Altmetrics: a measure of social attention toward scientific research. Curr Probl Diagn Radiol 46:391–392. https://doi.org/10.1067/j.cpradiol.2017.06.005

Priem J, Hemminger BH (2010) Scientometrics 2.0: new metrics of scholarly impact on the social web. First Monday 15:1–16. https://doi.org/10.5210/fm.v15i7.2874

Taberner R (2018) Altmetrics: beyond the impact factor. Actas Dermosifiliogr (Engl Ed) 109:95–97. https://doi.org/10.1016/j.ad.2018.01.002

Priem J, Taraborelli D, Groth P, Neylon C (2010) Alt-metrics: a manifesto. http://altmetrics.org/manifesto/. Accessed 15 Aug 2020

Melero R (2015) Altmetrics a complement to conventional metrics. Biochem Med (Zagreb) 25:152–160. https://doi.org/10.11613/BM.2015.016

Clinical Trials website. https://clinicaltrials.gov/. Accessed 21 July 2020

Arxiv website. https://arxiv.org/. Accessed 20 July 2020

Social Science Research Network website. https://www.ssrn.com/index.cfm/en/. Accessed 30 Sept 2020

ORCID website. https://orcid.org/. Accessed 22 July 2020

Warren HR, Raison N, Dasgupta P (2017) The rise of Altmetrics. JAMA 317:131–132. https://doi.org/10.1001/jama.2016.18346

Priem J, Groth P, Taraborelli D (2012) The Altmetrics collection. PLoS One 7:e48753. https://doi.org/10.1371/journal.pone.0048753

Hogan AM, Winter DC (2017) Changing the rules of the game: how do we measure success in social media? Clin Colon Rectal Surg 30:259–263. https://doi.org/10.1055/s-0037-1604254

Twitter website. https://twitter.com. Accessed 20 Oct 2020

Facebook website. https://www.facebook.com/. Accessed 20 Oct 2020

Youtube website. https://www.youtube.com. Accessed 30 Oct 2020

Slideshare website. https://es.slideshare.net/. Accessed 22 Oct 2020

LinkedIn website. https://es.linkedin.com/. Accessed 23 Sept 2020

Researchgate website. https://www.researchgate.net/. Accessed 20 Oct 2020

Academia.edu website. https://www.academia.edu/. Accessed 1 Nov 2020

Williams AJ, Peck L, Ekins S (2017) The new alchemy: online networking, data sharing and research activity distribution tools for scientists. F1000Res 6:1315. https://doi.org/10.12688/f1000research.12185.1

Li X, Thelwall M, Giustini D (2012) Validating online reference managers for scholarly impact measurement. Scientometrics 91:461–471. https://doi.org/10.1007/s11192-011-0580-x

Zotero website. https://www.zotero.org/. Accessed 1 Nov 2020

Mendeley website. https://www.mendeley.com. Accessed 10 Oct 2020

Researchblogging website. http://researchblogging.org/news/. Accessed 31 Oct 2020

Torres-Salinas D, Cabezas-Clavijo A. Blogs as a new channel of scientific communication. http://eprints.rclis.org/14078/1/Torres-Salinas,_Daniel_y_Cabezas-Clavijo,_Alvaro._Los_blogs_como_nuevo_medio_de_comunicacion_cientifica.pdf. Accessed 28 Feb 2021

Figshare website. https://figshare.com/. Accessed 2 June 2020

Directory of Open Access Journals (DOAJ) website. https://doaj.org/. Accessed 20 July 2020

Crotty D (2017) Altmetrics. Eur Heart J 38:2647–2648. https://doi.org/10.1093/eurheartj/ehx447

Faculty 1000 website. https://f1000.com/. Accessed 1 July 2020

Wikipedia website. https://es.wikipedia.org/wiki/Wikipedia:Portada. Accessed 1 Nov 2020

Reddit website. https://www.reddit.com/. Accessed 15 Sept 2020

Bornmann L, Haunschild R (2017) Does evaluative scientometrics lose its main focus on scientific quality by the new orientation towards societal impact? Scientometrics 110:937–943. https://doi.org/10.1007/s11192-016-2200-2

Butler JS, Kaye ID, Sebastian AS et al (2017) The evolution of current research impact metrics: from bibliometrics to altmetrics? Clin Spine Surg 30:226–228. https://doi.org/10.1097/BSD.0000000000000531

Robinson-García N, Torres-Salinas D, Zahedi Z, Costas R (2014) New data, new possibilities: exploring the insides of Altmetric.com. El profesional de la información 23:359–366. https://doi.org/10.3145/epi.2014.jul.03

PlumX website. https://plumanalytics.com/learn/about-metrics/. Accessed 29 Oct 2020

Meyer HS, Artino AR, Maggio LA (2017) Tracking the Scholarly conversation in health professions education: an introduction to altmetrics. Acad Med 92:1501. https://doi.org/10.1097/ACM.0000000000001872

Ortega JL (2020) Altmetrics data providers: a meta-analysis review of the coverage of metrics and publications. El profesional de la información 1:e290107. https://doi.org/10.3145/epi.2020.ene.07

Altmetric website. https://www.altmetric.com/. Accessed 1 Nov 2020

Elmore SA (2018) The Altmetric Attention Score: what does it mean and why should I care? Toxicol Pathol 46:252–255. https://doi.org/10.1177/0192623318758294

Trueger NS, Thoma B, Hsu CH, Sullivan D, Peters L, Lin M (2015) The Altmetric Score: a new measure for article-level dissemination and impact. Ann Emerg Med 66:549–553. https://doi.org/10.1016/j.annemergmed.2015.04.022

Akers KG (2017) Introducing altmetrics to the Journal of the Medical Library Association. J Med Libr Assoc 105:213–215. https://doi.org/10.5195/jmla.2017.250

Our Research website. https://our-research.org/. Accessed 1 Nov 2020

CrossRef Data website. https://www.crossref.org/. Accessed 22 Oct 2020

Huang W, Wang P, Wu O (2018) A correlation comparison between Altmetric Attention Scores and citations for six PLOS journals. PLoS One 13:e0194962. https://doi.org/10.1371/journal.pone.0194962

Thelwall M, Haustein S, Larivière V, Sugimoto CR (2013) Do altmetrics work? Twitter and ten other social web services. PLoS One 8:e64841. https://doi.org/10.1371/journal.pone.0064841

Eysenbach G (2011) Can tweets predict citations? Metrics of social impact based on Twitter and correlation with traditional metrics of scientific impact. J Med Internet Res 13:e123. https://doi.org/10.2196/jmir.2012

Xia F, Su X, Wang W, Zhang C, Ning Z, Lee I (2016) Bibliographic analysis of Nature based on Twitter and Facebook altmetrics data. PLoS One 11:e0165997. https://doi.org/10.1371/journal.pone.0165997

Ladeiras-Lopes R, Clarke S, Vidal-Perez R, Alexander M, Lüscher TF (2020) Twitter promotion predicts citation rates of cardiovascular articles: a preliminary analysis from the ESC Journals Randomized Study. Eur Heart J 41:3222–3225. https://doi.org/10.1093/eurheartj/ehaa211

De Winter J (2015) The relationship between tweets, citations, and article views for PLoS One articles. Scientometrics 102:1773–1779. https://doi.org/10.1007/s11192-014-1445-x

Haustein S, Peters I, Sugimoto CR, Thelwall M, Larivière V (2014) Tweeting biomedicine: an analysis of tweets and citations in the biomedical literature. J Assoc Inf Sci Technol 65:656–669. https://doi.org/10.1002/asi.23101

Nocera AP, Boyd CJ, Boudreau H, Hakim O, Rais-Bahrami S (2019) Examining the correlation between Altmetric Score and citations in the Urology Literature. Urology 134:45–50. https://doi.org/10.1016/j.urology.2019.09.014

Powell AG, Bevan V, Brown C, Lewis WG (2018) Altmetric versus bibliometric perspective regarding publication impact and force. World J Surg 42:2745–2756. https://doi.org/10.1007/s00268-018-4579-9

Mullins CH, Boyd CJ, Corey BL (2020) Examining the correlation between Altmetric Score and Citations in the General Surgery literature. J Surg Res 248:159–164. https://doi.org/10.1016/j.jss.2019.11.008

Chang J, Desai N, Gosain A (2019) Correlation between Altmetric Score and citations in Pediatric Surgery Core Journals. J Surg Res 243:52–58. https://doi.org/10.1016/j.jss.2019.05.010

Kunze KN, Polce EM, Vadhera A et al (2020) What is the predictive ability and academic impact of the Altmetrics Score and Social Media Attention? Am J Sports Med 48:1056–1062. https://doi.org/10.1177/0363546520903703

Kaul V, Bhan R, Stewart NH et al (2019) Study comparing traditional versus Alternative Metrics to measure the impact of the critical care medicine literature. Crit Care Explor 1:e0028. https://doi.org/10.1097/CCE.0000000000000028

Allen HG, Stanton TR, Di Pietro F, Moseley GL (2013) Social media release increases dissemination of original articles in the clinical pain sciences. PLoS One 8:e68914

Ruan QZ, Chen AD, Cohen JB, Singhal D, Lin SJ, Lee BT (2018) Alternative metrics of scholarly output: the relationship among Altmetric Score, Mendeley reader Score, citations and downloads in Plastic and Reconstructive Surgery. Plast Reconstr Surg 141:801–809. https://doi.org/10.1097/PRS.0000000000004128

Fassoulaki A, Vassi A, Kardasis A, Chantziara V (2018) Altmetrics should not be used for ranking of anaesthesia journals. Br J Anaesth 121:514–516

Barakat AF, Nimri N, Shokr M et al (2019) Correlation of Altmetric Attention Score and Citations for high-impact General Medicine journals: a cross-sectional study. J Gen Intern Med 34:825–827. https://doi.org/10.1007/s11606-019-04838-6

Haustein S, Costas R, Larivière V (2015) Characterizing social media metrics of scholarly papers: the effect of document properties and collaboration patterns. PLoS One 10:e0120495. https://doi.org/10.1371/journal.pone.0120495

O’Connor EM, Nason GJ, O’Kelly F, Manecksha RP, Loeb S (2017) Newsworthiness vs scientific impact: are the most highly cited urology papers the most widely disseminated in the media? BJU Int 120:441–454. https://doi.org/10.1111/bju.13881

Li X, Thelwall M, Giustini D (2012) Validating online reference managers for scholarly impact measurement. Scientometrics 91:461–471

Priem J, Piwowar HA, Hemminger BM (2012) Altmetrics in the wild: using social media to explore scholarly impact. ArXiv.org. http://arxiv.org/abs/1203.4745. Accessed 21 Jan 2013

Shema H, Bar-Ilan J, Thelwall M (2014) Do blog citations correlate with a higher number of future citations? Research blogs as a potential source for alternative metrics. J Assoc Inf Sci Technol 65:1018–1027. https://doi.org/10.1002/asi.23037

Peters I, Kraker P, Lex E, Gumpenberger C, Gorraiz J (2016) Research data explored: an extended analysis of citations and altmetrics. Scientometrics 107:723–744. https://doi.org/10.1007/s11192-016-1887-4

Prabhu V, Rosenkrantz AB (2015) Enriched audience engagement through Twitter: should more Academic Radiology Departments seize the opportunity? J Am Coll Radiol 12:756–759. https://doi.org/10.1016/j.jacr.2015.02.016

Kelly BS, Redmond CE, Nason GJ, Healy GM, Horgan NA, Heffernan EJ (2016) The use of Twitter by radiology journals: an analysis of twitter activity and impact factor. J Am Coll Radiol 13:1391–1396. https://doi.org/10.1016/j.jacr.2016.06.041

Prabhu V, Munawar K (2020) Radiology on Instagram: analysis of public accounts and identified areas for content creation. Acad Radiol. https://doi.org/10.1016/j.acra.2020.08.024

Hoang JK, McCall J, Dixon AF, Fitzgerald RT, Gaillard F (2015) Using social media to share your Radiology Research: how effective is a blog post? J Am Coll Radiol 12:760–765. https://doi.org/10.1016/j.jacr.2015.03.048

Rosenkrantz AB, Abimbola A, Singh K, Duszak R (2017) Alternative Metrics (“Altmetrics”) for assessing article impact in popular general Radiology Journals. Acad Radiol 24:891–897. https://doi.org/10.1016/j.acra.2016.11.019

Moon JY, Yun EJ, Yoon DY et al (2020) Analysis of the altmetric top 100 articles with the highest altmetric attention scores in medical imaging journals. Jpn J Radiol 38:630–635. https://doi.org/10.1007/s11604-020-00946-0

Kim ES, Yoon DY, Kim HJ et al (2019) The most mentioned neuroimaging articles in online media: a bibliometric analysis of the top 100 articles with the highest Altmetric Attention Scores. Acta Radiol 60:1680–1686. https://doi.org/10.1177/0284185119843226

Baek S, Yoon DY, Lim KL et al (2020) Top-cited articles versus top Altmetric articles in nuclear medicine: a comparative bibliometric analysis. Acta Radiol. https://doi.org/10.1177/0284185120902391

Dinsmore A, Allen L, Dolby K (2014) Alternative perspectives on impact: the potential of ALMs and Altmetrics to inform funders about research impact. PLoS Biol 12:e1002003. https://doi.org/10.1371/journal.pbio.1002003

Wang J (2013) Citation time window choice for research impact evaluation. Scientometrics 94:851–872. https://doi.org/10.1007/s11192-012-0775-9

Das AK, Mishra S (2014) Genesis of altmetrics or article-level metrics for measuring efficacy of scholarly communications: current perspectives. J Scientometr Res 3:82–92. https://doi.org/10.4103/2320-0057.145622

Cheung M (2013) Altmetrics: too soon for use in assessment. Nature 494:176. https://doi.org/10.1038/494176d

Piwowar H, Priem J (2013) The power of altmetrics on a CV. Bull Am Soc Inf Sci Technol 39:10–13. https://doi.org/10.1002/bult.2013.1720390405

Thelwall M (2020) The pros and cons of the use of Altmetrics in Research Assessment. Sch Assess Rep 2:2–9. https://doi.org/10.29024/sar.10

Zahedi Z, Fenner M, Costas R (2015) How consistent are altmetric providers? Study of 1000 PLoS One publications using the PLOS ALM, Mendeley and Altmetric.com APIs. J Contrib 4:5. https://doi.org/10.6084/m9.figshare.1041821.v2

National Information Standards Organization (NISO) website. https://www.niso.org/standards-committees/altmetrics. Accessed 25 Sept 2020

Funding

Not applicable.

Author information

Contributions

CGV is the sole author of this article and carried out the bibliographic search, reviewed the references and wrote the manuscript. CGV read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The author declares no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

García-Villar C (2021) A critical review on altmetrics: can we measure the social impact factor? Insights Imaging 12:92. https://doi.org/10.1186/s13244-021-01033-2

DOI: https://doi.org/10.1186/s13244-021-01033-2