Abstract

Background

Attenuated social attention is a key marker of autism spectrum disorder (ASD). Recent neuroimaging findings also emphasize an altered processing of sensory salience in ASD. The locus coeruleus–norepinephrine system (LC-NE) has been established as a modulator of this sensory salience processing (SSP). We tested the hypothesis that altered LC-NE functioning contributes to different SSP and results in diverging social attention in ASD.

Methods

We analyzed the baseline eye-tracking data of the EU-AIMS Longitudinal European Autism Project (LEAP) for subgroups of autistic participants (n = 166, age = 6–30 years, IQ = 61–138, gender [female/male] = 41/125) and participants with neurotypical development (TD; n = 166, age = 6–30 years, IQ = 63–138, gender [female/male] = 49/117) that were matched for demographic variables and data quality. Participants watched brief movie scenes (k = 85) depicting humans in social situations (human) or without humans (non-human). SSP was estimated by gazes on physical and motion salience and a corresponding pupillary response that indexes phasic activity of the LC-NE. Social attention was estimated by gazes on faces via manual areas of interest definition. SSP was compared between groups and related to social attention by linear mixed models that consider temporal dynamics within scenes. Models were controlled for comorbid psychopathology, gaze behavior, and luminance.

Results

We found no group differences in gazes on salience, whereas pupillary responses were associated with altered gazes on physical and motion salience. In ASD compared to TD, we observed pupillary responses that were higher for non-human scenes and lower for human scenes. In ASD, we observed lower gazes on faces across the duration of the scenes. Crucially, this different social attention was influenced by gazes on physical salience and moderated by pupillary responses.

Limitations

The naturalistic study design precluded experimental manipulations and stimulus control, while effect sizes were small to moderate. Covariate effects of age and IQ indicate that the findings differ between age and developmental subgroups.

Conclusions

Pupillary responses as a proxy of LC-NE phasic activity during visual attention are suggested to modulate sensory salience processing and contribute to attenuated social attention in ASD.

Background

Visual attention is driven by relevant stimuli [1] that are defined by an interaction of sensory salience and semantic content [2]. Sensory salience describes conspicuous stimulus features [3], whereas semantic content describes acquired knowledge about stimuli [4]. Human faces are particularly relevant and drive human visual attention [5]. Autism spectrum disorder (ASD) is characterized by an attenuated visual attention to human faces [6]. This has usually been explained by an altered semantic content of social stimuli such as an attenuated reward attribution [7, 8]. However, different processing of sensory salience in ASD might also drive attenuated visual attention to human faces. This opposing hypothesis is tested in the current study.

We define this sensory salience processing (SSP) as the consideration of conspicuous stimulus features in visual attention. SSP differs from subjective salience estimation, which is based on semantic content and includes reward processing that is altered in ASD [8]. Sensory salience is represented in early sensory processing areas such as the superior colliculi (SC) [9] and the primary visual cortex [10] by a saliency map of visual input that emphasizes sensory distinctive features [3], which are then prioritized in stimulus-driven attention [11]. In ASD, a resting-state hyperconnectivity within these sensory processing areas has been reported [12, 13]. A meta-analysis in ASD further reported elevated activity in the visual cortex during non-social visual processing [14]. These neuroimaging findings indicate increased processing of sensory salience in ASD, irrespective of semantic content [12], which may explain pronounced attention to sensory distinctive features [15] and clinical symptoms of altered sensory reactivity [16].

The locus coeruleus–norepinephrine (LC-NE) system mediates SSP [17] among other mechanisms of neuromodulation [18, 19]. The LC-NE stands out [20] by the ability to increase the signal-to-noise ratio in synaptic signal transmission [21] via transient activity spikes (phasic activity) that occur in response to salience [17, 22]. This LC-NE reactivity to salience represents a neurophysiological filter mechanism of sensory selectivity in early sensory processing [23], which has been explained by connectivity of the pontine LC with the SC [24] and cortical networks of salience estimation [22, 25]. The LC-NE phasic activity releases norepinephrine in sensory processing areas [20, 26] and, thus, emphasizes the sensory processing of salient stimuli [27, 28].

This subcortical mediation of SSP by LC-NE phasic activity has been suggested to underlie stimulus-driven visual attention [29, 30]. In ASD, altered stimulus-driven attention may correspond to an impaired attention disengagement [31] and a slower identification of global versus local information [32]. Thus, we have proposed altered LC-NE phasic activity in SSP as a key mechanism of altered visual attention in ASD that might propagate to attenuated gazes on human faces [33].

LC-NE phasic activity is indexed by changes in pupil size [34, 35, 36] when controlled for luminance adaptation [37]. While this pupil size is not a continuous activity readout, spikes in activity characterize LC-NE phasic activity and are reflected in pupillary responses [38, 39]. Previous studies in ASD have shown attenuated pupillary responses for targets in neuropsychological [40, 41] and social-cognitive tasks [42, 43], as well as elevated pupillary responses during visuospatial tasks [44, 45]. The visual stimuli in these different tasks share an inherent utility for performance, i.e., they represent semantic content. Thus, these contrasting findings of pupillary reactivity in ASD might be associated with an attenuated adaptation of the LC-NE activity in ASD [46] that results in diverging sensory reactivity to different semantic contents. Recently, an attenuated sensory reactivity has been reported in autistic children, where attenuated pupillary reactivity to oddball stimuli likely indexes a reduced LC-NE phasic activity to semantic content [47, 48]. However, no previous study has explored pupillary reactivity in response to sensory salience, which might alternatively explain diverging sensory reactivity to visual stimuli in ASD.

The sensory salience of stimuli can be quantified by using computer vision approaches that aim to reconstruct human sensory processing in the visual cortex [3]. Algorithms convert input stimuli into an output that highlights sensory conspicuous features (e.g., high contrast) and, thus, approximate saliency maps in human sensory processing [49]. In a seminal paper, these algorithms were applied to extract the sensory salience of naturalistic images that were presented to adult participants [15]. Subsequent gaze analysis showed that the visual attention in autistic adults compared to neurotypical controls was driven more by sensory salience than by semantic content. This supports an increased SSP in ASD [12], although a direct measure of reactivity to sensory salience was missing. Pupillary responses as an index of LC-NE phasic activity can provide such a reactivity measure of SSP. This requires relating pupillary responses to saliency maps in the gaze analysis of visual attention.

Visual attention has often been investigated by assessing gazes on static images [50]. However, static images insufficiently represent naturalistic gaze behavior in dynamic visual attention [51]. In meta-analyses on ASD compared to neurotypical development (TD), gazes on eyes and faces are attenuated [6, 52], while the attenuation is emphasized for dynamic stimuli such as naturalistic videos [51, 53]. In these gaze analyses, relevant semantic content is usually predefined by areas of interest (AOI) [54]. The combination of computer-generated saliency maps and AOI would allow one to quantify the association of sensory salience and semantic content in naturalistic visual attention.

Sensory salience is not independent of semantic content [55, 56]. SSP is also reactive to semantic content such as reward or faces [57]. Thus, LC-NE phasic activity is likely to be evoked by sensory salience and semantic content, which is supported by pupillary responses to sensory [17] as well as semantic [58] aspects. However, an evaluation of semantic content requires higher-order processing and is expected to be slower than the subcortical SSP [59]. This difference in processing time has been utilized to dissociate neurophysiological reactivity into sensory salience and semantic content by investigating the temporal dynamics of pupillary responses [60, 61].

We aimed to explore SSP as a determinant of social attention, which is described by gazes on semantic content like human faces. The study assessed autistic individuals compared to neurotypical controls during the visual exploration of video scenes with humans and without humans. We estimated SSP by (1) pupillary responses as an index of LC-NE phasic activity and (2) gaze analysis of computer-generated saliency maps for sensory salience. These estimates of SSP were compared between groups and associated with gazes on human faces. In addition, temporal dynamics were considered by modeling time and dissecting the pupillary responses into temporal components. We hypothesized [A] an association of pupillary responses to and gazes on sensory salience across groups to establish pupillary responses as an index of reactivity to sensory salience. We further hypothesized [B] group differences in pupillary responses to and gazes on sensory salience to establish different SSP in ASD during visual exploration. Lastly, we hypothesized [C] attenuated gazes on faces in ASD that are moderated by SSP. Collectively, this would support LC-NE phasic activity as a shared underlying mechanism of altered SSP and attenuated social attention in ASD.

Methods

Sample

We included n = 166 ASD participants and n = 166 matched neurotypical controls (TD) aged 6 to 30 (Table 1). The sample is a subsample of the project “European Autism Interventions—A Multicentre Study for Developing New Medications (EU-AIMS) Longitudinal European Autism Project (LEAP) study” [62] of participants with sufficient eye-tracking data (73.6%). We excluded participants (see Fig. 1) with missing demographic data, a low sampling rate likely due to a false eye-tracker configuration (Hz < 120), particularly low or high IQ scores (IQ < 60 | IQ > 140), and ASD participants with an Autism Diagnostic Observation Schedule (Second Edition; ADOS-2) Calibrated Severity Score (CSS) below the clinical cutoff (CSS < 4) [63]. Groups were matched based on initial group differences in perceptual IQ (i.e., a non-verbal IQ estimate based on the block design and matrix reasoning subtests of the age-appropriate Wechsler Intelligence Scale; WAIS-IV, [64]; WISC-IV, [65]), chronological age, and data quality (eye-tracking accuracy and precision, available data) by the nearest neighbor method with 0.4 SD tolerance.
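
The matching step can be illustrated with a minimal sketch of greedy one-to-one nearest-neighbor matching with a caliper. It assumes a single matching covariate and interprets the 0.4 SD tolerance as a caliper in pooled standard deviations. The function name `match_nearest_neighbor` and the data layout are hypothetical, and the study matched on several variables at once (perceptual IQ, age, data quality), which this sketch does not reproduce.

```python
from statistics import stdev


def match_nearest_neighbor(cases, controls, caliper_sd=0.4):
    """Greedy 1:1 nearest-neighbor matching on one covariate with a caliper.

    cases, controls: dicts mapping a participant id to a covariate value.
    caliper_sd: maximum allowed distance, in pooled standard deviations
                (assumed reading of the 0.4 SD tolerance).
    Returns a list of (case_id, control_id) pairs; unmatched cases are dropped.
    """
    pooled = list(cases.values()) + list(controls.values())
    caliper = caliper_sd * stdev(pooled)
    available = dict(controls)  # controls that are not yet matched
    pairs = []
    for case_id, value in cases.items():
        if not available:
            break
        # Closest remaining control on the covariate
        best = min(available, key=lambda cid: abs(available[cid] - value))
        if abs(available[best] - value) <= caliper:
            pairs.append((case_id, best))
            del available[best]  # matching without replacement
    return pairs
```

Greedy matching depends on the order in which cases are processed; optimal matching or a multivariate distance would be alternatives when several covariates are matched jointly.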

Sample flowchart. The raw data of n = 543 were available. We excluded data at the participant level, based on the depicted criteria in Methods. ASD = Autism spectrum disorder, TD = neurotypical controls, Hz = eye-tracker sampling rate, ADOS CSS = Autism Diagnostic Observation Schedule (ADOS-2) Calibrated Severity Score

Procedure

The EU-AIMS LEAP study assessed longitudinal data of a heterogeneous sample of autistic and neurotypical individuals with clinical characterization, neurocognition, genetics, magnetic resonance imaging, electroencephalography, and eye tracking [66]. The current investigation focused on the baseline eye-tracking assessments, which were carried out at six site-specific eye-tracking laboratories with luminance that was adapted for optimal eye detection (Lux: m = 164, SD = 109). Eye tracking was recorded by remote eye trackers (Tobii T120 or TX300, Stockholm, Sweden) at 120 Hz or 300 Hz with a target distance of 65 cm, while the heads could be moved freely. Differences between sites were controlled for in the statistical models by random intercepts for participant.

We analyzed the presentation of natural scenes, which was part of a larger eye-tracking assessment battery [67]. Nine naturalistic videos were presented without a task. Videos were movie clips and cartoons of two categories: (1) humans in social scenes (labeled: human) or (2) scenes without humans (labeled: non-human, Additional file 1: Table S1). Videos were presented for each participant in a pseudo-random order on 17- or 23-inch displays with a fixed display area of 345 × 259 mm. Videos were displayed with audio, although no speech was involved to ensure comparability across multinational sites.

Data processing

A data processing workflow is provided (Fig. 2) and further outlined in the following.

Data processing workflow. Schematic description of the data processing and analysis procedures. a Experimental data: Scene frames and eye-tracking raw data were linked by timestamps. b Data processing: Scene frames were transformed into two saliency maps by salience estimation algorithms (physical salience, motion salience) and the areas of interest (eyes, mouth, face, body) which were manually defined. Eye-tracking raw data were preprocessed according to recent recommendations [69], and gaze information was estimated by Grafix Software [68]. c Statistical analysis: Individual gaze information was matched to both saliency maps and areas of interest and related in the linear mixed models to pupillary reactivity as a proxy of LC-NE phasic activity

Gaze location identification

In the eye-tracking raw data, gaze events were identified by the Grafix software [68]; for both eyes, raw gaze information data were smoothed temporally (20 samples) and spatially (8 mm). We linearly interpolated missing data up to 100 ms within a displacement threshold of 1° (of the visual angle). Gaze events were differentiated into fixations (i.e., gaze on location) and saccades (i.e., fast eye movements) by a velocity threshold of 20° per second. Consecutive fixations with displacements less than 0.5° were merged. Fixations were only considered valid if they had a duration of longer than 100 ms and a root mean square of less than 1°. Fixations were considered as gaze locations in the present analysis. A comparison of gaze locations for each video (Fig. 3) and gaze behavior metrics (Table 1) suggested comparable gaze behavior between groups.
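
The velocity-threshold step described above can be sketched as follows. This is a minimal illustration, not the Grafix implementation: it assumes gaze positions that are already converted to degrees of visual angle and already smoothed and interpolated, and the function names `classify_samples` and `fixation_durations_ms` are hypothetical. The merging of nearby fixations and the root-mean-square criterion are omitted.

```python
import math


def classify_samples(xs_deg, ys_deg, sampling_rate_hz, velocity_threshold=20.0):
    """Label each sample-to-sample step as 'fixation' or 'saccade'.

    Positions are in degrees of visual angle; velocity is in degrees/second.
    A step faster than the threshold (20 deg/s in the text) is a saccade.
    """
    dt = 1.0 / sampling_rate_hz
    labels = []
    for i in range(1, len(xs_deg)):
        dist = math.hypot(xs_deg[i] - xs_deg[i - 1], ys_deg[i] - ys_deg[i - 1])
        velocity = dist / dt
        labels.append("saccade" if velocity > velocity_threshold else "fixation")
    return labels


def fixation_durations_ms(labels, sampling_rate_hz, min_duration_ms=100.0):
    """Collapse consecutive fixation steps into runs and keep long ones.

    Only runs longer than min_duration_ms (100 ms in the text) are kept.
    """
    step_ms = 1000.0 / sampling_rate_hz
    durations = []
    run = 0
    for label in labels + ["saccade"]:  # sentinel closes the final run
        if label == "fixation":
            run += 1
        else:
            if run * step_ms > min_duration_ms:
                durations.append(run * step_ms)
            run = 0
    return durations
```

At 120 Hz, a displacement of more than about 0.17° between two consecutive samples exceeds the 20°/s threshold, which is why temporal and spatial smoothing before classification matters.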

Gaze behavior between groups on the nine naturalistic videos. Gaze behavior represents gaze locations as fixations on the screen that are visualized as heatmaps. Blue colors represent a low number of fixations; green colors represent an average number of fixations; and red colors represent a high number of fixations on the respective video. Of note, the naturalistic videos are segmented to individual scenes in the statistical analysis

Scene segmentation

We considered visual attention as a dynamic process in the progression of a coherent scene. Thus, we manually segmented the nine naturalistic videos based on camera cuts into a total of 85 scenes. Each scene was assigned to the corresponding video category (human versus non-human). These scenes varied in duration (m/SD = 4001 ms/3543 ms; see Additional file 1: Figure S1) and were truncated in the analysis after 5000 ms to allow for a comparison of the scenes within statistical modeling. We expected that a time scale of 5000 ms would allow for a sufficient interplay of sensory salience and semantic content [60].

Pupillary response

Pupil dilation data were provided by the eye-tracking raw data and were preprocessed according to recent recommendations [69]. The onset of a scene did not induce a pupillary light reflex (see Additional file 1: Figure S2). Human scenes were associated with a larger absolute pupil size that was accompanied by a lower luminance in the naturalistic human scenes (see Fig. 4). Pupil size data were standardized by dividing them by each participant’s absolute mean pupil size, while absolute mean pupil size did not differ between groups (Table 1). These standardized pupil dilation data were then aggregated for each gaze location and temporally shifted by 200–400 ms based on the subsequent fixation time point, to account for a delay in pupillary response to presented stimuli [34]. This provided an index of change in pupil size as a measure of relative pupillary response to fixated stimulus features.
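
The standardization and temporal shift can be illustrated with a short sketch. It assumes that the 200–400 ms shift is implemented as a lagged averaging window after fixation onset, which is one possible reading of the description; the function names `standardize_pupil` and `pupillary_response` and the data layout are hypothetical.

```python
from statistics import mean


def standardize_pupil(pupil_mm):
    """Divide each sample by the participant's mean pupil size."""
    participant_mean = mean(pupil_mm)
    return [p / participant_mean for p in pupil_mm]


def pupillary_response(times_ms, pupil_std, fixation_onset_ms,
                       lag_ms=(200.0, 400.0)):
    """Mean standardized pupil size in a lagged window after fixation onset.

    The window [onset + 200 ms, onset + 400 ms] is an assumed implementation
    of the temporal shift. Returns None if no sample falls into the window.
    """
    start = fixation_onset_ms + lag_ms[0]
    end = fixation_onset_ms + lag_ms[1]
    window = [p for t, p in zip(times_ms, pupil_std) if start <= t <= end]
    return mean(window) if window else None
```

Values above 1 then indicate a pupil that is larger than the participant's average during the lagged window, and values below 1 a smaller one.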

Comparison of physical salience, motion salience and luminance across scenes. The x-axis represents the progression of the video scenes (0–5000 ms), and the y-axis represents the extracted estimates of physical salience (top) or motion salience (middle) or luminance (bottom). The estimates are provided in percentage values of total possible salience or luminance in the current frame. Solid lines represent general additive model fits and shaded areas represent 95% confidence intervals. The physical and motion salience represent overall scene salience estimates of the current frame, which are different to the measures of gazes on physical and gazes on motion salience that represent salience estimates of the current gaze location and are applied in the statistical analysis

We further extracted two temporal components from the preprocessed pupil dilation data by principal component analysis (PCA) with varimax rotation [60]. These two components provided a temporally meaningful loading structure and provided the best fit with the fewest components (Additional file 1: Figure S3; Additional file 1: Table S2). We multiplied the pupillary response during each fixation based on time by the corresponding loadings on the first or second component; this provided measures of the early-weighted (PR1) and late-weighted (PR2) pupillary responses.

Salience estimation

We applied the open-source computer vision library (OpenCV 4.4.0) via custom Python scripts (Python version 3.8) [70] to extract physical and motion salience information, which are considered relevant modalities of sensory salience [49]. This is based on Itti and Koch’s computational model of visual attention [3], which aims to generate saliency maps that replicate bottom-up selective sensory processing up to the primary visual cortex [10]. For each sequential video frame, the input data were the RGB matrix (2-D picture information), and the output data were separate grayscale matrices for physical and motion salience. Physical salience was estimated by spectral residual analysis [71] that identifies non-redundant information and has been considered a “fast and effective” estimator of early visual sensory processing [49]. Motion salience was estimated by Gaussian mixture probability density [72] that subtracts spatial background information by comparing arrays of weighted frames (alpha factor = 0.002) and delivers proto-object recognition in sequential data without a priori semantic knowledge [73].

A comparison of physical and motion salience between video scene categories (human versus non-human) is provided below (Fig. 4). Physical salience and motion salience were weakly correlated (r(9938) = 0.11, p < 0.001) and thus are considered as distinct features of sensory salience. Physical salience and motion salience were matched to corresponding gaze locations to derive individual estimates of gazes on sensory salience for each video frame.
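
Matching a gaze location to a saliency map amounts to a pixel lookup. The sketch below assumes an 8-bit grayscale saliency map stored as a list of rows and gaze coordinates that are already expressed in frame pixels; the function name `salience_at_gaze` and the scaling to a 0–1 range are illustrative assumptions.

```python
import math


def salience_at_gaze(saliency_map, gaze_x, gaze_y, max_value=255):
    """Return the salience at the gaze pixel as a fraction in [0, 1].

    saliency_map: list of rows, each a list of grayscale values (0..max_value).
    gaze_x, gaze_y: gaze position in frame pixels (x = column, y = row).
    Returns None when the gaze falls outside the frame.
    """
    height = len(saliency_map)
    width = len(saliency_map[0])
    col = math.floor(gaze_x)
    row = math.floor(gaze_y)
    if not (0 <= row < height and 0 <= col < width):
        return None
    return saliency_map[row][col] / max_value
```

Applying the same lookup to the physical and the motion saliency map of the frame shown at fixation time yields the two gaze-on-salience estimates per gaze location.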

Luminance estimation

We estimated luminance with the RGB matrix of the video frames [74]. RGB values were gamma-corrected, converted to linear scales and transformed to a coefficient of luminance (L = 0.2126 * R + 0.7152 * G + 0.0722 * B). Luminance was weakly correlated with physical salience (r(9938) = 0.02, p = 0.013) and motion salience (r(9938) = 0.12, p < 0.001) (see Fig. 4). A 2-D convolutional smooth with a Gaussian kernel (sigma = 10, x and y = 10% of frame size) was applied as a blur filter to estimate a local luminance within video frames. Gaze locations were matched to the corresponding local luminance to derive the luminance of each gaze location.
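
The per-pixel luminance computation can be sketched as below. The luminance coefficients are those given in the text; the linearization uses the standard sRGB transfer function, which is an assumption because the exact gamma correction is not specified. The function names are hypothetical, and the Gaussian blur for local luminance is not included.

```python
def srgb_to_linear(channel_8bit):
    """Convert an 8-bit sRGB channel value (0..255) to linear light (0..1).

    Uses the standard sRGB transfer function (assumed, not stated in the text).
    """
    c = channel_8bit / 255.0
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(r, g, b):
    """Luminance coefficient L = 0.2126 R + 0.7152 G + 0.0722 B on linear RGB."""
    return (0.2126 * srgb_to_linear(r)
            + 0.7152 * srgb_to_linear(g)
            + 0.0722 * srgb_to_linear(b))
```

The large weight of the green channel reflects the higher sensitivity of human vision to green light, so a pure green pixel is far brighter in this measure than a pure blue one.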

Gazes on faces

We defined the eyes, mouth, face, and body of humans as the areas of interest (AOI). This was achieved by manually drawing these areas for each video frame (supplemental information S15). AOI were only available in scenes that depicted humans (k = 36) and some of these scenes were only presented to adolescent and adult participants (k = 21); this was controlled for in the linear mixed models. The proportion of gaze locations within each AOI was applied as an individual estimate of gaze allocation in the progression of a video scene. This estimate is independent of absolute screen attention, which did not differ between groups (see Table 1). We focused our analysis on gazes on faces as the video scenes predominantly consisted of wide shots that were not close enough to reliably differentiate between the eyes and mouth AOI. Recent research has emphasized gazes on faces compared to gazes on eyes as a robust group difference between ASD and TD [75].
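
The proportion of gaze locations within an AOI can be sketched as follows. The sketch assumes a rectangular AOI given in frame pixels, whereas the manually drawn AOI in the study may have had other shapes; the function name `proportion_in_aoi` is hypothetical.

```python
def proportion_in_aoi(gaze_points, aoi):
    """Share of gaze locations that fall inside a rectangular AOI.

    gaze_points: list of (x, y) gaze locations in frame pixels.
    aoi: (left, top, right, bottom) in frame pixels (assumed rectangle).
    Returns None when there are no gaze locations.
    """
    if not gaze_points:
        return None
    left, top, right, bottom = aoi
    hits = sum(1 for x, y in gaze_points
               if left <= x <= right and top <= y <= bottom)
    return hits / len(gaze_points)
```

Because the denominator is the number of valid gaze locations rather than the scene duration, the estimate does not depend on how much of the time a participant looked at the screen.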

Statistical analysis

The statistical analysis was done in R 4.2 including additional packages (see supplemental information S16). The data were analyzed by linear mixed models using restricted maximum likelihood estimation to investigate [A] the association of pupillary response and gazes on physical salience and gazes on motion salience, [B] group differences in these three estimates of SSP, and [C] group differences in gazes on faces (Fig. 5). We further included the pupillary response and gazes on physical and motion salience as independent predictors in the gazes-on-faces model to investigate their moderating effects. We applied group (ASD vs. TD) and video category (human vs. non-human) as fixed effects, whereas the gazes-on-faces model was restricted to the human video scenes. The video category was included as a fixed effect to compare SSP in human versus non-human video scenes.

We included a polynomial fixed effect of time (i.e., scene duration, 0–5000 ms) to consider the temporal dynamics of SSP in the progression of a scene. A third-degree polynomial provided the best and most parsimonious fit in describing changes in pupillary response (Additional file 1: Table S3). We allowed interactions between the fixed effects relevant to the hypotheses (group, video category, time).

We applied random intercepts for participant and video scene to account for the interdependence of measurements. The random intercept for participant further controls for interindividual differences and differences between recruiting sites, while the random intercept for scene further controls for differences in stimulus characteristics between scenes. These random intercepts also control for participant- and trial-specific differences in missing data. We further controlled for various potential confounders by fixed effect covariates for sex, age, perceptual IQ, comorbid psychopathology, data quality, gaze behavior, and luminance (see supplemental information S17). Full model definitions are provided in Additional file 1: Table S4. A more complex random effect structure with a random slope for participant did not lead to converging models and thus was not further considered.
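
As an orientation, the general structure of these models can be written as a sketch. The notation is ours and simplified; the exact terms of each model are those in Additional file 1: Table S4. Here, y is the dependent variable for participant i, scene j, and time t; G is group; C is video category; x collects the covariates; and u and v are the random intercepts for participant and scene.

```latex
\begin{aligned}
y_{ijt} &= \beta_0 + \beta_G\,G_i + \beta_C\,C_j
          + \sum_{k=1}^{3} \beta_{T,k}\,t^{k}
          + (\text{interactions among } G_i,\ C_j,\ t)
          + \boldsymbol{\gamma}^{\top}\mathbf{x}_{ijt}
          + u_i + v_j + \varepsilon_{ijt},\\
u_i &\sim \mathcal{N}(0,\sigma_u^{2}),\qquad
v_j \sim \mathcal{N}(0,\sigma_v^{2}),\qquad
\varepsilon_{ijt} \sim \mathcal{N}(0,\sigma^{2}).
\end{aligned}
```

For the gazes-on-faces model, the video category term and its interactions drop out because that model was restricted to the human video scenes.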

In a secondary analysis, we applied the early and late pupillary response components as alternative fixed effects to investigate the moderating effects on gaze behavior (hypothesis C). This weighted pupillary response comprises temporal information by factor weighting, and thus, the polynomial fixed effect of time was dropped for this analysis.

In a supplementary analysis, we checked whether sensory symptoms as measured by the short sensory profile would be associated with the dependent variables across and between groups. For this, we exchanged the group variable by the short sensory profile total score in all models. We did not observe any significant main effects of sensory symptoms, and thus, it was not further considered.

Fixed effect significance was estimated by ANOVA using Satterthwaite’s method [76]. We adjusted for multiple comparisons (3 analyses) by FDR correction (p-adj.). Fixed effects were reported as standardized coefficients (β) that represent an effect size. Interactions were investigated post hoc with marginalized means/coefficients (ΔM/Δβ). Effects are presented with 95% confidence intervals (95% CI). Model comparisons applied refitting with maximum likelihood estimation. The full model results, including all the covariates, are described in the supplements (Additional file 1: Tables S1–S8). Here, we only report the fixed effects that are relevant to the hypotheses (group, video category).
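
The adjustment for multiple comparisons can be illustrated with a minimal sketch. The text states only that an FDR correction was applied; the Benjamini-Hochberg procedure is assumed here as the most common variant, and the function name `fdr_bh` is hypothetical.

```python
def fdr_bh(p_values):
    """Benjamini-Hochberg adjusted p-values (assumed FDR variant).

    Each p-value is multiplied by m / rank (rank in ascending order), and
    monotonicity is enforced from the largest to the smallest p-value.
    Returns adjusted p-values in the original order.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted
```

With three analyses, the smallest p-value is multiplied by 3, so an unadjusted p of 0.025 becomes an adjusted p of 0.075 under this procedure.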

Power analysis

Power was estimated by simulation based on the observed random effect variance [77]. Confidence intervals were based on 1000 iterations. For small, fixed effects (β = 0.1), we achieved a power of 90.1% with the applied linear mixed models, 95% CI [88.1, 91.8].

Results

Gazes on sensory salience differed by pupillary response [hypothesis A]

Gazes on physical salience were lower when viewing human videos compared to non-human videos (F(1, 302) = 15.17, p-adj. < 0.001, β = − 0.35, 95% CI [− 0.61, − 0.08]). Gazes on physical salience were also lower for higher pupillary responses (F(1, 51,986) = 21.19, p-adj. < 0.001, β = − 0.17, 95% CI [− 0.31, − 0.04]), which differed by video category (F(1, 27,988) = 8.734, p-adj. = 0.009). Post hoc analysis showed that the effect of pupillary response on physical salience was specific to non-human video scenes (Δβ = − 0.29, 95% CI [− 0.42, − 0.15]) compared to human video scenes (Δβ = − 0.10, 95% CI [− 0.26, 0.06], Additional file 1: Table S5).

Gazes on motion salience were described by an interaction of video category x pupillary response x time (F(1, 66,329) = 53.54, p-adj. < 0.001). Post hoc analysis showed that the effect of pupillary response dynamically changed over time (Fig. 6a, Additional file 1: Table S6).

Temporal effects in SSP. The x-axis represents the progression of the video scenes (0–5000 ms), and the y-axis represents the estimated marginalized means of standardized effects. Boxplot whiskers correspond to ± 2 standard errors, and the interquartile range corresponds to ± 1 standard error. Boxplots separated by video category or group are displayed side by side for each time point to avoid overplotting. a The effect of pupillary response on gazes on motion salience. b The effect of group on pupillary response. Boxplots represent effect size contrasts [ASD-TD]. c The effects of gazes on physical salience on social attention

Pupillary response differed by group and video category [hypothesis B]

The pupillary response was higher when viewing human compared to non-human video scenes (F(1, 82) = 20.01, p-adj. < 0.001, β = 0.39, 95% CI [0.18, 0.60]), which differed between groups (F(1, 66,329) = 304.54, p-adj. < 0.001). Post hoc analysis in ASD compared to TD showed that the pupillary response was higher for non-human videos (ΔM = 0.06, 95% CI [0.00, 0.13]), while it was lower for human videos (ΔM = − 0.08, 95% CI [− 0.14, − 0.01]); this interaction differed further with time, as indicated by a three-way interaction of group x video category x time (F(1, 66,056) = 6.43, p-adj. = 0.033). Post hoc analysis indicated that differences in the pupillary response between groups for human videos were attenuated in the early phases of the scenes (1000–2000 ms, see Additional file 1: Table S7, Fig. 6b).

Group differences in gazes on faces were influenced by gazes on physical salience [hypothesis C]

Gazes on faces were indicated to be lower in ASD compared to TD (F(1, 290) = 5.08, p-adj. = 0.075, β = − 0.07, 95% CI [− 0.14, − 0.01], Additional file 1: Table S8, Fig. 7a). The group effect was emphasized when excluding all covariates (F(1, 298) = 17.29, p-adj. < 0.001, β = − 0.12, 95% CI [− 0.18, − 0.06], Additional file 1: Table S9).

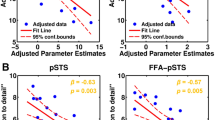

Group differences in gazes on faces are modulated by SSP. a Group difference in gazes on faces between ASD and TD as index of social attention. b Gazes on physical salience are associated with the group difference in gazes on faces. c Pupillary response moderated the group difference in gazes on faces. n.s. = not significant

The inclusion of physical salience as a fixed effect delivered a better model fit (χ2(8) = 57.68, p < 0.001, Additional file 1: Table S10). The main effect of physical salience (F(1, 30,175) = 15.28, p-adj. < 0.001) was a positive association with gazes on faces across groups (β = 0.02, 95% CI [0.01, 0.04]). In secondary analyses, gazes on physical salience (F(1, 223) = 8.58, p-adj. = 0.012, ΔM = − 0.05, 95% CI [− 0.08, − 0.02]) were lower in ASD compared to TD for human scenes (Additional file 1: Table S11). Together, this indicated that gazes on physical salience could mediate the group difference in gazes on faces (Fig. 7b).

Gazes on physical salience further differed by time (F(1, 30,125) = 42.46, p-adj. < 0.001). Post hoc analysis indicated that the gaze effect of physical salience on faces was attenuated when the mean gazes on faces was at its maximum during the video clips (1000–2000 ms, see Additional file 1: Table S10, Fig. 6c). There were no group differences in gazes on motion salience for human scenes (F(1, 207) = 0.12, p-adj. = 1, ΔM = 0, 95% CI [− 0.03, 0.02]) (Additional file 1: Table S12), and thus, the effect of motion salience as a mediator was not further investigated.

We further observed a covariate effect of age on gazes on faces (F(1, 294) = 24.62, p-adj. < 0.001), which was not altered by the inclusion of physical salience or pupillary response as fixed effect. A higher age was associated with higher gazes on faces (β = 0.07, 95% CI [0.04, 0.10]).

Group differences in gazes on faces were moderated by a higher early pupillary response [hypothesis C]

The inclusion of the pupillary response as an alternative fixed effect rendered the group difference in gazes on faces non-significant (F(1, 12,006) = 2.29, p-adj. = 0.390; Additional file 1: Table S13) and delivered a better model fit (χ2(8) = 25.56, p < 0.001). A full moderation is indicated by the main effect of pupillary response (F(1, 28,786) = 6.29, p-adj. < 0.036), which was a negative association with gazes on faces across groups (β = − 0.24, 95% CI [− 0.38, − 0.09]). Post hoc analysis showed that the effect of pupillary response might be more pronounced in ASD (Δβ = − 0.35, 95% CI [− 0.56, − 0.15]) compared to TD (Δβ = − 0.18, 95% CI [− 0.35, − 0.01], Fig. 7c).

In a secondary analysis, we investigated whether the group difference in the effect of pupillary response on gazes on faces was because of early or late pupillary responses. We, thus, exchanged the pupillary response variable by the early (PR1) and late (PR2) pupillary response components (Additional file 1: Table S14). This inclusion also rendered the group difference in gazes on faces to be non-significant (F(1, 296) = 4.03, p-adj. = 0.138). Interestingly, PR1 was negatively associated with gazes on faces (F(1, 30,189) = 38.92, p-adj. < 0.001, β = − 0.04, 95% CI [− 0.05, − 0.02]), while PR2 was positively associated with gazes on faces (F(1, 30,261) = 16.44, p-adj. < 0.001, β = 0.01, 95% CI [0.00, 0.03]).

Discussion

We provide the first empirical study that relates sensory salience processing (SSP) to gazes on faces during naturalistic visual attention in a comprehensive sample of autistic individuals and neurotypical controls. We utilized computer vision algorithms to derive estimates of gazes on sensory salience, while we applied the pupillary response as a proxy of LC-NE phasic activity to assess neurophysiological reactivity to sensory salience. Gazes on and reactivity to sensory salience were associated while sensory reactivity differed between groups. As a main finding, reactivity to sensory salience moderated attenuated gazes on faces in ASD. The findings indicate that altered SSP is associated with an attenuated social attention in ASD.

Sensory salience processing in naturalistic visual attention across groups

Gazes on physical salience were lower in video scenes with humans compared to scenes without humans, although overall physical salience is similar between the video categories (Fig. 4). This is in line with a previous study in neurotypical adults and could be explained by a superior semantic salience of displayed humans that is prioritized in visual exploration compared to physical salience. Across scenes, gazes on physical salience did not differ between ASD and TD, which corresponds to previous findings for static images [78]. In a secondary analysis for human videos, we found lower gazes on physical salience in ASD compared to TD. Both findings contrast with the seminal study by Wang et al. [15], which reported increased gazes on physical salience for static images in autistic adults. Their conclusion was derived from a machine learning analysis that compared gaze behavior between smaller groups (ASD: n = 20; TD: n = 19); thus, the small sample size may have limited the generalizability of their findings. Our findings suggest that attenuated gazes on physical salience characterize attention to sensory salience during the visual exploration of dynamic stimuli depicting humans, which could be specific to ASD.

We examined motion salience as an additional dimension of gazes on sensory salience, which is understudied in visual exploration. In our study, gazes on motion salience did not differ between ASD and TD. This contrasts with a previous study in a smaller sample (ASD: n = 26; TD: n = 15) of preschool children [79], which reported decreased gazes on motion salience in ASD for social videos. Our well-powered sample supports the conclusion that gazes on motion salience and gazes on physical salience are both proxies of attention to sensory salience during naturalistic visual exploration [3].

Pupillary responses were associated with these proxies of attention to sensory salience. This association was negative for physical salience across scenes. For motion salience, it was negative in the early phases (500–1500 ms) and positive in the intermediate phases (2500–4000 ms) of the non-human scenes, whereas this pattern was inverted for human scenes (Fig. 6a). These findings may indicate distinct mechanisms that influence SSP and are reflected in dynamic LC-NE phasic activity [80]. We speculate that semantic content, such as conspicuous objects, causes a top-down salience response that induces LC-NE phasic activity [22] and overrides a bottom-up attention to sensory salience [3] that is rather prevalent during the intermediate phase of a scene. This dynamic reactivity to sensory salience warrants further investigation.

Pupillary responses indicate different sensory salience processing in ASD

For human scenes, pupillary responses were decreased in ASD compared to TD. This corresponds to previous findings that reported attenuated pupillary responses for social versus non-social images [81], insensitivity of pupillary responses to changes in the intensity of social content [43], and attenuated pupillary responses to social-emotional content [42]. In these studies, the pupillary responses may have reflected the subjective utility of the presented stimuli (i.e., semantic content) that is associated with LC-NE phasic upregulation in order to emphasize the sensory processing of the salient stimuli [21]. Thus, our findings extend earlier reports of decreased pupillary responses in ASD to naturalistic movie scenes and indicate a reduced LC-NE phasic activity in response to human stimuli. The group difference was attenuated when mean gazes on faces were highest across the groups (1000–2000 ms, see Fig. 6b). In ASD, gazes on faces may alleviate, but do not compensate for, attenuated LC-NE phasic activity in response to human stimuli.

For non-human scenes, pupillary responses were increased in ASD compared to TD. This could relate to previous findings of increased pupillary responses for a non-human visual search task in toddlers [45] or in response to unexpected targets during a visuospatial reaction time task in adolescents [44]. This may indicate increased sensory salience processing in ASD for non-human stimuli that can lead to superior performance in associated tasks [82]. Taken together, our pupillometric findings in ASD could suggest a bias in SSP that prioritizes non-human over human content in visual exploration. This may further explain gaze preferences for geometric over human stimuli during concurrent presentation, which has been established as a prognostic marker of ASD [83].

Attenuated gazes on faces are moderated by sensory salience processing

In human scenes, gazes on faces were lower in ASD compared to TD. This corresponds to meta-analytic findings of attenuated social attention [6, 53]. As a main finding of the current study, attenuated gazes on faces in ASD (a) might be mediated by effects of gazes on physical salience across groups and (b) were moderated by pupillary reactivity within ASD. Across groups, gazes on physical salience were associated with gazes on faces, while gazes on physical salience were lower in ASD compared to TD. We further observed that higher age was associated with more gazes on faces, which was independent of the mediation and moderation effects. Our findings provide evidence that decreased attention to physical salience might correspond to lower social attention in ASD and TD (Fig. 7B mediation).

In addition, higher pupillary responses were associated with substantially lower gazes on faces, which might be more pronounced in ASD. This was characterized further by our secondary analysis that showed a negative association of an early pupillary response component (PR1), but a positive association of a late pupillary response component (PR2) with gazes on faces. Based on previous research on fast and slow pathways of visual processing [59] and on the reactivity of the salience network to sensory salience and semantic content [55, 56], PR1 may overlap with the LC-NE phasic reactivity to sensory salience, while PR2 may overlap with the LC-NE phasic reactivity to semantic content. Thus, in ASD, we conclude that increased LC-NE phasic reactivity to sensory salience is associated with lower social attention. This supports an earlier conceptualization of an altered “fast-track” processing of sensory information that underlies atypical eye contact in ASD [84]. In addition, higher early pupillary responses in ASD have been related to an over-responsivity to sensory changes [41]. Our findings relate these early pupillary responses as an index of sensory processing to an attenuated social attention. Altered LC-NE phasic activity may provide an underlying mechanism to relate ASD clinical phenotypes of altered sensory processing to atypical social attention.

In addition, increased PR2 was associated with increased gazes on faces which could, therefore, provide a compensating mechanism in ASD. The diametrically opposite effects of the early (PR1) and late (PR2) pupillary responses on gazes on faces underline the temporal dynamics of LC-NE phasic activity in moderating visual attention that may reflect distinct underlying mechanisms [80]. Independent studies need to replicate early and late LC-NE phasic activity as differential moderators of social attention.

Limitations

Our naturalistic study design precluded experimental manipulations and meant that stimulus salience and luminance were not controlled across scenes. The heterogeneous sample may have contributed to effect sizes that were only small to moderate. Accordingly, the significant covariate effects of age and perceptual IQ on social attention indicate that the reported effects may differ between specific developmental subgroups. Because participants with an IQ < 60 were excluded, the findings are not generalizable to individuals with moderate to severe intellectual disabilities. Lastly, numerous cognitive processes and functional pathways induce LC-NE phasic activity, and we are not able to differentiate those [24, 85].

Conclusions

We characterize sensory salience processing in visual attention. Findings emphasize a moderating role of LC-NE phasic activity in gazes on sensory salience and suggest an increased reactivity to sensory salience as a bottom-up mechanism of attenuated gazes on faces in ASD. Altered LC-NE phasic activity may represent a neurophysiological mechanism of altered sensory salience processing that underlies the clinical phenotypes of altered sensory processing and attenuated social attention.

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available due to an embargo period but are available from the corresponding author on reasonable request. The data analysis scripts are accessible online (https://github.com/nicobast/project_video_salience).

Abbreviations

- AOI: Areas of interest
- ADOS-2: Autism Diagnostic Observation Schedule, Second Edition
- ASD: Autism spectrum disorder
- CSS: Calibrated severity score
- PR1: Early pupillary response component
- PR2: Late pupillary response component
- LC-NE: Locus coeruleus–norepinephrine system
- MICE: Multiple imputations of chained equations
- TD: Neurotypical development
- PCA: Principal component analysis
- SSP: Sensory salience processing
- SC: Superior colliculi

References

Treisman AM. Selective attention in man. Br Med Bull. 1964;20(1):12–6.

Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3(3):201–15.

Itti L, Koch C. Computational modelling of visual attention. Nat Rev Neurosci. 2001;2(3):194–203.

Hutchinson JB, Turk-Browne NB. Memory-guided attention: control from multiple memory systems. Trends Cogn Sci. 2012;16(12):576–9.

Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4(6):223–33.

Frazier TW, Strauss M, Klingemier EW, Zetzer EE, Hardan AY, Eng C, et al. A meta-analysis of gaze differences to social and nonsocial information between individuals with and without autism. J Am Acad Child Adolesc Psychiatry. 2017;56(7):546–55.

Chevallier C, Kohls G, Troiani V, Brodkin ES, Schultz RT. The social motivation theory of autism. Trends Cogn Sci. 2012;16(4):231–9.

Clements CC, Zoltowski AR, Yankowitz LD, Yerys BE, Schultz RT, Herrington JD. Evaluation of the social motivation hypothesis of autism: a systematic review and meta-analysis. JAMA Psychiat. 2018;75(8):797–808.

Veale R, Hafed ZM, Yoshida M. How is visual salience computed in the brain? Insights from behaviour, neurobiology and modelling. Philos Trans Roy Soc B Biol Sci. 2017;372(1714):20160113.

Melloni L, van Leeuwen S, Alink A, Müller NG. Interaction between bottom-up saliency and top-down control: how saliency maps are created in the human brain. Cereb Cortex. 2012;22(12):2943–52.

Vossel S, Geng JJ, Fink GR. Dorsal and ventral attention systems: distinct neural circuits but collaborative roles. Neurosci Rev J Bring Neurobiol Neurol Psychiatry. 2014;20(2):150–9.

Green SA, Hernandez L, Bookheimer SY, Dapretto M. Salience network connectivity in autism is related to brain and behavioral markers of sensory overresponsivity. J Am Acad Child Adolesc Psychiatry. 2016;55(7):618-26.e1.

Oldehinkel M, Mennes M, Marquand A, Charman T, Tillmann J, Ecker C, et al. Altered connectivity between cerebellum, visual, and sensory-motor networks in autism spectrum disorder: results from the EU-AIMS longitudinal european autism project. Biol Psychiatry Cogn Neurosci Neuroimaging. 2018.

Jassim N, Baron-Cohen S, Suckling J. Meta-analytic evidence of differential prefrontal and early sensory cortex activity during non-social sensory perception in autism. Neurosci Biobehav Rev. 2021;127:146–57.

Wang S, Jiang M, Duchesne XM, Laugeson EA, Kennedy DP, Adolphs R, et al. Atypical visual saliency in autism spectrum disorder quantified through model-based eye tracking. Neuron. 2015;88(3):604–16.

American Psychiatric Association. Diagnostic and statistical manual of mental disorders (DSM-5®). Washington, DC: American Psychiatric Association; 2013.

Vazey EM, Moorman DE, Aston-Jones G. Phasic locus coeruleus activity regulates cortical encoding of salience information. Proc Natl Acad Sci USA. 2018;115(40):E9439–48.

Jacob SN, Nienborg H. Monoaminergic neuromodulation of sensory processing. Front Neural Circuits. 2018;12:51.

Hermans EJ, Henckens MJAG, Joëls M, Fernández G. Dynamic adaptation of large-scale brain networks in response to acute stressors. Trends Neurosci. 2014;37(6):304–14.

Aston-Jones G, Cohen JD. An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu Rev Neurosci. 2005;28:403–50.

Mather M, Clewett D, Sakaki M, Harley CW. Norepinephrine ignites local hotspots of neuronal excitation: how arousal amplifies selectivity in perception and memory. Behav Brain Sci. 2016;39:e200.

Kucyi A, Parvizi J. Pupillary dynamics link spontaneous and task-evoked activations recorded directly from human insula. J Neurosci. 2020;40(32):6207–18.

Thiele A, Bellgrove MA. Neuromodulation of attention. Neuron. 2018;97(4):769–85.

Strauch C, Wang C-A, Einhäuser W, Van der Stigchel S, Naber M. Pupillometry as an integrated readout of distinct attentional networks. Trends Neurosci. 2022.

Liebe T, Kaufmann J, Li M, Skalej M, Wagner G, Walter M. In vivo anatomical mapping of human locus coeruleus functional connectivity at 3 T MRI. Hum Brain Mapp. 2020.

Rodenkirch C, Liu Y, Schriver BJ, Wang Q. Locus coeruleus activation enhances thalamic feature selectivity via norepinephrine regulation of intrathalamic circuit dynamics. Nat Neurosci. 2019;22(1):120–33.

McBurney-Lin J, Lu J, Zuo Y, Yang H. Locus coeruleus-norepinephrine modulation of sensory processing and perception: a focused review. Neurosci Biobehav Rev. 2019;105:190–9.

Safaai H, Neves R, Eschenko O, Logothetis NK, Panzeri S. Modeling the effect of locus coeruleus firing on cortical state dynamics and single-trial sensory processing. Proc Natl Acad Sci USA. 2015;112(41):12834–9.

Eldar E, Cohen JD, Niv Y. The effects of neural gain on attention and learning. Nat Neurosci. 2013;16(8):1146.

Corbetta M, Patel G, Shulman GL. The reorienting system of the human brain: from environment to theory of mind. Neuron. 2008;58(3):306–24.

Sacrey L-AR, Armstrong VL, Bryson SE, Zwaigenbaum L. Impairments to visual disengagement in autism spectrum disorder: a review of experimental studies from infancy to adulthood. Neurosci Biobehav Rev. 2014;47:559–77.

Van der Hallen R, Evers K, Brewaeys K, Van den Noortgate W, Wagemans J. Global processing takes time: a meta-analysis on local–global visual processing in ASD. Psychol Bull. 2015;141(3):549.

Bast N, Poustka L, Freitag CM. The locus coeruleus-norepinephrine system as pacemaker of attention—a developmental mechanism of derailed attentional function in autism spectrum disorder. Eur J Neurosci. 2018;47(2):115–25.

Joshi S, Li Y, Kalwani RM, Gold JI. Relationships between pupil diameter and neuronal activity in the locus coeruleus, colliculi, and cingulate cortex. Neuron. 2016;89(1):221–34.

Murphy PR, O’Connell RG, O’Sullivan M, Robertson IH, Balsters JH. Pupil diameter covaries with BOLD activity in human locus coeruleus. Hum Brain Mapp. 2014;35(8):4140–54.

Reimer J, McGinley MJ, Liu Y, Rodenkirch C, Wang Q, McCormick DA, et al. Pupil fluctuations track rapid changes in adrenergic and cholinergic activity in cortex. Nat Commun. 2016;7:13289.

Mathôt S, Fabius J, Van Heusden E, Van der Stigchel S. Safe and sensible preprocessing and baseline correction of pupil-size data. Behav Res Methods. 2018;50(1):94–106.

Megemont M, McBurney-Lin J, Yang H. Pupil diameter is not an accurate real-time readout of locus coeruleus activity. Elife. 2022;11:e70510.

Yang H, Bari BA, Cohen JY, O’Connor DH. Locus coeruleus spiking differently correlates with S1 cortex activity and pupil diameter in a tactile detection task. Elife. 2021;10:e64327.

Granovetter MC, Burlingham CS, Blauch NM, Minshew NJ, Heeger DJ, Behrmann M. Uncharacteristic task-evoked pupillary responses implicate atypical locus coeruleus activity in autism. J Neurosci. 2020;40(19):3815–26.

Lawson RP, Mathys C, Rees G. Adults with autism overestimate the volatility of the sensory environment. Nat Neurosci. 2017;20(9):1293–9.

Nuske HJ, Vivanti G, Dissanayake C. Others’ emotions teach, but not in autism: an eye-tracking pupillometry study. Molecular Autism. 2016;7(1):36.

Aguillon‐Hernandez N, Mofid Y, Latinus M, Roché L, Bufo MR, Lemaire M, et al. The pupil: a window on social automatic processing in autism spectrum disorder children. J Child Psychol Psychiatry. 2019.

Boxhoorn S, Bast N, Supèr H, Polzer L, Cholemkery H, Freitag CM. Pupil dilation during visuospatial orienting differentiates between autism spectrum disorder and attention-deficit/hyperactivity disorder. J Child Psychol Psychiatry. 2019;61(5):614–24.

Blaser E, Eglington L, Carter AS, Kaldy Z. Pupillometry reveals a mechanism for the autism spectrum disorder (ASD) advantage in visual tasks. Sci Rep. 2014;4:4301.

Bast N, Boxhoorn S, Super H, Helfer B, Polzer L, Klein C, et al. Atypical arousal regulation in children with autism but not with ADHD as indicated by pupillometric measures of locus coeruleus activity. Biol Psychiatry Cogn Neurosci Neuroimaging. 2021.

Kim Y, Kadlaskar G, Keehn RM, Keehn B. Measures of tonic and phasic activity of the locus coeruleus—norepinephrine system in children with autism spectrum disorder: an event-related potential and pupillometry study. Autism Res. 2022;15(12):2250–64.

Zhao S, Liu Y, Wei K. Pupil-linked arousal response reveals aberrant attention regulation among children with autism spectrum disorder. J Neurosci. 2022;42:5427–37.

Borji A, Sihite DN, Itti L. Quantitative analysis of human-model agreement in visual saliency modeling: a comparative study. IEEE Trans Image Process. 2012;22(1):55–69.

Ames C, Fletcher-Watson S. A review of methods in the study of attention in autism. Dev Rev. 2010;30(1):52–73.

Chevallier C, Parish-Morris J, McVey A, Rump KM, Sasson NJ, Herrington JD, et al. Measuring social attention and motivation in autism spectrum disorder using eye-tracking: stimulus type matters. Autism Res. 2015;8(5):620–8.

Papagiannopoulou EA, Chitty KM, Hermens DF, Hickie IB, Lagopoulos J. A systematic review and meta-analysis of eye-tracking studies in children with autism spectrum disorders. Soc Neurosci. 2014;9(6):610–32.

Chita-Tegmark M. Social attention in ASD: a review and meta-analysis of eye-tracking studies. Res Dev Disabil. 2016;48:79–93.

Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry. 2002;59(9):809–16.

Supèr H, Spekreijse H, Lamme VA. Two distinct modes of sensory processing observed in monkey primary visual cortex (V1). Nat Neurosci. 2001;4(3):304–10.

Borji A, Sihite DN, Itti L. What stands out in a scene? A study of human explicit saliency judgment. Vision Res. 2013;91:62–77.

Santos A, Mier D, Kirsch P, Meyer-Lindenberg A. Evidence for a general face salience signal in human amygdala. Neuroimage. 2011;54(4):3111–6.

Dobbins IG. Pupil dilation signals recognition salience. Psychonomic Bull Rev. 2021;28:565–73.

Chambers CD, Payne JM, Stokes MG, Mattingley JB. Fast and slow parietal pathways mediate spatial attention. Nat Neurosci. 2004;7(3):217–8.

Bast N, Banaschewski T, Dziobek I, Brandeis D, Poustka L, Freitag CM. Pupil dilation progression modulates aberrant social cognition in autism spectrum disorder. Autism Res. 2019;12:1680–92.

Wierda SM, van Rijn H, Taatgen NA, Martens S. Pupil dilation deconvolution reveals the dynamics of attention at high temporal resolution. Proc Natl Acad Sci USA. 2012;109(22):8456–60.

Charman T, Loth E, Tillmann J, Crawley D, Wooldridge C, Goyard D, et al. The EU-AIMS Longitudinal European Autism Project (LEAP): clinical characterisation. Mol Autism. 2017;8:27.

Gotham K, Pickles A, Lord C. Standardizing ADOS scores for a measure of severity in autism spectrum disorders. J Autism Dev Disord. 2009;39(5):693–705.

Wechsler D. Wechsler adult intelligence scale, 4th edition (WAIS–IV);2008. pp. 816–27.

Wechsler D. The Wechsler intelligence scale for children-fourth edition. London: Pearson Assessment; 2004.

Loth E, Charman T, Mason L, Tillmann J, Jones EJH, Wooldridge C, et al. The EU-AIMS Longitudinal European Autism Project (LEAP): design and methodologies to identify and validate stratification biomarkers for autism spectrum disorders. Mol Autism. 2017;8(1):24.

Bast N, Mason L, Freitag CM, Smith T, Portugal AM, Poustka L, et al. Saccade dysmetria indicates attenuated visual exploration in autism spectrum disorder. J Child Psychol Psychiatry. 2020;62:149–59.

de Urabain IRS, Johnson MH, Smith TJ. GraFIX: a semiautomatic approach for parsing low-and high-quality eye-tracking data. Behav Res Methods. 2014;47(1):53–72.

Kret ME, Sjak-Shie EE. Preprocessing pupil size data: guidelines and code. Behav Res Methods. 2018;51:1336–42.

Van Rossum G, Drake FL. Python 3 reference manual. Scotts Valley: CreateSpace; 2009.

Hou X, Zhang L, editors. Saliency detection: a spectral residual approach. 2007 IEEE Conference on computer vision and pattern recognition; 2007. IEEE.

Zivkovic Z, editor. Improved adaptive Gaussian mixture model for background subtraction. In: Proceedings of the 17th international conference on pattern recognition, 2004 ICPR 2004; 2004. IEEE.

Toyama K, Krumm J, Brumitt B, Meyers B, editors. Wallflower: principles and practice of background maintenance. In: Proceedings of the seventh IEEE international conference on computer vision; 1999. IEEE.

Poynton C. Digital video and HD: algorithms and interfaces. Waltham: Elsevier; 2012.

Kwon M-K, Moore A, Barnes CC, Cha D, Pierce K. Typical levels of eye-region fixation in toddlers with autism spectrum disorder across multiple contexts. J Am Acad Child Adolesc Psychiatry. 2019;58(10):1004–15.

Luke SG. Evaluating significance in linear mixed-effects models in R. Behav Res Methods. 2017;49(4):1494–502.

Green P, MacLeod CJ. SIMR: an R package for power analysis of generalized linear mixed models by simulation. Methods Ecol Evol. 2016;7(4):493–8.

Freeth M, Foulsham T, Chapman P. The influence of visual saliency on fixation patterns in individuals with autism spectrum disorders. Neuropsychologia. 2011;49(1):156–60.

Shic F, Scassellati B, Lin D, Chawarska K, editors. Measuring context: the gaze patterns of children with autism evaluated from the bottom-up. In: 2007 IEEE 6th international conference on development and learning; 2007. IEEE.

Corneil BD, Munoz DP. Overt responses during covert orienting. Neuron. 2014;82(6):1230–43.

Frost-Karlsson M, Galazka MA, Gillberg C, Gillberg C, Miniscalco C, Billstedt E, et al. Social scene perception in autism spectrum disorder: an eye-tracking and pupillometric study. J Clin Exp Neuropsychol. 2019;41:1–9.

Gliga T, Bedford R, Charman T, Johnson MH, Baron-Cohen S, Bolton P, et al. Enhanced visual search in infancy predicts emerging autism symptoms. Curr Biol. 2015;25(13):1727–30.

Pierce K, Marinero S, Hazin R, McKenna B, Barnes CC, Malige A. Eye tracking reveals abnormal visual preference for geometric images as an early biomarker of an autism spectrum disorder subtype associated with increased symptom severity. Biol Psychiatry. 2016;79(8):657–66.

Senju A, Johnson MH. Atypical eye contact in autism: models, mechanisms and development. Neurosci Biobehav Rev. 2009;33:1204–14.

Joshi S, Gold JI. Pupil size as a window on neural substrates of cognition. Trends Cogn Sci. 2020;24(6):466–80.

Acknowledgements

We thank all participants and their families for participating in this study.

Consortium—the EU-AIMS LEAP group. Jumana Ahmad4, Sara Ambrosino7, Bonnie Auyeung8,9, Tobias Banaschewski3, Simon Baron-Cohen8, Nico Bast1, Sarah Baumeister3, Christian F. Beckmann10, Sven Bölte11, Thomas Bourgeron12, Carsten Bours10, Michael Brammer4, Daniel Brandeis3,13, Claudia Brogna14, Yvette de Bruijn10, Jan K. Buitelaar10, Bhismadev Chakrabarti8,15, Tony Charman4, Ineke Cornelissen10, Daisy Crawley4, Flavio Dell’Acqua4, Guillaume Dumas12, Sarah Durston7, Christine Ecker1, Jessica Faulkner4, Vincent Frouin16, Pilar Garcés17, David Goyard16, Lindsay Ham18, Hannah Hayward4, Joerg Hipp17, Rosemary Holt8, Mark Johnson2, Emily J.H. Jones2, Prantik Kundu19, Meng-Chuan Lai8,20, Xavier Liogier D’ardhuy17, Michael V. Lombardo8,21, Eva Loth4, David J. Lythgoe4, René Mandl7, Andre Marquand10, Luke Mason2, Maarten Mennes10, Andreas Meyer-Lindenberg22, Carolin Moessnang22, Declan G.M. Murphy4, Bethany Oakley4, Laurence O’Dwyer10, Marianne Oldehinkel10, Bob Oranje7, Gahan Pandina6, Antonio M. Persico14,23, Barbara Ruggeri4, Amber Ruigrok8, Jessica Sabet4, Roberto Sacco14, Antonia San José Cáceres4, Emily Simonoff4, Will Spooren17, Julian Tillmann4, Roberto Toro12, Heike Tost22, Jack Waldman8, Steve C.R. Williams4, Caroline Wooldridge4 and Marcel P. Zwiers10. 7Department of Psychiatry, Brain Center Rudolf Magnus, University Medical Center Utrecht, Universiteitsweg 100, 3584 CG, Utrecht, The Netherlands. 8Autism Research Centre, Department of Psychiatry, University of Cambridge, Douglas House, 18b Trumpington Road, Cambridge, CB2 8AH, UK. 9Department of Psychology, The School of Philosophy, Psychology, and Language Sciences, University of Edinburgh, Dugald Stewart Building, 3 Charles Street, Edinburgh, EH8 9AD, UK. 10Donders Institute for Brain, Cognition and Behaviour, Radboud University Nijmegen Medical Centre, Kapittelweg 29, 6525 EN, Nijmegen, The Netherlands. 11Center for Neurodevelopmental Disorders at Karolinska Institutet (KIND), Stockholm, Sweden. 
12Human Genetics and Cognitive Functions Unit, Institut Pasteur, 25 Rue du Docteur Roux, Paris, Cedex 15, France. 13Department of Child and Adolescent Psychiatry and Psychotherapy, Psychiatric Hospital, University of Zürich, Neumünsterallee 9, 8032, Zürich, Switzerland. 14University Campus Bio-Medico, via Álvaro del Portillo, 21, Rome, Italy. 15Centre for Autism, School of Psychology and Clinical Language Sciences, University of Reading, Whiteknights, Reading, RG6 6AL, UK. 16Neurospin Centre CEA, Saclay, 91191, Gif sur Yvette, France. 17Roche Pharma Research and Early Development, Neuroscience, Ophthalmology and Rare Diseases, Roche Innovation Center Basel, Grenzacherstrasse 124, B.001 N.667, CH-4070, Basel, Switzerland. 18Regulatory Affairs, Product Development, F. Hoffmann-La Roche Pharmaceuticals, Grenzacherstrasse 124, CH-4070, Basel, Switzerland. 19Department of Radiology, Icahn School of Medicine at Mount Sinai, NY, USA. 20Child and Youth Mental Health Collaborative, Centre for Addiction and Mental Health and The Hospital for Sick Children, Department of Psychiatry, University of Toronto, 80, Workman Way, Toronto, ON, M6J 1H4, Canada. 21Center for Applied Neuroscience, Department of Psychology, University of Cyprus, PO Box 20537, 1678, Nicosia, Cyprus. 22Department of Psychiatry and Psychotherapy, Central Institute of Mental Health, Medical Faculty Mannheim, University of Heidelberg, 68159, Mannheim, Germany. 23Child and Adolescent Neuropsychiatry Unit, Gaetano Martino University Hospital, University of Messina, Via Consolare Valeria 1, I-98125, Messina, Italy

Funding

Open Access funding enabled and organized by Projekt DEAL. This study was funded by EU-AIMS (European Autism Interventions—A Multicentre Study for Developing New Medications) and AIMS-2-TRIALS (Autism Innovative Medicine Studies-2-Trials). EU-AIMS received support from the IMI Joint Undertaking (JU) under grant agreement no.115300, the resources of which are composed of financial contribution from the European Union’s Seventh Framework Programme (FP7/2007–2013), from the European Federation of Pharmaceutical Industries and Associations (EFPIA) companies in kind contribution, and from Autism Speaks. AIMS-2-TRIALS received funding from the IMI 2 JU under grant agreement no. 777394. The JU receives support from the European Union’s Horizon 2020 research and innovation program and EFPIA, Autism Speaks, Autistica, and the Simons Foundation Autism Research Initiative. Disclaimer: The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results. Any views expressed are those of the author(s) and not necessarily those of the funders.

Author information

Contributions

The “European Autism Interventions—A Multicentre Study for Developing New Medications (EU-AIMS) Longitudinal European Autism Project (LEAP)” group collaboratively collected the data. NB and CMF conceived the analysis plan. LM provided the preprocessing of the eye-tracking data. NB conducted the data analysis and wrote the first draft of the manuscript. CE and EJ provided critical feedback on the data analysis. SB, TB, DM, JB, EL, and GP and all other authors provided feedback on the manuscript versions and agreed to the final draft. All authors read and approved the final manuscript.

Ethics declarations

Ethical approval and consent to participate

All participants (where appropriate) and their parent/legal guardian provided written informed consent. Ethical approval for this study was obtained through ethics committees at each site (King’s College London—London Queen Square Health Research Authority Research Ethics Committee: 13/LO/1156; Autism Research Centre, University of Cambridge—London Queen Square Health Research Authority Research Ethics Committee: 13/LO/1156; Radboud University Medical Centre—Quality and Safety Committee on Research Involving Human Subjects Arnhem-Nijmegen: 2013/455, University Medical Centre Utrecht—Quality and Safety Committee on Research Involving Human Subjects Arnhem-Nijmegen: 2013/455; Central Institute of Mental Health—University Medical Mannheim, Medical Ethics Commission II: 2014-540N-MA; Universita Campus Bio-Medica De Roma—Medical Ethics Committee: 18/14 PAR ComET CBM; Karolinska Institute—Central Ethical Review Board: 32-2010).

Consent for publication

Not applicable.

Competing interests

CMF receives royalties for books on ASD and ADHD and further receives research funding by the German Research Foundation (DFG), the German Ministry of Science and Education and the European Commission. NB receives royalties for lecturing at institutes for training in psychotherapy and receives research funding by the German Research Foundation (DFG). TB served in an advisory or consultancy role for ADHS digital, Infectopharm, Lundbeck, Medice, Neurim Pharmaceuticals, Oberberg GmbH, Roche, and Takeda. He received conference support or speaker’s fee by Medice and Takeda. He received royalties from Hogrefe, Kohlhammer, CIP Medien, Oxford University Press; the present work is unrelated to these relationships. JB has been in the past 3 years a consultant to/member of advisory board of/and/or speaker for Takeda/Shire, Roche, Medice, Angelini, Janssen, and Servier. He is not an employee of any of these companies, and not a stock shareholder of any of these companies. He has no other financial or material support, including expert testimony, patents, royalties. The remaining authors have declared that they have no competing or potential conflicts of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1. Further information on duration of video scenes, pupil size comparisons between groups and video category, pupillary response components, stimuli characterization, comparison of model fits, model definitions, full linear mixed model results, area-of-interest (AOI) definition criteria, applied R packages, and covariates in the statistical analyses.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Bast, N., Mason, L., Ecker, C. et al. Sensory salience processing moderates attenuated gazes on faces in autism spectrum disorder: a case–control study. Molecular Autism 14, 5 (2023). https://doi.org/10.1186/s13229-023-00537-6

DOI: https://doi.org/10.1186/s13229-023-00537-6