Abstract

Background

Causal mediation analysis is often used to understand the impact of variables along the causal pathway of an occurrence relation. How well studies apply and report the elements of causal mediation analysis remains unknown.

Methods

We systematically reviewed epidemiological studies published in 2015 that employed causal mediation analysis to estimate direct and indirect effects of observed associations between an exposure and an outcome. We identified potential epidemiological studies through a citation search in Web of Science and a keyword search in PubMed. Two reviewers independently screened studies for eligibility. For eligible studies, one reviewer performed data extraction, and a senior epidemiologist confirmed the extracted information. Empirical application and methodological details of the technique were extracted and summarized.

Results

Thirteen studies were eligible for data extraction. While the majority of studies reported and identified the effect measures, most studies lacked sufficient detail on the extent to which identifiability assumptions were satisfied. Although most studies addressed unmeasured confounding through either empirical approaches or sensitivity analyses, the majority did not examine the potential bias arising from measurement error in the mediator. Some studies allowed for exposure-mediator interaction, but only a few presented results from models both with and without interactions. Power calculations were scarce.

Conclusions

Reporting of causal mediation analysis is varied and suboptimal. Given that the application of causal mediation analysis will likely continue to increase, developing reporting standards for causal mediation analysis in epidemiological research would be prudent.

Background

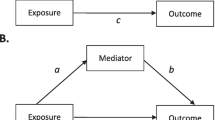

Causal mediation analysis identifies potential pathways that could explain observed associations between an exposure and an outcome [1]. This approach also examines how a third, intermediate variable, the mediator, is related to the observed exposure-outcome relationship. Causal mediation analysis has been used to study genetic factors in disease causation [2, 3], pathways associated with response to clinical treatments [4], and mechanisms underlying public health interventions [5, 6]. There are two main approaches to conducting mediation analysis. The first, primarily applied in the social sciences, compares regression models with and without conditioning on the mediator [7]. The second uses the counterfactual framework [8, 9], which allows scientists to decompose the total effect into direct and indirect effects [8–13]. Using the counterfactual framework can help to address potential bias arising from both incorrect statistical analysis and suboptimal study design [14–16].
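In the setting where the traditional approach is typically applied (linear regression, continuous mediator and outcome, no exposure-mediator interaction), comparing the exposure coefficient with and without conditioning on the mediator (the difference method) gives exactly the same indirect effect as multiplying the exposure-mediator and mediator-outcome coefficients (the product method). The Python sketch below illustrates this on simulated data. It assumes NumPy, and the data-generating coefficients (0.5, 0.3, 0.8) are hypothetical values chosen only for illustration.

```python
import numpy as np

def ols(X, y):
    """Ordinary least squares coefficients, with an intercept column prepended."""
    X = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

# Hypothetical data-generating model, for illustration only
rng = np.random.default_rng(2015)
n = 5_000
exposure = rng.binomial(1, 0.5, n).astype(float)          # binary exposure A
mediator = 0.5 * exposure + rng.normal(size=n)            # M = 0.5*A + error
outcome = 0.3 * exposure + 0.8 * mediator + rng.normal(size=n)

c_total = ols(exposure, outcome)[1]                                    # Y ~ A
c_direct, b_med = ols(np.column_stack([exposure, mediator]), outcome)[1:3]  # Y ~ A + M
a_path = ols(exposure, mediator)[1]                                    # M ~ A

difference_method = c_total - c_direct
product_method = a_path * b_med
print(f"difference: {difference_method:.4f}, product: {product_method:.4f}")
```

Outside this linear, no-interaction setting (for example, with a logistic outcome model or an exposure-mediator interaction), the two quantities generally differ, which is one motivation for the counterfactual definitions.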

The field of causal mediation analysis is relatively new, and techniques are emerging rapidly. With the rapid development of software packages [11–13, 17], the implementation and/or discussion of this methodology is increasing. In a preliminary search in PubMed, we identified 33 articles in 2013, 59 in 2014, and 61 in 2015. While these software packages allow for estimation in a number of settings, automated procedures for conducting sensitivity analyses for unmeasured confounding or measurement error remain limited. Moreover, causal mediation analysis requires careful implementation and appropriate evaluation of assumptions to derive valid estimates, and the extent to which studies apply and report the elements of causal mediation analysis remains unknown. Therefore, understanding how these methods have been applied to address issues of bias, how studies have implemented the approach, and how estimates are interpreted may provide useful guidance for future reporting.

The purpose of this study was to systematically review epidemiological studies in which causal mediation analysis was used to estimate direct and indirect effects. In this review, we extract information on the elements that are critical to report and summarize how epidemiological studies have conducted and presented results from causal mediation analysis. We also give recommendations for scientists considering conducting studies that apply causal mediation analysis in the medical literature.

Methods

Selection of articles

Our aim was to identify original empirical epidemiological research published in 2015 that used causal mediation analysis. Two search strategies were used to achieve this goal. First, using the Web of Science database, we retrieved all published studies citing one of the seminal papers on causal mediation analysis [8, 10, 12, 13]; this approach identified 157 articles. Second, working with a research librarian at the University of Massachusetts Medical School, we conducted a keyword search in PubMed using the following algorithm: causal mediation analysis OR (“causal” AND “mediation analysis” AND “Mediat*”). This search returned 61 unique records in PubMed dated from January 1, 2015 to December 31, 2015. We excluded the following types of publications or studies: (i) methodological or simulation studies without an empirical application; (ii) studies not examining effects on health outcomes, that is, mortality, morbidity, or diagnostic markers of mental or physical health; (iii) animal or genetic studies; (iv) letters, meeting abstracts, review articles, and editorials; and (v) studies without formal discussion of a causal framework, studies using the traditional approach or a cross-sectional design, and studies using multilevel or structural equation modeling approaches.

We followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [18]. After duplicate records were excluded, the titles and abstracts of the remaining articles were assigned to two reviewers who independently assessed each study for eligibility. Articles that passed title and abstract screening were then evaluated by two reviewers through full-text review. Any discrepancy in eligibility was discussed and resolved between reviewers. One reviewer (S-H L) performed data extraction, and two reviewers, including a senior epidemiologist (SC and KLL), confirmed the extracted information for all eligible studies.

Information abstraction

We considered several elements believed to be important for transparent and complete reporting of causal mediation analyses. These included: (1) motivation for applying causal mediation analysis; (2) evaluation of identifiability assumptions for the effects identified; (3) use of sensitivity analyses for unmeasured confounding and/or measurement error of mediators; and (4) elements of implementing causal mediation analysis, including power calculations, inclusion of exposure-mediator interactions, and bias analysis for interactions. A brief description and rationale for each element is provided in the following sections.

Rationale for causal mediation analysis

Explanations of cause-effect associations may be enhanced through additional analyses of mediation and interaction. Mediation and interaction phenomena are not mutually exclusive [1]. Several theoretical and practical considerations may motivate empirical study of these phenomena. Empirically studying mediation can help to: (1) improve understanding; (2) confirm/refute theory; and (3) refine interventions [1]. In this review, we extracted information about whether studies reported (i) the reason for applying causal mediation analysis; (ii) the effect estimates calculated; and (iii) the motivation presented for the application.

Identification of effects and identifiability assumptions

In a counterfactual framework, three effect measures are commonly estimated: (1) the natural direct effect; (2) the natural indirect effect; and (3) the controlled direct effect [8, 9]. For a binary exposure, the natural direct effect expresses how much the outcome (Y) would change if the exposure (A) were set to A = 1 rather than A = 0, while the mediator (M) is set to the level it would have taken had the exposure been A = 0 (defined by \(Y_{1M_0}-Y_{0M_0}\)). The natural indirect effect expresses how much the outcome would change if the exposure were set to A = 1 but the mediator were changed from \(M_0\), the level it would take under A = 0, to \(M_1\), the level it would take under A = 1 (defined by \(Y_{1M_1}-Y_{1M_0}\)). Together, the natural direct and indirect effects sum to the total effect, \(Y_{1M_1}-Y_{0M_0}\). The controlled direct effect expresses how much the outcome would change on average if the exposure were changed from A = 0 to A = 1 while the mediator were fixed at the same level m for everyone in the population (defined by \(Y_{1m}-Y_{0m}\)).
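Because every individual's potential outcomes are known in a simulation, the definitions above can be evaluated directly, which is never possible with observed data. The Python sketch below assumes a hypothetical structural model with a binary exposure, a continuous mediator, and an exposure-mediator interaction; all coefficient values are illustrative. It also checks that the natural direct and indirect effects add up to the total effect.

```python
import numpy as np

# Hypothetical structural model, for illustration only
rng = np.random.default_rng(1)
n = 100_000
noise_m = rng.normal(size=n)   # individual-level mediator disturbance
noise_y = rng.normal(size=n)   # individual-level outcome disturbance

def mediator(a):
    """Potential mediator value M_a under exposure level a."""
    return 1.0 + 0.6 * a + noise_m

def outcome(a, m):
    """Potential outcome Y_{a m}; includes an exposure-mediator interaction term."""
    return 0.5 + 0.7 * a + 0.9 * m + 0.4 * a * m + noise_y

m0, m1 = mediator(0), mediator(1)
nde = np.mean(outcome(1, m0) - outcome(0, m0))          # E[Y_{1M_0} - Y_{0M_0}]
nie = np.mean(outcome(1, m1) - outcome(1, m0))          # E[Y_{1M_1} - Y_{1M_0}]
te = np.mean(outcome(1, m1) - outcome(0, m0))           # E[Y_{1M_1} - Y_{0M_0}]
cde_at_1 = np.mean(outcome(1, 1.0) - outcome(0, 1.0))   # E[Y_{1m} - Y_{0m}] at m = 1

print(f"NDE={nde:.3f}, NIE={nie:.3f}, TE={te:.3f}, CDE(m=1)={cde_at_1:.3f}")
```

With the interaction term, the controlled direct effect depends on the level m at which the mediator is fixed, and the natural direct effect depends on the distribution of M under A = 0; the two direct effects are close here only because the chosen m = 1 equals the mean of that distribution.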

For the mediation analysis to have a causal interpretation, we assume that four types of confounding have been adequately addressed: (1) confounding of the exposure-outcome relationship; (2) confounding of the mediator-outcome relationship; (3) confounding of the exposure-mediator relationship; and (4) mediator-outcome confounders that are themselves affected by the exposure, where the corresponding assumption is that no such confounder exists [19]. Assumptions (1) and (2) are required for the controlled direct effect. For the identification of natural direct and indirect effects, assumptions (3) and (4) are also needed [13]. For studies with a randomized exposure, however, assumptions (1) and (3) are satisfied by design, so control is needed only for (2) and (4). We extracted information about which identifiability assumptions were acknowledged in relation to the effects estimated.
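The requirements just listed can be summarized as a small lookup. The Python sketch below only restates the text, with the assumptions numbered (1) to (4) as above; the function name is hypothetical, and applying the randomized-exposure rule to the controlled direct effect is our own extension of the same logic (randomizing the exposure addresses assumptions (1) and (3) whatever the effect of interest).

```python
from __future__ import annotations

# Numbered as in the text
ASSUMPTIONS = {
    1: "no unmeasured exposure-outcome confounding",
    2: "no unmeasured mediator-outcome confounding",
    3: "no unmeasured exposure-mediator confounding",
    4: "no mediator-outcome confounder affected by the exposure",
}

def assumptions_to_address(effect: str, randomized_exposure: bool = False) -> set[int]:
    """Return the numbered assumptions an analysis must still justify (hypothetical helper)."""
    if effect == "CDE":
        needed = {1, 2}
    elif effect in ("NDE", "NIE"):
        needed = {1, 2, 3, 4}
    else:
        raise ValueError(f"unknown effect: {effect!r}")
    if randomized_exposure:
        # Randomizing the exposure removes exposure-outcome and exposure-mediator confounding.
        needed -= {1, 3}
    return needed

for effect in ("CDE", "NDE"):
    for randomized in (False, True):
        needed = assumptions_to_address(effect, randomized)
        label = "randomized" if randomized else "observational"
        print(effect, label, [ASSUMPTIONS[k] for k in sorted(needed)])
```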

Sensitivity analysis

In addition to unmeasured confounding, which is common in observational studies [8, 9, 20], measurement error in the mediator can potentially affect the coefficients from both the mediator and the outcome regressions and thus bias estimates of direct and indirect effects [21–23]. Interaction analysis may also be of research interest, to understand how and why an effect occurs in an observed phenomenon. If confounders of each of the two exposures' relationships with the outcome are not controlled, the results of an interaction analysis will be biased [1]. In causal mediation analysis, sensitivity analysis can be used to evaluate the extent to which estimates of direct and indirect effects are robust to violations of assumptions [24, 25]. We abstracted information on bias analysis to assess: (i) whether identification assumptions were evaluated empirically or through sensitivity analysis; (ii) which identification assumption was a concern and what approach was used for sensitivity analysis; (iii) whether a rationale and approach for sensitivity analysis of measurement error in the mediator was included; and (iv) whether bias analysis for the interaction was included.
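The consequence of mediator measurement error can be seen in a small simulation. The Python sketch below assumes linear models without interaction, a binary exposure, and classical non-differential error added to the mediator (reliability 0.5 conditional on exposure); all values are hypothetical. With the error-prone mediator, part of the indirect effect is attributed to the direct effect: in this set-up the estimates move from about 1.0 and 1.0 to about 1.5 (direct) and 0.5 (indirect).

```python
import numpy as np

def ols(X, y):
    """Ordinary least squares coefficients, with an intercept column prepended."""
    X = np.column_stack([np.ones(len(y)), X])
    return np.linalg.lstsq(X, y, rcond=None)[0]

def nde_nie(a, m, y):
    """Product-of-coefficients direct and indirect effects (linear, no interaction)."""
    a_path = ols(a, m)[1]
    theta_a, theta_m = ols(np.column_stack([a, m]), y)[1:3]
    return theta_a, a_path * theta_m

# Hypothetical data-generating model, for illustration only
rng = np.random.default_rng(42)
n = 200_000
a = rng.binomial(1, 0.5, n).astype(float)
m_true = a + rng.normal(size=n)              # true exposure -> mediator path = 1
y = a + m_true + rng.normal(size=n)          # true direct effect = 1, true NIE = 1
m_observed = m_true + rng.normal(size=n)     # classical error with the same variance as M | A

nde_true_m, nie_true_m = nde_nie(a, m_true, y)
nde_err_m, nie_err_m = nde_nie(a, m_observed, y)
print(f"true mediator:     NDE={nde_true_m:.2f}, NIE={nie_true_m:.2f}")
print(f"mismeasured:       NDE={nde_err_m:.2f}, NIE={nie_err_m:.2f}")
```

In this classical-error case only the outcome regression is biased; other error structures, such as error that depends on exposure, can also bias the mediator regression.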

Power calculations

Studies may be powered to detect a main effect, but may not be sufficiently powered to detect an interaction of a certain magnitude. We hypothesized that many studies implementing causal mediation analyses may be underpowered. We extracted information about power calculations for interaction from each study. However, further development and methodologic work regarding power calculations for direct and indirect effects is needed [1]. With this in mind, we extracted information regarding what authors reported on the issue of power calculations for causal mediation analysis without judgment regarding which formulas were appropriate.
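The gap between power for a main effect and power for an interaction of the same magnitude can be illustrated by simulation. The Python sketch below assumes a linear outcome model with two centered binary factors, a hypothetical coefficient of 0.3 for both the main effect and the interaction, unit residual variance, and a two-sided test using the normal critical value 1.96. It is not a power formula for direct or indirect effects, which, as noted, still requires methodological development. Under this coding the interaction's standard error is twice that of the main effect, so with n = 400 power should be roughly 0.85 for the main effect and roughly 0.3 for the interaction.

```python
import numpy as np

def ols_t_stats(X, y):
    """OLS t statistics (intercept first) using the usual residual-variance estimate."""
    X = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    sigma2 = resid @ resid / (len(y) - X.shape[1])
    cov = sigma2 * np.linalg.inv(X.T @ X)
    return coef / np.sqrt(np.diag(cov))

def simulate_power(n, effect=0.3, reps=1000, seed=0):
    """Monte Carlo power for a main effect and an interaction of equal size (hypothetical set-up)."""
    rng = np.random.default_rng(seed)
    hits_main = hits_inter = 0
    for _ in range(reps):
        a = rng.integers(0, 2, n) - 0.5      # centered binary exposure
        x = rng.integers(0, 2, n) - 0.5      # centered binary second factor
        y = effect * a + effect * x + effect * a * x + rng.normal(size=n)
        t = ols_t_stats(np.column_stack([a, x, a * x]), y)
        hits_main += abs(t[1]) > 1.96        # main effect of a
        hits_inter += abs(t[3]) > 1.96       # a x x interaction
    return hits_main / reps, hits_inter / reps

p_main, p_inter = simulate_power(400)
print(f"n=400: power(main)={p_main:.2f}, power(interaction)={p_inter:.2f}")
```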

Exposure-mediator interactions

In the traditional approach to mediation analysis, no interaction between the effects of the exposure and the mediator on the outcome is assumed [8, 9]. Causal mediation analysis, on the other hand, provides decompositions into direct and indirect effects that remain valid in the presence of exposure-mediator interaction and when non-linear models are needed [8, 9]. This raises the question of when to include or exclude interactions in causal mediation analysis. The decision to include interaction terms is often driven by statistical testing, which may be problematic if statistical power is lacking. A recommended approach is therefore to include exposure-mediator interactions in the outcome model by default and to exclude them only if the interactions are small in magnitude and the estimates of direct and indirect effects are not altered much by their inclusion [1]. Leaving the interaction terms in the outcome model is suggested to avoid drawing incorrect causal conclusions, to allow additional model flexibility, and to help understand the dynamics of mediation [1]. Therefore, we extracted information about whether studies allowed for interactions in the outcome model.
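For a continuous mediator and a continuous outcome modeled by linear regression, Valeri and VanderWeele [13] give closed-form expressions for the effects in the presence of an exposure-mediator interaction. The Python function below is a minimal sketch of those expressions, assuming M = β0 + β1A (+ covariates) and Y = θ0 + θ1A + θ2M + θ3AM (+ covariates), with covariate terms folded into β0 at fixed covariate values. The class and function names are hypothetical, and this is not the authors' SAS or SPSS macro.

```python
from dataclasses import dataclass

@dataclass
class MediatorModel:
    """E[M | A = a] = beta0 + beta1 * a (covariate terms assumed folded into beta0)."""
    beta0: float
    beta1: float

@dataclass
class OutcomeModel:
    """E[Y | a, m] = theta0 + theta1*a + theta2*m + theta3*a*m (+ covariates)."""
    theta1: float  # exposure coefficient
    theta2: float  # mediator coefficient
    theta3: float  # exposure-mediator interaction coefficient

def mediation_effects(med, out, a=1.0, a_star=0.0, m_fixed=0.0):
    """CDE (at m_fixed), NDE, NIE and TE for a change in exposure from a_star to a."""
    delta = a - a_star
    cde = (out.theta1 + out.theta3 * m_fixed) * delta
    nde = (out.theta1 + out.theta3 * (med.beta0 + med.beta1 * a_star)) * delta
    nie = (out.theta2 * med.beta1 + out.theta3 * med.beta1 * a) * delta
    return {"CDE": cde, "NDE": nde, "NIE": nie, "TE": nde + nie}

# Hypothetical coefficient values, for illustration only
med = MediatorModel(beta0=1.0, beta1=0.6)
with_interaction = mediation_effects(med, OutcomeModel(0.7, 0.9, 0.4), m_fixed=1.0)
without_interaction = mediation_effects(med, OutcomeModel(0.7, 0.9, 0.0), m_fixed=1.0)
print(with_interaction)
print(without_interaction)
```

Setting θ3 = 0 reduces the natural indirect effect to the product θ2β1, the quantity estimated by the product method, and makes the controlled and natural direct effects both equal to θ1.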

Effect estimates and results from exposure-mediator interaction

In this review, we assessed whether studies reported estimates from models allowing for exposure-mediator interactions in the outcome model in addition to estimates from models without the interaction. We also extracted estimates from sensitivity analyses conducted for direct and indirect effects and interactions, as well as explanations of discrepancies when noted.

Results

Figure 1 shows the process of identifying eligible articles for the review. We retrieved 157 and 61 studies from the citation search in Web of Science and the keyword search in PubMed, respectively. After excluding duplicate studies (n = 22), studies not focusing on health-related outcomes (n = 57), review articles (n = 6), methodological or simulation studies (n = 46), letters, meeting abstracts, and brief reports (n = 10), animal studies (n = 2), studies not using causal mediation analysis (n = 9), genetic studies (n = 9), studies using multilevel models, a structural equation modeling approach, or a cross-sectional design (n = 27), and studies using the traditional approach or without formal discussion of a causal framework (n = 17), we identified 13 epidemiological studies that applied causal mediation analysis [26–38].
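The counts above can be checked for internal consistency. The Python sketch below simply tallies the records identified and the exclusions reported at each step; the category labels are paraphrased from the text.

```python
# Counts as reported in the text; labels paraphrased
records = {"Web of Science citation search": 157, "PubMed keyword search": 61}
exclusions = {
    "duplicates": 22,
    "not health-related outcomes": 57,
    "review articles": 6,
    "methodological or simulation studies": 46,
    "letters, meeting abstracts, brief reports": 10,
    "animal studies": 2,
    "not using causal mediation analysis": 9,
    "genetic studies": 9,
    "multilevel/SEM approach or cross-sectional design": 27,
    "traditional approach or no formal causal framework": 17,
}

identified = sum(records.values())
excluded = sum(exclusions.values())
included = identified - excluded
print(f"identified={identified}, excluded={excluded}, included={included}")
# identified=218, excluded=205, included=13
```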

Summary of study design, primary exposure, outcomes

Two studies used randomized controlled trials, 8 were cohort studies, and 3 were case–control studies (Table 1). Studies were not clustered in one specific area (e.g., 3 studies evaluated risks from environmental exposures, including environmental substances [31, 38] and changes in environments [28], and 3 studies evaluated parental conditions before [34, 35] and during [33] pregnancy). Regarding outcomes of interest, 4 studies used the first occurrence of a pre-specified event [32, 35–37]. Other studies examined levels of biomarkers [27, 30, 31, 38], mortality [26, 29], or neonatal health outcomes [33, 34]. Nearly half of the studies used biomarkers as the primary mediator [27, 31, 32, 35, 37, 38]. Other studies used a pre-specified medical event [26, 29, 30], health behaviors [34, 36], or psychological symptoms [28], and one used a neonatal health outcome [33]. All studies provided information on the confounders in the causal mediation analysis, and the majority provided a hypothesized directed acyclic graph (DAG).

Motivation for applying causal mediation analysis

All studies applied causal mediation analysis to evaluate mediation (Table 2). All studies but one reported and identified the measures of either direct/indirect effects or the controlled direct effect. While the motivation for most studies was to improve understanding, one study used mediation analysis to confirm/refute theory, and one study did not report its motivation.

Evaluation of identifiability assumptions and sensitivity analyses

Four studies did not report identification assumptions for the effect measures identified (Table 3). All but two studies used an empirical approach or sensitivity analysis to address confounding. Nine studies addressed unmeasured confounding of the mediator-outcome relationship: five used an empirical approach and four used sensitivity analysis. Three studies addressed measurement error or misclassification of the mediator (Table 4). Two of these provided a rationale for conducting sensitivity analysis for measurement error of the mediator; they also noted that bias may result from misclassification of the mediator and discussed the robustness of their findings.

Elements for implementation of causal mediation analysis

Most studies had a relatively large sample size (Table 5). Three studies had small sample sizes (n < 100), and this limitation was acknowledged. The majority of studies did not report whether a power or sample size calculation was performed. Most studies either did not report whether an exposure-mediator interaction was included in the model or did not include one. None of the six studies allowing for an exposure-mediator interaction in the model reported a power or sample size calculation or a bias analysis for the interaction.

Effect estimates and derived results from exposure-mediator interaction

Table 6 shows the estimates from causal mediation analysis with and without interaction in the model for the associations between the primary study exposure and outcome listed in Table 1. While the majority of studies reported estimates from models with or without interaction, 3 studies did not report estimates for the effects identified. Among the 6 studies allowing for exposure-mediator interaction, 2 presented results from models both with and without interaction, and no substantial discrepancies were found.

Discussion

Our review shows that reporting of studies using causal mediation analysis to understand the mechanisms underlying observed exposure-outcome relationships is varied and suboptimal in epidemiology. After reviewing 13 epidemiological studies, we found that although the field of causal mediation analysis has made significant strides, the majority of studies lacked sufficient detail on whether the identifiability assumptions were satisfied for the effect estimates identified. Furthermore, although most studies addressed unmeasured confounding through empirical approaches or sensitivity analyses, over half did not examine potential bias arising from invalid measurement of the mediator. In addition, the majority of studies did not provide information on, or comment on, power calculations or sample size. While some studies allowed for exposure-mediator interaction, only a few presented results from models both with and without the interaction.

Although it was difficult to judge the adequacy of confounding control in the reviewed studies without detailed knowledge of the specific datasets and subject areas, we found that most studies did not provide enough information on whether an empirical approach or sensitivity analysis was used to evaluate the identification assumptions for the effect estimates identified. It has been emphasized that controlling for mediator-outcome confounders is important when direct and indirect effects are examined [8, 9, 20]. When uncontrolled confounding is a concern, sensitivity analyses have been recommended to quantify how strong an unmeasured confounder would have to be to invalidate inferences about the direct and indirect effects [15, 24, 39]. Several approaches can be used to address unmeasured confounding [1]; for example, researchers can report how large the effects of a confounder would need to be to completely explain away the effect estimates. To improve reporting of causal mediation analyses in the epidemiological literature, we recommend the following. First, studies should be transparent about whether empirical approaches or sensitivity analyses were used to evaluate identifiability assumptions. Second, studies must carefully consider the extent to which bias is present because of concerns about valid measurement of the mediator; several approaches are available to address this issue [21, 22]. Third, if researchers are concerned about multiple biases in a study, we recommend that they prioritize approaches according to the study context to strengthen their findings.
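As an illustration of reporting how strong a confounder would need to be, the Python sketch below applies a simple bias formula of the kind described in [25], under strong simplifying assumptions: a continuous outcome; a binary unmeasured mediator-outcome confounder U whose effect on the outcome (gamma) does not depend on exposure or mediator; a difference in the prevalence of U between exposure groups (delta) that does not depend on the mediator; and no unmeasured confounding of the total effect. Under these assumptions the bias in the direct effect is gamma × delta, with an offsetting bias in the indirect effect. The function names and the estimates used are hypothetical.

```python
from __future__ import annotations

def corrected_effects(nde_obs: float, nie_obs: float, gamma: float, delta: float) -> tuple[float, float]:
    """Bias-corrected (NDE, NIE) under the simplifying assumptions stated above.

    gamma: effect of the binary confounder U on the outcome
    delta: P(U=1 | exposed, m) - P(U=1 | unexposed, m)
    """
    bias = gamma * delta
    return nde_obs - bias, nie_obs + bias

def delta_to_explain_away(nde_obs: float, gamma: float) -> float | None:
    """Prevalence difference needed to reduce the direct effect to zero, or None if impossible."""
    if gamma == 0:
        return None
    delta = nde_obs / gamma
    return delta if abs(delta) <= 1 else None   # prevalence differences lie in [-1, 1]

# Hypothetical observed estimates, for illustration only
nde_obs, nie_obs = 0.30, 0.20
nde_c, nie_c = corrected_effects(nde_obs, nie_obs, gamma=0.5, delta=0.2)
print(f"corrected NDE={nde_c:.2f}, NIE={nie_c:.2f}")
for gamma in (0.25, 0.5, 1.0):
    needed = delta_to_explain_away(nde_obs, gamma)
    print(f"gamma={gamma}:", "not possible" if needed is None else f"delta={needed:.2f}")
```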

We found that the majority of studies did not report whether a statistical power or sample size calculation was performed, or whether the researchers believed the available sample size was sufficient to estimate direct and indirect effects with adequate precision. However, we recognize that approaches for calculating power and sample size for direct and indirect effects are limited in the current literature, especially for exposure-mediator interactions [1]. To judge what sample size is sufficient for mediation analysis, researchers are currently advised to use previously published tables of the sample sizes required for adequate power in single-mediator models [40]. We also recommend that studies comment on whether lack of power or insufficient sample size is a likely non-causal explanation of findings, especially studies with relatively small samples.
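Where published tables do not match a study's setting, power for the indirect effect in a single-mediator model can also be approximated by simulation. The Python sketch below estimates the power of the Sobel test for the product a·b; the path values (0.39), the absence of a direct effect, the use of the Sobel test, and the normal critical value are illustrative assumptions. Fritz and MacKinnon [40] found the Sobel test to be less powerful than alternatives such as the bias-corrected bootstrap, so this sketch is likely conservative.

```python
import numpy as np

def fit_with_se(X, y):
    """OLS coefficients and standard errors, with an intercept column prepended."""
    X = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    sigma2 = resid @ resid / (len(y) - X.shape[1])
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    return coef, se

def sobel_power(n, a=0.39, b=0.39, c_prime=0.0, reps=1000, seed=0):
    """Monte Carlo power of the Sobel test for a*b (hypothetical single-mediator model)."""
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(reps):
        x = rng.normal(size=n)
        m = a * x + rng.normal(size=n)
        y = c_prime * x + b * m + rng.normal(size=n)
        (_, a_hat), (_, se_a) = fit_with_se(x, m)        # mediator model M ~ X
        coef_y, se_y = fit_with_se(np.column_stack([x, m]), y)  # outcome model Y ~ X + M
        b_hat, se_b = coef_y[2], se_y[2]
        z = a_hat * b_hat / np.sqrt(a_hat**2 * se_b**2 + b_hat**2 * se_a**2)
        rejections += abs(z) > 1.96
    return rejections / reps

for n in (50, 100, 200):
    print(f"n={n}: Sobel power ~ {sobel_power(n):.2f}")
```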

It has been shown that under sequential ignorability and the additional assumption of no exposure-mediator interaction, the product-of-coefficients estimate can be interpreted as a valid estimate of the causal mediation effect as long as the linearity assumption holds [41, 42]. However, in many studies it is unrealistic to assume that the exposure and mediator do not interact in their effects on the outcome, and carrying out mediation analysis while incorrectly assuming no interaction may result in invalid inferences [13]. Despite progress in methods that accommodate exposure-mediator interactions, including settings with a binary mediator or count outcomes [13], we found that most studies did not report whether an exposure-mediator interaction was included in the model. Under the counterfactual framework, decomposing a total effect into direct and indirect effects requires more assumptions, but the decomposition remains possible even in models with interactions and non-linearities, and it allows investigators to assess whether most of the effect is mediated through a particular intermediate or the extent to which it operates through other pathways. Therefore, we recommend that future studies include exposure-mediator interactions in the outcome model by default, as suggested [1], and exclude them only if the interactions are small in magnitude and do not change the estimates of direct and indirect effects very much.
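The consequences of omitting an interaction that is present can be illustrated by fitting both outcome models to the same simulated data and reporting both sets of estimates, as recommended above. The Python sketch below assumes a hypothetical linear data-generating model with an exposure-mediator interaction (true natural direct effect 1.1 and natural indirect effect 0.78 for a change in exposure from 0 to 1) and uses the regression-based expressions of Valeri and VanderWeele [13] for the model with interaction. In this set-up the model without the interaction should give a direct effect of about 1.22 and an indirect effect of about 0.66, while the model with the interaction should recover values close to the truth.

```python
import numpy as np

def ols(X, y):
    """Ordinary least squares coefficients, with an intercept column prepended."""
    X = np.column_stack([np.ones(len(y)), X])
    return np.linalg.lstsq(X, y, rcond=None)[0]

# Hypothetical data-generating model, for illustration only
rng = np.random.default_rng(7)
n = 50_000
a = rng.binomial(1, 0.5, n).astype(float)
m = 1.0 + 0.6 * a + rng.normal(size=n)
y = 0.5 + 0.7 * a + 0.9 * m + 0.4 * a * m + rng.normal(size=n)

beta0, beta1 = ols(a, m)                             # mediator model M ~ A

# Outcome model allowing for interaction (a* = 0, a = 1)
_, t1, t2, t3 = ols(np.column_stack([a, m, a * m]), y)
nde_int = t1 + t3 * beta0
nie_int = (t2 + t3) * beta1

# Outcome model omitting the interaction (product of coefficients)
_, t1_no, t2_no = ols(np.column_stack([a, m]), y)
nde_no = t1_no
nie_no = t2_no * beta1

print(f"with interaction:    NDE={nde_int:.2f}, NIE={nie_int:.2f}")
print(f"without interaction: NDE={nde_no:.2f}, NIE={nie_no:.2f}")
```

Both models reproduce the same total effect here; the omitted interaction mainly shifts how that total is split between direct and indirect pathways.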

Our review is subject to some limitations. First, we included only epidemiological studies published in English in 2015, so the findings may not be representative of all publications using causal mediation analysis. However, it is reasonable to allow time for the methods to be developed and adopted, given that the seminal articles for applications were mainly published in 2012 or 2013, and we were interested in a “snapshot” of current practices in reporting such complex methods from the most recent year. Second, the reporting practices of published studies may be influenced by journal requirements: word limits may have left authors limited space to provide the details needed for the method. Nevertheless, for methods that require careful implementation, such reporting is necessary to evaluate the extent to which the method has been appropriately applied. Third, we may have missed some relevant articles because of a lack of standardized terminology or the use of interchangeable jargon to describe causal mediation analysis. However, we believe that including papers that cited the seminal papers reduced the likelihood of this happening. Despite these limitations, this is the first review to examine how epidemiological studies have used causal mediation analysis, what procedures and analyses are needed to conduct such a complex technique appropriately, and what elements are critical to report for the method, which we believe is a strength of our review.

Conclusions

Although the application of causal mediation analysis is increasing in epidemiology, there is an opportunity to improve the quality and presentation of this methodology. We found that reporting of this emerging approach in the literature is varied and suboptimal, although the majority of studies did address unmeasured confounding of the mediator-outcome relationship. We recommend that future studies: (1) provide sufficient detail on whether an empirical approach or sensitivity analysis was used to evaluate identifiability assumptions for the effect estimates identified; (2) comment on the bias that may arise from invalid measurement of the mediator; (3) discuss whether lack of statistical power or insufficient sample size is a likely non-causal explanation of findings; and (4) include exposure-mediator interactions in the model and present results derived from models with and without interaction terms. We hope that developing best practices for reporting complex methods in epidemiological research, and adopting such reporting standards, may help the quality assessment and interpretation of studies using causal mediation analysis.

References

VanderWeele TJ. Explanation in causal inference: methods for mediation and interaction. Oxford: Oxford University Press; 2015.

VanderWeele TJ, Asomaning K, Tchetgen Tchetgen EJ, Han Y, Spitz MR, Shete S, et al. Genetic variants on 15q25.1, smoking, and lung cancer: an assessment of mediation and interaction. Am J Epidemiol. 2012;175:1013–20.

Huang Y-T, VanderWeele TJ, Lin X. Joint analysis of SNP and gene expression data in genetic association studies of complex diseases. Ann Appl Stat. 2014;8:352–76.

VanderWeele TJ, Lauderdale DS, Lantos JD. Medically induced preterm birth and the associations between prenatal care and infant mortality. Ann Epidemiol. 2013;23:435–40.

Mumford SL, Schisterman EF, Siega-Riz AM, Gaskins AJ, Wactawski-Wende J, VanderWeele TJ. Effect of dietary fiber intake on lipoprotein cholesterol levels independent of estradiol in healthy premenopausal women. Am J Epidemiol. 2011;173:145–56.

Nandi A, Glymour MM, Kawachi I, VanderWeele TJ. Using marginal structural models to estimate the direct effect of adverse childhood social conditions on onset of heart disease, diabetes, and stroke. Epidemiology. 2012;23:223–32.

Baron RM, Kenny DA. The moderator-mediator variable distinction in social psychological research: conceptual, strategic, and statistical considerations. J Pers Soc Psychol. 1986;51:1173–82.

Robins JM, Greenland S. Identifiability and exchangeability for direct and indirect effects. Epidemiology. 1992;3:143–55.

Pearl J. Direct and indirect effects. In: Proceedings of the Seventeenth Conference on Uncertainty in Artificial Intelligence. 2001. p. 411–20.

VanderWeele TJ, Vansteelandt S. Conceptual issues concerning mediation, interventions and composition. Stat Interface. 2009;2:457–68.

Imai K, Keele L, Tingley D, Yamamoto T. Causal mediation analysis using R. In: Advances in social science research using R. Vol 196. 2010. p. 129–54.

Imai K, Keele L, Tingley D. A general approach to causal mediation analysis. Psychol Methods. 2010;15:309–34.

Valeri L, VanderWeele TJ. Mediation analysis allowing for exposure-mediator interactions and causal interpretation: theoretical assumptions and implementation with SAS and SPSS macros. Psychol Methods. 2013;18:137–50.

Preacher KJ, Rucker DD, Hayes AF. Addressing moderated mediation hypotheses: theory, methods, and prescriptions. Multivar Behav Res. 2007;42:185–227.

VanderWeele TJ, Vansteelandt S. Odds ratios for mediation analysis for a dichotomous outcome. Am J Epidemiol. 2010;172:1339–48.

Richiardi L, Bellocco R, Zugna D. Mediation analysis in epidemiology: methods, interpretation and bias. Int J Epidemiol. 2013;42:1511–9.

Emsley R, Liu H. PARAMED: Stata module to perform causal mediation analysis using parametric regression models. Statistical software components. Boston: Boston College Department of Economics; 2013.

Moher D, Liberati A, Tetzlaff J, Altman DG, The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6:e1000097.

VanderWeele TJ. Mediation analysis: a practitioner’s guide. Annu Rev Public Health. 2016;37:17–32.

Cole SR, Hernán MA. Fallibility in estimating direct effects. Int J Epidemiol. 2002;31:163–5.

Valeri L, Lin X, VanderWeele TJ. Mediation analysis when a continuous mediator is measured with error and the outcome follows a generalized linear model. Stat Med. 2014;33:4875–90.

le Cessie S, Debeij J, Rosendaal FR, Cannegieter SC, Vandenbroucke JP. Quantification of bias in direct effects estimates due to different types of measurement error in the mediator. Epidemiology. 2012;23:551–60.

Blakely T, McKenzie S, Carter K. Misclassification of the mediator matters when estimating indirect effects. J Epidemiol Community Health. 2013;67:458–66.

Hafeman DM. Confounding of indirect effects: a sensitivity analysis exploring the range of bias due to a cause common to both the mediator and the outcome. Am J Epidemiol. 2011;174:710–7.

VanderWeele TJ. Bias formulas for sensitivity analysis for direct and indirect effects. Epidemiology. 2010;21:540–51.

Banack HR, Kaufman JS. Does selection bias explain the obesity paradox among individuals with cardiovascular disease? Ann Epidemiol. 2015;25:342–9.

D’Amelio P, Sassi F, Buondonno I, Spertino E, Tamone C, Piano S, et al. Effect of intermittent PTH treatment on plasma glucose in osteoporosis: a randomized trial. Bone. 2015;76:177–84.

Freeman D, Emsley R, Dunn G, Fowler D, Bebbington P, Kuipers E, et al. The stress of the street for patients with persecutory delusions: a test of the symptomatic and psychological effects of going outside into a busy urban area. Schizophr Bull. 2015;41:971–9.

Jackson JW, VanderWeele TJ, Blacker D, Schneeweiss S. Mediators of first-versus second-generation antipsychotic-related mortality in older adults. Epidemiology. 2015;26:700–9.

Kositsawat J, Kuchel GA, Tooze JA, Houston DK, Cauley JA, Kritchevsky SB, et al. Vitamin D insufficiency and abnormal hemoglobin A1c in black and white older persons. J Gerontol Ser A Biol Sci Med Sci. 2015;70:525–31.

Louwies T, Nawrot T, Cox B, Dons E, Penders J, Provost E, et al. Blood pressure changes in association with black carbon exposure in a panel of healthy adults are independent of retinal microcirculation. Environ Int. 2015;75:81–6.

Lu Y, Hajifathalian K, Rimm EB, Ezzati M, Danaei G. Mediators of the effect of body mass index on coronary heart disease decomposing direct and indirect effects. Epidemiology. 2015;26:153–62.

Mendola P, Mumford SL, Mannisto TI, Holston A, Reddy UM, Laughon SK. Controlled direct effects of preeclampsia on neonatal health after accounting for mediation by preterm birth. Epidemiology. 2015;26:17–26.

Messerlian C, Platt RW, Ata B, Tan S-L, Basso O. Do the causes of infertility play a direct role in the aetiology of preterm birth? Paediatr Perinat Epidemiol. 2015;29:101–12.

Raghavan S, Porneala B, McKeown N, Fox CS, Dupuis J, Meigs JB. Metabolic factors and genetic risk mediate familial type 2 diabetes risk in the Framingham heart study. Diabetologia. 2015;58:988–96.

Rao SK, Mejia GC, Roberts-Thomson K, Logan RM, Kamath V, Kulkarni M, et al. Estimating the effect of childhood socioeconomic disadvantage on oral cancer in India using marginal structural models. Epidemiology. 2015;26:509–17.

Song Y, Huang Y-T, Song Y, Hevener AL, Ryckman KK, Qi L, et al. Birthweight, mediating biomarkers and the development of type 2 diabetes later in life: a prospective study of multi-ethnic women. Diabetologia. 2015;58:1220–30.

Xie C, Zhao Y, Gao L, Chen J, Cai D, Zhang Y. Elevated phthalates’ exposure in children with constitutional delay of growth and puberty. Mol Cell Endocrinol. 2015;407:67–73.

Tchetgen Tchetgen EJ, Shpitser I. Semiparametric theory for causal mediation analysis: efficiency bounds, multiple robustness and sensitivity analysis. Ann Stat. 2012;40:1816–45.

Fritz MS, MacKinnon DP. Required sample size to detect the mediated effect. Psychol Sci. 2007;18:233–9.

Jo B. Causal inference in randomized experiments with mediational processes. Psychol Methods. 2008;13:314–36.

Imai K, Keele L, Yamamoto T. Identification, inference and sensitivity analysis for causal mediation effects. Stat Sci. 2010;25:51–71.

Authors’ contributions

All listed authors have made material contributions to the completion of this manuscript. Mr. Liu conceived and designed the study and performed data extraction. This study was conducted under the guidance of Drs. Lapane and Ulbricht with input from the remaining authors. The extracted information was confirmed by Drs. Lapane and Chrysanthopoulou for all eligible studies. Mr. Liu also wrote the first draft of the article, and all other authors revised the manuscript for important intellectual content. All authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Funding

This work was supported by the National Institute on Aging [grant number 1R21AG046839-01 to Dr. Kate Lapane]; and the National Cancer Institute [Grant number 1R21CA198172 to Dr. Kate Lapane].

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Liu, SH., Ulbricht, C.M., Chrysanthopoulou, S.A. et al. Implementation and reporting of causal mediation analysis in 2015: a systematic review in epidemiological studies. BMC Res Notes 9, 354 (2016). https://doi.org/10.1186/s13104-016-2163-7

DOI: https://doi.org/10.1186/s13104-016-2163-7