Abstract

Background

The aim of this project was to analyze and compare the educational experience in rheumatology specialty training programs across European countries, with a focus on self-reported ability.

Method

An electronic survey was designed to assess the training experience in terms of self-reported ability, existence of formal education, number of patients managed and assessments performed during rheumatology training in 21 core competences including managing specific diseases, generic competences and procedures. The target population consisted of rheumatology trainees and recently certified rheumatologists across Europe. The relationship between the country of training and the self-reported ability or training methods for each competence was analyzed through linear or logistic regression, as appropriate.

Results

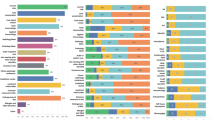

In total 1079 questionnaires from 41 countries were gathered. Self-reported ability was high for most competences, range 7.5–9.4 (0–10 scale) for clinical competences, 5.8–9.0 for technical procedures and 7.8–8.9 for generic competences. Competences with lower self-reported ability included managing patients with vasculitis, identifying crystals and performing an ultrasound. Between 53 and 91 % of the trainees received formal education and between 7 and 61 % of the trainees reported limited practical experience (managing ≤10 patients) in each competence. Evaluation of each competence was reported by 29–60 % of the respondents. In adjusted multivariable analysis, the country of training was associated with significant differences in self-reported ability for all individual competences.

Conclusion

Even though self-reported ability is generally high, there are significant differences amongst European countries, including differences in the learning structure and assessment of competences. This suggests that educational outcomes may also differ. Efforts to promote European harmonization in rheumatology training should be encouraged and supported.

Background

Rheumatology specialty training is the educational process required for a physician to be officially recognized as a specialist in rheumatology. In each country, it is defined by one (or sometimes several) officially approved training programs, which aim to bring physicians to an agreed standard of proficiency in the management of patients with rheumatic and musculoskeletal diseases (RMDs). The development and implementation of these training programs are performed under the national authorities. Data on the scope of diseases managed by rheumatologists in each country are lacking, but the national training programs might well reflect the differences in the local definition of a rheumatologist.

Harmonization of specialty training has been considered essential not only to support the free movement of rheumatology specialists within Europe, but also to facilitate equal standards of care for patients with RMDs. Multinational recommendations on rheumatology training are scarce. In the European Union a minimum duration of four years for rheumatology training programs [1] has been established and the Rheumatology Board and Section of the European Union of Medical Specialists (UEMS) has developed recommendations on the structure and content of the training programs [2–4]. The implementation of these recent recommendations, however, remains at the discretion of the individual countries. There has been very little analysis of the current training situation [5, 6].

In a previous study, we assessed the training regulations throughout Europe with regard to length, structure of training, contents and required assessments [7]. Forty-one European countries offer rheumatology training programs, usually organized at a national level, but with substantial variations in all aspects analyzed [7]. Though this prior study provides insight into the training regulations, training programs can be implemented in a variety of ways, as the governing principles are open to interpretation. The delivery of the same (national) training program can therefore vary widely between training centers. There are few comparative data on educational outcomes (i.e., on the competences actually acquired) [8].

The aim of this project, supported by the European League Against Rheumatism (EULAR), was to analyze and compare the educational experience in rheumatology specialty training programs across European countries, specifically looking at competences achieved, and the methods by which they are acquired (education and patient experience) and assessed.

Methods

The current study was a cross-sectional survey. A Steering Group (SG) comprising 12 European rheumatologists with an interest in education agreed upon the main aspects of training to be assessed, after analysis of national training programs across Europe [7] and of the UEMS European Rheumatology Curriculum Framework [3]. A representative from each of the relevant EULAR member countries (national principal investigator (PI)) was identified and oversaw the dissemination of the survey among the target population of the PI’s country. These PIs constituted the Working Group. Given that the study consisted of an online survey among young rheumatologists and researchers, and no patients were involved, no ethics committee approval was required.

Target population

The survey targeted rheumatologists who had been certified in the past five years and rheumatology trainees who were already in the rheumatology-specific part of their training in any of the 41 EULAR countries offering rheumatology specialty training. Rheumatology trainees who were still in their internal medicine training were excluded. The estimated target population included approximately 4500 young rheumatologists [7]. This estimate was obtained by multiplying the number of trainees who started training in a given country in the year before the survey (as determined by the national PI) by the number of years of the rheumatology-specific period of training plus five (in order to include rheumatologists certified in the past five years).
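The estimation described above can be sketched as a one-line calculation. The function name, its parameters and the example figures below are hypothetical and chosen only for illustration; they are not taken from the survey data.

```python
def estimate_target_population(new_trainees_per_year, specific_training_years,
                               years_since_certification=5):
    """Estimate the number of trainees plus recently certified rheumatologists.

    Assumes a constant yearly intake: each yearly cohort is counted once for
    every year of rheumatology-specific training and once for each of the
    years since certification that the survey covers.
    """
    return new_trainees_per_year * (specific_training_years + years_since_certification)


# Hypothetical country: 20 new trainees per year, 4 years of specific training.
print(estimate_target_population(20, 4))
```

The sketch makes the underlying assumption explicit: the intake observed in the year before the survey is treated as representative of every cohort in the window.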

Survey content

Through an iterative voting process, the SG selected 21 core competences, which formed the basis of the survey and could be divided into three main areas: (1) Clinical competences: both broad clinical competences ((a) performing a clinical examination; (b) detecting synovitis of metacarpophalangeal and proximal interphalangeal joints; (c) interpreting specific laboratory tests; and (d) applying common measures of disease activity) and disease-specific clinical competences ((a) performing the initial diagnostic and management approach to a patient with a single swollen joint; and managing a patient with (b) osteoarthritis (OA); (c) gout; (d) early rheumatoid arthritis (RA)/undifferentiated arthritis; (e) spondyloarthritis (SpA); (f) autoimmune connective tissue diseases (CTD); (g) systemic vasculitis; (h) osteoporosis (OP); and (i) initiating and monitoring therapy with a biologic agent); (2) Procedures: (a) performing knee aspiration; (b) identifying crystals on an optic microscope; (c) interpreting a conventional hand x-ray; (d) performing musculoskeletal (MSK) ultrasound (US); and (3) Generic competences: (a) working within a multidisciplinary team; (b) interpreting the results of a scientific paper; (c) giving a scientific presentation; and (d) communicating with patients and families.

On each competence, information was gathered on self-reported ability through an 11-point numerical rating scale (NRS) where 0 meant “unable to do” and 10 meant “fully able to do independently”. Respondents were considered to have low self-reported ability if the NRS score was <5 and very low self-reported ability if the NRS score was <3. Information was also gathered on the training process for each competence (where applicable), specifically on the existence of formal education (yes/no) and on the number of patients (personally) managed during training in the pre-set categories (0, 1–10, 11–50, 51–100, 101–150, or >150 patients). Respondents were considered to have limited patient experience if they had managed ≤10 patients/procedures. Finally, data were gathered on whether each competence was formally assessed. Additional questions on research and on the existence of a log-book were included. The complete survey can be found in Additional file 1.
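The thresholds defined above can be expressed as a small sketch. The function names and the category labels (`"0"`, `"1-10"`, and so on) are assumptions made for illustration; the actual coding of the survey responses is not described in the text.

```python
# Hypothetical labels for the survey's pre-set patient-count categories
# that count as "limited patient experience" (10 or fewer patients).
LIMITED_CATEGORIES = {"0", "1-10"}


def ability_flags(nrs_score):
    """Classify a 0-10 NRS score using the thresholds stated in the text.

    "Very low" (< 3) is a subset of "low" (< 5), so both flags can be True.
    """
    if not 0 <= nrs_score <= 10:
        raise ValueError("NRS score must be between 0 and 10")
    return {"low": nrs_score < 5, "very_low": nrs_score < 3}


def has_limited_experience(category):
    """Return True if the reported category corresponds to 10 or fewer patients."""
    return category in LIMITED_CATEGORIES


print(ability_flags(4))
print(has_limited_experience("11-50"))
```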

All questions referred to the end of the training period. Trainees were asked to answer based on the reasonable expectations of their training. Qualified rheumatologists were asked to reflect their status at the end of their training period. Data collection took place from June to December 2014.

Data analysis

Descriptive statistics were used to summarize the data. Self-reported ability on each of the competences was compared between groups: respondents who received formal education vs. those who did not, respondents with limited patient experience vs. those with wider experience, respondents assessed vs. those not assessed, respondents from countries with short training vs. long training, and respondents from countries with explicit inclusion of competence in the training program vs. those without inclusion. These comparisons were performed using the Mann-Whitney U test (variables non-normally distributed). In order to investigate whether the country of training was associated with differences in self-reported ability, training methods (education, patient experience) or assessment frequency for each competence, we conducted multivariable regression analysis (linear or logistic, for continuous or categorical outcomes, respectively). Four models were therefore constructed for each competence, with country of training as the main variable of interest. Analyses were adjusted for the respondent’s gender, age, certification status, and explicit inclusion of the respective competence in the country’s official training program. The model for self-reported ability was also adjusted for the existence of formal education, for limited practical experience, and for assessment; the model for assessment was adjusted for formal education and for limited patient experience. For self-reported ability, alternative models with total length of training instead of country were computed and the model fit was compared using the R² statistic. In the multivariable models, the UK was chosen as the reference country. Data on training length and inclusion of the competence in the curriculum were obtained from prior work by our group [7]. Analyses were repeated including only UEMS member countries. All analyses were performed with Stata SE V.12 (StataCorp, College Station, TX, USA).
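As an illustration of the group comparison described above, the following minimal sketch computes the Mann-Whitney U statistic from tie-averaged ranks. It is not the Stata routine used in the study, it does not compute a p value, and the scores are made-up toy values.

```python
def midranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b) for two independent samples."""
    ranks = midranks(list(group_a) + list(group_b))
    rank_sum_a = sum(ranks[:len(group_a)])
    u_a = rank_sum_a - len(group_a) * (len(group_a) + 1) / 2
    u_b = len(group_a) * len(group_b) - u_a
    return u_a, u_b


# Toy NRS scores (hypothetical): with vs. without formal education.
with_education = [8, 9, 7, 10]
without_education = [6, 7, 5]
print(mann_whitney_u(with_education, without_education))
```

A rank-based test suits this setting because NRS scores are bounded, ordinal and, as noted in the text, not normally distributed.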

Results

In total, 1433 responses were received. After deleting incomplete responses (only demographic data provided) and responses outside the target population (e.g., trainees prior to the rheumatology-specific period of the training or rheumatologists qualified over five years ago), 1079 responses were included in the final analyses. Respondents were mostly women and were evenly distributed between trainees and certified rheumatologists (Table 1).

The overall response rate was 24 % of the estimated target population. All 41 countries provided responses, but the response rate varied widely amongst countries from 3 % (Belarus) to 100 % (Bosnia & Herzegovina and Estonia). Thirty-six countries achieved a response rate >10 % (all except Armenia, Belarus, Germany, Italy and Ukraine). See Additional file 2 for more details.
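The overall response rate follows directly from the figures reported in the text (1079 included responses and an estimated target population of approximately 4500). The short sketch below reproduces that arithmetic; the function name is a hypothetical helper.

```python
def response_rate(included_responses, estimated_target_population):
    """Return the response rate as a percentage of the estimated target population."""
    return 100 * included_responses / estimated_target_population


# Figures reported in the text: 1079 responses, about 4500 in the target population.
print(round(response_rate(1079, 4500)))
```

Because the denominator is itself an estimate, the resulting percentage is approximate, both overall and per country.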

Self-reported ability

Mean self-reported ability was high at the end of the training period in most of the 13 clinical competences, ranging from 7.5 (managing a patient with vasculitis) to 9.4 (interpreting laboratory tests) (Table 2). Self-reported ability in the different procedures and techniques was variable, being high for knee aspiration (9.0) and interpreting hand x-rays (8.2) but low for crystal identification (6.1) and performing musculoskeletal (MSK) US (5.8). In general, self-reported ability in generic competences (range 7.8–8.9) was similar to ability in clinical competences.

A proportion of trainees reported low confidence (NRS score <5) or even very low confidence (NRS score <3) in their ability in all core rheumatologic competences (Table 2 and Additional file 3). The percentage of respondents with low self-reported ability (NRS score <5) ranged in clinical competences from 1 % (interpreting laboratory tests) to 9 % (managing a patient with vasculitis), in procedures from 5 % (interpreting hand x-rays) to 32 % (identifying crystals under a microscope) and in generic competences from 2 % (patient communication) to 8 % (participating in a multidisciplinary team).

Training process (education and patient experience)

More than 70 % of the respondents felt that they had received formal education in each of the clinical competences (Table 2). In contrast, the only procedure in which >70 % of respondents had received formal education was knee aspiration (80 %). The proportion of respondents reporting formal education in generic competences was also lower than in clinical competences (53–62 %).

A proportion of respondents managed ≤10 patients with each disease analyzed, ranging from 7 % of respondents managing ≤10 patients with OA to 44 % managing ≤10 patients with vasculitis. The proportion of respondents who performed ≤10 procedures during their training was smaller for knee aspiration (18 %) and hand x-ray interpretation than for US (39 %) and crystal identification (61 %). Forty percent of trainees performed ≤10 scientific presentations during their training.

A total of 844 (83 %) respondents were running a quasi-independent outpatient clinic by the end of their training. Similarly, 835 (82 %) of the respondents initiated and/or monitored patients under treatment with bDMARDs and 936 (92 %) reported using disease activity measures regularly. Half (n = 499) of the respondents published a research paper as a first or second author during their training.

Assessment of competences

Around half the respondents (51–60 %) reported undergoing an assessment in each of the clinical competences (Table 2). A smaller proportion of respondents were assessed in practical procedures and generic competences (29–48 %), with the smallest percentage of assessments being performed for crystal identification. Sixty percent (n = 595) of survey respondents reported keeping a log-book or portfolio in which training activities were registered.

Self-reported ability in different subgroups

Mean self-reported ability in all 21 core competences was higher in the group of respondents who received formal education than in those who did not (Table 3). Similarly, the mean self-reported ability in the group of respondents who had limited practical experience (who had managed ≤10 patients) was lower than in those who had greater practical experience (Additional file 4). However, respondents who were assessed had higher mean self-reported ability in only 12 of the competences assessed: performing a MSK examination, using disease activity measures, managing patients with OA, gout, CTD, vasculitis, and OP, identifying crystals, interpreting a hand x-ray, performing a US exam, interpreting a research paper and performing a scientific presentation. In the remaining eight competences, the mean self-reported ability in respondents who had been assessed did not differ from the mean in those who had not been assessed (Additional file 5).

Longer training was associated with higher mean self-reported ability in all competences except for managing patients with vasculitis (Additional file 6), while the explicit inclusion of the individual competences in the national training program was associated with higher self-reported ability in only some procedures and generic competences, but not in clinical competences (Additional file 7).

Association between country of training and self-reported ability and training methods

Through regression analysis, we identified factors associated with self-reported ability for each competence (separate models). Individual country data are available in Additional file 8. In an adjusted model (overall p value for the dummy variables of country), the country of training was significantly associated with self-reported ability in all 21 core competences (Additional file 9). For example, trainees from the UK reported (on average) 2.6 points higher ability in the management of patients with early RA than trainees from Albania and 1.4 points higher than trainees from France. In all 21 competences, formal education and managing >10 patients during training were also associated with increased self-reported ability. On the other hand, undergoing an assessment was associated with increased self-reported ability in only six of the 21 competences analyzed (using disease activity measures, managing a patient with OA, CTD, or vasculitis, performing a US exam and participating in a multidisciplinary team). The length of training was also associated with increased self-reported ability in all competences, but the models had a worse fit compared to the models with country.
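To show how coefficients relative to a reference country can be read, the sketch below computes unadjusted differences in mean self-reported ability against a reference group. In a linear model with country dummies as the only predictors, each dummy coefficient equals this difference in means; the models reported here were additionally adjusted for other covariates, so this is only an unadjusted analogue. The country labels and scores are made-up toy values, not survey results.

```python
from statistics import fmean


def differences_from_reference(scores_by_country, reference):
    """Return mean score of each country minus the mean of the reference country."""
    reference_mean = fmean(scores_by_country[reference])
    return {
        country: fmean(scores) - reference_mean
        for country, scores in scores_by_country.items()
        if country != reference
    }


# Toy NRS scores (hypothetical), with the UK as the reference category.
toy_scores = {
    "UK": [9, 8, 10],
    "Country A": [6, 7, 8],
    "Country B": [8, 8, 8],
}
print(differences_from_reference(toy_scores, reference="UK"))
```

A negative value means lower mean self-reported ability than in the reference country, which is how a statement such as "trainees from the UK reported higher ability than trainees from another country" arises from the fitted coefficients.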

In separate and adjusted regression models, the country of training was associated with differences in the odds of receiving formal theoretical education, of having limited patient experience and of undergoing an assessment in any given competence (Additional file 9). All analyses were repeated including only countries that are members of UEMS, and the results were consistent.

Discussion

In this study we have explored the training experience of young European rheumatologists and trainees from the 41 countries where training is offered. Self-reported ability appears moderately high for most core competences, though no external reference has been found for comparison, not even in other medical specialties. Still, there is room for improvement. Moreover, differences between countries persist even after adjustment. If this reflects real differences in competence achieved during specialty training, the trans-national standard of care for patients with RMDs might be compromised.

Several educational “red flags” have been detected. Results for the identification of crystals by optic microscopy were consistently poor: self-reported ability was low, as was the percentage of trainees receiving education and “sufficient” practical experience. Crystal identification is a mandatory technique for rheumatology trainees according to the UEMS recommendations [3] and to most national training programs. Whether the lack of competence is due to other specialists/laboratory technicians taking over the procedure is unknown; this approach, however, has low reliability [9, 10]. A second procedure, performing MSK US, also showed low self-reported ability. This might reflect the prolonged learning curve for US, as those completing training might not yet have attained sufficient proficiency. However, US is not (yet) considered a mandatory competence at a European level or in many national curricula, so the low self-reported ability could result from lack of access [11] or the acquisition of the competence by only some of the trainees. Surprisingly, ability even in what could be considered a core rheumatologic procedure, knee aspiration, was not optimal, with over 5 % of respondents reporting low ability. Not being able to perform knee aspiration adequately could have a negative impact on other competences such as management of a patient with different types of arthritis or identifying crystals under the microscope. If global deficiencies are to improve, analysis of the causes by national and international organizations should be prioritized and solutions such as further promotion of courses or educational stays at reference centers considered.

Self-reported ability was better for clinical competences than for generic competences. The lowest self-reported ability within the disease areas was in managing patients with CTD, especially those with systemic vasculitis. This could be due to several reasons, as these are less common diseases with potential multi-organ involvement, which can therefore present in a variety of manners. Also, rheumatologists might not be the main providers of care for these patients in some countries, adding to the European diversity. This issue was not addressed in this study.

Beyond global deficiencies, country-specific strengths and weaknesses have also been detected. In order to interpret the results, a minimum background knowledge of rheumatology training programs across Europe is needed. In all countries, a period of general internal medicine training is required, though the timing of the training in internal medicine varies (within or prior to the rheumatology training program). Training length (considered as any prior internal medicine training and the rheumatology training program) spans from 3 to over 8 years. Given the differences in structure, this period provides a better reflection of true training time than the length of the rheumatology training program.

We accepted a broad definition of rheumatology for this project, but diversity in clinical rheumatology practice was documented over two decades ago [12]. This will impact the clinical competences and procedures that each country classes as mandatory during the training. The competences were chosen deliberately to cover a broad spectrum of rheumatology, but in order to maximize participation, the number of competences analyzed was necessarily limited.

Apart from the differences in self-reported ability, some countries have greater reliance on personal study (limited formal education), while in others the trainees are expected to see and manage a greater number of patients. As expected, greater experience increased the self-reported ability of trainees, as did receiving formal education. Even though didactic lectures do not modify physician behavior in continuing medical education, it must be noted that formal education can take different forms, including interactive formats, which do have behavior-modifying potential [13]. Also, postgraduate training comprises a critical educational time, with a need to incorporate vast amounts of knowledge in a relatively short time. The effect of assessments on self-reported ability was inconsistent. However, the type of assessment was not recorded and therefore anything from case discussions to written multiple-choice exams or objective structured clinical examinations could be considered an assessment.

Even though comprehensive discussions were performed during the development and dissemination of this survey, several limitations must be noted. First, in this study we used self-reported ability (also referred to as confidence) as a surrogate marker for competence. In the absence of a pan-European examination, it is impossible to obtain a homogeneous assessment of competence, and self-reported ability could be a reasonable surrogate. However, it should be noted that this is a subjective assessment made by the young rheumatologist/trainee and thereby influenced by many external factors such as the referent against which it is compared (i.e., the standard the respondent considers optimal), the social context (e.g., the attitudes towards medical education), and personal traits (e.g., self-esteem).

Second, we asked trainees to estimate outcomes for the end of their training and rheumatologists to recall the end of their training. This introduces several biases (such as recall bias for young rheumatologists) and imprecision. Also, the survey captured answers from around a quarter of the target population; it is plausible that trainees who answered the survey are more engaged and optimistic than the target population as a whole. The limited number of participants, particularly from some countries, poses some challenges in the interpretation of the results. Country of training consistently and significantly played a role, and the analyses are robust for this conclusion. Nevertheless, because the number of participants per country was limited, particularly in some countries, the coefficients reflecting the magnitude of the difference between countries may be unstable and must therefore be interpreted with caution.

Finally, some of the questions could have led to differences in interpretation. To limit this, we piloted the survey and provided definitions and examples of all terms considered less clear. For example, we provided examples of what should be considered as formal education: “This does not mean that you have seen, managed or discussed patients with these complaints or that you can treat patients with these diseases. Rather we are asking if you have received formal education such as courses, lectures, etc”.

This study suggests that there are significant differences between European countries, not only in training structures and methods, but also in educational outcomes. The differences between countries can be attributed to a number of factors. Length of training does not fully explain the differences between countries. Other factors not assessed in this study (e.g., type of supervision, health care organization, etc.) are potential confounders of this relationship with length of training and should be explored to provide further insight into the differences between countries and the possible intervention strategies.

Homogeneous training might be considered unnecessary or even unwarranted, as national needs differ. However, harmonized training has been encouraged by all European authorities as an essential way to support doctor mobility within the European Union, improve national training, and assure trans-national standards of care for patients. Efforts towards this end have been ongoing for decades in many specialties [14–19]. In rheumatology, the Section and Board of the UEMS has produced several Charters and Educational Training Requirements, and the uptake of these, though currently limited, should be supported.

Other specialties have devised different paths to enhance harmonization, with greater or lesser success. Up to 30 UEMS specialty Boards are providing a (voluntary) European assessment [20]. Even though these are not to be considered as formal specialist qualifications, their quality and recognition have increased significantly and as a result, some countries recognize European assessments as part of (or equivalent to) their national examination. Furthermore, their mere existence has led to an increase in the number of trainees and specialists taking these exams, contributing to a higher standard of knowledge and competence, which may ultimately contribute to better delivery of care. Steps towards an assessment tool that can be used across all rheumatology training programs in the USA have also recently been taken [19]. Within the rheumatology community, further steps in this direction are needed; this can only take place with the involvement and commitment of the different countries and stakeholders.

In summary, this study shows that, even though self-reported ability is high overall for most competences, significant differences in outcome and training methods remain amongst countries. Given the relevance of the issue, further analyses of the extent of the differences in achieved competences are warranted. Initiatives such as the development and implementation of a consensus list of core competences or a multinational examination should be supported and encouraged. Increased knowledge about national training provides the background information necessary to plan successful harmonization attempts.

Conclusions

Trainees across Europe achieve high self-reported ability in most rheumatology competences, but several “red flags” have been identified. Self-reported ability of trainees and training methods differ among European countries. Further harmonization of competences across Europe should be supported to optimize the standard of care.

Abbreviations

- bDMARDs: biologic disease-modifying anti-rheumatic drugs
- CoBaTrICE: competence-based training in intensive care medicine
- CTD: autoimmune connective tissue diseases
- EULAR: European League Against Rheumatism
- MSK: musculoskeletal
- NRS: numerical rating scale
- OA: osteoarthritis
- OP: osteoporosis
- PI: national principal investigator
- RA: rheumatoid arthritis
- RMD: rheumatic and musculoskeletal diseases
- SG: Steering Group
- SpA: spondyloarthritis
- UEMS: European Union of Medical Specialists
- US: ultrasound

References

Directive 2005/36/EC of the European Parliament and of the Council, 7 September 2005, on the recognition of professional qualifications.

da Silva J, Faarvang K, Bandilla K, Woolf A, UEMS Section and Board of Rheumatology. UEMS charter of the training of rheumatologists in Europe. Ann Rheum Dis. 2008;67(4):555–8.

European Board of Rheumatology (a section of UEMS). The European Rheumatology Curriculum Framework http://dgrh.de/fileadmin/media/Praxis___Klinik/european_curriculum_uems_april_2008.pdf [27 May 2014].

UEMS/EBR. Training requirements for the specialty of rheumatology http://www.eular.org/myUploadData/files/European-Training-Requirements-in-Rheumatology%20endorsed%20UEMS%20April%2012%202014.pdf [24 Sept 2014].

Naredo E, D’Agostino M, Conaghan P, Backhaus M, Balint P, Bruyn G, et al. Current state of musculoskeletal ultrasound training and implementation in Europe: results of a survey of experts and scientific societies. Rheumatology. 2010;49:2438–43.

Demirkaya E, Ozen S, Turker T, Kuis W, Saurenmann R. Current educational status of paediatric rheumatology in Europe: the results of PReS survey. Clin Exp Rheumatol. 2009;27:685–90.

Sivera F, Ramiro S, Cikes N, Dougados M, Gossec L, Kvien TK, et al. Differences and similarities in rheumatology specialty training programmes across European countries. Ann Rheum Dis. 2015;74(6):1183–7.

Bandinelli F, Bijlsma J, Ramiro M, Elfving P, Goekoop-Ruiterman Y, Sivera F, et al. Rheumatology education in Europe: results of a survey of young rheumatologists. Clin Exp Rheumatol. 2011;29:843–5.

von Essen R, Holtta AM, Pikkarainen R. Quality control of synovial fluid crystal identification. Ann Rheum Dis. 1998;57(2):107–9. Epub 1998/06/05. eng.

Lumbreras B, Pascual E, Frasquet J, Gonzalez-Salinas J, Rodriguez E, Hernandez-Aguado I. Analysis for crystals in synovial fluid: training of the analysts results in high consistency. Ann Rheum Dis. 2005;64(4):612–5.

Garrood T, Platt P. Access to training in musculoskeletal ultrasound: a survey of UK rheumatology trainees. Rheumatology. 2009;49:391.

Grahame R, Woolf A. Clinical activities: an audit of rheumatology practice in 30 European centres. Br J Rheumatol. 1993;32 Suppl 4:22–7.

Davis D, O’Brien MA, Freemantle N, Wolf FM, Mazmanian P, Taylor-Vaisey A. Impact of formal continuing medical education: do conferences, workshops, rounds, and other traditional continuing education activities change physician behavior or health care outcomes? JAMA. 1999;282(9):867–74.

Barret H, Bion J. An international survey of training in adult intensive care medicine. Intensive Care Med. 2005;31(4):533–61.

CoBaTrICE Collaboration, Bion J, Barret H. Development of core competencies for an international training programme in intensive care medicine. Intensive Care Med. 2006;32(9):1371–83.

Cranston M, Slee-Valentijn M, Davidson C, Lindgren S, Semple C, Palsson R, et al. Postgraduate education in internal medicine in Europe. Eur J Intern Med. 2013;24:633–8.

Knox A, Gilardino M, Kasten S, Warren R, Anastakis D. Competency-based medical education for plastic surgery: where do we begin? Plast Reconstr Surg. 2014;133(5):702e–10e.

European Society of Cardiology. ESC core curriculum for the general cardiologist (2013). Eur Heart J. 2013;34:2381–411.

Criscione-Schreiber L, Bolster M, Jonas B, O’Rourke K. Competency-based goals, objectives and linked evaluations for rheumatology training programs: a standardized template of learning activities from the Carolinas Fellows Collaborative. Arthritis Care Res. 2013;65(6):846–53.

Mathysen D, Rouffet J, Tenore A, Papalois V, Sparrow O, Goldik Z, et al. UEMS-CESMA guideline for the organisation of European postgraduate medical assessments. https://www.uems.eu/__data/assets/pdf_file/0018/24912/UEMS-CESMA-Guideline-for-the-organisation-of-European-postgraduate-medical-assessments-Final.pdf. Accessed 14 Sept 2015.

Acknowledgements

We gratefully acknowledge the help provided by EMEUNET (Emerging EULAR Network) and by the national rheumatology societies of all European countries in the dissemination and distribution of the survey. Members of the Working Group on Training in Rheumatology across Europe are: Ledio Çollaku, Rheumatology, Clinic of Internal Medicine, University Hospital Center “Mother Teresa”, Tirana (Albania); Armine Aroyan (Armenia); Helga Radner (Austria); Anastasyia Tushina (Belarus); Ellen De Langhe, Department of Rheumatology, University Hospital Leuven, Leuven (Belgium); Sekib Sokolovic (Bosnia & Herzegovina); Russka Shumnalieva, Department of Internal Medicine, Clinic of Rheumatology, Medical University-Sofia (Bulgaria); Marko Baresic, Division of Clinical Immunology and Rheumatology, Department of Internal Medicine, School of Medicine, University Hospital Center Zagreb (Croatia); Ladislav Senolt, Institute of Rheumatology and Department of Rheumatology, First Faculty of Medicine, Charles University in Prague (Czech Republic); Mette Holland-Fischer, Department of Rheumatology, Aalborg University Hospital (Denmark); Mart Kull (Estonia); Antti Puolitaival, Department of Medicine, Kuopio University Hospital, Kuopio (Finland); Nino Gobejishvili (Georgia); Axel Hueber, Department of Internal Medicine 3, University of Erlangen-Nuremberg (Germany); Antonis Fanouriakis, Department of Rheumatology and Clinical Immunology, “Attikon” University Hospital, Athens (Greece); Paul MacMullan, Clinical Assistant Professor, Division of Rheumatology, University of Calgary, Alberta, Canada (Ireland); Doron Rimar (Israel); Serena Bugatti, Division of Rheumatology, University of Pavia, IRCCS Policlinico San Matteo Foundation, Pavia (Italy); Julija Zepa (Latvia); Jeanine Menassa (Lebanon); Diana Karpec (Lithuania); Snezana Misevska-Percinkova (Macedonia); Karen Cassar, Department of Medicine, Mater Dei Hospital, Malta (Malta); Elena Deseatnicova (Moldova); Sander W Tas, Amsterdam Rheumatology and immunology Center, Department of Clinical Immunology & Rheumatology, Academic Medical Center/University of Amsterdam, Amsterdam (Netherlands); Elisabeth Lie (Norway); Jan Sznajd, Jagiellonian University Medical College, Krakow (Poland); Florian Berghea (Romania); Anton Povzun, Scientific Research Institute of Emergency Care n.a. I.I. Dzhanelidze, Saint-Petersburg (Russia); Ivica Jeremic (Serbia); Vanda Mlynarikova (Slovakia); Mojca Frank-Bertoncelj, Center of Experimental Rheumatology, University Hospital Zurich (Slovenia); Katerina Chatzidionysiou, Rheumatology Department, Karolinska University Hospital, Karolinska Institute, Stockholm (Sweden); Alexandre Dumusc (Switzerland); Gulen Hatemi (Turkey); Erhan Ozdemirel, Ankara University Medical Faculty, PMR Department, Rheumatology Division, Ankara (Turkey); and Iuliia Biliavska (Ukraine).

Funding

This project was financially supported by EULAR.

Authors’ contributions

FS and SR conceived the study and participated in its coordination. FS, SR, NC, MC, MD, LG, TKK, IEL, PM, AM, SP, JAPS, and JWB participated in the design of the study. FS, SR, LG, PM, AM, and the study group took part in the data collection. SR performed the statistical analysis. FS and SR drafted the manuscript; all authors revised and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Additional information

An erratum to this article is available at http://dx.doi.org/10.1186/s13075-016-1199-3.

Additional files

Additional file 1:

Survey of the project “Assessment of training for Rheumatology fellows across Europe”. (DOCX 28 kb)

Additional file 2: Table S1.

Response numbers and rate per country. (DOCX 16 kb)

Additional file 3: Table S2.

Number and percentage of respondents with very low self-reported ability per competence. (DOCX 14 kb)

Additional file 4: Table S3.

Comparison of self-reported ability in each competence in respondents managing ≤10 patients and those managing >10 patients in the corresponding competence during their training. (DOCX 15 kb)

Additional file 5: Table S4.

Comparison of self-reported ability in each competence in respondents with and without assessment in the corresponding competence. (DOCX 15 kb)

Additional file 6: Table S5.

Comparison of self-reported ability in each competence in respondents with a long and short length of training (previous training in internal medicine plus rheumatology training program). (DOCX 15 kb)

Additional file 7: Table S6.

Comparison of self-reported ability in each competence in respondents whose national training program explicitly included the competence and those whose national training program did not. (DOCX 15 kb)

Additional file 8: Table S7.

Self-reported ability per individual country. (DOCX 23 kb)

Additional file 9: Table S8.

Factors associated with self-reported ability and with limited experience in early rheumatoid arthritis/undifferentiated arthritis (≤10 patients) and in performing knee aspiration (≤10 procedures). (DOCX 18 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Sivera, F., Ramiro, S., Cikes, N. et al. Rheumatology training experience across Europe: analysis of core competences. Arthritis Res Ther 18, 213 (2016). https://doi.org/10.1186/s13075-016-1114-y

DOI: https://doi.org/10.1186/s13075-016-1114-y