Abstract

Background

In response to the COVID-19 pandemic and associated adoption of scarce resource allocation (SRA) policies, we sought to rapidly deploy a novel survey to ascertain community values and preferences for SRA and to test the utility of a brief intervention to improve knowledge of and values alignment with a new SRA policy. Given social distancing and precipitous evolution of the pandemic, Internet-enabled recruitment was deemed the best method to engage a community-based sample. We quantify the efficiency and acceptability of this Internet-based recruitment for engaging a trial cohort and describe the approach used for implementing a health-related trial entirely online using off-the-shelf tools.

Methods

We recruited 1971 adult participants (≥ 18 years) via engagement with community partners and organizations and outreach through direct and social media messaging. We quantified the response rate and participant characteristics of our sample, examined sample representativeness, and evaluated potential non-response bias.

Results

Recruitment derived in roughly similar proportions from direct referral by partner organizations and from broader social media-based outreach, with extremely low study entry from organic (non-invited) search activity. Of social media platforms, Facebook was the highest-yield recruitment source. Bot activity was present but minimal and identifiable through metadata and engagement behavior. Recruited participants differed from broader populations in terms of sex, ethnicity, and education, but had similar prevalence of chronic conditions. Retention was satisfactory, with entrance into the first follow-up survey for 61% of those invited.

Conclusions

We demonstrate that rapid recruitment into a longitudinal intervention trial via social media is feasible, efficient, and acceptable. Recruitment in conjunction with community partners representing target populations, and with outreach across multiple platforms, is recommended to optimize sample size and diversity. Trial implementation, engagement tracking, and retention are feasible with off-the-shelf tools using preexisting platforms.

Trial registration

ClinicalTrials.gov NCT04373135. Registered on May 4, 2020


Background

The novel severe acute respiratory syndrome coronavirus (SARS-CoV-2) publicly emerged in December 2019 and has since rapidly spread throughout the world, constituting a major pandemic. Early in the pandemic, concern for health system capacity and virus containment prompted many health officials, universities, and hospitals to undertake development of scarce resource allocation (SRA) policies [1]. These policies outline rules for distribution of limited resources, such as ventilators or hospital beds, and aim to do so in a way that is both consistent and ethical, with imperatives for maximizing benefits, equal treatment, and prioritization on instrumental value and need that can be operationalized in a number of ways [2]. Though medical ethicists have written extensively on how to construct such a framework, there are limited studies that aim to evaluate and intervene on knowledge of and agreement with these ethical principles during an active pandemic when the threat of their application is extant [3].

Conducting such a study requires reaching both the populations who may be subject to allocation decisions and those who may be responsible for applying them, and both groups can be hard to reach during an active crisis. There is evidence that social media can be the best recruitment method for hard-to-reach populations and, in many cases, can be as effective as traditional recruitment [4,5,6,7]. Social media-delivered behavioral interventions have the potential to reduce the expense of more traditional interventions by eliminating the need for direct participant contact (itself a necessity during the pandemic) and to increase access to diverse participants who may not be accessible via clinic-based recruitment [8]. Although social media is still a burgeoning recruitment and delivery method, researchers are increasingly outlining rigorous methodological and ethical considerations for its use [9,10,11]. These methods have proven especially useful for rapid recruitment into COVID-related studies during the current pandemic [12].

We sought to utilize Internet-based methods for engaging community participants and health care workers in completing a trial testing an educational intervention designed to influence knowledge of SRA policies and trust in institutional implementation of these policies during an ongoing pandemic. In this report, we outline detailed methods for recruitment strategies and implementation of an entirely online trial, including considerations for data fidelity and ethical oversight, with the use of widely available tools; we further summarize strengths and limitations of our approach and make recommendations for utilization of these methods in future work.

Methods

The UC-COVID (Understanding Community Considerations, Opinions, Values, Impacts, and Decisions for COVID-19) study is a community engagement study undertaken to characterize health and access to care during the COVID-19 pandemic. This study features an educational trial component to test the ability of a novel intervention to impact knowledge of and trust in institutional capacity to implement ethical allocation of scarce resources. We adopted a broad social media-based recruitment strategy in which we collaborated with community organizations, disease advocacy groups, and professional societies to invite study participation by direct messaging from organizations to their members; we also employed targeted social media posts and referrals from participants. Though our recruitment strategy primarily focused on groups in California (71% of our sample), eligibility was not restricted by location and all adults (age ≥ 18) were eligible. Recruitment for the survey opened on May 8, 2020, and closed on September 30, 2020, though 99% of respondents entered the survey between May and August. This study was approved by the Institutional Review Board and informed consent was obtained from all participants. This study was registered with ClinicalTrials.gov (NCT04373135).

Study design and procedures

To promote recruitment, we established partnerships with several disease advocacy groups (e.g., COPD Foundation, Taking Control of Your Diabetes, Pulmonary Hypertension Association, Vietnamese Cancer Foundation, AltaMed) and professional societies (e.g., California Thoracic Society, American Thoracic Society, Society for General Internal Medicine). Investigators contacted these groups, presented aligned goals, and proposed utilizing their networks for targeted study recruitment. Recruitment messages were primarily posted on social media accounts, message boards, and via direct newsletters to the email distribution lists of networks and partner groups. Broader study promotion was also achieved through “sharing” of study information via personal/professional social networks of study investigators, colleagues/institutions, and participants (website analytics revealed that visitors shared study information 279 times via embedded share applet—similar to snowball sampling). Recruitment messages included IRB-approved language to promote the study (brief descriptions, inclusion criteria) and provide a link directing participants to a hosted study website. The study website included general study information (including IRB and trial registration information), research team contact information, and a share applet that allowed users to send an email or create a social media post linking back to the landing page. We tracked study website traffic (user and page views) during the recruitment period, including device on which the page was accessed (mobile, tablet, desktop), referral source, language, and location.

The study website directed participants to click an outbound link that transferred them to a Research Electronic Data Capture [13, 14] (REDCap, Nashville, TN; May 8, 2020, to June 19, 2020) or Qualtrics (Provo, UT) survey (June 19, 2020, to study close), hosted on secure servers at UCLA. Though we initially implemented our study in English only, to expand our ability to include diverse participants we utilized professional translation services (International Contact, Inc.; Berkeley, CA) to translate our study (website, consent, and survey) into the five most commonly spoken foreign languages in California (Spanish, Mandarin Chinese, Korean, Tagalog, and Vietnamese). As REDCap did not have native support for translation of the command buttons for the survey (e.g., “Next,” “Submit”), we migrated the survey to Qualtrics to facilitate full translation of the survey and interface.

After entering the survey, respondents first viewed an online consent form with language included in a typical written consent. Of the 2844 survey initiations (Fig. 1), 362 (12.7%) entries from “bots” and 82 (2.9%) duplicate participant entries were excluded; 2384 respondents affirmed consent via the online form, 15 respondents declined consent, and 1 respondent exited the survey without affirming or declining consent. Of those who consented, 413 (17.3%) respondents did not continue the survey beyond that point, resulting in 1971 (82.7%) consented, active participants. One thousand five hundred forty (78.1%) participants completed the baseline assessment through at least part of the section on SRA policies and thus were eligible for pre/post comparison of key trial outcomes.
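The participant flow described above can be reconciled arithmetically. The following is a minimal Python sketch that uses only the counts reported in this paragraph; the variable names and the `pct` helper are illustrative assumptions and are not part of the study's own tooling.

```python
# Counts as reported in the text (Fig. 1); all names here are illustrative.
initiations = 2844
bot_entries = 362
duplicate_entries = 82
consented = 2384
declined = 15
exited_without_decision = 1
stopped_after_consent = 413
reached_sra_section = 1540


def pct(numerator, denominator):
    """Return a percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


# Entries left after exclusions should match the entries with a consent outcome.
screened = initiations - bot_entries - duplicate_entries
consent_outcomes = consented + declined + exited_without_decision

# Consented respondents who continued past the consent form.
active = consented - stopped_after_consent

flow = {
    "bot_pct": pct(bot_entries, initiations),
    "duplicate_pct": pct(duplicate_entries, initiations),
    "stopped_pct": pct(stopped_after_consent, consented),
    "active_pct": pct(active, consented),
    "sra_eligible_pct": pct(reached_sra_section, active),
}
print(screened, consent_outcomes, active, flow)
```

Note that the denominators differ by step: exclusions are expressed relative to all survey initiations, continuation relative to consented respondents, and pre/post eligibility relative to active participants.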

Baseline participants who did not complete on their first attempt (and who provided an email address) received a reminder email 4 weeks after their last activity, then weekly for 3 weeks (for up to four total invitations) thereafter to remind/encourage survey completion.

Assessing data validity

The Qualtrics platform has a built-in option (“Prevent Ballot Box Stuffing”) that is designed to prevent duplicative entries by placing a cookie on the browser of participants during their first entry into the survey. If the same respondent comes back on the same browser and device, without having cleared their cookies, they are flagged as a duplicate and not permitted to take the survey again. However, clearing browser cookies, switching to a different web browser, using a different device, or using a browser in “incognito” mode would all allow a participant to enter the survey again. As such, we additionally relied on embedded data to identify potential fraudulent entries for records attached to IP addresses that were duplicated in the data greater than four times; three of four instances were suspected to be the result of bots (fraudulent activity) [15] and discarded from the data. In the first instance, one IP address (geo-tagged to a location in China) contributed 172 attempted survey entries, none of which progressed in the survey beyond the consent page, that were all submitted within a 24-min window. In the second and third instances, two IP addresses contributed 121 and 69 attempted survey entries, none of which progressed past the demographics section of the survey, all included similarly formatted email addresses (random word + four random letters @ domain), including some emails that were duplicated across these two IP addresses. The final instance included 19 records with unique and valid emails; these records were determined to be valid and submitted by unique individuals using a shared server. 
Invalid records submitted by bots were largely consistent with each other (e.g., 100% identified as health care workers, 100% reported an age between 30 and 33) and, compared to valid records, were more likely to report younger age, male sex, divorced/widowed/separated marital status, a bachelor’s degree, current employment, and a military background (data not shown).
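The metadata screen described above (flagging IP addresses that recur more than four times, then inspecting survey progress and email patterns) might be sketched as follows. This is an illustration only, not the procedure the study team ran: the record fields `ip`, `email`, and `last_section` are hypothetical names, and the example data are synthetic.

```python
from collections import defaultdict


def summarize_repeated_ips(records, threshold=4):
    """Return a summary for every IP address seen more than `threshold` times."""
    by_ip = defaultdict(list)
    for record in records:
        by_ip[record["ip"]].append(record)

    flagged = {}
    for ip, recs in by_ip.items():
        if len(recs) > threshold:
            emails = {r["email"] for r in recs if r.get("email")}
            flagged[ip] = {
                "n_records": len(recs),
                "n_unique_emails": len(emails),
                # Furthest survey section reached by any record from this IP.
                "max_section": max(r["last_section"] for r in recs),
            }
    return flagged


def emails_shared_across_ips(records):
    """Return email addresses that appear under more than one IP address."""
    ips_by_email = defaultdict(set)
    for record in records:
        if record.get("email"):
            ips_by_email[record["email"]].add(record["ip"])
    return {email for email, ips in ips_by_email.items() if len(ips) > 1}


# Synthetic example data (documentation-range IPs, made-up emails).
records = (
    [{"ip": "203.0.113.5", "email": None, "last_section": 0} for _ in range(6)]
    + [{"ip": "198.51.100.7", "email": "applexqzt@example.com", "last_section": 1}
       for _ in range(5)]
    + [{"ip": "198.51.100.9", "email": "applexqzt@example.com", "last_section": 1}]
    + [{"ip": "192.0.2.10", "email": "person@example.org", "last_section": 5}]
)
flagged = summarize_repeated_ips(records)
shared = emails_shared_across_ips(records)
print(flagged)
print(shared)
```

A flag from such a screen is a prompt for manual review rather than an automatic exclusion: in the study, one of the four repeated-IP instances (19 records with unique, valid emails from a shared server) was judged to be legitimate.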

Follow-up surveys

Following consent, respondents were asked to provide an email address for eligibility to receive a gift card and to receive follow-up surveys; respondents could still participate in the baseline survey if they did not provide an email (N = 222 did not provide an email). Follow-up invitations were sent in batches by month of baseline survey entry beginning in the second week of August, so follow-up surveys were predominantly completed 2–3 months after the baseline survey; the first follow-up survey was closed in December. Participants received an email with a unique link to participate in the first follow-up survey; participants who did not complete the survey after the original invitation subsequently received weekly reminder emails for three additional attempts (for up to four total invitations) or until they completed the survey. Of 1749 provided e-mail addresses, only 1550 invitations were initially sent: 18 e-mails were returned as undeliverable and, owing to an unidentified error, 181 e-mails were marked as “not sent” at the close of the pre-programmed Qualtrics distribution. Of follow-up invitations sent, 19 respondents opted out or declined to participate in the follow-up survey and 592 did not respond to the follow-up requests, resulting in 939 (60.6%) entries into the first follow-up survey. Participants were sent a second follow-up survey via automated email invitation in January 2021, with up to 4 automated reminders to complete.

Participants who provided email addresses were entered in a raffle to win one of twenty-five (25) $100 gift cards for an online retailer; participants who complete two surveys receive one entry and those who complete all three surveys receive two entries.

Intervention

During the first follow-up survey, respondents from California were automatically randomized to receive either a brief educational video explaining SRA policies or no intervention using a randomization module programmed into the survey that executed a stratified randomization scheme based on health care worker status, gender, age, race, ethnicity, and education. As the intervention was based on the policy developed by the University of California system (one of the largest providers in the state, with 10 campuses, five medical centers, and three affiliated national laboratories) and our hypotheses focused on the intervention’s impact for California adults (i.e., those who could potentially be the subject of allocation decisions and/or may be responsible for applying allocation decisions per the University of California policy), only respondents who reported residing in California were randomized. As those outside California may be subject to different SRA policies, we elected not to expose them to information about a policy that was potentially not relevant to them and so did not randomize them (“negative controls”).
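To illustrate how a stratified scheme of this kind can be executed automatically at survey entry, the sketch below uses permuted blocks of size two within each stratum. The source does not describe the internals of the survey's randomization module, so the block design, the field names (`state`, `hcw`, `age_group`, and so on), and the arm labels are all assumptions made for illustration.

```python
import random
from collections import defaultdict

# Hypothetical field names for the stratification factors named in the text.
STRATA_FIELDS = ("hcw", "gender", "age_group", "race", "ethnicity", "education")


class StratifiedRandomizer:
    """Assign arms using permuted blocks within each stratum (assumed design)."""

    def __init__(self, arms=("video", "control"), seed=None):
        self.arms = tuple(arms)
        self.rng = random.Random(seed)
        # stratum -> assignments still unused in the current block
        self.pending = defaultdict(list)

    def assign(self, participant):
        # Only California residents are randomized; others are left unassigned.
        if participant.get("state") != "CA":
            return None
        stratum = tuple(participant[field] for field in STRATA_FIELDS)
        if not self.pending[stratum]:
            block = list(self.arms)
            self.rng.shuffle(block)
            self.pending[stratum] = block
        return self.pending[stratum].pop()


# Hypothetical participant used to demonstrate the behavior.
example = {
    "state": "CA", "hcw": True, "gender": "female", "age_group": "30-44",
    "race": "white", "ethnicity": "non-Hispanic", "education": "bachelor",
}
randomizer = StratifiedRandomizer(seed=2020)
first = randomizer.assign(example)
second = randomizer.assign(example)
outside = randomizer.assign({**example, "state": "NY"})
print(first, second, outside)
```

With blocks of size two, any two consecutive participants in the same stratum receive different arms, which keeps the arms balanced within strata even when some strata are small.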

Participants randomized to the intervention were automatically shown the intervention video, which was housed on a private Vimeo (New York, NY) channel and embedded in the survey. The video (6 min 30 s long) was animated by a professional video production company (WorldWise Productions, Los Angeles, CA) and covered key topics of public health ethics, policy development, and a summary of how the University of California’s SRA policy would be implemented during a crisis. A copy of the video is available upon request. In addition to viewing the intervention video, participants randomized to treatment were also shown five additional survey questions to assess their impressions of the intervention; all other content of the follow-up survey was identical to controls.

Safety

At the completion of each survey, participants were directed to a “Thank you” page that additionally included a message directing them to contact the study team with any questions or concerns, including information on how to do so. Participants were also instructed to reach out to their personal health care or mental health provider if they experienced discomfort or distress and were provided with the website and phone number for the National Suicide Prevention Lifeline in the event they were in crisis.

External comparison

To determine the extent to which our non-probability sample is representative of the larger population from which our sample was drawn (primarily California adults, but also US adults), we compared our sample to respondents from 2019 Behavioral Risk Factor Surveillance System (BRFSS) [16]. Our survey used a number of BRFSS questions (see below) to facilitate comparison.

Survey measures

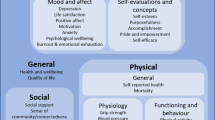

Survey data included information on demographics (sections 1 and 6), health and health behaviors (section 2), access to care (section 3), experience with COVID-19/coronavirus (section 4), and SRA policies (section 5). The baseline questionnaire was the longest (approximately 35 min to complete), while subsequent surveys were designed to be shorter (approximately 15 min to complete).

Respondents first self-reported their status as a health care worker (Are you a health care professional? Examples include: physician/doctor, nurse, pharmacist, respiratory therapist, rehab specialist, psychologist, clinical social worker, or hospital chaplain. If you are a health professional student (pre-degree or certificate) please select “no” for the purposes of this survey.) Those who identified themselves as a health care worker received different survey items than non-health care workers. All participants were also asked to report their employment status, educational attainment, gender identity, year of birth, race, ethnicity, health insurance, place of residence, marital status, and if they had children. Health care workers were also asked to identify their specialty and tenure. A shorter version of this section was administered in the follow-up surveys to assess changes to employment and insurance.

The second section of the survey ascertained information on health and health behaviors; the majority of these questions were drawn from the BRFSS [16]. Information included diagnosed chronic conditions, self-reported general health status (5-point Likert scale from “poor” to “excellent”), number of days in the past 30 days where mental health was “not good” (“Now thinking about your mental health, which includes stress, depression, and problems with emotions…”) and where physical health was “not good” (“Now thinking about your physical health, which includes physical illness and injury…”), screeners for depression (PHQ-2) [17] and anxiety (GAD-2) [18], a single item on sleep from the PHQ-9 [19], and a comparison of respondents’ current mental health with the same time last year. Respondents were asked about alcohol use in the past 30 days (number of days of use, number of drinks per occasion), cigarette [20] and e-cigarette [21] use in the past 30 days, and exercise in the past 30 days; they were also asked to report recent changes in these behaviors and whether COVID-19 was a cause. A shorter version of this section was administered in the follow-up surveys to assess physical and mental health. Self-identified health care workers were also asked about burnout in subsequent surveys [22].

The third section focused on access to care; all participants were asked about receipt of an influenza vaccination for the 2019 season, if they had a personal doctor, and the time they last saw their personal doctor. Those who previously reported any chronic medical condition were asked a set of novel questions about the impact of COVID-19 and related social distancing on their disease management and symptoms. Non-health care workers were asked an additional series of novel questions about changes in their ability to access health care during COVID-19, delayed or forgone care during COVID-19, and changes in the use of prescription and over-the-counter medications during COVID-19. This section was not administered during the first follow-up survey and an abbreviated version was administered at the second follow-up survey.

The fourth section focused on COVID impacts and asked respondents about their knowledge of government regulation of activities during the pandemic, personal protective behaviors, COVID-19 information seeking [23], COVID-19 related stress [23], and perceived personal risk from COVID-19. At follow-up, this section additionally contained questions about COVID testing and vaccination.

The fifth and longest section focused on awareness/knowledge of SRA policies, alignment with values governing SRA policies, preferences for SRA implementation and communication, and trust/anxiety for SRA. All SRA questions were novel but demonstrated acceptable psychometric properties (e.g., Cronbach’s alphas ranging from 0.5666 to 0.8954; Appendix). A shorter version of this section was administered in the follow-up surveys.
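For readers unfamiliar with the internal-consistency statistic cited above, Cronbach's alpha for a scale of k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The Python sketch below implements that standard formula; the response matrix is invented for illustration and does not come from the study data, and the study's own estimates were produced in SAS.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    n_items = len(rows[0])
    if n_items < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    if any(len(row) != n_items for row in rows):
        raise ValueError("every respondent must answer every item")

    # Sample variance of each item (column) and of the summed scale score.
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Invented responses: five respondents answering three 5-point items.
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 1],
    [3, 3, 3],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

Values closer to 1 indicate that the items move together; items that are perfectly consistent with one another give an alpha of exactly 1.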

The final section asked optional personal questions regarding COVID-19 experiences [24] (exposure to COVID-19), disability status [25], advanced care planning, general sources of news/information [26], the experience of discrimination in health care, and other personal characteristics [27] (e.g., religion, sexual orientation, political identity). This section was not administered in the follow-up surveys.

Statistical analysis

All analyses were performed using SAS 9.4 (Cary, NC). Summary statistics were used to describe website traffic information based on analytics derived from the hosting platform (Squarespace) and from Google Analytics; correlations between website traffic and respondent counts by geography were evaluated. To ascertain the representativeness of the sample, we calculated and compared descriptive statistics for participants (N = 1971), BRFSS respondents from California (N = 11,613), and BRFSS respondents from all 50 states and Washington DC (N = 409,810); BRFSS prevalence data are weighted to represent the US adult populations. Finally, differential non-response was evaluated by comparing characteristics of those with complete (into SRA section) vs incomplete data at baseline. Differences were assessed using appropriate two-sided bivariate tests with a 0.05 alpha criterion.
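As an illustration of the geographic comparison, Pearson's correlation between website traffic and respondent counts could be computed as in the sketch below. The study's analyses were run in SAS; this Python version is only a stand-in, and the per-state counts are made-up numbers rather than study data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(ss_x * ss_y)


# Hypothetical per-state counts: website sessions and survey respondents.
sessions = [5200, 310, 280, 150, 90]
respondents = [1400, 95, 70, 40, 30]
r = pearson_r(sessions, respondents)
print(round(r, 3))
```

A coefficient near 1 would indicate that states contributing more website traffic also contributed proportionally more respondents, which is the pattern interpreted in the paper as similar response rates across geography.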

Results

As only about 1% of website traffic originated from organic searches, compared to 55–60% from direct website entry or referral and 40–45% from social media recruitment, the likelihood of uninvited survey participation (i.e., respondents who did not enter the survey as a direct result of targeted recruitment efforts) is relatively low (Table 1). Observed website traffic reflected the shift in recruitment strategy from outreach through partner organizations from May through July to broader social media-based recruitment in August. Among social media platforms, Facebook was the highest-yield recruitment source (85% of website traffic originating from social media). Website traffic was predominantly from California but still geographically diffuse, suggesting substantial national reach of recruitment efforts, and was highly correlated with place of residence reported by respondents, indicating similar response rates across geography (Fig. 2).

Fig. 2 Geographic coverage of UC-COVID recruitment. Google Analytics coverage map showing website traffic from May 1, 2020, to September 30, 2020, from metro areas of the USA (traffic from outside the USA not shown), with inset table showing Pearson’s correlation between traffic from Squarespace, traffic from Google Analytics, and respondents’ survey-reported residence at the country and state (USA only) levels.

Characteristics of recruited participants differed from the population of California and from the US population as a whole (Table 2). Compared to the adult population of California, study participants (71.0% from California) were more likely to identify as female, less likely to identify as Hispanic, and more likely to report having a bachelor’s degree. Despite being slightly more likely to report health insurance coverage and having a personal health care provider, study participants did not differ from adults in California with respect to the prevalence of chronic conditions or the likelihood of having a routine check-up in the prior year. Similar distinctions were observed when comparing study participants to the adult population of the USA.

Respondents who did versus did not complete the baseline SRA questions also varied by sociodemographics (Table 3). Participants with complete SRA data were more likely to be younger, white non-Hispanic, currently employed, married or partnered, and insured, and were more likely to have a bachelor’s degree and to be living with a chronic condition.

Discussion

We demonstrate that rapid recruitment of a large cohort into a longitudinal educational trial via outreach through social media is feasible, efficient, and acceptable to participants. While recruitment through partner organizations was advantageous, broader social media-based outreach was useful for expanding the number of recruited participants; diversified recruitment efforts may also be useful for maximizing sample diversity. Tracking of initial engagement with recruitment posts is facilitated by off-the-shelf software and preexisting platforms.

An emerging evidence base describes opportunities, such as rapidity, and shortcomings in Internet-enabled research, such as potential selection bias. This study is similarly subject to many of the potential biases reported elsewhere [28, 29]. Most notable are the potential issues with generalizability when the study sampling frame is an Internet-engaged audience [30]; though the digital divide has transformed over time [31], there are clear selection biases stemming both from Internet-based recruitment and voluntary participation with limited remuneration. Indeed, we found that our sample was more likely to be female and less likely to be ethnically diverse, as with many Internet-recruited samples, but a considerable source of deviation from characteristics of the general adult population potentially stemmed from targeted recruitment of health care workers [32, 33], and thus over-representation of college-educated and employed individuals. Examination of attrition revealed that loss from website engagement to survey participation was largely non-differential with respect to geography but that survey completion was linked to differences in many of the same characteristics that were associated with initial engagement. Despite these limitations, our sample was relatively representative with respect to age, certain racial subgroups, and, importantly, presence of chronic medical conditions. Overall, such comparisons within and between the sample and target population, while not definitive, are helpful to assess the extent to which otherwise diffuse (non-probability-based) recruitment was effective for constructing a minimally-biased sample (compared to expectations for Internet-recruited samples) and we strongly recommend designing similar comparisons in future work.

Despite these common sampling limitations with participatory research, we were still able to recruit and retain a number of respondents from smaller subgroups given our larger sample size and concerted efforts to partner with community organizations representing diverse subpopulations. Any future work in this area should strive to adopt a truly community-based participatory research (CBPR) approach in order to maximize reach among communities who are typically under-represented in research. We identified that offering to share data summaries with partners to “close the loop” and ensure shared use of study information, consistent with CBPR principles, was one effective method for engaging recruitment partners. Further efforts to improve recruitment and retention across socioeconomic strata could be enhanced by employing guaranteed remuneration. Of note, both overall recruitment and recruitment of various subgroups may have been enhanced by the timeliness of this survey’s topic; for studies of other health issues, additional efforts highlighting the importance of the topic for target populations may be needed to boost recruitment.

In prior work using similar recruitment methods [7, 15], identification of bots or other sources of invalid records has been of particular concern, and several methods for such identification have been utilized. Importantly, we found that even with survey platform tools (such as the “Prevent Ballot Box Stuffing” option), additional attention to records’ metadata is critical to identify residual entries submitted by bots. Future studies should consider how these tools could be applied in concert to generate a maximally valid sample.

Conclusions

The unique challenges of diffuse, non-probability-based recruitment via social media can raise concerns about efficiency, generalizability, and validity. We demonstrate the feasibility of implementing a rapidly deployed yet rigorous trial that engaged and retained a large cohort using off-the-shelf tools. In light of these successes in virtually recruiting for and conducting an educational trial, researchers may wish to implement similar strategies and reporting methods for future studies.

Availability of data and materials

The datasets generated and/or analyzed during the current study are not publicly available as IRB approval was granted with the stipulation that data would only be available to the study team (those listed on the approved IRB protocol with appropriate human subjects certification) but are available from the corresponding author on reasonable request. BRFSS data are publicly available from the CDC.

References

Cleveland Manchanda EC, Sanky C, Appel JM. Crisis standards of care in the USA: a systematic review and implications for equity amidst COVID-19. J Racial Ethn Health Disparities. 2020;13:1–13.

Emanuel EJ, Persad G, Upshur R, Thome B, Parker M, Glickman A, et al. Fair allocation of scarce medical resources in the time of COVID-19. N Engl J Med. 2020;382(21):2049–55. https://doi.org/10.1056/NEJMsb2005114.

Fallucchi F, Faravelli M, Quercia S. Fair allocation of scarce medical resources in the time of COVID-19: what do people think? J Med Ethics. 2021;47(1):3–6.

Topolovec-Vranic J, Natarajan K. The use of social media in recruitment for medical research studies: a scoping review. J Med Internet Res. 2016;18(11):e286. https://doi.org/10.2196/jmir.5698.

Khatri C, Chapman SJ, Glasbey J, Kelly M, Nepogodiev D, Bhangu A, et al. Social media and internet driven study recruitment: evaluating a new model for promoting collaborator engagement and participation. PLoS One. 2015;10(3):e0118899. https://doi.org/10.1371/journal.pone.0118899.

Darmawan I, Bakker C, Brockman TA, Patten CA, Eder M. The role of social media in enhancing clinical trial recruitment: scoping review. J Med Internet Res. 2020;22(10):e22810. https://doi.org/10.2196/22810.

Wisk LE, Nelson EB, Magane KM, Weitzman ER. Clinical trial recruitment and retention of college students with type 1 diabetes via social media: an implementation case study. J Diabetes Sci Technol. 2019;13(3):445–56. https://doi.org/10.1177/1932296819839503.

Pagoto S, Waring ME, May CN, Ding EY, Kunz WH, Hayes R, et al. Adapting behavioral interventions for social media delivery. J Med Internet Res. 2016;18(1):e24. https://doi.org/10.2196/jmir.5086.

Gelinas L, Pierce R, Winkler S, Cohen IG, Lynch HF, Bierer BE. Using social media as a research recruitment tool: ethical issues and recommendations. Am J Bioeth. 2017;17(3):3–14. https://doi.org/10.1080/15265161.2016.1276644.

Arigo D, Pagoto S, Carter-Harris L, Lillie SE, Nebeker C. Using social media for health research: methodological and ethical considerations for recruitment and intervention delivery. Digit Health. 2018;4:2055207618771757.

Russomanno J, Patterson JG, Jabson Tree JM. Social media recruitment of marginalized, hard-to-reach populations: development of recruitment and monitoring guidelines. JMIR Public Health Surveill. 2019;5(4):e14886. https://doi.org/10.2196/14886.

Ali SH, Foreman J, Capasso A, Jones AM, Tozan Y, DiClemente RJ. Social media as a recruitment platform for a nationwide online survey of COVID-19 knowledge, beliefs, and practices in the United States: methodology and feasibility analysis. BMC Med Res Methodol. 2020;20(1):116. https://doi.org/10.1186/s12874-020-01011-0.

Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–81. https://doi.org/10.1016/j.jbi.2008.08.010.

Harris PA, Taylor R, Minor BL, Elliott V, Fernandez M, O'Neal L, et al. The REDCap consortium: building an international community of software platform partners. J Biomed Inform. 2019;95:103208.

Pozzar R, Hammer MJ, Underhill-Blazey M, Wright AA, Tulsky JA, Hong F, et al. Threats of bots and other bad actors to data quality following research participant recruitment through social media: cross-sectional questionnaire. J Med Internet Res. 2020;22(10):e23021. https://doi.org/10.2196/23021.

Pierannunzi C, Hu SS, Balluz L. A systematic review of publications assessing reliability and validity of the Behavioral Risk Factor Surveillance System (BRFSS), 2004-2011. BMC Med Res Methodol. 2013;13(1):49. https://doi.org/10.1186/1471-2288-13-49.

Kroenke K, Spitzer RL, Williams JB. The Patient Health Questionnaire-2: validity of a two-item depression screener. Med Care. 2003;41(11):1284–92. https://doi.org/10.1097/01.MLR.0000093487.78664.3C.

Plummer F, Manea L, Trepel D, McMillan D. Screening for anxiety disorders with the GAD-7 and GAD-2: a systematic review and diagnostic metaanalysis. Gen Hosp Psychiatry. 2016;39:24–31. https://doi.org/10.1016/j.genhosppsych.2015.11.005.

Kroenke K, Spitzer RL, Williams JB. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001;16(9):606–13. https://doi.org/10.1046/j.1525-1497.2001.016009606.x.

Office on Smoking and Health. 2020 National Youth Tobacco Survey: Methodology Report. Atlanta: Department of Health and Human Services, Centers for Disease Control and Prevention, National Center for Chronic Disease Prevention and Health Promotion, Office on Smoking and Health; 2020.

Pearson JL, Hitchman SC, Brose LS, Bauld L, Glasser AM, Villanti AC, et al. Recommended core items to assess e-cigarette use in population-based surveys. Tob Control. 2018;27(3):341–6. https://doi.org/10.1136/tobaccocontrol-2016-053541.

West CP, Dyrbye LN, Sloan JA, Shanafelt TD. Single item measures of emotional exhaustion and depersonalization are useful for assessing burnout in medical professionals. J Gen Intern Med. 2009;24(12):1318–21. https://doi.org/10.1007/s11606-009-1129-z.

Conway LGI, Woodard SR, Zubrod A. Social Psychological Measurements of COVID-19: coronavirus perceived threat, government response, impacts, and experiences questionnaires. PsyArXiv. 2020. https://doi.org/10.31234/osf.io/z2x9a.

Mehta S. COVID-19 community response survey. Baltimore: Johns Hopkins University; 2020.

Brault MW. Review of changes to the measurement of disability in the 2008 American Community Survey. Washington, D.C.: United States Census Bureau; 2009 September 22.

Barthel M, Mitchell A, Asare-Marfo D, Kennedy C, Worden K. Measuring news consumption in a digital era. Washington, D.C.: Pew Research Center; 2020 December 8.

California Health Interview Survey. CHIS 2020 Adult CAWI Questionnaire. Los Angeles: UCLA Center for Health Policy Research; 2020 October 20.

Benedict C, Hahn AL, Diefenbach MA, Ford JS. Recruitment via social media: advantages and potential biases. Digit Health. 2019;5:2055207619867223.

Salvy SJ, Carandang K, Vigen CL, Concha-Chavez A, Sequeira PA, Blanchard J, et al. Effectiveness of social media (Facebook), targeted mailing, and in-person solicitation for the recruitment of young adult in a diabetes self-management clinical trial. Clin Trials. 2020;17(6):664–74. https://doi.org/10.1177/1740774520933362.

Chunara R, Wisk LE, Weitzman ER. Denominator issues for personally generated data in population health monitoring. Am J Prev Med. 2017;52(4):549–53. https://doi.org/10.1016/j.amepre.2016.10.038.

Gonzales A. The contemporary US digital divide: from initial access to technology maintenance. Inf Commun Soc. 2016;19(2):234–48. https://doi.org/10.1080/1369118X.2015.1050438.

Artiga S, Rae M, Pham O, Hamel L, Muñana C. COVID-19 risks and impacts among health care workers by race/ethnicity. Issue Brief. San Francisco: Kaiser Family Foundation; 2020.

Boniol M, McIsaac M, Xu L, Wuliji T, Diallo K, Campbell J. Gender equity in the health workforce: analysis of 104 countries. Geneva: World Health Organization; 2019. Report No.: WHO/HIS/HWF/Gender/WP1/2019.1.

Acknowledgements

Not applicable.

Funding

This project was supported by a contract from the University of California Office of the President (62165-RB) and by the UCLA Clinical and Translational Science Institute (NIH/NCATS UL1TR001881). Dr. Buhr is additionally supported by a UCLA CTSI career development award (NIH/NCATS KL2TR001882). Dr. Wisk is additionally supported by a career development award from the National Institutes of Health (NIH/NIDDK K01DK116932).

Author information

Contributions

RGB and LEW conceptualized and designed the study and carried out all study operations and data collection. LEW analyzed and interpreted the study data and was a major contributor to writing the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was reviewed and approved by the UCLA Institutional Review Board (20-000683). All participants provided informed consent.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Wisk, L.E., Buhr, R.G. Rapid deployment of a community engagement study and educational trial via social media: implementation of the UC-COVID study. Trials 22, 513 (2021). https://doi.org/10.1186/s13063-021-05467-3

DOI: https://doi.org/10.1186/s13063-021-05467-3