Abstract

Background

Trials financed by for-profit organizations have been associated with favorable outcomes of new treatments, although the effect size of funding source impact on outcome is unknown. The aim of this study was to estimate the effect size for a favorable outcome in randomized controlled trials (RCTs), stratified by funding source, that have been published in general medical journals.

Methods

Parallel-group RCTs published in The Lancet, New England Journal of Medicine, and JAMA between 2013 and 2015 were identified. RCTs with binary primary endpoints were included. The primary outcome was the odds ratio (OR) of patients’ having a favorable outcome in the intervention group compared with the control group. The OR of a favorable outcome in each trial was calculated from the number of positive events that occurred in the intervention and control groups. A meta-analytic technique with a random effects model was used to calculate the summary OR. Data were stratified by funding source as for-profit, mixed, and nonprofit. Prespecified sensitivity, subgroup, and metaregression analyses were performed.

Results

Five hundred nine trials were included. The OR for a favorable outcome in for-profit-funded RCTs was 1.92 (95% CI 1.72–2.14), which was higher than mixed source-funded RCTs (OR 1.34, 95% CI 1.25–1.43) and nonprofit-funded RCTs (OR 1.32, 95% CI 1.26–1.39). The OR for a favorable outcome was higher for both clinical and surrogate endpoints in for-profit-funded trials than in RCTs with other funding sources. Excluding drug trials lowered the OR for a favorable outcome in for-profit-funded RCTs. The OR for a favorable surrogate outcome in drug trials was higher in for-profit-funded trials than in nonprofit-funded trials.

Conclusions

For-profit-funded RCTs have a higher OR for a favorable outcome than nonprofit- and mixed source-funded RCTs. This difference is associated mainly with the use of surrogate endpoints in for-profit-financed drug trials.

Background

Results of well-conducted randomized controlled trials (RCTs) are the most important building blocks of evidence-based medicine and guide clinical management. Clinical decisions based on RCTs rest on the assumption that selection bias is reduced compared with nonrandomized trials. Randomization improves the likelihood of balance between known and unknown prognostic factors.

However, no study completely lacks confounding factors and biases. Bias can be introduced into RCTs during the entire study course. Imperfect study design, lack of blinding, ascertainment bias, funding, publication bias, and many more factors could all play an important role in influencing the results of RCTs. If there are unreported biases in RCTs influencing clinical guidelines, these guidelines could potentially have been designed on the basis of inadequate medical evidence and could potentially hamper adequate clinical decision making.

Industry sponsorship has been shown to favor the company’s own product [1–3]. Earlier research on industry funding and its impact on outcome in RCTs has been focused on particular fields in medicine. Further, the definition of a favorable outcome varies between different studies or has been defined through the use of scoring systems of trial conclusions [4–6] or on the basis of reported outcomes [3], possibly in itself introducing bias.

The actual treatment effect associated with funding source is unknown. Furthermore, the evidence on the mechanisms behind more favorable outcomes in studies funded by for-profit sources is conflicting. One group of authors has suggested that the use of surrogate endpoints is the cause [7], but other authors have not been able to confirm this [8].

To address the association of funding source with outcome, and as a follow-up on the implementation of reporting standards for clinical trials, we evaluated all parallel-group RCTs published in JAMA, The Lancet, and New England Journal of Medicine between 2013 and 2015. The primary aim of this study was to calculate the OR for a favorable outcome in the intervention group compared with the control group in RCTs published in 2013–2015, stratified by funding source, using a meta-analytic approach.

Methods

Data source

A librarian performed an electronic search to identify studies published in JAMA, The Lancet, and New England Journal of Medicine between 2013 and 23 September 2015. Trials were identified by using the search terms “randomized controlled trials,” “N Eng J Med,” “Lancet,” and “JAMA” in the PubMed database. A full search strategy is presented in Additional file 1: Table S1. The study followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.

Study selection

The following inclusion criteria were used:

1. Only two-armed RCTs were included.
2. RCTs with binary primary endpoints were included.

We decided a priori to study the primary outcome of each study as stated in the published articles. When the primary outcome was a continuous variable and the secondary outcome was binary (e.g., survival), the secondary outcome was chosen for evaluation.

The following exclusion criteria were used:

1. Multiarm RCTs
2. Editorials, abstracts, observational studies, and commentaries
3. Non-RCTs

Data extraction

The primary specified outcome was the OR of a favorable outcome in the intervention group compared with the control group. The intervention group was defined as the group receiving a new treatment compared with a standard treatment or a control group. Favorable outcome was defined as events that were favorable for the patient (e.g., survival, progression-free survival, modified Rankin Scale score 0–2). Unfavorable outcome was defined as events that were unfavorable to the patient (e.g., mortality, myocardial infarction, stroke, modified Rankin Scale score 3–6). The OR was calculated from the number of events that occurred in the intervention and control groups. Time-to-survival studies were included, and the number of events that occurred during the study period was used to calculate the OR. If the primary endpoint was unfavorable (e.g., mortality), the number of unfavorable events was subtracted from the total number of patients in the respective treatment arm in order to obtain the number of corresponding favorable (in this case, survival) events to calculate the OR for a favorable outcome.
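The conversion described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' extraction code: the function names (`favorable_events`, `odds_ratio_favorable`) and the example counts are hypothetical, and zero cells are simply rejected here rather than corrected.

```python
def favorable_events(events, total, endpoint_is_favorable):
    """Return the number of favorable events in one treatment arm.

    If the reported endpoint is unfavorable (e.g., mortality), the favorable
    count is the arm total minus the reported events.
    """
    if not 0 <= events <= total:
        raise ValueError("events must lie between 0 and the arm total")
    return events if endpoint_is_favorable else total - events


def odds_ratio_favorable(ev_int, n_int, ev_ctl, n_ctl, endpoint_is_favorable):
    """Odds ratio of a favorable outcome, intervention versus control."""
    a = favorable_events(ev_int, n_int, endpoint_is_favorable)  # favorable, intervention
    b = n_int - a                                               # unfavorable, intervention
    c = favorable_events(ev_ctl, n_ctl, endpoint_is_favorable)  # favorable, control
    d = n_ctl - c                                               # unfavorable, control
    if b == 0 or c == 0:
        # Assumption: zero cells need a continuity correction, not handled here.
        raise ValueError("zero cell: apply a continuity correction first")
    return (a * d) / (b * c)


# Hypothetical trial reporting mortality: 20/100 deaths with the new
# treatment versus 30/100 with control, i.e. 80 versus 70 survivors.
example_or = odds_ratio_favorable(20, 100, 30, 100, endpoint_is_favorable=False)
print(round(example_or, 2))
```

In this made-up example the odds of survival are 80/20 in the intervention arm and 70/30 in the control arm, so the OR for a favorable outcome is (80 × 30)/(20 × 70).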

A clinical endpoint was defined as events with a direct consequence for the patient, such as mortality, stroke, or improvement of symptoms. A surrogate endpoint was defined as a measure with an indirect/unclear consequence for the patient (e.g., biomarkers, imaging, laboratory tests).

One author screened all search results and included studies meeting all inclusion criteria. Outcome data were extracted according to a prespecified protocol for each study by one author. Outcome data were then hidden, and data on funding source were extracted. This procedure was repeated twice. Data on funding were stratified into three groups depending on funding type: for-profit organization (as the only funding source), nonprofit organization (as the only funding source), or mixed funding with both for-profit and nonprofit funding (at any percentage each). Free provision of drugs/devices to studies was defined as funding and thus was assigned to the for-profit-financed trial group if no nonprofit organization participated in the study.
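The three-way funding classification can be written as a small decision rule. The sketch below is only an illustration of the definitions given above; the names `Funding` and `classify_funding` are hypothetical, and raising an error for trials with no reported funding is an assumption, because the text does not say how such trials were handled.

```python
from enum import Enum


class Funding(Enum):
    FOR_PROFIT = "for-profit"
    MIXED = "mixed"
    NONPROFIT = "nonprofit"


def classify_funding(for_profit_money, nonprofit_money, free_drug_or_device=False):
    """Assign a trial to one funding group.

    Free provision of drugs or devices counts as for-profit funding, so a
    trial with such provision is 'mixed' if a nonprofit organization also
    funded it and 'for-profit' otherwise.
    """
    industry = for_profit_money or free_drug_or_device
    if industry and nonprofit_money:
        return Funding.MIXED
    if industry:
        return Funding.FOR_PROFIT
    if nonprofit_money:
        return Funding.NONPROFIT
    # Assumption: unfunded or unreported funding is outside the scheme.
    raise ValueError("no funding source reported")


# A nonprofit-funded trial that received study drug free of charge.
print(classify_funding(False, True, free_drug_or_device=True).value)
```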

Statistical analysis

Open Meta-Analyst (Center for Evidence Synthesis in Health, Brown University, Providence, RI, USA [9]) was used for all analyses. For each study, data were analyzed to estimate the OR for a favorable outcome both according to the per-protocol analysis as reported in the published article and according to the intention-to-treat (ITT) population. In the ITT analysis, a single imputation method was used, applying a worst-case scenario with unfavorable outcome imputed for missing data. We obtained summary ORs with corresponding 95% CIs for all outcome analyses. An a priori use of the Mantel-Haenszel method with a random effects model was applied. Outcome heterogeneity was evaluated with Cochran’s Q test (significance level cutoff value <0.10) and the I² statistic (significance cutoff value >50%). To also include zero total events trials in the meta-analyses, an adjusted continuity correction of 0.5 was applied [10]. To test differences between summary ORs stratified by funding source, a z-score was calculated from the logarithms of the summary ORs and their standard errors, and a two-tailed z-test was used to obtain a p value for the difference between ORs. To explore factors associated with the magnitude of the OR, we performed a metaregression in which the dependent variable was the OR for a favorable outcome and the explanatory variables were funding source, population size, intervention type, journal, and type of endpoint (clinical/surrogate). A logistic mixed effects model for the metaregression, implemented in R [11] as a plugin for Open Meta-Analyst, was used because it allows for both within-study variation and between-study variation. To investigate differences in the OR between subgroups, prespecified sensitivity analyses were performed, covering medical fields, type of intervention, type of endpoint, blinding, and comparator (placebo/nonplacebo) in drug trials.

Because we had a rather strict definition of funding source in which free provision of drugs was considered sponsorship by industry, we carried out a sensitivity analysis excluding studies with industry-funded free provision of drugs.
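To make the pooling steps more concrete, the following Python sketch strings together worst-case ITT imputation, a 0.5 continuity correction, random-effects pooling, and the Q and I² heterogeneity statistics. It is an illustration only: it uses inverse-variance weights with the DerSimonian-Laird estimate of between-study variance, a common random-effects approach that may differ from what Open Meta-Analyst computed for the authors. All function names and the trial counts are hypothetical, and adding 0.5 to every cell whenever any cell is zero is an assumption about how the correction was applied.

```python
import math


def worst_case_itt(favorable, randomized):
    """Worst-case imputation: every randomized patient without a recorded
    favorable outcome, including those with missing data, counts as unfavorable."""
    if not 0 <= favorable <= randomized:
        raise ValueError("favorable must lie between 0 and randomized")
    return favorable, randomized - favorable


def log_or_and_variance(a, b, c, d, correction=0.5):
    """Log odds ratio and its variance for one 2x2 table.

    a, b: favorable/unfavorable counts in the intervention arm
    c, d: favorable/unfavorable counts in the control arm
    """
    if min(a, b, c, d) == 0:
        a, b, c, d = (x + correction for x in (a, b, c, d))
    return math.log((a * d) / (b * c)), 1 / a + 1 / b + 1 / c + 1 / d


def pool_random_effects(tables):
    """Pool 2x2 tables on the log OR scale (DerSimonian-Laird)."""
    effects = [log_or_and_variance(*t) for t in tables]
    y = [e[0] for e in effects]
    v = [e[1] for e in effects]
    w = [1 / vi for vi in v]
    fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    df = len(y) - 1
    scale = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / scale) if scale > 0 else 0.0  # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0      # I² in percent
    w_re = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    return {
        "odds_ratio": math.exp(mu),
        "ci_low": math.exp(mu - 1.959964 * se),
        "ci_high": math.exp(mu + 1.959964 * se),
        "q": q,
        "i2": i2,
        "tau2": tau2,
    }


# Hypothetical trials: (favorable, randomized) per arm, intervention then control.
trials = [((80, 100), (70, 100)), ((45, 60), (30, 60)), ((150, 400), (140, 400))]
tables = [worst_case_itt(*arm_i) + worst_case_itt(*arm_c) for arm_i, arm_c in trials]
print(pool_random_effects(tables))
```

The heterogeneity p value for Q would additionally need a chi-square distribution function, which is left out to keep the sketch free of third-party dependencies.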

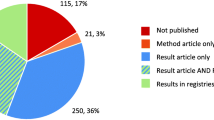

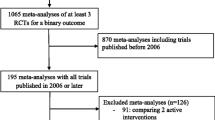

Results

Description of included studies

The electronic search identified a total of 1149 publications, of which 509 RCTs fulfilled all inclusion criteria. Topics covered all medical disciplines. One hundred forty-eight (29%) trials were exclusively funded by for-profit organizations, 117 (23%) trials were jointly funded by for-profit and nonprofit organizations, and 244 (48%) trials were exclusively financed by nonprofit organizations.

Characteristics of the included studies are presented in Table 1. There were no differences in study sample size or the use of clinical and/or surrogate endpoints between funding sources (Table 1).

Association of funding source with outcome

Calculation of the effect size for a favorable outcome in the intervention group compared with the control group was performed for all included studies and stratified by funding source. The OR for a favorable outcome in for-profit-funded RCTs was 1.92 (95% CI 1.72–2.14), which was higher than in the mixed-funded RCTs (OR 1.34, 95% CI 1.25–1.43) and in the nonprofit-funded RCTs (OR 1.32, 95% CI 1.26–1.39). No statistical differences were found between the per-protocol and ITT analyses (Table 2). There was considerable heterogeneity between the included studies (I² 73% to 96%).
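A comparison of two summary ORs of this kind can be reproduced approximately from the reported estimates alone. The sketch below assumes the usual test on the difference of log ORs, with each standard error recovered from the width of the 95% CI; this is one plausible reading of the z-test described in the Methods, not a confirmed reconstruction of the authors' calculation. The function names are hypothetical.

```python
import math


def se_from_ci(lower, upper, z_crit=1.959964):
    """Standard error of a log OR recovered from its 95% CI limits."""
    return (math.log(upper) - math.log(lower)) / (2 * z_crit)


def compare_ors(or_1, ci_1, or_2, ci_2):
    """Two-tailed z-test for the difference between two independent ORs."""
    diff = math.log(or_1) - math.log(or_2)
    se_diff = math.hypot(se_from_ci(*ci_1), se_from_ci(*ci_2))
    z = diff / se_diff
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Reported summary estimates: for-profit versus nonprofit funding.
z, p = compare_ors(1.92, (1.72, 2.14), 1.32, (1.26, 1.39))
print(f"z = {z:.2f}, p = {p:.2g}")
```

Because the inputs are rounded published values, the resulting z-score is only an approximation of what the original software would give.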

Subgroup analyses, metaregression, and sensitivity analyses

Medical field

Effect estimates in different medical fields and for different funding sources are presented in Table 3. For-profit-funded RCTs had a higher OR for a favorable outcome than nonprofit-funded RCTs for all medical fields except diabetes, cardiovascular, and autoimmunity. The OR was higher in for-profit-funded research than in mixed-funded research in cardiovascular and autoimmunity studies. Behavioral intervention trials were almost exclusively nonprofit-funded (n = 44). Excluding behavioral intervention trials did not change the overall results for the nonprofit group (OR 1.31, 95% CI 1.23–1.41). There were no differences in the ORs between different funding sources in device and procedure trials.

Metaregression

Metaregression was performed to assess the role of moderator variables on the effect size. The following factors were included: sample size, medical journal, clinical/surrogate endpoint, intervention type, and funding source. The metaregression analysis showed that differences in OR could be explained by reporting on surrogate compared with clinical endpoints, as well as by funding source, namely for-profit funding compared with other funding sources. Furthermore, drug trials were associated with a higher OR than device trials and procedure trials in combination with other types of intervention. Smaller sample size and medical journal were not related to differences in effect size (Table 4).
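As a rough guide to what such a model looks like, a mixed-effects metaregression on the log OR scale is often written as below. This generic form is an assumption for illustration: the symbols ($y_i$, $x_{ik}$, $\beta_k$, $u_i$, $\tau^2$, $v_i$) are not taken from the article, and the logistic mixed effects model fitted through Open Meta-Analyst may be parameterized differently.

```latex
\[
y_i = \log(\mathrm{OR}_i) = \beta_0 + \sum_{k=1}^{p} \beta_k x_{ik} + u_i + \varepsilon_i,
\qquad u_i \sim \mathcal{N}(0, \tau^2), \quad \varepsilon_i \sim \mathcal{N}(0, v_i)
\]
```

Here $x_{ik}$ would code the moderators listed above (funding source, sample size, intervention type, journal, and endpoint type) for trial $i$, $\tau^2$ is the between-study variance, and $v_i$ is the within-study variance of trial $i$.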

Clinical and surrogate endpoints

The OR of a favorable outcome for clinical endpoints was significantly higher in for-profit-funded RCTs (OR 1.50, 95% CI 1.40–1.61) than in mixed-funded RCTs (OR 1.27, 95% CI 1.16–1.34) and nonprofit-funded RCTs (OR 1.27, 95% CI 1.19–1.37). This difference in OR between for-profit and nonprofit/mixed-funded RCTs was also seen for surrogate endpoints (Table 2).

When we stratified drug trials into clinical and surrogate endpoints, we found no difference in the OR of clinical endpoints between funding sources. However, we found significantly higher OR for surrogate endpoints in for-profit-funded drug trials (OR 4.10, 95% CI 2.64–6.37) than in nonprofit-funded drug trials (OR 1.66, 95% CI 1.11–2.47) (Table 5).

Drug trials

After drug trials were excluded, the difference in OR between funding sources was no longer statistically significant. Placebo-controlled for-profit drug trials had a higher OR than mixed- and nonprofit-funded placebo-controlled drug trials. Among both double-blind and non-double-blind drug trials, for-profit-financed trials had a higher OR than nonprofit-financed trials (Table 5). Non-placebo-controlled drug trials with for-profit funding had a higher OR than mixed-funded trials, but there was no difference from nonprofit-funded research.

Discussion

In this study, we calculated the OR for a favorable outcome in 509 RCTs published in general medical journals between 2013 and 2015, stratified by funding source, and we investigated the underlying mechanisms for differences in OR. RCTs funded by for-profit organizations had a higher OR for a favorable outcome than both nonprofit-funded and mixed-funded RCTs. The OR for a favorable outcome was higher for both clinical and surrogate endpoints in for-profit-funded trials than for RCTs with other funding. The significant difference in OR between for-profit- and nonprofit-funded RCTs was lost when we excluded drug trials, indicating that a higher OR is associated with drug trials. When drug trials were restricted to those with clinical endpoints, we found no differences in OR between funding sources. For-profit-financed drug trials in which researchers reported on surrogate endpoints had a significantly higher OR of a favorable outcome than nonprofit-financed drug trials.

We speculated that the resulting OR stratified for funding source would differ between the per-protocol and ITT analyses owing to differences in missing data between studies. However, consistent with earlier observations, our results contradicted this speculation, showing no difference between the ITT and per-protocol analyses [12].

The strengths of this study are the large number of included studies (n = 509) from recent years. Trials from all medical fields were included to fully study the concept of funding influencing trial results. We analyzed studies with binary outcomes, which are more commonly associated with hard clinical endpoints such as progression-free survival than continuous variables, which are more often surrogate markers such as blood pressure [13]. In addition, we included studies with time-to-survival rates and recorded the actual events at a certain time point, because those studies are often conducted in cancer fields and often financed by industry. Using a meta-analytic technique, we were able to quantify the effect size of a favorable outcome in the intervention group compared with the control group on the basis of actual study event rates of outcomes rather than scoring systems. To our knowledge, the aggregated effect size based on funding source has not been previously reported. To limit bias in selecting reported outcomes we prespecified that we would study the primary study outcome as reported in the published articles.

Limitations of this study are due mainly to the statistical constraints of a meta-analysis combining different study designs, populations, and interventions. High heterogeneity was expected because trials from all medical fields, with different outcomes, study designs, and types of interventions, were included. However, in order to comprehensively explore the magnitude of the association between funding source and study outcome, a meta-analysis with appropriate sensitivity analyses was warranted.

There are several approaches to addressing heterogeneity [14]. We chose to explore heterogeneity by applying metaregression analysis and a random effects model. The metaregression analysis revealed that the use of surrogate endpoints in clinical trials was associated with higher OR than the use of clinical endpoints. Also, drug trials were associated with a higher OR than device trials or procedure trials in combination with other types of interventions. Furthermore, the metaregression analysis showed that for-profit-funded trials were associated with higher OR than other funding sources. The inclusion of small studies and medical journal type in the model did not have a moderating variable effect on the meta-analysis effect size (OR). These results contradict the hypothesis that smaller studies are related to higher treatment effects or that higher treatment effect is associated with publication (e.g., New England Journal of Medicine compared with The Lancet or JAMA). Given the diversity of clinical conditions and outcomes that were pooled in our analysis, we tried to adjust for differences in baseline characteristics by performing relevant sensitivity analyses.

Our findings are in line with earlier studies demonstrating an association between for-profit-funded research and a more favorable outcome [1, 3, 5, 15]; however, these studies were based on data published 10–15 years ago. Previous research has been focused on specific medical fields, such as cardiovascular research [3], nutrition [16], or drug trials [5]. Our stratified analysis for different medical fields showed a higher OR for a favorable outcome in all groups except autoimmunity, cardiovascular, and diabetes, with the highest absolute OR being in autoimmunity trials. Our study shows that the association between a higher OR for a favorable outcome and for-profit-financed trials is consistent across most medical fields. The OR for a favorable outcome in for-profit-financed drug trials was higher in placebo-controlled studies and double-blind studies than in nonprofit-financed drug trials. Free provision of drugs by industry is common in RCTs performed today, here defined as mixed funding if the trial was otherwise funded by a nonprofit organization. Excluding these trials did not change the OR.

Previous studies of the association between funding source and study outcome or conclusion have defined a favorable outcome on the basis of either statistical significance [16] or a scoring system [3, 5]. The ORs in for-profit-funded research in these studies have not been statistically different from nonprofit-funded studies [5, 17, 18]. Two exceptions are trials investigating nicotine replacement therapy [19] and glucosamine [20]. The authors of a Cochrane report published in 2012 stated that the higher OR in industry-sponsored studies could be mediated by unknown factors regarded as “metabias” [1].

There are several hypotheses explaining the differences in rates of favorable outcome between studies. Differences due to variations in methodological quality are unlikely in studies published in the included high-impact journals, because study quality in general is higher in high-impact journals than in other medical journals [21]. Probably, this is because many drug trials are almost consistently conducted by contract research organizations, which are frequently multi-billion-dollar organizations that have more experience in conducting trials than many academic centers and also have more risk safeguards in place than many academic or nonprofit centers. This has been further evaluated in a study by Boutron et al. [22], who reported on the presence of “spin” in RCTs with nonsignificant results for the primary endpoint. In the study by Boutron et al., spin was reported in studies published mainly in lower-impact journals. Previous studies do not support the notion that industry funding is associated with inferior study quality [4, 23, 24]. Our results show that study blinding and comparator group (e.g., active control or placebo) poorly explain differences in effect size between funding sources. Our findings suggest that the use and selection of surrogate markers as primary endpoints partly explain the difference in effect size between funding sources.

One could argue that the differences in outcome between funding types can be explained by publication bias whereby industry groups rarely publish negative results and drug industry-funded research is less likely to be published [15]. In accordance with these concepts, research funded by drug companies has been reported to be subject to selective publication, selective reporting, and multiple publications [25]. Previous researchers reported that pharmaceutical companies have suppressed studies reporting severe adverse effects [26, 27]. Kasenda et al. [28] reported on reasons for discontinuation of clinical trials. For-profit-funded studies were less likely than nonprofit-funded trials to be discontinued because of poor recruitment. Do pharmaceutical companies have access to more effective drugs? This is partly contradicted by the study by Kasenda et al., who found discontinuation of clinical trials because of futility in equal proportions between for-profit- and nonprofit-funded trials [28]. In analyzing drug trials, we found that there were no differences in effect sizes for clinical endpoints. This contradicts the hypothesis that for-profit organizations have more effective drugs. In addition, as reviewed by Svensson et al., several drugs approved on the basis of surrogate endpoints have later been shown to have substantial side effects, impacting patients’ clinical course negatively [29].

In an RCT, Kesselheim et al. [30] compared how physicians interpret trials depending on methodological differences and funding sources. For-profit funding reduced physicians’ willingness to believe the data. In the last decade, several measures have been taken to reduce selective reporting in trials through the introduction of trial registration [31] and the Consolidated Standards of Reporting Trials (CONSORT) guidelines [32]. The existence of industry-financed RCTs is essential for the development of new drugs and modern treatments. However, physicians’ mistrust of for-profit-funded research is of great concern. Despite the implementation in recent years of trial registration and methodological guidelines for RCTs, the present study shows a clear association between for-profit-financed studies and a higher OR for a favorable outcome than for RCTs supported by other types of funding.

The present study shows evidence that for-profit-financed RCTs still report higher ORs, despite recent years’ efforts to increase good research conduct and transparency in RCTs. Recently, the International Committee of Medical Journal Editors proposed the concept of data sharing for clinical trials, enabling secondary analyses of studies [33]. In a previous study, Ebrahim et al. [34] evaluated reanalyses of published RCTs and found that 35% of the reanalyses led to conclusions different from those originally published, further indicating the need for open access to published study data. Other strategies could include greater public funding of RCTs or “money-blind” independent organizations that would develop trial methodology with industry funding. The findings of the present study indicate that the use of surrogate markers as primary endpoints should be clearly stated as such in the abstracts of the trials, in order not to overestimate the potential clinical benefit for the individual patients.

Conclusions

Our study shows evidence supporting the hypothesis that for-profit-financed RCTs are associated with a higher OR for a favorable outcome of a new treatment than nonprofit- and mixed-funded RCTs, and that this difference is associated mainly with the use of surrogate endpoints in for-profit-financed drug trials. However, for-profit-financed drug trials with clinical endpoints have ORs equal to those of trials with other funding sources.

Abbreviations

- CONSORT: Consolidated Standards of Reporting Trials
- ITT: Intention to treat
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- RCT: Randomized controlled trial

References

Lundh A, Sismondo S, Lexchin J, Busuioc OA, Bero L. Industry sponsorship and research outcome. Cochrane Database Syst Rev. 2012;12:MR000033.

Bekelman JE, Li Y, Gross CP. Scope and impact of financial conflicts of interest in biomedical research: a systematic review. JAMA. 2003;289(4):454–65.

Ridker PM, Torres J. Reported outcomes in major cardiovascular clinical trials funded by for-profit and not-for-profit organizations: 2000–2005. JAMA. 2006;295(19):2270–4.

Djulbegovic B, Lacevic M, Cantor A, Fields KK, Bennett CL, Adams JR, et al. The uncertainty principle and industry-sponsored research. Lancet. 2000;356(9230):635–8.

Als-Nielsen B, Chen W, Gluud C, Kjaergard LL. Association of funding and conclusions in randomized drug trials: a reflection of treatment effect or adverse events? JAMA. 2003;290(7):921–8.

Kjaergard LL, Als-Nielsen B. Association between competing interests and authors’ conclusions: epidemiological study of randomised clinical trials published in the BMJ. BMJ. 2002;325(7358):249.

Roper N, Zhang N, Korenstein D. Industry collaboration and randomized clinical trial design and outcomes. JAMA Intern Med. 2014;174(10):1695–6.

Aneja A, Esquitin R, Shah K, Iyengar R, Nisenbaum R, Melo M, et al. Authors’ self-declared financial conflicts of interest do not impact the results of major cardiovascular trials. J Am Coll Cardiol. 2013;61(11):1137–43.

Wallace BC, Dahabreh IJ, Trikalinos TA, Lau J, Trow P, Schmid CH. Closing the gap between methodologists and end-users: R as a computational back-end. J Stat Softw. 2012;49(5). https://www.jstatsoft.org/article/view/v049i05.

Friedrich JO, Adhikari NKJ, Beyene J. Inclusion of zero total event trials in meta-analyses maintains analytic consistency and incorporates all available data. BMC Med Res Methodol. 2007;7:5.

Viechtbauer W. Conducting meta-analyses in R with the metafor package. J Stat Softw. 2010;36(3). https://www.jstatsoft.org/article/view/v036i03.

Montedori A, Bonacini MI, Casazza G, Luchetta ML, Duca P, Cozzolino F, et al. Modified versus standard intention-to-treat reporting: are there differences in methodological quality, sponsorship, and findings in randomized trials? A cross-sectional study. Trials. 2011;12:58.

Ioannidis JPA, Haidich AB, Pappa M, Pantazis N, Kokori SI, Tektonidou MG, et al. Comparison of evidence of treatment effects in randomized and nonrandomized studies. JAMA. 2001;286(7):821–30.

Higgins JPT, Green S, editors. Cochrane handbook for systematic reviews of interventions. Version 5.1.0 [updated March 2011]. The Cochrane Collaboration. http://handbook.cochrane.org/. Accessed 13 Mar 2016.

Lexchin J, Bero LA, Djulbegovic B, Clark O. Pharmaceutical industry sponsorship and research outcome and quality: systematic review. BMJ. 2003;326(7400):1167–70.

Lesser LI, Ebbeling CB, Goozner M, Wypij D, Ludwig DS. Relationship between funding source and conclusion among nutrition-related scientific articles. PLoS Med. 2007;4(1):e5.

Barden J, Derry S, McQuay HJ, Moore RA. Bias from industry trial funding? A framework, a suggested approach, and a negative result. Pain. 2006;121(3):207–18.

Davis JM, Chen N, Glick ID. Issues that may determine the outcome of antipsychotic trials: industry sponsorship and extrapyramidal side effect. Neuropsychopharmacology. 2008;33(5):971–5.

Etter JF, Burri M, Stapleton J. The impact of pharmaceutical company funding on results of randomized trials of nicotine replacement therapy for smoking cessation: a meta-analysis. Addiction. 2007;102(5):815–22.

Vlad SC, LaValley MP, McAlindon TE, Felson DT. Glucosamine for pain in osteoarthritis: why do trial results differ? Arthritis Rheum. 2007;56(7):2267–77.

Jacobson DA, Bhanot K, Yarascavitch B, Chuback J, Rosenbloom E, Bhandari M. Levels of evidence: a comparison between top medical journals and general pediatric journals. BMC Pediatr. 2015;15:3.

Boutron I, Dutton S, Ravaud P, Altman DG. Reporting and interpretation of randomized controlled trials with statistically nonsignificant results for primary outcomes. JAMA. 2010;303(20):2058–64.

Kjaergard LL, Nikolova D, Gluud C. Randomized clinical trials in Hepatology: predictors of quality. Hepatology. 1999;30(5):1134–8.

Cho MK, Bero LA. The quality of drug studies published in symposium proceedings. Ann Intern Med. 1996;124(5):485–9.

Melander H, Ahlqvist-Rastad J, Meijer G, Beermann B. Evidence b(i)ased medicine—selective reporting from studies sponsored by pharmaceutical industry: review of studies in new drug applications. BMJ. 2003;326(7400):1171–3.

Madigan D, Sigelman DW, Mayer JW, Furberg CD, Avorn J. Under-reporting of cardiovascular events in the rofecoxib Alzheimer disease studies. Am Heart J. 2012;164(2):186–93.

Tanne JH. Merck pays $1bn penalty in relation to promotion of rofecoxib. BMJ. 2011;343:d7702.

Kasenda B, von Elm E, You J, Blümle A, Tomonaga Y, Saccilotto R, et al. Prevalence, characteristics, and publication of discontinued randomized trials. JAMA. 2014;311(10):1045–51.

Svensson S, Menkes DB, Lexchin J. Surrogate outcomes in clinical trials: a cautionary tale. JAMA Intern Med. 2013;173(8):611–2.

Kesselheim AS, Robertson CT, Myers JA, Rose SL, Gillet V, Ross KM, et al. A randomized study of how physicians interpret research funding disclosures. N Engl J Med. 2012;367(12):1119–27.

De Angelis C, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. N Engl J Med. 2004;351(12):1250–1.

Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c332.

Taichman DB, Backus J, Baethge C, Bauchner H, de Leeuw PW, Drazen JM, et al. Sharing clinical trial data: a proposal from the International Committee of Medical Journal Editors. PLoS Med. 2016;13(1):e1001950.

Ebrahim S, Sohani ZN, Montoya L, Agarwal A, Thorlund K, Mills EJ, et al. Reanalyses of randomized clinical trial data. JAMA. 2014;312(10):1024–32.

Acknowledgements

The authors thank the reviewers and editor for improving the manuscript.

Funding

Alberto Falk Delgado holds a research residency position financed by Uppsala University Hospital. The funder had no influence on the preparation of the manuscript.

Availability of data and materials

The data reported in this article are available upon request from the corresponding author.

Authors’ contributions

Both authors contributed equally, had full access to all of the data in the study, and take responsibility for the integrity of the data and the accuracy of the data analysis. Both authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

Not applicable.

Additional file

Additional file 1:

Supplementary material. (DOCX 14 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Falk Delgado, A., Falk Delgado, A. The association of funding source on effect size in randomized controlled trials: 2013–2015 – a cross-sectional survey and meta-analysis. Trials 18, 125 (2017). https://doi.org/10.1186/s13063-017-1872-0
