Abstract

This article is one of ten reviews selected from the Annual Update in Intensive Care and Emergency Medicine 2019. Other selected articles can be found online at https://www.biomedcentral.com/collections/annualupdate2019. Further information about the Annual Update in Intensive Care and Emergency Medicine is available from http://www.springer.com/series/8901.


Introduction

Iron is required for erythropoiesis and is also essential for many other life-sustaining functions, including deoxyribonucleic acid (DNA) and neurotransmitter synthesis, mitochondrial function and the innate immune response. Despite its importance in maintaining health, iron deficiency is the most common nutritional deficiency worldwide, and many of its risk factors are also risk factors for developing critical illness. As a result, iron deficiency is likely to be over-represented in critically ill patients, with an estimated prevalence of up to 40% at the time of intensive care unit (ICU) admission [1].

Critical illness results in profound and characteristic changes to iron metabolism that are highly conserved from an evolutionary perspective. These changes are mediated predominantly by the peptide hormone hepcidin, which decreases the absorption and availability of iron. At the same time, acute phase increases in iron-binding proteins such as ferritin may suggest normal or increased iron stores. The result is a state of functional iron deficiency. This may be protective in the short term, providing a form of ‘nutritional immunity’ against invading microbes by limiting their access to free iron during infection. However, by reducing the body’s capacity to access iron for vital processes, persistent functional iron deficiency can become harmful. In patients with prolonged ICU admission, it may contribute to critical illness-associated cognitive, neuromuscular and cardiopulmonary dysfunction.

Historically, iron deficiency was largely unexplored in critically ill patients because of the confounding effects of acute inflammation on commonly available iron measures, the lack of safe and effective treatments, and uncertainty about the clinical significance of deranged iron metabolism. However, newer assays, including serum hepcidin, offer the potential to identify iron restriction despite the presence of inflammation. Coupled with promising therapeutic options, they may help to address problems such as nosocomial infection and impaired functional recovery in patients admitted to the ICU.

These advances are timely, as emerging data suggest that disordered iron metabolism is of substantial prognostic significance in critical illness. High serum transferrin saturation and high serum iron concentration are independent predictors of mortality in patients admitted to the ICU [2]. These data are consistent with findings of increased infection risk and organ failure associated with deranged iron metabolism in patients undergoing hematopoietic stem cell and renal transplantation [3, 4]. Failure to maintain iron homeostasis early after a profound insult may result in the accumulation of highly reactive free iron, also termed non-transferrin bound iron, which may inflict further oxidative stress on vulnerable organs or be scavenged by invading microorganisms. The need for tight homeostatic control of iron metabolism is further supported by population data from Norway suggesting an association between severe iron deficiency and the risk of bloodstream infection [5].

In summary, the available evidence suggests that both iron deficiency and iron excess may be harmful in critically ill patients, and that clinical assessment of iron status in the ICU is important and should consider both possibilities. The risks related to different iron states, and the associated iron study patterns, are summarized in Fig. 1.

The advent of safe and effective intravenous iron preparations provides an opportunity to explore the potential benefits of treating functional iron deficiency in ICU patients, in whom enteral iron is likely to be ineffective because hepcidin limits intestinal iron absorption. Intravenous iron therapies have largely been investigated in the context of erythropoiesis. High-quality data show that intravenous iron, compared with either oral iron or no iron, significantly decreases anemia and red blood cell (RBC) transfusion requirements in hospitalized patients, albeit with a potential increased risk of infection [6]. The evidence for patients admitted to the ICU is less clear. To date, few randomized controlled trials (RCTs) have been conducted in critically ill patients, although a recent multicenter study suggested that intravenous iron is biologically active in this population, increasing hemoglobin without any signal of harm [7].

Whilst much of the focus on iron has been as a treatment for anemia, itself a risk factor for adverse outcomes in patients admitted to the ICU, non-anemic iron deficiency also impairs aerobic metabolism and is associated with reduced maximal oxygen consumption (VO2max), muscle endurance and cognitive performance [8,9,10]. It is therefore plausible that interventions addressing disrupted iron metabolism could have much wider benefits, independent of erythropoiesis, in reducing complications and improving functional recovery after critical illness.

There are striking similarities between the pathological consequences of altered iron metabolism during critical illness and many of the common, characteristic complications seen in patients requiring prolonged ICU admission, particularly those associated with functional impairment. Exploring the role of iron dysmetabolism in nosocomial infection and in cognitive, neuromuscular and cardiopulmonary dysfunction may uncover novel therapeutic targets to help address the substantial public health burden of these conditions in survivors of critical illness.

Iron metabolism and critical illness

Critical illness precipitates an inflammatory response that results in early and profound changes in iron metabolism. An acquired form of iron dysmetabolism can be said to occur when these changes persist and contribute to impaired end-organ function. Ferritin, a major repository of intracellular iron, and the iron-binding glycoprotein lactoferrin are acute phase reactants that are upregulated in proportion to the severity of the inflammatory response [11]. Because ferritin and lactoferrin bind iron with higher affinity than transferrin, the circulating iron transporter and a negative acute phase reactant, hypoferremia results [11]. This pattern of low serum iron, low transferrin and high ferritin occurs in more than 75% of critically ill patients within 3 days of ICU admission [12, 13]. Just as intravascular volume status often becomes partially uncoupled from total body water during ICU treatment, total body iron stores may become uncoupled from available, circulating iron.
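The pattern above involves two of the inputs to transferrin saturation (TSAT), a measure used throughout this article. The standard definition is shown below, together with the commonly used approximation that each gram of transferrin can bind about 25.1 μmol of iron (the total iron-binding capacity, TIBC). The worked values are hypothetical and are chosen only to illustrate the arithmetic.

$$
\mathrm{TSAT}\ (\%) = \frac{\text{serum iron}\ (\mu\mathrm{mol/L})}{\mathrm{TIBC}\ (\mu\mathrm{mol/L})} \times 100,
\qquad
\mathrm{TIBC}\ (\mu\mathrm{mol/L}) \approx 25.1 \times \text{transferrin}\ (\mathrm{g/L})
$$

$$
\text{e.g. serum iron } 6\ \mu\mathrm{mol/L},\ \text{transferrin } 1.6\ \mathrm{g/L}:
\quad
\mathrm{TSAT} \approx \frac{6}{25.1 \times 1.6} \times 100 = \frac{6}{40.16} \times 100 \approx 14.9\%
$$

Because transferrin falls alongside serum iron during inflammation, the denominator shrinks as well as the numerator, so TSAT may fall proportionally less than serum iron alone.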

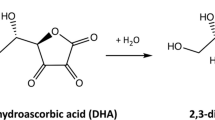

Although the immediate changes in ferritin and transferrin are initiated directly by cytokines, it is the effect of hepcidin, described as the master regulator of iron, that determines the severity and duration of an iron-restricted state. Hepcidin, a 25-amino acid peptide hormone synthesized predominantly by hepatocytes, is secreted in response to a variety of stimuli, including inflammatory cytokines and iron excess. Hepcidin acts by binding to ferroportin, the cellular iron exporter found on duodenal enterocytes, macrophages and hepatocytes, and inducing its internalization and degradation. Increased hepcidin secretion therefore decreases intestinal absorption of dietary iron, decreases the release of recycled heme iron by macrophages, increases the sequestration of iron stores in hepatocytes and reduces circulating free iron [14].

There are two key clinical consequences of the changes in iron metabolism associated with critical illness. The first is that diagnosis of functional iron deficiency is problematic and cannot be reliably excluded on the basis of standard iron study parameters. The second is that a persistent state of dysmetabolism predisposes a vulnerable population to the consequences of iron-restricted metabolism, a state most commonly considered in the context of erythropoiesis but with important implications for the risk and severity of nosocomial infection and critical illness-associated cognitive, neuromuscular and cardiopulmonary dysfunction.

Diagnosing iron deficiency

The inflammatory response that occurs in critical illness confounds the interpretation of commonly available assays for diagnosing iron deficiency, including ferritin and transferrin. To address this, one approach has been to adjust the threshold values of these markers to account for the acute phase response [15]. In patients with anemia requiring long-term dialysis, a serum ferritin of < 200 μg/L is highly suggestive of iron deficiency and predicts a good response to intravenous iron, whereas patients with a ferritin of between 500 and 1200 μg/L and a transferrin saturation < 25% may also show an increase in hemoglobin in response to intravenous iron [15]. When similar criteria are applied in critical illness, iron-restricted erythropoiesis has been reported in more than a quarter of patients on admission to the ICU [16]. However, validation studies against a gold standard for iron deficiency in critically ill patients are lacking, and the accuracy of these criteria in predicting response to intravenous iron in this setting remains uncertain. Given the association between high transferrin saturation and adverse outcomes in critically ill patients, standard measures of iron metabolism may be of greater use in identifying patients with potential iron overload, in whom intravenous iron therapy may pose greater risks, than in diagnosing functional iron deficiency per se.
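The dialysis-derived thresholds above can be written as a simple rule, which makes explicit how much of the ferritin and transferrin saturation (TSAT) range they leave unclassified. The Python sketch below is illustrative only: the function name and output labels are hypothetical, the 500 to 1200 μg/L band is assumed to be inclusive, and these criteria have not been validated in critically ill patients.

```python
def classify_dialysis_criteria(ferritin_ug_per_l: float, tsat_percent: float) -> str:
    """Label a ferritin/TSAT pair using the dialysis-derived thresholds.

    Hypothetical helper for illustration only. The cut-offs come from anemic
    patients on long-term dialysis and are not validated in critical illness.
    """
    if ferritin_ug_per_l < 200:
        # Ferritin < 200 ug/L: highly suggestive of iron deficiency
        return "iron deficiency likely"
    if 500 <= ferritin_ug_per_l <= 1200 and tsat_percent < 25:
        # Ferritin 500-1200 ug/L (assumed inclusive) with TSAT < 25%:
        # some patients may still respond to intravenous iron
        return "possible response to IV iron"
    # Every other combination (e.g. ferritin 200-499 ug/L) is not covered
    return "indeterminate"


print(classify_dialysis_criteria(800, 18))  # possible response to IV iron
```

Note how a ferritin between 200 and 499 μg/L, a common finding during inflammation, falls outside both rules.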

In contrast, serum hepcidin concentration appears to provide a more reliable signal of iron-restricted erythropoiesis: it decreases with the onset of iron deficiency in patients admitted to the ICU, even in the presence of inflammation [17]. In a recent study in critically ill patients with anemia, serum hepcidin concentration, but not ferritin or transferrin saturation, was able to identify patients in whom intravenous iron therapy was effective in reducing RBC transfusion requirements [18]. These findings require validation in further studies but are similar to findings in oncology, where hepcidin concentration appears to predict response to intravenous iron therapy in patients with chemotherapy-induced anemia [19]. For large validation studies to be feasible, accurate and clinically available hepcidin assays are required.

A two-stage process may be of value, in which standard iron assays are first used to exclude patients at risk of iron excess and to identify clear cases of iron deficiency, and hepcidin concentration is then measured only in patients who are neither clearly overloaded nor clearly deficient. As the evidence base grows and the role of hepcidin becomes more clearly defined, studies investigating its association with functional outcomes after ICU discharge may also be of substantial interest.
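The two-stage process can be sketched as follows. This is a hypothetical illustration, not a validated algorithm: the text specifies no transferrin saturation (TSAT) cut-off for iron excess, no ferritin cut-off for clear deficiency in the ICU, and no hepcidin threshold, so all three are passed in as assumed parameters (`Thresholds`), and hepcidin units are assay-specific. Hepcidin is supplied as a callable so that it is only measured for patients who reach the second stage.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Thresholds:
    """Hypothetical cut-offs; none of these values is specified in the text."""
    tsat_excess_percent: float          # TSAT at or above which iron excess is suspected
    ferritin_deficient_ug_per_l: float  # ferritin below which deficiency is "clear"
    hepcidin_low: float                 # hepcidin below which iron restriction is likely (assay units)


def two_stage_triage(
    tsat_percent: float,
    ferritin_ug_per_l: float,
    measure_hepcidin: Callable[[], float],
    t: Thresholds,
) -> str:
    """Illustrative triage: standard iron studies first, hepcidin second."""
    # Stage 1a: exclude patients at risk of iron excess
    if tsat_percent >= t.tsat_excess_percent:
        return "possible iron excess"
    # Stage 1b: identify clear cases of iron deficiency
    if ferritin_ug_per_l < t.ferritin_deficient_ug_per_l:
        return "clear iron deficiency"
    # Stage 2: hepcidin is measured only for the remaining, indeterminate patients
    hepcidin = measure_hepcidin()
    if hepcidin < t.hepcidin_low:
        return "low hepcidin: iron restriction likely"
    return "hepcidin not low: iron restriction not supported"


# Placeholder thresholds chosen only to exercise the branches
example = Thresholds(tsat_excess_percent=50.0, ferritin_deficient_ug_per_l=100.0, hepcidin_low=20.0)
print(two_stage_triage(18.0, 450.0, lambda: 8.0, example))
```

The example thresholds are placeholders, not recommended values; deriving real cut-offs is exactly the validation work the text calls for.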

Anemia

Anemia is associated with adverse outcomes and remains the most common indication for RBC transfusion in patients admitted to the ICU, even when adherence to a conservative transfusion threshold is high [20]. Preventing the onset and progression of anemia requires a multifaceted approach adapted to the specific clinical context. Patients undergoing major surgery who require elective ICU admission represent a large cohort in whom a pre-emptive approach is preferable [21]. Approximately one in three patients scheduled for major surgery is anemic, a potentially modifiable risk factor for perioperative adverse events, including myocardial infarction, stroke and mortality [22]. A recent international consensus statement recommends routine screening of all patients undergoing surgery with an expected blood loss > 500 mL, and consideration of intravenous iron for patients with anemia and evidence of iron deficiency when oral iron is ineffective or not tolerated, or when surgery is planned within 6 weeks [23]. In contrast, a Cochrane review of preoperative iron therapy to correct anemia identified only three small RCTs and found no significant reduction in allogeneic RBC transfusion requirements [24]. The safety and efficacy of preoperative intravenous iron therapy are now the focus of several ongoing large-scale RCTs in cardiac, abdominal and orthopedic surgery. A summary of major completed and ongoing RCTs of preoperative intravenous iron is provided in Table 1.
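The consensus recommendation summarized above [23] reduces to two checks, sketched below in Python. The record type and field names are hypothetical, and how anemia and iron deficiency are defined is deliberately left outside the sketch; it restates the recommendation only as described in this article and is not a substitute for the consensus statement itself.

```python
from dataclasses import dataclass


@dataclass
class SurgicalPatient:
    # Hypothetical record; definitions of anemia and iron deficiency are out of scope
    expected_blood_loss_ml: float
    anemic: bool
    iron_deficient: bool
    oral_iron_ineffective: bool
    oral_iron_not_tolerated: bool
    weeks_until_surgery: float


def recommend_screening(p: SurgicalPatient) -> bool:
    """Routine screening when expected blood loss exceeds 500 mL."""
    return p.expected_blood_loss_ml > 500


def consider_iv_iron(p: SurgicalPatient) -> bool:
    """Anemia with iron deficiency, plus at least one reason oral iron is unsuitable."""
    if not (p.anemic and p.iron_deficient):
        return False
    return (
        p.oral_iron_ineffective
        or p.oral_iron_not_tolerated
        or p.weeks_until_surgery < 6  # too little time for oral iron to act
    )


patient = SurgicalPatient(
    expected_blood_loss_ml=800,
    anemic=True,
    iron_deficient=True,
    oral_iron_ineffective=False,
    oral_iron_not_tolerated=False,
    weeks_until_surgery=3,
)
print(recommend_screening(patient), consider_iv_iron(patient))  # True True
```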

For patients admitted to the ICU, there is some evidence to guide decisions on the use of intravenous iron therapy. A systematic review and meta-analysis of intravenous iron for the treatment of anemia in ICU patients did not demonstrate a significant decrease in anemia or RBC transfusion requirements, but included only five relatively small studies [25]. More recently, a multicenter RCT including 140 patients demonstrated that intravenous iron administered within 48 h of ICU admission resulted in a significant increase in hemoglobin concentration at hospital discharge (107 g/L vs. 100 g/L, p = 0.02) without any infusion-related adverse event, but no difference in RBC transfusion rates, hospital length of stay or mortality [7]. Although these data suggest biological activity, the available evidence is currently insufficient to assess the effect of early intravenous iron administration on patient outcomes, or to exclude an increased risk of infection.

The timing of intravenous iron administration in patients admitted to the ICU may also strongly determine whether the benefits outweigh the risks. The period of greatest physiological stress generally occurs early after ICU admission. Critical illness and related treatments, such as RBC transfusion and catecholamine infusions, increase the risk of iron dysmetabolism and excess free iron, and also exacerbate their consequences. A diminished capacity to process exogenous parenteral iron may worsen this situation; in vitro data suggest dose-dependent and formulation-dependent effects of intravenous iron compounds on macrophage handling and on the extent of oxidative stress [26]. Early initiation of intravenous iron provides time for an erythropoietic response to develop. However, a focus on early administration neglects a wider therapeutic window after the most acute period of critical illness has abated, when the risk-benefit ratio may be more favorable and the functional outcomes of prolonged ICU admission can be prioritized. The temporal changes in iron metabolism and the response to intravenous iron therapy in critical illness are summarized in Fig. 2.

Cognitive dysfunction

Cognitive dysfunction is common in survivors of critical illness, affecting more than one in four patients and often persisting after physical recovery [27]. It is characterized by new deficits, or deterioration of pre-existing mild deficits, in global cognition or executive function, and its pathophysiology is multifactorial. However, the underlying causes remain poorly understood and there are no established treatments [27].

Iron is essential for neurotransmitter synthesis, uptake and degradation and is necessary for mitochondrial function in metabolically active brain tissue [28]. Iron deficiency has cerebral and behavioral effects consistent with dopaminergic dysfunction, manifesting as poor inhibitory control and diminished executive and motor function [29]. In both children and pre-menopausal women with non-anemic iron deficiency, iron supplementation is associated with improved mental quality of life and cognitive function [9, 30]. Studies of the effects of intravenous iron on cognitive outcomes in patients recovering from critical illness are currently lacking; such studies will need to consider the optimal timing, dose and duration of therapy.

Neuromuscular dysfunction

Critical illness myopathy is a frequent complication of prolonged acute illness, affecting a substantial proportion of patients admitted to the ICU. Weakness is often prolonged, with many patients experiencing decreased exercise capacity and compromised quality of life years after the acute event [31]. Even in healthy non-anemic individuals, adequate iron status is essential for efficient aerobic capacity [10]. Fatigue is also a significant factor in recovery after critical illness, influencing a patient’s ability to engage with rehabilitation. Iron deficiency persisted in more than one in three patients at 6 months after prolonged ICU admission and was associated with increased fatigue independent of anemia [32]. Given the catabolic state conferred by critical illness and the role of iron in myoglobin and muscle oxidative metabolism, further evaluation of the relationship between iron deficiency and critical illness myopathy may clarify whether iron supplementation can improve physical recovery after acute severe illness.

Cardiopulmonary dysfunction

Iron is an essential component of structural proteins in cardiomyocytes and an obligate co-factor for the prolyl hydroxylase enzymes that regulate hypoxia-inducible factor (HIF), a mediator of the systemic cardiovascular response to hypoxia. As a consequence, iron plays an important role in systolic heart function and hypoxic pulmonary vasoconstriction.

Independent of anemia, iron deficiency is associated with an increased risk of death in patients with heart failure [33]. High-quality evidence suggests that intravenous iron for patients with systolic heart failure improves exercise capacity, survival and quality of life [34]. Critical illness may impair myocardial iron use through a number of mechanisms, including catecholamine-induced downregulation of myocardial transferrin receptor 1 (TfR1) and upregulation of hepcidin [35]. Emerging data also suggest that disrupted iron metabolism may be important in various subtypes of pulmonary artery hypertension, and that altitude-associated pulmonary artery hypertension is improved by intravenous iron therapy [36]. Pulmonary artery hypertension and associated right ventricular dysfunction are common in patients admitted to the ICU with severe acute respiratory distress syndrome (ARDS) and are poor prognostic indicators [37]. Whether the beneficial effects of intravenous iron in patients with isolated systolic heart failure or pulmonary artery hypertension translate to patients treated in the ICU requires further investigation. In the meantime, assessment of iron status, and potential initiation of iron therapy, should be considered on an individual patient basis in patients with severe cardiac failure and markers of iron deficiency.

Infection

The term ‘nutritional immunity’ describes the changes in iron metabolism of the mammalian host during infection, in which the acute phase response acts to limit the availability of free iron to microbes, including invasive fungi and avid iron-binding bacteria [38, 39]. Hereditary iron overload disorders increase the risk and severity of infection with siderophilic bacteria, such as Vibrio vulnificus and Yersinia enterocolitica, although these bacteria are only moderately pathogenic in other settings [39]. Iron overload not only predisposes to infection by particular organisms but also impairs components of the innate immune response, including chemotaxis, phagocytosis, and lymphocyte and macrophage function [40].

Investigating a potential causal link between iron supplementation and infection risk is complex. The relationship is likely to depend on many factors, including the setting, the participant population and the iron dosing strategy. For example, oral iron supplementation for children in malaria-endemic areas does not appear to increase the risk of malaria overall, but may increase the risk where malaria prevention strategies are unavailable and decrease it where they are available [41]. Although a systematic review of RCTs in hospitalized patients treated with intravenous iron suggested an increased risk of infection, infection was reported in only a minority of trials, and the risk, including in critically ill patients, remains uncertain [6]. An RCT of intravenous iron sucrose compared with oral iron in patients with chronic kidney disease was stopped early because of an excess of serious adverse events, including infections requiring hospitalization, in the intravenous iron group [42]. On the other hand, an RCT of intravenous ferric carboxymaltose in patients with cardiac failure demonstrated improved functional capacity and quality of life with no significant between-group differences in serious adverse events, including infection [43]. These contrasting findings demonstrate the need for context-specific assessment of infection risk and the potential for dose- and formulation-dependent differences [26]. For patients admitted to the ICU, the risk of infection related to parenteral iron supplementation is plausibly not uniform, but may vary between patients and within a patient over time (see Fig. 2).

Whilst uncertainty regarding the risk of infection may still limit widespread use of intravenous iron in the acute phase of critical illness, the relationship between changes in iron metabolism and infection also provides therapeutic opportunities. Nosocomial infection remains a major source of morbidity in patients admitted to the ICU. Oral supplementation with the iron-binding glycoprotein lactoferrin appears to reduce nosocomial infection in preterm infants, although the results of large studies are awaited, and this benefit has not been replicated in the adult ICU setting [44, 45].

Other potential therapeutic targets in iron metabolism, some available and others in development, may also be relevant to reducing nosocomial infection. For example, the nutritional immunity conferred by reduced iron availability during infection is mediated by hepcidin, which is secreted in response to inflammatory mediators. However, inhibitors of hepcidin secretion, including anemia and hypoxia, may exert a counterbalancing effect in critically ill patients, resulting in relative hepcidin deficiency and increased free iron available to invading microbes. For critically ill patients with early sepsis and low hepcidin concentrations, hepcidin agonists currently in development may attenuate the severity of the infective insult [46]. Likewise, iron chelators are known to act synergistically with some antifungal therapies in a range of fungal infections and may be beneficial in the prevention and treatment of nosocomial infections [47].

Conclusion

Iron deficiency is common in the general population and is likely to be over-represented in patients admitted to the ICU. Critical illness exacerbates this situation by initiating a form of functional iron deficiency in which iron absorption and recycling are diminished and stored iron is less accessible. Although this process may have beneficial short-term effects by conferring a form of nutritional immunity against invading microbes, it also carries risks. Homeostatic control of iron may be lost, leading to the risk of iron excess, as suggested by emerging evidence that high transferrin saturation is a poor prognostic marker early after ICU admission. Perhaps most importantly, whether or not critical illness is initiated by infection, it is often prolonged, and persistent functional iron deficiency may then lead to iron dysmetabolism in which reduced iron availability contributes to impaired end-organ function.

The reduced availability of iron in critical illness is often considered in the context of anemia, but its consequences are much broader and overlap extensively with many of the problems faced by ICU survivors. Outside the ICU, correction of non-anemic iron deficiency has been associated with improved cognitive and cardiopulmonary function and aerobic performance, and with reduced fatigue. Recent data suggest that hepcidin measurement may be useful in targeting intravenous iron therapy to those most likely to benefit. For patients requiring prolonged ICU admission, addressing iron dysmetabolism may offer substantial therapeutic benefit in improving functional recovery after critical illness.

References

Bellamy MC, Gedney JA. Unrecognised iron deficiency in critical illness. Lancet. 1998;352:1903.

Tacke F, Nuraldeen R, Koch A, et al. Iron parameters determine the prognosis of critically ill patients. Crit Care Med. 2016;44:1049–58.

Bazuave GN, Buser A, Gerull S, Tichelli A, Stern M. Prognostic impact of iron parameters in patients undergoing Allo-SCT. Bone Marrow Transplant. 2011;47:60.

Fernández-Ruiz M, López-Medrano F, Andrés A, et al. Serum iron parameters in the early post-transplant period and infection risk in kidney transplant recipients. Transpl Infect Dis. 2013;15:600–11.

Mohus RM, Paulsen J, Gustad L, et al. Association of iron status with the risk of bloodstream infections: results from the prospective population-based HUNT study in Norway. Intensive Care Med. 2018;44:1276–83.

Litton E, Xiao J, Ho KM. Safety and efficacy of intravenous iron therapy in reducing requirement for allogeneic blood transfusion: systematic review and meta-analysis of randomised clinical trials. BMJ. 2013;347:f4822.

Litton E, Baker S, Erber WN, et al. Intravenous iron or placebo for anaemia in intensive care: the IRONMAN multicentre randomized blinded trial: a randomized trial of IV iron in critical illness. Intensive Care Med. 2016;42:1715–22.

Abbaspour N, Hurrell R, Kelishadi R. Review on iron and its importance for human health. J Res Med Sci. 2014;19:164–74.

Bruner AB, Joffe A, Duggan AK, Casella JF, Brandt J. Randomised study of cognitive effects of iron supplementation in non-anaemic iron-deficient adolescent girls. Lancet. 1996;348:992–6.

Brutsaert TD, Hernandez-Cordero S, Rivera J, Viola T, Hughes G, Haas JD. Iron supplementation improves progressive fatigue resistance during dynamic knee extensor exercise in iron-depleted, nonanemic women. Am J Clin Nutr. 2003;77:441–8.

Weiss G, Goodnough LT. Anemia of chronic disease. N Engl J Med. 2005;352:1011–23.

Bobbio-Pallavicini F, Verde G, Spriano P, et al. Body iron status in critically ill patients: significance of serum ferritin. Intensive Care Med. 1989;15:171–8.

Hobisch-Hagen P, Wiedermann F, Mayr A, et al. Blunted erythropoietic response to anemia in multiply traumatized patients. Crit Care Med. 2001;29:743–7.

Ganz T. Hepcidin, a key regulator of iron metabolism and mediator of anemia of inflammation. Blood. 2003;102:783–8.

Thomas DW, Hinchliffe RF, Briggs C, et al. Guideline for the laboratory diagnosis of functional iron deficiency. Br J Haematol. 2013;161:639–48.

Litton E, Xiao J, Allen CT, Ho KM. Iron-restricted erythropoiesis and risk of red blood cell transfusion in the intensive care unit: a prospective observational study. Anaesth Intensive Care. 2015;43:612–6.

Lasocki S, Baron G, Driss F, et al. Diagnostic accuracy of serum hepcidin for iron deficiency in critically ill patients with anemia. Intensive Care Med. 2010;36:1044–8.

Litton E, Baker S, Erber WN, et al. Hepcidin predicts response to IV iron therapy in patients admitted to the intensive care unit: a nested cohort study. J Intensive Care. 2018;6:60.

Steensma DP, Sasu BJ, Sloan JA, Tomita DK, Loprinzi CL. Serum hepcidin levels predict response to intravenous iron and darbepoetin in chemotherapy-associated anemia. Blood. 2015;125:3669–71.

Westbrook A, Pettila V, Nichol A, et al. Transfusion practice and guidelines in Australian and New Zealand ICUs. Intensive Care Med. 2010;36:1138–46.

Lim J, Miles L, Litton E. Intravenous iron therapy in patients undergoing cardiovascular surgery: a narrative review. J Cardiothorac Vasc Anesth. 2018;32:1439–51.

Klein AA, Collier T, Yeates J, et al. The ACTA PORT-score for predicting perioperative risk of blood transfusion for adult cardiac surgery. Br J Anaesth. 2017;119:394–401.

Munoz M, Acheson AG, Auerbach M, et al. International consensus statement on the perioperative management of anaemia and iron deficiency. Anaesthesia. 2017;72:233–47.

Ng O, Keeler BD, Mishra A, Simpson A, Neal K, Brookes MJ, Acheson AG. Iron therapy for pre-operative anaemia. Cochrane Database Syst Rev. 2015:CD011588.

Shah A, Roy NB, McKechnie S, Doree C, Fisher SA, Stanworth SJ. Iron supplementation to treat anaemia in adult critical care patients: a systematic review and meta-analysis. Crit Care. 2016;20:306.

Connor JR, Zhang X, Nixon AM, Webb B, Perno JR. Comparative evaluation of nephrotoxicity and management by macrophages of intravenous pharmaceutical iron formulations. PLoS One. 2015;10:e0125272.

Pandharipande PP, Girard TD, Jackson JC, et al. Long-term cognitive impairment after critical illness. N Engl J Med. 2013;369:1306–16.

Youdim MB, Yehuda S. The neurochemical basis of cognitive deficits induced by brain iron deficiency: involvement of dopamine-opiate system. Cell Mol Biol. 2000;46:491–500.

Lozoff B. Early iron deficiency has brain and behavior effects consistent with dopaminergic dysfunction. J Nutr. 2011;141:740S–6S.

Favrat B, Balck K, Breymann C, et al. Evaluation of a single dose of ferric carboxymaltose in fatigued, iron-deficient women—PREFER a randomized, placebo-controlled study. PLoS One. 2014;9:e94217.

Guarneri B, Bertolini G, Latronico N. Long-term outcome in patients with critical illness myopathy or neuropathy: the Italian multicentre CRIMYNE study. J Neurol Neurosurg Psychiatry. 2008;79:838–41.

Lasocki S, Chudeau N, Papet T, et al. Prevalence of iron deficiency on ICU discharge and its relation with fatigue: a multicenter prospective study. Crit Care. 2014;18:542.

Jankowska EA, Kasztura M, Sokolski M, et al. Iron deficiency defined as depleted iron stores accompanied by unmet cellular iron requirements identifies patients at the highest risk of death after an episode of acute heart failure. Eur Heart J. 2014;35:2468–76.

Jankowska EA, Tkaczyszyn M, Suchocki T, et al. Effects of intravenous iron therapy in iron-deficient patients with systolic heart failure: a meta-analysis of randomized controlled trials. Eur J Heart Fail. 2016;18:786–95.

Maeder MT, Khammy O, dos Remedios C, Kaye DM. Myocardial and systemic iron depletion in heart failure: implications for anemia accompanying heart failure. J Am Coll Cardiol. 2011;58:474–80.

Ramakrishnan L, Pedersen SL, Toe QK, Quinlan GJ, Wort SJ. Pulmonary arterial hypertension: iron matters. Front Physiol. 2018;9:641.

Zochios V, Parhar K, Tunnicliffe W, Roscoe A, Gao F. The right ventricle in ARDS. Chest. 2017;152:181–93.

Cassat JE, Skaar EP. Iron in infection and immunity. Cell Host Microbe. 2013;13:509–19.

Ganz T. Iron and infection. Int J Hematol. 2018;107:7–15.

Puntarulo S. Iron, oxidative stress and human health. Mol Asp Med. 2005;26:299–312.

Neuberger A, Okebe J, Yahav D, Paul M. Oral iron supplements for children in malaria-endemic areas. Cochrane Database Syst Rev. 2016:CD006589.

Agarwal R, Kusek JW, Pappas MK. A randomized trial of intravenous and oral iron in chronic kidney disease. Kidney Int. 2015;88:905–14.

Anker SD, Comin Colet J, Filippatos G, et al. Ferric carboxymaltose in patients with heart failure and iron deficiency. N Engl J Med. 2009;361:2436–48.

Pammi M, Suresh G. Enteral lactoferrin supplementation for prevention of sepsis and necrotizing enterocolitis in preterm infants. Cochrane Database Syst Rev. 2017:CD007137.

Muscedere J, Maslove DM, Boyd JG, et al. Prevention of nosocomial infections in critically ill patients with lactoferrin: a randomized, double-blind, placebo-controlled study. Crit Care Med. 2018;46:1450–6.

Sebastiani G, Wilkinson N, Pantopoulos K. Pharmacological targeting of the hepcidin/ferroportin axis. Front Pharmacol. 2016;7:160.

Balhara M, Chaudhary R, Ruhil S, et al. Siderophores; iron scavengers: the novel & promising targets for pathogen specific antifungal therapy. Expert Opin Ther Targets. 2016;20:1477–89.

Kim YW, Bae JM, Park YK, et al. Effect of intravenous ferric carboxymaltose on hemoglobin response among patients with acute isovolemic anemia following gastrectomy: the FAIRY randomized clinical trial. JAMA. 2017;317:2097–104.

Johansson PI, Rasmussen AS, Thomsen LL. Intravenous iron isomaltoside 1000 (Monofer®) reduces postoperative anaemia in preoperatively non-anaemic patients undergoing elective or subacute coronary artery bypass graft, valve replacement or a combination thereof: a randomized double-blind placebo-controlled clinical trial (the PROTECT trial). Vox Sang. 2015;109:257–66.

Bernabeu-Wittel M, Romero M, Ollero-Baturone M, et al. Ferric carboxymaltose with or without erythropoietin in anemic patients with hip fracture: a randomized clinical trial. Transfusion. 2016;56:2199–211.

Froessler B, Palm P, Weber I, Hodyl NA, Singh R, Murphy EM. The important role for intravenous iron in perioperative patient blood management in major abdominal surgery: a randomized controlled trial. Ann Surg. 2016;264:41–6.

Acknowledgements

Edward Litton is supported by a National Health and Medical Research Council Early Career Fellowship. The authors would like to thank the Fiona Stanley Hospital Intensive Care Unit for their support.

Funding

This work did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors. Publication costs were funded by the Fiona Stanley Hospital Intensive Care Unit Departmental Research Funds.

Availability of data and materials

Not applicable.

Author information


Contributions

The authors contributed equally to all aspects of the manuscript. Both authors read and approved the final manuscript.


Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

Edward Litton was the principal investigator of the IRONMAN study, which received in-kind support of study drug from Vifor Pharma. The authors declare no other conflicts of interest.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Litton, E., Lim, J. Iron Metabolism: An Emerging Therapeutic Target in Critical Illness. Crit Care 23, 81 (2019). https://doi.org/10.1186/s13054-019-2373-1

Published:

DOI: https://doi.org/10.1186/s13054-019-2373-1