Abstract

Background

External validation on different TBI populations is important in order to assess the generalizability of prognostic models to different settings. We aimed to externally validate recently developed models for prediction of six month unfavourable outcome and six month mortality.

Methods

The International Neurotrauma Research Organization – Prehospital dataset (INRO-PH) was collected within an observational study between 2009-2012 in Austria and includes 778 patients with TBI of GCS < = 12. Three sets of prognostic models were externally validated: the IMPACT core and extended models, CRASH basic models and the Nijmegen models developed by Jacobs et al – all for prediction of six month unfavourable outcome and six month mortality. The external validity of the models was assessed by discrimination (Area Under the receiver operating characteristic Curve, AUC) and calibration (calibration statistics and plots).

Results

Median age in the validation cohort was 50 years and 44% had an admission GSC motor score of 1-3. Six-month mortality was 27%. Mortality could better be predicted (AUCs around 0.85) than unfavourable outcome (AUCs around 0.80). Calibration plots showed that the observed outcomes were systematically better than was predicted for all models considered. The best performance was noted for the original Nijmegen model, but refitting led to similar performance for the IMPACT Extended, CRASH Basic, and Nijmegen models.

Conclusions

In conclusion, all the prognostic models we validated in this study possess good discriminative ability for prediction of six month outcome in patients with moderate or severe TBI but outcomes were systemically better than predicted. After adjustment for this under prediction in locally adapted models, these may well be used for recent TBI patients.

Similar content being viewed by others

Introduction

Traumatic brain injury (TBI) is the leading cause of disability and mortality among young individuals in high-income countries and it is a rising public health issue in low-income countries [1]. Partly because of the heterogeneity of the disease in terms of cause, pathology and severity considerable uncertainty may exist in the expected outcome of patients [2]. Many studies reported on univariate associations between predictors and outcome after TBI and further research has been devoted to combine single predictors into prognostic models [1]. Such models have a relatively wide applicability ranging from clinical settings and research to providing information on expectations to relatives of patients [1]-[3]. Facilitating better severity classification, stratification in the context on Randomized Clinical Trials (RCTs) and setting baseline for clinical audits could be seen as the prime roles of prognostic models in TBI [2].

There is a relatively large number of prognostic models for outcome of TBI which utilize a variety of predictors and outcome measures. A systematic review published in 2006 identified 53 reports, which included a total of 102 models [4], but their quality was mostly poor [2],[4].

Lack of external validation represents one of the main shortcomings of most models. External validation on different TBI populations, preferably collected in a multicentre setting is important in order to assess the generalizability of prognostic models to different settings [2],[4]. It is reasonable to expect that even though biologically the prognostic factors should be the same for all patients, the association could differ depending on medical care received, which may differ by place and evolve over time [4]. Specifically, predictions based on data from decades ago may overestimate the incidence of poor outcomes for current clinical settings.

The International Mission for Prognosis and Analysis of Clinical Trials in TBI (IMPACT) [5] and the Corticosteroid Randomization After Significant Head Injury (CRASH) studies [6] are the most established prognostic models currently. Both were developed on large patient samples from multiple countries with state of the art methodology and have been externally validated beyond the process of model development [7]-[10]. More recently, a set of prognostic models was developed by Jacobs et al [11] using patient data from Nijmegen, the Netherlands. External validation in a fully new setting has not yet been performed (besides the external validation during development). Further validation of these 3 sets of models in recent patients is important to assess generalizability, since differences in treatment and health care organization in different populations have been suggested [10].

The aim of this study was to externally validate the IMPACT, CRASH and Nijmegen models for the prediction of six month unfavourable outcome and six month mortality in a recent observational patient cohort from Austria in 2009-2012.

Methods

Patients and population

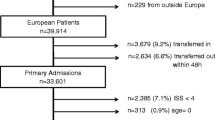

The International Neurotrauma Research Organization (INRO) based in Vienna aims to improve the care and outcome of patients with TBI primarily in Austria, the wider region of Central Europe, and in countries of the South-Eastern European region. INRO led a prospective observational study focusing on prehospital and early hospital care of patients with TBI in Austria between 2009 and 2012. The study has been approved by the Ethical Committees of all participating centres and have been performed in accordance with the ethical standards laid down in the Declaration of Helsinki and its later amendments. All patients with Glasgow Coma Scale score (GCS) < = 12 within 48 hours after the accident and/or Abbreviated injury scale (AIS) [12] score of head >2 were included in the study. Data on demographic characteristics, injury type and severity, prehospital treatment, trauma room treatment, surgical procedures, CT scans, Intensive Care Unit (ICU) based treatment (first 5 days) and outcome (at ICU discharge, hospital discharge and six month after injury) were recorded. The Extended Glasgow Outcome Scale (GOSE) was used to categorize the outcome at hospital discharge (assessed by a local study investigator) and at six months after injury (assessed by telephone interview by a trained physician). The follow-up rate six months after injury was 70%. We analysed data from 778 patients from 16 centres.

Prognostic models

For this study three sets of prognostic models (eight models in total) were selected. These models fulfilled requirements for prognostic models in TBI with respect to the study population, predictors, outcome, model development and validation [2],[3]. All models were designed to predict six month mortality and six month unfavourable outcome in patients with TBI. Unfavourable outcome was defined as GOS of 1-3 (death, vegetative state or severe disability) in all models. Table 1 presents a comparative description of models and their development characteristics.

Within the IMPACT study [13] three models of increasing complexity were developed (core model, extended model and lab model which added glucose and haemoglobin levels to predictors in the extended model). We focused on the core and extended models because the lab parameters were not available in the INRO-PH dataset.

The CRASH prognostic model [6] has two variants. One is for patients in low and middle income countries and the second for patients in high income countries. For the purposes of our study the models for high income countries were used. Only the basic model was validated since the CT predictors as they were defined in the CRASH CT models were not available in the INRO-PH dataset. Major extra-cranial injury in the INRO-PH dataset was defined as being serious or worse (2 or more scoring points) according to the AIS.

The Nijmegen models [11] were developed using the Radboud University Brain Injury Cohort Study (RUBICS) dataset, which enrolled patients between 1999-2006 [14]. Two prognostic models were developed. In our study only the demographic and clinical models were validated because the predictors as defined in the CT models were not available in our validation dataset.

The IMPACT models were developed using both RCT and observational data from a total of 11 studies, the CRASH and Nijmegen models were developed using data from a single study – an RCT and an observational study, respectively. The IMPACT and CRASH datasets were considerably larger than the dataset used to develop the Nijmegen models. Age, GCS (either summary or motor score only) and pupillary reactivity as predictors were common for all validated models. The Nijmegen models used the most recent data for development.

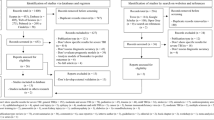

Statistical analysis

To investigate the difference in predictor effects between the developed models and the validation population we fitted logistic regression models containing the predictors of the models in the validation population (using second level of customization). The estimated ORs of the different predictors were compared to the ORs of the original models. The external validity of the models was assessed using analysis of discrimination and calibration, following previous studies [3],[7],[10],[13].

To assess the discrimination (ability to distinguish between survival and death or favourable and unfavourable outcome) the area under the receiver operating characteristic curve (AUC) was used. For a model with perfect discrimination the AUC = 1.0. An AUC of 0.5 indicates that the discriminative ability of the model is no better than by chance. For all models AUCs at external validation (AUCVAL) were calculated along with AUCs of the models refitted on the INRO-PH dataset (AUCREFIT). Confidence intervals are presented for each AUCVAL calculated non-parametrically, using the bootstrap method of the ‘ci.auc’ function within the R package pROC [15].

Calibration evaluates the agreement between observed and predicted outcomes and was assessed using validation plots and calibration statistics (calibration-in-the-large) [3]. In the validation plots predicted vs. observed outcomes are plotted to depict the deviation of their agreement from the optimal situation. Calibration-in-the-large was assessed by fitting a logistic regression model with the model predictions as an offset variable and by calculating the intercept and calibration slope. The intercept was used to assess whether the model predictions are systematically too low or too high. In case of perfect calibration-in-the-large, i.e. the percentage predicted outcome is equal to the percentage observed, the intercept is 0. If outcomes are better than predicted, the intercept is negative. The calibration slope, which in an ideal case equals 1, was used to quantify the average strength of the predictors, compared to the development dataset.

Results

Comparison of datasets

The INRO-PH dataset was compared side by side with datasets used to develop the IMPACT, CRASH and Nijmegen models. Table 2 presents the description of predictor variables used in all models and their equivalent in the INRO-PH dataset. Compared to the IMPACT dataset the patients in the INRO-PH dataset were significantly older (median of 50 vs. 30 years) with higher proportions of EDH and tSAH, higher proportion of reactive pupils (71% vs 63%) and similar distribution of GCS motor scores (scores 1-3 in 44% vs. in 41% in the IMPACT dataset). Both mortality and the proportion of unfavourable outcome were lower in the INRO-PH dataset.

About one third of patients in the CRASH dataset had a mild TBI whereas all patients had moderate or severe TBI in the INRO-PH dataset. Thus, in general the severity was lower in the CRASH patients. The mean age was higher in the INRO-PH dataset (mean 49 vs. 37 years). The outcomes in the subset of CRASH patients with moderate or severe TBI were worse than in the INRO-PH patients.

The data for the RUBICS dataset (used to develop the Nijmegen models) was reported separately for moderate and severe TBI and where possible it was summarized. In general, patients in the RUBICS dataset were slightly younger and had less severe injuries (higher median of total GCS, lower proportion of mass lesions) but had worse outcomes compared to the INRO-PH dataset.

Predictor effects

A detailed comparison of predictor effects (using ORs) reported with the original models and in the models refitted using the INRO-PH dataset is presented in Table 3. Age as a predictor was included in all models and its effects were similar in all comparisons. The ORs of the effect of the summary GCS in the models and refits were identical both in case of CRASH and Nijmegen models. The effects if GCS motor scores 1-4 in case of the IMPACT core and extended models were stronger in models for prediction of unfavourable outcome; the differences were smaller in case of mortality prediction. A generally strong effect of none reactive pupils observed in the refitted models was similar to values in the Nijmegen models (15.3 vs. 13.2 for mortality and 9.3 vs. 10.9 for unfavourable outcome) but differed from the values in the IMPACT and CRASH models where this effect was smaller. Compared to the IMPACT extended models, stronger effects of hypoxia and Marshall CT classification were observed in the INRO-PH dataset.

Model performance

All models showed a good ability to discriminate between survival and death and between favourable and unfavourable outcome, as indicated by values of AUCVAL (Table 4). In all validations, the AUC was 0.8 or higher. In all cases higher values were achieved for prediction of mortality than for unfavourable outcome. Although the Nijmegen models showed the best discriminative ability for both outcomes, the difference in AUCVAL compared to other models were small (0.01-0.02 points in case of unfavourable outcome and 0.01-0.04 in case of mortality) and non-significant.

The difference between AUCVAL and AUCREFIT reflected the extent to which the effects of predictors in the original models were suboptimal. The AUCREFIT shows the maximum discrimination achievable using the INRO-PH dataset, given the specification of predictors in each of the original models. For all models, the performance was better after refitting, with AUCs of 0.82 or higher.

The calibration plots (Figures 1 and 2) indicate systematic under prediction in all cases and a better calibration of models for prediction of mortality compared to the models for prediction of unfavourable outcome. Except for the CRASH mortality model (intercept 0.207), all observed frequencies of unfavourable outcome and death were better than predicted (intercept <0). Calibration slopes were close to 1 in all cases (0.865-1.073 in case of unfavourable outcome and 0.866-1.381 in case of models for prediction of mortality), indicating good calibration. The IMPACT extended model had the slope closest to 1 (1.061) for prediction of unfavourable outcome and for mortality prediction the slope of the Nijmegen model was closest to 1 (0.984).

Calibration plots for prognostic models predicting 6 month mortality. In the figures predicted probabilities of mortality or unfavourable outcome are plotted against actual observed proportions and this relationship is shown as a curve. The dotted diagonal line shows the optimal shape of the curve where the predicted and observed values match. The triangles show observed proportions by decile of predicted probability.

Discussion

We conducted a study to externally validate three sets of prognostic models for prediction of outcome in patients with moderate or severe TBI using a recently (2009-2012) collected observational dataset of 778 TBI patients from Austria. We focused on recently developed models that fulfilled high methodological standards for their development. We confirmed the external validity of the IMPACT and CRASH models in predicting outcome of patients with moderate or severe TBI in the INRO-PH database of Austrian TBI patients. In addition, our results showed good performance of the more recently developed Nijmegen models. The overall performance of all models in cross-comparison was similar as indicated by calibration and discrimination measures.

All models were externally validated before as part of their development. The IMPACT models were externally validated on a set of 6,681 patients from the CRASH trial; CRASH models have been externally validated using the IMPACT dataset; and the Prospective Observational Cohort Neurotrauma [16] dataset was used to validate the Nijmegen models.

The IMPACT core and CRASH basic models were additionally externally validated side by side using 5 different datasets (3 RCTs and 2 observational studies) [10]. Furthermore, both the IMPACT core and extended models were validated using the Prospective Observational Cohort Neurotrauma [16] dataset of 415 cases [7] and using a dataset of 587 patients with prospective consecutive data collection [8]. All three studies concluded that the models performed well on the validation datasets. Another study validated the IMPACT models on a set of 342 patients from a retrospective single centre study and also confirmed their good performance [9].

Our findings contribute further to the evidence base on the performance of prognostic models for TBI patients. Specifically, we observed a better than expected outcome in recent patients. We note however that caution must be used when interpreting our findings because factors beyond the model and its development must be considered.

First, the discrimination of the model on external datasets is highly sensitive to case-mix of patients - substantially higher AUCs were reported when using observational dataset compared to RCTs due to generally less restricted patient enrolment criteria [10].

Second, the calibration of the models is influenced by the effect of predictors and by the distribution of outcomes. A previous study reported that miscalibration could occur due to differences in outcome of patients on the GOS scale. This was found to be U-shaped in the IMPACT and CRASH development datasets (e.g. relatively many patients died or had favourable outcome, and relatively few patients were vegetative or had severe disability) and differed from the distribution in their validation datasets [10]. In our patients, the distribution of outcomes was similarly U-shaped as in the IMPACT and CRASH studies. We can therefore assume that in our study the miscalibration was at least to some extent influenced by the different predictor effects (see Table 3 for details).

Third, additional factors that influence the overall performance of the models include system factors such as trauma care organization or treatment policies. We can speculate that the advances in treatment could be the cause of better than predicted outcomes, although there is no clear evidence to support this presumption [17],[18]. A number of specific factors could be relevant here: A) patients in the INRO-PH dataset were treated by well-trained emergency physicians in the field, and the mean interval between arrival of Emergency Medical Service teams and arrival at hospital was only 51 minutes; B) the short intervals between hospital admission and first CT scan (median of 25 minutes), and between CT scan and surgery (median between ER admission and surgery was 93 minutes); and C) a high proportion of patients had advanced management of possible coagulation disorders [19].

Influences of case-mix of patients, predictor effects, outcome distribution and improvements of care could be tackled by adjusting the models [20].

Nevertheless, our study and previous evidence [7]-[10],[13] suggests that the IMPACT, CRASH and Nijmegen models are valid and useful tools that can be effectively used for predicting the risk of adverse outcome in patients with TBI. Such prediction is relevant in clinical settings as well as research where quantification of expected outcomes of patients can be helpful in assessing quality of care and evaluating the effectiveness of certain procedures (e.g. by comparing expected vs. observed outcome). Furthermore, they could be used to inform relatives of patients, to make treatment decisions or to decide on allocation of resources [7].

From the practical point of view, two aspects could be considered when deciding on the model to be used. First, the complexity of the model. In general, more complex models (e.g. those including clinical variables such as CT scan findings) have better discriminative ability [7],[10]. However, in our study the performance of more complex models (IMPACT extended models) was not much better than that of simpler models. In fact, the IMPACT core model, the simplest model validated in this study which uses only three predictors, performed similarly to all other models. Another dimension to this aspect of a model is that the more predictors are needed the higher chance that some will be missing - making predictions impossible. Thus, models with less predictors could be preferred over more complex models as we can assume that the information for the prediction will be easier to obtain and such models perform similarly to more complex models. Second, the actual usability of the model is tested by external validation studies using various populations, such as in our study. Therefore, more external validations could mean higher confidence in the performance of the model. In our study the Nijmegen models performed best (although the differences compared to other models were small). However, in the time of writing this paper these models were not externally validated as widely as the IMPACT or CRASH models and thus recommending their preference over other models should be cautious.

The fact that a number of different prognostic models for prediction of outcome in patients with TBI are available with different sets of predictors and outcomes used provides clinicians and researchers with a range of choices. The decision on which model to use in specific settings and situations could be made according to the above mentioned factors and will be in most situations driven by availability of predictor values and/or the desired outcome to be predicted. In order to maintain and extend the range of available models it is of cardinal importance that the models will be continuously validated in external settings and updated using more recent data.

We acknowledge that this study has some limitations. S ome measures and variables may not exactly match with the development data. For example, some measures differ as to the timing of their assessment (e.g. GCS at randomization in the CRASH dataset is compared to GCS at trauma room in the INRO-PH dataset). Furthermore, there might be other differences such as methodology of assessment of six month outcome, which tends to differ between studies, related to outcome distribution and predictor effects [10].

In the CRASH model a relatively loose definition of MEI is used: “an injury requiring hospital admission within its own right” [21]. Such a broad definition may include various types of injuries in various settings. In our study we defined MEI as any injury with an AIS > 2 and thus the actual injuries considered as MEI in CRASH and in our study may differ.

The findings of this study should not be generalized without cautious considerations. Prognostic models should be seen as tools to predict outcomes in clusters of patients with certain characteristics and may thus aid clinical, research and policy work. The findings of this study inherit these characteristics and they should be interpreted and used in such context.

In conclusion, all the prognostic models we validated in this study possess good discriminative ability for prediction of six month outcome in patients with moderate or severe TBI but outcomes were systemically better than predicted. After adjustment for this under prediction in locally adapted models, these may well be used for recent TBI patients.

References

Maas AI, Stocchetti N, Bullock R: Moderate and severe traumatic brain injury in adults. Lancet Neurol. 2008, 7: 728-741. 10.1016/S1474-4422(08)70164-9.

Mushkudiani NA, Hukkelhoven CW, Hernandez AV, Murray GD, Choi SC, Maas AI, Steyerberg EW: A systematic review finds methodological improvements necessary for prognostic models in determining traumatic brain injury outcomes. J Clin Epidemiol. 2008, 61: 331-343. 10.1016/j.jclinepi.2007.06.011.

Steyerberg EW: Clinical Prediction Models: A Practical Approach to Development, Validation, and Updating. 2009, Springer, New York

Perel P, Edwards P, Wentz R, Roberts I: Systematic review of prognostic models in traumatic brain injury. BMC Med Inform Decis Mak. 2006, 6: 38-10.1186/1472-6947-6-38.

Maas AI, Marmarou A, Murray GD, Teasdale SG, Steyerberg EW: Prognosis and clinical trial design in traumatic brain injury: the IMPACT study. J Neurotrauma. 2007, 24: 232-238. 10.1089/neu.2006.0024.

Perel P, Arango M, Clayton T, Edwards P, Komolafe E, Poccock S, Roberts I, Shakur H, Steyerberg E, Yutthakasemsunt S: Predicting outcome after traumatic brain injury: practical prognostic models based on large cohort of international patients. BMJ. 2008, 336: 425-429. 10.1136/bmj.39461.643438.25.

Lingsma H, Andriessen TM, Haitsema I, Horn J, van der Naalt J, Franschman G, Maas AI, Vos PE, Steyerberg EW: Prognosis in moderate and severe traumatic brain injury: external validation of the IMPACT models and the role of extracranial injuries. Trauma Acute Care Surg. 2013, 74: 639-646. 10.1097/TA.0b013e31827d602e.

Panczykowski DM, Puccio AM, Scruggs BJ, Bauer JS, Hricik AJ, Beers SR, Okonkwo DO: Prospective independent validation of IMPACT modeling as a prognostic tool in severe traumatic brain injury. J Neurotrauma. 2012, 29: 47-52. 10.1089/neu.2010.1482.

Raj R, Siironen J, Kivisaari R, Hernesniemi J, Tanskanen P, Handolin L, Skrifvars MB: External validation of the international mission for prognosis and analysis of clinical trials model and the role of markers of coagulation. Neurosurgery. 2013, 73: 305-311. 10.1227/01.neu.0000430326.40763.ec.

Roozenbeek B, Lingsma HF, Lecky FE, Lu J, Weir J, Butcher I, McHugh GS, Murray GD, Perel P, Maas AI, Steyerberg EW: Prediction of outcome after moderate and severe traumatic brain injury: external validation of the International Mission on Prognosis and Analysis of Clinical Trials (IMPACT) and Corticoid Randomisation After Significant Head injury (CRASH) prognostic models. Crit Care Med. 2012, 40: 1609-1617. 10.1097/CCM.0b013e31824519ce.

Jacobs B, Beems T, van der Vliet TM, van Vugt AB, Hoedemaekers C, Horn J, Franschman G, Haitsma I, van der Naalt J, Andriessen TM, Borm GF, Vos PE: Outcome prediction in moderate and severe traumatic brain injury: a focus on computed tomography variables. Neurocrit Care. 2013, 19: 79-89. 10.1007/s12028-012-9795-9.

Copes WS, Champion HR, Sacco WJ, Lawnick MM, Gann DS, Gennarelli T, MacKenzie E, Schwaitzberg S: Progress in characterizing anatomic injury. J Trauma. 1990, 30: 1200-1207. 10.1097/00005373-199010000-00003.

Steyerberg EW, Mushkudiani N, Perel P, Butcher I, Lu J, McHugh GS, Murray GD, Marmarou A, Roberts I, Habbema JD, Maas AI: Predicting outcome after traumatic brain injury: development and international validation of prognostic scores based on admission characteristics. PLoS Med. 2008, 5: e165-10.1371/journal.pmed.0050165. discussion e165

Franschman G, Verburg N, Brens-Heldens V, Andriessen TM, Van der Naalt J, Peerdeman SM, Valk JP, Hoogerwerf N, Greuters S, Schober P, Vos PE, Christiaans HMT, Boer C: Effects of physician-based emergency medical service dispatch in severe traumatic brain injury on prehospital run time. Injury. 2012, 43: 1838-1842. 10.1016/j.injury.2012.05.020.

Robin X, Turck N, Hainard A, Tiberti N, Lisacek F, Sanchez JC, Muller M: pROC: an open-source package for R and S + to analyze and compare ROC curves. BMC Bioinformatics. 2011, 12: 77-10.1186/1471-2105-12-77.

Andriessen TM, Horn J, Franschman G, van der Naalt J, Haitsma I, Jacobs B, Steyerberg EW, Vos PE: Epidemiology, severity classification, and outcome of moderate and severe traumatic brain injury: a prospective multicenter study. J Neurotrauma. 2011, 28: 2019-2031. 10.1089/neu.2011.2034.

Roozenbeek B, Maas AI, Menon DK: Changing patterns in the epidemiology of traumatic brain injury. Nat Rev Neurol. 2013, 9: 231-236. 10.1038/nrneurol.2013.22.

Stein SC, Georgoff P, Meghan S, Mizra K, Sonnad SS: 150 years of treating severe traumatic brain injury: a systematic review of progress in mortality. J Neurotrauma. 2010, 27: 1343-1353. 10.1089/neu.2009.1206.

Maegele M: Coagulopathy after traumatic brain injury: incidence, pathogenesis, and treatment options. Transfusion. 2013, 53 (Suppl 1): 28S-37S. 10.1111/trf.12033.

Steyerberg EW, Borsboom GJ, van Houwelingen HC, Eijkemans MJ, Habbema JD: Validation and updating of predictive logistic regression models: a study on sample size and shrinkage. Stat Med. 2004, 23: 2567-2586. 10.1002/sim.1844.

CRASH Head Injury Prognostic Models: web based calculator. [], [http://www.crash.lshtm.ac.uk/Risk%20calculator/index.html]

Acknowledgements

We would like acknowledge the members of the “Austrian Working Group on Improvement of Early TBI Care” for their work in the design of the study and collection of data used for analyses in this paper. Specifically we would like to thank these institutions and individuals: International Neurotrauma Research Organisation (Alexandra Brazinova MD PhD MPH, Johannes Leitgeb MD), Amstetten hospital (Alexandra Frisch MD, Andreas Liedler MD, Harald Schmied MD); Feldkirch hospital (Reinhard Folie MD, Reinhard Germann MD PhD, Jürgen Graber RN ICU), Graz University hospital (Kristina Michaeli MD, Rene Florian Oswald MD, Andreas Waltensdorfer MD), Graz trauma hospital (Thomas Haidacher MD, Johannes Menner MD), Horn hospital (Anna Hüblauer MD, Elisabeth Nödl MD), Innsbruck University hospital (Christian Freund MD, Karl-Heinz Stadlbauer Prof. MD), Klagenfurt hospital (Michel Oher MD, Ernst Trampitsch MD, Annemarie Zechner MD), Linz hospital (Franz Gruber MD, Walter Mitterndorfer MD, Sandra Rathmaier MS), Linz trauma hospital (Norbert Bauer MD), Salzburg hospital (Peter Hohenauer MD), Salzburg trauma hospital (Josef Lanner MD, Wolfgang Voelckel Prof. MD), Sankt Poelten hospital (Claudia Mirth MD), Schwarzach hospital (Hubert Artmann MD, Bettina Cudrigh MD), Wiener Neustadt hospital (Daniel Csomor MD, Günther Herzer MD, Andreas Hofinger MD, Helmut Trimmel MD), Wien Meidling trauma hospital (Veit Lorenz MD, Heinz Steltzer Prof. MD), Wien „Lorenz Boehler“ trauma hospital.

The project was funded jointly by the Austrian Ministry of Health (Contract Oct. 15, 2008) and by the Austrian Worker’s Compensation Board (AUVA; FK 11/2008 and FK 11/2010). Data analysis was supported by a grant from AUVA (FK 09/13). INRO is supported by an annual grant from Mrs. Ala Auersperg-Isham and Mr. Ralph Isham, and by small donations from various sources. The work of Marek Majdan on this paper was supported by an institutional grant from the Trnava University, No. 12/TU/13. The validation study and the preparation of this paper has been partly done within the CENTER-TBI project that has received funding from the European Union Seventh Framework Programme (FP7/2007-2013) under grant agreement n°602150.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

MM participated in data collection, performed statistical analyses, drafted the manuscript and critically reviewed it. HL performed statistical analyse, has been involved in drafting the manuscript and critically reviewed it. DN performed statistical analyses, has been involved in drafting the manuscript and critically reviewed it. ES has been involved in drafting the manuscript, contributed to the concept of the statistical analyses and critically reviewed the manuscript. WM have been involved in drafting the manuscript, was PI for the study to collect the data used in the analyses and critically reviewed the manuscript. MR critically reviewed the manuscript. All authors read and approved the final manuscript.

Authors’ original submitted files for images

Below are the links to the authors’ original submitted files for images.

Rights and permissions

This article is published under an open access license. Please check the 'Copyright Information' section either on this page or in the PDF for details of this license and what re-use is permitted. If your intended use exceeds what is permitted by the license or if you are unable to locate the licence and re-use information, please contact the Rights and Permissions team.

About this article

Cite this article

Majdan, M., Lingsma, H.F., Nieboer, D. et al. Performance of IMPACT, CRASH and Nijmegen models in predicting six month outcome of patients with severe or moderate TBI: an external validation study. Scand J Trauma Resusc Emerg Med 22, 68 (2014). https://doi.org/10.1186/s13049-014-0068-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13049-014-0068-9