Abstract

Background

Shared decision-making (SDM) is preferred by many patients in cancer care. However, despite scientific evidence and promotion by health policy makers, SDM implementation in routine health care lags behind. This study aimed to evaluate an empirically and theoretically grounded implementation program for SDM in cancer care.

Methods

In a stepped wedge design, three departments of a comprehensive cancer center sequentially received the implementation program in a randomized order. It included six components: training for health care professionals (HCPs), individual coaching for physicians, patient activation intervention, patient information material/decision aids, revision of quality management documents, and reflection on multidisciplinary team meetings (MDTMs). Outcome evaluation comprised four measurement waves. The primary endpoint was patient-reported SDM uptake using the 9-item Shared Decision Making Questionnaire. Several secondary implementation outcomes were assessed. A mixed-methods process evaluation was conducted to evaluate reach and fidelity. Data were analyzed using mixed linear models, qualitative content analysis, and descriptive statistics.

Results

A total of 2,128 patient questionnaires, 559 questionnaires from 408 HCPs, 132 audio recordings of clinical encounters, and 842 case discussions from 66 MDTMs were evaluated. There was no statistically significant improvement in the primary endpoint SDM uptake. Patients in the intervention condition were more likely to experience shared or patient-led decision-making than in the control condition (d = 0.24). HCPs in the intervention condition reported more knowledge about SDM than in the control condition (d = 0.50). In MDTMs the quality of psychosocial information was lower in the intervention than in the control condition (d = −0.48). Further secondary outcomes did not differ statistically significantly between conditions. All components were implemented in all departments, but reach was limited (e.g., training of 44% of eligible HCPs) and several adaptations occurred (e.g., reduced dose of coaching).

Conclusions

The process evaluation provides possible explanations for the lack of statistically significant effects in the primary and most of the secondary outcomes. Low reach and adaptations, particularly in dose, may explain the results. Other or more intensive approaches are needed for successful department-wide implementation of SDM in routine cancer care. Further research is needed to understand factors influencing implementation of SDM in cancer care.

Trial registration

clinicaltrials.gov, NCT03393351, registered 8 January 2018.

Background

In cancer care, health care decisions often revolve around complex treatment options with various patterns of benefits and risks and with a substantial impact on the patient’s subsequent quality of life [1]. This makes it especially important to consider patients’ values and preferences during the decision-making process [2, 3]. Many patients with cancer prefer to be involved in medical decisions [4,5,6,7]. In shared decision-making (SDM), an important component of high-quality health care, patients and health care professionals (HCPs) build a team in the decision-making process by combining medical knowledge with personal preferences and values to find the option that best suits the patient’s individual situation [8,9,10]. Thus, SDM is an important pillar of both evidence-based medicine and patient-centered care [11, 12]. SDM is widely supported by ethical considerations [13] and by health policy makers [14, 15]. A range of patient- and clinician-mediated interventions to facilitate SDM have been evaluated in clinical trials, including SDM communication skills training for HCPs [16] and patient decision aids (PtDAs) [17]. However, translation into routine practice has repeatedly been found to be limited [4, 18,19,20,21]. This lack of implementation has been associated with patients’ decision regret as well as lower patient-reported quality of care and physician communication [7, 22].

In the past years, a range of SDM implementation efforts has been made. Some of these endeavors focused on the implementation of PtDAs as the main strategy to foster SDM implementation. Many of these studies did not explicitly ground their work in theoretical considerations [23], as recommended by implementation scientists [24, 25]. Several SDM implementation projects did include multiple strategies, e.g., the MAGIC (Making good decisions in collaboration) program in the UK [26] and an SDM implementation program in breast cancer care in the Netherlands [27]. In Germany, at the time of planning this study, no projects focusing on the implementation of SDM in routine clinical practice had been concluded [14, 28].

Building on the importance of using a theoretical underpinning in implementation projects [24, 25] and of conducting pre-implementation studies to understand the local context and its stakeholders’ perspectives on potential implementation strategies, we used the Consolidated Framework for Implementation Research (CFIR) [29] and developed a multi-component SDM implementation program for cancer care based on the results of a thorough pilot study. In this pilot study, we assessed the current state of SDM implementation and the needs of different stakeholders regarding SDM implementation at the same comprehensive cancer center that also participated in the implementation study reported here. The pilot study used a range of qualitative methods, including interviews, focus groups, and observational methods, and triangulated perspectives between different stakeholders and researchers. Detailed results are described elsewhere [18, 19, 30,31,32].

The aim of the present study was to evaluate this theoretically and empirically grounded multi-component program for implementation of SDM in routine cancer care.

Methods

Design

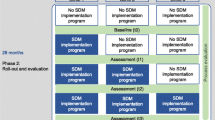

We used a stepped wedge design, a variant of the cluster randomized controlled trial, in which the participating clusters received the intervention in a randomized order. The SDM implementation program was sequentially introduced in each of the three participating departments in time intervals of 6 months, i.e., each department moved from control condition (prior to introduction of the implementation program) to intervention condition (exposure to implementation program). The findings are reported in accordance with relevant reporting guidelines [33, 34] (see Additional files 1 and 2). Methodological details have been described in a published study protocol [28].
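The allocation logic of such a design can be illustrated with a minimal sketch. It assumes the simplest stepped wedge pattern, in which every cluster starts in the control condition and one further cluster crosses over before each later measurement wave; the function name, the department labels, and the seed are hypothetical and not taken from the study.

```python
import random


def stepped_wedge_schedule(departments, n_waves, seed=None):
    """Return {department: [condition per wave]} for a simple stepped wedge.

    Assumption of this sketch: wave 1 is a common baseline (all control) and
    exactly one department crosses over before each subsequent wave.
    """
    if n_waves != len(departments) + 1:
        raise ValueError("this sketch assumes one crossover step per department")

    order = list(departments)
    random.Random(seed).shuffle(order)  # randomized order of crossover

    schedule = {}
    for step, dept in enumerate(order, start=1):
        # The department crossing over at this step is in the control
        # condition for waves 1..step and in the intervention afterwards.
        schedule[dept] = ["control"] * step + ["intervention"] * (n_waves - step)
    return schedule


# Hypothetical labels for three departments and four measurement waves.
schedule = stepped_wedge_schedule(["A", "B", "C"], n_waves=4, seed=1)
for dept, conditions in schedule.items():
    print(dept, conditions)
```

Under these assumptions, each department contributes both control and intervention observations, and the number of departments in the intervention condition increases by one per wave.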

Setting and participants

The study was conducted in three departments of a comprehensive cancer center within an academic hospital in Germany treating a wide range of cancer entities. Each department offers inpatient and outpatient care. We selected the departments due to their respective leadership’s high interest in the implementation of SDM identified in the pilot study, which is a known facilitator for SDM implementation [35]. The study team consisted of researchers with expertise in SDM interventions and implementation. Hospital administrators and health services managers were involved in the study in an advisory capacity (e.g., in a workshop meeting at the beginning of the study).

We aimed to include an unselected sample of patients who had a confirmed or suspected diagnosis of a neoplasm (ICD 10: C00-D49, excluding D10-D36), received health care at one of the participating departments, were 18 years old or older, and spoke German sufficiently. As it was not always possible to verify diagnosis and age at the time of recruitment, we decided to also include German-speaking patients with uncertainty regarding diagnosis or age who visited the cancer-specific in- and outpatient facilities at the departments during the data collection waves (see Additional file 3 for a list of changes from the study protocol). All physicians and nurses who were working at the departments at the time of the study were invited to participate.

Intervention

The multi-component SDM implementation program was based on theoretical considerations [29] and empirical findings from a preparatory pilot study [18, 19, 30,31,32]. It consisted of SDM training for HCPs (one group session per HCP), individual SDM coaching of physicians (two sessions per physician), a patient activation intervention (i.e., Ask 3 Questions, ASK3Q [36, 37]), provision of information material and decision aids for patients, revision of quality management documents (i.e., incorporation of SDM in the departments’ standard operating procedures), and reflection on multidisciplinary team meetings (MDTMs) [28]. Which findings of the pilot study informed which component of the implementation program has been described in the study protocol [28]. While most strategies focused on the individual level (patient, HCP), the last two strategies focused on the organizational level. Additionally, we developed a title in laypeople’s terms and a label for this study that we used on all documents and on pens specifically designed for this study. As suggested by Proctor et al. [38], actors, actions, targets of action, temporality, and dose of each implementation strategy were defined a priori [28].

The control condition was standard medical decision-making without the specific implementation program to foster SDM. Although patient-centeredness has a continuously increasing impact on the organization of health care in Germany [14], specific effort to implement SDM in routine practice is generally absent. Therefore, the control condition did not include any intentional or direct SDM implementation efforts.

Outcome evaluation

Measures and outcomes

Implementation outcomes [39] were collected from four sources: a standardized survey of patients, a standardized survey of HCPs, rating of audio-recorded clinical encounters, and systematic observation of MDTMs.

The primary outcome was uptake of SDM assessed by the 9-item Shared Decision Making Questionnaire (SDM-Q-9), a patient-reported measure of the SDM process in patient-physician encounters [40]. Secondary patient-reported outcomes included the uptake of SDM using the 3-item collaboRATE measure [41,42,43], a single-item measure of the experienced decision control during the rated clinical encounter (adapted Control Preference Scale, CPS) [44,45,46], and a single-item measure of patient satisfaction.

HCP-rated measures were single items for self-assessed knowledge and use of SDM, a single-item measure of general preference for decision control in clinical encounters (adapted CPS [44]), the 8-item IcanSDM measure assessing perceived barriers of SDM implementation [47, 48] as an indicator of appropriateness of SDM, the 10-item Organizational Readiness for Implementing Change (ORIC) scale [49, 50], and six single-item assessments of acceptability of SDM adapted from McColl’s questionnaire on attitudes towards evidence-based practice [51] and the Evidence-Based Practice Attitude Scale (EBPAS) [52], and derived from results of the pilot study.

Outcomes for the audio-recorded clinical encounters were the uptake of SDM as measured by the Observer OPTION5 tool [53,54,55] and patient-rated single-item assessments of the experienced decision control in the rated encounter and the general preference for decision control in clinical encounters (adapted CPS [44]).

As indicators for penetration of SDM in MDTMs, for each case discussed in the MDTMs, observer-rated outcomes were the quality of information on patient view, the quality of psychosocial information, and the number of recommendations given, as measured by an adapted version of the Metric for the Observation of Decision Making in Multidisciplinary Team Meetings (MDT-MODe) [32, 56].

Most of these measures had been defined prior to starting the study [28] and all of them were specified prior to data analysis (for deviations from the study protocol regarding the outcome measures see Additional file 3). All surveys included assessment of demographic and clinical or professional information, respectively. Patients’ global health was assessed using a single item derived from the Short-Form-Health Survey (SF-12) [57] and their distress was assessed using the German version of the NCCN Distress Thermometer [58].

Sample size considerations

In order to be able to identify a small to moderate effect (Cohen’s d of 0.3) of the implementation program on the patients’ experience of SDM, we aimed to collect data from 1440 patients [28]. The target sample size of HCPs was not fixed a priori (complete sampling). Additionally, we aimed to analyze the audio recordings of 144 clinical encounters (12 encounters × 3 departments × 4 measurement waves) and to observe 64 MDTMs (4 types of MDTMs × 4 meetings × 4 measurement waves).

Data collection

Data collection was planned at four measurement waves with a 2-month duration each. Some deviations from the study protocol occurred due to insufficient recruitment and, regarding the fourth measurement wave, the pandemic of the severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) (see Additional file 3). Hence, data were collected from baseline to month 2, months 9 to 10.5, months 17 to 18.5, and months 25 to 30 (including 10 weeks recruitment stop due to the pandemic).

Patients were approached by members of the study team in the waiting areas of outpatient clinics and on the wards for inpatients. They were transparently informed about the purpose of the study and gave written informed consent before participation in the paper and pencil survey and/or the audio recording of the clinical encounter with the physician. They were asked to fill out questionnaires anonymously after the clinical encounter. HCPs were approached in team meetings or via mail with the request to participate in the anonymous paper and pencil HCP survey. The ID for matching questionnaires from the same HCP at different measurement waves was created by the HCP and not decipherable by the study team. MDTMs were sampled from the four types of MDTMs for which the participating departments were responsible. MDTMs and consultations for audio recordings were selected unsystematically according to availability of staff resources during the measurement waves.

Data analysis

We used guidelines developed by the study team for data entry and quality control. Quality of quantitative data was checked by partial double entry and calculation of agreement rates. Quality of transcripts of audio recordings was examined through proofreading by a second member of the study team. For the evaluation of audio recordings members of the study team were trained in the use of the Observer OPTION5 measure by the principal investigator (IS), experienced in the method. Raters were blinded to the allocation of audio recordings regarding control and intervention condition.

For the outcome evaluation, an analysis plan was prepared and reported in the study protocol [28]. Analyses were performed consistently with the same statistical procedure for all outcomes, using linear mixed models for continuous and generalized linear mixed models for dichotomous outcomes [59]. All models included a fixed effect for the intervention, a linear fixed effect for the measurement time point (wave), and a random intercept for department differences [60]. All available cases were analyzed. Covariates were added to model deviations from the study protocol and trial registration, i.e., for returning questionnaires more than 14 days after the end of the respective measurement wave, for rating clinical encounters that took place more than 90 days before the survey, for underage patients, and for the patient’s diagnosis not being confirmed or suspected malignant neoplasm. Additional covariates whose distribution was imbalanced between the intervention and control condition were included as necessary in order to control confounding.
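For orientation, the model structure described above can be written out as follows. The notation is an illustrative assumption and does not appear in the study protocol: i indexes participants, j departments, and k measurement waves; X_{jk} equals 1 if department j is in the intervention condition at wave k and 0 otherwise; t_k is the wave number; z_{ijk} collects the covariates.

```latex
\[
Y_{ijk} = \beta_0 + \beta_1 X_{jk} + \beta_2 t_k + \gamma^{\top} z_{ijk} + u_j + \varepsilon_{ijk},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2), \quad
\varepsilon_{ijk} \sim \mathcal{N}(0, \sigma_\varepsilon^2)
\]
```

In this notation, \beta_1 is the intervention effect and u_j is the random intercept for department j. For dichotomous outcomes, the same linear predictor would enter a generalized linear mixed model through a link function, presumably the logit, given that results are reported as odds.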

The last measurement wave of the study was impacted by the SARS-CoV-2 pandemic. We handled this incident in two ways [61, 62]. In the main analyses, an additional covariate was included to indicate measurement under pandemic conditions. In the per-protocol sensitivity analysis (see below), we considered data collected during the pandemic as missing. Estimated marginal means were calculated for the per-protocol population, i.e., participants fulfilling all per-protocol criteria and being surveyed under non-pandemic conditions.

We conducted several sensitivity analyses for the most important outcomes to test the robustness of the intervention effect estimate. In a “full-covariate” analysis, we fitted models including additional covariates to further minimize baseline imbalance. In a “categorical time” analysis, we allowed the wave effect to be non-linear. In a “multiplicative effect” analysis, we tested whether including the interaction between intervention and wave influenced the results. In a “heterogeneous effects” analysis, we included a department-level random slope for the intervention effect. In a “repeated measures” analysis, we took into account that a minority of the HCPs answered the survey more than once, leading to dependencies in the data. Finally, in a “per-protocol” analysis, we included only data that were collected in full accordance with the trial registration.

We calculated standardized mean differences (Cohen’s d) by dividing the estimated mean difference between groups by the pooled observed standard deviation for continuous data [63] and by using approximations from the odds ratio in case of dichotomous data [64]. Findings with P < .05 were considered statistically significant. As the analyses of the secondary outcomes were not adjusted for multiple testing, these findings should be considered exploratory. All statistical analyses were conducted with IBM SPSS Statistics 25 (IBM Corp, Armonk, NY).
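The two effect size conversions can be sketched as follows. The text does not state which odds ratio approximation was applied; this sketch assumes the widely used logit method, d = ln(OR) × √3 / π, and the function names are hypothetical. The odds ratios in the usage lines are inferred from the "55% higher odds" and "58% lower odds" reported in the Results.

```python
import math


def d_from_mean_difference(mean_difference, pooled_sd):
    """Cohen's d for continuous outcomes: mean difference over pooled SD."""
    return mean_difference / pooled_sd


def d_from_odds_ratio(odds_ratio):
    """Approximate Cohen's d from an odds ratio.

    Assumption: the logit method, which rescales the log odds ratio by the
    standard deviation of the standard logistic distribution (pi / sqrt(3)).
    """
    return math.log(odds_ratio) * math.sqrt(3) / math.pi


# Odds ratios inferred from the Results: 55% higher odds -> 1.55,
# 58% lower odds -> 0.42.
print(round(d_from_odds_ratio(1.55), 2))  # 0.24
print(round(d_from_odds_ratio(0.42), 2))  # -0.48
```

Under this assumption, the converted values agree with the effect sizes reported for experienced decision control and for the MDTM outcome.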

Process evaluation

We assessed quantitative implementation process indicators (including reach) as well as fidelity and adaptations of the implementation strategies by systematic documentation on how the different strategies were implemented. Process evaluation was used to address necessary adaptations of the program throughout the study and to support the interpretation of the outcome evaluation results. Descriptive statistics were calculated to describe quantitative implementation process indicators and to assess reach of the different implementation strategies. Fidelity and adaptations were evaluated qualitatively. The following datasets were analyzed: (a) structured field notes of observations made by the study team, (b) minutes of meetings with clinical partners, and (c) transcripts of process interviews with HCPs. Qualitative data analysis, using primarily a deductive approach, was conducted: First, a coding scheme was created by one researcher (HC). Second, approximately 25–30% of the material was coded (HC). Third, the coding scheme was discussed and revised within the study team (HC, PH, IS). Fourth, coded material was revised and the remaining material was coded (HC). Fifth and last, results were discussed in the study team (HC, PH, IS) and final revisions were made (HC).

Results

Outcome evaluation

Sample characteristics

The case flow throughout the study is depicted in Figure 1. Return rates for analyzable patient surveys were 2128 of 4224 invited patients (50.4%). Most frequently voiced reasons for patients’ non-participation in the survey were prior participation in the study (n = 459), physical or psychological burden (n = 225), or no interest in the study (n = 183). A further 809 patients did not voice a reason for non-participation. Of 1186 potential HCP surveys, 559 were returned and included in data analyses (47.1%). Of 161 invited patients, 146 contributed to the study by allowing their clinical encounter with a physician to be audio-recorded. Of these, 14 cases were not applicable for OPTION5 observer rating (i.e., both external raters appraised them as not applicable). This led to a return rate of analyzable audio recordings of 132 of 161 (82.0%).

On average, the 2128 surveyed patients (61.4% female, mean age 57.0 years) rated their level of subjective health and their level of distress as moderate. Most of them had a confirmed cancer diagnosis for less than five years. They visited the department mostly due to diagnostic, treatment-related, or monitoring reasons in approximately equal shares. About two-thirds reported that they were consulting their HCP about a treatment-related decision. Notable differences between intervention and control conditions were identified regarding gender, time since the first diagnosis, reason for visit, and the topic of decision. Table 1 gives a detailed overview on sample characteristics of surveyed patients.

Among the 559 HCP responses (71.8% female, 67.9% 40 years old or younger), slightly more were from nurses compared to physicians. About 60% of HCPs had five years or more experience in oncological care, and the vast majority treated oncological patients at the time of the study. The responses were given by 408 different individuals, with 300 of them (73.5%) only participating once in the survey. No substantial differences were identified between the intervention and the control condition. For details on characteristics of surveyed HCPs, see Table 2.

Audio recordings of 132 patients (56.5% female, mean age 58.6 years) were analyzed. The majority had a confirmed cancer diagnosis, which they received mostly less than five years ago. Notable group differences existed regarding gender, occupational status, diagnosis, time since first diagnosis, and appropriateness of the clinical encounter for SDM ratings as judged by external raters. Details on this sample are reported in Table 3.

Sixty-six MDTMs with a total of 842 discussed cases were observed. This resulted in 425 cases in 38 meetings in the intervention condition, and 417 cases in 28 meetings in the control condition.

Across all data sources, approximately 20 to 25% of the data were collected during the SARS-CoV-2 pandemic. The pandemic situation began during the fourth (final) measurement wave of this study. At this point, all implementation intervals were completed, i.e., all three departments had moved to the intervention condition. Due to the stepwise implementation of the intervention, about 10% of the post-intervention patient data from department 1, about 40% of the post-intervention patient data from department 2, and about 90% of the post-intervention patient data from department 3 were collected during the pandemic situation.

Results of the outcome evaluation

We did not find a statistically significant difference between the control and intervention condition regarding the primary outcome, i.e., uptake of SDM from the patients’ perspective (as measured by the SDM-Q-9, Table 4).

Most secondary outcomes did not show a statistically significant difference between the groups either (Tables 4 and 5). Regarding the experienced decision control, patients in the intervention condition reported 55% higher odds of having had a shared or patient-led rather than a physician-led decision compared to the control condition (P = .017, d = 0.24; Table 5). HCPs in the intervention condition reported statistically significantly higher self-assessed knowledge about SDM (estimated difference 1.58 points on a 0 to 10 visual analog scale, P = .002, d = 0.50; Table 4). A detrimental effect was identified in terms of penetration of SDM in MDTMs: 58% lower odds of including the patient’s view appropriately in the MDTM case discussion were found in the intervention condition compared to the control condition (P = .020, d = −0.48; Table 5). The sensitivity analyses largely confirmed these findings (Additional file 4).

Intraclass correlations were below 5% in most cases, with the largest between-department variations regarding organizational readiness for implementing change, observer-assessed uptake of SDM, and patient-reported decision control (Tables 4 and 5).

Process evaluation

296 pages of field note documentation, minutes of 39 meetings, and 107 process interviews with 126 participants were analyzed.

Reach was calculated for two of the implementation strategies: SDM trainings for HCPs and individual coaching for physicians. Overall, 173 of 392 eligible HCPs (44%) participated in an SDM training. Fewer eligible nurses participated in comparison to eligible physicians (41% nurses, 52% physicians). Across all three departments, 57 of 118 eligible physicians (48%) participated in at least one coaching session, and 37 of 118 (31%) participated in both coaching sessions. There was considerable variation in participation rates between departments, especially for SDM training (range: 35 to 73%).

Over the course of the three implementation intervals, 2709 postcards of the patient activation intervention ASK3Q, 762 information brochures “Patienten und Ärzte als Partner” (English: patients and physicians as partners) [65], and 370 generic decision aids [66, 67] were distributed to the departments. Furthermore, 136 ASK3Q posters were hung. For more detailed information on reach and implementation indicators, see Additional file 5.

Concerning fidelity, several adaptations were made regarding the different implementation strategies. Most adaptations concerned dose and temporality of the implementation strategies. For example, the SDM team trainings for HCPs lasted on average 50 minutes instead of two hours as planned a priori. Also, some coaching sessions took place without prior training of physicians. Table 6 gives an overview on how the strategies were originally planned in the study protocol [28] and which adaptations were made.

Discussion

In this large-scale study, the results on the primary and most secondary outcomes imply that the introduction of a multi-component program did not lead to more SDM implementation in the intervention condition compared to the control condition. Limited positive effects were found on few secondary outcomes, including increased knowledge of SDM among HCPs. The process evaluation showed limited reach, and considerable adaptations of some of the implementation strategies were required.

Results from a pilot study formed the empirical basis of the implementation program. The pilot study led to a pre-selection of participating departments based on their head physicians being open to SDM and to the implementation study. The thorough prior analysis of the current state allowed the study team to get familiar with the respective setting and local barriers for SDM implementation before the beginning of the implementation trial. Needs identified during the pilot study were largely incorporated in the implementation program [28]. However, some aspects could not be considered as intended. Interdisciplinary training and facilitating team communication were planned but could be accomplished to a limited extent only [19, 31]. Also, the implementation program had a focus on HCPs. Patient empowerment training, tailored patient decision aids, and the establishment of a patient advocate were not included in the implementation program [31].

According to the National Cancer Institute [68] and the Consolidated Framework for Implementation Research [29], certain changes to core components of implementation strategies, e.g., reduction of dosage, which was necessary for several of the strategies in this study, have to be considered “red light changes” that should be avoided. While we deemed those adaptations necessary to fit the local context (due to limited available resources in the departments), they might have undermined the effectiveness of the program. In retrospect, the study might have benefitted from more rigorous and critical discussion of the possible advantages and disadvantages of adaptations. In this context, it is important to discuss the aspect of stakeholder engagement within the participating departments. Implementation science recommends reflecting a priori on capacities and resources within an organization [68]. This was not a focus of this study and might have led to implementation strategies that did not match the existing capacities. Thus, it was difficult for the core implementation team to balance fidelity and adaptations, leading to these potentially critical changes. The process evaluation also showed limited reach, suggesting that even a program with lower dose than initially planned was difficult to implement in the participating departments. Future implementation studies might benefit from more detailed a priori planning of resource allocation together with clinical leaders. Furthermore, despite controversial views, financial reimbursement for SDM or payment models incentivizing SDM might ease resource allocation to foster SDM implementation [35, 69].

We also have to reflect critically on whether the implementation strategies sufficiently addressed the attitudes and beliefs of HCPs [68], which have been found to be a key factor in SDM implementation in a multicenter study from the UK [26]. The implementation program had an effect on HCPs’ SDM knowledge, but not on their attitudes towards SDM. Even though attitudes towards SDM were found to be relatively positive, there was considerable variation between HCPs. Also, positive individual attitudes reported in a survey might not suffice to implement SDM behaviors in routine care. Future research could incorporate stakeholder engagement and participatory research (e.g., co-design of implementation strategies) [70] as well as performance feedback (e.g., direct patient-reported feedback for HCPs regarding SDM) [71] as potential means to foster the translation into routine SDM behavior.

Regarding patient decision aids and information material, developing new decision aids was not part of this trial, in contrast to other implementation studies or studies with hybrid effectiveness-implementation designs [72] (e.g., [73]). When systematically screening for evidence-based decision aids in German language for cancer-related decisions within this trial, the lack of such material became apparent. Instead, we distributed a generic patient decision aid [66, 67]. Thus, this strategy probably did not reach its full potential. Furthermore, while we were able to document the amount of material distributed, we were not able to assess the reach of this strategy or of the ASK3Q strategy.

Despite being seen as important in the pre-implementation study [30,31,32], our implementation strategy targeting MDTMs did not lead to structural changes on the organizational level. Limited capacities, resources, and stakeholder engagement within the departments that prioritize such changes might explain the lack of effects on MDTMs. Yet, in light of recent literature on barriers to SDM implementation [36, 69], it can be assumed that SDM implementation at the department level is not possible without a range of organizational changes that eventually, together with changes on the individual level, lead to a culture or paradigm shift. The revision of quality management documents alone was most probably not enough. Organizational changes could include mandatory documentation of psychosocial patient information and patient preferences in the electronic medical record as well as standardized integration of these aspects in MDTMs. While a range of strategies to foster SDM on the organizational level have been suggested in the literature [35], the lack of evidence of their effectiveness limits leverage to convince the highest level leadership to support such changes. A long-term US implementation project has shown that developing an organizational culture receptive to SDM uptake can take years [74]. Thus, the length of our program, limited by the total funding period of three years, could have been too short. Also, factors that have been found to influence SDM implementation at the level of health systems (e.g., payment models, medical education [35]) cannot be changed by an implementation program like ours.

Although we did not find convincing evidence of an average positive effect of the implementation program in the whole study population, it is possible that considerable positive (or negative) effects are present in certain subgroups (e.g., physicians vs. nurses), contexts, or settings. The distal evaluation of effects at the department level (instead of individual HCP level) was not designed to detect potential changes of SDM behavior of individual HCPs. In further analyses, we will explore whether a heterogeneity of effects exists and, if so, how it can be explained [75].

The results of this study can be compared to several other very recent SDM implementation studies. Another German study evaluating a large-scale SDM implementation program found statistically significant effects for their multi-component implementation program in an interim analysis with data from a single department [76]. This trial used an uncontrolled before-and-after design and a different primary outcome measure to assess uptake of SDM [77]. The difference in results could be attributed to a much higher reach, with over 90% of physicians from that department participating in an SDM training. It can also be explained by the strong involvement of the clinical team in the creation of new decision aids for their department and the temporary allocation of workforce resources to the study [76]. An SDM implementation trial for breast cancer care in the Netherlands found effects on observer-assessed SDM (assessed with OPTION5), but not on patient-assessed SDM (assessed with the SDM-Q-9) [27]. This study used an unpaired before-and-after study design and only included patients with breast cancer facing a treatment decision. Furthermore, the Dutch implementation program was co-designed with each participating clinic, allowing for major adaptations regarding focus and content of the implementation efforts [27]. This might have increased stakeholder engagement. Comparing these recent implementation studies might yield valuable insights into what works and what does not work regarding the implementation of SDM in routine care.

Strengths and limitations

A central strength of this trial is its high ecological validity. We managed to investigate a largely unselected population of patients, HCPs, clinical encounters, and MDTMs, which were representative of routine care in three departments of a German comprehensive cancer center. The study was informed by a pre-implementation pilot study and theoretically grounded in a conceptual framework. Also, the thorough execution of the study protocol including an extensive process evaluation is a major strength of this trial. Its further advantages include its large sample size and statistical power, the investigation of a wide range of outcomes from several perspectives, and a careful examination of the robustness of the findings.

As this study was a single-center trial, caution is necessary regarding generalizability of its results. Furthermore, only three clusters were included, which might limit the applicability of the stepped wedge cluster randomized design. Additionally, while the study was carried out according to schedule until the last measurement wave, the SARS-CoV-2 pandemic led to a delay in completing the final measurement wave. The pandemic situation had a considerable impact on routine healthcare (e.g., more remote consultations, restrictions to MDTMs, time constraints of HCPs [78, 79]) and might have impacted several of our implementation strategies (e.g., no training to apply SDM in remote consultations). Hence, the pandemic situation was incorporated as a covariate in the data analyses.

Conclusion

In the present study, we did not find a statistically significant increase in the average level of SDM as perceived by the patients by applying an empirically and theoretically grounded multi-component implementation program to foster SDM in cancer care. Limited reach and considerable adaptations might explain the lack of change. As prior work suggested [23], there “are many miles to go” to fully implement SDM in routine practice. Future work should investigate other or more intensive approaches for successful department-wide implementation of SDM in routine cancer care and further assess factors influencing implementation of SDM in cancer care.

Availability of data and materials

Deidentified data that support the findings of this study are available on reasonable request. Investigators who propose to use the data have to provide a methodologically sound proposal directed to the corresponding author. Signing a data use/sharing agreement will be necessary, and data security regulations both in Germany and in the country of the investigator who proposes to use the data must be complied with. Preparing the data set for use by other investigators requires substantial work and is thus linked to available or provided resources.

Change history

14 May 2022

The original publication was missing a funding note declaring funding enabled by Projekt DEAL.

Abbreviations

- AC: Anja Coym
- AL: Anja Lindig
- ASK3Q: Ask 3 Questions
- BS: Barbara Schmalfeldt
- CB: Carsten Bokemeyer
- CFIR: Consolidated Framework for Implementation Research
- CPS: Control Preference Scale
- EBPAS: Evidence-Based Practice Attitude Scale
- HC: Hannah Cords
- HCPs: Health care professionals
- IS: Isabelle Scholl
- IW: Isabell Witzel
- JZ: Jördis Zill
- LK: Levente Kriston
- MAGIC: Making good decisions in collaboration
- MDT-MODe: Metric of the Observation of Decision Making in Multidisciplinary Team Meetings
- MDTMs: Multidisciplinary team meetings
- MH: Martin Härter
- ORIC: Organizational Readiness for Implementing Change
- PH: Pola Hahlweg
- PtDAs: Patient decision aids
- RS: Ralf Smeets
- SARS-CoV-2: Severe acute respiratory syndrome coronavirus 2
- SDM: Shared decision-making
- SDM-Q-9: 9-item Shared Decision Making Questionnaire
- TV: Tobias Vollkommer
- WF: Wiebke Frerichs

References

Politi MC, Studts JL, Hayslip JW. Shared decision making in oncology practice: what do oncologists need to know? Oncologist. 2012;17(1):91–100.

Whitney SN. A new model of medical decisions: exploring the limits of shared decision making. Med Decis Mak. 2003;23(4):275–80.

Mulley AG, Trimble C, Elwyn G. Stop the silent misdiagnosis: patients’ preferences matter. BMJ. 2012;345. https://doi.org/10.1136/bmj.e6572.

Hahlweg P, Kriston L, Scholl I, Brähler E, Faller H, Schulz H, et al. Cancer patients’ preferred and perceived level of involvement in treatment decision-making: an epidemiological study. Acta Oncol. 2020;59(8):967–74.

Chewning B, Bylund CL, Shah B, Arora NK, Gueguen JA, Makoul G. Patient preferences for shared decisions: a systematic review. Patient Educ Couns. 2012;86(1):9–18.

Schuler M, Schildmann J, Trautmann F, Hentschel L, Hornemann B, Rentsch A, et al. Cancer patients’ control preferences in decision making and associations with patient-reported outcomes: a prospective study in an outpatient cancer center. Support Care Cancer. 2017;25(9):2753–60.

Kehl KL, Landrum M, Arora NK, Ganz PA, van Ryn M, Mack JW, et al. Association of actual and preferred decision roles with patient-reported quality of care: shared decision making in cancer care. JAMA Oncol. 2015;1(1):50–8.

Charles C, Gafni A, Whelan TJ. Shared decision-making in the medical encounter: what does it mean? (or it takes at least two to tango). Soc Sci Med. 1997;44(5):681–92.

Makoul G, Clayman ML. An integrative model of shared decision making in medical encounters. Patient Educ Couns. 2006;60(3):301–12.

Elwyn G, Durand M-A, Song J, Aarts J, Barr PJ, Berger ZD, et al. A three-talk model for shared decision making: multistage consultation process. BMJ. 2017;359:j4891.

Hoffmann TC, Montori VM, Del Mar C. The connection between evidence-based medicine and shared decision making. JAMA. 2014;312(13):1295–6.

Barry MJ, Edgman-Levitan S. Shared decision making - the pinnacle of patient-centered care. N Engl J Med. 2012;366(9):780–1.

Salzburg Global Seminar. Salzburg statement on shared decision making. BMJ. 2011;342:d1745.

Härter M, Dirmaier J, Scholl I, Donner-Banzhoff N, Dierks ML, Eich W, et al. The long way of implementing patient-centered care and shared decision making in Germany. Z Evid Fortbild Qual Gesundhwes. 2017;123–124:46–51.

Härter M, Moumjid N, Cornuz J, Elwyn G, van der Weijden T. Shared decision making in 2017: international accomplishments in policy, research and implementation. Z Evid Fortbild Qual Gesundhwes. 2017;123–124:1–5.

Müller E, Strukava A, Scholl I, Härter M, Diouf NT, Légaré F, et al. Strategies to evaluate healthcare provider trainings in shared decision-making (SDM): a systematic review of evaluation studies. BMJ Open. 2019;9(6):e026488.

Stacey D, Légaré F, Lewis K, Barry MJ, Bennett CL, Eden KB, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2017;4:CD001431.

Hahlweg P, Härter M, Nestoriuc Y, Scholl I. How are decisions made in cancer care? A qualitative study using participant observation of current practice. BMJ Open. 2017;7(9):e016360.

Frerichs W, Hahlweg P, Müller E, Adis C, Scholl I. Shared decision-making in oncology – a qualitative analysis of healthcare providers’ views on current practice. PLoS One. 2016;11(3):e0149789.

Tariman JD, Berry DL, Cochrane B, Doorenbos A, Schepp K. Preferred and actual participation roles during health care decision making in persons with cancer: a systematic review. Ann Oncol. 2010;21(6):1145–51.

Kunneman M, Engelhardt EG, Ten Hove FL, Marijnen CA, Portielje JE, Smets EM, et al. Deciding about (neo-)adjuvant rectal and breast cancer treatment: missed opportunities for shared decision making. Acta Oncol. 2015;3:1–6.

Nicolai J, Buchholz A, Seefried N, Reuter K, Härter M, Eich W, et al. When do cancer patients regret their treatment decision? A path analysis of the influence of clinicians’ communication styles and the match of decision-making styles on decision regret. Patient Educ Couns. 2016;99(5):739–46.

Elwyn G, Scholl I, Tietbohl C, Mann M, Edwards AGK, Clay C, et al. “Many miles to go...”: a systematic review of the implementation of patient decision support interventions into routine clinical practice. BMC Med Inform Decis Mak. 2013;13(Suppl. 2):14.

Grol RP, Bosch MC, Hulscher ME, Eccles MP, Wensing M. Planning and studying improvement in patient care: the use of theoretical perspectives. Milbank Q. 2007;85(1):93–138.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

Joseph-Williams N, Lloyd A, Edwards A, Stobbart L, Tomson D, Macphail S, et al. Implementing shared decision making in the NHS: lessons from the MAGIC programme. BMJ. 2017;357:j1744.

van Veenendaal H, Voogdt-Pruis H, Ubbink DT, Hilders CGJM. Effect of a multilevel implementation programme on shared decision-making in breast cancer care. BJS Open. 2021;5(2):zraa002.

Scholl I, Hahlweg P, Lindig A, Bokemeyer C, Coym A, Hanken H, et al. Evaluation of a program for routine implementation of shared decision-making in cancer care: study protocol of a stepped wedge cluster randomized trial. Implement Sci. 2018;13(51):1–10.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Hahlweg P, Hoffmann J, Härter M, Frosch DL, Elwyn G, Scholl I. In absentia: an exploratory study of how patients are considered in multidisciplinary cancer team meetings. PLoS One. 2015;10(10):e0139921.

Müller E, Hahlweg P, Scholl I. What do stakeholders need to implement shared decision making in routine cancer care? A qualitative needs assessment. Acta Oncol. 2016;55(12):1484–91.

Hahlweg P, Didi S, Kriston L, Härter M, Nestoriuc Y, Scholl I. Process quality of decision-making in multidisciplinary cancer team meetings: a structured observational study. BMC Cancer. 2017;17(1):772.

Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, et al. Standards for Reporting Implementation Studies (StaRI) statement. BMJ. 2017;356:i6795.

Hemming K, Taljaard M, McKenzie JE, Hooper R, Copas A, Thompson JA, et al. Reporting of stepped wedge cluster randomised trials: extension of the CONSORT 2010 statement with explanation and elaboration. BMJ. 2018;363:1614.

Scholl I, LaRussa A, Hahlweg P, Kobrin S, Elwyn G. Organizational- and system-level characteristics that influence implementation of shared decision-making and strategies to address them - a scoping review. Implement Sci. 2018;13(1):40.

Lindig A, Hahlweg P, Frerichs W, Topf C, Reemts M, Scholl I. Adaptation and qualitative evaluation of Ask 3 Questions - a simple and generic intervention to foster patient empowerment. Health Expect. 2020;23:1310–25.

Shepherd HL, Barratt A, Trevena LJ, McGeechan K, Carey K, Epstein RM, et al. Three questions that patients can ask to improve the quality of information physicians give about treatment options: a cross-over trial. Patient Educ Couns. 2011;84(3):379–85.

Proctor EK, Powell B, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139.

Proctor EK, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Admin Pol Ment Health. 2011;38(2):65–76.

Kriston L, Scholl I, Hölzel L, Simon D, Loh A, Härter M. The 9-item Shared Decision Making Questionnaire (SDM-Q-9). Development and psychometric properties in a primary care sample. Patient Educ Couns. 2010;80(1):94–9.

Elwyn G, Barr PJ, Grande SW, Thompson R, Walsh T, Ozanne EM. Developing CollaboRATE: a fast and frugal patient-reported measure of shared decision making in clinical encounters. Patient Educ Couns. 2013;93(1):102–7.

Barr PJ, Thompson R, Walsh T, Grande SW, Ozanne EM, Elwyn G. The psychometric properties of CollaboRATE: a fast and frugal patient-reported measure of the shared decision-making process. J Med Internet Res. 2014;16(1):e2.

Hahlweg P, Zeh S, Tillenburg N, Scholl I, Zill J, Barr P, et al. Translation and psychometric evaluation of collaboRATE in Germany – a 3-item patient-reported measure of shared decision-making. In: 10th International Shared Decision-Making Conference. Quebec City: Laval University; 2019.

Degner LF, Sloan JA, Venkatesh P. The control preferences scale. Can J Nurs Res. 1997;29(3):21–43.

Rothenbacher D, Lutz MP, Porzsolt F. Treatment decisions in palliative cancer care: patients’ preferences for involvement and doctors’ knowledge about it. Eur J Cancer Part A. 1997;33(8):1184–9.

Giersdorf N, Loh A, Härter M. Quantitative measurement of shared decision-making [German: Quantitative Messverfahren des Shared Decision-Making]. In: Scheibler F, Pfaff H, editors. Shared Decision-Making: The patient as a partner in the medical decision-making process [German: Shared Decision-Making: Der Patient als Partner im medizinischen Entscheidungsprozess]. Munich: Juventa; 2003. p. 69–85.

Giguere AM, Bogza L-M, Coudert L, Carmichael P-H, Renaud J-S, Légaré F, et al. Development of the IcanSDM scale to assess primary care clinicians’ ability to adopt shared decision making. medRxiv. 2020. https://doi.org/10.1101/2020.07.01.20144204.

Lindig A, Hahlweg P, Christalle E, Giguere A, Härter M, Knesebeck O, et al. Translation and psychometric evaluation of the German version of the IcanSDM measure– a cross-sectional study among healthcare professionals. BMC Health Serv Res. 2021;21(1):541.

Shea CM, Jacobs SR, Esserman DA, Bruce K, Weiner BJ. Organizational readiness for implementing change: a psychometric assessment of a new measure. Implement Sci. 2014;9:7.

Lindig A, Hahlweg P, Christalle E, Scholl I. Translation and psychometric evaluation of the German version of the Organizational Readiness for Implementing Change measure (ORIC) – a cross-sectional study. BMJ Open. 2020;10(6):e034380.

McColl A, Smith H, White P, Field J. General practitioners’ perceptions of the route to evidence based medicine: a questionnaire survey. BMJ. 1998;316(7128):361–5.

Aarons GA, Cafri G, Lugo L, Sawitzky A. Expanding the domains of attitudes towards evidence-based practice: the evidence based practice attitude scale-50. Adm Policy Ment Health Ment Health Serv Res. 2012;39(5):331–40.

Elwyn G, Tsulukidze M, Edwards A, Légaré F, Newcombe R. Using a “talk” model of shared decision making to propose an observation-based measure: Observer OPTION 5 Item. Patient Educ Couns. 2013;93(2):265–71.

Barr PJ, O’Malley AJ, Tsulukidze M, Gionfriddo MR, Montori V, Elwyn G. The psychometric properties of Observer OPTION (5), an observer measure of shared decision making. Patient Educ Couns. 2015;98(8):970–6.

Kölker M, Topp J, Elwyn G, Härter M, Scholl I. Psychometric properties of the German version of Observer OPTION5. BMC Health Serv Res. 2018;18(1):74.

Lamb B, Sevdalis N, Benn J, Vincent C, Green JSA. Multidisciplinary cancer team meeting structure and treatment decisions: a prospective correlational study. Ann Surg Oncol. 2013;20(3):715–22.

Wirtz MA, Morfeld M, Glaesmer H, Brähler E. Evaluation of norm values of the SF-12 version 2.0 to measure health-related quality of life in a German population-representative sample [German: Normierung des SF-12 Version 2.0 zur Messung der gesundheitsbezogenen Lebensqualität in einer deutschen bevölkerungsrepräsentativen Stichprobe]. Diagnostica. 2018;64(4):215–26.

Mehnert A, Müller D, Lehmann C, Koch U. The German version of the NCCN Distress Thermometer: empiric evaluation of a screening tool assessing psycho-social burden in cancer patients [German: Die deutsche Version des NCCN Distress-Thermometers: Empirische Prüfung eines Screening-Instruments zur Erfassung psychosozialer Belastung bei Krebspatienten]. Z Psychiatr Psychol Psychother. 2006;54(3):213–23.

Li F, Hughes JP, Hemming K, Taljaard M, Melnick ER, Heagerty PJ. Mixed-effects models for the design and analysis of stepped wedge cluster randomized trials: an overview. Statistical Methods in Medical Research. 2021;30(2):612–39.

Hussey MA, Hughes JP. Design and analysis of stepped wedge cluster randomized trials. Contemp Clin Trials. 2007;28(2):182–91.

Meyer RD, Ratitch B, Wolbers M, Marchenko O, Quan H, Li D, et al. Statistical issues and recommendations for clinical trials conducted during the COVID-19 pandemic. Stat Biopharm Res. 2020;12(4):399–411.

Cro S, Morris TP, Kahan BC, Cornelius VR, Carpenter JR. A four-step strategy for handling missing outcome data in randomised trials affected by a pandemic. BMC Med Res Methodol. 2020;20(1):1–12.

Engel C, Meisner C, Wittorf A, Wölwer W, Wiedemann G, Ring C, et al. Longitudinal data analysis of symptom score trajectories using linear mixed models in a clinical trial. Int J Stat Med Res. 2013;2(4):305–15.

Chinn S. A simple method for converting an odds ratio to effect size for use in meta-analysis. Stat Med. 2000;19:3127–31.

Zürner P, Beckmann I-A. Guidebook: Patients and physicians as partners [German: Ratgeber: Patienten und Ärzte als Partner]. Bonn: Deutsche Krebshilfe; 2020. Available from: www.krebshilfe.de [cited 19 May 2021].

O’Connor AM, Stacey D, Jacobsen MJ. Ottawa Personal Decision Guide. Ottawa: Ottawa Hospital Research Institute; 2015. Available from: http://decisionaid.ohri.ca [cited 19 May 2021].

Institut für Qualität und Wirtschaftlichkeit im Gesundheitswesen (IQWiG). German translation of the Ottawa Personal Decision Guide [German: Entscheidungshilfe]. 2013. Available from: https://www.gesundheitsinformation.de/pdf/entscheidungshilfe/entscheidungshilfe_interaktiv.pdf?rev=122498 [cited 19 May 2021].

National Cancer Institute. Implementation science at a glance: a guide for cancer control practitioners (NIH publication number 19-CA-8055). Bethesda: U.S. Department of Health and Human Services, National Institutes of Health; 2019. Available from: https://cancercontrol.cancer.gov/is/tools/practice-tools [cited 08 December 2021].

Scholl I, Kobrin S, Elwyn G. “All about the money?” - a qualitative interview study examining organizational- and system-level characteristics that promote or hinder shared decision-making in cancer care in the United States. Implement Sci. 2020;15(1):1–9.

Bush PL, Pluye P, Loignon C, Granikov V, Wright MT, Pelletier JF, et al. Organizational participatory research: a systematic mixed studies review exposing its extra benefits and the key factors associated with them. Implement Sci. 2017;12(1):1–15.

National Institute for Health and Care Excellence (NICE). NICE guideline: shared decision making [NG197]. 2021. Available from: www.nice.org.uk/guidance/ng197 [cited 28 Jun 2021]

Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217–26.

Danner M, Geiger F, Wehkamp K, Rueffer JU, Kuch C, Sundmacher L, et al. Making shared decision-making (SDM) a reality: protocol of a large-scale long-term SDM implementation programme at a Northern German University Hospital. BMJ Open. 2020;10(10):e037575.

Sepucha KR, Simmons LH, Barry MJ, Edgman-Levitan S, Licurse AM, Chaguturu SK. Ten years, forty decision aids, and thousands of patient uses: shared decision making at Massachusetts General Hospital. Health Aff. 2016;35(4):630–6.

Kent DM, Paulus JK, Van Klaveren D, D’Agostino R, Goodman S, Hayward R, et al. The Predictive Approaches to Treatment effect Heterogeneity (PATH) statement. Ann Intern Med. 2020;172(1):35–45.

Geiger F, Novelli A, Berg D, Hacke C, Sundmacher L, Kopeleva O, et al. The hospital-wide implementation of shared decision-making: initial findings of the Kiel SHARE TO CARE program. Dtsch Aerzteblatt Online. 2021;118:225–6.

Lerman CE, Brody DS, Caputo GC, Smith DG, Lazaro CG, Wolfson HG. Patients’ perceived involvement in care scale: relationship to attitudes about illness and medical care. J Gen Intern Med. 1990;5(1):29–33.

Weisel KC, Morgner-Miehlke A, Petersen C, Fiedler W, Block A, Schafhausen P, et al. Implications of SARS-CoV-2 infection and COVID-19 crisis on clinical cancer care: report of the University Cancer Center Hamburg. Oncol Res Treat. 2020;43:307–13.

Abrams EM, Shaker M, Oppenheimer J, Davis RS, Bukstein DA, Greenhawt M. The challenges and opportunities for shared decision making highlighted by COVID-19. J Allergy Clin Immunol Pract. 2020;8(8):2474–80.e1.

Acknowledgements

We thank all patients and HCPs who participated in this study. We are also thankful to all research associates, student research assistants and research interns who contributed to the study: Lisa Bußenius, Eva Christalle, Lara Dreyer, Marie-Kristin Eilert, Nina Elpers, Merisa Ferati, Rabea Friedrich, Teresa Greiter, Sophie Hachmeister, Hendrik Hagen, Stefanie Heger, Fatima Hussein, Anastasia Izotova, Joy Kukemüller, Finja Mäueler, Sophia Neumann, Nicolai Pergande, Martin Reemts, Greta Rose, Sophia Schulte, Cheyenne Topf, Marie-Luise Ude, Ayse Yilmaz, Stefan Zeh. Furthermore, we thank all non-author collaboration partners at the University Cancer Center Hamburg and the University Medical Center Hamburg, especially Marcus Freytag, Volkmar Müller, and Mia Carlotta Peters. Last but not least, we are thankful to the members of the advisory board, Glyn Elwyn, Dominick Frosch, Mirjam Körner, Heather Shepherd, Monica Taljaard, and Michel Wensing.

Funding

The study was funded by the German Research Foundation (Deutsche Forschungsgemeinschaft, grant number 232160533). Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

IS was the principal investigator of the study. IS, PH, MH, and LK made substantial contributions to the conceptualization and design of the study, writing the grant proposal, and the preparation of the study. CB and IW were involved in the conceptualization and design of the study and preparation of the study. AL, AC, and RS were involved in the preparation of the study. IS, PH, AL, WF, JZ, and HC were involved in the data collection. IS, PH, AL, and MH were involved in delivering the implementation program. CB, AC, BS, RS, TV, and IW supported coordination of the study in the participating departments. LK conducted outcome evaluation analyses. PH, IS, and AL contributed to those analyses. IS, PH, AL, WF, and HC performed process evaluation analyses. All authors were involved in the interpretation of the data. IS, PH, and LK wrote the first manuscript draft. All authors were engaged in critically revising the manuscript for important intellectual content. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The study was approved by the Ethics Committee of the Medical Association Hamburg (Germany, study ID PV5368). It was conducted in accordance with the latest version of the Helsinki Declaration of the World Medical Association. We respected principles of good scientific practice and met requirements of data protection. We obtained written informed consent from all patients participating in the study. We obtained a waiver of consent for HCPs from the Ethics Committee. Study participation was voluntary.

Consent for publication

Not applicable.

Competing interests

AL, WF, JZ, HC, CB, AC, BS, RS, TV, IW, and LK declare that they have no competing interests. IS, PH, and MH declare that they currently are (PH, MH) or have been (IS) members of the executive board of the International Shared Decision Making Society, which has the mission to foster SDM implementation. PH, MH, and IS have no further competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1. Completed Standards for Reporting Implementation Studies (StaRI) checklist.

Additional file 2. Completed checklist of information to include when reporting a stepped wedge cluster randomised trial (SW-CRT).

Additional file 3. Methodological changes from the study protocol.

Additional file 4. Sensitivity analyses.

Additional file 5. Reach and further implementation indicators.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Scholl, I., Hahlweg, P., Lindig, A. et al. Evaluation of a program for routine implementation of shared decision-making in cancer care: results of a stepped wedge cluster randomized trial. Implementation Sci 16, 106 (2021). https://doi.org/10.1186/s13012-021-01174-4

DOI: https://doi.org/10.1186/s13012-021-01174-4