Abstract

Background

Research use in policymaking is multi-faceted and has been the focus of extensive study. However, virtually no quantitative studies have examined whether the determinants of research use vary according to the type of research use or phase of policy process. Understanding such variation is important for selecting the targets of implementation strategies that aim to increase the frequency of research use in policymaking.

Methods

A web-based survey of US state agency officials involved with children’s mental health policymaking was conducted between December 2019 and February 2020 (n = 224, response rate = 33.7%, 49 states responding (98%), median respondents per state = 4). The dependent variables were composite scores of the frequency of using children’s mental health research in general, specific types of research use (i.e., conceptual, instrumental, tactical, imposed), and during different phases of the policy process (i.e., agenda setting, policy development, policy implementation). The independent variables were four composite scores of determinants of research use: agency leadership for research use, agency barriers to research use, research use skills, and dissemination barriers (e.g., lack of actionable messages/recommendations in research summaries, lack of interaction/collaboration with researchers). Separate multiple linear regression models estimated associations between determinant and frequency of research use scores.

Results

Determinants of research use varied significantly by type of research use and phase of policy process. For example, agency leadership for research use was the only determinant significantly associated with imposed research use (β = 0.31, p < 0.001). Skills for research use were the only determinant associated with tactical research use (β = 0.17, p = 0.03) and were only associated with research use in the agenda-setting phase (β = 0.16, p = 0.04). Dissemination barriers were the most universal determinants of research use, as they were significantly and inversely associated with frequency of conceptual (β = −0.21, p = 0.01) and instrumental (β = −0.22, p = 0.01) research use and during all three phases of policy process.

Conclusions

Decisions about the determinants to target with policy-focused implementation strategies—and the strategies that are selected to affect these targets—should reflect the specific types of research use that these strategies aim to influence.


Background

“Evidence-based practice” is central to the enterprise of implementation science, which has roots in clinical health care. However, the concept of evidence-based practice is not directly transferable from clinical to public policy contexts [1]. At best, public policy can be evidence-informed, and the use of research evidence in policymaking has emerged as an accepted indicator of evidence-informed policymaking [2], with the (albeit untested) assumption [3] that more evidence use results in policy decisions that are more informed by, and aligned with, evidence. Research use in policymaking has been studied for at least half a century and has long been recognized as a multi-faceted phenomenon. As described by Carol Weiss in the 1970s [4,5,6], there are many different types of research use in policymaking, four of which have been the focus of extensive study.

First, conceptual (i.e., “enlightenment”) research use relates to that which broadly shapes how a policymaker thinks about an issue. Instrumental (i.e., “problem-solving”) research use relates to that in which research directly informs a decision or solves a problem. Tactical (i.e., “political” or “symbolic”) research use relates to that in which research is used to persuade someone to see a point of view or support a policy position. Finally, imposed research use relates to that which is carried out to satisfy organizational requirements for research use, often with the goal of encouraging instrumental research use [7]. While these specific types of research use have been the focus of extensive qualitative study [8,9,10,11,12,13,14,15,16,17], and numerous reviews have documented the determinants of using research in policymaking in general [3, 18,19,20], surprisingly little quantitative research has assessed variation in the frequency of different types of research use or examined whether the determinants of research use vary according to the type of research use.

Prior studies

A landmark study by Amara and colleagues was the first to quantify different types of research use in a large sample of policymakers [21, 22]. The study surveyed 833 policymakers of Canadian provincial agencies in 1998 (response rate 35.0%) and characterized the frequency of conceptual, instrumental, and tactical uses of “university research” and identified determinants of research use for these purposes. It was found that all three types of research use occurred fairly frequently and that similar determinants (e.g., agency dedication of resources to support research use, interactions with researchers) were associated with conceptual, instrumental, and tactical use.

Only a few other quantitative studies have examined the prevalence and correlates of policymakers’ uses of research for different purposes. Zardo and Collie examined these issues in a 2012 survey of 372 administrative public health policymakers in Victoria, Australia (response rate = 31.7%) [23, 24]. One analysis of the survey data examined variations in the past-year prevalence of using “academic research” for different purposes and found that a significantly larger proportion of respondents used academic research for conceptual (50.3%) and instrumental (44.8%) than tactical (19.3%) purposes [24]. While this analysis did not assess the determinants of using research for these different purposes, a separate analysis of the survey data used logistic regression to identify factors associated with whether respondents used research evidence for any purpose in the past year [23]. This latter analysis found that factors such as the relevance of available research evidence, skills for research use, and organizational supports for research use were significantly associated with research use.

Most recently, Williamson and colleagues conducted and scored structured interviews with administrative health policymakers in Sydney, Australia, to quantify the extent of different types of research use in the development of 131 policy documents and to identify associations with determinants of research use [25]. Within this context of policy development, they found that tactical research use (mean = 5.60, 9-point scale) occurred most frequently and that imposed research use (mean = 3.97) occurred least frequently.

Knowledge gaps: the need for a more nuanced understanding of research use in policymaking

A more nuanced understanding is needed about the dynamics of research use for different purposes in policymaking. This knowledge gap needs to be addressed to develop empirically informed theories about the mechanisms through which implementation strategies could increase the use of research for different purposes in policymaking [26, 27]. In turn, this information is needed to provide an empirical basis to inform the selection of targets and implementation strategies to increase research use in policy processes [26, 28, 29]. There are four specific knowledge gaps that motivate the current study.

First, prior quantitative assessments of different types of research use in policymaking have been limited by simplistic measures, using single-item Likert scales [21, 22, 25] or dichotomous items [23, 24]. Relatedly, with the exception of the study by Amara and colleagues (conducted more than 20 years ago) [21, 22], the determinants of different types of research use have been measured using single-item dichotomous variables. Second, no studies have examined how the frequency of research use (in general or of specific types) varies across phases of the policy process. Although the policy process is complex and generally non-linear, it has discrete phases, such as deciding which issues to address (i.e., the agenda-setting phase), determining how issues will be addressed and budgeted for (i.e., the policy development phase), and determining how policies will be rolled out and enforced (i.e., the implementation phase) [30].

Third, it is unclear how findings from prior quantitative studies about research use in policymaking—conducted in Canada and Australia—apply to the US context. While surveys have quantified barriers to research use and evidence dissemination preferences among US state agency policymakers [31,32,33,34,35], this work has not examined the frequency of research use. This knowledge gap reflects the fact that policy-focused dissemination and implementation (D&I) research is understudied in the USA. A review of D&I research funded by the National Institutes of Health between 2007 and 2014 found that < 10% of projects explicitly examined policy issues, with most considering policy as a peripheral factor—not the focus of inquiry [36]. An updated review using an identical search strategy identified only 10 additional projects funded between 2015 and 2018 [37]. A review of 22 studies published between 1999 and 2016 that evaluated interventions to increase the capacity for research use in policymaking identified only one study conducted in the USA [26].

Fourth, little prior work about research use in policymaking has focused on mental health, let alone children’s mental health [38]. The topic of children’s mental health in the USA deserves particular attention because rates of depression, anxiety, and suicide among youth have been increasing and could be exacerbated by the stresses of the COVID-19 pandemic [39,40,41,42,43,44]. A review of articles related to evidence-informed mental health policymaking published between 1995 and 2013 identified few rigorous studies, with most studies focusing on the implementation of a specific evidence-based practice at the organizational level rather than on research use in policymaking more broadly [45]. Only two studies, both qualitative, have explored how research evidence is used in children’s mental health policymaking [13, 14]. A small survey (n = 43) of state mental health agency policymakers characterized preferences for receiving, and barriers to using, mental health research but did not assess research use behaviors [32, 33]. Bruns and colleagues used agency and state data (e.g., state per capita income, controlling political party) to identify factors associated with state mental health agencies providing evidence-based treatments for children and adults between 2002 and 2012 [46, 47]. While this work sheds important light on the role of outer-setting context (i.e., the economic, political, and social context that surrounds state agencies) [48] in mental health policymaking, it does not elucidate research use behaviors, or the determinants of these behaviors, among the individual policymakers who make policy decisions.

Current study

The current study addresses these knowledge gaps through a quantitative survey of 224 US state agency officials who are involved with policy decision-making processes related to children’s mental health. The study uses continuous scales to assess and compare different types of children’s mental health research use across phases of the policy process. The study is informed by the SPIRIT Action Framework [49]. The framework is the product of a literature review of research use in policymaking [50] and interviews with policymakers [51], and it was created and applied in Australian health agencies with the explicit purpose “to guide the development and testing of strategies to increase the use of research in policy” (p. 153) [49].

The aims of the study are to:

1) Characterize the determinants and frequency of children’s mental health research use in policymaking
2) Assess whether the frequency of children’s mental health research use in policymaking varies according to the type of research use and phase of policy process
3) Identify determinants that are independently associated with the overall frequency of children’s mental health research use in policymaking and assess whether these determinants vary according to the type and phase of research use.

Methods

Data

Between December 2019 and February 2020, web-based surveys were conducted of senior-level state mental health agency officials and administrators of grants from the Substance Abuse and Mental Health Services Administration (SAMHSA). State mental health agencies are the government entities responsible for mental health within every state. Officials in these agencies perform functions such as developing and implementing policies and programs and providing and contracting for clinical mental health services [52]. These agencies fund approximately 8,500 providers in the USA annually, which serve a population of 7.3 million [53]. SAMHSA grant administrators, who are typically (but not always) based in state mental health agencies, implement and monitor federal block grants that are a major source of funding for mental health services. For example, the Community Mental Health Services Block Grant program allocated $722 million in fiscal year 2020, including $125 million specifically for child mental health services [54].

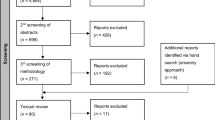

The survey was created and distributed using Qualtrics, a web-based survey tool. The survey sample frame was created using contact databases maintained by the National Association of State Mental Health Program Directors (NASMHPD) [55] and SAMHSA [56]. Each person in the sample frame was e-mailed a survey link eight times, and telephone follow-up was conducted to ensure that e-mails were received. Respondents were offered a $20 gift card. The survey was sent to 253 state mental health agency (SMHA) officials with valid e-mail addresses and completed by 129 (response rate = 51.0%), including 63.6% of all state children’s division directors and 57.1% of all state child mental health planners. The survey was also sent to 412 SAMHSA grant administrators with valid e-mail addresses and completed by 95 (response rate = 23.1%). At least one survey was completed in 49 states (respondents per state range = 1 to 10; median = 4; 98% of states responding), and the total aggregate sample size was 224 (aggregate response rate = 33.7%).
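As a quick arithmetic check, the response rates above can be reproduced from the reported counts. This is a minimal Python sketch; the helper name `response_rate` is hypothetical, and the aggregate denominator is assumed to be the sum of the two sample frames (253 + 412 = 665), which reproduces the reported 33.7%.

```python
def response_rate(completed: int, sent: int) -> float:
    """Return completed / sent as a percentage, rounded to one decimal place."""
    return round(100 * completed / sent, 1)


# Counts taken from the text: (completed surveys, surveys sent to valid e-mail addresses)
smha = (129, 253)      # state mental health agency officials
samhsa = (95, 412)     # SAMHSA grant administrators
# Assumption: the aggregate denominator is the sum of both frames
aggregate = (smha[0] + samhsa[0], smha[1] + samhsa[1])

for label, (done, sent) in [("SMHA", smha), ("SAMHSA", samhsa), ("Aggregate", aggregate)]:
    print(f"{label}: {done}/{sent} = {response_rate(done, sent)}%")
# SMHA: 129/253 = 51.0%
# SAMHSA: 95/412 = 23.1%
# Aggregate: 224/665 = 33.7%
```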

All survey items explicitly pertained to decision-making and use of research evidence related to children’s mental health, not mental health broadly. Because it was likely that some respondents would not have knowledge about or experience with decision-making related to children’s mental health, a “not applicable to me in my work” response option was provided for each question. The order of the survey items in each domain of questions was randomized to reduce the risk of order-effect bias [45]. Using a definition aligned with that of the US Commission on Evidence-Based Policymaking [57], “research” was defined in the survey as “information produced by using reliable data and systematic methods—such as findings reported in peer-reviewed publications or from analyses of local, state, or national data.” This definition appeared above each question that pertained to research use. The survey instrument was piloted with seven former state mental health officials and experts in children’s mental health policy, and two telephone-based focus group sessions were conducted to obtain feedback on the instrument and ensure its clarity and comprehensiveness.

Dependent variables

The primary dependent variable was the overall frequency of children’s mental health research use, operationalized as a score (range = 12, 60) that was the sum of responses to 12 items that assessed the frequency of four types of research use (i.e., conceptual, instrumental, tactical, imposed) across three phases of the policy process (i.e., agenda setting, policy development, policy implementation). The score had strong internal consistency (Cronbach’s alpha = 0.90). The score was created using items adapted from the Seeking, Engaging with and Evaluating Research (SEER) instrument [58], which was developed to operationalize aspects of the SPIRIT Action Framework. The original SEER instrument assesses these four types of research use but was modified to assess them across separate phases of the policy process and on 5-point Likert scales instead of yes/no items. For each phase of the policymaking process, respondents were separately asked to indicate the frequency (1 = “very rarely,” 5 = “very frequently”) with which they engaged in each of the four types of children's mental health research use. When answering these questions, respondents were instructed to respond in reference to their “general experience over the past three years.” An overall frequency of children’s mental health research use score was not calculated for a respondent if they selected “not applicable to me in my work” in response to any of the items used to calculate the score.
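The scoring rule above (sum of 1 to 5 responses, with no score if any item is “not applicable”) and the internal-consistency statistic can be sketched as follows. This is an illustrative Python sketch, not the authors’ code: encoding “not applicable” as `None` is an assumption, and `cronbach_alpha` implements the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the total score).

```python
from typing import Optional, Sequence

import numpy as np


def composite_score(responses: Sequence[Optional[int]]) -> Optional[int]:
    """Sum 1-5 Likert responses; None marks "not applicable" (an assumed encoding).

    Mirrors the rule in the text: if any item is "not applicable", no score is computed.
    """
    if any(r is None for r in responses):
        return None
    return sum(responses)


def cronbach_alpha(items) -> float:
    """Cronbach's alpha for an (n_respondents, k_items) array of complete responses."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    sum_item_var = items.var(axis=0, ddof=1).sum()  # sum of per-item sample variances
    total_var = items.sum(axis=1).var(ddof=1)       # sample variance of the summed score
    return k / (k - 1) * (1 - sum_item_var / total_var)


print(composite_score([5] * 12))            # 60, the maximum of the 12-60 range
print(composite_score([3] * 11 + [None]))   # None: one "not applicable" answer
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```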

Consistent with feedback obtained when piloting the survey, we did not explicitly name the types of research use or phases of policy process. Rather, we defined each in lay terms that were consistent with definitions used in the SEER instrument [58]. The exact wording used for each type of research use and phase of policy process is included in Supplemental Table 1.

The secondary dependent variables were the frequency of each type of children’s mental health research use (the sum of the scores for that type across all three phases of the policy process) and the frequency of children’s mental health research use during each phase of the policy process (the sum of the scores for all four types of research use within that phase). The scores that quantified each type of research use had a range of 3 to 15 and high internal consistency (Cronbach’s alpha ranging from 0.79 to 0.95), and the scores that quantified research use during each phase of the policy process had a range of 4 to 20 and also had high internal consistency (Cronbach’s alpha ranging from 0.72 to 0.82). These composite scores were not calculated for a respondent if they selected “not applicable to me in my work” in response to any of the items used to calculate the scores.
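Because the 12 items form a grid of four types by three phases, each type score sums three items (range 3 to 15) and each phase score sums four items (range 4 to 20). A minimal sketch, assuming one respondent’s complete answers are arranged as a hypothetical 4 × 3 array with types as rows and phases as columns:

```python
import numpy as np

TYPES = ("conceptual", "instrumental", "tactical", "imposed")
PHASES = ("agenda_setting", "policy_development", "policy_implementation")


def secondary_scores(grid):
    """Return (per-type, per-phase) sums for one respondent's 4 x 3 grid of 1-5 responses.

    grid[i][j] is the frequency of research-use type TYPES[i] during phase PHASES[j].
    """
    grid = np.asarray(grid)
    if grid.shape != (len(TYPES), len(PHASES)):
        raise ValueError("expected a 4 x 3 grid of responses")
    by_type = {t: int(grid[i, :].sum()) for i, t in enumerate(TYPES)}    # 3 items: 3-15
    by_phase = {p: int(grid[:, j].sum()) for j, p in enumerate(PHASES)}  # 4 items: 4-20
    return by_type, by_phase


lowest, _ = secondary_scores(np.ones((4, 3)))
_, highest = secondary_scores(np.full((4, 3), 5))
print(lowest["conceptual"], highest["agenda_setting"])  # 3 20
```

Summing either set of secondary scores recovers the overall 12-item score, since both partition the same grid.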

Independent variables

The independent variables were composite scores assessing four domains of determinants of using children’s mental health research in policymaking. The selection of these variables was informed by the SPIRIT Action Framework [49], reviews of barriers to evidence-informed policymaking [3, 18,19,20], and prior research related to evidence use in mental health policymaking in the USA [13, 32, 36, 59, 60]. The exact wording of all questions is in Supplemental Table 1.

Skills for research use were assessed by a score that was the sum of responses to three five-point Likert-scale items adapted from the SEER instrument [58] (Cronbach’s alpha = 0.86). Agency leadership for research use was assessed by a score that was the sum of responses to two five-point Likert-scale items also adapted from the SEER instrument (Cronbach’s alpha = 0.75; inter-item r = 0.61). Agency barriers to research use were assessed by a score that was the sum of responses to three five-point Likert-scale items adapted from prior assessments of barriers to using research evidence in US state policy contexts (Cronbach’s alpha = 0.53) [33]. Research dissemination barriers were assessed by a score that was the sum of responses to four five-point Likert-scale items that have also been used in prior assessments of barriers to research use in US state policy contexts (Cronbach’s alpha = 0.71) [33].
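For a two-item scale, standardized Cronbach’s alpha reduces to the Spearman–Brown formula α = 2r/(1 + r), which is one way to relate the reported alpha and r for the agency leadership score. With r = 0.61 it gives about 0.76, close to the reported 0.75; a small gap is expected if the reported value is the raw (unstandardized) alpha, which also depends on the item variances. The function name below is hypothetical.

```python
def standardized_alpha(mean_r: float, k: int) -> float:
    """Standardized Cronbach's alpha for k items with average inter-item correlation mean_r."""
    return k * mean_r / (1 + (k - 1) * mean_r)


# Two-item agency leadership scale, r = 0.61 (from the text); k = 2 gives 2r / (1 + r)
print(round(standardized_alpha(0.61, k=2), 3))  # 0.758
```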

Covariates

Ordinal variables characterized respondents’ highest level of education and the number of years they had worked at their agency. The US state of respondents’ agency was also included as a covariate.

Analysis

Means were calculated for all composite scores as well as individual items. To aid interpretation, descriptive statistics were also generated with each individual item treated as a dichotomous variable in which responses of 1-3 were coded as “no” and 4-5 were coded as “yes.” Paired sample t tests compared the mean composite frequency of research use scores for each type of research use and research use during each phase of the policy process. Pearson product-moment bivariate correlations and partial correlations assessed relationships between the composite scores for each domain of determinant of research use. These correlations were depicted in accordance with recommendations for Gaussian graphical models [61].
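The dichotomization and the partial correlations described above can be sketched with NumPy. This is an assumed implementation, not necessarily the authors’ software: partial correlations are computed from the inverse covariance (precision) matrix, as is standard for Gaussian graphical models, so each pair is adjusted for all remaining columns. The data in the usage lines are synthetic.

```python
import numpy as np


def dichotomize(responses):
    """Code Likert responses 1-3 as 0 ("no") and 4-5 as 1 ("yes")."""
    return (np.asarray(responses) >= 4).astype(int)


def partial_correlations(scores):
    """Partial correlation matrix for an (n, p) array; each pair is adjusted for all other columns."""
    precision = np.linalg.inv(np.cov(scores, rowvar=False))
    scale = np.sqrt(np.diag(precision))
    pcor = -precision / np.outer(scale, scale)  # rho_ij.rest = -P_ij / sqrt(P_ii * P_jj)
    np.fill_diagonal(pcor, 1.0)
    return pcor


# Synthetic example: x1 and x2 are correlated only through a shared factor z.
rng = np.random.default_rng(1)
z = rng.normal(size=5000)
x1 = z + rng.normal(size=5000)
x2 = z + rng.normal(size=5000)
data = np.column_stack([x1, x2, z])

print(dichotomize([1, 2, 3, 4, 5]))                # [0 0 0 1 1]
print(round(np.corrcoef(x1, x2)[0, 1], 2))         # bivariate: about 0.5
print(round(partial_correlations(data)[0, 1], 2))  # adjusted for z: about 0
```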

Multiple linear regression was used to estimate associations between the determinants and frequency of children’s mental health research use. Assessment of multicollinearity revealed that the variance inflation factor was between 1.0 and 2.0 for all independent variables in all models, indicating no problematic multicollinearity [62]. Assessment of the normality of the data revealed that the agency leadership for research use composite score (skewness = −0.47) and the agency barriers to research use composite score (skewness = −0.89) were negatively skewed, exceeding a skewness threshold of 0.20 in absolute value. Thus, these scores were log transformed when entered into the models. To keep the interpretation of regression coefficients consistent, the skills for research use and dissemination barriers composite scores were also log transformed when entered into the models. The frequency of research use composite scores also exceeded this skewness threshold and were thus log transformed as well. All models adjusted for respondent highest level of education, years working at their agency, and state.
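The log-log specification and the variance inflation factor check can be illustrated with plain NumPy least squares. This sketch uses synthetic data and omits the covariates (education, tenure, state); the helper names `ols` and `vif` are hypothetical. When both the outcome and a predictor are logged, the slope is an elasticity, which is why coefficients from such models are read as percentage changes.

```python
import numpy as np


def ols(y, X):
    """Ordinary least squares coefficients; an intercept is prepended as coefficient 0."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def vif(X):
    """Variance inflation factor per column: 1 / (1 - R^2) from regressing it on the others."""
    out = []
    for j in range(X.shape[1]):
        target, others = X[:, j], np.delete(X, j, axis=1)
        fitted = np.column_stack([np.ones(len(target)), others]) @ ols(target, others)
        r2 = 1 - np.sum((target - fitted) ** 2) / np.sum((target - target.mean()) ** 2)
        out.append(1 / (1 - r2))
    return np.array(out)


# Synthetic illustration (not study data): y = 2 * x1^0.25 * x2^-0.21 * small noise
rng = np.random.default_rng(0)
x1 = rng.uniform(2, 10, 500)   # stands in for a skewed determinant score
x2 = rng.uniform(4, 20, 500)
y = 2 * x1 ** 0.25 * x2 ** -0.21 * np.exp(rng.normal(0, 0.01, 500))

X_log = np.log(np.column_stack([x1, x2]))
coef = ols(np.log(y), X_log)
print(np.round(coef[1:], 2))     # slopes are elasticities
print(np.round(vif(X_log), 2))   # near 1 for independent predictors
```

With the small noise used here, the estimated slopes should land close to the generating exponents (0.25 and −0.21), and the VIFs should be near 1 because the two synthetic predictors are independent.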

First, the overall frequency of children’s mental health research use score served as the dependent variable in a set of models that sequentially added determinant of research use composite scores as independent variables. Then, each of the four frequency of different types of research use composite scores served as the dependent variable in a separate model that adjusted for all determinants of research use composite scores and covariates. The same analysis procedure was conducted with each of the three phases of policy process composite research use scores as the dependent variable in a separate model. We compared the size and statistical significance of beta coefficients for each determinant of research use across these models to assess variation in the determinants of different types of research use and research use during different phases of the policy process.

Because many US states and the federal government have implemented requirements for imposed research use in policymaking [57, 63], we conducted two exploratory analyses related to the frequency of imposed research use. First, we classified survey respondents according to whether their state had a law requiring the use of research evidence in mental health policymaking, as identified in a 2017 Pew-MacArthur Foundation report [63]. We then used independent-samples t tests to compare the mean composite frequency of imposed research use score between respondents who did and did not have this law in their state. Second, because imposed research use can be perceived as an antecedent to instrumental research use [7], we calculated Pearson product-moment correlations between the frequency of imposed and instrumental research use.
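The text does not state whether the independent-samples t tests pooled variances; the sketch below assumes Welch’s unequal-variance version and uses only the Python standard library. The p-value helper is a normal approximation that is reasonable only for large degrees of freedom; an exact p-value is available from `scipy.stats.ttest_ind(a, b, equal_var=False)`. Function names are hypothetical and the example data are made up.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for independent samples."""
    se2_a = variance(a) / len(a)  # squared standard error of mean(a), sample variance (n - 1)
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1))
    return t, df


def approx_two_sided_p(t):
    """Normal approximation to the two-sided p-value; adequate only when df is large."""
    return math.erfc(abs(t) / math.sqrt(2))


t, df = welch_t([1, 2, 3, 4], [2, 3, 4, 5])
print(round(t, 3), round(df, 1))  # -1.095 6.0
```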

Results

Respondent characteristics and determinants of children’s mental health research use in policymaking

Table 1 shows the characteristics of respondents and the prevalence of possible determinants of using children’s mental health research in policymaking. The mean skills for research use composite score was 11.23 (SD = 2.91; 15-point scale). Seventy percent of respondents indicated that they had confidence in their ability to find children’s mental health research, while 59.6% had confidence in their ability to evaluate the quality of this research. The mean agency leadership for research use composite score was 7.24 (SD = 1.89; 10-point scale). Three-quarters (76.5%) of respondents reported that leadership in their agency believed it was important to use children’s mental health research, but only 42.1% believed that their agency dedicated resources to promote the use of this research. The mean agency barriers to research use composite score was 9.66 (SD = 2.59; 15-point scale), with 58.3% of respondents indicating that limited agency resources (e.g., budget deficits) were a barrier. The mean dissemination barriers to research use composite score was 11.86 (SD = 3.28; 20-point scale), with lack of actionable messages/recommendations in summaries of research (43.1%) and lack of interaction or collaboration with researchers (37.2%) most frequently identified as barriers in this domain.

Figure 1 depicts correlations between the composite scores for all determinants of children’s mental health research use. There were weak (r ≤ 0.3) but significant (p ≤ 0.006) bivariate correlations between each determinant score, with the exception of a moderately strong correlation between dissemination barriers and agency barriers (r = 0.63, p < 0.001). In partial correlations that adjusted for the other domains of determinants, there were significant positive correlations between research dissemination barriers and agency barriers for research use (r = 0.43, p < 0.0001) and agency leadership for research use and skills for research use (r = 0.32, p < 0.0001).

Fig. 1 Correlations between determinants of using children’s mental health research in policy decision-making, State Agency Officials, Winter 2019–2020, N = 224. Note. Dark blue lines indicate positive partial correlations with p ≤ 0.01; light red lines indicate negative partial correlations with p > 0.01 but negative bivariate (non-partial) correlations with p ≤ 0.01. Correlation coefficients and p values are displayed only for partial correlations, which adjusted for the two domains of determinants of research use that were not being correlated.

Frequency of using children’s mental health research in policymaking

Table 2 presents summary statistics about the frequency of using children’s mental health research in policymaking. The overall frequency of children’s mental health research use was 44.26 (SD = 8.23; 60-point scale). There were no significant differences in the composite frequency scores between conceptual (mean = 12.11; SD = 2.32), instrumental (mean = 12.01; SD = 2.26), and tactical (mean = 12.07; SD = 2.40) research use (p ≥ 0.52 for all comparisons). However, the imposed research use composite score (mean = 8.02; SD = 3.57) was significantly lower (p < 0.0001 for all comparisons). There were no significant differences in the composite frequency of research use scores across phases of agenda setting (mean = 14.84; SD = 2.86), policy development (mean = 14.70; SD = 3.17), or policy implementation (mean = 14.49; SD = 3.34) (p ≥ 0.08 for all comparisons).

When children’s mental health research use variables were dichotomized and analyzed as individual items, the majority of respondents frequently (i.e., 4 or 5 on the 5-point scale) engaged in conceptual, instrumental, and tactical research use, with proportions ranging from 76.7% for tactical use in the agenda-setting phase to 69.9% for tactical use in the implementation phase. The proportion of respondents who frequently engaged in imposed research use ranged from 24.7% in the agenda-setting phase to 26.2% in the policy implementation phase. To illustrate that the use of research did not vary across phases of the policy process, Fig. 2 depicts the proportion of respondents who frequently engaged in each type of research use during each phase of the policy process. Supplemental Figure 1 contains this figure with means displayed instead of percentages and shows a nearly identical pattern.

Associations between determinants of research use and frequency of research use: variation by type of research use and phase of policy process

Table 3 shows adjusted associations between each domain of determinant of children’s mental health research use and overall frequency of research use score. In the fully adjusted model, agency leadership for children’s mental health research use (β = 0.25, p < 0.01) and dissemination barriers (β = −0.21, p < 0.01) were significantly associated with the frequency of research use. The interpretation of these coefficients is that, after adjustment, a 1% increase in agency leadership is associated with a 0.25% increase in the frequency of research use, and a 1% reduction in dissemination barriers is associated with a 0.21% increase in research use.
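Because the coefficients come from a log-log model, the reading “a 1% change gives a β% change” is a small-change approximation; the exact implied change in research use for a p% change in a determinant is ((1 + p/100)^β − 1) × 100%. A minimal sketch using the coefficients reported above (the function name is hypothetical):

```python
def implied_pct_change(beta: float, pct_change_x: float) -> float:
    """% change in Y implied by a % change in X when log(Y) = a + beta * log(X) + ..."""
    return ((1 + pct_change_x / 100) ** beta - 1) * 100


print(round(implied_pct_change(0.25, 1), 3))     # 0.249: essentially beta for a 1% change
print(round(implied_pct_change(0.25, 10), 2))    # 2.41: 10% more agency leadership
print(round(implied_pct_change(-0.21, -10), 2))  # 2.24: 10% fewer dissemination barriers
```

For a 1% change the exact value is essentially β, matching the interpretation in the text; for larger changes the linear reading drifts slightly.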

Table 4 shows the results of fully adjusted models in which the composite frequency of research use score for each of the four types of research use served as the dependent variable in a separate model. A different combination of determinants of research use and covariates was significantly associated with the frequency of research use for each purpose. For conceptual use of children’s mental health research, the number of years that a respondent had been working at their agency (β = 0.16, p = 0.03), skills for research use (β = 0.18, p = 0.02), and dissemination barriers (β = −0.22, p = 0.01) were significantly associated with the frequency of use. For instrumental research use, only dissemination barriers (β = −0.22, p = 0.01) were significantly associated with the frequency of use. For tactical research use, the only determinant significantly associated with the frequency of use was skills for research use (β = 0.17, p = 0.03). Finally, for imposed research use, the only determinant significantly associated with the frequency of use was agency leadership for research use (β = 0.31, p < 0.001). The interpretation is that, after adjustment, a 1% increase in agency leadership for research use is associated with a 0.31% increase in the frequency of imposed research use. The magnitude of the association between agency leadership for research use and imposed research use was twice as large as the association between this determinant and the frequency of any other type of research use.

There was also variation in the determinants that were associated with the frequency of research use within each phase of the policy process (Table 5). During the agenda-setting phase, state (β = 0.17, p = 0.02), skills for research use (β = 0.16, p = 0.04), and dissemination barriers (β = −0.20, p = 0.02) were significantly associated with frequency of research use. In both the policy development phase and the implementation phase, agency leadership for research use (β = 0.27 and β = −0.21, respectively, both p < 0.01) and dissemination barriers (β = 0.18 and β = −0.24, respectively, both p ≤ 0.05) were significantly associated with the frequency of research use.

Exploratory analysis related to the frequency of imposed research use

Forty-three percent of survey respondents worked in a state that had a law requiring the use of research evidence in mental health policymaking. There was no significant difference in the frequency of imposed research use between respondents who did and did not have this law in their state (7.88 vs. 8.13, p = 0.47). There was a significant correlation (r = 0.42, p < 0.0001) between the frequency of imposed and instrumental research use. However, the strength of this correlation was not substantially different from that of the correlations between imposed and conceptual (r = 0.35, p < 0.0001) and imposed and tactical (r = 0.38, p < 0.0001) research use.

Discussion

We aimed to characterize the determinants and frequency of children’s mental health research use in US state policymaking and understand how these constructs vary by type of research use and phase of policy process. We find that the most common barriers to using children’s mental health services research relate to limited agency resources, agencies not dedicating resources to promote research use, and insufficient time to use research. We also find that research evidence is regularly used for a diversity of purposes throughout the policy process, with the vast majority of respondents frequently using research conceptually, instrumentally, and tactically across all three phases of the policy process. With the exception of imposed research use, which was found to occur least frequently, we observe that the frequency of research use does not vary according to the type of research use or phase of policy process. However, we find that the determinants of research use do vary by type of research use and phase of policy process. Of note, a different combination of determinants of research use and covariates was significantly associated with the frequency of each type of research use. This finding raises questions about why there is variation in the determinants of different types of research use and how this information can be used to inform the design of policy-focused implementation strategies.

Many of the variations observed in the determinants of research use are intuitive. For example, skills for research use, but no other domain of determinants, were significantly associated with the frequency of tactical research use. It is logical that the policymakers who are most adept at finding, interpreting, and evaluating the quality of research evidence are best able to use research findings strategically for persuasive purposes [8]. It also makes sense that agency leadership for research use was the only domain of determinants associated with imposed research use, because agency leaders, and the officials to whom they are accountable (e.g., governors, state legislators), have the authority to require their staff to use research evidence in policymaking. The magnitude of this association was also twice as large as the associations between agency leadership for research use and the frequency of conceptual, instrumental, and tactical research use.

Consistent with prior research [7, 25], we found that the mean frequency score for imposed research use was significantly lower than the scores for other types of research use. Our exploratory analysis found no significant association between state laws requiring the use of research evidence in mental health policymaking and the frequency of imposed research use. Relatedly, our exploratory correlational analyses do not provide strong support for the notion that imposed research use is an antecedent to instrumental research use. While future research is needed to examine these exploratory findings in greater depth, they suggest that research use in policymaking cannot simply be mandated by state laws or other imposed requirements.

The finding that the determinants of research use vary by type of research use suggests that decisions about which determinants to target with policy-focused implementation strategies should reflect the specific types of research use that these strategies intend to affect. This raises an important question for the field: which types of research use should be prioritized? Are all types of research use equally important, or are some more critical to evidence-informed policymaking than others? For example, should instrumental research use be prioritized because it is most proximal to a concrete policy decision? Should tactical research use be a lower priority because it can be used, although it is not always, to promote political gain and policies that are misaligned with evidence [8, 16, 64]? While the literature provides little guidance on these questions, our findings imply that this may be an important area for future debate and consensus building.

An alternative approach, which avoids the need to prioritize some types of research use over others, is to target determinants that increase the overall frequency of research use in policymaking. In this case, our findings suggest that implementation strategies that increase agency leadership for research use and reduce dissemination barriers would be most effective and efficient [65], given that these two domains of determinants were significantly associated with the overall frequency of children's mental health research use. To increase agency leadership for the use of children's mental health research, approaches could be adapted from those that have improved leadership for evidence-based practice at the organizational and clinical levels [66]. Examples include the Leadership and Organizational Change for Implementation (LOCI) model [67], the iLead model [68], and the Ottawa Model of Implementation Leadership (O-MILe) [69, 70]. Future research could explore how these models might be adapted to focus on state policymakers and research use in decision-making, rather than on organizational leaders and specific evidence-based practices (EBPs).

In terms of addressing dissemination barriers, guidance exists in recent reviews that have synthesized strategies to enhance the policy impact of health research [33, 71,72,73,74,75,76,77]. Many of these strategies relate either to the packaging of research evidence (e.g., enhancing the relevance and presentation of evidence summaries) or to fostering collaboration between researchers and policymakers. For example, one strategy to improve the packaging of evidence for policymakers is to use local, rather than national, data to characterize a problem and to highlight policies that address it. A 2017 survey found that the vast majority (93%) of state mental health agency officials identified "relevance to residents in my state" as a "very important" feature of mental health research [32]. Field experiments conducted in the USA and the UK have found that policymakers' engagement with research evidence can be increased by tailoring evidence summaries so that the geographic level of the data presented corresponds to the population they serve [78, 79].

In terms of fostering collaboration between researchers and policymakers, many models have demonstrated success. In the USA, the Family Impact Seminar model (state level) [80,81,82,83] and the Research-to-Policy Collaboration model (federal level) [84] serve as examples. The William T. Grant Foundation has also synthesized guidance about approaches in this domain [85]. Outside the USA, the SPIRIT model in Australia included a component intended to increase interactions between researchers and policymakers [86], and intermediary organizations in Canada are supported by government funds to help facilitate these connections [87]. While none of these models focuses on children's mental health in US state contexts, they offer guidance to inform the selection of implementation strategies that build on the findings of our study.

Limitations

The results of our study should be considered within the context of its scope and limitations. The study was broadly focused on the uses of research in state children’s mental health policymaking and not research evidence related to a specific mental health issue, intervention, or policy. Relatedly, the survey used a broad definition of research evidence and did not assess the uses of different types of evidence (e.g., testimony of families, agency reports). Prior qualitative research suggests that different types of evidence might be used in different ways [12, 13].

The aggregate response rate of 33.7% is considered good for a sample of policymakers [88] and is consistent with prior surveys of administrative policymakers about uses of research evidence [21,22,23,24]. Nevertheless, survey respondents may not be fully representative of all children's mental health policymakers in US states, although 98% of states had at least one respondent. Response rates were notably high for key stakeholder groups in our sample (e.g., 63.6% of all state children's division directors and 57.1% of state child mental health planners). Our analyses did not focus on differences in research use between types of decision-makers (e.g., agency directors vs. children's division directors, SAMHSA grant administrators vs. non-SAMHSA grant administrators); such comparisons are an area for future research.

Given that relatively few respondents came from each state (median = 4), the study may have been underpowered to fully capture state effects (although this was not an aim of the study). Future research could usefully link survey data with administrative data about state agency context, similar to the data used by Bruns and colleagues in their analysis of outer-context factors associated with state mental health agency provision of evidence-based treatments [46, 47].

Conclusion

The frequency of children’s mental health research use in state agency policymaking does not vary according to the phase of policy process or type of research use, with the exception of imposed research use which occurs least frequently. Importantly, however, there is significant variation in the determinants of different types of research use. This suggests that decisions about the determinants to target with policy-focused implementation strategies—and the strategies that are selected to affect these targets—should be aligned with the specific types of research use that these strategies aim to influence.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Cairney P, Oliver K. Evidence-based policymaking is not like evidence-based medicine, so how far should you go to bridge the divide between evidence and policy? Health Res Policy Syst. 2017;15(1):1–11.

Brownson RC, Colditz GA, Proctor EK. Dissemination and implementation research in health: translating science to practice. Oxford: Oxford University Press; 2017.

Oliver K, Lorenc T, Innvær S. New directions in evidence-based policy research: a critical analysis of the literature. Health Res Policy Syst. 2014;12(1):34.

Weiss CH. Research for policy’s sake: the enlightenment function of social research. Policy Anal. 1977;3(4):531–45.

Weiss CH. Using social research in public policy making. Lexington Books; 1977.

Weiss CH. The many meanings of research utilization. Public Adm Rev. 1979;39(5):426–31.

Weiss CH, Murphy-Graham E, Birkeland S. An alternate route to policy influence: how evaluations affect DARE. Am J Eval. 2005;26(1):12–30.

Purtle J, Peters R, Kolker J, Diez Roux AV. Uses of population health rankings in local policy contexts: a multisite case study. Med Care Res Rev. 2019;76(4):478–96.

Haynes AS, Gillespie JA, Derrick GE, et al. Galvanizers, guides, champions, and shields: the many ways that policymakers use public health researchers. Milbank Q. 2011;89(4):564–98.

Haynes AS, Derrick GE, Redman S, et al. Identifying trustworthy experts: how do policymakers find and assess public health researchers worth consulting or collaborating with? PLoS One. 2012;7(3):e32665.

Zardo P, Collie A, Livingstone C. External factors affecting decision-making and use of evidence in an Australian public health policy environment. Soc Sci Med. 2014;108:120–7.

Jack S, Dobbins M, Tonmyr L, Dudding P, Brooks S, Kennedy B. Research evidence utilization in policy development by child welfare administrators. Child Welfare. 2010;89(4):83–100.

Hyde JK, Mackie TI, Palinkas LA, Niemi E, Leslie LK. Evidence use in mental health policy making for children in foster care. Adm Policy Ment Health Ment Health Serv Res. 2016;43(1):52–66.

Waddell C, Lavis JN, Abelson J, et al. Research use in children’s mental health policy in Canada: maintaining vigilance amid ambiguity. Soc Sci Med. 2005;61(8):1649–57.

Jewell CJ, Bero LA. “Developing good taste in evidence”: facilitators of and hindrances to evidence-informed health policymaking in state government. Milbank Q. 2008;86(2):177–208.

Woodruff K, Roberts SC. “My good friends on the other side of the aisle aren’t bothered by those facts”: US State legislators’ use of evidence in making policy on abortion. Contraception. 2020;101(4):249–55.

Meisel ZF, Mitchell J, Polsky D, et al. Strengthening partnerships between substance use researchers and policy makers to take advantage of a window of opportunity. Subst Abuse Treat Prev Policy. 2019;14(1):12.

Oliver K, Innvar S, Lorenc T, Woodman J, Thomas J. A systematic review of barriers to and facilitators of the use of evidence by policymakers. BMC Health Serv Res. 2014;14(1):2.

Jakobsen MW, Karlsson LE, Skovgaard T, Aro AR. Organisational factors that facilitate research use in public health policy-making: a scoping review. Health Res Policy Syst. 2019;17(1):90.

Masood S, Kothari A, Regan S. The use of research in public health policy: a systematic review. Evid Policy. 2020;16(1):7–43.

Amara N, Ouimet M, Landry R. New evidence on instrumental, conceptual, and symbolic utilization of university research in government agencies. Sci Commun. 2004;26(1):75–106.

Landry R, Lamari M, Amara N. Extent and determinants of utilization of university research in public administration. Public Adm Rev. 2003;63(2):191–204.

Zardo P, Collie A. Predicting research use in a public health policy environment: results of a logistic regression analysis. Implement Sci. 2014;9(1):142.

Zardo P, Collie A. Type, frequency and purpose of information used to inform public health policy and program decision-making. BMC Public Health. 2015;15(1):381.

Williamson A, Makkar SR, Redman S. How was research engaged with and used in the development of 131 policy documents? Findings and measurement implications from a mixed methods study. Implement Sci. 2019;14(1):44.

Haynes A, Rowbotham SJ, Redman S, Brennan S, Williamson A, Moore G. What can we learn from interventions that aim to increase policy-makers’ capacity to use research? A realist scoping review. Health Res Policy Syst. 2018;16(1):31.

Lewis CC, Boyd MR, Walsh-Bailey C, et al. A systematic review of empirical studies examining mechanisms of implementation in health. Implement Sci. 2020;15:1–25.

Powell BJ, Beidas RS, Lewis CC, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2017;44(2):177–94.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8(1):1–11.

Sabatier PA, Weible CM. Theories of the policy process. Westview Press; 2014.

Bogenschneider K, Little OM, Johnson K. Policymakers’ use of social science research: looking within and across policy actors. J Marriage Fam. 2013;75(2):263–75.

Purtle J, Lê-Scherban F, Nelson KL, Shattuck PT, Proctor EK, Brownson RC. State mental health agency officials’ preferences for and sources of behavioral health research. Psychol Serv. 2019. https://doi.org/10.1037/ser0000364.

Purtle J, Nelson KL, Bruns EJ, Hoagwood KE. Dissemination strategies to accelerate the policy impact of children’s mental health services research. Psychiatr Serv. 2020. https://doi.org/10.1176/appi.ps.201900527.

Jacob RR, Allen PM, Ahrendt LJ, Brownson RC. Learning about and using research evidence among public health practitioners. Am J Prev Med. 2017;52(3):S304–8.

Hu H, Allen P, Yan Y, Reis RS, Jacob RR, Brownson RC. Organizational supports for research evidence use in state public health agencies: a latent class analysis. J Public Health Manag Pract. 2019;25(4):373.

Purtle J, Peters R, Brownson RC. A review of policy dissemination and implementation research funded by the National Institutes of Health, 2007–2014. Implement Sci. 2015;11(1):1.

Purtle J, Lê-Scherban F, Wang X, Brown E, Chilton M. State legislators’ opinions about adverse childhood experiences as risk factors for adult behavioral health conditions. Psychiatr Serv. 2019;70(10):894–900.

Hoagwood KE, Purtle J, Spandorfer J, Peth-Pierce R, Horwitz SM. Aligning dissemination and implementation science with health policies to improve children’s mental health. Am Psychol. 2020;75(8):1130.

Twenge JM, Cooper AB, Joiner TE, Duffy ME, Binau SG. Age, period, and cohort trends in mood disorder indicators and suicide-related outcomes in a nationally representative dataset, 2005–2017. J Abnorm Psychol. 2019;128(3):185–99.

Mojtabai R, Olfson M, Han B. National trends in the prevalence and treatment of depression in adolescents and young adults. Pediatrics. 2016;138(6):e20161878.

Centers for Disease Control and Prevention. Youth Risk Behavior Survey: data summary & trends report, 2007–2017. 2018.

Ruch DA, Sheftall AH, Schlagbaum P, Rausch J, Campo JV, Bridge JA. Trends in suicide among youth aged 10 to 19 years in the United States, 1975 to 2016. JAMA Netw Open. 2019;2(5):e193886.

Patrick SW, Henkhaus LE, Zickafoose JS, et al. Well-being of parents and children during the COVID-19 pandemic: a national survey. Pediatrics. 2020;146(4):e2020016824.

Leeb RT, Bitsko RH, Radhakrishnan L, Martinez P, Njai R, Holland KM. Mental health–related emergency department visits among children aged < 18 years during the COVID-19 pandemic—United States, January 1–October 17, 2020. Morb Mortal Wkly Rep. 2020;69(45):1675.

Williamson A, Makkar SR, McGrath C, Redman S. How can the use of evidence in mental health policy be increased? A systematic review. Psychiatr Serv. 2015;66(8):783–97.

Bruns EJ, Parker EM, Hensley S, et al. The role of the outer setting in implementation: associations between state demographic, fiscal, and policy factors and use of evidence-based treatments in mental healthcare. Implement Sci. 2019;14(1):96.

Bruns EJ, Kerns SE, Pullmann MD, Hensley SW, Lutterman T, Hoagwood KE. Research, data, and evidence-based treatment use in state behavioral health systems, 2001–2012. Psychiatr Serv. 2016;67(5):496–503.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):1–15.

Redman S, Turner T, Davies H, et al. The SPIRIT Action Framework: a structured approach to selecting and testing strategies to increase the use of research in policy. Soc Sci Med. 2015;136:147–55.

Moore G, Redman S, Haines M, Todd A. What works to increase the use of research in population health policy and programmes: a review. Evid Policy. 2011;7(3):277–305.

Huckel Schneider C, Campbell D, Milat A, Haynes A, Quinn E. What are the key organisational capabilities that facilitate research use in public health policy? Public Health Res Pract. 2014;25(1):e2511406.

National Association of State Mental Health Program Directors. Too significant to fail: the importance of state behavioral health agencies in the daily lives of Americans with mental illness, for their families, and for their communities. 2012.

Substance Abuse and Mental Health Services Administration. Funding and characteristics of single state agencies for substance abuse services and state mental health agencies, 2015. HHS Pub. No. (SMA) SMA-17-5029. Rockville: Substance Abuse and Mental Health Services Administration; 2017.

Mental Health Liaison Group. Appropriations recommendations for fiscal year 2021. https://www.mhlg.org/wordpress/wp-content/uploads/2020/03/MHLG_FY2021_Approps_Request_FINAL_3.2.20.pdf. Accessed 13 Jan 2021.

National Association of State Mental Health Program Directors. 2020 Rosters. https://www.nasmhpd.org/content/nasmhpd-rosters. Accessed 13 Jan 2021.

Substance Abuse and Mental Health Services Administration. https://bgas.samhsa.gov/Module/BGAS/Users. Accessed 13 Jan 2021.

United States. Commission on Evidence-Based Policymaking. The promise of evidence-based policymaking: report of the Commission on Evidence-Based Policymaking: Commission on Evidence-Based Policymaking; 2017. https://www.cep.gov/report/cep-final-report.pdf

Brennan SE, McKenzie JE, Turner T, et al. Development and validation of SEER (Seeking, Engaging with and Evaluating Research): a measure of policymakers’ capacity to engage with and use research. Health Res Policy Syst. 2017;15(1):1.

Purtle J, Dodson EA, Brownson RC. Uses of research evidence by State legislators who prioritize behavioral health issues. Psychiatr Serv. 2016;67(12):1355–61.

Purtle J, Dodson EA, Nelson K, Meisel ZF, Brownson RC. Legislators’ sources of behavioral health research and preferences for dissemination: variations by political party. Psychiatr Serv. 2018;69(10):1105–8.

Epskamp S, Waldorp LJ, Mõttus R, Borsboom D. The Gaussian graphical model in cross-sectional and time-series data. Multivar Behav Res. 2018;53(4):453–80.

O'Brien RM. A caution regarding rules of thumb for variance inflation factors. Qual Quant. 2007;41(5):673–90.

The Pew Charitable Trusts, MacArthur Foundation. How states engage in evidence-based policymaking: a national assessment. Washington, DC; 2017. Retrieved from http://www.pewtrusts.org/-/media/assets/2017/01/how_states_engage_in_evidence_based_policymaking.pdf

Marston G, Watts R. Tampering with the evidence: a critical appraisal of evidence-based policy-making. Drawing Board. 2003;3(3):143–63.

Glasgow RE, Fisher L, Strycker LA, et al. Minimal intervention needed for change: definition, use, and value for improving health and health research. Transl Behav Med. 2014;4(1):26–33.

Moullin JC, Ehrhart MG, Aarons GA. The role of leadership in organizational implementation and sustainment in service agencies. Res Soc Work Pract. 2018;28(5):558–67.

Aarons GA, Ehrhart MG, Farahnak LR, Hurlburt MS. Leadership and organizational change for implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implement Sci. 2015;10(1):11.

Richter A, von Thiele Schwarz U, Lornudd C, Lundmark R, Mosson R, Hasson H. iLead—a transformational leadership intervention to train healthcare managers’ implementation leadership. Implement Sci. 2015;11(1):108.

Gifford W, Graham ID, Ehrhart MG, Davies BL, Aarons GA. Ottawa model of implementation leadership and implementation leadership scale: mapping concepts for developing and evaluating theory-based leadership interventions. J Healthc Leadersh. 2017;9:15.

Gifford WA, Davies B, Graham ID, Lefebre N, Tourangeau A, Woodend K. A mixed methods pilot study with a cluster randomized control trial to evaluate the impact of a leadership intervention on guideline implementation in home care nursing. Implement Sci. 2008;3(1):51.

Brownson RC, Eyler AA, Harris JK, Moore JB, Tabak RG. Getting the word out: new approaches for disseminating public health science. J Public Health Manag Pract. 2018;24(2):102.

Petkovic J, Welch V, Jacob MH, et al. The effectiveness of evidence summaries on health policymakers and health system managers use of evidence from systematic reviews: a systematic review. Implement Sci. 2016;11(1):162.

Scott JT, Larson JC, Buckingham SL, Maton KI, Crowley DM. Bridging the research–policy divide: pathways to engagement and skill development. Am J Orthopsychiatry. 2019;89(4):434.

Bogenschneider K. Positioning universities as honest knowledge brokers: best practices for communicating research to policymakers. Fam Relat. 2020;69(3):628–43.

Day E, Wadsworth SM, Bogenschneider K, Thomas-Miller J. When university researchers connect with policy: a framework for whether, when, and how to engage. J Fam Theory Rev. 2019;11(1):165–80.

Ashcraft LE, Quinn DA, Brownson RC. Strategies for effective dissemination of research to United States policymakers: a systematic review. Implement Sci. 2020;15(1):1–17.

Purtle J, Marzalik JS, Halfond RW, Bufka LF, Teachman BA, Aarons GA. Toward the data-driven dissemination of findings from psychological science. Am Psychol. 2020;75(8):1052.

Lyons RA, Kendrick D, Towner EM, et al. The advocacy for pedestrian safety study: cluster randomised trial evaluating a political advocacy approach to reduce pedestrian injuries in deprived communities. PLoS One. 2013;8(4):e60158.

Brownson RC, Dodson EA, Stamatakis KA, et al. Communicating evidence-based information on cancer prevention to state-level policy makers. J Natl Cancer Inst. 2011;103(4):306–16.

Tomayko EJ, Godlewski B, Bowman S, Settersten RA, Weber RB, Krahn G. Leveraging public health research to inform state legislative policy that promotes health for children and families. Matern Child Health J. 2019;23(6):1–6.

Bogenschneider K, Olson JR, Linney KD, Mills J. Connecting research and policymaking: implications for theory and practice from the family impact seminars. Fam Relat. 2000;49(3):327–39.

Bogenschneider K, Corbett TJ, Parrott E. Realizing the promise of research in policymaking: theoretical guidance grounded in policymaker perspectives. J Fam Theory Rev. 2019;11(1):127–47.

Wilcox BL, Weisz PV, Miller MK. Practical guidelines for educating policymakers: the family impact seminar as an approach to advancing the interests of children and families in the policy arena. J Clin Child Adolesc Psychol. 2005;34(4):638–45.

Crowley M, Scott JTB, Fishbein D. Translating prevention research for evidence-based policymaking: results from the research-to-policy collaboration pilot. Prev Sci. 2018;19(2):260–70.

Palinkas LA, Short C, Wong M. Research-practice-policy partnerships for implementation of evidence-based practices in child welfare and child mental health. 2015; http://wtgrantfoundation.org/library/uploads/2015/10/Research-Practice-Policy_Partnerships.pdf.

Williamson A, Barker D, Green S, et al. Increasing the capacity of policy agencies to use research findings: a stepped-wedge trial. Health Res Policy Syst. 2019;17(1):14.

Bullock HL, Lavis JN. Understanding the supports needed for policy implementation: a comparative analysis of the placement of intermediaries across three mental health systems. Health Res Policy Syst. 2019;17(1):82.

Fisher SH III, Herrick R. Old versus new: the comparative efficiency of mail and internet surveys of state legislators. State Polit Policy Q. 2013;13(2):147–63.

Acknowledgements

The authors thank Rozhan Ghanbari and Julia Spandorfer for their assistance with data collection. The authors also thank the survey respondents who took time from their busy schedules to share their perspectives and experiences.

Funding

National Institute of Mental Health (P50MH113662). The funder had no role in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Author information

Contributions

JP led the writing of the manuscript, conceptualized the study, and analyzed the data. JP, KN, SMH, MM, and KEM all contributed to the conceptualization of the study, data collection instruments, and development of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The study was approved by the Drexel University Institutional Review Board (1902006976).

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Supplemental Figure 1.

Frequency of Different Types of Children’s Mental Health Research Use across Different Phases of the Policy Process, State Agency Officials, Winter 2019-2020, N=224.

Additional file 2: Supplemental Table 1.

Wording of Survey Items.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Purtle, J., Nelson, K.L., Horwitz, S.M.C. et al. Determinants of using children’s mental health research in policymaking: variation by type of research use and phase of policy process. Implementation Sci 16, 13 (2021). https://doi.org/10.1186/s13012-021-01081-8

DOI: https://doi.org/10.1186/s13012-021-01081-8