Abstract

Background

In healthcare settings, system and organization leaders often control the selection and design of implementation strategies, even though frontline workers may have the most intimate understanding of the care delivery process and of the factors that facilitate and constrain evidence-based practice implementation within the local system. Innovation tournaments, a structured strategy for crowdsourcing ideas, are a promising participatory design approach that may increase the effectiveness of implementation strategies by involving end users (i.e., clinicians). We used a system-wide innovation tournament to garner ideas from clinicians about how to enhance the use of evidence-based practices (EBPs) within a large public behavioral health system.

Methods

Our innovation tournament occurred in three phases. First, we invited over 500 clinicians to share, through a web-based platform, their ideas regarding how their organizations could best support use of EBPs. Clinicians could also rate and comment on ideas submitted by others. Second, an expert panel (including behavioral scientists, system leaders, and payers) judged the submissions by rating their enthusiasm for each idea. Third, we held a community-facing event during which the six clinicians who submitted winning ideas presented their strategies to 85 attendees representing a cross-section of clinicians and system and organizational leaders.

Results

We had a high rate of participation (12.3%), more than double the average rate of previous tournaments conducted in other settings (5%). A total of 65 ideas were submitted by 55 participants representing 38 organizations. The most common categories of ideas pertained to training (42%), financing and compensation (26%), clinician support and preparation tools (22%), and EBP-focused supervision (17%); categories were not mutually exclusive. The expert panel and clinicians differed in their ratings of the ideas, highlighting the value of seeking input from multiple stakeholder groups when developing implementation strategies.

Conclusions

Innovation tournaments are a useful and feasible methodology for engaging end users, system leaders, and behavioral scientists through a structured approach to developing implementation strategies. The process and resultant strategies engendered significant enthusiasm and engagement from participants at all levels of a healthcare system. Research is needed to compare the effectiveness of strategies developed through innovation tournaments with that of strategies developed through other design approaches.

Background

A significant body of evidence supports the importance of evidence-based practice (EBP) in community mental health and health settings [1], yet these practices are largely underused by organizations and clinicians [2,3,4]. Implementation strategies are the interventions used to increase adoption, implementation, and sustainment of EBP in health services [5]. The evidence base for implementation strategies has advanced significantly in recent years, including the identification of a complex set of factors related to implementation success. These factors include organizational- and individual-level factors, as well as characteristics of the intervention and the economic, political, and social context [6,7,8]. Although there are several proposed typologies of implementation strategies [5], no systematic approach to developing these strategies has been proposed [9]. Moreover, implementation strategy development has rarely incorporated systematic stakeholder involvement in design [10], a critical oversight given research in healthcare suggesting that stakeholder input results in more effective outcomes [11, 12]. These weaknesses may explain why many implementation strategies fail to improve either implementation or clinical outcomes and why most strategies fail to engage their targeted mechanisms of action [13].

The importance of participatory approaches has long been recognized [14]. Multiple research traditions and disciplines, such as implementation science, community-partnered research, and action research, have incorporated provider input through stakeholder engagement methods and dynamic partnership [14,15,16,17,18]. Participatory design approaches are lauded for creating effective implementation strategies because they provide systematic methods to include and empower stakeholders from the earliest stages [19,20,21] and give stakeholders opportunities to be involved in design [14]. The current study uses an innovation tournament, a structured participatory design approach, as a springboard for implementation strategy co-design in community mental health. Innovation tournaments are a form of crowdsourcing in which a host issues a call for ideas to address a specific challenge or problem within a system, and frontline providers and staff who work within the system are invited to submit their ideas for how to address the challenge. After multiple rounds of screening and evaluation, a few ideas emerge as winners and the others do not advance. Systematic review evidence points to the effectiveness of such crowdsourcing participatory design strategies in generating novel and useful solutions to complex and intractable problems in areas outside of healthcare settings [22,23,24].

The aims of the current study were twofold: (a) to test the utility of an innovation tournament for developing participatory-informed implementation strategies that enhance the use of EBPs within a large behavioral health system and (b) to generate ideas from clinicians about the best ways for organizations to facilitate EBP implementation. Our study adds to the literature in several ways. First, it extends the current literature on participatory approaches in implementation science, specifically by providing proof of concept for the innovation tournament approach. We are aware of only one other study that applied the innovation tournament methodology to problems in healthcare systems, and that study included a single organization focused on improving patients’ experience of care [24]. Second, we extend this work by using an innovation tournament to address EBP implementation and by applying the method across an entire behavioral health system composed of a network of 200 independent organizations treating mental health and substance use disorders, which are affiliated only to the extent that they are subject to the same policy and funding constraints through a single policy and payer infrastructure. Third, we collected ideas directly from the stakeholders who will be targeted by implementation strategies and who have the most intimate understanding of factors that could affect the implementation process. The innovation tournament provided a structured and efficient way to integrate insights from clinicians (who have unique expertise about the problem), system leaders (who can speak to feasibility and financing), and experts in behavioral economics (who understand drivers of implementation-relevant behavior).
Behavioral science, including psychology and behavioral economics, can contribute valuable information about how best to structure implementation efforts and design implementation strategies that target organizations, which is critically important given the scarcity of resources in the mental health system.

Due to past successful experiences with recruitment within this system, we anticipated high engagement and a successful tournament. We had one hypothesis regarding the content of ideas generated in the tournament: In our past work, financial challenges such as the scarcity of resources in the mental health system and the organizational financial investment required to support EBPs have been highlighted by all stakeholders [6, 25]. In addition, clinicians have previously reported that they do not feel incentivized by their provider organizations to implement EBPs [26]. We hypothesized therefore that the majority of ideas offered by clinicians would be related to compensation and financial incentives.

Method

This study was approved by the Institutional Review Boards of the University of Pennsylvania and City of Philadelphia.

Setting

The Department of Behavioral Health and Intellectual disAbility Services (DBHIDS) in Philadelphia is a large publicly funded behavioral health system that annually serves approximately 169,000 individuals with mental health and substance use disorders. Since 2007, DBHIDS has supported the implementation of multiple EBPs [6, 26, 27], including cognitive behavior therapy [28, 29], trauma-focused cognitive-behavioral therapy [30], prolonged exposure [31], dialectical behavior therapy [32], and parent-child interaction therapy [33]. For each of the five EBPs, DBHIDS funds training and consultation in line with treatment developer recommendations, as well as staff time to coordinate implementation, training, and ongoing consultation by treatment developers. In 2013, the Evidence-Based Practice and Innovation Center (EPIC) was launched as a centralized infrastructure for EBP administration. In addition to supporting the EBP initiatives, which predated its creation, EPIC aligned policy, fiscal, and operational approaches to EBP implementation by developing systematic processes to contract for EBP delivery, hosting events to publicize EBP delivery, designating providers as EBP agencies, and creating enhanced rates for the delivery of some EBPs [27].

Procedure

Our innovation tournament procedure was based on that described by Terwiesch and colleagues [24], which was developed through the Penn Medicine Center for Health Care Innovation [34].

Recruitment

We invited by email leaders of publicly funded behavioral health organizations (n = 210), clinicians (n = 527), and other community stakeholders (e.g., leaders of community provider organizations, directors of a clinician training organization; n = 6) in Philadelphia to participate in an innovation tournament called “The Philly Clinician Crowdsourcing Challenge.” We also emailed the invitation to four local electronic mailing lists known to reach large swaths of the network (e.g., the managed care organization listserv, the community provider organization listserv). Leaders and stakeholders were asked to forward the email to clinicians, so it is impossible to know exactly how many clinicians received and read the request. Moreover, some clinicians may have received the invitation more than once (e.g., from a direct email and from their executive director). We sent leaders and stakeholders a priming email 1 week prior to the start of the tournament [35]. We then sent an initial invitation email containing the link to participate, followed by four reminder emails over the course of 5 weeks. The innovation tournament link was live for 5 weeks, from February to March 2018. Potential participants clicked on the link and provided consent.

Tournament platform

The “Your Big Idea Platform,” powered by the Penn Medicine Center for Health Care Innovation, is a web-based platform that enables crowdsourcing of ideas and solutions. Idea challenges are posted to the platform, and participants can submit ideas, rate ideas submitted by other participants on a 1–5 “star” scale, and comment on those ideas [24].

Idea elicitation prompt

The tournament prompt (i.e., the question posed to potential participants) was developed through an iterative process. The authors created an initial draft of the prompt and solicited feedback from the project’s steering committee. Our team then conducted four cognitive interviews with clinicians and policy-makers to obtain feedback on the wording, scope, and design of the prompt. The final prompt asked clinicians, “How can your organization help you use evidence-based practices in your work?” Participants could submit and rate as many ideas as they wished.

Idea vetting and selection of winners

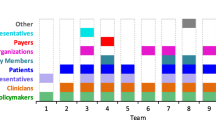

We assembled an expert panel of stakeholders, the Challenge Committee, to vote on the ideas and determine the winners. We wanted the committee to represent the perspectives of those who (a) understand what is feasible and acceptable to clinicians, (b) are in a position to implement the ideas, and (c) can evaluate an idea’s potential to leverage principles of behavioral economics. Therefore, the Challenge Committee included two agency stakeholders, two city administrators (including the Commissioner of DBHIDS), and two behavioral science experts. To minimize burden on the Challenge Committee, the research team narrowed the list from 65 to 22 ideas by eliminating ideas that were not actionable (e.g., increase inpatient stays to a minimum of 60 days) or were too similar to another, better-elaborated idea. Committee members were asked to rate their enthusiasm for each idea’s “potential to increase therapist use of evidence-based practice” using a 1–5 Likert scale (1 = not at all enthusiastic, 5 = very enthusiastic). The six ideas rated highest by the Challenge Committee were chosen as winners.
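The text states that the six highest-rated ideas won, but not how the six committee members’ 1–5 ratings were combined into a single score. A minimal sketch, assuming each idea’s score is the mean of its committee ratings and that ties are broken alphabetically (both are our assumptions, not the described procedure); the idea labels and ratings are hypothetical:

```python
from statistics import mean

# Hypothetical 1-5 enthusiasm ratings from a six-member committee.
# Idea labels and scores are invented for illustration only.
committee_ratings = {
    "idea_A": [5, 4, 5, 4, 5, 4],
    "idea_B": [3, 3, 4, 2, 3, 3],
    "idea_C": [4, 5, 4, 4, 4, 5],
    "idea_D": [2, 2, 3, 2, 1, 2],
    "idea_E": [4, 4, 3, 4, 4, 4],
    "idea_F": [5, 5, 4, 5, 4, 5],
    "idea_G": [3, 4, 3, 3, 4, 3],
    "idea_H": [1, 2, 2, 1, 2, 1],
}


def select_winners(ratings, k=6):
    """Rank ideas by mean committee rating (highest first) and return the top k.

    Aggregating by the mean and breaking ties alphabetically are assumptions
    made for this sketch.
    """
    ranked = sorted(ratings, key=lambda idea: (-mean(ratings[idea]), idea))
    return ranked[:k]


print(select_winners(committee_ratings))
```

With these made-up ratings, the two lowest-scoring ideas (means of 2.0 and 1.5) are the ones that do not advance.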

Participants who submitted winning ideas (hereafter referred to as “innovators”) were invited to a working lunch with our team to refine and develop their idea leveraging principles of behavioral economics. For example, innovators discussed how to amplify the impact of their proposed strategy using such behavioral economic principles as changing defaults, peer comparisons, loss-framed incentives, and nudges [36,37,38,39].

During this meeting, innovators prepared a brief presentation summarizing their idea for a community-facing event, The Idea Gala, held in May 2018. Everyone who received the invitation to participate in the tournament (described above) was invited to the event. During The Idea Gala, each of the six innovators presented their idea for 3 min. Attendees had the option of using an online polling system (www.mentimeter.com) to answer the question “How excited are you by this idea?” on a 1–10 Likert scale (1 = not at all excited, 10 = very excited) and to submit brief comments about the ideas. After the presentation of each idea, there were 10 min of questions from the audience and an unstructured discussion of the idea by the Challenge Committee. Challenge Committee members focused their comments on the problem, the solution, applications of behavioral economic principles to enhance implementation, metrics for success, and resources necessary for implementation.

Coding and categorization of ideas

After reviewing submitted ideas, we developed a coding scheme to categorize the ideas into eight non-mutually exclusive categories: (a) training, (b) financing and compensation, (c) clinician support and preparation tools, (d) client support, (e) EBP-focused supervision, (f) changes to the scope or definition of EBPs, (g) changes to the system and structure of publicly funded behavioral health care, and (h) other. Two study team members (RS and VB) categorized all the ideas; inter-rater reliability was excellent (κ = .92). Discrepancies were resolved through discussion.
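The paragraph above reports a single κ without stating how it was computed. Assuming Cohen’s kappa was calculated on present/absent codes for a given category (an assumption, since the categories are non-mutually exclusive and the text does not specify the procedure), the statistic compares observed agreement p_o with chance agreement p_e as κ = (p_o − p_e)/(1 − p_e). The minimal sketch below implements that formula; the two raters’ codes are hypothetical and are not the study data.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labeled the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is observed agreement and
    p_e is the agreement expected by chance from each rater's marginals.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same, non-empty set of items")
    n = len(rater_a)
    labels = set(rater_a) | set(rater_b)
    # Observed agreement: share of items both raters labeled identically.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    p_e = sum((rater_a.count(lab) / n) * (rater_b.count(lab) / n) for lab in labels)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes for one category (1 = idea coded as "training", 0 = not).
rater_1 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 1]
rater_2 = [1, 1, 0, 0, 1, 0, 0, 0, 0, 1]

print(round(cohens_kappa(rater_1, rater_2), 2))  # 0.8
```

Here the raters agree on 9 of 10 ideas (p_o = .90), but chance agreement is .50, so κ is .80 rather than .90; this correction for chance is why kappa is preferred over raw percent agreement.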

Results

Tournament feasibility and respondent characteristics

A total of 65 ideas were submitted by 55 participants from 38 behavioral health organizations spanning multiple levels of care (e.g., outpatient, inpatient, residential) and specialties (mental health, substance use). The majority of participants were white (61.8%) and female (61.8%). Additionally, 59 participants submitted 899 ratings, and 22 participants submitted 55 comments on the 65 ideas. Of the clinicians (n = 527) who were contacted directly to participate in the tournament, 12.3% submitted an idea. According to personal communications with “Your Big Idea” staff, this response rate exceeds the average response rate (5%) of challenges administered through the “Your Big Idea” platform.
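The rates in this paragraph can be checked against the counts it reports. In the short computation below, every count comes from the text; only the choice of numerator is ours. Dividing the 65 submitted ideas by the 527 directly contacted clinicians reproduces the reported 12.3%, while dividing the 55 idea-submitting participants by the same denominator gives about 10.4%. Either figure is more than double the 5% platform average. Because invitations were also forwarded, the true number of clinicians reached is unknown, so both ratios are approximations.

```python
# Counts reported in the text.
clinicians_contacted = 527
ideas_submitted = 65
idea_submitters = 55
ratings_submitted = 899
platform_average_pct = 5.0  # average "Your Big Idea" response rate cited in the text


def pct(numerator, denominator):
    """Return numerator / denominator as a percentage rounded to one decimal."""
    return round(100 * numerator / denominator, 1)


ideas_rate = pct(ideas_submitted, clinicians_contacted)      # ideas per contacted clinician
submitter_rate = pct(idea_submitters, clinicians_contacted)  # submitters per contacted clinician
ratings_per_idea = round(ratings_submitted / ideas_submitted, 1)

print(ideas_rate, submitter_rate, ratings_per_idea)  # 12.3 10.4 13.8
print(ideas_rate > 2 * platform_average_pct, submitter_rate > 2 * platform_average_pct)  # True True
```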

Categories of solutions

Submitted ideas encompassed all aspects of supporting EBPs and were coded into the eight non-mutually exclusive categories described above. The most common category was training (42%), followed by changes to financing and compensation (26%), including paid training, financial incentives, and rewards. Substantial proportions of ideas involved clinician support and preparation tools (22%) and EBP-focused supervision (17%). Smaller categories included changes to the scope or definition of EBPs (8%), changes to the system and structure of publicly funded behavioral health care (6%), and client support (5%). Table 1 presents example ideas in each category, along with the ratings they received from other participants.
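Because the categories are not mutually exclusive, the shares above do not partition the ideas: the seven named percentages already sum to 126% before the “other” category is counted. The sketch below illustrates, with hypothetical coded ideas (not the study data), how such shares are computed when a single idea can carry several category codes.

```python
from collections import Counter

# Hypothetical coded ideas: each idea may carry several category codes,
# because the coding scheme is non-mutually exclusive.
coded_ideas = [
    {"training"},
    {"training", "financing and compensation"},
    {"clinician support and preparation tools"},
    {"training", "EBP-focused supervision"},
    {"financing and compensation"},
]


def category_shares(ideas):
    """Percentage of ideas coded into each category (each idea counted once per category)."""
    counts = Counter(code for codes in ideas for code in codes)
    n = len(ideas)
    return {category: round(100 * count / n, 1) for category, count in counts.items()}


shares = category_shares(coded_ideas)
print(shares)
print(sum(shares.values()))  # exceeds 100 because codes overlap
```

Each share uses the number of ideas as its denominator, so a category’s percentage reads as “the proportion of ideas that touched on this category,” which is how the percentages in this section are reported.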

Winning ideas and The Idea Gala

See Table 2 for the Challenge Committee’s ratings of the top winning ideas, each innovator’s idea, and the target problem addressed by the idea.

A total of 85 participants (12 project staff, 6 Challenge Committee members, 6 innovators, 61 other attendees) representing 22 organizations attended The Idea Gala. Attendees’ ratings of the innovators’ ideas are shown in column 3 of Table 2, alongside the Challenge Committee’s ratings in column 2. Both groups rated “pre-session preparation,” defined as creating a relaxing waiting room to prepare clients for the upcoming session, the highest among the 6 winning ideas.
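Table 2 places the Challenge Committee’s ratings (1–5 scale) beside the Gala attendees’ ratings (1–10 scale), so the raw numbers are not directly comparable. One simple way to read the two columns side by side, offered as an illustrative assumption rather than the authors’ analysis, is min–max rescaling of each rating onto a common 0–1 range, (x − min)/(max − min). This treats Likert points as equally spaced, which is itself an assumption. The ratings in the sketch are hypothetical, not values from Table 2.

```python
def rescale(score, low, high):
    """Min-max rescale a rating from the [low, high] scale onto [0, 1]."""
    if not low <= score <= high:
        raise ValueError(f"score {score} is outside the {low}-{high} scale")
    return (score - low) / (high - low)


# Hypothetical ratings for one idea: committee mean on the 1-5 scale,
# Gala attendee mean on the 1-10 scale.
committee_mean = 4.2
attendee_mean = 8.1

print(round(rescale(committee_mean, 1, 5), 3), round(rescale(attendee_mean, 1, 10), 3))  # 0.8 0.789
```

On the common scale, a committee mean of 4.2 and an attendee mean of 8.1 turn out to be close (0.80 vs. 0.79), even though the raw numbers look quite different.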

Discussion

The present study is the first to use an innovation tournament to crowdsource ideas from community clinicians about how they can best be supported to use EBPs. The main purpose and benefit of an innovation tournament is to engage a community through participatory design to contribute its best ideas. While many studies have elicited stakeholder suggestions, preferences, and targets for intervention (e.g., [40]), an innovation tournament makes a unique contribution as a participatory method for the following reasons. First, an innovation tournament can engage a virtually unlimited number of people in a fun and creative way at low incremental cost, while giving each participant an equal voice through the submission of an idea. Second, a tournament allows for quick identification of potentially high-impact ideas at every level of a system and can serve as a training ground that empowers stakeholders to develop good ideas and improves their ability to express them. Lastly, a tournament provides public acknowledgement of the people who come up with ideas, which may increase buy-in to the ideas when they are ultimately implemented (the “IKEA effect”) and encourage future participation. Innovation tournaments are therefore a relatively inexpensive and efficient way to solicit, and powerfully engage, end-user input.

Our tournament was a success in this regard: 55 participants submitted 65 ideas, and 59 participants submitted 899 ratings of those ideas. The number of submissions and overall participation exceeded our expectations, and our celebratory event engaged many more members of our community. The study team was continuously struck by the high level of community stakeholder enthusiasm throughout the study period. While the use of crowdsourcing in health research is nascent [22], this study demonstrates that an innovation tournament can be not only feasible to implement across an entire system but also a successful and, judging by participants’ enthusiasm, acceptable means of engaging a community of service providers in sharing their expertise to generate useful implementation strategies that address local barriers, contexts, and populations.

The prompt for our innovation tournament was purposefully broad in order to encourage clinicians to share their unique local knowledge about barriers that interfere with EBP implementation and their innovative ideas for overcoming those barriers. We wanted to capture the full spectrum of levers that support clinicians in implementing EBPs [34]. The most common theme reflected in the ideas was training. This was surprising given that over the last 10 years, DBHIDS has supported implementation of several EBPs in over 50 mental health and substance use provider agencies by funding training, consultation, coordination, and administration through EPIC, in some cases reimbursing for lost therapist time, and providing an enhanced reimbursement rate [26, 27]. Despite this, clinicians within this system overwhelmingly reported that additional (free) training would increase their use of EBPs with their clients. Active training is clearly an important vehicle for changing therapist behavior, and training also affects knowledge and attitudes [41]. Nonetheless, training is not the answer to every implementation problem: even clinicians trained via current gold-standard approaches (i.e., workshop, manual, and clinical supervision) often do not demonstrate fidelity [42].

An alternative and more parsimonious explanation for well-trained clinicians’ thirst for additional training is that, put simply, EBPs are hard. Almost every EBP requires a skilled interventionist to deliver a complex, multi-component repertoire of behaviors while responding to the client’s inputs and needs. It is striking that roughly two thirds of the ideas put forth in the tournament targeted training and/or clinician support tools. Evidence from the research literature also suggests that trained clinicians do not feel confident delivering EBPs [43]. If we start from the assumption that clinicians want to deliver EBPs and would like to feel confident that they can enact effective treatment, we might accelerate implementation by investing in implementation strategies that make our treatments easier to deliver, rather than in additional training initiatives. Future research on implementation strategy design might draw on insights from behavioral economics such as “nudges” and changes to choice architecture; for example, simple checklists can make multistep procedures easier [44,45,46].

Our hypothesis that the majority of ideas would relate to compensation and financial incentives was only partially supported: over a quarter of the submitted ideas pertained to financing and compensation, making it the second most common category after training. As we found in our prior research, stakeholders note that EBPs carry higher marginal costs that need to be reimbursed and that existing reimbursement strategies rarely cover these costs [6, 25]. While some ideas called for additional lump-sum compensation, relatively few involved complex incentive structures such as those used in pay-for-performance or value-based payment models. This may reflect the “bottom up” approach of the innovation tournament; involving clinicians helped us identify new opportunities that might have been overlooked had innovation been left to administrators and executives alone. Ultimately, a combination of “top down” and “bottom up” approaches may tell us the most about how to motivate complex, expensive repertoires of behavior such as those required to implement EBPs.

There are limitations to the study design and methodology that deserve mention and highlight avenues for future research. First, because our sample was drawn from a single system that is clearly aligned with promoting EBPs, it may not be representative of clinicians in this system or in other public behavioral health systems. The specificity of implementation strategies that emerge from an innovation tournament conducted within a given service system is both an advantage and a limitation of this approach: the strategies developed through the tournament may be ideal for a particular context and system but may not generalize to other contexts and systems. Second, beyond “winning” the tournament, clinicians in our study were not promised that their ideas would be implemented. This may have restricted clinicians’ willingness to participate or encouraged ideas that were not “implementable.” Given evidence of discordance between behavior and stated preferences [47], we framed tournament ideas as an “input” to design rather than relying entirely on stated preferences. Translating these inputs into design will require additional scholarship and analysis. We are currently re-analyzing the ideas with an emphasis on behavioral processes and barriers, not just elicited preferences, as part of a multifaceted approach to implementation strategy design. Third, although our response rate was more than double that of the average innovation tournament, some tournaments have generated much higher participation rates, even in larger organizations. Future research should examine how participants’ expectations regarding the eventual implementation of their ideas influence engagement. Fourth, we elected to form a Challenge Committee of city administrators, agency stakeholders, and experts in behavioral economics to decide on the winners; a challenge committee composed of different stakeholder groups (e.g., clinicians, patients) may have selected different strategies as winners.
Future studies should compare whether different stakeholder groups select the same or different strategies and, most importantly, whether these choices predict the effectiveness of strategies used to improve EBP implementation within the system.

Conclusion

Using a novel methodology for participatory design, this study highlights the feasibility and utility of engaging clinicians (arguably the ultimate target of implementation strategies) through a structured, system-wide innovation tournament. Moreover, the innovation process does not have to end with the final, winning ideas [24]. Analysis of all of the ideas (or better yet, re-engagement with those who did not submit winning ideas) can provide fertile ground for future research. We believe the tournament succeeded in engaging stakeholders with critical expertise and provided valuable data that further the science of developing strategies to improve the implementation of EBPs. More importantly, the success of our tournament lay in engaging and empowering the community. Although we did not measure acceptability directly, the overwhelming enthusiasm for this project from clinicians, agency administrators, and city officials indicated to us that clinicians were pleased to be asked and felt validated that their ideas and viewpoints were not only heard but celebrated. The clear implication, in our view, is that involving clinicians in every stage of implementation (from strategy design to sustainability), consistent with community-participatory research, is essential to designing effective implementation strategies that improve the quality of community-based care.

Availability of data and materials

Data will be made available upon request. Requests for access to the data can be sent to the Penn ALACRITY Data Sharing Committee, which consists of Rinad Beidas, PhD, David Mandell, ScD, Kevin Volpp, MD, PhD, Alison Buttenheim, PhD, MBA, Steven Marcus, PhD, and Nathaniel Williams, PhD. Requests can be sent to the Committee’s coordinator, Kelly Zentgraf, at zentgraf@upenn.edu, 3535 Market Street, 3rd Floor, Philadelphia, PA 19107, 215-746-6038.

Abbreviations

- DBHIDS: Department of Behavioral Health and Intellectual disAbility Services
- EBP: Evidence-based practice
- EPIC: Evidence-Based Practice and Innovation Center

References

McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: a review of current efforts. Am Psychol. 2010;65(2):73–84.

Aarons GA, Wells RS, Zagursky K, Fettes DL, Palinkas LA. Implementing evidence-based practice in community mental health agencies: a multiple stakeholder analysis. Am J Public Health. 2009;99:2087–95.

Hoagwood K, Burns BJ, Kiser L, Ringeisen H, Schoenwald SK. Evidence-based practice in child and adolescent mental health services. Psychiatr Serv. 2001;52:1179–89.

Kazdin AE. Evidence-based treatment and practice: new opportunities to bridge clinical research and practice, enhance the knowledge base, and improve patient care. Am Psychol. 2008;63:146–59.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21.

Beidas RS, Stewart RE, Adams DR, Fernandez T, Lustbader S, Powell BJ, et al. A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system. Admin Pol Ment Health. 2016;43:893–908.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Admin Pol Ment Health. 2011;38:4–23.

Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2017;44:177–94.

Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, McHugh SM, Weiner BJ. Enhancing the impact of implementation strategies in healthcare: a research agenda. Front Public Health. 2019;7:3. https://doi.org/10.3389/fpubh.2019.00003.

Lindhiem O, Bennett CB, Trentacosta CJ, McLear C. Client preferences affect treatment satisfaction, completion, and clinical outcome: a meta-analysis. Clin Psychol Rev. 2014;34:506–17.

Fleurence R, Selby JV, Odom-Walker K, Hunt G, Meltzer D, Slutsky JR, et al. How the patient-centered outcomes research institute is engaging patients and others in shaping its research agenda. Health Aff (Millwood). 2013;32:393–400.

Williams NJ. Multilevel mechanisms of implementation strategies in mental health: integrating theory, research, and practice. Admin Pol Ment Health. 2016;43:783–98.

Simonsen J, Robertson T. Routledge international handbook of participatory design. New York: Routledge; 2013.

Pellecchia M, Mandell DS, Nuske HJ, Azad G, Wolk CB, Maddox BB, et al. Community–academic partnerships in implementation research. J Community Psychol. [cited 17 Jul 2018]. https://doi.org/10.1002/jcop.21981.

Green LW. Making research relevant: if it is an evidence-based practice, where’s the practice-based evidence? Fam Pract. 2008;25:i20–4.

Casey M, O’Leary D, Coghlan D. Unpacking action research and implementation science: implications for nursing. J Adv Nurs. 2018;74:1051–8.

Sikkens JJ, van Agtmael MA, Peters EJG, Lettinga KD, van der Kuip M, Vandenbroucke-Grauls CMJE, et al. Behavioral approach to appropriate antimicrobial prescribing in hospitals: the Dutch Unique Method for Antimicrobial Stewardship (DUMAS) participatory intervention study. JAMA Intern Med. 2017;177:1130–8.

Frank L, Forsythe L, Ellis L, Schrandt S, Sheridan S, Gerson J, et al. Conceptual and practical foundations of patient engagement in research at the patient-centered outcomes research institute. Qual Life Res. 2015;24:1033–41.

Lyon AR, Koerner K. User-centered design for psychosocial intervention development and implementation. Clin Psychol Sci Pract. 2016;23:180–200.

Chambers DA, Azrin ST. Research and services partnerships: partnership: a fundamental component of dissemination and implementation research. Psychiatr Serv. 2013;64:509–11.

Ranard BL, Ha YP, Meisel ZF, Asch DA, Hill SS, Becker LB, et al. Crowdsourcing--harnessing the masses to advance health and medicine, a systematic review. J Gen Intern Med. 2014;29:187–203.

Asch DA, Terwiesch C, Mahoney KB, Rosin R. Insourcing health care innovation. N Engl J Med. 2014;370:1775–7.

Terwiesch C, Mehta SJ, Volpp KG. Innovating in health delivery: the Penn medicine innovation tournament. Healthcare. 2013;1:37–41.

Stewart RE, Adams DR, Mandell DS, Hadley TR, Evans AC, Rubin R, et al. The perfect storm: collision of the business of mental health and the implementation of evidence-based practices. Psychiatr Serv. 2016;67:159–61.

Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, et al. Predictors of community therapists’ use of therapy techniques in a large public mental health system. JAMA Pediatr. 2015;169:374–82.

Powell BJ, Beidas RS, Rubin RM, Stewart RE, Wolk CB, Matlin SL, et al. Applying the policy ecology framework to Philadelphia’s behavioral health transformation efforts. Admin Pol Ment Health. 2016;43:909–26.

Creed TA, Stirman SW, Evans AC, Beck AT. A model for implementation of cognitive therapy in community mental health: the Beck Initiative. Behav Ther. 2010;37:56–65.

Stirman SW, Buchhofer R, McLaulin JB, Evans AC, Beck AT. The Beck Initiative: a partnership to implement cognitive therapy in a community behavioral health system. Psychiatr Serv. 2009;60:1302–4.

Cohen JA, Deblinger E, Mannarino AP, Steer R. A multi-site, randomized controlled trial for children with abuse-related PTSD symptoms. J Am Acad Child Adolesc Psychiatry. 2004;43:393–402.

Foa EB, Hembree EA, Cahill SP, Rauch SAM, Riggs DS, Feeny NC, et al. Randomized trial of prolonged exposure for posttraumatic stress disorder with and without cognitive restructuring: outcome at academic and community clinics. J Consult Clin Psychol. 2005;73:953–64.

Linehan MM, Comtois KA, Murray AM, Brown MZ, Gallop RJ, Heard HL, et al. Two-year randomized controlled trial and follow-up of dialectical behavior therapy vs therapy by experts for suicidal behaviors and borderline personality disorder. Arch Gen Psychiatry. 2006;63:757–66.

Thomas R, Abell B, Webb HJ, Avdagic E, Zimmer-Gembeck MJ. Parent-child interaction therapy: a meta-analysis. Pediatrics. 2017;140(3). https://doi.org/10.1542/peds.2017-0352.

Penn Medicine Center for Health Care Innovation. Available from: https://healthcareinnovation.upenn.edu/.

Dillman DA. The design and administration of mail surveys. Annu Rev Sociol. 1991;17:225–49.

Patel MS, Day SC, Halpern SD, et al. Generic medication prescription rates after health system–wide redesign of default options within the electronic health record. JAMA Intern Med. 2016;176(6):847–8. https://doi.org/10.1001/jamainternmed.2016.1691.

Meeker D, Linder JA, Fox CR, et al. Effect of behavioral interventions on inappropriate antibiotic prescribing among primary care practices: a randomized clinical trial. JAMA. 2016;315(6):562–70. https://doi.org/10.1001/jama.2016.0275.

Meeker D, Knight TK, Friedberg MW, et al. Nudging guideline-concordant antibiotic prescribing: a randomized clinical trial. JAMA Intern Med. 2014;174(3):425–31. https://doi.org/10.1001/jamainternmed.2013.14191.

Navathe AS, Volpp KG, Caldarella KL, et al. Effect of financial bonus size, loss aversion, and increased social pressure on physician pay-for-performance: a randomized clinical trial and cohort study. JAMA Netw Open. 2019;2(2):e187950. https://doi.org/10.1001/jamanetworkopen.2018.7950.

Ajslev JZN, Persson R, Andersen LL. Contradictory individualized self-blaming: a cross-sectional study of associations between expectations to managers, coworkers, one-self and risk factors for musculoskeletal disorders among construction workers. BMC Musculoskelet Disord. 2017;18 [cited 19 Mar 2019]. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5223457/.

Beidas RS, Kendall PC. Training therapists in evidence-based practice: a critical review of studies from a systems-contextual perspective. Clin Psychol Sci Pract. 2010;17:1–30.

Sholomskas D, Syracuse-Siewert G, Rounsaville B, Ball S, Nuro K, Carroll K. We don’t train in vain: a dissemination trial of three strategies of training clinicians in cognitive behavioral therapy. J Consult Clin Psychol. 2005;73:106–15.

Mulkens S, de Vos C, de Graaff A, Waller G. To deliver or not to deliver cognitive behavioral therapy for eating disorders: replication and extension of our understanding of why therapists fail to do what they should do. Behav Res Ther. 2018;106:57–63.

Matjasko JL, Cawley JH, Baker-Goering MM, Yokum DV. Applying behavioral economics to public health policy. Am J Prev Med. 2016;50:S13–9.

Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–91. [cited 27 Aug 2018]. Available from: http://citec.repec.org/d/ecm/emetrp/v_47_y_1979_i_2_p_263-91.html

Patel MS, Volpp KG, Asch DA. Nudge units to improve the delivery of health care. N Engl J Med. 2018;378:214–6.

Gong CL, Hay JW, Meeker D, Doctor JN. Prescriber preferences for behavioural economics interventions to improve treatment of acute respiratory infections: a discrete choice experiment. BMJ Open. 2016;6:e012739.

Acknowledgements

We are especially grateful for the support that DBHIDS and EPIC have provided for this project. We gratefully acknowledge Deirdre Darragh and the Penn Medicine Your Big Idea Platform for administering the tournament. We thank David Mandell for his comments on a previous version of this manuscript. We also thank all of the clinicians who participated in the study and everyone who helped recruit them; their efforts made this work possible.

Funding

P50MH113840 (Beidas, Mandell, Volpp)

Author information

Contributions

All authors substantially contributed to the conception, design, and analysis of the work and provided final approval of the version to be published.

Ethics declarations

Ethics approval and consent to participate

The Institutional Review Boards of the University of Pennsylvania and the City of Philadelphia approved all study procedures, and all ethical guidelines were followed.

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Stewart, R.E., Williams, N., Byeon, Y.V. et al. The clinician crowdsourcing challenge: using participatory design to seed implementation strategies. Implementation Sci 14, 63 (2019). https://doi.org/10.1186/s13012-019-0914-2

DOI: https://doi.org/10.1186/s13012-019-0914-2