Abstract

Background

While ‘dose’ is broadly understood as the ‘amount’ of an intervention, there is considerable variation in how this concept is defined. How we conceptualise, and subsequently measure, the dose of interventions has important implications for understanding how interventions produce their effects and are subsequently resourced and scaled up. This paper aims to explore the degree to which dose is currently understood as a distinct and well-defined implementation concept outside of clinical settings.

Methods

We searched four databases (MEDLINE, PsycINFO, EBM Reviews and Global Health) to identify original research articles published between 2000 and 2015 on health promotion interventions that contained the word ‘dose’ or ‘dosage’ in the title, abstract or keywords. We identified 130 articles meeting inclusion criteria and extracted data on how dose/dosage was defined and operationalised, which we then synthesised to reveal key themes in the use of this concept across health promotion interventions.

Results

Dose was defined in a variety of ways, including in relation to the amount of intervention delivered and/or received, the level of participation in the intervention and, in some instances, the quality of intervention delivery. We also observed some conflation of concepts that are traditionally kept separate (such as fidelity) either as slippage or as part of composite measures (such as ‘intervention dose’).

Discussion

Dose is not a well-defined or consistently applied concept in evaluations of health promotion interventions. While current approaches to conceptualisation and measurement of dose are suitable for interventions in organisational settings, they are less well suited to policies delivered at a population level. Dose often accompanies a traditional monotonic linear view of causality (e.g. dose response) which may or may not fully represent the intervention’s theory of how change is brought about. Finally, we found dose and dosage to be used interchangeably. We recommend a distinction between these terms, with ‘dosage’ having the advantage of capturing change in the amount ‘dispensed’ over time (in response to effects achieved). Dosage therefore acknowledges the inevitable dynamics and complexity of implementation.

Introduction

Implementation of effective interventions at scale is essential to improving population health [1]. Health promotion interventions often seek to address multiple risk factors simultaneously and at various levels, including the individual, interpersonal, organisational and/or environmental level. Such interventions are often implemented within complex systems, which may respond in unpredictable ways to the intervention [2]. The need to understand how such interventions are implemented is pressing, particularly if we are to draw conclusions about their effectiveness and enable implementation of the same interventions in different settings.

Several frameworks have been developed to guide the evaluation of implementation efforts [3,4,5,6,7,8]. A key concept in many of these frameworks is dose, broadly understood to refer to the ‘amount’ of intervention provided. Dose is a particularly important concept, as understanding how much of an intervention is delivered (and to whom) is critical if we are to replicate and scale up interventions, provide appropriate resourcing and have confidence that observed effects (or lack thereof) can be attributed to the intervention [9]. However, understanding dose in relation to health promotion interventions is not necessarily straightforward.

When it comes to the practice of medicine, dose is commonly understood to mean the amount of a treatment, usually a drug, delivered with the aim of achieving a particular physiological response [10]. While the therapeutic effect of the treatment may vary as a result of other factors (e.g. interactions with other medications, differences in metabolism), the measurement of the dose (i.e. amount) of a drug being delivered is usually straightforward and is typically measured in metric mass units (e.g. milligrammes). However, outside of medicine, the operationalisation and measurement of dose becomes more difficult.

How dose is conceptualised differs somewhat across implementation frameworks. For example, Dusenbury and colleagues [4] define dose as the ‘amount of program content received by participants’ (p. 241, italics added), while Dane and Schneider [3] refer to ‘exposure,’ which focuses on the delivery of the intervention and includes ‘(a) the number of sessions implemented; (b) the length of each session; or (c) the frequency with which program techniques were implemented’ (p. 45). Steckler and Linnan [11] distinguish between ‘dose delivered’, defined as the ‘number or amount of intended units of each intervention or each component delivered or provided,’ and ‘dose received,’ which is ‘the extent to which participants actively engage with, interact with, are receptive to, and/or use materials or recommended resources’ (p. 12). In some of these frameworks, dose is a single element of a larger framework. In others, dose is a composite concept made up of multiple elements. For example, Wasik and colleagues [12] distinguish between implementation and intervention dose and further subdivide the latter into the amount of intervention intended, offered, and received. Legrand and colleagues [7] provide an equation to calculate dose that involves measuring delivery quantity and quality and participation quantity and quality. Finally, Cheadle and colleagues [13] introduce the concept of ‘population dose’ which is defined as a product of the reach of an intervention and the strength of the effect (estimated as the effect on each person reached by the intervention). See Table 1 for an overview of how dose is conceptualised across these frameworks.

How we conceptualise and measure the dose of interventions has important implications for understanding how interventions produce their effects, and for whether and how interventions are resourced and scaled up. Policy makers want to know what they need to fund and implement to produce population-level health gains, while practitioners want to know ‘how much’ they need to do to ensure that health promotion programmes ‘work’ at a local level. As indicated in Table 1, there is considerable variation in how dose is conceptualised and measured. These incongruences are important regardless of whether one subscribes to the view that the fidelity of the intervention lies in the faithful replication of particular core programme components [15] or to a more complex understanding of the intervention, in which effects are seen to derive more from interaction with the dynamic properties of the system into which the intervention is introduced [16, 17]. It is therefore vital to think critically about what dose means so that researchers and policymakers can gain the evidence they need to inform decisions.

Aim

The variation in how dose is defined across the frameworks in Table 1 prompted us to undertake an investigation of the nature and extent of differences in how dose has been conceptualised and measured in the implementation of health promotion interventions. We chose to focus our review on health promotion interventions because of the wide variation of intervention types that exist in this space. Health promotion interventions may include, for example, educational, behavioural, environmental, regulatory and/or structural actions, such as mass media campaigns; legislation and regulation; changes to infrastructure; and community, school, and workplace health and safety programmes. In doing so, we sought to identify key elements of dose that have been monitored during implementation and understand the degree of variation in the application of dose across interventions.

Methods

A scoping review was used as it allows for rapid mapping of the key concepts underpinning a research area [18]. The methodology for this scoping review was based on the framework outlined by Arksey and O’Malley [19] and ensuing recommendations made by Levac and colleagues [20].

Identifying relevant studies

We searched four electronic databases, MEDLINE, PsycINFO, EBM Reviews and Global Health, to identify articles published in English between 2000 and 2015. See Table 2 for search terms and strategy. Search terms were piloted and refined prior to use, including consultation with experts and checking for capture of studies that the authors expected to be included.

Study selection

After removing duplicates, a total of 4611 articles were identified. Given the large number of results, we decided to further focus the search by identifying a subset of articles that contained the term ‘dose’ or ‘dosage’ within the title, abstract or keywords, using a structured field search in EndNote.
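The field-restricted filter described above can be illustrated with a minimal sketch. It is not the EndNote search itself: the record layout, the field names and the example records are hypothetical, and it assumes a plain case-insensitive substring match, which would also pick up words such as ‘doses’ or ‘overdose’.

```python
# Hypothetical field names; a real reference library may label these differently.
FIELDS = ("title", "abstract", "keywords")
TERMS = ("dose", "dosage")

def mentions_dose(record):
    """Return True if any searched field contains 'dose' or 'dosage'.

    Assumption: case-insensitive substring matching, so 'doses' and
    'overdose' also count as matches.
    """
    text = " ".join(str(record.get(field, "")) for field in FIELDS).lower()
    return any(term in text for term in TERMS)

# Invented records, for illustration only.
records = [
    {"title": "A school nutrition programme",
     "abstract": "We report dose delivered.", "keywords": ""},
    {"title": "Walking groups for older adults",
     "abstract": "Outcomes at 12 months.", "keywords": "physical activity"},
    {"title": "Programme dosage and outcomes", "abstract": "", "keywords": ""},
]

subset = [record for record in records if mentions_dose(record)]
print(len(subset))
```

A whole-word match would be stricter; which behaviour applies depends on the reference manager's search settings, so the choice here is only an assumption.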

The criteria for study inclusion were refined through discussion among the research team as the reviewers became more familiar with the research [20]. A two-stage screening process was used to select studies for inclusion: (1) title and abstract review and (2) full-text review. Articles were included if they were peer-reviewed, original research which used the concept of dose in relation to a health promotion intervention. Studies of medical treatments (e.g. medication, surgery), conference abstracts and dissertations were excluded. Studies of effectiveness were included, as well as studies of diffusion and scale-up, as both types of studies have the opportunity to operationalise dose as part of intervention implementation. Hence, both types of studies can inform ideas about best ways to measure dose.

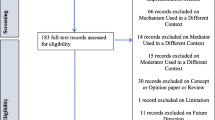

Title and abstract review was conducted by one reviewer (SR), and a second reviewer (KC) reviewed a random sample of 77 articles (20%) for reliability purposes. Percentage agreement was 95% (Cohen’s kappa k = 0.90) and all disagreements were discussed and resolved. Figure 1 outlines the flow of articles through the review process.

Data extraction and synthesis

We developed a template to extract study characteristics, intervention details, sample, dose terms and definitions and other implementation concepts (see Additional file 1 for data extraction template). The template was pretested on a randomly selected subset of five articles. The characteristics of each full-text article were then extracted by one reviewer, while a second reviewer performed data extraction on a random sample of 20% of articles to check for consistency. There was 91% agreement (k = 0.52) between the coders and all disagreements were discussed and resolved.
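For readers less familiar with the two reliability statistics reported here, the following is a minimal sketch of how percentage agreement and Cohen’s kappa are computed for two raters. The function names and the example ratings are invented for illustration and are not the review’s data.

```python
from collections import Counter

def percent_agreement(rater_a, rater_b):
    """Proportion of items on which the two raters gave the same label."""
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    # Chance agreement from each rater's marginal label frequencies.
    p_expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if p_expected == 1:
        return 1.0  # both raters used a single identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)

# Invented screening decisions for 20 items; 'exclude' dominates.
rater_a = ["include", "include"] + ["exclude"] * 18
rater_b = ["include", "exclude", "include"] + ["exclude"] * 17

print(percent_agreement(rater_a, rater_b))
print(cohens_kappa(rater_a, rater_b))
```

Because kappa discounts agreement expected by chance, a sample dominated by one category can show high raw agreement alongside a more modest kappa, as the invented example does.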

Extracted data were imported into NVivo qualitative data management software [21] for further coding and synthesis using an inductive approach to identify key patterns in how dose was defined and operationalised across studies, and the original articles were revisited frequently to check interpretations. Data synthesis was performed by one reviewer (SR) and refined through ongoing discussion with other authors.

Results

Characteristics of included articles

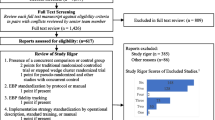

A total of 130 articles were included in the review. Interventions were conducted across a range of settings, most frequently school, workplace and health care settings. The most common types of interventions were those which aimed to provide information or education or increase awareness about an issue, such as school curriculums, mass media campaigns and information leaflets. Interventions that sought to support behaviour change through strategies such as goal setting, motivational interviewing and counselling were also common in this sample. Less common were interventions providing financial incentives or involving restrictions, regulation or structural and environmental change. See Fig. 2 for a summary of article characteristics and Additional file 2 for details of all included articles.

Fig. 2 Characteristics of included articles. Bar charts depicting the key characteristics of studies in the sample. From top left to bottom right: (1) intervention target: number of studies targeting each health behaviour or disease; (2) intervention setting: number of studies conducted within each setting; (3) type of study: number of studies that were defined as protocol, process or outcome evaluations; and (4) continent: number of studies conducted in each continent

The number of articles using the concept of dose has increased in recent years, with over two-thirds of the papers in our sample having been published since 2010. Within our sample, physical activity and nutrition were the most frequent intervention targets for studies using the concept of dose. A cumulative frequency chart (see Fig. 3) indicates that the use of ‘dose’ in interventions targeting nutrition and diet, physical activity and weight management has increased considerably in the last decade.

Fig. 3 Cumulative frequency of research articles using ‘dose’ over time. Number of studies containing reference to the concept of dose according to health topic. For clarity/ease of interpretation, intervention target categories containing fewer than five studies in total across the sample timeframe (diabetes prevention, drugs and alcohol, oral health, cancer risk, CVD risk) are not shown in the cumulative frequency graph

Variation in terms used to refer to the concept of ‘dose’

Across the sample, there was a range of terms used to refer to dose. Here, we provide a brief overview of these terms and how they were applied. In the subsequent section, we unpack the operationalisation of dose in more depth.

Dose and dosage were used somewhat interchangeably

Nearly half of the studies used the term dose (n = 56), with a handful using the term dosage (n = 7). It is worthwhile noting that in medicine, the terms ‘dose’ and ‘dosage’ refer to different things: dose refers to the amount of medication (usually measured by weight) given at a single time, while dosage refers to amount of medication per unit of time (i.e. the rate at which dose is administered) and implies a medication regimen rather than a single administration. Within our sample, these terms were used somewhat interchangeably and did not align with the definitions used in medicine. In particular, the term ‘dose’ was used in a variety of ways, sometimes to refer to the amount of intervention at a single time point, but often also to refer to the delivery or receipt of the intervention over time. For example, Ayala and colleagues [22] measured ‘intervention dose’ as ‘the number of classes each community participant attended between baseline and 6 months and between 6 and 12 months’ (p. 2264). The term ‘dosage’ was generally used to refer to the proportion, duration or frequency of intervention components over the intervention period. For example, Fagan and colleagues [23] defined programme dosage as ‘the extent to which programs achieved the required number, length, and frequency of sessions’ (p. 244).
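The medical distinction between a single dose and a dosage (a rate over time) can be carried over to an intervention as a rough sketch. The session lengths, the 12-week period and the function name below are hypothetical and are not drawn from any study in the sample.

```python
def dosage_per_week(session_minutes, period_weeks):
    """Average amount of intervention per week over the period (a rate)."""
    if period_weeks <= 0:
        raise ValueError("period_weeks must be positive")
    return sum(session_minutes) / period_weeks

# Invented attendance record: minutes received at each session attended.
session_minutes = [45, 45, 60, 30]

single_dose = session_minutes[0]  # amount at a single time point
weekly_dosage = dosage_per_week(session_minutes, period_weeks=12)

print(single_dose, weekly_dosage)
```

Under this reading, the total or per-session amount corresponds to ‘dose’, while ‘dosage’ expresses how that amount is spread over the intervention period.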

Dose delivered and dose received were well differentiated

The terms dose delivered (n = 33) and dose received (n = 28) were commonly used, frequently appearing together as differentiated concepts within the same papers (n = 27). For example, Curran and colleagues [24] defined dose delivered as ‘the amount of intended units of each intervention component provided to target audience’ and dose received as ‘the extent of engagement of participants’ (p. 721).

Dose-response was captured in about half of the studies

Sixty-four studies (49%) went beyond simply defining and measuring dose and linked their measure of dose to the outcomes of the study. For example, in their evaluation of phone-based weight loss programmes, Sherwood and colleagues [25] defined dose as the number of telephone counselling sessions provided and reported that more counselling calls were associated with greater weight loss and a higher frequency of engaging in weight-related self-monitoring behaviours. It was most common to use the term ‘dose-response’ although three studies used the term ‘dose-effect’ to mean the same thing.

Some studies developed new terms to capture particular aspects of dose

Occasionally, new terms were introduced to identify particular aspects of dose. For example, two studies [26, 61] used the term ‘population dose’ to refer to the population-level effect of an intervention, calculated as intervention reach multiplied by effect size for each person. This measure of dose attempts to capture the impact of interventions delivered at the population level, where traditional measures of dose may fall short.

Other terms used to refer to dose included ‘minimum dose sample’ to refer to a sample of participants who participated to a minimum extent [27] and ‘dose intensity’, which was defined as the relative difference in intensity of dose across similar versions of the same intervention [28]. In this case, a ‘higher dose intensity’ in a church-based lifestyle intervention involved adding, for example, follow-up phone calls, increasing the number of sessions and providing on-site equipment.

Wasik and colleagues [12] argued that dosage must be considered on two levels: (1) the intervention staff learning new skills and (2) the target group (intended beneficiaries). They suggest that one component of what they call implementation dosage ‘refers to implementation activities necessary for intervention to be carried out with fidelity’. They further suggest that another necessary consideration is what they call intervention dosage which is the amount of intervention provided that is necessary to change the target group’s behaviour. These factors, they argue, are critical for cost, staffing, replication and scale-up considerations. However, the new term is an umbrella for several variables: a conventional view of dose (amount of an intervention), its delivery over time, its potency (capacity to bring about an effect) and interaction with context. The term implies a critical threshold level of dosage. Critical levels of the other factors need to be examined as well.

Variation in how dose was operationalised

While most studies provided a definition of dose and/or detail on how it was operationalised, there was some variation in how this was presented. Table 3 presents an overview of how dose has been operationalised across studies. The main way in which dose was operationalised was in terms of the amount of intervention delivered and/or received, with these aspects being relatively well delineated. However, in some instances, there was an apparent conflation of dose with other concepts such as fidelity to planned intervention and participant satisfaction. These aspects are unpacked in more detail below.

Dose as intervention delivered

Consistent with the frameworks outlined in Table 1, a large proportion of studies operationalised dose in terms of the amount of intervention delivered, considered as a function of the efforts of intervention providers. Most frequently, this was captured using measures such as the number of intervention components delivered (e.g. number of lessons, posters and stickers delivered; [29]), frequency or duration of intervention components (e.g. lesson length; [30]) and time spent on intervention activities [31]. For example, Baquero and colleagues [32] measured dose delivered as the number and length of home visits completed, Hall and colleagues [33] as the duration of education classes delivered, and Rosecrans and colleagues [8] as the number of intervention materials (food samples, flyers and recipes) distributed.

A handful of studies operationalised dose as the intensity of intervention delivery by creating discrete categories. For example, Koniak-Griffin and colleagues [34] considered the effect of ‘treatment dosage (intensity)’ such that ‘participants were classified into two categories (low/medium and high intensity levels)’ (p. 80) based on class attendance and teaching and coaching contacts received, while Rubinstein and colleagues [35] examined ‘potential changes in outcomes with increasing intensity (dose) of the intervention’ (p. 56).

Some studies also considered completeness of intervention delivery, where the number of intervention components delivered was considered as a proportion of those planned (e.g. [23, 31, 36]). One school-based study called this ‘fidelity to classroom dose’ and while time spent on each activity was also measured, it was referred to as ‘duration’, not dose [37]. Given that fidelity of an intervention might manifest in a number of ways (e.g. teaching quality, information accuracy), this unconventional merging of fidelity and dose into a single term could mislead readers who do not take care to read the details of the authors’ methods. But it was not uncommon; a number of studies used composite measures of dose that incorporated a range of elements including aspects of quality of communication. For example, in their evaluation of a school-based tobacco intervention, Goenka and colleagues [38] refer to ‘dose given (completeness)’, which they define as ‘the quantity and rigour of implementation of the intended intervention units that are actually delivered to the participants’ (p. 925, italics added). This is calculated as a composite score across a range of variables, including percentage of classroom sessions and intra-session elements delivered, proportion of posters displayed, proportion of postcards delivered, participation in an inter-school event, proportion of teachers and student peer leaders trained to deliver the intervention and proportion of sessions where teachers and peer leaders communicated well [38]. As such, their conceptualisation of ‘dose given’ contains a number of different elements of dose related not only to quantity of dose delivered, but also quality of delivery and implementation dose (i.e. training to deliver the intervention). These authors then investigated which aspects were most strongly associated with programme effects. Quality of delivery and implementation dose would typically be considered to be distinct from dose delivered, so referring to these together within the composite term ‘dose given’ could potentially mislead the reader.
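To make the idea of completeness and composite scoring concrete, here is a minimal sketch. The component names, the counts and the equal weighting are assumptions made for illustration; this is not the scoring procedure used by Goenka and colleagues or by any other study in the sample.

```python
def completeness(delivered, planned):
    """Proportion of planned units actually delivered, capped at 1.0."""
    if planned <= 0:
        raise ValueError("planned must be positive")
    return min(delivered / planned, 1.0)

def composite_dose(components):
    """Unweighted mean of component proportions (equal weights assumed)."""
    return sum(components.values()) / len(components)

# Invented components mixing quantity delivered with implementation dose.
components = {
    "classroom_sessions": completeness(delivered=6, planned=8),
    "posters_displayed": completeness(delivered=4, planned=4),
    "teachers_trained": completeness(delivered=5, planned=10),
}

score = composite_dose(components)
print(components, score)
```

A single composite value cannot show which element drives it: many different combinations of sessions, posters and training would produce the same score, which is one reason to report the components separately as well.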

Dose as intervention received

Another common way in which dose was operationalised was in terms of the amount of intervention received by participants, which was generally captured in one of two ways, implying either an active or passive role for participants. Measures of dose that implied a more passive role for participants included the number of intervention components received by participants (such as telephone calls, leaflets, home visits; e.g. [39,40,41]). A number of studies also used the concepts of exposure and reach. For example, Lee-Kwan and colleagues [42] conducted a modified Intervention Exposure Assessment survey to assess whether people had seen the intervention materials (within a retail setting). In the context of media campaigns, both Farrelly and colleagues [43] and Huhman and colleagues [44] referred to dose in terms of reach or exposure to the campaign, to examine the dose-response. Similarly, Birnbaum and colleagues [45] indicated that participants could be identified as ‘belonging to one of four exposure groups (or “doses”): (1) control group: lowest exposure, (2) school environment interventions only, (3) classroom curriculum plus school environment interventions, and (4) peer leaders plus classroom curriculum plus school environment interventions: highest exposure’ (p. 428). Here, dose was defined according to the logic of the intervention designers, with some categories being reasoned to be lower or higher than another in terms of what appears to be the intervention’s penetration into different ecological levels (i.e., organisational level versus group level).

Concerning measures of dose that implied a more active role for participants, Steckler and Linnan [11] define dose received as the extent to which participants engage or interact with, are receptive to or use an intervention, such that dose received is a function of the actions of intervention participants. Examples from studies in our sample included those measuring attendance and participation rates [46, 47], time spent engaged in intervention activities [48], use of intervention components [49] and completion of intervention activities [8, 50,51,52]. A number of studies also considered dose received in terms of the extent to which participants actively engaged with intervention components [38, 47, 49]. One study also measured the amount of intervention received in terms of the number of activities remembered by participants at the end of the intervention [31]. The last example could be considered problematic if used on its own as, cognitively speaking, remembering an activity is a step beyond being exposed to it and programme effects may or may not be captured through conscious recall processes. However, the school-based health education programme being assessed in that study also used observer rating of lessons delivered, and teacher reports of components delivered to assess differences in participant-perceived dose and dose as measured by observers and ‘deliverers’ [31].

As with dose as intervention delivered, some studies conflated dose received with other implementation concepts. For example, Berendsen and colleagues [53] studied a lifestyle intervention in primary care settings and ‘dose received was defined as participant satisfaction and perception of the program that was delivered to them’ (p. 4). While the latter idea (perception that the programme was delivered) would commonly be used as a way of tapping into dose received, the former (satisfaction) might be thought of as something separate. This illustrated a tendency in the literature to characterise dose in terms of the extent to which the intervention met particular criteria. Another study [54] clearly makes a conceptual distinction between dose received and satisfaction throughout the paper, but measuring and listing them together, i.e. as ‘dose received (exposure and satisfaction)’ (p. 76), could mislead readers into thinking they were treated as one and the same.

One investigator team reported on participants’ ‘evaluation of dose received’ [55] and defined this as the participants’ views about intervention feasibility. This is not conflating the definition of feasibility with dose. Evaluation involves two processes: (1) observation/measurement and (2) judgement [56]. By declaring that they are ‘evaluating’, both processes are assumed and indeed in this study, both processes were performed. However, the use of the words ‘evaluating’ dose as opposed to ‘measuring’ dose could mislead readers not familiar with these distinctions from the field of evaluation into thinking there is a definitional slip about dose.

A small proportion of studies referred to ‘dose’ but did not operationalise it in their methods

While most studies provided some detail on how dose was measured, 15 (11.5%) studies referred to dose without any further detail. Of these, most used the term dose when interpreting their results but did not refer to the measurement or operationalisation of dose within their methods or findings. For example, Kelishadi and colleagues [57] state that ‘the overall lower increase of junk food consumption … showed that the dose or intensity of our community-based and school-based interventions, although not sufficient, was necessary to act against other forces in the community’ [57] but did not refer to the measurement of dose elsewhere within the main body of the paper. The effect was that it was not clear what was actually considered the dose of the intervention (i.e. what components and how much of these was considered to have brought about the observed effect).

Discussion

We have presented the results of a scoping review exploring how dose is defined and operationalised in the implementation and evaluation of health promotion interventions. The findings suggest that there are some commonalities in how dose was defined and operationalised across studies, with studies most commonly focusing on measurements of (1) the amount of intervention delivered, where dose is conceptualised as a function of intervention providers and the focus is on the supply side of the intervention and (2) the amount of intervention received, where the focus is on how much of the intervention the recipients actually get, sometimes conceptualised as a function of the actions of recipients (e.g. whether they attend sessions or collect intervention materials). Most of the studies within this review measured dose in some form and in that sense, we note that they complied with the Template for Intervention Description and Replication (TIDieR) guidelines for intervention reporting (Item 8: ‘when and how much’) [58].

However, in many instances, it would not have been easy for study authors to fit health promotion interventions into the conceptual framing of dose delivered and dose received. While some aspects of health promotion interventions allow for such a separation, for example, dose delivered as the number of intervention materials (e.g. leaflets, posters) displayed within an intervention site or dose received as the number of intervention materials taken away by participants, the distinction becomes somewhat arbitrary for components that concern participant interaction and engagement (such as team competitions) [52]. Similarly, the separation of components like phone calls and home visits (i.e. events for which delivery and receipt are inseparable) into categories of ‘delivered and received’ could seem somewhat artificial. However, despite these difficulties, the distinction between dose delivered and dose received is important. In a notable example, Wilson and colleagues demonstrated that their intervention achieved moderate to high levels of dose delivered and only moderate levels of dose received and that the former was better than the latter in terms of achieving health outcomes. This was sufficient for them to recommend to others replicating their intervention to spend more time making sure components of interventions are delivered and to worry less about people completing all the activities as directed [52].

All studies were limited by what was measurable and hence investigators may have had to devise a proxy for what might have been their preferred way to measure dose. Within environmental interventions dose measurement is often based on recall or self-report of what people saw (e.g. [42]) although this is not, strictly speaking, the same as being exposed. Identifying what should be measured in order to capture dose is also likely to be problematic when considering population-level policy interventions, such as increasing the amount of urban green space in order to improve health [59]. For example, should ‘dose’ be counted as the number of new trees planted, amount of coverage of green space relative to size of neighbourhood or something else? Traditional conceptualisations of dose as intervention components delivered and received are not easily applied to such interventions. Such considerations have important implications for how interventions are planned, resourced and delivered, highlighting the need for critical thought about how we capture the ‘dose’ of population level interventions that go beyond ‘traditional’ health promotion interventions. While our review highlighted some novel attempts to capture dose, we may need to look more to disciplines like geography and political science in order to further the notion of dose as applied to population-level policy interventions.

Unfortunately, our capacity to review innovative approaches to how policy and environmental interventions in health promotion are addressing ‘dose’ may have been limited by our choice of ‘dose’ and ‘dosage’ as our primary search terms. For example, if a study assessed the impact of policy exposure without using the word dose, our search would not have captured this. This was the chief limitation of our study design and is a clear priority area for further investigation. Progress in the scanning and benchmarking of international obesity policy could provide an example in this direction [60].

A few investigators expanded on the traditional concept of dose, seeking to combine it with other elements to tell a story that is more than just amount received or delivered. As discussed previously, Wasik and colleagues [12] developed the term implementation dosage to refer to activities needed to achieve fidelity and intervention dosage (defined as the amount of intervention needed to change the target group’s behaviour). In a similar vein, Cheadle and colleagues [13, 26] offer the concept of population dose, defined as ‘the estimated community-level change in the desired outcome expected to result from a given community-change strategy, operationalized as the product of penetration (reach divided by target population) and effect size’ [13]. This approach has been applied to measurement of the impact of community, organisation and school level policy and environmental changes to improve physical activity and nutrition [26, 61]. Cheadle reports that he and his team first ‘tested’ the lay understanding of dose with health department practitioners, community groups, funders and the federal government and decided to build on it because it conveys the notion of ‘active ingredient’ as something that makes something happen [15]. The ideas of both Wasik et al. and Cheadle et al. draw attention to the dynamics of the change process and the role of dose in the change process. The idea of ‘intervention dose’ (although Wasik et al. use ‘dosage’) has caught on, with researchers now applying it to fields like (medical) quality improvement where they define intervention dose as quantity, exposure, intensity, scope, reach, engagement, duration and quality.
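To make the arithmetic behind the population dose definition quoted above concrete, the following is a minimal sketch, not Cheadle and colleagues' own tooling. The function name, argument names and the example figures are hypothetical and chosen purely for illustration; only the formula itself (penetration, i.e. reach divided by target population, multiplied by effect size) comes from the definition in [13].

```python
def population_dose(reach: float, target_population: float, effect_size: float) -> float:
    """Return population dose as penetration multiplied by effect size.

    Penetration is reach divided by target population, following the
    definition quoted from Cheadle and colleagues [13]. All names here
    are illustrative assumptions, not part of any published tool.
    """
    if target_population <= 0:
        raise ValueError("target_population must be positive")
    if not 0 <= reach <= target_population:
        raise ValueError("reach must lie between 0 and target_population")
    penetration = reach / target_population
    return penetration * effect_size


# Hypothetical example: a strategy reaches 500 of 2000 residents and is
# assumed to produce a 10% relative change among those reached.
dose = population_dose(reach=500, target_population=2000, effect_size=0.10)
print(f"Estimated population dose: {dose:.3f}")
```

Under these assumed figures, a strategy with a modest effect size and a penetration of one quarter yields a small community-level change, which illustrates why the measure is sensitive to reach as well as to the strength of the strategy.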

In a sense, therefore, ‘dose’ has become the gateway for the appreciation of factors that others would consider to be part of process evaluation, such as fidelity or rigour [11]. Whether this is a problem depends on whether these conceptualisations of dose lead to an over-simplification of ‘what’ has to be transported from place to place to achieve effects, that is, whether the measurement of dose overemphasises the intervention at the expense of understanding contextual dynamics. Cotterill and colleagues have recently suggested changes to the TIDieR guidelines in acknowledgement that all aspects of an intervention can change over time and researchers need to be alert to differences arising through adaptation [62]. A population health and policy version of the checklist has since been launched which, in addition to tracking planned and unplanned variation, calls for the date and duration of intervention to be reported [63]. Researchers have also been urged to fully appreciate the complex, non-monotonic ways in which dose response is brought about [64]. This implies a need to not ‘over assume’ the criticality of dose, vis-à-vis other options, such as the critical threshold aspects within the context (e.g. demographics, interactivity, existing resources).

Another implication is that researchers should measure dose in multiple ways and fully interrogate the meaning of the results. An exemplar in this regard is the work by Goenka and colleagues [38] in evaluating a school-based tobacco prevention programme in India. They found that the proportion of teachers trained to deliver the intervention was a better predictor of the results than time spent on the intervention. The findings point to the possibility of a multi-strand pathway of change in school settings where, in this instance, the proportion of teachers trained acts as an alternative or additional causal pathway to health outcomes [16]. This idea invites the use of complicated and/or complex logic models in intervention evaluation where the ‘dose’ of multiple factors, and the way each interacts with the others, become critical considerations [65]. Interactions among causal factors might explain non-linear dose-response relationships where the same ‘amount’ delivered at different times in an intervention’s history or in different contexts has different proportional effects on outcome, because of the cumulative effects of reinforcing feedback loops and/or the existence of threshold effects or ‘tipping points’ [65].
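The notion of a threshold or ‘tipping point’ can be illustrated with a small numerical sketch. The logistic curve below is an assumption made purely for illustration; it is not a model proposed in any of the reviewed studies, and the parameter names and values (threshold, steepness, max_effect) are hypothetical. It shows only that the same increment of dose can have very different effects depending on how much has already been delivered.

```python
import math


def threshold_response(dose: float, threshold: float = 5.0,
                       steepness: float = 2.0, max_effect: float = 1.0) -> float:
    """Illustrative logistic dose-response curve with a tipping point at `threshold`."""
    return max_effect / (1.0 + math.exp(-steepness * (dose - threshold)))


def marginal_effect(dose_before: float, increment: float = 1.0) -> float:
    """Change in response when `increment` units of dose are added to `dose_before`."""
    return threshold_response(dose_before + increment) - threshold_response(dose_before)


# The same one-unit increment, delivered early versus close to the threshold.
early = marginal_effect(0.0)
near_threshold = marginal_effect(4.5)
print(f"Effect of one extra unit at dose 0.0: {early:.4f}")
print(f"Effect of one extra unit at dose 4.5: {near_threshold:.4f}")
```

Under this assumed curve, an extra unit of dose delivered well below the threshold produces almost no change, whereas the same unit delivered near the threshold produces a large one. A linear dose-response analysis would miss this pattern.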

Conclusion

It seems vital that researchers in health promotion continue to measure dose in multiple ways, and further explore how to define and measure the dose of population-level interventions and policies. We suggest researchers also reverse their tendency to use ‘dose’ and ‘dosage’ interchangeably. Dosage’s focus on rate of delivery over time requires researchers to monitor response to ‘dose’ and adjust accordingly. It embraces the complexity of intervention design and evaluation that is now being more widely acknowledged [66, 16].

Abbreviations

- TIDieR: Template for Intervention Description and Replication

References

Milat AJ, King L, Bauman A, Redman S. Scaling up health promotion interventions: an emerging concept in implementation science. Health Promot J Aust. 2011;22:238.

Moore G, Audrey S, Barker M, Bond L, Bonell C, Cooper C, et al. Process evaluation in complex public health intervention studies: the need for guidance. J Epidemiol Community Health. 2014;68:101.

Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: are implementation effects out of control? Clin Psychol Rev. 1998;18:23–45.

Dusenbury L, Brannigan R, Falco M, Hansen WB. A review of research on fidelity of implementation: implications for drug abuse prevention in school settings. Health Educ Res. 2003;18:237–56.

Baranowski T, Stables G. Process evaluations of the 5-a-day projects. Health Educ Behav. 2000;27:157–66.

Saunders RP, Evans MH, Joshi P. Developing a process-evaluation plan for assessing health promotion program implementation: a how-to guide. Health Promot Pract. 2005;6:134–47.

Legrand K, Bonsergent E, Latarche C, Empereur F, Collin JF, Lecomte E, et al. Intervention dose estimation in health promotion programmes: a framework and a tool. Application to the diet and physical activity promotion PRALIMAP trial. BMC Med Res Methodol. 2012;12:146.

Rosecrans AM, Gittelsohn J, Ho LS, Harris SB, Naqshbandi M, Sharma S. Process evaluation of a multi-institutional community-based program for diabetes prevention among first nations. Health Educ Res. 2008;23:272–86.

Scheirer MA, Shediac MC, Cassady CE. Measuring the implementation of health promotion programs: the case of the breast and cervical cancer program in Maryland. Health Educ Res. 1995;10:11–25.

National Cancer Institute. NCI dictionary of Cancer terms. 2018. https://www.cancer.gov/publications/dictionaries/cancer-terms/def/dose. Accessed 8 May 2019.

Steckler AB, Linnan L. Process evaluation for public health interventions and research. Jossey-Bass San Francisco, CA; 2002.

Wasik BA, Mattera SK, Lloyd CM, Boller K. Intervention dosage in early childhood care and education: it’s complicated. Washington, DC: Office of Planning, Research and Evaluation, Administration for Children and Families, U.S. Department of Health and Human Services; 2013.

Cheadle A, Schwartz P, Rauzon S, Bourcier E, Senter S, Spring R. Using the concept of "population dose" in planning and evaluating community-level obesity prevention initiatives. Am J Eval. 2013;34:71–84.

Collins LM, Murphy SA, Bierman KL. A conceptual framework for adaptive preventive interventions. Prev Sci. 2004;5:185–96.

Bellg AJ, Borrelli B, Resnick B, Hecht J, Minicucci DS, Ory M, et al. Enhancing treatment fidelity in health behavior change studies: best practices and recommendations from the NIH behavior change consortium. Health Psychol. 2004;23:443.

Hawe P. Lessons from complex interventions to improve health. Annu Rev Public Health. 2015;36:307–23.

Hawe P, Shiell A, Riley T. Theorising interventions as events in systems. Am J Community Psychol. 2009;43:267–76.

Mays N, Roberts E, Popay J. Synthesising research evidence In: Fulop N, Allen P, Clarke A, Black N, editors. Studying the organisation and delivery of health services: research methods; 2001. p. 188–220.

Arksey H, O'Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8:19–32.

Levac D, Colquhoun H, O'Brien KK. Scoping studies: advancing the methodology. Implement Sci. 2010;5:69.

QSR International Pty Ltd. NVivo Pro software. Version 11; 2015.

Ayala GX. Effects of a promotor-based intervention to promote physical activity: familias sanas y activas. Am J Public Health. 2011;101:2261–8.

Fagan AA, Hanson K, Hawkins JD, Arthur MW. Bridging science to practice: achieving prevention program implementation fidelity in the community youth development study. Am J Community Psychol. 2008;41:235–49.

Curran S, Gittelsohn J, Anliker J, Ethelbah B, Blake K, Sharma S, et al. Process evaluation of a store-based environmental obesity intervention on two American Indian reservations. Health Educ Res. 2005;20:719–29.

Sherwood NE, Jeffery RW, Welsh EM, VanWormer J, Hotop AM. The drop it at last study: six-month results of a phone-based weight loss trial. Am J Health Promot. 2010;24:378–83.

Cheadle A, Rauzon S, Spring R, Schwartz PM, Gee S, Gonzalez E, et al. Kaiser Permanente’s community health initiative in northern California: evaluation findings and lessons learned. Am J Health Promot. 2012;27:e59–68.

Hwang KO, Ottenbacher AJ, Graham AL, Thomas EJ, Street RL Jr, Vernon SW. Online narratives and peer support for colorectal cancer screening: a pilot randomized trial. Am J Prev Med. 2013;45:98–107.

Thomson JL, Goodman MH, Tussing-Humphreys L. Diet quality and physical activity outcome improvements resulting from a church-based diet and supervised physical activity intervention for rural, southern, African American adults: Delta body and soul III. Health Promot Pract. 2015;16:677–88.

Chittleborough CR, Nicholson AL, Young E, Bell S, Campbell R. Implementation of an educational intervention to improve hand washing in primary schools: process evaluation within a randomised controlled trial. BMC Public Health. 2013;13:757.

Nicklas TA, Nguyen T, Butte NF, Liu Y. The children in action pilot study. Int J Child Health Nutr. 2013;2:296–308.

Buckley L, Sheehan M. A process evaluation of an injury prevention school-based programme for adolescents. Health Educ Res. 2009;24:507–19.

Baquero B, Ayala GX, Arredondo EM, Campbell NR, Slymen DJ, Gallo L, et al. Secretos de la Buena Vida: processes of dietary change via a tailored nutrition communication intervention for Latinas. Health Educ Res. 2009;24:855–66.

Hall WJ, Zeveloff A, Steckler A, Schneider M, Thompson D, Trang P, et al. Process evaluation results from the HEALTHY physical education intervention. Health Educ Res. 2012;27:307–18.

Koniak-Griffin D, Brecht M-L, Takayanagi S, Villegas J, Melendrez M, Balcazar H. A community health worker-led lifestyle behavior intervention for Latina (Hispanic) women: feasibility and outcomes of a randomized controlled trial. Int J Nurs Stud. 2015;52:75–87.

Rubinstein A, Miranda JJ, Beratarrechea A, Diez-Canseco F, Kanter R, Gutierrez L, et al. Effectiveness of an mHealth intervention to improve the cardiometabolic profile of people with prehypertension in low-resource urban settings in Latin America: a randomised controlled trial. Lancet Diabetes Endocrinol. 2016;4:52–63.

Ross MW, Essien EJ, Ekong E, James TM, Amos C, Ogungbade GO, et al. The impact of a situationally focused individual human immunodeficiency virus/sexually transmitted disease risk-reduction intervention on risk behavior in a 1-year cohort of Nigerian military personnel. Mil Med. 2006;171:970–5.

Day ME, Strange KS, McKay HA, Naylor P-J. Action schools! BC--healthy eating: effects of a whole-school model to modifying eating behaviours of elementary school children. Can J Public Health. 2008;99:328–31.

Goenka S, Tewari A, Arora M, Stigler MH, Perry CL, Arnold JPS, et al. Process evaluation of a tobacco prevention program in Indian schools--methods, results and lessons learnt. Health Educ Res. 2010;25:917–35.

Caldera D, Burrell L, Rodriguez K, Crowne SS, Rohde C, Duggan A. Impact of a statewide home visiting program on parenting and on child health and development. Child Abuse Negl. 2007;31:829–52.

Goode AD, Winkler EAH, Lawler SP, Reeves MM, Owen N, Eakin EG. A telephone-delivered physical activity and dietary intervention for type 2 diabetes and hypertension: does intervention dose influence outcomes? Am J Health Promot. 2011;25:257–63.

Lane A, Murphy N, Bauman A. An effort to ‘leverage’ the effect of participation in a mass event on physical activity. Health Promot Int. 2015;30:542–51.

Lee-Kwan SH, Goedkoop S, Yong R, Batorsky B, Hoffman V, Jeffries J, et al. Development and implementation of the Baltimore healthy carry-outs feasibility trial: process evaluation results. BMC Public Health. 2013;13.

Farrelly MC, Davis KC, Haviland ML, Messeri P, Healton CG. Evidence of a dose-response relationship between “truth” antismoking ads and youth smoking prevalence. Am J Public Health. 2005;95:425–31.

Huhman ME, Potter LD, Duke JC, Judkins DR, Heitzler CD, Wong FL. Evaluation of a national physical activity intervention for children: VERB campaign, 2002-2004. Am J Prev Med. 2007;32:38–43.

Birnbaum AS, Lytle LA, Story M, Perry CL, Murray DM. Are differences in exposure to a multicomponent school-based intervention associated with varying dietary outcomes in adolescents? Health Educ Behav. 2002;29:427–43.

Oude Hengel KM, Blatter BM, van der Molen HF, Joling CI, Proper KI, Bongers PM, et al. Meeting the challenges of implementing an intervention to promote work ability and health-related quality of life at construction worksites: a process evaluation. J Occup Environ Med. 2011;53:1483–91.

Robbins LB, Pfeiffer KA, Wesolek SM, Lo Y-J. Process evaluation for a school-based physical activity intervention for 6th- and 7th-grade boys: reach, dose, and fidelity. Eval Program Plann. 2014;42:21–31.

Sharma SV, Shegog R, Chow J, Finley C, Pomeroy M, Smith C, et al. Effects of the quest to Lava Mountain computer game on dietary and physical activity behaviors of elementary school children: a pilot group-randomized controlled trial. J Acad Nutr Diet. 2015;115:1260–71.

Coffeng JK, Hendriksen IJM, van Mechelen W, Boot CRL. Process evaluation of a worksite social and physical environmental intervention. J Occup Environ Med. 2013;55:1409–20.

Bolier L, Haverman M, Kramer J, Westerhof GJ, Riper H, Walburg JA, et al. An internet-based intervention to promote mental fitness for mildly depressed adults: randomized controlled trial. J Med Internet Res. 2013;15:209–26.

Buller DB, Borland R, Woodall WG, Hall JR, Hines JM, Burris-Woodall P, et al. Randomized trials on consider this, a tailored, internet-delivered smoking prevention program for adolescents. Health Educ Behav. 2008;35:260–81.

Wilson MG, Basta TB, Bynum BH, DeJoy DM, Vandenberg RJ, Dishman RK. Do intervention fidelity and dose influence outcomes? Results from the move to improve worksite physical activity program. Health Educ Res. 2010;25:294–305.

Berendsen BAJ, Kremers SPJ, Savelberg HHCM, Schaper NC, Hendriks MRC. The implementation and sustainability of a combined lifestyle intervention in primary care: mixed method process evaluation. BMC Fam Pract. 2015;16:37.

Androutsos O, Apostolidou E, Iotova V, Socha P, Birnbaum J, Moreno L, et al. Process evaluation design and tools used in a kindergarten-based, family-involved intervention to prevent obesity in early childhood. The ToyBox-study. Obes Rev. 2014;15:74–80.

Branscum P, Sharma M, Wang LL, Wilson B, Rojas-Guyler L. A process evaluation of a social cognitive theory-based childhood obesity prevention intervention: the comics for health program. Health Promot Pract. 2013;14:189–98.

Deniston OL. Whether evaluation — whether utilization. Eval Program Plann. 1980;3:91–4.

Kelishadi R, Sarrafzadegan N, Sadri GH, Pashmi R, Mohammadifard N, Tavasoli AA, et al. Short-term results of a community-based program on promoting healthy lifestyle for prevention and control of chronic diseases in a developing country setting: Isfahan healthy heart program. Asia Pac J Public Health. 2011;23:518–33.

Hoffmann T, Glasziou P, Boutron I, Milne R, Perera R. Better reporting of interventions: template for intervention description and replication checklist guide (TIDieR). BMJ. 2014.

Jorgensen A, Gobster PH. Shades of green: measuring the ecology of urban green space in the context of human health and well-being. Nat Cult. 2010;5:338–63.

Sacks G, The food-EPI Australia project team. Policies for tackling obesity and creating healthier food environments: scorecard and priority recommendations for Australian governments. Melbourne: Deakin University; 2017.

Heelan KA, Bartee RT, Nihiser A, Sherry B. Healthier school environment leads to decreases in childhood obesity: the Kearney Nebraska story. Child Obes. 2015;11:600–7.

Cotterill S, Knowles S, Martindale A-M, Elvey R, Howard S, Coupe N, et al. Getting messier with TIDieR: embracing context and complexity in intervention reporting. BMC Med Res Methodol. 2018;18.

Campbell M, Katikireddi SV, Hoffmann T, Armstrong R, Waters E, Craig P. TIDieR-PHP: a reporting guideline for population health and policy interventions. BMJ. 2018;361:k1079.

Fedak KM, Bernal A, Capshaw ZA, Gross S. Applying the Bradford Hill criteria in the 21st century: how data integration has changed causal inference in molecular epidemiology. Emerg Themes Epidemiol. 2015;12:14.

Rogers PJ. Using programme theory to evaluate complicated and complex aspects of interventions. Evaluation. 2008;14:29–48.

Grudniewicz A, Tenbensel T, Evans JM, Gray CS, Baker GR, Wodchis WP. ‘Complexity-compatible’ policy for integrated care? Lessons from the implementation of Ontario's health links. Soc Sci Med. 2018;198:95–102.

Acknowledgements

We would like to thank Dr. Andrew Milat and Professor John Wiggers for providing feedback on the initial study protocol and search strategy, and delegates at the Emerging Health Policy Research Conference at the Menzies Centre for Health Policy, University of Sydney, in July 2016 for providing feedback on early insights from this work.

Dedication

We dedicate this work to our colleague, mentor, and above all, our friend, Associate Professor Sonia Wutzke (1970–2017). The public health community is richer for having had you as one of its most passionate advocates and our lives are richer for having known you.

Funding

The work was funded by the National Health and Medical Research Council of Australia (NHMRC) through its Partnership Centre grant scheme [grant number GNT9100001]. NSW Health, ACT Health, the Australian Government Department of Health, the Hospitals Contribution Fund of Australia and the HCF Research Foundation have contributed funds to support this work as part of the NHMRC Partnership Centre grant scheme. The contents of this paper are solely the responsibility of the individual authors and do not reflect the views of the NHMRC or funding partners.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Author information

Contributions

SR developed and executed the search strategy and review process, led the data extraction and synthesis process and drafted the manuscript. KC performed data extraction and contributed to the refinement of the search strategy, data analysis and drafting of the manuscript. PH conceived the original idea for the paper and contributed to the development of the search strategy, data analysis, and drafting of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional information

Submitted to: Implementation Science

Additional files

Additional file 1:

Data extraction template with example data extraction. (DOCX 15 kb)

Additional file 2:

Article characteristics. (DOCX 183 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Rowbotham, S., Conte, K. & Hawe, P. Variation in the operationalisation of dose in implementation of health promotion interventions: insights and recommendations from a scoping review. Implementation Sci 14, 56 (2019). https://doi.org/10.1186/s13012-019-0899-x

DOI: https://doi.org/10.1186/s13012-019-0899-x