Abstract

Background

Evidence-based practice (EBP) is a complex process. To quantify it, one has to also consider individual and contextual factors using multiple measures. Modern measurement approaches are available to optimize the measurement of complex constructs. This study aimed to develop a robust measurement approach for constructs around EBP including practice, individual (e.g. knowledge, attitudes, confidence, behaviours), and contextual factors (e.g. resources).

Methods

One hundred eighty-one items arising from 5 validated EBP measures were subjected to an item analysis. Nominal group technique was used to arrive at a consensus about the content relevance of each item. Baseline questionnaire responses from a longitudinal study of the evolution of EBP in 128 new graduates of Canadian physical and occupational therapy programmes were analysed. Principles of Rasch Measurement Theory were applied to identify challenges with threshold ordering, item and person fit to the Rasch model, unidimensionality, local independence, and differential item functioning (DIF).

Results

The nominal group technique identified 70/181 items, and modified Delphi approach identified 68 items that fit a formative model (2 related EBP domains: self-use of EBP (9 items) and EBP activities (7 items)) or a reflective model (4 related EBP domains: attitudes towards EBP (17 items), self-efficacy (9 items), knowledge (11 items) and resources (15 items)). Rasch analysis provided a single score for reflective construct. Among attitudes items, 65% (11/17) fit the Rasch model, item difficulties ranged from − 7.51 to logits (least difficult) to + 5.04 logits (most difficult), and person separation index (PSI) = 0.63. Among self-efficacy items, 89% (8/9) fit the Rasch model, item difficulties ranged from − 3.70 to + 4.91, and PSI = 0.80. Among knowledge items, 82% (9/11) fit the Rasch model, item difficulties ranged from − 7.85 to 4.50, and PSI = 0.81. Among resources items, 87% (13/15) fit the Rasch model, item difficulties ranged from − 3.38 to 2.86, and PSI = 0.86. DIF occurred in 2 constructs: attitudes (1 by profession and 2 by language) and knowledge (1 by language and 2 by profession) arising from poor wording in the original version leading to poor translation.

Conclusions

Rasch Measurement Theory was applied to develop a valid and reliable measure of EBP. Further modifications to the items can be done for subsequent waves of the survey.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Background

Health care professionals are expected to integrate best available research evidence, patients’ preferences, and their clinical expertise to support clinical decision-making, a process known as evidence-based practice (EBP) [1]. Production of high-quality research in fields related to rehabilitation over the past 15 years [2] has provided evidence for occupational therapists (OTs) and physical therapists (PTs) to guide practice [3,4,5]. As a result of this exponential rise in knowledge, there is an urgent need to mobilize evidence into clinical practice [6]. According to the World Health Organization (WHO), rehabilitation is defined as “a set of interventions designed to optimize functioning and reduce disability in individuals with health conditions in interaction with their environment” [7]. Without implementing effective interventions, rehabilitation will not be successful [8,9,10,11]. As a response to these expectations [12, 13], a priority for all professional OT and PT programmes was to emphasize EBP. Despite the emphasis on teaching and promoting the competencies associated with EBP in professional programmes, measuring EBP remains a daunting challenge.

EBP is a complex area of enquiry necessitating multiple measurement approaches to identify the practice itself and the individual and contextual factors influencing it. Several socio-cognitive theories have been applied in research to identify and tackle these factors [14,15,16]. To determine if EBP is changing professional practices, improving the quality of care, and informing organizations [17], there is a need to administer several multi-item questionnaires covering the relevant domains [18]. The use of validated and reliable measures of EBP outcomes is essential to improve EBP across studies and to inform areas for contextual change [17].

Measurement challenges

There are over 100 tools to measure domains related to EBP [19,20,21,22]. As a result, many items cover the same construct but with different phrasing and response options. There is always a need for parsimony in measurement, as redundancy can be a reason for non-completion and can produce false reliability [23]. The removal of redundant items from the questionnaire can raise concerns for fear of invalidating the interpretation of the total score derived from the original set of items. However, there is a vast literature on the validity of total scores derived from ordinal measures [24]. Typically, summing the numerical labels assigned to each ordinal response option is done to produce a total score. This score does not necessarily have mathematical properties nor does every item contribute equally to the total. Application of Rasch Measurement Theory (RMT) can shed light on how individual items contribute to a theoretically defined construct used to form a measure [25]. The basis of RMT is the Rasch model, named for Georg Rasch the Danish statistician, which is a probabilistic model used to situate a person and an item on a linear continuum from least able (easiest item) to most able (hardest item) [25].

RMT is one of the several measurement theories that have been applied in the context of health care. The best-known theory is Classical Test Theory which assumes that the true score is a function of the total score plus error; the error is assumed to be the same for each person and for each item [26]. Theories that are based on how each item behaves with respect to other items in the theoretical construct and how the items align along an expected linear hierarchy, from easiest to hardest, do not assume that these errors are the same. Therefore, the location and error for each item and each person are estimated. The location of each item along this “ability” continuum is estimated by a logit transformation of participants’ responses to each level of each item. An item response category that 50% of participants endorse, the middle item, has a logit of 0. The optimal linear scale for the items is required to have a mean of 0 and a standard deviation of 1, with locations all along the continuum from − 4 to + 4 logits. This represents the theoretical range of a standard normal distribution with a mean of 0 and a standard deviation of 1. The person’s values along this continuum are also optimal when they follow this standard normal distribution.

RMT has a number of requirements including measuring only one construct (i.e. unidimensionality). The analytical details of how to apply and interpret Rasch analysis to a set of person responses to items are presented in Table 1.

Items that do not fit the Rasch model should not be used in the total score until improved. Only few EBP measures [27,28,29,30,31,32] have used Rasch analysis for developing the scoring system, and none of these items were designed for rehabilitation EBP. Moreover, none of these studies were designed for Canadian professionals where the items have to be both in English and French.

Study context

In the context of a study on the evolution of individual characteristics (including knowledge, attitudes, confidence), contextual factors (including support for work setting), and actual use of EBP in graduates of the professional M.Sc. programme in PT and OT (grant number 148544) [33], 5 existing measures were assembled to tap into these important EBP constructs. This resulted in 181 items which clearly would be a barrier to study recruitment and completion. In addition, many of the items were redundant leading to low efficiency [34] or had multiple concepts in one item leading to high content density [35]. An example of item redundancy is the “feedback process” subcategory of the Alberta Context Tool [36] that includes 5 items that can be reflected in one. These items (I routinely receive information on my team’s performance on data like the examples provided above, our team routinely discuss this data informally, our team has a scheduled formal process for discussing this data, our team routinely formulates action plans based on the data, our team routinely compares our performance with others) can be captured in one item (our team routinely monitors our performance with respect to the action plans). Another example of multiple concepts in one item exists in the subcategory “sympathy” in the Evidence-Based Practice Profile Questionnaire-2 which is Critical appraisal of the literature and its relevance to the client is not very practical in the real world of my profession. This item fits in both the “sympathy” and “relevance” subcategories. These shortfalls in item development can lead to inconsistency in responses and biased estimates of change. From a measurement perspective, there is value in the items from multiple questionnaires as they could be considered to form a pool of items from which new combinations could be constructed with legitimate total scores.

Therefore, the global aim of this study was to apply a robust measurement approach on constructs around EBP including practice, individual characteristics variants (e.g. knowledge, attitudes, confidence, behaviours), and contextual factors (e.g. resources). The specific study objectives were to (i) identify the extent to which items from existing EBP measures reflect constructs suitable for use in a survey of EBP in PTs and OTs and (ii) the extent to which the items reflective of EBP constructs fit the expected unidimensional hierarchy sufficient to create a total score with interval-like properties optimizing the estimation of change or differences across groups.

Methods

Study design

This study was based on three steps: a nominal group process [37], a modified Delphi approach [38], and a cross-sectional electronic survey. An item analysis was conducted on all items arising from the five EBP measures chosen for inclusion in the longitudinal study of the evolution of EBP in new graduates of Canadian PT and OT programmes mentioned above. Data from the newest PT/OT cohort to enter clinical practice were analysed to refine the measurement strategy for future phases.

Population

The target population for the cross-sectional survey was all graduates (n = 1703) of the 28 Canadian OT and PT programmes that completed their professional education during the 2016–2017 academic year. To ensure complete ascertainment of graduates, participants were identified from university academic programmes. For the item analysis, the newest entry cohort was queried within 1-month post-graduation. The new graduates were prioritized for recruitment in order to assess EBP-related constructs at entry to practice and then subsequently over time. At the time of analysis, data from new graduates from 12 of the planned 28 university programmes were available.

Measurement

To identify potential measures for the broader study, all relevant EBP measures reported in the literature were identified. Table 2 lists the 5 measures used in this study from which the 181 items were chosen by the research team as targeting the constructs of self-use of EBP and EBP activities, individual factors (attitudes towards EBP, confidence in applying EBP, knowledge), and contextual factors (specifically resources).

Procedures

The procedures for appraising the 181 items and identifying potential constructs for further analyses are shown in Fig. 1. We used a three-phase process with two groups of experts (potential future respondents (user panel) and methodological (expert panel)) for the item analysis and then translated the results to French. The user panel comprised 12 PTs and OTs all with experience in clinical practice and training in EBP. The participants had a range of experience that covered the scope of practice of PT and OT including recent graduates and experienced clinician-researchers. The expert panel comprised the core research team members (AT, NEM, FAZ, AR).

Phase 1: Nominal group process

A nominal group process [36], involving both the user and expert panels, was used to screen each item by applying three criteria. Contextual relevance was defined based on whether the item was judged (by 80% or more of the user panel) to be relevant to the EBP and research/clinical scenarios facing PTs and/or OTs. Redundancy was to be avoided, and so, items were excluded if an item had a similar meaning to another item. In case of repetition, the item judged by > 50% of the user panel to have the clearest wording was retained. Re-wording was needed for items created for other health care providers such as doctors or nurses but relevant for EBP in our context. The expert panel suggested alternatives for re-wording the item, and the final decision was made by consensus (see the Additional file 1). This process reduced the initial 181 items to a pool of 81 items.

A modified Delphi process involving all the investigators on the research team (n = 8) was used to refine the items.

First round

An invitation e-mail was sent enclosing an explanation of the aim of the work and a description of the previous steps that had led to the generation of the item pool. Once the invitee accepted to participate, the item pool was sent by e-mail with specific instructions. Each investigator was asked to vote by marking on an Excel sheet an X under the category Item Clarity (completely clear or completely unclear) and under the category Informative (highly informative, moderately informative, not informative, not sure). Another category was created for additional comments. In this round, the goal was to clarify any redundancy or comprehension problems regarding each item [49]. Response frequencies for each item were calculated, and the experts’ identity was anonymized by a research assistant. Each item required 80% agreement from the panel in order to keep or remove the item as suggested by Lynn [49]. If the item showed < 80% agreement and there were comments for re-wording, then the item was kept to be re-worded and re-administered in the next round. All the responses were assigned to one of the three categories: keep without changes, keep with re-wording, and remove because of redundancy. At the end of this round, the expert panel met and re-worded the items that fell under the category of “re-word”. In case of any ambiguous comments, the investigator was contacted to seek clarifications for the comment. After reviewing all items, a final list was prepared for the second round.

Second round

An e-mail was sent to the expert panel for a final review and feedback on the changes applied to the items using similar procedures for rating as described in the first round.

This process resulted in 70 endorsed items from the pool of 81 items.

Phase 2: Meeting with the core team

Once all comments were in, two meetings with the core research team were held to identify the ideal set of items that would be carried forward in subsequent phases. Four activities were carried out. The first was to confirm the constructs and their labels. Second, items were assigned to constructs or left unassigned. Third, each item was prioritized for inclusion according to its relevance to the construct and whether the item indicated a high or low degree of EBP. The fourth activity was to consider whether the constructs fit best with a formative or reflective conceptual model. Formative models are where the items form the construct rather than reflecting it. For example, a priori, self-use of EBP practices was considered formative as there was a list of recommended practices. The more practices, the higher the EBP use. The most valid method for creating a legitimate total score for formative constructs is to count the number of items at a particular level of expertise. The construct self-efficacy is a good example of a reflective model, as having more self-efficacy or confidence in applying EBP in practice is reflected in people endorsing confidence in certain behaviours chosen to reflect the construct. A necessary but not sufficient criterion for a reflective model is that the items fit the underlying measurement model, here the Rasch model.

Phase 3: Translation

The quality of translation followed the Guidelines for the Process of Cross-Cultural Properties of Self-Report Measures [50]. This guideline helps the translation process into a new language through the following six steps: (1) initial (forward) translation—two professional translators with French-first language as their mother tongue (one was informed about the project, and the other translator was novice to the area) independently translated the final list of items from English to Canadian French; (2) synthesis of the translation—the same two translators synthesized the results of the two translations by preparing a consensus translation in the Canadian French language with the help of a research assistant; (3) back translation—two professional translators with English-first language as their mother tongue that are naive to measurement made back-translations; (4) use of an expert committee—in this step, a methodologist, PT, and OT professionals and a language professional met to come up with a clean version of the translation by solving any discrepancies through discussions until all the final items were judged to be linguistically equivalent; (5) testing the pre-final version—the clean version of the Canadian French set of items was completed by five clinicians and graduate students who were recent graduates (less than 5 years of clinical experience). This group identified some items to be hard to answer for recent graduates who have not worked in clinical practice. That led to suggest adding “My work does not involve clinical care” as a response option to all questions except those in knowledge or confidence domain; and (6) appraisal of the adaptation process—after adding “My work does not involve clinical care” as a response option, the final set of items was approved by the core team to be administered online.

Rasch analysis

Rasch measurement analysis was carried out to test the fit of the items related to EBP to the Rasch model. Rasch model was considered fit by item/person if the observed item/person perform consistently like the expected item/person performance. This can be quantified using chi-square (χ2) probability value if the value is > 0.05 with a Bonferroni adjustment and item/person fit residuals (sum of person and item deviations) that were close to 0 with a standard deviation of 1. Item residual correlation matrix was examined for possible local item independence, and unidimensionality was explored using principal component analysis of the residuals. Individual item fit was evaluated using the χ2 probability value and the fit residual values. If χ2 probability value of < 0.05 (Bonferroni adjustment) and fit residual values ≥ 2.5, then the individual item was considered misfit [51, 52]. The person separation index (PSI) was used to assess the internal consistency reliability of the scale which is equivalent to Cronbach’s α [47]; however, it only uses the logit values instead of the raw scores [52], where a value of ≥ 0.7 was considered acceptable representing the minimum required to divide the participants into two distinct groups (low/high ability) [53, 54]. Differential item functioning (DIF) was assessed to identify items that work differently for some groups who have the same level of ability in the sample. DIF was tested by profession, gender, language, and the type of clinical setting. The items which did not fit theoretically or mathematically the Rasch model were removed. Items that showed DIF were either deleted or split. This step was repeated multiple times until all items fit the model and the measure was formed.

All Rasch measurement analyses were performed using the Rasch Unidimensional Measurement Model Software (RUMM) version 2030 [55]. All descriptive analyses were performed using the SAS statistical software (version 9.4) [56]. Data were reported as means ± standard deviation (SD) or as frequencies (percentages).

Ethics

The research project from which the data were taken had ethical approval from all relevant university ethics committees to carry out the study.

Results

The characteristics of the participants are shown in Table 3. Figure 1 shows the process and results of the item analysis. The nominal group process resulted in a streamlined group of 81 items, from the original pool of 181 items: 55 which could be used without modification (“keep” list) and 38 that would need some re-wording. For the modified Delphi approach, all 8 investigators participated in all rounds. For the first round, experts provided their ratings for all the items. For the additional comments, redundant ones having similar suggestion were grouped and reduced to produce 57 comments which included suggestions to rephrase the item or make it clearer. There was an agreement among the experts to keep without changes (51), keep with re-wording (29), and remove because of redundancy (11). For both the item clarity and informative agreement, initially, 41 items were rated to be completely clear and highly informative (> 80% agreement) while 29 items were rated to be completely unclear or moderately/not informative (< 80% agreement). The items that were rated to be completely clear were not necessarily rated as highly informative and the opposite way.

For the second round, items were either kept as they were, removed, or re-worded in light of the experts’ comments. In total, 70 items were examined by the expert panel where no more major changes were required.

In total, 2 items were found to not be related to any domain resulting in a total of 68 items distributed on 6 EBP domains that were covered by the items. Two sets were considered to fit a formative model: self-use of EBP (9 items) and EBP activities (7 items). For these measures, the best total score is derived by counting the number of uses or activities carried out per day over the specified time frame.

Self-use of EBP

For this construct, more frequent use of EBP does not necessarily translate to better EBP if every contact with a patient initiates an EBP activity. For example, identifying a gap in knowledge more than 10 times a month would seem problematic rather than desirable. For this measure, counting the number of practices used in the past 6 months would eliminate giving problematic behaviours more weight. A total score from 0 to 9 would now be the indicator for self-use of EBP (see Table 4).

EBP activities

For EBP activities, more frequent use does indicate more EBP, and hence, a total number of activity days would be a reasonable metric. This requires assigning a frequency for each of the categories of never, monthly or less, bi-weekly, weekly, and daily over the past working month and giving the estimates of 0, 1, 2, 4, and 20 for these categories respectively. The total score is the cross-product of item days yielding activity days on a continuous scale (see Table 5).

Conceptual model

Four sets of items were considered to potentially fit a reflective conceptual model: attitudes towards EBP (n = 17 items), self-efficacy (n = 9 items), knowledge (n = 11 items), and resources (n = 15 items). Items that were compatible with a reflective model were tested to estimate the extent to which they fit the Rasch model, a necessary condition for a reflective model. Tables 4, 5, 6, and 7 and Figs. 2, 3, 4, and 5 present the results of the Rasch analysis for the constructs originally considered to be reflective.

Attitudes towards EBP items

The attitudes towards EBP items are shown in Table 6. Seventeen items were tested, all measured on a 5-point Likert Scale for the degree to which the respondent agrees or disagrees with the statement with an additional response options for “My work does not involve clinical care” in the event that the respondent does not provide hands-on direct care to patients but is involved in another aspects or rehabilitation practice (e.g. case management, clinical research). This latter option was merged with the “neutral” option. Nine items had disordered thresholds and needed rescoring. For 7 items, the categories “strongly disagree” and “disagree” were collapsed (items 1, 2, 3, 5, 7, 10, 11); for 3 items, the final rescoring resulted in only 2 (binary) categories (items 10, 14, 15). All 17 items fit the Rasch model after rescoring. Five items showed dependency with at least 1 other item, and the best-worded item was kept resulting in the deletion of items 3, 7, 9, 12, and 14. Three items showed DIF: item 2 showed DIF by profession, and the item was split to allow 2 different scoring; item 5 showed DIF by language, and as it was very close in content to item 3, it was deleted; and item 11 (I stick to tried and trusted methods in my practice rather than changing to anything new) showed DIF by language and profession. On close inspection, the English wording was idiomatic, and the French translation did not reflect the right meaning of the idiom. Item 13 is close in content, and so, item 11 was deleted. The final set of 10 items best reflecting the attitudes the EBP all fit the Rasch model and formed a measure (χ2 = 25.14, df = 22, p = 0.29). Figure 2 shows the threshold map (part a) and the targeting map (part b). The threshold map lists the items according to their average location on the latent trait (x-axis). The thresholds, transitions from 1 category of response to the next, are illustrated in different colours. There is a gradient across the items in the threshold location such that the easiest item, item 17 (see Table 6), is situated at the lowest end of the latent trait (− 7.51). The hardest item (item 2, split by profession) has the most difficult threshold at + 5.04. Figure 2b shows the location of the people and the items along the latent trait (x-axis) with the people above the line in pink and the items threshold below the line in blue. The items are poorly targeted to the sample primarily because the sample scored above 0, the expected mean location (mean 1.38; SD 0.83), whereas the range of items is from ≈ − 8.0 to + 5.0.

Self-efficacy towards EBP

The self-efficacy towards EBP items are shown in Table 7 and Fig. 3. Nine items were tested; all measured on an 11-point scale from 0% (no confidence) to 100% (completely confident). All the items had disordered thresholds and needed rescoring. For eight items, the categories “90%” and “100%” were collapsed (items 1, 2, 4, 5, 6, 7, 8, 9); for one item, the final rescoring resulted in only two (binary) categories (item 3). For all items, the first four categories (0%, 10%, 20%, 30%) were merged; for all but item 3, the middle categories (40%, 50%, 60%) were collapsed. All nine items fit the Rasch model after rescoring. Item 8 showed dependency with two other items (items 7 and 9), and the best-worded items were kept resulting in the deletion of item 8. None of the items showed DIF. The final set of eight items best reflecting the self-efficacy towards EBP all fit the Rasch model and formed a measure (χ2 = 10.89, df = 16, p = 0.82). Figure 2a shows the gradient across item-thresholds, and Fig. 2b shows that the sample is reasonably well targeted by the items as the mean location of the sample is 0.52 (expectation 0) with SD of 1.46 (expectation 1.0).

Knowledge

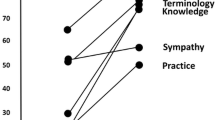

The knowledge items are shown in Table 8. Eleven items were tested, all measured on a 5-point ordinal scale of declarative statements related to scientific terminology needed for EBP. Four items had disordered thresholds and needed rescoring. The categories “never heard the term” and “have heard it but don’t understand” were collapsed for items 1, 7, 9, and 10. All 11 items fit the Rasch model after rescoring. Two items showed dependency with at least 1 other item, and the best-worded item was kept resulting in the deletion of items 2 and 11. Three items showed DIF: items 4 and 9 by profession and item 7 by language. In order to include all possible knowledge items, these items were split; however, the DIF by language for item 7 was likely due to poor translation (English: number-needed-to-treat (NNT); French: nombre de sujet à traiter). The final set of 9 items best reflecting the knowledge all fit the Rasch model and formed a measure (χ2 = 25.13, df = 18, p = 0.12). The threshold map in Fig. 5a shows a hierarchy across knowledge items with the easiest item familiarity with reliability and the hardest item treatment effect size. Figure 5b shows that the people are not well targeted by the items (mean location 2.25, SD 1.45; expectation mean 0, SD 1).

Resources

The resources items are shown in Table 9. Fifteen items were tested, all measured on a 5-point Likert Scale for the degree to which the respondent agrees or disagrees with the statement with additional response options for “My work does not involve clinical care” as per the attitudes items. This latter option was merged with the “neutral” option. All 15 items had disordered thresholds and needed rescoring. The categories “strongly disagree” and “disagree” were collapsed for all items. After rescoring, all but 1 item (item 12) fit the Rasch model and it was deleted. Only 1 item showed dependency on another item, and the best-worded item was kept resulting in the deletion of item 14. None of the items showed DIF. The final set of 13 items best reflecting the resources all fit the Rasch model, but the global fit was poor (χ2 = 56.92, df = 26, p = 0.00). Figure 3a shows the hierarchy across the items and Fig. 3b shows that the people are reasonably well targeted by the items, but there are many items at the low end of the resource continuum and no people.

Discussion

This study illustrated the psychometric steps needed to test the extent to which a set of items from existing measures of EBP form measures designed for PTs and OTs. The original 181 items obtained from 5 measures represented the core constructs of EBP. Theoretical and methodological processes were then applied for item reduction which resulted in 68 unique candidate items. Two constructs fit a formative conceptual model: self-use of EBP (9 items) and EBP activities (7 items). Four constructs fit a reflective conceptual model: attitudes towards EBP (n = 17 items), self-efficacy (n = 9 items), knowledge (n = 11 items), and resources (n = 15 items).

Rasch analysis showed that items reflecting four core constructs around EBP formed measures: attitudes towards EBP (n = 10 items), self-efficacy (n = 8 items), knowledge (n = 9 items), and resources (n = 13 items) (see Table 10). We discuss each one next.

Attitudes towards EBP

The items fit the Rasch model but were poorly targeted to our participants primarily because the mean fit for the participants (> 0) suggested that their overall scores on attitudes towards the EBP construct were greater than what would be expected in the general population. This was not surprising as our participants are recent graduates of rehabilitation programmes with a strong focus on EBP. This finding is congruent with previous studies showing recent graduates are more likely to report positive attitudes towards EBP than more seasoned clinicians and those with bachelor’s level training in OT and PT [57,58,59]. Scale reliability for this construct was unacceptable using person separation index (PSI) = 0.63, indicating that the items inadequately divided our participants along a continuum. RMT was applied to testing attitudes towards EBP in a previous study [27], and the results showed that 10 items fit the Rasch model with good reliability (0.83); however, the authors did not test DIF as the sample size was small (n = 110).

Three attitudes items showed DIF: item 2 showed DIF by profession; PTs are more likely than OTs to change their practice because of the evidence they find. Previous research on the use of and attitudes towards EBP showed that OTs score significantly lower than other health care providers which might indicate that this professional group faces different challenges related to EBP compared to other health care providers [60, 61]. Another explanation might be that OT, as a profession, is considered heavily client-centred and as such may value patient preferences as a contributor to EBP more so than the level of evidence [62, 63].

Items 5 and 11 showed DIF by language. Upon review, the English wording was idiomatic and the French translation did not reflect the correct meaning of the idiom. This might be attributed to the lack of proficiency in the English language [64]. The occurrence of DIF in this construct was considered infrequent (2/17 items) and comparable with other findings [65,66,67]. Future measure development should use simultaneous translation as it has advantages over forward translation particularly in that idiomatic wording; linguistic discrepancies between languages can be minimized or resolved during the item generation process [64, 68].

Self-efficacy

The final eight items fit the Rasch model and were reasonably well targeted to participants; however, the mean value for the participants was > 0 suggesting that the participants had a higher level of self-efficacy towards EBP than expected from the items. Self-efficacy has good internal reliability (PSI = 0.80), indicating that the items adequately separated the sample along the self-efficacy continuum. The internal consistency reliability value we found is similar to the original source of our items which is the evidence-based practice confidence (EPIC) scale [45]. RMT has been applied to testing self-efficacy towards EBP showing similar findings among different targeted populations with larger sample sizes [31, 32].

Knowledge

Nine items fit the Rasch model, but the sample was not well targeted by the items with mean fit for the participants (> 0); this suggests that the overall level of knowledge about EBP of our participants was greater than what would be expected from the items. The knowledge construct has a good internal reliability (PSI = 0.81), indicating that the items adequately separated our participants along the measurement continuum. RMT has been used by others [27, 28, 30] to validate knowledge of EBP, and this research has yielded very similar findings to ours.

Three items showed DIF: items 4 which showed that OTs’ familiarity with the statistical term “meta-analysis” is greater than that of PTs’. Item 9 showed that PTs are more aware of the statistical term “minimally important change (MIC)” than OTs. This might be explained by OTs’ use of a combination of different assessment and treatment approaches and their focus on client-centred strategies. Standardized tools are used when relevant and available [69,70,71]. PTs might know more the measurement-related statistical terms like “minimally important change (MIC)” as they mainly use the standardized tool over qualitative approaches. Also, the professional education and exposure to concepts associated with EBP may vary across programmes and between the two professions. Item 7 showed that francophones are more familiar with the term “number-needed-to-treat (NNT)” than anglophones. However, the DIF here was more likely due to the poor translation rather than real differences because “nombre de sujet à traiter” is associated with “caseload” in French than with a statistical term. Harris-Haywood et al. [30] evaluated the measurement properties of the Cultural Competence Health Practitioner Assessment Questionnaire across health care professionals which includes the following domains: knowledge, adapting practice, and promoting health for culturally and linguistically diverse populations. The authors reported DIF by almost all 23 knowledge items: 17 items had DIF by race, 4 by gender, and 6 by profession.

Resources

The 13 items reflecting resources available for EBP fit the Rasch model and reasonably well for the targeted participants. The mean value was > 0, but there are many items at the low end of the resource continuum with no participants. This suggested that the resources available for our participants were greater than what would be expected from the items included.

This might suggest a selection bias towards people who are good in EBP [72]. Although all items fit, there was still evidence of global misfit, indicating that a review of the items in this measure is warranted. This construct has a good internal reliability (PSI = 0.86), indicating that the items adequately separated participants along the measurement continuum. Bobiak et al. [29] showed almost similar findings in terms of the threshold range of the items and PSI values.

This project would not have been possible without the wealth of items and measures already developed, tested, and used in the study of EBP. However, in order to implement these measures in a practical way, through a survey of EBP of busy practitioners, we had to ensure a minimum number of non-redundant items. Otherwise, the response rate would be nil. This process, which was started for practical reasons, took the field of implementation science a step forward by optimizing the measurement of these important constructs so that it is easier to detect differences between groups and change over time.

Implementation of research in practice is considered to be a multidimensional process that involves clients, practitioners, and organizations [73]. Therefore, the development of measures that can reflect these dimensions is important because it provides tools that help the ultimate goal of implementation for behaviour change (i.e. the use of EBP).

Furthermore, these measures will ease the study of behaviour change mechanisms like those presumed in the Theoretical Domains Framework [74] or Theory of Planned Behaviour [75]. For instance, these mechanisms may include factors such as attitudes towards EBP or knowledge of EBP that in turn impact the intention to use EBP in daily practice. As a result, if we can provide measures of attitudes towards EBP or knowledge of EBP, this then will predict EBP behaviours (i.e. actual use of EBP) [73]. The final set of items provided in this work for each construct fairly covers the full range of the latent variable from “easier” to “difficult” items which will allow researchers to capture different abilities of clinicians regarding aspects of EBP. That by itself will support researchers in the design and implementation of robust knowledge translation interventions in a gradual manner aimed at targeting different levels of clinician abilities instead of designing one-size-fits-all interventions.

Strengths

Our study has several strengths. The analyses provide a single legitimate total score for four constructs around EBP on a continuum from very low to very high. These measures can be used to identify determinants of good EBP, to guide the development of implementation interventions, and to evaluate sustainability. The validation of such indices around EBP addresses the lack of standardized tools for EBP educational evaluation [19, 76,77,78]. This study tackled a specific gap in EBP measures for groups like PTs and OTs in two languages, English and French. The analyses provided a mathematically sound way of reducing the multitude of items to only those that behave in the manner that sufficiently assesses the constructs around EBP while maintaining strong theoretical and methodological processes. We used data from an inception cohort which will allow us to follow participants during the next 3 years to gauge the changes over time. We recruited participants from several Canadian universities in English and French languages which allow for generalizing the findings. The use of simultaneous translation helped to resolve idiomatic wording and linguistic discrepancies between languages compared to sequential translation where items are first generated in only one language followed by subsequent translation into another language [64, 68]. Future work is required to validate these constructs over time, and there is a need to address the gaps in the lower and upper ends of the scales. Further, the correlation between different EBP constructs will allow for greater understanding of the EBP constructs. This will be described in a subsequent descriptive analysis of this large data set and though the ongoing longitudinal study of these graduates into practice.

Limitations

Our study has some limitations. First, the data were from a cross-sectional study, and unnecessarily, the data reflect all time points, a weakness reported in previous work [27,28,29,30,31,32]. Therefore, it is essential to revalidate these constructs with subsequent waves of data to explore stability, intensity, and direction of EBP constructs. Second, the participants were new graduates, and 43% reported that they were not working in clinical practice which limits the use of EBP. Third, the sample size was generally small and diversified across the EBP constructs due to missing data. Fourth, the number of administered items was much less than other questionnaires targeting several constructs; however, it requires about 15 min, which might influence the return rates especially for future time points. Fifth, it was noticed that the level of EBP knowledge for our participants was high which causes a ceiling effect that may explain the high mean person location (> 0) for all EBP constructs. Sixth, despite the fact that the items capture a wide range of difficulty, gaps appear in some locations, making the estimation of the ability of persons located near or within those gaps less precise. Lastly, the knowledge items may not be the true reflection of the participants’ EBP knowledge level since this construct elicits knowledge about statistical and methodological terms, one aspect of EBP. Some items were missed in our knowledge item sets such as knowing how to search literature and different literature appraisal issues.

Conclusion

This study provides evidence supporting the construct and content validity and internal consistency reliability of four measure to assess key constructs around EBP. Our use of a strong theoretical and methodological processes was helpful in reducing the number of items for further analysis, and the use of Rasch analysis was critical and helpful in choosing the best fitting items for each construct. However, a number of gaps in the measures were uncovered indicating a further refinement of the content and wording is needed.

Abbreviations

- DIF:

-

Differential item functioning

- EBP:

-

Evidence-based practice

- OT:

-

Occupational therapy

- PT:

-

Physical therapy

- RMT:

-

Rasch Measurement Theory

References

Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312(7023):71–2.

Feng X, Liu C, Guo Q, Bai Y, Ren Y, Ren B, et al. Research progress in rehabilitation treatment of stroke patients: a bibliometric analysis. Neural Regen Res. 2013;8(15):1423–30. https://doi.org/10.3969/j.issn.1673-5374.2013.15.010.

Frontera WR. Some reflections on the past, present, and future of physical and rehabilitation medicine (on the occasion of the 30th SOFMER congress). Ann Phys Rehabil Med. 2016;59(2):79–82. https://doi.org/10.1016/j.rehab.2016.02.002.

Kleim JA, Jones TA. Principles of experience-dependent neural plasticity: implications for rehabilitation after brain damage. J Speech Lang Hear Res. 2008;51(1):S225–39. https://doi.org/10.1044/1092-4388(2008/018).

Winstein CJ, Kay DB. Translating the science into practice: shaping rehabilitation practice to enhance recovery after brain damage. Prog Brain Res. 2015;218:331–60. https://doi.org/10.1016/bs.pbr.2015.01.004.

Moore JL, Shikako-Thomas K, Backus D. Knowledge translation in rehabilitation: a shared vision. Pediatr Phys Ther. 2017;29(Suppl 3 Supplement, IV Step 2016 Conference Proceedings Supplement):S64–s72. https://doi.org/10.1097/pep.0000000000000381.

World Health Organisation. Rehabilitation: WHO framework on rehabilitation services: expert meeting. 2017.

Korner-Bitensky N, Desrosiers J, Rochette A. A national survey of occupational therapists’ practices related to participation post-stroke. J Rehabil Med. 2008;40(4):291–7. https://doi.org/10.2340/16501977-0167.

Salls J, Dolhi C, Silverman L, Hansen M. The use of evidence-based practice by occupational therapists. Occup Ther Health Care. 2009;23(2):134–45. https://doi.org/10.1080/07380570902773305.

Petzold A, Korner-Bitensky N, Rochette A, Teasell R, Marshall S, Perrier MJ. Driving poststroke: problem identification, assessment use, and interventions offered by Canadian occupational therapists. Top Stroke Rehabil. 2010;17(5):371–9. https://doi.org/10.1310/tsr1705-371.

Menon-Nair A, Korner-Bitensky N, Ogourtsova T. Occupational therapists’ identification, assessment, and treatment of unilateral spatial neglect during stroke rehabilitation in Canada. Stroke. 2007;38(9):2556–62. https://doi.org/10.1161/strokeaha.107.484857.

Accreditation Council for Canadian Physiotherapy Academic Programs. Essential competency profile for physiotherapists in Canada. 2009. http://www.physiotherapyeducation.ca/Resources/Essential%20Comp%20PT%20Profile%202009.pdf. Accessed 15 April 2018.

Canadian Association of Occupational Therapy. Profile of practice of occupational therapists in Canada. 2012. https://www.caot.ca/document/3653/2012otprofile.pdf. Accessed 15 April 2018.

Godin G, Belanger-Gravel A, Eccles M, Grimshaw J. Healthcare professionals’ intentions and behaviours: a systematic review of studies based on social cognitive theories. Implement Sci. 2008;3:36. https://doi.org/10.1186/1748-5908-3-36.

Thompson-Leduc P, Clayman ML, Turcotte S, Legare F. Shared decision-making behaviours in health professionals: a systematic review of studies based on the Theory of Planned Behaviour. Health Expect. 2015;18(5):754–74. https://doi.org/10.1111/hex.12176.

Kwasnicka D, Dombrowski SU, White M, Sniehotta F. Theoretical explanations for maintenance of behaviour change: a systematic review of behaviour theories. Health Psychol Rev. 2016;10(3):277–96. https://doi.org/10.1080/17437199.2016.1151372.

Newhouse RP. Instruments to assess organizational readiness for evidence-based practice. J Nurs Adm. 2010;40(10):404–7. https://doi.org/10.1097/NNA.0b013e3181f2ea08.

Gawlinski A. Evidence-based practice changes: measuring the outcome. AACN Adv Crit Care. 2007;18(3):320–2.

Shaneyfelt T, Baum KD, Bell D, Feldstein D, Houston TK, Kaatz S, et al. Instruments for evaluating education in evidence-based practice: a systematic review. JAMA. 2006;296(9):1116–27. https://doi.org/10.1001/jama.296.9.1116.

Cadorin L, Bagnasco A, Tolotti A, Pagnucci N, Sasso L. Instruments for measuring meaningful learning in healthcare students: a systematic psychometric review. J Adv Nurs. 2016;72(9):1972–90. https://doi.org/10.1111/jan.12926.

Buchanan H, Siegfried N, Jelsma J. Survey instruments for knowledge, skills, attitudes and behaviour related to evidence-based practice in occupational therapy: a systematic review. Occup Ther Int. 2016;23(2):59–90. https://doi.org/10.1002/oti.1398.

Leung K, Trevena L, Waters D. Systematic review of instruments for measuring nurses’ knowledge, skills and attitudes for evidence-based practice. J Adv Nurs. 2014;70(10):2181–95. https://doi.org/10.1111/jan.12454.

Sick J. Rasch measurement in language education part 5: assumptions and requirements of Rasch measurement. Shiken. 2010;14(2):23–9.

Grimby G, Tennant A, Tesio L. The use of raw scores from ordinal scales: time to end malpractice? J Rehabil Med. 2012;44(2):97–8. https://doi.org/10.2340/16501977-0938.

Andrich D. Rasch models for ordered response categories. Encyclopedia Stat Behav Sci. 2005;4:1698–707. https://doi.org/10.1002/0470013192.bsa541.

Traub RE. Classical Test Theory in historical perspective. Educ Meas. 1997;16(4):8–14. https://doi.org/10.1111/j.1745-3992.1997.tb00603.x.

Akram W, Hussein MS, Ahmad S, Mamat MN, Ismail NE. Validation of the knowledge, attitude and perceived practice of asthma instrument among community pharmacists using Rasch analysis. Saudi Pharm J. 2015;23(5):499–503. https://doi.org/10.1016/j.jsps.2015.01.011.

Zoanetti N, Griffin P, Beaves M, Wallace EM. Rasch scaling procedures for informing development of a valid Fetal Surveillance Education Program multiple-choice assessment. BMC Med Educ. 2009;9:20. https://doi.org/10.1186/1472-6920-9-20.

Bobiak SN, Zyzanski SJ, Ruhe MC, Carter CA, Ragan B, Flocke SA, et al. Measuring practice capacity for change: a tool for guiding quality improvement in primary care settings. Qual Manag Health Care. 2009;18(4):278–84. https://doi.org/10.1097/QMH.0b013e3181bee2f5.

Harris-Haywood S, Goode T, Gao Y, Smith K, Bronheim S, Flocke SA, et al. Psychometric evaluation of a cultural competency assessment instrument for health professionals. Med Care. 2014;52(2):e7–e15. https://doi.org/10.1097/MLR.0b013e31824df149.

Blackman IR, Giles T. Psychometric evaluation of a self-report evidence-based practice tool using Rasch analysis. Worldviews Evid Based Nurs. 2015;12(5):253–64. https://doi.org/10.1111/wvn.12105.

Hasnain M, Gruss V, Keehn M, Peterson E, Valenta AL, Kottorp A. Development and validation of a tool to assess self-efficacy for competence in interprofessional collaborative practice. J Interprof Care. 2017;31(2):255–62. https://doi.org/10.1080/13561820.2016.1249789.

Thomas A, Rochette A, Lapointe J, O’Connor K, Ahmed S, Bussières A, et al. Evolution of evidence-based practice: evaluating the contribution of individual and contextual factors to optimize patient care. Canadian Institutes for Health Research, Project scheme grant; 2016-2022. 2016. http://webapps.cihr-irsc.gc.ca/funding/detail_e?pResearchId=8239783&p_version=CRIS&p_language=E&p_session_id=

Fekete C, Boldt C, Post M, Eriks-Hoogland I, Cieza A, Stucki G. How to measure what matters: development and application of guiding principles to select measurement instruments in an epidemiologic study on functioning. Am J Phys Med Rehabil. 2011;90(11 Suppl 2):S29–38. https://doi.org/10.1097/PHM.0b013e318230fe41.

Geyh S, Cieza A, Kollerits B, Grimby G, Stucki G. Content comparison of health-related quality of life measures used in stroke based on the international classification of functioning, disability and health (ICF): a systematic review. Qual Life Res. 2007;16(5):833–51. https://doi.org/10.1007/s11136-007-9174-8.

Estabrooks CA, Squires JE, Cummings GG, Birdsell JM, Norton PG. Development and assessment of the Alberta Context Tool. BMC Health Serv Res. 2009;9:234. https://doi.org/10.1186/1472-6963-9-234.

Delbecq AL, Van de Ven AH. A group process model for problem identification and program planning. J Appl Behav Sci. 1971;7(4):466–92. https://doi.org/10.1177/002188637100700404.

Fink A, Kosecoff J, Chassin M, Brook RH. Consensus methods: characteristics and guidelines for use. Am J Public Health. 1984;74(9):979–83.

McEvoy MP, Williams MT, Olds TS, Lewis LK, Petkov J. Evidence-based practice profiles of physiotherapists transitioning into the workforce: a study of two cohorts. BMC Med Educ. 2011;11:100. https://doi.org/10.1186/1472-6920-11-100.

McEvoy MP, Williams MT, Olds TS. Development and psychometric testing of a trans-professional evidence-based practice profile questionnaire. Med Teach. 2010;32(9):e373–80. https://doi.org/10.3109/0142159x.2010.494741.

Aarons GA, Glisson C, Hoagwood K, Kelleher K, Landsverk J, Cafri G. Psychometric properties and U.S. national norms of the Evidence-Based Practice Attitude Scale (EBPAS). Psychol Assess. 2010;22(2):356–65. https://doi.org/10.1037/a0019188.

Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: the Evidence-Based Practice Attitude Scale (EBPAS). Ment Health Serv Res. 2004;6(2):61–74.

Aarons GA, McDonald EJ, Sheehan AK, Walrath-Greene CM. Confirmatory factor analysis of the evidence-based practice attitude scale in a geographically diverse sample of community mental health providers. Admin Pol Ment Health. 2007;34(5):465–9. https://doi.org/10.1007/s10488-007-0127-x.

Salbach NM, Jaglal SB. Creation and validation of the evidence-based practice confidence scale for health care professionals. J Eval Clin Pract. 2011;17(4):794–800. https://doi.org/10.1111/j.1365-2753.2010.01478.x.

Salbach NM, Jaglal SB, Williams JI. Reliability and validity of the evidence-based practice confidence (EPIC) scale. J Contin Educ Heal Prof. 2013;33(1):33–40. https://doi.org/10.1002/chp.21164.

Clyde JH, Brooks D, Cameron JI, Salbach NM. Validation of the evidence-based practice confidence (EPIC) scale with occupational therapists. Am J Occup Ther. 2016;70(2):7002280010p1–9. https://doi.org/10.5014/ajot.2016.017061.

Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16(3):297–334. https://doi.org/10.1007/bf02310555.

Squires JE, Hayduk L, Hutchinson AM, Mallick R, Norton PG, Cummings GG, et al. Reliability and validity of the Alberta Context Tool (ACT) with professional nurses: findings from a multi-study analysis. PloS one. 2015;10(6):e0127405. https://doi.org/10.1371/journal.pone.0127405.

Lynn MR. Determination and quantification of content validity. Nurs Res. 1986;35(6):382–5.

Beaton DE, Bombardier C, Guillemin F, Ferraz MB. Guidelines for the process of cross-cultural adaptation of self-report measures. Spine (Phila Pa 1976). 2000;25(24):3186–91.

Gibbons CJ, Mills RJ, Thornton EW, Ealing J, Mitchell JD, Shaw PJ, et al. Rasch analysis of the hospital anxiety and depression scale (HADS) for use in motor neurone disease. Health Qual Life Outcomes. 2011;9:82. https://doi.org/10.1186/1477-7525-9-82.

Tennant A, Conaghan PG. The Rasch measurement model in rheumatology: what is it and why use it? When should it be applied, and what should one look for in a Rasch paper? Arthritis Rheum. 2007;57(8):1358–62. https://doi.org/10.1002/art.23108.

Bond TG, Fox CM. Applying the Rasch model: fundamental measurement in the human sciences. Third ed. New York: Routledge; 2015.

Linacre JM. Sample size and item calibration [or person measure] stability. Rasch measurement transactions; 1994. http://www.rasch.org/rmt/rmt74m.htm. Accessed 15 May 2018.

Andrich D, Sheridan B, Luo G. Rasch unidimensional measurement models (RUMM) 2030. Perth: RUMM Pty Ltd; 2008.

Statistical Analysis System Institute Inc. SAS® 9.4 Software. 2014. http://support.sas.com/software/94/index.html. 15 Mar 2018.

Morrison T, Robertson L. New graduates’ experience of evidence-based practice: an action research study. Br J Occup Ther. 2016;79(1):42–8. https://doi.org/10.1177/0308022615591019.

Powell CA, Case-Smith J. Information literacy skills of occupational therapy graduates: promoting evidence-based practice in the MOT curriculum. Med Ref Serv Q. 2010;29(4):363–80. https://doi.org/10.1080/02763869.2010.518923.

Thomas A, Law M. Research utilization and evidence-based practice in occupational therapy: a scoping study. Am J Occup Ther. 2013;67(4):e55–65. https://doi.org/10.5014/ajot.2013.006395.

Wilkinson SA, Hinchliffe F, Hough J, Chang A. Baseline evidence-based practice use, knowledge, and attitudes of allied health professionals: a survey to inform staff training and organisational change. J Allied Health. 2012;41(4):177–84.

Brown T, Tseng MH, Casey J, McDonald R, Lyons C. Research knowledge, attitudes, and practices of pediatric occupational therapists in Australia, the United Kingdom, and Taiwan. J Allied Health. 2010;39(2):88–94.

Mroz TM, Pitonyak JS, Fogelberg D, Leland NE. Client centeredness and health reform: key issues for occupational therapy. Am J Occup Ther. 2015;69(5):6905090010p1–8. https://doi.org/10.5014/ajot.2015.695001.

Alsop A. Evidence-based practice and continuing professional development. Br J Occup Ther. 1997;60(11):503–8. https://doi.org/10.1177/030802269706001112.

Rogers WT, Lin J, Rinaldi CM. Validity of the simultaneous approach to the development of equivalent achievement tests in English and French. Appl Meas Educ. 2010;24(1):39–70. https://doi.org/10.1080/08957347.2011.532416.

Kwakkenbos L, Willems LM, Baron M, Hudson M, Cella D, van den Ende CHM, et al. The comparability of English, French and Dutch scores on the Functional Assessment of Chronic Illness Therapy-Fatigue (FACIT-F): an assessment of differential item functioning in patients with systemic sclerosis. PLoS One. 2014;9(3):e91979. https://doi.org/10.1371/journal.pone.0091979.

Delisle VC, Kwakkenbos L, Hudson M, Baron M, Thombs BD. An assessment of the measurement equivalence of English and French versions of the Center for Epidemiologic Studies Depression (CES-D) Scale in systemic sclerosis. PLoS One. 2014;9(7):e102897. https://doi.org/10.1371/journal.pone.0102897.

Arthurs E, Steele RJ, Hudson M, Baron M, Thombs BD. Are scores on English and French versions of the PHQ-9 comparable? An assessment of differential item functioning. PLoS One. 2012;7(12):e52028. https://doi.org/10.1371/journal.pone.0052028.

Askari S, Fellows L, Brouillette MJ, Moriello C, Duracinsky M, Mayo NE. Development of an item pool reflecting cognitive concerns expressed by people with HIV. Am J Occup Ther. 2018;72(2):7202205070p1–9. https://doi.org/10.5014/ajot.2018.023945.

Stapleton T, McBrearty C. Use of standardised assessments and outcome measures among a sample of Irish occupational therapists working with adults with physical disabilities. Br J Occup Ther. 2009;72(2):55–64. https://doi.org/10.1177/030802260907200203.

Bowman J. Challenges to measuring outcomes in occupational therapy: a qualitative focus group study. Br J Occup Ther. 2006;69(10):464–72. https://doi.org/10.1177/030802260606901005.

Atwal A, McIntyre A, Craik C, Hunt J. Occupational therapists’ perceptions of predischarge home assessments with older adults in acute care. Br J Occup Ther. 2008;71(2):52–8. https://doi.org/10.1177/030802260807100203.

Fowler F. Survey research methods. 4th ed. Thousand Oaks; 2009. https://doi.org/10.4135/9781452230184.

Moullin JC, Ehrhart MG, Aarons GA. Development and testing of the Measure of Innovation-Specific Implementation Intentions (MISII) using Rasch measurement theory. Implement Sci. 2018;13(1):89. https://doi.org/10.1186/s13012-018-0782-1.

Cane J, O’Connor D, Michie S. Validation of the theoretical domains framework for use in behaviour change and implementation research. Implement Sci. 2012;7:37. https://doi.org/10.1186/748-5908-7-37.

Ajzen I. The theory of planned behavior. Organ Behav Hu Dec Processes. 1991;50(2):179–211 doi:https://doi.org/10.1016/0749-5978(91)90020-T.

Stevenson K, Lewis M, Hay E. Do physiotherapists’ attitudes towards evidence-based practice change as a result of an evidence-based educational programme? J Eval Clin Pract. 2004;10(2):207–17. https://doi.org/10.1111/j.1365-2753.2003.00479.x.

Stevenson K, Lewis M, Hay E. Does physiotherapy management of low back pain change as a result of an evidence-based educational programme? J Eval Clin Pract. 2006;12(3):365–75. https://doi.org/10.1111/j.1365-2753.2006.00565.x.

Flores-Mateo G, Argimon JM. Evidence based practice in postgraduate healthcare education: a systematic review. BMC Health Serv Res. 2007;7:119. https://doi.org/10.1186/1472-6963-7-119.

Acknowledgements

The authors would like to thank Carly Goodman, MSc, for helping with sending invitations, developing the e-survey, data collection, data processing, and taking field notes. We wish to thank all physiotherapists and occupational therapists who took part in our study for their time. FAZ acknowledges the generous support of the postdoctoral bursary from the Centre for Interdisciplinary Research in Rehabilitation of Greater Montreal (CRIR).

Funding

This study was funded by the Canadian Institutes of Health Research (CIHR). The funding body did not influence the study results and was not involved in the study in any way.

Availability of data and materials

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

Author information

Authors and Affiliations

Contributions

All authors participated in adapting the study design, contributed to the development of the item set, and analysed and interpreted the data. FAZ wrote the initial manuscript. NEM and AT oversaw all phases of the study. The authors contributed to the study design, development of the item set, and analyses and interpretation of the data. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Authors’ information

FAZ has clinical training in physical therapy with an MSc degree in Professional Physical Therapy, a thesis-based MSc in rehabilitation science and a PhD in Rehabilitation Science from the School of Physical and Occupational Therapy at McGill University. NEM is the James McGill Professor in the Department of Medicine and the School of Physical and Occupational Therapy at McGill University. She is one of Canada’s best-known researchers in stroke rehabilitation. She is a health outcomes, health services, and population health researcher with interests in all aspects of disability and quality of life in people with chronic diseases and the elderly. AR has clinical training in occupational therapy and is a Professor at the School of Rehabilitation at the University of Montreal. AT has clinical training in occupational therapy and is an Associate Professor at the School of Physical and Occupational Therapy at McGill University with a cross-appointment at the Center for Medical Education, Faculty of Medicine, McGill University.

Ethics approval and consent to participate

All participants provided informed consent. Approval for this project was granted by all Institutional Review Board belonging to each university.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

A list of included and excluded items. (XLSX 31 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Al Zoubi, F., Mayo, N., Rochette, A. et al. Applying modern measurement approaches to constructs relevant to evidence-based practice among Canadian physical and occupational therapists. Implementation Sci 13, 152 (2018). https://doi.org/10.1186/s13012-018-0844-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13012-018-0844-4