Abstract

Background

Examining the role of modifiable barriers and facilitators is a necessary step toward developing effective implementation strategies. This study examines whether both general (organizational culture, organizational climate, and transformational leadership) and strategic (implementation climate and implementation leadership) organizational-level factors predict therapist-level determinants of implementation (knowledge of and attitudes toward evidence-based practices).

Methods

Within the context of a system-wide effort to increase the use of evidence-based practices (EBPs) and recovery-oriented care, we conducted an observational, cross-sectional study of 19 child-serving agencies in the City of Philadelphia, including 23 sites, 130 therapists, 36 supervisors, and 22 executive administrators. Organizational variables included characteristics such as EBP initiative participation, program size, and proportion of independent contractor therapists; general factors such as organizational culture and climate (Organizational Social Context Measurement System) and transformational leadership (Multifactor Leadership Questionnaire); and strategic factors such as implementation climate (Implementation Climate Scale) and implementation leadership (Implementation Leadership Scale). Therapist-level variables included demographics, attitudes toward EBPs (Evidence-Based Practice Attitudes Scale), and knowledge of EBPs (Knowledge of Evidence-Based Services Questionnaire). We used linear mixed-effects regression models to estimate the associations between the predictor (organizational characteristics, general and strategic factors) and dependent (knowledge of and attitudes toward EBPs) variables.

Results

Several variables were associated with therapists’ knowledge of EBPs. Clinicians in organizations with more proficient cultures or higher levels of transformational leadership (idealized influence) had greater knowledge of EBPs; conversely, clinicians in organizations with more resistant cultures, more functional organizational climates, and implementation climates characterized by higher levels of financial reward for EBPs had less knowledge of EBPs. A number of organizational factors were associated with the therapists’ attitudes toward EBPs. For example, more engaged organizational cultures, implementation climates characterized by higher levels of educational support, and more proactive implementation leadership were all associated with more positive attitudes toward EBPs.

Conclusions

This study provides evidence for the importance of both general and strategic organizational determinants as predictors of knowledge of and attitudes toward EBPs. The findings highlight the need for longitudinal and mixed-methods studies that examine the influence of organizational factors on implementation.

Background

There are a growing number of evidence-based practices (EBPs) for youth psychiatric disorders [1, 2], but they continue to be underutilized and poorly implemented in community settings [3,4,5,6,7]. To understand the relative lack of implementation success to date, a number of studies have examined the factors that facilitate or impede the adoption, implementation, and sustainment of EBPs (e.g., [8,9,10,11,12,13,14,15]). Implementation barriers have been summarized in a range of conceptual models and frameworks [16], including the Theoretical Domains Framework, the Consolidated Framework for Implementation Research [17], the Exploration, Preparation, Implementation, and Sustainment framework [18], and the Checklist for Identifying Determinants of Practice [19]. Nearly all of these models include multiple ecological levels and emphasize that effective implementation requires consideration of both provider and organizational levels. Successful implementation requires providers who view EBPs favorably and possess the knowledge and skill to deliver core components of interventions with fidelity, as well as organizational contexts that are sufficiently supportive of EBPs.

While provider- and organizational-level factors are widely acknowledged to be critical to effective implementation, the literature is still in a nascent state in two respects. First, many conceptual models and frameworks do not specify relationships among individual and organizational constructs. This limits our ability to understand how these factors coalesce to influence implementation success or failure and, hence, how implementation strategies [20, 21] should be selected and sequenced to promote the implementation of EBPs.

Second, while some of the more general organizational constructs (e.g., organizational culture, organizational climate, leadership) have a relatively long history and have been well studied in a variety of fields [22, 23], many implementation-specific organizational constructs are emerging (e.g., implementation climate [24,25,26], implementation leadership [27], implementation citizenship [28]). Rather than assessing organizational characteristics and functioning more broadly, these constructs are theorized to be more proximal to implementation and to sharpen focus on the specific strategies that need to be enacted and the contexts that need to be created to ensure effective implementation [23]. The relationship between general and strategic (i.e., implementation-specific) organizational factors has yet to be established, and few studies have focused on whether they are uniquely predictive of individual-level factors (e.g., knowledge and attitudes) that are more proximal to implementation effectiveness [29, 30]. Developing a better understanding of the role and modifiability of these contextual factors is a priority for implementation research [31,32,33], as this knowledge could inform the careful development and testing of implementation strategies that could promote the implementation, sustainment, and scale-up of EBPs [20, 21, 34, 35]. The present study examined a set of general (organizational culture, organizational climate, transformational leadership) and strategic (implementation climate and implementation leadership) organizational factors and their association with clinician knowledge of and attitudes toward EBPs within the context of a large publicly funded behavioral health system in Philadelphia. We begin by defining each of these constructs and describing their relationship to implementation.

Organizational culture and climate

Organizational culture and climate have a relatively long history in organizational research [23]. In the present study, we draw upon the work of Glisson and colleagues [36]. They define organizational culture as the shared views of norms and expectations within organizations [36]. Organizational climate refers to organizational members’ shared perception of the psychological impact of the work environment on their own well-being [36]. Organizational culture and climate have been associated with attitudes toward EBPs [37], provider turnover [38,39,40], quality of services [41], sustainment of new practices [39], and youth mental health outcomes [41, 42]. For further reference, Glisson and Williams [43] provide a thorough overview of work to develop more positive cultures and climates.

Implementation climate

Implementation climate has been defined as employees’ shared perception that a specific innovation is expected, supported, and rewarded within their organization [44]. Strong implementation climates encourage the use of EBPs by (1) ensuring employees are adequately skilled in their use, (2) incentivizing the use of EBPs and eliminating any disincentives, and (3) removing barriers to EBP use [44]. Weiner and colleagues [24] have documented ways in which the conceptualization and measurement of implementation climate can advance the field. Perhaps most central to the present inquiry is that implementation climate may have greater predictive validity than more general constructs. The empirical evidence linking implementation climate to implementation effectiveness is primarily drawn from the information systems implementation literature [24]. However, two pragmatic measures for implementation climate have recently been developed by Ehrhart et al. [26] and Jacobs et al. [25], offering new opportunities for empirical work focusing on implementation climate.

Transformational leadership

Effective leadership is essential for implementation success [17, 23] and can emerge (formally or informally) from any level of the organization [17]. Much work in this area has focused on the Full-Range Leadership model [45], which differentiates among transformational, transactional, and passive or laissez-faire leadership. Corrigan et al. [46] note that transformational leadership is characterized by helping team members transform their services to meet the ever-evolving needs of their patients and is achieved through charisma, inspiration, intellectual stimulation, and consideration of individual staff members’ interests. Transactional leaders attend to day-to-day tasks necessary for the program to operate, and they accomplish their goals by using goal-setting, feedback, self-monitoring, and reinforcement. Both transformational and transactional leadership styles have been shown to be effective, in contrast to laissez-faire leadership, in which leaders are characterized as aloof, uninvolved, and disinterested in the activities of the front-line workers [46]. However, transformational leadership is associated with more positive outcomes than is transactional leadership, including more positive climates for innovation and more positive attitudes toward EBPs [47]. Aarons and colleagues [23, 48] provide informative reviews of empirical findings from applications of this model in a wide range of settings, including health, mental health, and child welfare.

Implementation leadership

Aarons and colleagues [27] have developed the Implementation Leadership Scale (ILS), which measures specific behaviors that leaders exhibit to promote effective implementation. Implementation leadership is measured with four subscales: (1) proactive leadership, (2) knowledgeable leadership, (3) supportive leadership, and (4) perseverant leadership. A pilot study demonstrated that implementation leadership can be improved through an organizational implementation strategy called the Leadership and Organizational Change Intervention (LOCI) [49]; however, given that this measure was only recently developed, further research is required to establish its utility in predicting additional implementation determinants and outcomes.

Knowledge of and attitudes toward evidence-based practices

Knowledge of EBPs is a precursor to implementation and is a key construct in some of the most commonly used implementation frameworks [17, 50]. For example, in child and adolescent mental health, it is necessary (although not sufficient) that therapists are knowledgeable about treatments that are indicated (or contraindicated) for common mental health problems such as anxiety and avoidance, depression and withdrawal, disruptive behavior, and attention and hyperactivity [51]. Therapists’ attitudes toward EBPs are also an important precursor to effective implementation. Aarons et al. [52] note that attitudes toward EBPs are important for two reasons. First, these attitudes may influence whether or not a therapist adopts a new practice. Second, if therapists do adopt a new practice, their attitudes may influence their decisions about the actual implementation and sustainment of the innovation. Measures have been developed to assess mental health therapists’ attitudes toward EBPs, including the 15-item Evidence-Based Practice Attitudes Scale (EBPAS). Studies using the EBPAS [53] have shown that organizational factors such as constructive organizational cultures and organizational support for EBPs are associated with more positive attitudes toward EBPs and that more positive attitudes toward EBPs are associated with provider adoption of EBPs [54,55,56]. However, Aarons and colleagues [52] underscore the need for more research that examines the association between organizational factors and attitudes toward EBPs.

Study purpose and hypotheses

The purpose of this study was to (1) assess the relationship between general and strategic organizational-level determinants and therapist-level determinants of implementation and (2) determine whether strategic organizational-level determinants predict individual-level determinants after controlling for general organizational-level determinants that are more firmly established in the implementation literature. We hypothesized that more positive general organizational determinants (i.e., organizational culture and climate, transformational leadership) would be associated with increased therapist knowledge of and more positive attitudes toward EBPs, because they are characterized by (1) more proficient organizational cultures, in which therapists are expected to have up-to-date knowledge of best practices and are less resistant to change [36]; (2) organizational climates that are more engaged, functional, and less stressful, which could facilitate the exploration of new programs and practices [36]; and (3) higher levels of transformational leadership, which has been shown to lead to more positive climates for innovation [47]. Moreover, we hypothesized that more positive strategic organizational contexts for implementation would be associated with higher levels of knowledge and more positive attitudes toward EBPs, as those contexts would be characterized by EBPs being expected, supported, and rewarded [24, 44] and by leaders who provide the concrete supports needed to facilitate the exploration (and ultimately the preparation, implementation, and sustainment) of EBPs [18, 27].

Methods

Setting

This study was conducted in partnership with Philadelphia’s Department of Behavioral Health and Intellectual disAbility Services (DBHIDS), a large, publicly funded urban system that pays for mental health and substance abuse services through a network of more than 250 providers. In partnership with Community Behavioral Health, a not-for-profit 501(c)(3) corporation contracted by the City of Philadelphia, they provide behavioral health coverage to more than 500,000 Medicaid-enrolled individuals [57]. Since 2007, DBHIDS has supported several large-scale initiatives to implement EBPs and has worked to develop a practice culture that embraces recovery and evidence-based care [58]. These initiatives have included efforts to implement cognitive therapy [59], trauma-focused cognitive behavioral therapy [60], prolonged exposure, dialectical behavior therapy, and, most recently, parent-child interaction therapy. In addition to providing robust training from treatment developers and other expert purveyors of these clinical practices, DBHIDS has attempted to address implementation challenges at the practitioner, organizational, and system levels through the use of a number of implementation strategies [58]. 
For example, DBHIDS utilized multilevel implementation strategies including forming an Evidence-Based Practice and Innovations Center (EPIC) to guide the overall vision of EBP implementation and address challenges related to communication, coordination, and institutional learning in the context of multiple EBP initiatives; establishing an EBP Coordinator role within EPIC; paying for clinicians’ lost billable hours during training; funding ongoing supervision and consultation; providing CEUs for EBP initiatives; providing enhanced reimbursement rates for some EBPs; giving public recognition to organizations implementing EBPs; ensuring that care managers and other DBHIDS staff members were well informed about EBPs; ensuring EBPs were billable under Medicaid; engaging a wide range of stakeholders to build buy-in and inform implementation; and sponsoring public events that focused on reducing stigma and raising awareness of behavioral health concerns. It is beyond the scope of this paper to describe DBHIDS’ transformation effort in great detail; however, it is described elsewhere. For example, Powell et al. [58] provide a broad overview of the effort by describing the aforementioned strategies (and more) and linking them to the policy ecology model of implementation [61]. Wiltsey Stirman et al. [59] and Beidas et al. [60] provide detailed descriptions of two individual initiatives focusing on cognitive therapy and trauma-focused cognitive behavioral therapy, respectively. The present study involved child- and youth-serving agencies and clinicians that were implementing a wide range of interventions and therapeutic techniques [56, 62].

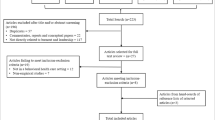

Agencies and participants

More than 100 community mental health agencies in Philadelphia provide outpatient services for children and youth (Cathy Bolton, PhD, written communication, January 3, 2013). Purposive sampling [63] was used to recruit the 29 largest child-serving agencies, which serve approximately 80% of children and youth receiving publicly funded mental health care. Of those 29 agencies, 18 (62%) agreed to participate and one additional agency that was involved with DBHIDS’ efforts to implement EBPs asked to participate, resulting in a final sample of 19 agencies (23 sites, 130 therapists, 36 supervisors, and 22 executive administrators). Due to their distinct leadership structures, locations, and staff, each site was treated as a distinct organization. The leader of each organization was invited to participate as the executive administrator. There were no exclusion criteria. Sixteen of the 23 participating organizations had participated in city-sponsored EBP initiatives, and those that did participate varied in terms of cumulative years of participation (see Table 2). We controlled for organizational participation in EBP initiatives in all analyses, which are described below. Additional studies focusing on the same sample of organizations have documented a myriad of barriers to implementing EBPs [15], including challenges related to turnover [40], a shifting workforce [64], and financial distress [65]. While all of these organizations operated within a broader system that was prioritizing and supporting the use of EBPs, variable participation in the initiatives and ample evidence that organizations were faced with challenges shared by most organizations in public service sectors minimize potential concerns about selection bias. However, we acknowledge that the baseline willingness to implement EBPs may be higher in this system given concerted efforts to prioritize and support EBP use.

Procedure

The institutional review boards in the City of Philadelphia and at the University of Pennsylvania approved all study procedures. The executive administrator at each of the 23 organizations was asked to participate in the study. They completed their questionnaires using REDCap, a secure Web-based application that supports online data collection [66]. For supervisors and therapists, we scheduled a one-time, 2-hour meeting at each organization, at which we provided lunch, obtained written informed consent, and completed data collection. Approximately 49% of therapists employed by the 23 organizations participated in the study. We collected data from March 1, 2013, through July 25, 2013. Participants received $50 for their participation.

Measures

Predictor variables

Participant characteristics

Demographics were assessed using the Therapist Background Questionnaire [67].

Organizational characteristics

Three organizational characteristics were measured: number of therapists, proportion of staff that were independent contractors, and the total number of years each organization participated in each of the city-sponsored EBP initiatives (e.g., an organization that participated in the dialectical behavior therapy initiative for 2 years and the trauma-focused cognitive behavioral therapy initiative for 3 years would be coded as participating for 5 years in EBP initiatives). Data regarding the number of therapists and proportion of staff that were independent contractors were collected from organizational leaders, and data regarding EBP initiative participation were obtained from Community Behavioral Health and DBHIDS leadership. All three of these characteristics can be considered proxies for the amount of financial and human capital that may be available for EBP efforts. Financial difficulties [65] have led many organizations to employ an increasing number of therapists as independent contractors, and these therapists have been shown to have poorer attitudes toward and less knowledge of EBPs [64]. Participation in EBP initiatives gives organizations increased access to direct financial supports, including the provision of training and consultation related to a specific EBP. Controlling for these characteristics minimizes concerns about selection bias and informs the broader generalizability of this study to other contexts that may not have formal EBP initiatives underway.
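The cumulative-years coding rule described above can be illustrated with a minimal sketch. The function name, initiative labels, and values below are hypothetical and chosen only to mirror the worked example in the text; they are not study data.

```python
def cumulative_ebp_years(years_by_initiative):
    """Sum years of participation across all initiatives for one organization."""
    return sum(years_by_initiative.values())


# Illustrative organization: 2 years in one initiative, 3 years in another.
example_org = {
    "dialectical_behavior_therapy": 2,
    "trauma_focused_cbt": 3,
}

print(cumulative_ebp_years(example_org))  # 5
```

An organization with no initiative participation would simply be coded as 0 under this rule.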

Organizational social context (organizational culture and climate)

Organizational culture and climate were measured from the perspectives of therapists, supervisors, and executive administrators using the Organizational Social Context Measurement System (OSC) [36]. The OSC is a 105-item measure of organizational culture, climate, and work attitudes (the latter of which was not used for the current study). Organizational culture is measured using three second-order factors: proficiency, rigidity, and resistance. Proficient cultures are those in which employees prioritize the well-being of clients and are expected to be competent and have up-to-date knowledge. Rigid cultures are those in which employees have little discretion or flexibility in conducting their work. Resistant cultures are characterized by employees who show little interest in new ways of providing services and suppress any change effort. Organizational climate is also measured using three second-order factors, including engagement, functionality, and stress. Engaged climates are characterized by employee perceptions that they can personally accomplish worthwhile tasks, remain personally involved in their work, and maintain concern for their clients. Functional climates are characterized by employee perceptions that they receive the support that they need from coworkers and administrators to do a good job and that they have a clear understanding of their role and how they can be successful within the organization. In stressful climates, employees feel emotionally exhausted. Organizational culture and climate are measured with T-scores, in which a score of 50 represents the mean and the standard deviation is 10. These T-scores are based upon a normed sample of 100 community mental health clinics in the USA [36]. The OSC has strong psychometric properties [68], and the measurement model has been confirmed in two nationwide samples [36, 69].
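For readers less familiar with T-scores, a score with a mean of 50 and a standard deviation of 10 is conventionally a linear rescaling of a raw score against a normative sample. The expression below states that standard conversion. The symbols are notation assumed here for illustration (x for an organization's raw scale score, and the subscripted mean and standard deviation for the normative sample), and the OSC's actual scoring procedure may involve steps not described in this paper.

```latex
T = 50 + 10 \cdot \frac{x - \mu_{\text{norm}}}{\sigma_{\text{norm}}}
```

Under this convention, a raw score one standard deviation above the normative mean corresponds to T = 60.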

Implementation climate

Implementation climate was measured from the perspective of therapists, supervisors, and executive administrators with the Implementation Climate Scale (ICS) [26]. The ICS is an 18-item scale that measures the climate for EBP implementation using six dimensions: focus on EBPs, educational support for EBPs, recognition for using EBPs, rewards for EBPs, selection of staff for EBPs, and selection of staff for openness. Each of the items is measured on a five-point Likert scale ranging from 0 (not at all) to 4 (very great extent), and each subscale represents the mean of the items within that dimension. There is strong support for the reliability and validity of the ICS, as well as the aggregation of the ICS and its subscales to the group level [26].

Transformational leadership

Transformational leadership was measured from the perspective of therapists who rated their direct supervisor using the Multifactor Leadership Questionnaire (MLQ 5X Short) [70]. The MLQ includes 45 items that measure transformational leadership, transactional leadership, and laissez-faire leadership. Given its association with innovation [47], we focused only on transformational leadership, which is measured using 20 items and four subscales of the MLQ: idealized influence (8 items), inspirational motivation (4 items), intellectual stimulation (4 items), and individualized consideration (4 items). Each item is measured on a continuum from 0 (not at all) to 4 (to a very great extent), with each subscale representing the mean of the items within that dimension. The MLQ possesses strong psychometric properties and has been widely used in a variety of fields [70].

Implementation leadership

Implementation leadership was measured from the perspective of therapists who rated their direct supervisor with the Implementation Leadership Scale (ILS) [27]. The ILS is a 12-item scale that measures leader behaviors relevant to implementation of EBPs with four subscales, including proactive, knowledgeable, supportive, and perseverant. Each item is measured on a continuum from 0 (not at all) to 4 (very great extent), with each subscale representing the mean of the items within that dimension. The ILS has demonstrated excellent internal consistency reliability as well as convergent and discriminant validity [27].

Dependent variables

Knowledge of EBPs

Therapist knowledge of EBPs was measured using the Knowledge of Evidence-Based Services Questionnaire (KEBSQ) [51], a 40-item self-report instrument. Knowledge is measured on a continuum from 0 to 160, with higher scores indicating more knowledge of evidence-based services for youth. Psychometric data suggest temporal stability, discriminative validity, and sensitivity to training [51].

Attitudes toward EBPs

Therapist attitudes toward EBPs were assessed using the Evidence-Based Practice Attitudes Scale (EBPAS) [53], a 15-item self-report measure that assesses four dimensions: appeal of EBPs, likelihood of adopting EBPs given requirements to do so, openness to new practices, and divergence between EBPs and usual practice. Each item is measured using a scale ranging from 0 (not at all) to 4 (very great extent), and each of the aforementioned subscales represents the average of the items within that factor. Previous studies have demonstrated that the EBPAS has good internal consistency [54] and validity [71]. Aarons et al. [72] assessed the psychometric properties of the EBPAS in a study of 1089 mental health service providers from a nationwide sample drawn from 100 organizations in 26 states in the USA. The study provided support for the second-order factor structure of the measure, provided further evidence of the reliability of its subscales and total scale, and yielded scale norms based upon a national sample [72].

Data analysis

Organizational scores are constructed by aggregating individual responses within the organization; however, this is only meaningful if there is sufficient agreement within the organizational unit. We used mean within-group correlation statistics to determine agreement [73, 74]. The OSC, ICS, and ILS all had levels of agreement above the recommended 0.60 level [74, 75]; thus, individual participant responses within organizations were averaged.
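The aggregation step can be sketched as follows. This section does not spell out the exact agreement formula, so the sketch assumes one commonly used index, the single-item r_wg, which compares the observed variance of ratings with the variance expected under a uniform (no-agreement) response distribution. The function names, the ratings, and the use of a single item are all illustrative assumptions and do not reproduce the study's computation.

```python
from statistics import mean, variance


def rwg(ratings, n_options):
    """Single-item r_wg: 1 - observed variance / uniform-distribution variance."""
    # Variance of a discrete uniform distribution over n_options response options.
    expected_var = (n_options ** 2 - 1) / 12
    return 1 - variance(ratings) / expected_var


def aggregate_if_agreed(ratings, n_options, threshold=0.60):
    """Return the organization-level mean only if agreement meets the threshold."""
    if rwg(ratings, n_options) >= threshold:
        return mean(ratings)
    return None


# Illustrative ratings from four respondents in one organization on a 0-4 item.
site_ratings = [3, 3, 4, 3]
print(rwg(site_ratings, n_options=5))
print(aggregate_if_agreed(site_ratings, n_options=5))
```

When respondents disagree sharply (for example, ratings split between 0 and 4), the index falls below the threshold and the sketch returns `None` rather than an organizational mean, reflecting the point that aggregation is only meaningful with sufficient within-unit agreement.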

Analyses were conducted using PROC MIXED in SAS statistical software, version 9.0 (SAS Institute, Inc.). We produced six linear mixed-effects regression models to determine the associations between the predictor variables (organizational characteristics, general and strategic organizational determinants) and dependent variables (knowledge of EBPs and attitudes toward EBPs). All models included random intercepts for organization to account for nesting of therapists within organizations and fixed effects for individual demographic and organizational factors. Demographic factors included age, sex, and years of clinical experience. Organizational characteristics included program size (number of therapists), ratio of independent contractor therapists, organizational culture (proficient, rigid, and resistant), organizational climate (engaged, functional, stressful), implementation climate (EBP focus, educational support, recognition, rewards, staff selection, openness), transformational leadership (idealized influence [attributed], idealized influence [behavior], inspirational motivation, intellectual stimulation, individualized consideration), and implementation leadership (proactive, knowledgeable, supportive, perseverant). The six dependent variables included knowledge of evidence-based services (KEBSQ) and attitudes toward EBPs (EBPAS; including scores for the four subscales [requirements, appeal, openness, and divergence] and the overall total).
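A random-intercept model of the kind described above can be written in general form as follows. The notation is assumed here for illustration and does not appear in the original analysis: i indexes therapists, j indexes organizations, the vector x holds therapist-level demographic covariates, and the vector z holds organization-level predictors.

```latex
y_{ij} = \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + \mathbf{z}_{j}^{\top}\boldsymbol{\gamma} + u_j + \varepsilon_{ij},
\qquad u_j \sim \mathcal{N}(0, \tau^2),
\qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

Here the organization-specific intercept u_j captures the nesting of therapists within organizations, and one such model would be fit for each of the six dependent variables.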

Results

Descriptive statistics

Demographic information for the participating therapists is detailed in Table 1. Of the 22 executive administrators, 11 (50%) were male; they identified as Asian (2 [9%]), Hispanic/Latino (3 [14%]), African American (4 [18%]), white (12 [55%]), multiracial (2 [9%]), or missing ethnicity/race (2 [9%]). Of the 36 supervisors, 25 (69%) were female; they identified as African American (6 [17%]), white (20 [56%]), Hispanic/Latino (5 [14%]), other (1 [3%]), or missing ethnicity/race (4 [11%]). Descriptive statistics (medians, interquartile ranges) for the organizational variables (i.e., cumulative years participating in EBP initiatives, program size [number of therapists], proportion of staff employed as independent contractors, OSC subscales, ICS subscales, transformational leadership subscales, and ILS subscales) are shown in Table 2.

Predictors of therapists’ knowledge of EBPs

Table 3 reports the results of the linear mixed-effects regression models of the association between organizational factors and knowledge of EBPs as measured by the KEBSQ. Five organizational variables were associated with knowledge of EBPs. General organizational factors that predicted knowledge included organizational culture, organizational climate, and transformational leadership. More proficient organizational cultures were associated with an increase in knowledge, whereas more resistant cultures were associated with a decrease in knowledge. More functional organizational climates were associated with a decrease in knowledge of EBPs. Transformational leadership (idealized influence [attributed]) was associated with increased knowledge of EBPs. The only strategic organizational factor that predicted knowledge was implementation climate: higher ratings on the Implementation Climate Scale subscale measuring financial rewards for EBPs were associated with decreased knowledge of EBPs.

Predictors of therapists’ attitudes toward evidence-based practices

Table 3 also reports the results of the linear mixed-effects regression models of the association between organizational factors and attitudes toward EBPs as measured by the EBPAS. General organizational factors that predicted attitudes toward EBPs included organizational culture, organizational climate, and transformational leadership. Organizational cultures characterized by higher rigidity ratings were associated with higher levels of appeal. Organizational climates that were more functional and stressful were associated with lower levels of appeal, and organizational climates that were more engaged were associated with higher total EBPAS scores. Transformational leadership also predicted attitudes toward EBPs. Inspirational motivation was associated with lower levels of perceived divergence. Individualized consideration was associated with lower levels of appeal and lower total EBPAS scores.

Both strategic organizational factors (implementation climate and implementation leadership) were predictive of attitudes toward EBPs. Implementation climates characterized by higher levels of educational support were positively associated with higher ratings for requirements, appeal, and total attitudes toward EBPs, and negatively associated with divergence. Implementation climates that provided more monetary or tangible rewards for EBPs were associated with higher ratings for divergence. Selection for openness (implementation climate) was positively associated with appeal of EBPs. Proactive implementation leadership was associated with higher levels of appeal and total attitudes toward EBPs. Knowledgeable implementation leadership was associated with lower levels of perceived divergence. Finally, perseverant implementation leadership was associated with lower ratings of attitudes toward EBPs (requirements, appeal, and total attitudes toward EBPs).

Discussion

This observational, cross-sectional study contributes to our understanding of how organizational factors are associated with clinician-level determinants of implementation in behavioral health [43, 48, 56, 76]. It confirms that both general and strategic organizational factors are associated with knowledge and attitudes toward EBPs. Indeed, at least one of the scales from each of the general (organizational culture, organizational climate, transformational leadership) and strategic (implementation climate, implementation leadership) constructs was associated with knowledge of or attitudes toward EBPs in the hypothesized direction.

Associations between general organizational factors and knowledge and attitudes

More proficient organizational cultures were associated with higher knowledge of EBPs, which is consistent with the literature that suggests proficient cultures are characterized by having up-to-date knowledge of best practices [36, 37]. Conversely, resistant organizational cultures were associated with less knowledge of EBPs. This relationship is intuitive, as therapists working in resistant cultures are expected to show little interest in new ways of working and to suppress any change effort; thus, they are unlikely to seek and obtain new knowledge related to EBPs [36].

Organizational climates that were more engaged were associated with higher overall attitudes toward EBPs. Engaged climates are characterized by therapists’ perceptions that they are able to personally accomplish worthwhile things, remain personally involved in their work, and maintain concern for their clients [36]. Thus, organizational climates that are more engaged may provide fertile ground for therapists to orient toward personal growth and to nurture more positive attitudes toward EBPs. Organizational climates that were characterized as stressful were associated with lower ratings for appeal of EBPs. This is not surprising, as stressful climates are characterized by employees who feel exhausted, overworked, and unable to get necessary tasks completed [36]. It follows that they would find the prospect of learning about or using EBPs less appealing. More functional organizational climates were associated with lower knowledge of EBPs and lower appeal of EBPs. While this may seem counterintuitive, there does not seem to be as direct a theoretical relationship between functional climates (i.e., those in which therapists receive the support and cooperation that they need to do a good job [36]) and attitudes toward EBPs as there is for other constructs, such as proficient cultures. Moreover, if therapists in more functional climates believe that they already have what they need to provide quality services and that the organization is functioning well without EBPs, they may not feel motivated to pursue EBPs.

Transformational leadership was associated with both knowledge of and attitudes toward EBPs: idealized influence was associated with higher levels of knowledge of EBPs, and inspirational motivation was associated with less perceived divergence between EBPs and usual practice. Transformational leaders inspire others and provide them with a vision for what can be accomplished through extra personal effort; they also encourage development and changes in mission, which may lead therapists to have greater willingness to expend extra effort to learn new EBPs and push themselves to achieve more professionally [70]. Transformational leadership (individual consideration) was also associated with lower appeal and poorer overall attitudes toward EBPs. One potential reason for this is that leaders who emphasize individual consideration may be more focused on the individual developmental needs of their therapists, some of which may involve more specific areas of development (e.g., diagnostic ability, common factors of psychosocial treatment that may promote recovery [77]) that may detract from a focus on EBPs.

Associations between strategic organizational factors and knowledge and attitudes

Implementation climates that provided more educational support were associated with more positive attitudes toward EBPs, including higher ratings for being likely to adopt EBPs if they were required, higher ratings for appeal, lower levels of perceived divergence between EBPs and usual practice, and more positive overall attitudes toward EBPs. The educational support dimension of the ICS includes items about support for conferences, workshops, seminars, EBP trainings, training materials, and journals [26]. Enabling therapists to access these types of resources may give them opportunities to learn about EBPs and other effective practices and may also allow them to more clearly see the ways in which EBPs are similar to their preferred ways of working therapeutically. Implementation climates that were characterized by selecting staff who were more flexible, adaptable, and open to new interventions were associated with higher levels of the EBPAS subscale focusing on openness to EBPs. Implementation climates that fostered more financial or tangible rewards for EBPs were associated with less knowledge of EBPs and perceptions of higher levels of divergence between EBPs and routine practice. It is important to note that rewards for EBPs were rated extremely low in this sample of therapists and agencies (i.e., very limited range); however, it may be that providing financial reward for EBPs was more prevalent when knowledge of EBPs was low and that it could reinforce notions that they are highly divergent from therapists’ usual practices. It is also possible that these tangible rewards may undermine therapists’ intrinsic motivation to learn about EBPs [78].

Finally, more proactive implementation leadership was associated with greater appeal and more positive total EBPAS scores, and implementation leadership characterized as more knowledgeable was associated with less perceived divergence between EBPs and routine practice. Leaders who are proactive, have up-to-date knowledge of EBPs, and are able to answer staff questions about them are likely to encourage therapists to view EBPs as a potentially feasible complement to their practice and to dissuade them from thinking that EBPs are irrelevant or invalid treatment options. Perseverant implementation leadership was associated with lower willingness to adopt EBPs if required, less appeal of EBPs, and poorer overall attitudes toward EBPs. While it is difficult to interpret this finding, it may be that some leaders are more perseverant as an adaptive response to working in agencies composed of therapists with poorer attitudes toward EBPs. It is also possible that therapists interpret leaders’ perseverance negatively: they may perceive that leaders are being too dogmatic in attempting to implement EBPs in the face of all of the challenges associated with EBP implementation in large publicly funded behavioral health systems, and that leaders may not be listening to or validating their concerns [15].

Limitations

These findings should be viewed in light of several limitations. First, while we obtained a relatively high response rate (~50% of therapists employed at the 23 sites), we did not have 100% participation, and it is possible that nonparticipants’ views of their practice context differed from participants’ views. Second, the cross-sectional data preclude our ability to make causal statements about how the organizational context influences attitudes toward and knowledge of EBPs over time. Third, the organizations and therapists participating in this study were implementing a range of treatments, not all of which would be considered evidence-based. Variation in exposure to EBPs may have impacted their attitudes toward and knowledge of EBPs; however, the number of years the organizations participated in EBP initiatives was not predictive of either attitudes toward or knowledge of EBPs. Fourth, given the observational nature of this study and the inherent variation in the types of treatments implemented in community settings, we were unable to obtain objective ratings of fidelity. The variation in treatments implemented also precluded our ability to use innovation-specific versions of the ICS or the EBPAS, which may have greater predictive validity [24, 44].

Future directions

This study suggests several important directions for the field. Improving our understanding of context will require continued efforts to develop and refine pragmatic measures of implementation determinants that would be relevant to behavioral health and health-care settings [29, 79]. Some of this work is underway. Lewis and colleagues [30] are working to develop criteria for pragmatic measures in implementation science, and there are an increasing number of measures in behavioral health and implementation research that are free, brief, and validated [25, 26, 27, 80, 81]. Efforts to document the predictive validity of measures are particularly needed [29, 30]. The mounting evidence of the impact of organizational-level factors on implementation outcomes suggests a need for interventions that more explicitly target the organizational context. Opportunities to develop, refine, and test organizational-level implementation strategies abound [82, 83], as a recent Cochrane review of interventions to improve organizational culture in health care returned no studies that met the inclusion criteria [84]. In behavioral health, interventions such as the Availability, Responsiveness, and Continuity (ARC) [42, 85, 86] and Leadership and Organizational Change (LOCI) [49] interventions demonstrate the utility of implementation strategies that target organizational factors, and serve as exemplars for the development of interventions that will allow organizations and systems to adaptively respond to the implementation challenges that emerge across different innovations and phases of implementation. These implementation strategies should address both factors associated with general organizational contexts (e.g., organizational culture, organizational climate, transformational leadership) and factors associated with strategic organizational contexts (e.g., implementation climate, implementation leadership), exploring their potential roles as mediators and moderators of implementation effectiveness [87]. The use of mixed methods will complement those efforts, adding nuance to our understanding of when and how contextual factors influence proximal and distal outcomes related to the implementation of effective practices (e.g., [88, 89, 90]). Further, system science methods might be leveraged to better understand the dynamic complexity of contextual determinants [91, 92].

Conclusions

This study provides further evidence that both general and strategic organizational factors are important determinants to consider. While both general and strategic factors predicted knowledge of and attitudes toward EBPs, it is possible that the strategic organizational factors could be more feasible targets for change, as it may be somewhat easier to promote the improvement in implementation climate and leadership as compared to changing more durable general organizational determinants such as organizational culture and climate, which are expensive and time-consuming to address [43]. However, it is also possible that developing solid general organizational context and leadership capacity is a necessary precursor to the formation of effective implementation climate and leadership. We suggest that each of these organizational determinants may be relevant to a wide range of implementation studies and merit further research to determine how these constructs (and their associated subscales) influence implementation across a diverse array of interventions and settings.

Abbreviations

- ARC: Availability, Responsiveness, and Continuity
- DBHIDS: Philadelphia’s Department of Behavioral Health and Intellectual disAbility Services
- EPIC: Evidence-Based Practice and Innovations Center
- EBPAS: Evidence-Based Practice Attitudes Scale
- EBPs: Evidence-based practices
- ICS: Implementation Climate Scale
- ILS: Implementation Leadership Scale
- KEBSQ: Knowledge of Evidence-Based Services Questionnaire
- LOCI: Leadership and Organizational Change Intervention
- OSC: Organizational Social Context Measurement System

References

The California Evidence-Based Clearinghouse for Child Welfare. The California Evidence-Based Clearinghouse for Child Welfare [Internet]. 2014 [cited 2014 Apr 27]. Available from: http://www.cebc4cw.org/

Substance Abuse and Mental Health Services Administration. NREPP: SAMHSA’s national registry of evidence-based programs and practices [Internet]. 2012 [cited 2012 Apr 18]. Available from: http://www.nrepp.samhsa.gov/

Kohl PL, Schurer J, Bellamy JL. The state of parent training: program offerings and empirical support. Fam Soc. 2009;90:247–54.

Raghavan R, Inoue M, Ettner SL, Hamilton BH. A preliminary analysis of the receipt of mental health services consistent with national standards among children in the child welfare system. Am J Public Health. 2010;100:742–9.

Zima BT, Hurlburt MS, Knapp P, Ladd H, Tang L, Duan N, et al. Quality of publicly-funded outpatient specialty mental health care for common childhood psychiatric disorders in California. J Am Acad Child Adolesc Psychiatry. 2005;44:130–44.

Institute of Medicine. Preventing mental, emotional, and behavioral disorders among young people: progress and possibilities. Washington, DC: National Academies Press; 2009.

Institute of Medicine. Psychosocial interventions for mental and substance use disorders: a framework for establishing evidence-based standards. Washington, DC: National Academies Press; 2015.

Addis ME, Wade WA, Hatgis C. Barriers to dissemination of evidence-based practices: addressing practitioners’ concerns about manual-based psychotherapies. Clin Psychol Sci Pract. 1999;6:430–41.

Raghavan R. Administrative barriers to the adoption of high-quality mental health services for children in foster care: a national study. Adm Policy Ment Health Ment Health Serv Res. 2007;34:191–201.

Cook JM, Biyanova T, Coyne JC. Barriers to adoption of new treatments: an internet study of practicing community psychotherapists. Adm Policy Ment Health Ment Health Serv Res. 2009;36:83–90.

Forsner T, Hansson J, Brommels M, Wistedt AA, Forsell Y. Implementing clinical guidelines in psychiatry: a qualitative study of perceived facilitators and barriers. BMC Psychiatry. 2010;10:1–10.

Rapp CA, Etzel-Wise D, Marty D, Coffman M, Carlson L, Asher D, et al. Barriers to evidence-based practice implementation: results of a qualitative study. Community Ment Health J. 2010;46:112–8.

Stein BD, Celedonia KL, Kogan JN, Swartz HA, Frank E. Facilitators and barriers associated with implementation of evidence-based psychotherapy in community settings. Psychiatr Serv. 2013;64:1263–6.

Ringle VA, Read KL, Edmunds JM, Brodman DM, Kendall PC, Barg F, et al. Barriers and facilitators in the implementation of cognitive-behavioral therapy for youth anxiety in the community. Psychiatr Serv. 2015;66(9):938–45.

Beidas RS, Stewart RE, Adams DR, Fernandez T, Lustbader S, Powell BJ, et al. A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system. Adm Policy Ment Health Ment Health Serv Res. 2016;43:893–908.

Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43:337–50.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:1–15.

Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health Ment Health Serv Res. 2011;38:4–23.

Flottorp SA, Oxman AD, Krause J, Musila NR, Wensing M, Godycki-Cwirko M, et al. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci. 2013;8:1–11.

Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69:123–57.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:1–14.

Ferlie E. Organizational interventions. In: Straus S, Tetroe J, Graham ID, editors. Knowledge translation in health care: moving from evidence to practice. Oxford, UK: Wiley-Blackwell; 2009. p. 144–50.

Aarons GA, Horowitz JD, Dlugosz LR, Ehrhart MG. The role of organizational processes in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health: translating science to practice. New York: Oxford University Press; 2012. p. 128–53.

Weiner BJ, Belden CM, Bergmire DM, Johnston M. The meaning and measurement of implementation climate. Implement Sci. 2011;6:1–12.

Jacobs SR, Weiner BJ, Bunger AC. Context matters: measuring implementation climate among individuals and groups. Implement Sci. 2014;9:1–14.

Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the Implementation Climate Scale (ICS). Implement Sci. 2014;9:1–11.

Aarons GA, Ehrhart MG, Farahnak LR. The Implementation Leadership Scale (ILS): development of a brief measure of unit level implementation leadership. Implement Sci. 2014;9:1–10.

Ehrhart MG, Aarons GA, Farahnak LR. Going above and beyond for implementation: the development and validity testing of the Implementation Citizenship Behavior Scale (ICBS). Implement Sci. 2015;10:1–9.

Martinez RG, Lewis CC, Weiner BJ. Instrumentation issues in implementation science. Implement Sci. 2014;9:1–9.

Lewis CC, Weiner BJ, Stanick C, Fischer SM. Advancing implementation science through measure development and evaluation: a study protocol. Implement Sci. 2015;10:1–10.

Eccles MP, Armstrong D, Baker R, Cleary K, Davies H, Davies S, et al. An implementation research agenda. Implement Sci. 2009;4:1–7.

Newman K, Van Eerd D, Powell BJ, Urquhart R, Cornelissen E, Chan V, et al. Identifying priorities in knowledge translation from the perspective of trainees: results from an online survey. Implement Sci. 2015;10:1–4.

National Institutes of Health. Dissemination and implementation research in health (R01) [Internet]. Bethesda, Maryland: National Institutes of Health; 2016. Available from: http://grants.nih.gov/grants/guide/pa-files/PAR-16-238.html

Wensing M, Oxman A, Baker R, Godycki-Cwirko M, Flottorp S, Szecsenyi J, et al. Tailored implementation for chronic diseases (TICD): a project protocol. Implement Sci. 2011;6:1–8.

Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2017;44:177–94.

Glisson C, Landsverk J, Schoenwald S, Kelleher K, Hoagwood KE, Mayberg S, et al. Assessing the organizational social context (OSC) of mental health services: implications for research and practice. Adm Policy Ment Health Ment Health Serv Res. 2008;35:98–113.

Aarons GA, Glisson C, Green PD, Hoagwood K, Kelleher KJ, Landsverk JA, et al. The organizational social context of mental health services and clinician attitudes toward evidence-based practice: a United States national study. Implement Sci. 2012;7:1–15.

Glisson C, James LR. The cross-level effects of culture and climate in human service teams. J Organ Behav. 2002;23:767–94.

Glisson C, Schoenwald SK, Kelleher K, Landsverk J, Hoagwood KE, Mayberg S, et al. Therapist turnover and new program sustainability in mental health clinics as a function of organizational culture, climate, and service structure. Adm Policy Ment Health Ment Health Serv Res. 2008;35:124–33.

Beidas RS, Marcus S, Benjamin Wolk C, Powell BJ, Aarons GA, Evans AC, et al. A prospective examination of clinician and supervisor turnover within the context of implementation of evidence-based practices in a publicly-funded mental health system. Adm Policy Ment Health Ment Health Serv Res. 2016;43:640–9.

Glisson C, Hemmelgarn A. The effects of organizational climate and interorganizational coordination on the quality and outcomes of children’s service systems. Child Abuse Negl. 1998;22:401–21.

Glisson C, Hemmelgarn A, Green P, Williams NJ. Randomized trial of the Availability, Responsiveness and Continuity (ARC) organizational intervention for improving youth outcomes in community mental health programs. J Am Acad Child Adolesc Psychiatry. 2013;52:493–500.

Glisson C, Williams NJ. Assessing and changing organizational social contexts for effective mental health services. Annu Rev Public Health. 2015;36:507–23.

Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manage Rev. 1996;21:1055–80.

Avolio BJ, Bass BM, Jung DI. Re-examining the components of transformational and transactional leadership using the Multifactor Leadership Questionnaire. J Occup Organ Psychol. 1999;72:441–62.

Corrigan PW, Lickey SE, Campion J, Rashid F. Mental health team leadership and consumers’ satisfaction and quality of life. Psychiatr Serv. 2000;51:781–5.

Aarons GA, Sommerfeld DH. Leadership, innovation climate, and attitudes toward evidence-based practice during a statewide implementation. J Am Acad Child Adolesc Psychiatry. 2012;51:423–31.

Aarons GA, Ehrhart MG, Farahnak LR. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annu Rev Public Health. 2014;35:255–74.

Aarons GA, Ehrhart MG, Farahnak LR, Hurlburt MS. Leadership and Organizational Change for Implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implement Sci. 2015;10:1–12.

Michie S, Johnston M, Abraham C, Lawton R, Parker D, Walker A. Making psychological theory useful for implementing evidence based practice: a consensus approach. Qual Saf Health Care. 2005;14:26–33.

Stumpf RE, Higa-McMillan CK, Chorpita BF. Implementation of evidence-based services for youth: assessing provider knowledge. Behav Modif. 2009;33:48–65.

Aarons GA, Cafri G, Lugo L, Sawitzky A. Expanding the domains of attitudes towards evidence-based practice: the Evidence Based Attitudes Scale-50. Adm Policy Ment Health Ment Health Serv Res. 2012;39:331–40.

Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: the Evidence-Based Practice Attitude Scale (EBPAS). Ment Health Serv Res. 2004;6:61–74.

Aarons GA, Sawitzky AC. Organizational culture and climate and mental health provider attitudes toward evidence-based practice. Psychol Serv. 2006;3:61–72.

Aarons GA, Sommerfeld DH, Walrath-Greene CM. Evidence-based practice implementation: the impact of public versus private sector organizational type on organizational support, provider attitudes, and adoption of evidence-based practice. Implement Sci. 2009;4.

Beidas RS, Marcus S, Aarons GA, Hoagwood KE, Schoenwald S, Evans AC, et al. Predictors of community therapists’ use of therapy techniques in a large public mental health system. JAMA Pediatr. 2015;169:374–82.

Department of Behavioral Health and Intellectual disAbility Services. Community Behavioral Health [Internet]. 2015 [cited 2015 Aug 13]. Available from: http://www.dbhids.org/cbh/.

Powell BJ, Beidas RS, Rubin RM, Stewart RE, Benjamin Wolk C, Matlin SL, et al. Applying the policy ecology framework to Philadelphia’s behavioral health transformation efforts. Adm Policy Ment Health Ment Health Serv Res. 2016;43:909–26.

Wiltsey Stirman S, Buchhofer R, McLaulin JB, Evans AC, Beck AT. The Beck Initiative: a partnership to implement cognitive therapy in a community behavioral health system. Psychiatr Serv. 2009;60:1302–4.

Beidas RS, Adams DR, Kratz HE, Jackson K, Berkowitz S, Zinny A, et al. Lessons learned while building a trauma-informed public behavioral health system in the City of Philadelphia. Eval Program Plann. 2016;59:21–32.

Raghavan R, Bright CL, Shadoin AL. Toward a policy ecology of implementation of evidence-based practices in public mental health settings. Implement Sci. 2008;3:1–9.

Wolk CB, Marcus SC, Weersing VR, Hawley KM, Evans AC, Hurford MO, et al. Therapist- and client-level predictors of use of therapy techniques during implementation in a large public mental health system. Psychiatr Serv. 2016;67:551–7.

Palinkas LA, Horwitz SM, Green CA, Wisdom JP, Duan N, Hoagwood K. Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Adm Policy Ment Health Ment Health Serv Res. 2013;42:533–44.

Beidas RS, Stewart RE, Wolk CB, Adams DR, Marcus SC, Evans AC, et al. Independent contractors in public mental health clinics: implications for use of evidence-based practices. Psychiatr Serv. 2016;67:710–7.

Stewart RE, Adams D, Mandell D, Hadley T, Evans A, Rubin R, et al. The perfect storm: collision of the business of mental health and the implementation of evidence-based practices. Psychiatr Serv. 2016;67:159–61.

Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–81.

Weisz JR. Therapist Background Questionnaire. Los Angeles, CA: University of California; 1997.

Glisson C, Williams NJ, Green P, Hemmelgarn A, Hoagwood K. The organizational social context of mental health Medicaid waiver programs with family support services: implications for research and practice. Adm Policy Ment Health Ment Health Serv Res. 2014;41:32–42.

Glisson C, Green P, Williams NJ. Assessing the organizational social context (OSC) of child welfare systems: implications for research and practice. Child Abuse Negl. 2012;36:621–32.

Avolio BJ, Bass BM. Multifactor Leadership Questionnaire: manual and sample set. 3rd ed. Menlo Park, California: Mind Garden, Inc.; 2004.

Aarons GA. Measuring provider attitudes toward evidence-based practice: consideration of organizational context and individual differences. Child Adolesc Psychiatr Clin N Am. 2005;14:255–71.

Aarons GA, Glisson C, Hoagwood K, Kelleher K, Landsverk J, Cafri G. Psychometric properties and U.S. national norms of the Evidence-Based Practice Attitude Scale (EBPAS). Psychol Assess. 2010;22:356–65.

James LR, Demaree RG, Wolf G. Estimating within-group interrater reliability with and without response bias. J Appl Psychol. 1984;69:85–98.

Brown RD, Hauenstein NMA. rwg: an assessment of within-group interrater agreement. Organ Res Methods. 1993;78:306–9.

Bliese P. Within-group agreement, non-independence, and reliability: implications for data aggregation and analysis. In: Klein K, Kozlowski S, editors. Multilevel theory, research, and methods in organizations: foundations, extensions, and new directions. San Francisco, CA: Jossey-Bass, Inc; 2000. p. 349–80.

Powell BJ, Beidas RS. Advancing implementation research and practice in behavioral health systems: introduction to the special issue. Adm Policy Ment Health Ment Health Serv Res. 2016;43(6):825–33.

Davidson L, Chan KKS. Common factors: evidence-based practice and recovery. Psychiatr Serv. 2014;65(5):675–77.

Deci EL, Koestner R, Ryan RM. A meta-analytic review of experiments examining the effects of extrinsic rewards on intrinsic motivation. Psychol Bull. 1999;125:627–68.

Proctor EK, Powell BJ, Feely M. Measurement in dissemination and implementation science. In: Beidas RS, Kendall PC, editors. Dissemination and implementation of evidence-based practices in child and adolescent mental health. New York: Oxford University Press; 2014. p. 22–43.

Beidas RS, Stewart RE, Walsh L, Lucas S, Downey MM, Jackson K, et al. Free, brief, and validated: standardized instruments for low-resource mental health settings. Cogn Behav Pract. 2015;22(1):5–19.

Shea CM, Jacobs SR, Esserman DA, Bruce K, Weiner BJ. Organizational readiness for implementing change: a psychometric assessment of a new measure. Implement Sci. 2014;9:1–15.

Novins DK, Green AE, Legha RK, Aarons GA. Dissemination and implementation of evidence-based practices for child and adolescent mental health: a systematic review. J Am Acad Child Adolesc Psychiatry. 2013;52:1009–25.

Powell BJ, Proctor EK, Glass JE. A systematic review of strategies for implementing empirically supported mental health interventions. Res Soc Work Pract. 2014;24:192–212.

Parmelli E, Flodgren G, Schaafsma ME, Baillie N, Beyer FR, Eccles MP. The effectiveness of strategies to change organizational culture to improve healthcare performance. Cochrane Database Syst Rev. 2011;1.

Glisson C, Schoenwald S, Hemmelgarn A, Green P, Dukes D, Armstrong KS, et al. Randomized trial of MST and ARC in a two-level evidence-based treatment implementation strategy. J Consult Clin Psychol. 2010;78:537–50.

Glisson C, Hemmelgarn A, Green P, Dukes D, Atkinson S, Williams NJ. Randomized trial of the availability, responsiveness, and continuity (ARC) organizational intervention with community-based mental health programs and clinicians serving youth. J Am Acad Child Adolesc Psychiatry. 2012;51:780–7.

Williams NJ. Multilevel mechanisms of implementation strategies in mental health: integrating theory, research, and practice. Adm Policy Ment Health Ment Health Serv Res. 2016;43:783–98.

Beidas RS, Wolk CB, Walsh LM, Evans AC, Hurford MO, Barg FK. A complementary marriage of perspectives: understanding organizational social context using mixed methods. Implement Sci. 2014;9:1–15.

Aarons GA, Fettes DL, Sommerfeld DH, Palinkas LA. Mixed methods for implementation research: application to evidence-based practice implementation and staff turnover in community-based organizations providing child welfare services. Child Maltreat. 2012;17:67–79.

Powell BJ, Proctor EK, Glisson CA, Kohl PL, Raghavan R, Brownson RC, et al. A mixed methods multiple case study of implementation as usual in children’s social service organizations: study protocol. Implement Sci. 2013;8:1–12.

Burke JG, Lich KH, Neal JW, Meissner HI, Yonas M, Mabry PL. Enhancing dissemination and implementation research using systems science methods. Int J Behav Med. 2015;22:283–91.

Holmes BJ, Finegood DT, Riley BL, Best A. Systems thinking in dissemination and implementation research. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health: translating science to practice. New York: Oxford University Press; 2012. p. 175–91.

Acknowledgements

We thank Lucia Walsh and Danielle Adams for their assistance with data collection. We would also like to thank the agencies, administrators, supervisors, and therapists that participated in this study. We thank the staff and leadership of Philadelphia’s Department of Behavioral Health and Intellectual disAbility Services for their assistance with this work. Finally, we thank Gregory Aarons, Ph.D., Frances Barg, Ms.Ed., Ph.D., Kimberly Hoagwood, Ph.D., and Sonja Schoenwald, Ph.D., for their role as mentors to RSB.

Funding

This work is supported in part by the National Institute of Mental Health through contracts and grants to BJP (L30 MH108060), RSB (K23 MH099179), and DSM (R01 MH106175; R21 MH106887). Additionally, the preparation of this article was supported in part by the Implementation Research Institute (IRI) at the Brown School, Washington University in St. Louis, through an award from the National Institute of Mental Health (R25 MH080916). BJP is a current IRI fellow, and RSB is a past IRI fellow.

Availability of data and materials

Data and materials will be made available upon request.

Authors’ contributions

BJP and RSB conceptualized this study, analyzed the data, and drafted the manuscript. RSB conceptualized the parent study, obtained its funding, and collected its data. All authors critically reviewed, edited, and approved the final manuscript.

Authors’ information

When this article was written, ACE was the Director of Philadelphia’s Department of Behavioral Health and Intellectual disAbility Services. He is now the CEO of the American Psychological Association.

Competing interests

BJP is on the Editorial Board of Implementation Science but was not involved in any editorial decisions for this manuscript. The authors declare that they have no other competing interests.

Consent for publication

Not applicable

Ethics approval and consent to participate

The institutional review boards in the City of Philadelphia and at the University of Pennsylvania approved all study procedures. Written informed consent was obtained for all study procedures.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Powell, B.J., Mandell, D.S., Hadley, T.R. et al. Are general and strategic measures of organizational context and leadership associated with knowledge and attitudes toward evidence-based practices in public behavioral health settings? A cross-sectional observational study. Implementation Sci 12, 64 (2017). https://doi.org/10.1186/s13012-017-0593-9

DOI: https://doi.org/10.1186/s13012-017-0593-9