Abstract

Background

The lens of complexity theory is widely advocated to improve health care delivery. However, empirical evidence that this lens has been useful in designing health care remains elusive. This review assesses whether it is possible to reliably capture evidence for efficacy in results or process within interventions that were informed by complexity science and closely related conceptual frameworks.

Methods

Systematic searches of scientific and grey literature were undertaken in late 2015/early 2016. Titles and abstracts were screened for interventions that (A) were delivered by health services, (B) explicitly stated that complexity science provided theoretical underpinning and (C) reported specific outcomes. Outcomes had to relate to changes in actual practice, service delivery or patient clinical indicators. Data extraction and detailed analysis were undertaken for studies in three developed countries: Canada, UK and USA. Data were extracted for intervention format, barriers encountered and quality aspects (thoroughness or possible biases) of evaluation and reporting.

Results

From 5067 initial finds in scientific literature and 171 items in grey literature, 22 interventions described in 29 articles were selected. Most interventions relied on facilitating collaboration to find solutions to specific or general problems. Many outcomes were very positive. However, some outcomes were measured only subjectively; one intervention was designed with complexity theory in mind but did not reiterate this in subsequent evaluation; and other interventions were credited as compatible with complexity science but reported no relevant theoretical underpinning. Articles often omitted discussion of implementation barriers or unintended consequences, which suggests that complexity theory was not widely used in evaluation.

Conclusions

It is hard to establish cause and effect when attempting to leverage complex adaptive systems and perhaps even harder to reliably find evidence that confirms whether complexity-informed interventions are usually effective. While it is possible to show that interventions that are compatible with complexity science seem efficacious, it remains difficult to show that explicit planning with complexity in mind was particularly valuable. Recommendations are made to improve future evaluation reports, to establish a better evidence base about whether this conceptual framework is useful in intervention design and implementation.

Background

Many influential articles of the last 20 years promoted using the lens of complexity science to improve health care delivery [1–8]. Health care and health care delivery are said to be increasingly complex [4], with outcomes that are often unpredictable and can even be paradoxical. As a result, possible solutions to some public health issues are not well-suited to the otherwise gold standard test method for intervention research (randomized controlled trials) [2, 3, 8], due to the sheer complexity of inputs as well as diverse interpretations or relative importance of many inputs and outputs. Inconsistent inputs, ever-changing active agents and institutions, unforeseen relationships and consequences are common aspects of real-life public health problems. Nonetheless, recognizing the inherent properties of complex systems may suggest opportunities to design more effective health care delivery [1, 4, 7].

In this paper, we use “complexity science” as an umbrella term for a number of closely related concepts: complex systems, complexity theory, complex adaptive systems, systemic thinking, systems approach and closely related phrases. All these concepts may be useful for working within systems with these features (among others):

- Large number of elements, known and unknown.

- Rich, possibly nested or looping, and certainly overlapping networks, often with poorly understood relationships between elements or networks.

- Non-linearity, cause and effect are hard to follow; unintended consequences are normal.

- Emergence and/or self-organization: unplanned patterns or structures that arise from processes within or between elements. Not deliberate, yet tend to be self-perpetuating.

- A tendency to easily tip towards chaos and cascading sequences of events.

- Leverage points, where system outcomes can be most influenced, but never controlled.

The merits of a perspective informed by complexity science (CS) in public health appear to be strongly supported by its pragmatic acceptance of the nature of real-life situations. Such a perspective is also a useful counter-balance to the weaknesses in reductionist perspectives and to potentially over-optimistic reliance on evidence-based medicine [1, 2, 4]. Much previous applied research used CS ideas to undertake process evaluation (without requiring that CS principles were part of intervention design), usually leading to recommendations for future improvements in implementation [9, 10]. However, subsequent impacts after implementing recommendations derived from using the lens of complexity theory are not widely published. Even if such follow-up impacts were available, it can be argued that the results would still not be conclusive: inherently, cause and effect are hard to show in complex systems [11]. Complexity science is thus stuck in an ironic paradox of appearing very useful for evaluation but with uncertain value if applied to inform implementation. It is argued that lack of empirical evidence discourages widespread adoption of complexity-informed research design [12, 13].

Previous reviews looked for efficacy of the CS strategy in intervention design or implementation by defining the key CS principles to follow in order to find opportunities for positive change (leverage). Intervention programmes were scored for how well they seemed to follow these key principles, leading to some comments on final efficacy [14–17]. Other discussions concentrated on showing the impact of complexity-informed interventions at intermediate points in the possible transition to change, evidencing influence on the likely causal pathway without attempting to assess final impacts [8, 18]. These previous articles did not require that selected studies include phrases such as “complexity science” (or closely related terms). It was tacitly understood that many interventions which utilize ideas that are compatible with CS are not described as such. Among the few reviews that explicitly looked for any conceptual framework in intervention designs, Adam et al. [9] reviewed studies in low- and middle-income countries. Applying customized evaluation criteria, Adam et al. found that theoretical underpinning was often not described, and CS especially was often missing from design as well as process evaluation.

Our scoping review directly addresses the feasibility of and difficulties in finding elusive empirical evidence in complexity-informed interventions: is it possible to show that interventions purposefully designed with complexity concepts are effective? We then develop recommendations for both those seeking such evidence and those planning intervention research using a complexity-informed conceptual framework.

Hence, in this paper, we looked for cases where authors explicitly state that CS informed intervention design, and we also noted what evaluation metrics were used. However, interventions that utilized CS only as part of evaluation (and not in design) were excluded. In so doing, we attempt to explore some of the difficulties in finding, reporting on and evaluating interventions that have a design informed by CS. The approach is that of a scoping review because the core objective is not thorough assessment of correct application of complexity theory or rigorous assessment of final impacts (as in a systematic review [19]), but rather the viability of trying to find out whether theory has been used purposefully, whether reporting is useful to others, and how feasible it is to find evidence to demonstrate that CS-informed interventions lead to benefits.

Methods

In late December 2015, a literature search was undertaken for relatively modern articles (published after 1994) using Scopus and Ovid bibliographic databases. Review documents were hand-searched, and supplemental searches performed on specific websites. A grey literature search was undertaken using English language search phrases. All search strategies are described in Additional file 1. Titles and abstracts were screened (single reviewer) for eligibility, and exclusion criteria were applied as below.

Exclusions

Conference abstracts, editorials and poster presentations were excluded. Reports on education or professional development programmes were also excluded, unless they described changes in service delivery measures or patient outcomes (see “Eligibility” section). When a pilot study with limited evaluation or protocol was found that described a CS-informed intervention, follow-up evaluation reports were sought and considered for eligibility. Review papers were excluded but also hand-searched for eligible studies not previously found. There were no initial exclusions for country or language, but data extraction and detailed analysis (see below) were only undertaken on studies in the most common countries: UK, Canada and USA. This restriction was made in order to consider only relatively similar cultural contexts and health systems together, and because a similar and detailed evaluation of complexity-informed interventions was recently undertaken for interventions in low- and middle-income countries [9].

Eligibility

Interventions were only included where CS was explicitly stated as having influenced the intervention design (including implementation and delivery, but not solely in evaluation). Evaluation of impacts must also be present after implementation. A CS-informed design was indicated by the use of one of the following phrases or very similar wording, in the abstract or in the description of intervention design or theory underpinning intervention design:

Complex systems, complexity theory, complex adaptive systems, systemic thinking and systems approach

Several phrases that are often linked to complexity science were not by themselves adequate to confirm eligibility. These include the phrases (each citation indicates distinctions from applications using complexity science) “systems change” [20], “complex interventions” [6], “systems science” [21] and “quality improvement” [22]. Instead, CS had to be explicitly cited using one of the search phrases as part of the conceptual framework for intervention design.
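The phrase-based part of this eligibility test can be illustrated with a minimal Python sketch. It is not the procedure the reviewers used (screening was done by a human reader, who also accepted "very similar wording"); the function names and the exact-substring matching rule are assumptions made only for illustration.

```python
# Illustrative sketch only: exact, case-insensitive phrase matching.
# The review accepted "very similar wording", which a human judged;
# that judgement is NOT reproduced here.

# Phrases listed in the "Eligibility" section as indicating a CS-informed design.
QUALIFYING_PHRASES = (
    "complex systems",
    "complexity theory",
    "complex adaptive systems",
    "systemic thinking",
    "systems approach",
)

# Related phrases that were not adequate on their own to confirm eligibility.
INSUFFICIENT_PHRASES = (
    "systems change",
    "complex interventions",
    "systems science",
    "quality improvement",
)


def find_phrases(text, phrases):
    """Return the phrases that occur in text, ignoring case and extra whitespace."""
    normalized = " ".join(text.lower().split())
    return [phrase for phrase in phrases if phrase in normalized]


def passes_phrase_screen(text):
    """True if at least one qualifying phrase appears (hypothetical helper)."""
    return bool(find_phrases(text, QUALIFYING_PHRASES))
```

A record that mentions only “quality improvement” or “systems science” would fail this screen, while one that mentions “complexity theory” would pass and go forward to the remaining eligibility checks (design rather than evaluation only, and a specific reported outcome).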

The evaluation must include at least one observed change that was believed by the authors to be linked or possibly linked to the intervention, and the evaluation metric had to be specific, not a simple opinion that the intervention had been helpful or increased confidence, but rather helpful in a specific way or context. A statement such as “I feel more confident” was inadequately specific and thus excluded, but the statement “I am now more confident when contributing to meetings” would be included. Changes were typically reported for perspectives, habits, service performance indicators, clinical or treatment targets and/or patient outcomes. However, the pathway between intervention and outcomes did not need to be highly detailed. Moreover, many interventions had multiple components (not only CS-informed), and the authors did not have to explain an explicit causal link between the CS-informed aspects of the intervention and any particular outcome.

After screening, we further focused on interventions in the three single countries which had yielded the most articles: USA, UK and Canada. Adam et al. [9] previously sought and evaluated complexity-informed theoretical underpinnings in interventions in low- and middle-income countries.

Data extraction and thematic analysis

The approach of this review is necessarily more narrative and qualitative than quantitative, and extraction therefore tried to capture data that can be categorized thematically. Table 1 shows the data that were extracted from each included study, using a standardized form. The results were grouped thematically and are discussed narratively.

Quality assessment

Quality assessment was concerned with some aspects of evaluation, especially those relevant to complexity science. The concern was to determine whether reported results could be confidently linked to the intervention and, indeed, whether CS had informed evaluation as well as intervention design. Hence, the questions in Table 2 were asked about each intervention, where a “yes” answer was preferred and feasible to ascertain for each question. The justification for these questions is as follows. Complex systems are said to be hard to change; hence, changes should be observed over longer rather than shorter periods (Q1). Evaluation should be consistent (Q2). Changes should be observed by impartial methods rather than only as opinion statements (Q3). Poor response from participants is undesirable and could bias results (Q4). Finally, unintended consequences following input changes are a common feature of complex systems; ideally, any evaluation report should look for them (Q5). Each quality assessment question was scored (−1 = No, 0 = partly or unclear, 1 = yes), and a composite (sum) score was generated for each study out of the five questions, to indicate quality of reporting. Interventions which scored 4 or 5 were interpreted as good, a score of 3 was interpreted as fair and other scores as less completely evaluated.
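The scoring rule described above is simple arithmetic and can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' own tooling: the answer labels ("yes", "partly", "unclear", "no") and the function names are hypothetical, while the point values and the interpretation bands are taken from the text.

```python
# Illustrative sketch of the composite quality score.
# Point values follow the text: -1 = no, 0 = partly or unclear, 1 = yes.
ANSWER_POINTS = {"yes": 1, "partly": 0, "unclear": 0, "no": -1}


def composite_score(answers):
    """Sum the points for the five quality questions (Q1 to Q5)."""
    if len(answers) != 5:
        raise ValueError("expected exactly five answers, one per question")
    return sum(ANSWER_POINTS[answer] for answer in answers)


def interpret(score):
    """Map a composite score to the bands used in the review."""
    if score >= 4:
        return "good"
    if score == 3:
        return "fair"
    return "less completely evaluated"
```

Because a “no” subtracts a point, a single “no” among four “yes” answers gives a score of 3 (fair), and the composite can range from −5 to 5.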

Results

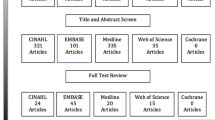

Scientific literature searches were undertaken on 18–21 December 2015 and supplemental searches from 22 December 2015 to 4 January 2016. Five thousand sixty-seven mostly unique scientific articles and 171 items from grey literature and reference checking were screened for eligibility and exclusion criteria. Grey literature search strategies and results are in Additional file 1. Sixty-six articles could not be excluded after screening abstracts and titles. At this stage, articles and reports came from the following countries and regions: USA (n = 29, 44 %), UK (n = 9, 13.6 %), Canada (n = 5, 7.6 %), sub-Saharan Africa (n = 15, 22.7 %) and other parts of the world (Australia, Indian subcontinent, Taiwan, Morocco and Bosnia-Herzegovina: n = 8, 12.1 %).

Full-text review was confined to the 43 articles about interventions in the three most common high-income countries: Canada, UK and USA. Some interventions were reported in multiple articles. After full-text review, “snowballing” (reading text for references that seem to describe similar studies and checking those studies for references to similar studies), and looking for evaluations which followed promising protocols or pilot studies, the final selected and eligible interventions numbered 22, which were described in 29 reports. There were four interventions in the UK, three in Canada (described in five reports) and 15 interventions (described in 20 articles) in the USA. Figure 1 shows the study selection process. A list of included studies with key positive outcomes is in Table 3, while Additional file 2 gives further details on intervention format, complexity science concepts, timescale for observations, target conditions or problems and specific positive outcomes linked to each intervention.

Applications

A distinction between UK/Canada and USA research emerged. All but one [23, 24] of the UK/Canadian reports had the objectives of changing mindsets and perspectives but without specific target outcomes. These types of interventions (also one in the USA [25]) could be described as primarily building personal competencies and capacities [26]. In contrast, the majority of US research (14 of 15 intervention programmes) identified specific targets linked to specific problems. These specific targets included increasing hospital capacity, improving neonatal care, improved critical care recovery, improved diabetes care, smoking reduction, cancer screening take-up, better medication management, improving dementia care, reduction in hospital acquired infections, improvements in chronic care indicators, and time savings in paperwork.

Intervention format

Many interventions were implemented via a small number of consultative workshops or training sessions. Typically, in workshops (or retreats, or regular team meetings), a trained facilitator encouraged participants to identify and trial solutions to a specific problem [24, 27–37]. Audits, surveys, further reviews and feedback were often used to reinforce focus and objectives. The second most common approach was workshops designed to increase individual or group capacity across a range of health care responsibilities, with no specific target outcomes [11, 25, 38–42]. Three other applications of complexity science were employed for improvements to health care or service delivery. Clancy et al. [43] described a team of nurse managers who simplified care management forms, using the guideline that 80 % of cases fell into only 20 % of diagnoses and 20 % of cases comprised 80 % of diagnoses (power law tradeoff). Backer et al. [44] used complexity science to guide staff meetings which had the purpose of identifying barriers to imposition of care plans, so CS was used to facilitate implementation and identify barriers to a predesigned initiative. Solberg et al. [45] describe a single clinician applying systems thinking to identify, propose and test possible health care delivery improvements; team meetings facilitated this process, but the process was driven overwhelmingly by just one practitioner with a supervisory role.

Barriers

Many studies did not describe any barriers to implementation [28, 30–32, 38, 43, 45, 46]. Difficulties in changing the larger health system and entrenched practices or habits of others were the most commonly cited barriers to applying systemic thinking usefully [11, 24, 25, 29, 39, 40, 42, 44]. Apparent conflicts with other rules and priorities were mentioned [39, 40]. Fears of the unknown and difficulty in maintaining a chain of accountability were mentioned [11, 41]. Without specific targets, some participants feared unstated institutional motives [42] or felt pointlessly imposed upon [34]. Other barriers to successful implementation were individuals’ resistance to change [24, 33, 34, 41, 44, 47], reluctance by senior staff to yield to collaborative decision-making [24, 27, 47] and lack of financial incentives [47] or external facilitation [11, 44]. Three articles provided especially detailed analyses of barriers to effective implementation [24, 44, 47].

Time period for monitoring impacts

In a minority of studies [25, 28, 31, 38], impact monitoring occurred for less than 12 months. In some cases, the time elapsed between intervention initiation and evaluation of impacts was unclear [29, 43].

Impacts, consequences and significance

Table 3 gives a brief list of positive impacts linked to each intervention; it is meant to be illustrative rather than definitive about reported outcomes. Many of the measures relate to efficiency gains, only some of which directly benefit patients. In a minority of interventions [28–31, 35, 36, 43, 46], there was not a clearly described process (such as open-ended questions with participants) to facilitate recognition of negative or unintended consequences. No negative changes were linked to interventions, although some metrics failed to improve as hoped. In a multi-site trial [47–49], practices that had more contact with facilitators reported the greatest positive impacts.

Statistical significance to describe observed changes was not possible to calculate for most interventions eligible for this review, but two studies described the results of randomized clinical trials. Horbar et al. [30] reported several significantly improved indicators for speed of preterm infants receiving surfactant treatment in intervention hospitals. Parchman et al. [48] reported significantly improved (and sustained) chronic care indicators for intervention practices.

Quality of reporting

Table 4 shows the quality assessment scores for each study. Scoring depended on details available about each intervention, which might be summarized in one or several articles. The average quality score for USA (2.4) is lower than for UK/Canada articles (3.4). The Canadian and UK studies more often reported only subjective observations about changes (such as “I have more tools which have helped when working with groups of staff” [38]). American articles mostly used objectively determined evaluation metrics (e.g. efficiency in filling out paperwork [43] or fewer patient hospitalisations [46]), but were somewhat less likely than UK/Canadian applications to look for unintended consequences in their evaluation methods, and tended towards shorter evaluation periods. Five interventions (23 %) scored 4–5 (reported relatively well), seven had a score of 3 (fair quality reporting), and ten (45 %) had scores of 2 or below (mostly due to unclear or omitted elements in reporting). Studies which were desirably informed by complexity science in their evaluation strategy (yes answers to both questions 1 and 5: reporting over time periods >1 year and looking for unintended consequences), combined with objectively measured outcomes (question 3), were in a small minority (3/22 studies, 13.6 %) [11, 23, 24, 44].

Discussion

Twenty-two interventions were found that stated that CS was part of the conceptual framework for design. The most common approach was to use workshops for health care professionals and managers to encourage systemic thinking to address either specific or non-specific problems. The linked positive benefits from these interventions were diverse, ranging from subjective perceptions of improved confidence in multiple situations, to specific patient benefits (such as reduced waiting times).

From more than 5000 scientific articles, only 22 eligible interventions were found that had been informed by CS and reported specific outcomes. This seems somewhat surprising, given the many very influential articles [1–8] (>250 citations each on Google Scholar, as of 1 January 2016) that endorsed complexity science for health care system improvement. Difficulty in finding suitable articles was partly due to reporting quality. A previous search for and review of CS-informed interventions in low- and middle-income countries also reported frustration with lack of specificity about the conceptual framework used in intervention design [9]. One of our selected interventions was not described as informed by CS in actual evaluation reports [47, 48], even though CS was cited heavily as part of the conceptual framework in the intervention protocol [49]. Another study [50] was excluded in spite of being lauded for high compatibility with CS [51], because there was no evidence that the intervention was designed with CS as part of the conceptual framework. We conclude that a comprehensive inventory of CS-informed interventions (which include evaluation) is not currently feasible. Other authors responded to this difficulty by accepting less demanding evaluation evidence, and by applying quality grading criteria to identify CS-compatible intervention approaches, but that approach also has shortcomings if intended to form an evidence base (see “Limitations” discussion below). It may be argued that the difficulties this review found in evidence search arise because complexity theory is primarily an explanatory tool, or simply a helpful tool for identifying potential leverage points for change, and is poorly suited to supporting intervention design [52, 53]. Addressing those perspectives is beyond the scope of this paper but has been discussed by others [54].

Missing mention of unintended (possibly negative) consequences in reports may suggest a misunderstanding about inherent characteristics of complex systems; unintended consequences following complex system leverage should always be expected and looked for. Process evaluation was not thorough in many of the selected studies; for example, many studies described no barriers to implementation. Hence, although CS may have informed design, it often did not inform evaluation. However, some discussions of barriers were very thorough [44, 47] and may help others to design more effective CS-informed interventions.

Where barriers to implementation were mentioned, UK and Canadian interventions more often cited institutional barriers, institutional resistance to change and conflicting performance targets. This may reflect an advantage of the fragmented American health care system: it may be a more agile environment and therefore more likely to facilitate innovation. Simple and specific goals in US programmes also seem to have contributed to more tangible positive impacts in final reports. The UK/Canada approaches seemed more oriented towards general change by a small number of perhaps isolated agents, who as a result were more likely to report large institutional barriers. Plsek and Wilson [5] discussed institutional resistance to change and made recommendations for how complex adaptive systems theory can ideally be used to guide managers and health leaders to facilitate improved services.

Limitations

Not eligible were interventions that taught health professionals to think through a complexity science lens (e.g. [55–57]), but without subsequent mention of change in actual practice or specific impacts. Also excluded were initiatives to develop networks with potential to encourage systemic change, where typically the only measured outcome was to verify the existence of the network contacts (e.g. [58]). Many such network, capacity or competence building programmes exist. We focused instead on specific, sustained or actual changes, especially in health service delivery or clinical targets. It also may be that changes in personal competencies or improvements in collaborative opportunities are often still not enough to effect systemic change, due to greater institutional forces [59] (also, see previous discussion on “Barriers”). We do not comment on whether the barriers identified are especially likely to be present when dealing with complex systems.

Defining what is or is not a CS-informed intervention is challenging. We wanted explicit statements in the research design about complexity science, but we acknowledge that diverse terminology might be valid. So inevitably, our eligibility criteria (see “Methods” section) cannot be perfect, although we tried to exclude similar or related descriptions only where other authors had made clear distinctions from complexity science. Only one investigator screened items for inclusion; this increases the risk that we may have overlooked some eligible articles. Other reviewers have quality graded (or “scored”) interventions according to their compatibility with CS principles, either according to expert opinion or a specific theoretical framework [10, 14–17, 60]. In contrast, our research question was rather more functional: could we find out whether people who say they applied CS managed to achieve useful results? So the eligibility test was not correct use of CS ideas, but rather stating that CS had influenced intervention design, with specific apparent impacts.

Therefore, we did not attempt to estimate how much intervention design was informed by CS vs. other conceptual frameworks. Inventorying the range of theoretical foundations in intervention design would be an exhaustive and probably still not definitive exercise. Moreover, we relied heavily on information that was in the abstract, title or keywords; if these did not suggest application of complexity science in intervention design, then the article was usually excluded. It would be an enormous effort to screen every article published since 1995 that mentioned any CS concept in the main text, for application of CS in real-world intervention design.

It is quite conceivable that some of the selected studies cited CS as part of the conceptual framework in research design without understanding or truly embracing many aspects of CS. Some authors have explicitly addressed possible misapplications of CS [60, 61]. Other researchers [10, 14, 15, 62] looked in interventions for CS principles, by grading items for eligibility, rather than relying on CS phrases. There is merit in evaluating compatibility with CS, but also drawbacks. It is to be expected, in empirical efforts, that implementors adopt and adapt ideas for their own purposes. It can even be argued that applying CS principles flexibly (picking and choosing) is, in fact, applying CS ideas correctly. This applies even in the context of research design, which otherwise, traditionally, is developed by rigid (“clockware”) methods [63]. It may be argued that using CS in the real world means, ideally, an agile and adaptive process [64, 65]. Therefore, quality grading or rating interventions that said they were informed by CS, to try to calculate how much they were truly informed by CS, could be antithetical to the spirit of how CS is supposed to be applied to address real-life problems.

Recommendations

This scoping review leads to a number of suggestions for how to improve future implementation and reporting of CS-informed interventions and to make evidence more available and useful for adaptation by others. Many of these principles apply to all public health interventions and indeed to product development and other types of projects. Complexity science or related phrases should be in article titles, abstracts or keywords. Interventions with specific simple targets at project inception that can be measured objectively seem to report more tangible outcomes, which are also more compatible with evidence-based medicine. Results should be monitored for a minimum of 12 months. Barriers to implementation need to be explored and discussed, to help others foresee and perhaps mitigate them in their own applications. Unintended or negative changes should be actively looked for. Perhaps most importantly, success seems to depend on support by institutions and senior staff combined with widespread collaborative effort. Applying CS principles to effect system change seems especially unlikely to produce significant success if led or imposed by single individuals or indeed if undertaken by isolated individuals; rather, CS ideas may be the most effective as impetus for change when applied as part of a process involving many stakeholders who perceive that they equally own the process towards change and who have institutional support to prioritize this process over other institutional targets. Nonetheless, facilitation by nominated individuals or external incentives can also be beneficial.

In the short and medium term, investigators looking for evidence that complexity-informed interventions are effective will probably continue to need to seek theoretical and CS-compatible studies rather than expect to find clearly documented evidence of positive impacts from embracing complexity-informed conceptual frameworks.

Conclusions

Intervention elements that are compatible with CS are common, but these elements may be compatible with or indeed inspired by other theoretical frameworks, too. Moreover, many articles about CS-compatible interventions do not discuss intervention design in the context of CS. This makes it difficult to find evidence that using “the lens” of complexity science is actually useful in intervention design or implementation.

It is not yet feasible to confidently evaluate the efficacy of complexity-informed interventions. It is not unusual that an intervention is lauded for including many core principles of CS, even though the research report itself never mentions CS or CS-related concepts as part of the conceptual framework underpinning design. Meanwhile, other researchers apply methods without realizing that their intervention approach only came to prominence because of endorsement by others for being compatible with CS principles. Even when an intervention clearly states it was designed using CS, there may be no indication that CS was used in evaluation strategy. It is thus clear that many reports on CS-informed interventions give incomplete information about aspects of applying CS that are integral to understanding elements of successful implementation—e.g. information is missing about barriers to implementation, contextual factors or unintended or negative consequences. These last practical insights could make CS-informed interventions (indeed all interventions) much easier for others to adapt and make effective.

We cannot and indeed should not expect everyone to explain all their interventions according to theory. Nevertheless, the endorsement of CS thinking to improve health care delivery is undermined by lack of empirical evidence [2, 12]. People will be less inclined to apply CS to design improvements in health care without evidence that it works [13]. Some argue that cause and effect are inherently impossible to establish in CAS, but it seems (ironically and perhaps suitably) that even finding CS-informed interventions is in itself a complex problem.

A number of recommendations are listed to make reports and implementation details of CS-informed interventions easier to find and more useful to other researchers, and so to help CS-informed intervention design and implementation work in the real world.

Abbreviations

- CS: Complexity science, complex systems or complex adaptive systems

- UK: United Kingdom

- USA: United States of America

References

Anderson RA, McDaniel RRJ. Managing health care organizations: where professionalism meets complexity science. Health Care Manage Rev. 2000;25(1):83–92.

Green LW. Public health asks of systems science: to advance our evidence-based practice, can you help us get more practice-based evidence? Am J Public Health. 2006;96(3):406–9.

Hawe P, Shiell A, Riley T. Complex interventions: how “out of control” can a randomised controlled trial be? Br Med J. 2004;328(7455):1561–3.

Plsek PE, Greenhalgh T. Complexity science: the challenge of complexity in health care. Br Med J. 2001;323(7313):625–8.

Plsek PE, Wilson T. Complexity, leadership, and management in healthcare organisations. Br Med J. 2001;323(7315):746–9.

Shiell A, Hawe P, Gold L. Complex interventions or complex systems? Implications for health economic evaluation. Br Med J. 2008;336(7656):1281–3.

Sterman JD. Learning from evidence in a complex world. Am J Public Health. 2006;96(3):505–14.

Victora CG, Habicht J-P, Bryce J. Evidence-based public health: moving beyond randomized trials. Am J Public Health. 2004;94(3):400–5.

Adam T, Hsu J, de Savigny D, Lavis JN, Rottingen J-A, Bennett S. Evaluating health systems strengthening interventions in low-income and middle-income countries: are we asking the right questions? Health Policy Plan. 2012;27(4):iv9–iv19.

Best A, Greenhalgh T, Lewis S, Saul JE, Carroll S, Bitz J. Large-system transformation in health care: a realist review. Milbank Q. 2012;90(3):421–56.

Mowles C, van der Gaag A, Fox J. The practice of complexity: review, change and service improvement in an NHS department. J Health Organ Manag. 2010;24(2):127–44.

Gatrell AC. Complexity theory and geographies of health: a critical assessment. Soc Sci Med. 2005;60(12):2661–71.

Martin CM, Félix-Bortolotti M. W(h)ither complexity? The emperor’s new toolkit? Or elucidating the evolution of health systems knowledge? J Eval Clin Pract. 2010;16(3):415–20.

Johnston LM, Matteson CL, Finegood DT. Systems science and obesity policy: a novel framework for analyzing and rethinking population-level planning. Am J Public Health. 2014;104(7):1270–8.

Leykum LK, Pugh J, Lawrence V, Parchman M, Noël PH, Cornell J, McDaniel RR. Organizational interventions employing principles of complexity science have improved outcomes for patients with type II diabetes. Implement Sci. 2007;2(1):28.

Nieuwenhuijze M, Downe S, Gottfredsdóttir H, Rijnders M, du Preez A, Vaz Rebelo P. Taxonomy for complexity theory in the context of maternity care. Midwifery. 2015;31(9):834–43.

Riley BL, Robinson KL, Gamble J, Finegood DT, Sheppard D, Penney TL, Best A. Knowledge to action for solving complex problems: insights from a review of nine international cases. Chronic Dis Inj Can. 2015;35(3):47–53.

Bauman A, Nutbeam D. Planning and evaluating population interventions to reduce noncommunicable disease risk–reconciling complexity and scientific rigour. Public Health Res Practice. 2014;25:1–8.

Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, Shekelle P, Stewart LA. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Systematic Reviews. 2015;4(1):1.

Schneider M, Somers M. Organizations as complex adaptive systems: implications of complexity theory for leadership research. Leadersh Q. 2006;17(4):351–65.

Shen W, Jiang L, Zhang M, Ma Y, Jiang G, He X. Research approaches to mass casualty incidents response: development from routine perspectives to complexity science. Chin Med J. 2014;127(13):2523–30.

Shojania KG, Ranji SR, Shaw LK, Charo LN, Lai JC, Rushakoff RJ, McDonald KM, Owens DK. Closing the quality gap: a critical analysis of quality improvement strategies (Vol. 2: diabetes care). AHRQ Technical Reviews and Summaries. 2004;9:2.

Gitterman L, Reason P, Gardam M. Front line ownership approach to improve hand hygiene compliance and reduce health care-associated infections in a large acute care organization. Canadian Journal of Infectious Diseases and Medical Microbiology. 2013;24:21B–2B.

Zimmerman B, Reason P, Rykert L, Gitterman L, Christian J, Gardam M. Front-line ownership: generating a cure mindset for patient safety. Healthcare Papers. 2013;13(1):18.

Kegler MC, Kiser M, Hall S. Evaluation findings from the institute for public health and faith collaborations. Public Health Rep. 2007;122(6):793–802.

Fraser SW, Greenhalgh T. Coping with complexity: educating for capability. Br Med J. 2001;323(7316):799.

Balasubramanian BA, Chase SM, Nutting PA, Cohen DJ, Strickland PAO, Crosson JC, Miller WL, Crabtree BF, Team US. Using learning teams for reflective adaptation (ULTRA): insights from a team-based change management strategy in primary care. The Annals of Family Medicine. 2010;8(5):425–32.

Capuano T, MacKenzie R, Pintar K, Halkins D, Nester B. Complex adaptive strategy to produce capacity-driven financial improvement. J Healthc Manag. 2009;54(5):307.

Fontanesi J, Martinez A, Boyo T, Gish R. A case study of quality improvement methods for complex adaptive systems applied to an academic hepatology program. The Journal of Medical Practice Management. 2014;30(5):323–7.

Horbar JD, Carpenter JH, Buzas J, Soll RF, Suresh G, Bracken MB, Leviton LC, Plsek PE, Sinclair JC. Collaborative quality improvement to promote evidence based surfactant for preterm infants: a cluster randomised trial. Br Med J. 2004;329(7473):1004.

Khan BA, Lasiter S, Boustani MA. Critical care recovery center: an innovative collaborative care model for ICU survivors. Am J Nurs. 2015;115(3):24–31.

MacKenzie R, Capuano T, Durishin LD, Stern G, Burke JB. Growing organizational capacity through a systems approach: one health network’s experience. Joint Commission Journal on Quality and Patient Safety. 2008;34(2):63–73.

Moody-Thomas S, Celestin Jr MD, Horswell R. Use of systems change and health information technology to integrate comprehensive tobacco cessation services in a statewide system for delivery of healthcare. Open J Prev Med. 2013;3(01):75.

Moody-Thomas S, Nasuti L, Yi Y, Celestin Jr MD, Horswell R, Land TG. Effect of systems change and use of electronic health records on quit rates among tobacco users in a public hospital system. Am J Public Health. 2015;105:e1–7.

Institute Cabin creek. Organizational change: improved pain management and better rural healthcare. 2015.

Positive deviance in MRSA prevention [http://www.plexusinstitute.org/?page=healthquality].

Lindberg C, Clancy TR. Positive deviance: an elegant solution to a complex problem. J Nurs Adm. 2010;40(4):150–3.

Chin H, Hamer S. Enabling practice development: evaluation of a pilot programme to effect integrated and organic approaches to practice development. Practice Development in Health Care. 2006;5(3):126–44.

Dattée B, Barlow J. Complexity and whole-system change programmes. J Health Serv Res Policy. 2010;15(2):19–25.

Kothari A, Boyko JA, Conklin J, Stolee P, Sibbald SL. Communities of practice for supporting health systems change: a missed opportunity. Health Research Policy and Systems. 2015;13:1.

Rowe A, Hogarth A. Use of complex adaptive systems metaphor to achieve professional and organizational change. J Adv Nurs. 2005;51(4):396–405.

Solomon P, Risdon C. A process oriented approach to promoting collaborative practice: incorporating complexity methods. Med Teach. 2014;36(9):821–4.

Clancy TR, Delaney CW, Morrison B, Gunn JK. The benefits of standardized nursing languages in complex adaptive systems such as hospitals. J Nurs Adm. 2006;36(9):426–34.

Backer EL, Geske JA, McIlvain HE, Dodendorf DM, Minier WC. Improving female preventive health care delivery through practice change: an every woman matters study. J Am Board Fam Pract. 2005;18(5):401–8.

Solberg LI, Klevan DH, Asche SE. Crossing the quality chasm for diabetes care: the power of one physician, his team, and systems thinking. J Am Board Fam Med. 2007;20(3):299–306.

Boustani MA, Sachs GA, Alder CA, Munger S, Schubert CC, Guerriero Austrom M, Hake AM, Unverzagt FW, Farlow M, Matthews BR, et al. Implementing innovative models of dementia care: the healthy aging brain center. Aging and Mental Health. 2011;15(1):13–22.

Noël PH, Romero RL, Robertson M, Parchman ML. Key activities used by community based primary care practices to improve the quality of diabetes care in response to practice facilitation. Qual Prim Care. 2014;22(4):211–9.

Parchman ML, Noel PH, Culler SD, Lanham HJ, Leykum LK, Romero RL, Palmer RF. A randomized trial of practice facilitation to improve the delivery of chronic illness care in primary care: initial and sustained effects. Implementation Science. 2013;8(93):1–7.

Parchman ML, Pugh JA, Culler SD, Noel PH, Arar NH, Romero RL, Palmer RF. A group randomized trial of a complexity-based organizational intervention to improve risk factors for diabetes complications in primary care settings: study protocol. Implementation Science. 2008;3(1):15.

Tuso P, Huynh DN, Garofalo L, Lindsay G, Watson HL, Lenaburg DL, Lau H, Florence B, Jones J, Harvey P. The readmission reduction program of Kaiser Permanente Southern California—knowledge transfer and performance improvement. The Permanente Journal. 2013;17(3):58.

Kottke TE. Simple rules that reduce hospital readmission. The Permanente Journal. 2013;17(3):91.

Paley J. The appropriation of complexity theory in health care. J Health Serv Res Policy. 2010;15(1):59–61.

Paley J, Eva G. Complexity theory as an approach to explanation in healthcare: a critical discussion. Int J Nurs Stud. 2011;48(2):269–79.

Greenhalgh T, Plsek P, Wilson T, Fraser S, Holt T. Response to ‘The appropriation of complexity theory in health care’. J Health Serv Res Policy. 2010;15(2):115–7.

Colbert CY, Ogden PE, Ownby AR, Bowe C. Systems-based practice in graduate medical education: systems thinking as the missing foundational construct. Teach Learn Med. 2011;23(2):179–85.

Eubank D, Geffken D, Orzano J, Ricci R. Teaching adaptive leadership to family medicine residents: what? why? how? Families, Systems, and Health. 2012;30(3):241.

James KM. Incorporating complexity science theory into nursing curricula. Creat Nurs. 2010;16(3):137–42.

Walker JS, Koroloff N, Mehess SJ. Community and state systems change associated with the healthy transitions initiative. J Behav Heal Serv Res. 2015;42(2):254–71.

Mathers J, Taylor R, Parry J. The challenge of implementing peer‐led interventions in a professionalized health service: a case study of the national health trainers service in England. Milbank Q. 2014;92(4):725–53.

Kremser W. Phases of school health promotion implementation through the lens of complexity theory: lessons learnt from an Austrian case study. Health Promot Int. 2011;26(2):136–47.

Canyon DV. Systems thinking: basic constructs, application challenges, misuse in health, and how public health leaders can pave the way forward. Hawaii Journal of Medicine and Public Health. 2013;72:12.

Berkowitz B, Nicola RM. Public health infrastructure system change: outcomes from the turning point initiative. J Public Health Manag Pract. 2003;9(3):224–7.

Smith R. Our need for clockware and swarmware. Br Med J. 2012.

Harrald JR. Agility and discipline: critical success factors for disaster response. The Annals of the American Academy of Political and Social Science. 2006;604(1):256–72.

Rouse WB. Health care as a complex adaptive system: implications for design and management. Bridge - Washington National Academy of Engineering. 2008;38(1):17.

Gardam M, Reason P, Gitterman L. Healthcare-associated infections: new initiatives and continuing challenges. Healthc Q. 2012;15:36–41.

Stroebel CK, McDaniel RR, Crabtree BF, Miller WL, Nutting PA, Stange KC. How complexity science can inform a reflective process for improvement in primary care practices. Joint Commission Journal on Quality and Patient Safety. 2005;31(8):438–46.

Acknowledgements

Both authors were funded by the National Institute for Health Research (NIHR) Health Protection Research Unit in Emergency Preparedness and Response in partnership with Public Health England (PHE). The views expressed are those of the authors and not necessarily those of the NHS, the NIHR, the Department of Health or PHE.

Funding

This research was funded by the National Institute for Health Research (NIHR) Health Protection Research Unit in Emergency Preparedness and Response in partnership with Public Health England (PHE). The views expressed are those of the authors and not necessarily those of the NHS, the NIHR, the Department of Health or PHE.

Authors’ contributions

JB conceived the study, ran the searches, extracted the data, undertook the preliminary analysis and wrote the first draft. PRH reviewed the extracted data, extended the analysis of data and revised the presentation of results. Both authors revised text in the manuscript and approved of the final version.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable

Ethics approval and consent to participate

Not applicable

Additional files

Additional file 1: Search strategy for finding potentially eligible studies. (DOCX 37 kb)

Additional file 2: Extracted data on intervention format, complexity science concepts as described by authors, timescale for observations, target conditions or problems, and specific positive outcomes linked to each intervention. (DOCX 58 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Brainard, J., Hunter, P.R. Do complexity-informed health interventions work? A scoping review. Implementation Sci 11, 127 (2016). https://doi.org/10.1186/s13012-016-0492-5
