Abstract

Background

Despite the availability of psychosocial evidence-based practices (EBPs), treatment and outcomes for persons with mental disorders remain suboptimal. Replicating Effective Programs (REP), an effective implementation strategy, still resulted in less than half of sites using an EBP. The primary aim of this cluster randomized trial is to determine, among sites not initially responding to REP, the effect of adaptive implementation strategies that begin with an External Facilitator (EF) or with an External Facilitator plus an Internal Facilitator (IF) on improved EBP use and patient outcomes in 12 months.

Methods/Design

This study employs a sequential multiple assignment randomized trial (SMART) design to build an adaptive implementation strategy. The EBP to be implemented is life goals (LG) for patients with mood disorders across 80 community-based outpatient clinics (N = 1,600 patients) from different U.S. regions. Sites not initially responding to REP (defined as <50% patients receiving ≥3 EBP sessions) will be randomized to receive additional support from an EF or both EF/IF. Additionally, sites randomized to EF and still not responsive will be randomized to continue with EF alone or to receive EF/IF. The EF provides technical expertise in adapting LG in routine practice, whereas the on-site IF has direct reporting relationships to site leadership to support LG use in routine practice. The primary outcome is mental health-related quality of life; secondary outcomes include receipt of LG sessions, mood symptoms, implementation costs, and organizational change.

Discussion

This study design will determine whether an off-site EF alone versus the addition of an on-site IF improves EBP uptake and patient outcomes among sites that do not respond initially to REP. It will also examine the value of delaying the provision of EF/IF for sites that continue to not respond despite EF.

Trial registration

ClinicalTrials.gov identifier: NCT02151331

Background

It can take years to translate evidence-based practices (EBPs) into community-based settings [1]. This research-to-practice gap is especially pronounced for psychosocial EBPs for mood disorders (depression, bipolar disorders), which rank among the top ten causes of disability according to the World Health Organization. Mood disorders are associated with significant functional impairment, high medical costs, and preventable mortality [2],[3]. For many patients, pharmacotherapy alone is not enough to improve outcomes, and psychosocial treatments are recommended in addition to pharmacotherapy [4]-[7].

Despite the availability of psychosocial EBPs [1],[4]-[8] for mood disorders, they rarely get implemented and sustained in community-based practices [9]-[11]. This is primarily due to a lack of available strategies to help providers embed the EBP into routine clinical workflows, and garner support from clinical and administrative leadership on the EBP’s added value to the practice [8],[12]-[25]. As a result, outcomes for persons with mental disorders remain suboptimal [12],[26]. New healthcare initiatives including medical home models designed to improve efficiency and value have not included specific strategies to assist local providers in implementing EBPs in routine care [27]-[29]. Moreover, up to 98% of patients with mood disorders receive care from smaller clinical practices, which may not have the tools to fully implement medical homes [30].

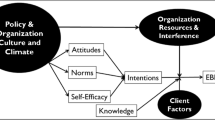

For EBPs to reach these patients, effective implementation strategies are needed [31]-[34]. Implementation strategies that have been highly specified, operationalized, and previously used to implement EBPs into usual care settings include Replicating Effective Programs (REP) [35]. REP primarily focuses on standardization of the EBP implementation into routine care settings through toolkit development and marketing, provider training, and program assistance (Figure 1). Used successfully to improve the uptake of brief HIV interventions [36]-[39], REP is a low-intensity intervention with minimal costs for sites. However, when applied to the implementation of a psychosocial EBP for mood disorders, REP resulted in less than half of sites implementing the EBP [40].

Recognizing the need for more intensive implementation strategies that leverage local provider initiative and outside expertise [41], REP was enhanced to include Facilitation [40],[41]. Two Facilitation roles evolved: an External Facilitator (EF), employed from outside the local site, and an Internal Facilitator (IF), employed at each of the local sites. EFs are situated at a central location and provide technical expertise and program support in implementing the EBP at the local site. In contrast, IFs have a direct reporting relationship to site leadership and have protected time to support providers in implementing EBPs by helping them align the EBP activities with the priorities of the clinic providers and local leadership.

A recent randomized controlled trial [40],[42] of adding an External Facilitator (REP + EF) versus REP alone found that, among 88 sites not responding to REP, sites randomized to receive REP + EF were more likely than sites continuing with REP to adopt the EBP within 6 months (defined as percentage of patients receiving care management: 56% vs. 28%) [42]. This suggests that while REP + EF led to increased uptake, a more intensive, locally oriented implementation strategy (e.g., Internal Facilitation) might be needed for EBP uptake in sites that do not fully implement the EBP. In a different study, REP in combination with EF and IF (REP + EF/IF) versus standard REP alone improved adoption and fidelity to a mood disorder EBP [43],[44], underscoring the promise of REP + EF/IF.

However, because IF requires additional time commitment from sites, and not all sites may need IF, an adaptive implementation strategy approach is a more practical design. In contrast to measuring implementation non-response (or correlates of it) and not using it to guide improved implementation and outcomes, in adaptive implementation strategies, implementation interventions are augmented in direct response to limited adoption of EBPs among specific sites based on circumstances that may not be observable at baseline. This study addresses key scientific questions that need to be answered in order to develop an effective adaptive implementation strategy. Notably, among sites not responding to REP (i.e., not adopting the EBP), is it best to augment with REP + EF/IF or with REP + EF, or is it best to delay the provision of REP + EF/IF for sites that continue not to respond despite an initial REP + EF augmentation?

Aims and objectives

The overarching goal of this study is to build the most effective adaptive implementation strategy involving two cutting-edge implementation strategies (REP and Facilitation) to improve practice-level uptake of EBPs and patient outcomes. The EBP, life goals (LG), is an evidence-based psychosocial treatment delivered in six individual or group sessions that was shown in seven randomized controlled trials to improve mental and physical health outcomes among patients with mood disorders [44]-[49]. We will use a sequential multiple assignment randomized trial (SMART) [50],[51] design to build the adaptive intervention in which data on early response or non-response to REP will be used to determine the next implementation strategy.

Primary study aim

The primary aim of this study is to determine in a SMART implementation trial, among patients with mood disorders in sites that do not exhibit response to REP alone after 6 months (i.e., <50% patients receive ≥3 LG sessions), the effect of adding an External and Internal Facilitator (REP + EF/IF) versus REP + EF on patient-level changes in mental health-related quality of life (MH-QOL; primary outcome), receipt of LG sessions, mood symptoms, implementation costs and organizational change (secondary outcomes) from month 6 to month 24.

Secondary aim 1

The first secondary aim is to determine, among REP + EF sites that continue to exhibit non-response after an additional 6 months, the effect of continuing REP + EF vs. REP + EF/IF on patient-level changes in the primary and secondary outcomes from month 12 to month 24.

Secondary aim 2

The second secondary aim is to estimate the site-level costs of REP + EF/IF compared to REP + EF.

Secondary aim 3

The third secondary aim is to describe the implementation of EF and EF/IF at the site level, including interaction between the two roles and the specific strategies EFs and IFs use to facilitate LG uptake across different sites.

The result of this SMART study will be an optimized, adaptive implementation strategy that could be applied to healthcare systems to improve outcomes for patients with mental disorders.

Methods

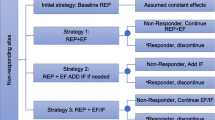

This cluster randomized SMART implementation trial (Figure 2) involves community-based clinics (sites) from Michigan (MI) and Colorado (CO) that care for persons with mood disorders (depression or bipolar disorders). This study was reviewed and approved by the local institutional review boards.

Setting

Eighty community-based mental health or primary care clinics from the two states will be recruited to participate in the study based on lists of primary care and community mental health programs available from state organizations. Since the EBP to be implemented (LG) has been shown to be effective in improving outcomes across different settings (primary care, community mental health) [45]-[47],[49],[52], a diverse array of sites will be recruited to maximize study generalizability.

Sites

Site inclusion criteria include the following:

1. Community-based mental health or primary care clinic located in Michigan or Colorado with at least 100 unique patients diagnosed with or treated for mood disorders.

2. Availability of a bachelor’s- or master’s-level healthcare provider with a mental health background and experience with implementing individual or group sessions (core modality of LG) who can be trained to provide LG to up to 20 adult patients with mood disorders in the clinic in a 1-year period.

3. Availability of an employee at the site with direct reporting authority to the leadership of the site or parent practice organization who could serve as potential internal facilitator.

Study design flow

Primary care or mental health outpatient clinics confirming eligibility using a standard organizational assessment and agreeing to participate will initially be offered REP for 6 months in order to implement LG (Figure 2). Based on previous research [53] and preliminary data, it is expected that after 6 months of REP, at least 80% of sites will be non-responsive to REP. The primary focus of the randomized comparisons in this study is sites that are initially non-responsive to REP, defined based on our preliminary studies as <50% of previously identified patients receiving at least three LG sessions (≥3 out of 6), the minimum needed to achieve clinically significant results (85-89). Although not part of the primary randomized comparisons, sites that are responsive at 6 months will continue to be followed and outcomes will be assessed.
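The response rule above can be sketched in a few lines of Python. This is a minimal illustration, not study software: the function names and the list-of-session-counts input format are assumptions made here, and the denominator is taken to be all patients previously identified at the site.

```python
def proportion_reached(session_counts, min_sessions=3):
    """Share of identified patients who received at least `min_sessions` LG sessions."""
    if not session_counts:
        raise ValueError("a site needs at least one identified patient")
    reached = sum(1 for n in session_counts if n >= min_sessions)
    return reached / len(session_counts)


def is_non_responsive(session_counts, min_sessions=3, threshold=0.50):
    """A site is non-responsive when fewer than 50% of patients got >= 3 sessions."""
    return proportion_reached(session_counts, min_sessions) < threshold


# Hypothetical site: one entry per identified patient (sessions received out of 6).
example_site = [0, 1, 2, 3]
print(is_non_responsive(example_site))
```

Because the rule uses a strict inequality, a site where exactly half of the identified patients received three or more sessions counts as responsive.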

Patients within sites

The unit of analysis is LG eligible patients within sites who are diagnosed with or treated for mood disorders. Patients are identified during the first 6 months as part of REP, i.e., prior to site-level randomization (see step 1 of REP below). Patient-level primary and secondary outcomes will be assessed by independent evaluators (i.e., study associates who are not aware of the assignment to REP + EF or REP + EF/IF) across all sites prior to identifying site response/non-response status at month 6, prior to identifying site response/non-response status at month 12, and at months 18 and 24.

Site randomization

The unit of intervention (randomization) is the site. Sites that are not responding to REP at month 6 will be randomized 1:1 by the study data analyst to receive additional External Facilitation (REP + EF) or External plus Internal Facilitation (REP + EF/IF). After another 6 months (at month 12), (i) REP + EF sites that are still non-responsive (defined as <50% patients receiving ≥3 life goals sessions) will be randomized 1:1 to either continue REP + EF or be augmented with IF (REP + EF/IF) for an additional 12 months, and (ii) intervention will be discontinued for sites that are responsive. The month 6 randomization will be stratified by state, practice type (primary care or mental health site) [54],[55], and site-average baseline MH-QOL (e.g., low (<40) vs. high (≥40)). The month 12 randomization will be stratified by state, practice type, and site-average MH-QOL at month 12. This will ensure that intervention groups are balanced for site variables that may correlate highly with outcomes. The study analyst will generate the stratified permuted-block random allocation lists (blocks of size 2, 4, and 6) using a computer program such as PROC PLAN in SAS. Sites are expected to become eligible and accrue in groups of 6-12 (staggered entry). A site is considered randomized once the study analyst informs the study coordinator of that site’s random assignment.
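To make the allocation procedure concrete, the following Python sketch generates a stratified permuted-block list. It stands in for the SAS PROC PLAN step named above and is not the study's program: the site dictionaries, field names, seed, and the choice to pick block sizes at random are all assumptions for illustration.

```python
import random

ARMS = ("REP + EF", "REP + EF/IF")


def permuted_block_list(n_sites, rng, block_sizes=(2, 4, 6)):
    """Build a 1:1 allocation list from randomly sized, internally balanced blocks."""
    allocations = []
    while len(allocations) < n_sites:
        size = rng.choice(block_sizes)
        block = list(ARMS) * (size // 2)  # equal numbers of each arm in the block
        rng.shuffle(block)
        allocations.extend(block)
    return allocations[:n_sites]  # the last block may be only partly used


def randomize_sites(sites, seed=0):
    """Assign sites to arms separately within each stratum."""
    rng = random.Random(seed)
    by_stratum = {}
    for site in sites:
        key = (site["state"], site["practice_type"], site["mhqol_high"])
        by_stratum.setdefault(key, []).append(site["site_id"])
    assignment = {}
    for key in sorted(by_stratum):
        ids = by_stratum[key]
        for site_id, arm in zip(ids, permuted_block_list(len(ids), rng)):
            assignment[site_id] = arm
    return assignment


# Hypothetical non-responding sites, all in one stratum.
sites = [
    {"site_id": i, "state": "MI", "practice_type": "primary care", "mhqol_high": False}
    for i in range(12)
]
print(randomize_sites(sites, seed=2014))
```

Because each complete block is balanced, the two arms can differ within a stratum by at most half the largest block size (three sites here), which happens only when the final block is partly used.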

Evidence-based practice to be implemented

LG [44]-[49],[52] is a psychosocial intervention for mood disorders delivered in six individual or group sessions (Table 1). Based on social cognitive theory [56]-[58], LG encourages active discussions focused on individuals’ personal goals that are aligned with healthy behavior change and symptom management strategies. LG was chosen to be the EBP to implement because mood disorders are common in both community-based primary care and mental health clinics [59],[60], because patients with mood disorders are considered a high-priority population based on input from community partners, and because LG was shown to improve outcomes including mental health-related quality of life in this group [45]-[47],[49],[52]. Compared to usual care, LG improved outcomes among a cross-diagnosis sample of community-based outpatients with mood disorders [45]-[49],[52], notably a four-point increase in mental and physical health-related quality of life scores based on the SF-12 (e.g., Cohen’s D = .36) [45],[46],[49]. LG has been shown to be equally effective in patients with co-occurring substance use and medical comorbidities [46],[47],[49],[52],[61]. Community-based providers helped to adapt LG [46]-[48], but, as with many psychosocial EBPs, LG has not been widely implemented in smaller practices [62].

Implementation strategies

REP

All sites will receive REP (Table 2) in the first 6 months. REP is based on the Centers for Disease Control and Prevention’s (CDC’s) Research to Practice Framework project [35],[62],[63] and includes initial marketing of the LG program by study investigators, package dissemination and training by an off-site trainer, as-needed program support in using LG (program assistance), and LG uptake monitoring for up to 6 months [35],[44]. The theories underlying REP [64],[65] include Rogers’ Diffusion of Innovations [36] and Social Learning Theory [66]. For this study, REP includes the following components:

Step 1: marketing and dissemination of LG package, in-service, and patient selection

Regional in-services will first be provided by study investigators who will give an overview of LG including the evidence, and details on how to implement LG in their setting. Each site will designate at least one provider with a mental health background to implement LG (“LG provider”), and the LG package will be disseminated by the REP trainer to these providers.

The LG REP package includes all of the components needed to implement LG, including the LG provider manual; a protocol for identifying patients to enroll in LG; LG session scripts and focus points covered in each session in a semi-directed fashion; the registry template for tracking enrolled patients’ progress in LG session-based personal goals, symptoms, and health behavior change; scripts for follow-up calls; patient workbooks; and an implementation manual describing logistics (e.g., identifying rooms if group sessions are used, identifying patients for LG, medical record templates for LG sessions, billing codes). Because LG effectiveness was already demonstrated in patients with mood disorders and because the primary goal of the study is to promote LG implementation, LG providers will identify and offer LG to patients at their site with mood disorders based on guidance from the REP trainer [44],[62]. The trainer will work with LG providers to identify up to 30 patients within a 3-month period who are appropriate for the LG program using the following criteria that are included in the registry template:

1. adults 21 years or older with a diagnosis and current documentation of antidepressant or mood stabilizer for a mood disorder (depression or bipolar disorder) based on medical record review

2. not currently enrolled in residential treatment

3. can understand English and have no terminal illness or cognitive impairment that precludes participation in outpatient psychosocial treatment based on confirmation by the treating clinician.

Assuming a 33% refusal or ineligibility rate [47], as well as a potential 12-month attrition rate of around 20% from prior studies [47], LG providers will be expected to identify and initially offer LG to 30 patients, assuming 20 will initially participate, with 16 to complete 12-month follow-ups.
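The enrollment arithmetic in the preceding paragraph can be checked with a short calculation. The function name and the rounding to whole patients are assumptions made for this sketch; the rates are those stated above.

```python
def expected_enrollment(offered=30, refusal_rate=0.33, attrition_rate=0.20):
    """Return (patients expected to participate, patients expected at 12-month follow-up)."""
    participating = round(offered * (1 - refusal_rate))           # 30 x 0.67, about 20
    completing = round(participating * (1 - attrition_rate))      # 20 x 0.80 = 16
    return participating, completing


participating, completing = expected_enrollment()
print(participating, completing)
```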

Step 2: REP LG training and provider competency

LG providers will undergo a 1-day training program that has been provided to over 200 clinicians nationally [44],[67]-[69]. The LG trainer will first provide an orientation to the evidence behind LG and core elements and then a step-by-step walk-through of LG components. The trainer will then demonstrate each LG session and follow-up contact procedures, as well as the standardized criteria for identifying patients who are appropriate for LG in routine practice. LG providers will break into groups and practice each component.

After identifying eligible patients, LG providers will make an initial contact to each patient, introduce the LG program, and schedule in-person group or individual sessions. Individual sessions typically last 50 min each, while group sessions typically last around 90 min to allow for additional discussions between group members. Participants can make up sessions over the phone if they are unable to make in-person sessions. In the sessions, the LG provider will encourage active discussions that progressively have participants identify a personal symptom profile and triggers to the mood symptom or episode and develop an activity plan for identifying warning signs of symptoms and an activity plan for adopting a specific health behavior to mitigate symptoms and promote wellness. Participants will be given a workbook with exercises on behavior change goals, symptom assessments, and coping strategies. The LG provider will also make individual contacts to patients after the end of the sessions on a regular basis to review symptoms and behavior change. LG providers are trained to handle patients with elevated symptoms or suicidal ideation as part of their clinical responsibilities.

Step 3: As-needed REP program assistance and LG monitoring

The final phase of REP consists of as-needed program assistance provided to LG clinicians who contact the LG program support specialist. In addition, each LG provider is sent a standard monitoring form on a biweekly basis for up to 6 months to record the number of patients approached and the number receiving each LG session. Monitoring forms will be used to assess non-response across sites and will be corroborated based on patient self-reported LG use from the follow-up surveys (see data collection below). Sites will receive feedback reports from the program support assistant that include performance on uptake measures (i.e., how many enrolled, how many sessions) in comparison to other sites. Sites will also receive periodic (quarterly) newsletters about the study progress.

Facilitation

The Facilitation implementation strategy will consist of EF and IF (Table 2). Based on the Promoting Action on Research Implementation in Health Services (PARiHS) Framework [70]-[74], facilitation is defined as the process of interactive problem solving and support that occurs in the context of a recognized need for improvement and a supportive interpersonal relationship [41],[74].

LG providers from sites that are not responsive to REP in 6 months after initiating LG at their site will be randomized to receive REP + EF or REP + EF/IF. Sites receiving REP + EF will be contacted by the EF, who resides off site. The EF will contact individual providers from each site on a regular basis and conduct 1-hour phone calls to provide guidance on implementing LG components. Notably, the EF will be trained to identify individual strengths of each LG provider and help leverage those strengths with available influence the LG provider has at the site. The EF will also set measurable objectives in implementing LG (e.g., number of patients completing at least one group session), review implementation progress, and where appropriate, refer the provider to the study program support assistant for specific guidance on LG use (Table 2).

LG providers from sites that are not responsive to REP initially and randomized to receive REP + EF/IF will also be contacted by the EF, and the study team will also contact the site to identify an IF at the time of randomization. The IF will be an individual supervising the LG provider in some capacity. In this arm, LG providers will have regular calls with the EF and IF, as well as meet with IF one on one on a regular basis. As with the REP + EF arm, EFs will identify the individual strengths of each LG provider and help leverage those strengths with available influence the LG provider has at the site. In addition, the IF at each site will meet with the LG provider and will use their internal knowledge of the site to help the LG provider identify opportunities to align LG program goals with existing site priorities (Table 2). Sites randomized to receive IF will receive up to $5,500 to cover IF-related time.

Ensuring fidelity to implementation strategies

Fidelity monitoring will be used to assess whether each site is receiving the core components of each implementation strategy (REP, EF, and IF) and to ensure that there is no contamination across roles. Data from LG provider logs and EF/IF activities completed by study staff will be used to ascertain fidelity within each 6-month period of implementation strategy exposure using established checklists. All sites will get the same LG REP package, and LG training will be conducted by the study trainer who will hold regional trainings in each state. The EF will be trained by study investigators based on a 2-day training program developed for national roll-out of both the REP and EF/IF programs.

Fidelity to REP is defined based on number of sites receiving an LG package, number of providers completing the 1-day LG training program, and number of completed lists of patients receiving LG. Fidelity to the EF role will be defined using the following criteria based on the components outlined in Table 2: 1) number of completed calls by the EF with LG providers at each site; 2) number of documented barriers, facilitators, and specific measurable goals to LG uptake; and 3) documentation of LG provider strengths and available opportunities to influence site activities and overcome barriers. Fidelity to the IF role is defined as 1) number of meetings with the LG provider and EF, 2) number of meetings IF and LG provider have with site leadership, 3) number of documented opportunities to leverage LG uptake with existing site priority goals, and 4) development of a strategic plan to implement LG by the IF.

Primary outcomes and measures

Study staff members (not site employees) will collect patient and provider data to ensure consistency of outcomes data collection over time. Outcomes assessors will be blinded to implementation condition. The assessment package previously implemented by study investigators was informed by the RE-AIM framework for evaluating implementation of EBPs and includes measures used in routine clinical care [75],[76]. Key measures include patient-level outcomes, LG fidelity, organizational factors, and REP, EF, and IF activities [49],[77]-[79].

Patient data collection

Lists of patients and contact information that were identified by the LG providers during pre-randomization will be sent to study staff members who will conduct independent clinical assessments. As the study is focused on implementation processes for an evidence-based treatment that does not involve randomizing at the patient level, local institutional review boards (IRBs) considered the protocol to not fall under research, and no patient informed consent is required for the clinical assessments. Staff members will contact patients and describe the purpose of the phone-based clinical assessment, which will be conducted at baseline and at 6, 12, 18, and 24 months later.

The clinical assessment will include previously established measures used in routine care, including the SF-12 for MH-QOL [80] (primary outcome), the Patient Health Questionnaire (PHQ-9) for mood symptoms [81],[82], the World Health Organization Disability Assessment Scale (WHO-DAS) for functional impairment [83],[84], GAD-7 for anxiety symptoms [85], and the Internal State Scale for manic symptoms [86],[87] (secondary and exploratory outcomes). Additional information [44],[88]-[91] including participant demographics will also be ascertained from the clinical assessment during each follow-up period.

Provider and organizational surveys

Study staff members will survey providers from the participating sites, including the site clinical director and LG providers on organizational factors that might impact implementation and outcomes. As the study’s principal institutional review board (University of Michigan Medical School Institutional Review Board - IRBMED) considered this study to fall under quality improvement activities, no informed consent is required from providers or clinic directors. Clinic directors will be contacted prior to the initiation of the REP in-service to complete an initial site organizational survey [43],[44] that includes questions on resources, staff turnover, and integrated care [92]. A longitudinal organizational assessment will also be given to the clinic directors and LG providers prior to the initiation of REP, then again at 12 and 24 months later to assess changes in organizational features that might be impacted by REP and Facilitation. The assessment includes two established questionnaires focused on organizational capacity to implement EBPs. The Implementation Leadership Scale (ILS) assesses the degree of organizational support for EBPs, and the Implementation Climate Scale (ICS) assesses staff’s impressions on expectations and support for effective EBP implementation through their policies, procedure, and behaviors [93].

Life goals fidelity

Because this study is designed to assess real-world implementation, minimally invasive measures to assess LG fidelity will be based on provider logs sent via a secure web-based form to study staff on a weekly basis. Study staff members will also collect confirmatory data from patient surveys on receipt of LG sessions at the patient level. The fidelity monitor calculates a total score based on number of sessions completed by each patient and the percentage completing five of six sessions. Average patient completion of sessions of ≥75% was associated with improved mental health-related quality of life [49].

Secondary aims: facilitation implementation and costs

Data on the implementation of EF and EF/IF will be collected by study staff members using previously established assessments for monitoring implementation activities [40],[43],[94]. Study staff members will interview LG providers, EF, IF, and site clinic leaders regarding barriers and facilitators to LG, REP, and EF/IF strategies, interactions between the EF and IFs, and specific strategies EFs and IFs use to facilitate LG uptake across different sites.

Implementation strategy costs will be ascertained by the study staff members based on study staff logs of REP activities, and LG provider and EF and IF logs based on a standardized list of activity categories (Table 3). EF and IF time is likely to account for the vast majority of costs associated with the implementation strategies, which include time from site employees (LG provider time on training and LG implementation, IF time at meetings) as well as project staff (REP package development, training/program assistance, EF time in site follow-ups). Time spent on each activity will be multiplied by personnel wage rates including fringe. Patient-level costs will also be estimated from self-reported utilization survey data on inpatient, ER, and outpatient use. Costs will be assigned using Current Procedural Terminology (CPT) codes, and a relative value unit (RVU) weight will allow us to use the Medicare Fee Schedule to calculate a standardized cost in US dollars for each service, adjusted for annual levels of inflation using the consumer price index.
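As a minimal sketch of the personnel-cost step, logged hours per role can be multiplied by a wage rate that already includes fringe. The role labels, hours, and wage rates below are hypothetical placeholders, not study data, and the function name is an assumption made here.

```python
def personnel_cost(hours_by_role, loaded_wage_by_role):
    """Sum hours x hourly wage (fringe included) over all roles with logged time."""
    return sum(
        hours * loaded_wage_by_role[role]
        for role, hours in hours_by_role.items()
    )


# Hypothetical 6-month log for one site (hours) and hypothetical loaded hourly wages (USD).
hours = {"EF": 10, "IF": 20}
wages = {"EF": 50.0, "IF": 40.0}
print(personnel_cost(hours, wages))
```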

Analyses

Intent-to-treat analyses will be performed. The primary analysis will compare interventions in non-responding sites beginning with REP + EF/IF versus interventions beginning with REP + EF on longitudinal patient-level change in number of LG sessions received, SF-12 mental health-related quality of life scores, and PHQ-9 scores. This analysis is a two-sample comparison of cells A + B + C vs. D + E (Figure 2). For this analysis, the longitudinal outcomes will be measured at months 6 (pre-randomization), 12, 18, and 24. The primary contrast is the between-group difference in change from month 6 to month 18. The follow-up contrast at month 24 will also be examined in this and all subsequent analyses.

The primary aim analysis for health-related quality of life (the primary longitudinal outcome) will use linear mixed models (LMM; [95]), also known as random effects models. The unit of analysis is the individual patient within a site (recall that approximately 20 individuals will be identified prior to randomization). LMMs use all available measurements, allowing individuals to have an unequal number of longitudinal observations and producing unbiased parameter estimates as long as unobserved values are missing at random. The analysis will fit a three-level (repeated measures for each individual clustered within site) LMM with fixed effects for the intercept, time, group, and a group-by-time interaction term, where group is an indicator of REP + EF/IF vs. REP + EF. The LMM will include random effects for site and time, an unstructured within-person correlation structure for the residual errors, and it will adjust for state and type of practice (primary care or mental health site). LMMs similar to the above will be conducted for the secondary patient-level outcomes: change in number of LG sessions received, functional impairment, and mood symptoms.
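One way to write out the model described above is shown below. The notation is introduced here for illustration and is an assumption: $i$ indexes patients, $j$ sites, and $k$ measurement occasions, and "random effects for site and time" is read as a site-level random intercept plus a site-level random slope for time. The protocol does not give the exact parameterization.

$$
Y_{ijk} = \beta_0 + \beta_1 t_k + \beta_2 G_j + \beta_3 (G_j \times t_k) + \beta_4 S_j + \beta_5 P_j + b_{0j} + b_{1j} t_k + \varepsilon_{ijk}
$$

Here $G_j = 1$ for sites randomized to REP + EF/IF and $0$ for REP + EF, $S_j$ is state, $P_j$ is practice type, $(b_{0j}, b_{1j})^\top \sim N(0, D)$ are the site-level random effects, and the residual vector for each patient, $\varepsilon_{ij} \sim N(0, \Sigma)$, has an unstructured covariance $\Sigma$. Under this reading, $\beta_3$ captures the between-group difference in change over time.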

Secondary aim analyses will be conducted to determine whether continuing REP + EF vs. augmenting with REP + EF/IF leads to changes in outcomes among sites that are non-responsive to REP + EF at month 12. This secondary analysis is a comparison of cells B vs. C (Figure 2). Outcomes will be examined using an LMM similar to that described above, except that it will (a) include only the subset of sites that do not respond at month 12 to REP + EF, (b) use longitudinal outcomes from month 12 to month 24, and (c) use dummy indicators for time (i.e., a time-saturated model, since there are only three measurement times for each longitudinal outcome). The longitudinal course after discontinuing REP + EF (or REP + EF/IF) at month 12 will also be examined among sites that are responsive at month 12.

Other secondary aim analyses include comparison of EF and EF + IF costs over time using a standardized list of REP and EF/IF activities (Table 3), as well as assessment of organizational change over time [96]. Additional exploratory analyses will also compare the different adaptive interventions embedded in the SMART design (Figure 2) in terms of changes in longitudinal outcomes using methods based on Robins and colleagues [97],[98].

Missing values may occur in outcomes due to dropout or inability to reach patients for follow-up (anticipated 20% attrition, i.e., 16 of the approximately 20 patients identified per site retained). The mechanisms underlying missing data will be thoroughly investigated, and missing data will be handled using multiple imputation procedures [99]. In sensitivity analyses, data will be analyzed with and without the multiple imputation strategy. Any discrepancies will be reported and carefully examined.
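The protocol does not state how estimates from the imputed data sets will be combined. A conventional choice is Rubin's rules, which average the point estimates and add the between-imputation variance to the average within-imputation variance. The following is a minimal sketch under that assumption; the function name `pool_rubin` and its inputs are hypothetical and not part of the study's analysis plan.

```python
from math import sqrt
from statistics import mean, variance


def pool_rubin(estimates, variances):
    """Pool one parameter across m imputed data sets using Rubin's rules.

    estimates: point estimates, one per imputed data set
    variances: squared standard errors, one per imputed data set
    Returns (pooled_estimate, pooled_standard_error).
    """
    m = len(estimates)
    if m < 2 or m != len(variances):
        raise ValueError("need at least two imputations and matching lengths")
    pooled = mean(estimates)        # average of the point estimates
    within = mean(variances)        # average within-imputation variance
    between = variance(estimates)   # between-imputation variance (divisor m - 1)
    total = within + (1.0 + 1.0 / m) * between
    return pooled, sqrt(total)


if __name__ == "__main__":
    # Illustrative numbers only, not study data.
    est, se = pool_rubin([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])
    print(f"pooled estimate = {est:.3f}, pooled SE = {se:.3f}")
```

Comparing the pooled result with a complete-case estimate is one simple way to carry out the with-and-without-imputation comparison described above.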

Sample size and power

The estimated sample size for this study is based on a between-group comparison (REP + EF/IF versus REP + EF) of change between month 6 and month 18 in the outcome with the most conservative effect size estimate (mental health-related quality of life scores; Cohen’s d = .23). This is a two-sample comparison of patients within sites in cells A + B + C versus D + E (Figure 2). To account for the between-site variation induced by the within-site correlation in quality of life outcomes, we inflate the variance term in the standard sample size formula by 1 + (n - 1) × ICC, where n is the number of patients per site and ICC is the site intraclass correlation coefficient for the SF-12 mental health-related quality of life component score. Based on our previous randomized controlled implementation trial [40],[43], the site ICC was estimated at .01. Using a two-sided, two-sample t test with a Type I error rate of 5%, 60 sites (30 randomized to REP + EF/IF and 30 to REP + EF), and 16 patients per site after accounting for attrition, we will have 94% power to detect clinically significant changes in quality of life scores, assuming an ICC of .01. Based on previous data, with SD = 8.35 for the MH-QOL component score, this effect size corresponds to being able to detect a clinically meaningful difference of approximately 2 units (.23 × 8.35 ≈ 1.9) in MH-QOL score.
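The variance inflation step can be illustrated with a short calculation. The sketch below applies the design effect 1 + (n - 1) × ICC to obtain an effective sample size per arm and then uses a normal approximation to the two-sample test. The function names are hypothetical, and because the approximation ignores details of the planned t test and longitudinal model, it will not necessarily reproduce the reported power exactly.

```python
from math import sqrt
from statistics import NormalDist


def design_effect(patients_per_site, icc):
    """Variance inflation factor 1 + (n - 1) * ICC for clustered observations."""
    return 1.0 + (patients_per_site - 1.0) * icc


def approx_power(effect_size, sites_per_arm, patients_per_site, icc, alpha=0.05):
    """Normal-approximation power for a two-sided, two-sample comparison."""
    deff = design_effect(patients_per_site, icc)
    # Effective number of independent patients per arm after inflation.
    effective_n = sites_per_arm * patients_per_site / deff
    z_crit = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    noncentrality = effect_size * sqrt(effective_n / 2.0)
    # The small chance of rejecting in the wrong direction is ignored.
    return NormalDist().cdf(noncentrality - z_crit)


if __name__ == "__main__":
    # Inputs taken from the text: d = .23, 30 sites per arm, 16 patients, ICC = .01.
    deff = design_effect(16, 0.01)
    power = approx_power(0.23, 30, 16, 0.01)
    print(f"design effect = {deff:.2f}, approximate power = {power:.3f}")
```

With an ICC this small the design effect is modest (1.15), so the clustered design costs relatively little power compared with an individually randomized trial of the same size.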

Trial status

Sites will be identified and participation confirmed by October 2014. Site training and REP procedures will begin in the fall of 2014. A timeline of implementation activities is provided in Table 3.

Discussion

To date, this will be one of the largest randomized controlled trials to develop a site-level adaptive implementation strategy. It is also one of the first studies to test the augmentation of an established implementation strategy (REP) using REP + EF/IF or REP + EF alone among sites that exhibit non-response after 6 months. The study will determine whether augmentation of the implementation strategy is needed (e.g., REP + EF/IF) or whether, in some circumstances, withholding augmentation may result in a delayed implementation effect of REP + EF alone among non-responsive sites. In addition, this study will use a novel SMART design developed by the study investigators [51] to accomplish this implementation trial. SMARTs allow efficient comparison of the overall impact of different intervention augmentation strategies over time, and of the incremental costs of one intervention strategy over another in improving outcomes among non-responsive sites. This design also makes it possible to determine the added value of implementation strategies applied to real-world treatment settings.

There is a paucity of research on effective implementation strategies that improve EBP uptake and ultimately patient outcomes, notably in smaller, community-based, safety net practices, which serve a substantial proportion of patients with mood disorders. There have been few rigorous trials of implementation strategies to promote the uptake of EBPs in community-based practices [1],[35]. Previous implementation studies have focused on highly organized practices such as the VA or staff-model HMOs and mainly involved intensive implementation strategies that might not be feasible to apply to smaller, lower-resourced practices. Among the frameworks that guide implementation efforts (e.g., [100]), few have been operationalized sufficiently to enable community-based practices to enhance EBP uptake.

The Adaptive Implementation of Effective Programs Trial (ADEPT) is part of a growing cadre of rigorous yet real-world trials that involve site-level randomization of quality improvement strategies to augment the implementation of evidence-based practices. Specifically, informed consent from participants is not required per the recommendation of the IRB because the focus of ADEPT is on the use of implementation strategies at the site level, above and beyond available resources to disseminate effective programs, in order to assist existing providers in adopting an evidence-based practice. Moreover, the delivery of the evidence-based practice to patients is not altered or controlled at the sites by the study team, and patient clinical assessment includes measures that are used across many of the practice settings as part of routine clinical care. These types of studies have high generalizability to real-world settings because they reflect the pragmatic strategies needed to improve healthcare delivery and foster learning health systems [101],[102]. ADEPT evaluates the use of an evidence-based intervention (i.e., life goals) that is superior to usual care from the patient perspective while testing an implementation strategy (facilitation) that is beneficial to providers and practices by improving their ability to adopt such evidence-based practices [102]. Designation as a QI trial does not remove IRB regulatory oversight; instead, it reduces the burden on researchers (costs) and patients (time) of written informed consent and formal survey assessment meetings, which do not reflect real-world care and introduce potential patient selection biases that undermine patient participation in the intervention [101],[102].

REP uses key tactical strategies that can promote effective EBP adoption among community-based providers. However, these providers may face multiple organizational barriers at their sites, including competing demands or lack of experience in garnering leadership buy-in, that are beyond the scope of toolkit dissemination, structured training, or initial performance feedback [10],[15],[16],[103]. Addressing these barriers may require strategic thinking and multilevel organizational alliances [104],[105] that engage providers in supporting and owning the implementation [106]-[108]. While leadership support in EBP adoption is important [109]-[111], involvement and buy-in from frontline providers are also crucial to EBP sustainability [94],[109],[112]-[118]. Hence, REP may need to be augmented to address these organizational barriers to adoption [119],[120] and to ultimately show the value of the EBP through the triple aim (improving patient outcomes and experience while lowering costs) [121].

Our study will determine the added value of augmenting implementation programs through Facilitation on EBP uptake, sustainability, and patient outcomes, ultimately determining the public health impact of implementation strategies for persons with mental disorders. Akin to stepped treatment for patients, adaptive SMART designs provide a tailored and potentially cost-effective approach to augmenting standard implementation programs based on the specific needs of a site. This approach is needed to better understand how to improve EBP uptake and patient mental health outcomes using Facilitation, whereby REP is augmented given early signs of site non-response. While REP might be sufficient for some sites in adopting EBPs, our preliminary studies and prior research [122],[123] suggest that the majority will need additional assistance. Moreover, sites initially not responding to REP (i.e., limited adoption of EBPs) were unlikely to do so in the future. IF is more expensive for sites to implement, as the additional customization and relationship building across individual sites due to variations in culture, climate, and capacity can be time consuming [113]. It is also unclear how long Facilitation is needed to achieve its effect [103],[109],[117]. Adaptive interventions involving REP and augmentation through Facilitation are needed to determine the added value of REP + EF/IF on improved patient outcomes, how long Facilitation needs to be continued to achieve outcomes, and the cost-effectiveness of REP + EF/IF.

While this study has a number of strengths, including the use of a novel implementation study design within community-based practices, there are key limitations that need to be considered. First, there is the potential for self-selection of early-adopter sites, which can limit generalizability. The involvement of sites most willing to participate in studies is inevitable with any implementation design. We have mitigated this potential selection effect by inviting all sites from networks of practices through state associations from across the country. In addition, LG uptake and fidelity measures do not include direct observations of sessions. In order to conduct an implementation trial that reflected real-world considerations, we chose to use indirect methods to measure LG uptake, and cost considerations precluded us from conducting in-person observations. Nonetheless, because the study was considered non-regulated (non-research), there is potential for increased generalizability, especially if patient dropout is minimized because patients will be assessed as part of routine clinical care. In addition, there is a chance for contamination between the EF and IF implementation strategies, especially if a less engaged IF prompts the EF to become more active in assisting the LG provider in identifying barriers and facilitators to program uptake. Finally, not all sites from each state participated, and those that are enrolled may not be representative of community-based practices nationwide.

Conclusions

The results of this study will inform the implementation of evidence-based practices across sites in two states, as well as how to conduct practical implementation studies in real-world healthcare settings. This study also sets the stage for determining the added value of more intensive implementation strategies within sites that need additional support to promote the uptake of EBPs. Ultimately, adaptive implementation strategies may produce more relevant, rapid, and generalizable results by more quickly validating or rejecting new implementation strategies, thus enhancing the efficiency and sustainability of implementation research and potentially leading to the rollout of more cost-efficient implementation strategies.

Authors’ contributions

AMK provided funding for the study, developed the implementation methods, and drafted the manuscript. DA, DE, MSB, and JCF provided input on the study design and feedback on paper drafts. DEG, MRT, JEK, LIS, and JW provided literature and relevant background on the implementation model, as well as critically reviewed subsequent drafts. MSB, LIS, DE, and JK provided input on the adaptation of the clinical program and outcomes assessment design. KMN, JEK, JK, SAM, and JW operationalized the adaptive interventions and reviewed paper drafts. KMN, DM, JF, SM, and JK reviewed the study drafts and provided components of the clinical intervention. All authors read and approved the final manuscript.

References

Proctor E, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B: Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Health. 2009, 36: 24-34.

Murray CJ, Lopez AD: Global mortality, disability, and the contribution of risk factors: Global Burden of Disease Study. Lancet. 1997, 349: 1436-1442.

The Impact of Mental Illness on Society, NIH Report No. 01 4586. 2001, National Institutes of Health, Bethesda

Yatham LN, Kennedy SH, Schaffer A, Parikh SV, Beaulieu S, O'Donovan C, MacQueen G, McIntyre RS, Sharma V, Ravindran A, Young LT, Young AH, Alda M, Milev R, Vieta E, Calabrese JR, Berk M, Ha K, Kapczinski F: Canadian Network for Mood and Anxiety Treatments (CANMAT) and International Society for Bipolar Disorders (ISBD) collaborative update of CANMAT guidelines for the management of patients with bipolar disorder: update 2009. Bipolar Disord. 2009, 11: 225-255.

Bauer MS, Callahan AM, Jampala C, Petty F, Sajatovic M, Schaefer V, Wittlin B, Powell BJ: Clinical practice guidelines for bipolar disorder from the Department of Veterans Affairs. J Clin Psychiatry. 1999, 60: 9-21.

Practice Guideline for the Treatment of Patients with Major Depressive Disorder. 2010, American Psychiatric Association (APA), Arlington

Practice guideline for the treatment of patients with bipolar disorder (revision). Am J Psychiatry. 2002, 159: 1-50.

Roy-Byrne PP, Sherbourne CD, Craske MG, Stein MB, Katon W, Sullivan G, Means-Christensen A, Bystritsky A: Moving treatment research from clinical trials to the real world. Psychiatr Serv. 2003, 54: 327-332.

Wells KB, Miranda J, Gonzalez JJ: Overcoming barriers and creating opportunities to reduce burden of affective disorders: a new research agenda. Ment Health Serv Res. 2002, 4: 175-178.

Horvitz-Lennon M, Kilbourne AM, Pincus HA: From silos to bridges: meeting the general health care needs of adults with severe mental illnesses. Health Aff (Millwood). 2006, 25: 659-669.

Kessler RC, Berglund P, Demler O, Jin R, Koretz D, Merikangas KR, Rush AJ, Walters EE, Wang PS: The epidemiology of major depressive disorder: results from the National Comorbidity Survey Replication (NCS-R). JAMA. 2003, 289: 3095-3105.

Kilbourne AM, Schulberg HC, Post EP, Rollman BL, Belnap BH, Pincus HA: Translating evidence-based depression management services to community-based primary care practices. Milbank Q. 2004, 82: 631-659.

Sullivan G, Duan N, Kirchner J, Henderson KL: Reinventing evidence-based interventions?. Psychiatr Serv. 2005, 56: 1156-1157.

Wells K, Miranda J, Bruce ML, Alegria M, Wallerstein N: Bridging community intervention and mental health services research. Am J Psychiatry. 2004, 161: 955-963.

Hovmand PS, Gillespie DF: Implementation of evidence-based practice and organizational performance. J Behav Health Serv Res. 2010, 37: 79-94.

Swain K, Whitley R, McHugo GJ, Drake RE: The sustainability of evidence-based practices in routine mental health agencies. Community Ment Health J. 2010, 46: 119-129.

Steckler A, Goodman RM, Kegler MC: Mobilizing organizations for health enhancement: theories of organizational change. Health Behavior and Health Education: Theory, Research and Practice. Edited by: Glanz K, Rimer BK, Lewis FM. 2002, Jossey-Bass, San Francisco, 335-360. 3rd edition

Glisson C, Green P: The effects of organizational culture and climate on the access to mental health care in child welfare and juvenile justice systems. Adm Policy Ment Health. 2006, 33: 433-448.

Aarons GA, Sawitzky AC: Organizational climate partially mediates the effect of culture on work attitudes and staff turnover in mental health services. Adm Policy Ment Health. 2006, 33: 289-301.

Glisson C: The organizational context of children's mental health services. Clin Child Fam Psych. 2002, 5: 233-253.

Glisson C, James LR: The cross-level effects of culture and climate in human services teams. J Organ Behav. 2002, 23: 767-794.

Kilbourne AM, Greenwald DE, Hermann RC, Charns MP, McCarthy JF, Yano EM: Financial incentives and accountability for integrated medical care in Department of Veterans Affairs mental health programs. Psychiatr Serv. 2010, 61: 38-44.

Schutte K, Yano EM, Kilbourne AM, Wickrama B, Kirchner JE, Humphreys K: Organizational contexts of primary care approaches for managing problem drinking. J Subst Abuse Treat. 2009, 36: 435-445.

Kilbourne AM, Pincus HA, Schutte K, Kirchner JE, Haas GL, Yano EM: Management of mental disorders in VA primary care practices. Adm Policy Ment Health. 2006, 33: 208-214.

Insel TR: Translating scientific opportunity into public health impact: a strategic plan for research on mental illness. Arch Gen Psychiatry. 2009, 66: 128-133.

Crossing the Quality Chasm: A New Health System for the 21st Century. 2003, National Academy Press, Washington, D.C

Fisher ES, McClellan MB, Bertko J, Lieberman SM, Lee JJ, Lewis JL, Skinner JS: Fostering accountable health care: moving forward in medicare. Health Aff (Millwood). 2009, 28: w219-w231.

Fisher ES: Building a medical neighborhood for the medical home. N Engl J Med. 2008, 359: 1202-1205.

McClellan M, McKethan AN, Lewis JL, Roski J, Fisher ES: A national strategy to put accountable care into practice. Health Aff (Millwood). 2010, 29: 982-990.

Bauer MS, Leader D, Un H, Lai Z, Kilbourne AM: Primary care and behavioral health practice size: the challenge for health care reform. Med Care. 2012, 50: 843-848.

Berwick DM: Disseminating innovations in health care. JAMA. 2003, 289: 1969-1975.

Duan N, Braslow JT, Weisz JR, Wells KB: Fidelity, adherence, and robustness of interventions. Psychiatr Serv. 2001, 52: 413-

Cohen D, McDaniel RR, Crabtree BF, Ruhe MC, Weyer SM, Tallia A, Miller WL, Goodwin MA, Nutting P, Solberg LI, Zyzanski SJ, Jaen CR, Gilchrist V, Stange KC: A practice change model for quality improvement in primary care practice. J Healthc Manag. 2004, 49: 155-168.

Sales A, Smith J, Curran G, Kochevar L: Models, strategies, and tools: theory in implementing evidence-based findings into health care practice. J Gen Intern Med. 2006, 21 (Suppl 2): S43-S49.

Kilbourne AM, Neumann MS, Pincus HA, Bauer MS, Stall R: Implementing evidence-based interventions in health care: application of the replicating effective programs framework. Implement Sci. 2007, 2: 42-

Rogers E: Diffusion of Innovations. 2003, Free Press, New York

Green LW, Kreuter MW: Health Promotion Planning: An Educational and Environmental Approach. 1991, Mayfield Publishing Co., Mountain View

Tax S: The Fox Project. Hum Organ. 1958, 17: 17-19.

Bandura A: Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev. 1977, 84: 191-215.

Kilbourne AM, Abraham KM, Goodrich DE, Bowersox NW, Almirall D, Lai Z, Nord KM: Cluster randomized adaptive implementation trial comparing a standard versus enhanced implementation intervention to improve uptake of an effective re-engagement program for patients with serious mental illness. Implement Sci. 2013, 8: 136-

Kirchner JE, Kearney LK, Ritchie MJ, Dollar KM, Swensen AB, Schohn M: Research & services partnerships: lessons learned through a national partnership between clinical leaders and researchers. Psychiatr Serv. 2014, 65 (5): 577-79.

Kilbourne AM, Goodrich DE, Lai Z, Almirall D, Nord KM, Bowersox NW, Abraham KA: Re-engaging veterans with serious mental illness into care: preliminary results from a national randomized trial of an enhanced versus standard implementation strategy. Psychiatr Serv. 2014, in press.

Waxmonsky J, Kilbourne AM, Goodrich DE, Nord KM, Lai Z, Laird C, Clogston J, Kim HM, Miller C, Bauer MS: Enhanced fidelity to treatment for bipolar disorder: results from a randomized controlled implementation trial. Psychiatr Serv. 2014, 65 (1): 81-90.

Kilbourne AM, Goodrich DE, Nord KM, VanPoppelen C, Clogston J, Bauer MS, Waxmonsky JA, Lai Z, Kim HM, Eisenberg D, Thomas MR: Long-term clinical outcomes from a randomized controlled trial of two implementation strategies to promote collaborative care attendance in community practices. Adm Policy Ment Health. In press.

Kilbourne AM, Post EP, Nossek A, Drill L, Cooley S, Bauer MS: Improving medical and psychiatric outcomes among individuals with bipolar disorder: a randomized controlled trial. Psychiatr Serv. 2008, 59: 760-768.

Simon GE, Ludman EJ, Bauer MS, Unutzer J, Operskalski B: Long-term effectiveness and cost of a systematic care program for bipolar disorder. Arch Gen Psychiatry. 2006, 63: 500-508.

Kilbourne AM, Goodrich DE, Lai Z, Clogston J, Waxmonsky J, Bauer MS: Life Goals Collaborative Care for patients with bipolar disorder and cardiovascular disease risk. Psychiatr Serv. 2012, 63: 1234-1238.

Kilbourne AM, Li D, Lai Z, Waxmonsky J, Ketter T: Pilot randomized trial of a cross-diagnosis collaborative care program for patients with mood disorders. Depress Anxiety. 2013, 30: 116-122.

Bauer MS, McBride L, Williford WO, Glick H, Kinosian B, Altshuler L, Beresford T, Kilbourne AM, Sajatovic M: Collaborative care for bipolar disorder: part II. Impact on clinical outcome, function, and costs. Psychiatr Serv. 2006, 57: 937-945.

Lei H, Nahum-Shani I, Lynch K, Oslin D, Murphy SA: A “SMART” design for building individualized treatment sequences. Annu Rev Clin Psychol. 2012, 8: 21-48.

Almirall D, Compton SN, Gunlicks-Stoessel M, Duan N, Murphy SA: Designing a pilot sequential multiple assignment randomized trial for developing an adaptive treatment strategy. Stat Med. 2012, 31: 1887-1902.

Kilbourne AM, Goodrich DE, Lai Z, Post EP, Schumacher K, Nord KM, Bramlet M, Chermack S, Bialy D, Bauer MS: Randomized controlled trial to assess reduction of cardiovascular disease risk in patients with bipolar disorder: the Self-Management Addressing Heart Risk Trial (SMAHRT). J Clin Psychiatry. 2013, 74: e655-e662.

Bauer AM, Azzone V, Goldman HH, Alexander L, Unutzer J, Coleman-Beattie B, Frank RG: Implementation of collaborative depression management at community-based primary care clinics: an evaluation. Psychiatr Serv. 2011, 62: 1047-1053.

Scott NW, McPherson GC, Ramsay CR, Campbell MK: The method of minimization for allocation to clinical trials. A review. Control Clin Trials. 2002, 23: 662-674.

Taves DR: Minimization: a new method of assigning patients to treatment and control groups. Clin Pharmacol Ther. 1974, 15: 443-453.

Kilbourne AM, Post EP, Nossek A, Sonel E, Drill LJ, Cooley S, Bauer MS: Service delivery in older patients with bipolar disorder: a review and development of a medical care model. Bipolar Disord. 2008, 10: 672-683.

Bandura A: Social Foundations of Thought and Action: A Social Cognitive Theory. 1986, Englewood Cliffs, Prentice Hall

Bandura A: The primacy of self-regulation in health promotion. Appl Psychol Int Rev. 2005, 54 (2): 245-254.

Kessler RC, Wang PS: The descriptive epidemiology of commonly occurring mental disorders in the United States. Annu Rev Public Health. 2008, 29: 115-129.

Judd LL, Akiskal HS: The prevalence and disability of bipolar spectrum disorders in the US population: re-analysis of the ECA database taking into account subthreshold cases. J Affect Disord. 2003, 73: 123-131.

Kilbourne AM, Biswas K, Pirraglia PA, Sajatovic M, Williford WO, Bauer MS: Is the collaborative chronic care model effective for patients with bipolar disorder and co-occurring conditions?. J Affect Disord. 2009, 112: 256-261.

Kilbourne AM, Neumann MS, Waxmonsky J, Bauer MS, Kim HM, Pincus HA, Thomas M: Public-academic partnerships: evidence-based implementation: the role of sustained community-based practice and research partnerships. Psychiatr Serv. 2012, 63: 205-207.

Neumann MS, Sogolow ED: Replicating effective programs: HIV/AIDS prevention technology transfer. AIDS Educ Prev. 2000, 12: 35-48.

Adams J, Terry MA, Rebchook GM, O'Donnell L, Kelly JA, Leonard NR, Neumann MS: Orientation and training: preparing agency administrators and staff to replicate an HIV prevention intervention. AIDS Educ Prev. 2000, 12: 75-86.

O'Donnell L, Scattergood P, Adler M, Doval AS, Barker M, Kelly JA, Kegeles SM, Rebchook GM, Adams J, Terry MA, Neumann MS: The role of technical assistance in the replication of effective HIV interventions. AIDS Educ Prev. 2000, 12: 99-111.

Bandura A: Social Learning Theory. 1977, Englewood Cliffs, Prentice Hall

Bauer MS, McBride L: Structured Group Psychotherapy for Bipolar Disorder: The Life Goals Program. 2003, Springer, New York

Bauer MS, McBride L, Williford WO, Glick H, Kinosian B, Altshuler L, Beresford T, Kilbourne AM, Sajatovic M: Collaborative care for bipolar disorder: part I. Intervention and implementation in a randomized effectiveness trial. Psychiatr Serv. 2006, 57: 927-936.

Simon GE, Ludman E, Unutzer J, Bauer MS: Design and implementation of a randomized trial evaluating systematic care for bipolar disorder. Bipolar Disord. 2002, 4: 226-236.

Stetler CB, Damschroder LJ, Helfrich CD, Hagedorn HJ: A guide for applying a revised version of the PARIHS framework for implementation. Implement Sci. 2011, 6: 99-

Kitson AL, Rycroft-Malone J, Harvey G, McCormack B, Seers K, Titchen A: Evaluating the successful implementation of evidence into practice using the PARiHS framework: theoretical and practical challenges. Implement Sci. 2008, 3: 1-

Kitson A, Harvey G, McCormack B: Enabling the implementation of evidence based practice: a conceptual framework. Qual Health Care. 1998, 7: 149-158.

Harvey G, Loftus-Hills A, Rycroft-Malone J, Titchen A, Kitson A, McCormack B, Seers K: Getting evidence into practice: the role and function of facilitation. J Adv Nurs. 2002, 37: 577-588.

Stetler CB, Legro MW, Rycroft-Malone J, Bowman C, Curran G, Guihan M, Hagedorn H, Pineros S, Wallace CM: Role of “external facilitation” in implementation of research findings: a qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implement Sci. 2006, 1: 23-

Glasgow RE, Vogt TM, Boles SM: Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999, 89: 1322-1327.

Fortney J, Enderle M, McDougall S, Clothier J, Otero J, Altman L, Curran G: Implementation outcomes of evidence-based quality improvement for depression in VA community based outpatient clinics. Implement Sci. 2012, 7: 30-

Fenn HH, Bauer MS, Altshuler L, Evans DR, Williford WO, Kilbourne AM, Beresford TP, Kirk G, Stedman M, Fiore L: Medical comorbidity and health-related quality of life in bipolar disorder across the adult age span. J Affect Disord. 2005, 86: 47-60.

Kilbourne AM, Post EP, Bauer MS, Zeber JE, Copeland LA, Good CB, Pincus HA: Therapeutic drug and cardiovascular disease risk monitoring in patients with bipolar disorder. J Affect Disord. 2007, 102: 145-151.

Kilbourne AM, Farmer Teh C, Welsh D, Pincus HA, Lasky E, Perron B, Bauer MS: Implementing composite quality metrics for bipolar disorder: towards a more comprehensive approach to quality measurement. Gen Hosp Psychiatr. 2010, 32: 636-643.

Ware J, Kosinski M, Keller SD: A 12-item short-form health survey: construction of scales and preliminary tests of reliability and validity. Med Care. 1996, 34: 220-233.

Kroenke K, Spitzer RL, Williams JB: The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001, 16: 606-613.

Spitzer RL, Kroenke K, Williams JB: Validation and utility of a self-report version of PRIME-MD: the PHQ primary care study. Primary Care Evaluation of Mental Disorders. Patient Health Questionnaire. JAMA. 1999, 282: 1737-1744.

Ustun TB, Chisholm D: Global “burden of disease”-study for psychiatric disorders. Psychiatr Prax. 2001, 28 (Suppl 1): S7-S11.

Rehm J, Ustun TB, Saxena S, Nelson CB, Chatterji S, Ivis F, Adlaf E: On the development and psychometric testing of the WHO screening instrument to assess disablement in the general population. Int J Methods Psychiatr Res. 1999, 8: 110-122.

Spitzer RL, Kroenke K, Williams JB, Lowe B: A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med. 2006, 166: 1092-1097.

Bauer MS, Vojta C, Kinosian B, Altshuler L, Glick H: The Internal State Scale: replication of its discriminating abilities in a multisite, public sector sample. Bipolar Disord. 2000, 2: 340-346.

Glick HA, McBride L, Bauer MS: A manic-depressive symptom self-report in optical scanable format. Bipolar Disord. 2003, 5: 366-369.

Gordon AJ, Maisto SA, McNeil M, Kraemer KL, Conigliaro RL, Kelley ME, Conigliaro J: Three questions can detect hazardous drinkers. J Fam Pract. 2001, 50: 313-320.

Kessler RC, Andrews G, Mroczek D, Ustun TB, Wittchen HU: The World Health Organization Composite International Diagnostic Interview short-form (CIDI-SF). Int J Methods Psychiatr Res. 1998, 7: 171-185.

Chyba MM, Washington LR: Questionnaires from the National Health Interview Survey, 1985-89. Vital Health Stat Ser. 1993, 1: 1-412.

Kilbourne AM, Good CB, Sereika SM, Justice AC, Fine MJ: Algorithm for assessing patients' adherence to oral hypoglycemic medication. Am J Health Syst Pharm. 2005, 62: 198-204.

Post EP, Kilbourne AM, Bremer RW, Solano FX, Pincus HA, Reynolds CF: Organizational factors and depression management in community-based primary care settings. Implement Sci. 2009, 4: 84-

Aarons GA, Ehrhart MG, Farahnak LR: The Implementation Leadership Scale (ILS): development of a brief measure of unit level implementation leadership. Implement Sci. 2014, 9: 45-

Goodrich DE, Bowersox NW, Abraham KM, Burk JP, Visnic S, Lai Z, Kilbourne AM: Leading from the middle: replication of a re-engagement program for veterans with mental disorders lost to follow-up care. Depress Res Treat. 2012, 2012: 325249-

Verbeke G, Molenberghs G: Linear Mixed Models for Longitudinal Data. 2009, Springer, New York

Mackinnon DP, Fairchild AJ: Current directions in mediation analysis. Curr Dir Psychol Sci. 2009, 18: 16-

Orellana L, Rotnitzky A, Robins JM: Dynamic regime marginal structural mean models for estimation of optimal dynamic treatment regimes, Part II: proofs of results. Int J Biostat. 2010, 6: Article 9-

Robins JM: Causal models for estimating the effects of weight gain on mortality. Int J Obes (Lond). 2008, 32 (Suppl 3): S15-S41.

Schafer JL: Analysis of Incomplete Multivariate Data. 1997, Chapman and Hall, London

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC: Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009, 4: 50-

Fu SS, van Ryn M, Sherman SE, Burgess DJ, Noorbaloochi S, Clothier B, Taylor BC, Schlede CM, Burke RS, Joseph AM: Proactive tobacco treatment and population-level cessation: a pragmatic randomized clinical trial. JAMA Intern Med. 2014, 174: 671-677.

Pletcher MJ, Lo B, Grady D: Informed consent in randomized quality improvement trials: a critical barrier for learning health systems. JAMA Intern Med. 2014, 174: 668-670.

Mendel P, Meredith LS, Schoenbaum M, Sherbourne CD, Wells KB: Interventions in organizational and community context: a framework for building evidence on dissemination and implementation in health services research. Adm Policy Ment Health. 2008, 35: 21-37.

Pincus HA, Hough L, Houtsinger JK, Rollman BL, Frank RG: Emerging models of depression care: multi-level ('6 P') strategies. Int J Methods Psychiatr Res. 2003, 12: 54-63.

Frank RG, Huskamp HA, Pincus HA: Aligning incentives in the treatment of depression in primary care with evidence-based practice. Psychiatr Serv. 2003, 54: 682-687.

Ryan AM, Blustein J, Casalino LP: Medicare's flagship test of pay-for-performance did not spur more rapid quality improvement among low-performing hospitals. Health Aff (Millwood). 2012, 31: 797-805.

Werner RM, Kolstad JT, Stuart EA, Polsky D: The effect of pay-for-performance in hospitals: lessons for quality improvement. Health Aff (Millwood). 2011, 30: 690-698.

Hillman AL, Ripley K, Goldfarb N, Weiner J, Nuamah I, Lusk E: The use of physician financial incentives and feedback to improve pediatric preventive care in Medicaid managed care. Pediatrics. 1999, 104: 931-935.

Parker LE, Kirchner JE, Bonner LM, Fickel JJ, Ritchie MJ, Simons CE, Yano EM: Creating a quality-improvement dialogue: utilizing knowledge from frontline staff, managers, and experts to foster health care quality improvement. Qual Health Res. 2009, 19: 229-242.

Rubenstein LV, Parker LE, Meredith LS, Altschuler A, DePillis E, Hernandez J, Gordon NP: Understanding team-based quality improvement for depression in primary care. Health Serv Res. 2002, 37: 1009-1029.

Glasgow RE, Emmons KM: How can we increase translation of research into practice? Types of evidence needed. Annu Rev Public Health. 2007, 28: 413-433.

Birken SA, Lee SY, Weiner BJ: Uncovering middle managers' role in healthcare innovation implementation. Implement Sci. 2012, 7: 28.

Parker LE, de Pillis E, Altschuler A, Rubenstein LV, Meredith LS: Balancing participation and expertise: a comparison of locally and centrally managed health care quality improvement within primary care practices. Qual Health Res. 2007, 17: 1268-1279.

Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O: Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004, 82: 581-629.

Ginsburg LR, Lewis S, Zackheim L, Casebeer A: Revisiting interaction in knowledge translation. Implement Sci. 2007, 2: 34.

Stange KC, Goodwin MA, Zyzanski SJ, Dietrich AJ: Sustainability of a practice-individualized preventive service delivery intervention. Am J Prev Med. 2003, 25: 296-300.

Rubenstein LV, Mittman BS, Yano EM, Mulrow CD: From understanding health care provider behavior to improving health care: the QUERI framework for quality improvement. Quality Enhancement Research Initiative. Med Care. 2000, 38: I129-I141.

Hulscher ME, Wensing M, Grol RP, van der Weijden T, van Weel C: Interventions to improve the delivery of preventive services in primary care. Am J Public Health. 1999, 89: 737-746.

Goldberg HI, Wagner EH, Fihn SD, Martin DP, Horowitz CR, Christensen DB, Cheadle AD, Diehr P, Simon G: A randomized controlled trial of CQI teams and academic detailing: can they alter compliance with guidelines? Jt Comm J Qual Improv. 1998, 24: 130-142.

Solberg LI, Fischer LR, Wei F, Rush WA, Conboy KS, Davis TF, Heinrich RL: A CQI intervention to change the care of depression: a controlled study. Eff Clin Pract. 2001, 4: 239-249.

Berwick DM, Nolan TW, Whittington J: The triple aim: care, health, and cost. Health Aff (Millwood). 2008, 27: 759-769.

Kelly JA, Heckman TG, Stevenson LY, Williams PN, Ertl T, Hays RB, Leonard NR, O'Donnell L, Terry MA, Sogolow ED, Neumann MS: Transfer of research-based HIV prevention interventions to community service providers: fidelity and adaptation. AIDS Educ Prev. 2000, 12: 87-98.

Kelly JA, Somlai AM, DiFranceisco WJ, Otto-Salaj LL, McAuliffe TL, Hackl KL, Heckman TG, Holtgrave DR, Rompa D: Bridging the gap between the science and service of HIV prevention: transferring effective research-based HIV prevention interventions to community AIDS service providers. Am J Public Health. 2000, 90: 1082-1088.

Acknowledgements

This research was supported by the National Institute of Mental Health (R01 099898). The views expressed in this article are those of the authors and do not necessarily represent the views of the VA.

Competing interests

The authors declare that they have no competing interests.

About this article

Cite this article

Kilbourne, A.M., Almirall, D., Eisenberg, D. et al. Protocol: Adaptive Implementation of Effective Programs Trial (ADEPT): cluster randomized SMART trial comparing a standard versus enhanced implementation strategy to improve outcomes of a mood disorders program. Implementation Sci 9, 132 (2014). https://doi.org/10.1186/s13012-014-0132-x

DOI: https://doi.org/10.1186/s13012-014-0132-x