Abstract

Background

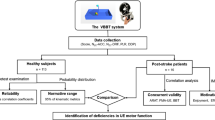

Measuring arm and hand function of the affected side is vital in stroke rehabilitation. Therefore, the Virtual Peg Insertion Test (VPIT), an assessment combining virtual reality and haptic feedback during a goal-oriented task derived from the Nine Hole Peg Test (NHPT), was developed. This study aimed to evaluate (1) the concurrent validity of key outcome measures of the VPIT, namely the execution time and the number of dropped pegs, with the NHPT and Box and Block Test (BBT), and (2) the test-retest reliability of these parameters together with the VPIT’s additional kinetic and kinematic parameters in patients with chronic stroke.

Methods

The three tests were administered to 31 patients with chronic stroke in one session (concurrent validity), and the VPIT was retested in a second session 3–7 days later (test-retest reliability). Spearman rank correlation coefficients (ρ) were calculated to assess concurrent validity, and intraclass correlation coefficients (ICCs) were used to determine relative reliability. Bland-Altman plots were drawn and the smallest detectable difference (SDD) was calculated to examine absolute reliability.

Results

Of the 31 included patients, 11 were able to perform the VPIT solely with their affected arm, whereas 20 patients also had to use support from their unaffected arm. For n = 31, the VPIT showed low correlations with the NHPT (ρ = 0.31 for time (Tex[s]); ρ = 0.21 for number of dropped pegs (Ndp)) and BBT (ρ = −0.23 for number of transported cubes (Ntc); ρ = −0.12 for number of dropped cubes (Ndc)). The test-retest reliability for the parameters Tex[s], mean grasping force (Fggo[N]), number of zero-crossings (Nzc[1/s]go/return) and mean collision force (Fcmean[N]) was good to high, with ICCs ranging from 0.83 to 0.94. Fair reliability was found for Fgreturn (ICC = 0.75) and trajectory error (Etrajgo[cm]) (ICC = 0.70). Poor reliability was measured for Etrajreturn[cm] (ICC = 0.67) and Ndp (ICC = 0.58). The SDDs were: Tex = 70.2 s; Ndp = 0.4 pegs; Fggo/return = 3.5/1.2 Newton; Nzc[1/s]go/return = 0.2/1.8 zero-crossings; Etrajgo/return = 0.5/0.8 cm; Fcmean = 0.7 Newton.

Conclusions

The VPIT is a promising upper limb function assessment for patients with stroke requiring other components of upper limb motor performance than the NHPT and BBT. The high intra-subject variation indicated that it is a demanding test for this stroke sample, which necessitates a thorough introduction to this assessment. Once familiar, the VPIT provides more objective and comprehensive measurements of upper limb function than conventional, non-computerized hand assessments.

Background

Upper limb function relies on the delicate interaction between hand and brain, defining our ability to perform activities of daily living (ADL) [1]. Brain injury caused by cerebrovascular accident may result in reduced cerebral hand representation over time [1]. The consequences for patients after a stroke are tremendous and ADL become challenging. However, building on brain plasticity and intensive training, hand representation in the brain can be increased again [2]. Novel upper limb training modalities include the use of virtual reality (VR), robotics and computer gaming, all of which can provide ecologically valid, intensive and task-specific training [3]. It is essential to regularly measure motor function in order to document changes in upper limb performance during the course of rehabilitation. Such regular evaluation also informs adaptation of therapy settings as needs arise. To date, there are several assessments measuring upper limb motor function, such as the Box and Block Test (BBT) [4, 5] and the Nine Hole Peg Test (NHPT) [6, 7], which measure gross and fine manual dexterity, respectively. Additionally, the use of VR and robotic devices is not only an effective alternative to conventional therapy [8, 9], but can also serve for precise and objective concurrent assessment of attributes such as motor function, cognition and ADL [10–16].

A promising assessment tool for measuring upper limb function is the Virtual Peg Insertion Test (VPIT), a computer-assisted assessment. The task is that of the NHPT, but since there is no precision grip required, the movement has more similarities to the BBT [17]. The VPIT allows measurement of three-dimensional hand position and orientation as well as grasp force during the accomplishment of a goal-oriented task consisting of grasping, transporting and inserting nine virtual pegs into the nine holes of a virtual pegboard. This is achieved by positioning a handle, which is instrumented with force sensors and mounted on a PHANTOM Omni haptic device (Geomagic, USA), and by controlling the grasping force applied to it. The clinical practicability and measurement properties of the VPIT were tested in patients with Multiple Sclerosis (MS) [18] and pilot-tested in patients with Autosomal Recessive Spastic Ataxia of Charlevoix-Saguenay (ARSACS) [19]. Both patient groups were significantly less coordinated and slower than age-matched healthy subjects when evaluated using the VPIT. The preliminary results of both studies illustrate the feasibility of using the VPIT in both MS and ARSACS patients, and underline the potential of this test to evaluate upper limb motor function. To date, there is only one pilot study reporting initial evaluation of the VPIT outcome measures in a group of four patients with chronic stroke, showing significant differences in grasping force control and upper limb movement patterns compared to healthy subjects [17]. However, the relation between the analyzed performance parameters during the VPIT and impaired function needs to be further established. A way to achieve this would be to evaluate the validity and reliability of the VPIT parameters for this population.
As the VPIT combines the characteristics of the conventional NHPT and the BBT, one option could be to validate their mutual parameters, namely the execution time (Tex[s]) and the number of dropped pegs (Ndp). As the VPIT provides in total nine different outcome measures including kinematic and kinetic parameters quantifying movement coordination, smoothness, upper limb synergies and force control, we decided to evaluate all of these for their reliability when administered twice in a test and retest procedure. Therefore, this study aimed to evaluate (1) the concurrent validity of key outcome measures of the VPIT, namely the execution time and the number of dropped pegs, with the NHPT and BBT, and (2) the test-retest reliability of these parameters together with the VPIT’s additional kinetic and kinematic parameters, in patients with chronic stroke.

Methods

Apparatus

In the VPIT, pegs and holes are displayed in a virtual environment and can be felt through a haptic interface. Subjects are asked to move the handle of the haptic device to grasp, displace and release the pegs (Fig. 1). The aim is to grasp, transport and place all pegs in the holes as fast as possible. To evaluate upper limb function during the execution of the task, the VPIT software records and computes nine specific parameters. These are (Fig. 1):

VPIT setup and upper limb function parameters. Tex[s]: execution time in seconds; Ndp: number of dropped pegs during transport. Kinematic parameters: Etraj[cm]go/return: trajectory error; Nzc[1/s]go/return: number of zero-crossings of the acceleration. Kinetic parameters: Fg[N]go/return: mean grasping force; Fcmean[N]: mean collision force

The two primary outcome parameters evaluating the overall arm and hand functional ability of the patient are: (1) Tex[s]: time to execute the task from the approach to the first peg to the insertion of the last peg. (2) Ndp: number of times a peg is dropped during the transport. Secondary outcome parameters pertain to four kinematic and three kinetic parameters.

The four kinematic parameters are: (3–4) Etraj[cm]go/return: trajectory error measured as the distance between the actual trajectory and the ideal straightest trajectory projected on the horizontal plane. This parameter is used to evaluate movement accuracy and upper limb motor synergies during a movement requiring the simultaneous control of shoulder, elbow and hand [20–22]. (5–6) Nzc[1/s]go/return: the time-normalized number of zero-crossings (i.e., change of sign) of the acceleration during the gross movement from a peg to a hole, or from a hole to a peg. This parameter provides an estimate of the number of sub-movements a point-to-point movement is composed of, which is a commonly accepted measure of movement smoothness and upper limb coordination [19, 23, 24].
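As a rough illustration of the two kinematic definitions above, the following Python sketch counts time-normalized sign changes of a sampled acceleration signal and computes a trajectory error in the horizontal plane. It is not the VPIT software's implementation: the function names, the use of a one-dimensional acceleration signal, the fixed sampling interval `dt` and the choice of the mean perpendicular distance as the aggregate error are all assumptions made for this example.

```python
import math


def zero_crossings_per_second(acceleration, dt):
    """Time-normalized number of sign changes in a sampled 1-D acceleration.

    Assumption: samples are equally spaced by dt seconds; exact zeros are skipped.
    """
    nonzero = [a for a in acceleration if a != 0]
    crossings = sum(
        1 for prev, cur in zip(nonzero, nonzero[1:]) if (prev > 0) != (cur > 0)
    )
    duration = (len(acceleration) - 1) * dt
    return crossings / duration


def mean_trajectory_error(points, start, end):
    """Mean perpendicular distance of horizontal-plane points to the line start-end.

    Assumption: the "ideal straightest trajectory" is the straight line from
    start to end, and the error is averaged over all sampled points.
    """
    (x0, y0), (x1, y1) = start, end
    length = math.hypot(x1 - x0, y1 - y0)
    distances = [
        abs((x1 - x0) * (y0 - py) - (x0 - px) * (y1 - y0)) / length
        for px, py in points
    ]
    return sum(distances) / len(distances)


# Hypothetical samples: an oscillating acceleration and a path offset by 1 cm.
acc = [1.0, -1.0, 1.0, -1.0, 1.0]
path = [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
print(zero_crossings_per_second(acc, dt=0.5))
print(mean_trajectory_error(path, start=(0.0, 0.0), end=(2.0, 0.0)))
```

A higher zero-crossing rate would correspond to a movement composed of more sub-movements, i.e., a less smooth movement.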

The three kinetic parameters are: (7–8) Fg[N]go/return: average grasping force calculated during the transport of one peg and for the return trajectory. This parameter evaluates factors such as force control. (9) Fcmean[N]: mean collision force exerted against the virtual pegboard.

The “go” refers to the trajectory during the outbound trip when a peg is transported to the hole, and the “return” stands for the way back from the hole to approach a new peg. While performing the VPIT, the patient additionally receives visual feedback regarding the force applied on the peg being held: the cursor is yellow when no peg is held, turns orange to indicate that it is properly aligned with a peg, green when a peg is currently held and red when excessive grasping force is applied to the handle but no peg is held. Parameters are computed offline and no feedback related to the user’s performance is displayed during or after the test. For a more detailed description of the apparatus, we refer to Fluet et al. (2011) [17].

Conventional tests

The NHPT version produced by Smith & Nephew Rehabilitation, Inc. was used. It consists of a plastic board with a shallow round dish to contain the pegs on one end of the board and nine holes in a 3x3 grid on the opposite end. Initially, the participant had to use their affected hand to grasp, one by one, the nine pegs from the dish, inserting each one into a hole until all pegs were placed. The participant then had to return each of the pegs to the dish. All of this was carried out as fast as possible [7]. The test was timed with a stopwatch from the moment the participant touched the first peg until the moment when all pegs were removed from the holes. As the VPIT stops when all pegs are placed into the holes, we measured an intermediate time when all pegs were placed into the holes of the NHPT board. We labelled this parameter “time point 1” (TP1). All dropped pegs were counted and noted on the case report form (CRF).

The BBT consists of 50 wooden blocks (cubes of 2.54 cm on each side) placed in a wooden box that has 2 equal-sized compartments that are separated by a central wooden partition of 15.2 cm height [5]. The participant was instructed to use their affected hand to move blocks, one by one, from one compartment to the other, evaluating the maximum number which could be transferred within 1 min. The transported blocks were counted during the test duration with the help of a counter clicker. All dropped blocks were noted on the CRF.

Participants

In total, 31 patients with stroke were consecutively recruited in this study. The occupational therapy (OT) outpatient practices that are registered as specialized in neurorehabilitation in the canton of Bern, Switzerland, were contacted to recruit patients with chronic stroke. The inclusion criteria were (1) a stroke diagnosis at least 6 months before study inclusion confirmed by a physician, (2) ≥ 18 years of age, (3) ability to communicate in German language, (4) capable of sitting in a (wheel-)chair with a backrest for up to 90 min, (5) able to lift and hold the arm in 90° of elbow flexion and 45° of shoulder abduction and (6) able to grasp a wooden block of 2.54 cm on each side as used in the BBT [25]. Exclusion criteria were (1) a diagnosis of a brain injury other than stroke, (2) a diagnosed neglect, aphasia or hemianopsia and, (3) non-controlled medical conditions (chronic pain, drug abuse). All included patients were screened for dementia and stereopsis and their handedness was assessed. A Mini Mental State Examination (MMSE) of at least 20 points (light dementia or better) was required to participate in the study [26]. The stereopsis of each patient was evaluated with the Lang Stereotest 1 (LST), which shows three objects differing in disparity and perceived distance: a cat, a star and a car [27, 28]. It has a high predictive value for stereo-positivity in adults [28], which we assumed would be of importance in conducting the VPIT, which is represented in a 3D virtual environment on a computer screen. The study was approved by the Ethics Committee (KEK-Nr. 119/13) of the canton of Bern (Switzerland). All subjects gave their informed consent prior to study entry.

Procedures

The measurements for the patients with stroke took place at the patients’ outpatient OT practice or in their home environment. The setting and test instructions for the BBT and NHPT corresponded to the standards set by Mathiowetz et al. (1985) [5, 6], translated into German by Schädler et al. (2011) [29]. The procedures followed for the VPIT were as described by Fluet et al. (2011) [17], with the following adaptations: (1) only 3 repetitions of the test were carried out as opposed to the 5 suggested by Fluet et al. (2011); (2) the force threshold to grasp and release pegs was set to 2 Newtons. This force threshold was empirically tested in our previous work with neurological patients, where it was shown to be adequate for most participants with mild to moderate hand impairment to perform the task [18, 19]; and (3) participants who needed assistance for the affected arm to perform the test were allowed to use it. The third adaptation was not initially planned, but proved necessary after we observed major difficulties in performing the VPIT during the test trial in some participants (e.g., cursor alignment or regulation of the grasping force while holding the handle was not precise enough). The participants were requested to first perform the BBT and the NHPT as per protocol. In some cases, we noticed that participants needed support for the VPIT only after the 2 clinical tests had already been completed, and repeating those tests would have been too demanding because of fatigue. The validity of this adaptation was addressed by a later subgroup analysis (see Results section).

To minimize the effect of the required adaptation to a novel tool (VPIT), we gave patients a test trial prior to the measurements (on both days), during which they were given all the time they needed to explore the virtual environment and become familiar with the functioning of the test. According to our previous work, this proved to be sufficient for patients to understand the task and the use of the VPIT.

All participants were tested twice with a 3–7 day interval between assessments. This time interval (mean 6.4 ± 0.5 days) was defined to minimize any learning effect that may occur from repeating the tests within a short time frame. During the first assessment session, demographic data were collected and the MMSE, FLANDERS and LST were carried out. Then, the motor tests were performed in the following order: (1) BBT, (2) NHPT and (3) VPIT. For each test, one test trial (not timed) was allowed, followed by three repetitions of the test. If needed, participants could rest between the motor tests. All tests were done with the affected arm only where possible, with participants being allowed to use both hands for the VPIT as necessary. Therefore, depending on the result of the VPIT test trial (support of the affected arm needed/not needed), the following three repetitions of the VPIT were all performed accordingly. The second assessment session was composed of the test trial followed by three repetitions of the VPIT.

Data analysis

SPSS version 22.0 (SPSS Inc, Chicago, Illinois) was used for data analysis. The study population and clinical characteristics were described using descriptive statistics. The average of the 3 trials of each test was calculated and used for data analysis, as test-retest reliability is highest in all tests when the mean of three trials is used; lower correlations are known to occur when one trial or the highest score of three trials is used [30]. Normality of data was evaluated using the Shapiro-Wilk test [31]. The level of statistical significance was set to p ≤ 0.05.

Validity

Concurrent validity was assessed by determining Spearman’s rank correlation coefficient (ρ) for the relationship between the VPIT/BBT and the VPIT/NHPT, respectively [31], namely for the parameters measuring a similar construct in both tests: (1) mean time in seconds (Tex[s]VPIT / Tex[s]NHPT), (2) mean number of dropped pegs (NdpVPIT / NdpNHPT), (3) mean number of dropped pegs/cubes (NdpVPIT / NdcBBT) and (4) mean time versus mean number of transported cubes (Tex[s]VPIT / NtcBBT). The following correlation classification was used: no or very low: ρ = 0–0.25; low: ρ = 0.26–0.40; moderate: ρ = 0.41–0.69; high: ρ = 0.70–0.89; very high: ρ = 0.90–1.0 [32]. To measure the agreement between the mean number of dropped pegs/cubes during the VPIT/NHPT and VPIT/BBT, Cohen’s kappa was computed using GraphPad software (www.graphpad.com/quickcalcs) [33]. We hypothesized that (1) the correlations between Tex[s]VPIT / Tex[s]NHPT and Tex[s]VPIT / NtcBBT would be high for patients with chronic stroke (0.70–0.89). We further hypothesized that (2) the correlations between the number of pegs dropped during the VPIT and the number of pegs/cubes dropped during the conventional NHPT and BBT for patients with chronic stroke would be moderate (0.41–0.69).
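The correlation analysis and the classification scheme described above can be sketched in a few lines of standard-library Python. This is only an illustration, not the SPSS procedure used in the study: the function names are hypothetical, ties are handled with average ranks, and the classification is applied to the absolute value of ρ rounded to two decimals (an assumption, since the published ranges are stated for positive values only).

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        rank = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho computed as the Pearson correlation of the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


def classify_correlation(rho):
    """Map |rho| to the categories listed in the text [32]."""
    r = round(abs(rho), 2)
    if r <= 0.25:
        return "no or very low"
    if r <= 0.40:
        return "low"
    if r <= 0.69:
        return "moderate"
    if r <= 0.89:
        return "high"
    return "very high"


# Hypothetical data: longer execution times paired with fewer transported cubes.
times = [35.0, 48.0, 60.0, 92.0]
cubes = [40, 33, 30, 21]
rho = spearman_rho(times, cubes)
print(rho, classify_correlation(rho))
```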

Reliability

Relative reliability was determined by calculating intraclass correlation coefficients (ICCs) separately for average measures. In particular, we used the ICC 2 (A,k) formula (two-way mixed effects model where people effects are random and measures effects are fixed; k because the average of the three tests was used) [34, 35]. We selected the option “absolute agreement” in order to take into account the systematic error between raters, as there were two raters (BCT and JH) involved in data collection [36]. In this study, the same rater performed the test and the re-test procedure with a single participant. Furthermore, intensive pilot-testing was performed prior to data collection by both raters. The following classification was used: 0.90–0.99, high reliability; 0.80–0.89, good reliability; 0.70–0.79, fair reliability; 0.69 or below, poor reliability [37, 38]. We anticipated that the relative reliability of the 9 parameters measured with the VPIT would be good (ICC ≥ 0.80) [37].
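For readers who want to see how an absolute-agreement, average-measures ICC can be obtained from a participants-by-measurements table, the sketch below uses the two-way ANOVA mean squares and the form ICC(A,k) = (MSR − MSE) / (MSR + (MSC − MSE)/n). It is an illustration rather than the SPSS computation used in the study; the function names and the example scores are hypothetical, and the layout (rows are participants, columns are repeated measurements) is an assumption.

```python
def icc_a_k(scores):
    """Absolute-agreement, average-measures ICC for an n x k table of scores.

    Assumption: rows are participants, columns are repeated measurements.
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(scores[i][j] for i in range(n)) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    msr = ss_rows / (n - 1)               # between-participants mean square
    msc = ss_cols / (k - 1)               # between-measurements mean square
    mse = ss_error / ((n - 1) * (k - 1))  # residual mean square
    return (msr - mse) / (msr + (msc - mse) / n)


def classify_icc(icc):
    """Map an ICC to the reliability categories listed in the text [37, 38]."""
    r = round(icc, 2)
    if r >= 0.90:
        return "high"
    if r >= 0.80:
        return "good"
    if r >= 0.70:
        return "fair"
    return "poor"


# Hypothetical test/retest scores with a constant shift of +1 at retest.
scores = [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0]]
value = icc_a_k(scores)
print(value, classify_icc(value))
```

Because the absolute-agreement form includes the between-measurements variance in the denominator, a systematic shift between test and retest lowers the coefficient even when participants keep exactly the same rank order.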

To calculate absolute reliability, the ICCs were complemented by the Bland-Altman analysis, which can be used to show variation (or the magnitude of difference) of repeated measurements [39, 40]. The plots show the difference between test sessions 2 and 1 against the mean of the two test sessions for each subject [41, 42]. A free sample of the MedCalc statistical software version 14.8.1 (www.medcalc.org) was used to draw the Bland-Altman plots. The degree of heteroscedasticity was measured by calculating Kendall’s tau correlation (τ) between the absolute differences and the corresponding means of each VPIT parameter. When a positive τ > 0.1 was found, the data were considered heteroscedastic. When τ < 0.1 or negative, the data were considered homoscedastic [43]. The data were logarithmically or square root transformed when heteroscedasticity was found [44, 45]. Thereafter, we calculated Kendall’s tau again; if τtrans decreased, indicating a more homoscedastic distribution of the data, reliability was analyzed using the transformed parameters [43].
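The Bland-Altman quantities and the heteroscedasticity check described above can be sketched as follows. This is an illustrative reimplementation, not the MedCalc procedure used in the study: the function names and the example data are hypothetical, Kendall's tau is computed in its tau-b form (an assumption, since the variant is not stated), and the limits of agreement use the conventional mean difference ± 1.96 SD.

```python
import math
import statistics


def kendall_tau_b(x, y):
    """Kendall's tau-b by direct pair counting (fine for small samples)."""
    concordant = discordant = ties_x = ties_y = 0
    for i in range(len(x)):
        for j in range(i + 1, len(x)):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # tied in both variables: not counted
            if dx == 0:
                ties_x += 1
            elif dy == 0:
                ties_y += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    pairs = concordant + discordant
    denom = math.sqrt((pairs + ties_x) * (pairs + ties_y))
    return (concordant - discordant) / denom if denom else 0.0


def bland_altman(session1, session2, tau_threshold=0.1):
    """Bias, 95 % limits of agreement and heteroscedasticity flag."""
    diffs = [b - a for a, b in zip(session1, session2)]  # session 2 minus 1
    means = [(a + b) / 2 for a, b in zip(session1, session2)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    tau = kendall_tau_b([abs(d) for d in diffs], means)
    return {
        "bias": bias,
        "lower_loa": bias - 1.96 * sd,
        "upper_loa": bias + 1.96 * sd,
        "tau": tau,
        "heteroscedastic": tau > tau_threshold,
    }


# Hypothetical data in which the retest difference grows with the score.
result = bland_altman([10, 20, 30, 40], [11, 22, 33, 44])
print(result)
```

In this hypothetical example the absolute differences increase with the mean, so τ exceeds 0.1 and the data would be flagged for a logarithmic or square root transformation before the reliability analysis.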

To quantify the precision of individual scores on a test, we calculated the Standard Error of Measurement (SEM), using the formula \( \mathrm{SEM} = \sigma \sqrt{1 - \mathrm{ICC}} \), with σ being the standard deviation of the scores from all subjects (i.e., the square root of the total variance) [34, 36]. We then calculated the Smallest Detectable Difference (SDD) based on the SEM, as follows: \( \mathrm{SDD} = \mathrm{SEM} \times 1.96 \times \sqrt{2} \). As a last step we calculated: \( \mathrm{SDD}\% = \frac{\mathrm{SDD}}{\mathrm{grand\ mean}} \times 100 \). The grand mean is the mean of the means of each VPIT parameter. As agreement parameters (SDDs) are expressed on the actual scale of the assessments, they allow clinical interpretation of the results [34, 36]. Furthermore, the SDD% can be used to compare test-retest reliability among tests [25]. We hypothesized that the SDD% would be ≤ 54 % of the mean average values of the VPIT, as Chen et al. (2009) found an SDD% of 54 % for the affected hand using the NHPT in patients with stroke [25].
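As a worked illustration of these three formulas, the short Python sketch below chains SEM, SDD and SDD%. The function names are hypothetical. The two SEM inputs are the whole-sample values reported in the Results for Tex[s] (25.34) and Ndp (0.14), and they reproduce the SDDs of 70.2 s and 0.4 pegs given in the Abstract; the standard deviation, ICC and grand mean used in the other calls are made-up numbers chosen only to show the arithmetic.

```python
import math


def sem_from_icc(sd, icc):
    """Standard Error of Measurement: SD of the scores times sqrt(1 - ICC)."""
    return sd * math.sqrt(1 - icc)


def sdd_from_sem(sem):
    """Smallest Detectable Difference at the 95 % level: SEM * 1.96 * sqrt(2)."""
    return sem * 1.96 * math.sqrt(2)


def sdd_percent(sdd, grand_mean):
    """SDD expressed as a percentage of the grand mean."""
    return sdd / grand_mean * 100


# SEM values reported for the whole sample in the Results section.
print(round(sdd_from_sem(25.34), 1))  # Tex[s]
print(round(sdd_from_sem(0.14), 1))   # Ndp

# Made-up inputs, only to show how the remaining two formulas are applied.
print(sem_from_icc(sd=10.0, icc=0.75))
print(sdd_percent(sdd=50.0, grand_mean=200.0))
```

An individual change between two sessions would be read as exceeding measurement error only if it is larger than the SDD of the corresponding parameter.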

Results

The demographic and clinical characteristics of the participants are summarized in Table 1 and the achieved scores (BBT, NHPT, VPIT) in Table 2. Of the 33 patients with chronic stroke initially recruited, two dropped out: one completed only 2 of the 3 required VPIT test trials due to poor physical health, whereas the second could not perform the VPIT task with the affected hand. In 26 participants (83.9 %), stroke had occurred for the first time, while 5 (16.1 %, 4 men and 1 woman) had suffered at least two stroke events. Although all participants fulfilled the inclusion criteria, 11 patients were able to perform all motor tests solely with the affected arm, whereas 20 patients also required support from the non-affected arm to perform the VPIT, possibly because they were fatigued by the duration of the tests. This support was needed to increase stability of the affected arm and therefore motor control of the affected hand. To account for these differences in test performance (with and without support), we decided to additionally conduct a subgroup analysis, with subgroup 1 (n = 11) comprising those without support of the non-affected arm and subgroup 2 (n = 20) those who required support. Shapiro-Wilk testing indicated that the validity and test-retest reliability data were not normally distributed.

Concurrent validity

The results of the concurrent validity calculations (ranges) are presented in Table 3. The correlations between the VPIT and BBT/NHPT were low (ρ = −0.23–0.31) and non-significant (p = 0.09–0.51) in all parameters. The correlations between the VPIT and BBT/NHPT for subgroup 1 were moderate (ρ = −0.41–0.61) and non-significant (p = 0.07–0.60). The correlations between the VPIT and BBT/NHPT for subgroup 2 were low (ρ = −0.21–0.35) and non-significant (p = 0.13–0.51). The strength of agreement for NdpVPIT / NdpNHPT and no (zero) dropped pegs in the VPIT and NHPT was considered to be poor (Kappa = 0.041; SE of kappa = 0.176; 95 % CI = −0.30–0.39) with 16 (51.6 %) observed agreements. Accordingly, the kappa agreement for the NdpVPIT / NdcBBT and no (zero) dropped pegs/cubes during those tests was poor (Kappa = 0.189; SE of kappa = 0.157; 95 % CI = −0.12–0.50) with 18 (58.1 %) observed agreements.

Test-retest reliability

The 9 test-retest reliability parameters of the VPIT are presented in Table 4 for the whole study population and in Tables 5 (subgroup 1) and 6 (subgroup 2) for the subgroups. All VPIT parameters are illustrated in Fig. 2 by Bland-Altman plots. For the whole stroke sample, the reliability of the 5 parameters Tex[s], Fggo[N], Nzc[1/s]go/return and Fcmean[N] was good to high (ICCs = 0.83–0.94, SEMs = 0.07–0.63, except for Tex[s] with SEM = 25.34). Fair reliability was found for the parameters Fgreturn (ICC = 0.75, SEM = 0.43) and Etrajgo[cm] (ICC = 0.70, SEM = 0.19). Poor reliability was measured for Etrajreturn[cm] (ICC = 0.67, SEM = 0.29) and Ndp (ICC = 0.58, SEM = 0.14). The SDD% values were ≤ 54 % for all VPIT parameters (SDD% = 1.37–21.42) except for Tex[s] with SDD% = 434.5 %.

Bland-Altman plots of the 9 VPIT parameters. Plotted differences of (a) Tex[s]: execution time in seconds, (b) Ndp: number of dropped pegs during transport, (c) Fggo[N]: mean grasping force, (d) Fgreturn[N]: mean grasping force, (e) Nzc[1/s]go: number of zero-crossings of the acceleration, (f) Nzc[1/s]return: number of zero-crossings of the acceleration, (g) Etrajgo[cm]: trajectory error, (h) Etrajreturn[cm]: trajectory error, (i) Fcmean[N]: mean collision force. ○: subgroup 1 (n = 11); orange square: subgroup 2 (n = 20); red broken lines: 95 % CI of limits of agreement; green broken lines: 95 % CI of mean of differences

The analysis of subgroup 1 (n = 11) showed good ICCs for the 5 VPIT parameters Fggo[N], Nzc[1/s]go/return, Etrajgo and Fcmean (ICCs = 0.82–0.89, SEMs = 0.07–1.79). Fair reliability was found for the parameter Fgreturn[N] (ICC = 0.78, SEM = 0.50), while Tex[s], Ndp and Etrajreturn[cm] showed poor reliability (ICCs = 0.18–0.35 with SEMs = 0.20–37.01). All VPIT parameters showed SDD% values of ≤ 54 % (SDD% = 1.27–32.97) except for Tex[s] with SDD% = 682.87 %.

Subgroup 2 (n = 20) showed high ICCs in 5 out of 9 VPIT parameters (Tex[s], Fggo[N], Nzc[1/s]go/return and Fcmean[N] with ICCs = 0.92–0.97, SEMs = 0.02–19.25). Fair reliability was found in Fgreturn[N] (ICC = 0.73, SEM = 0.40). The remaining 3 parameters (Ndp and Etrajgo/return[cm]) showed poor reliability (ICCs = 0.49–0.69, SEMs = 0.10–0.22). Within subgroup 2, all VPIT parameters showed SDD% values of ≤ 54 % (SDD% = 0.26–15.08 %) except for Tex[s] with SDD% = 317.76 %.

Discussion

This is the first study evaluating the test-retest reliability of the novel VPIT and its concurrent validity with conventional upper limb function tests in patients with chronic stroke. In this stroke sample (n = 31), seven of the nine VPIT parameters (78 %) passed the accepted minimal standard for group comparisons (ICC ≥ 0.70), with ICCs = 0.70–0.94 [46]. The SDD% values were small in all VPIT parameters (1.37–21.42 %) except for Tex[s] (SDD% = 434.5 %). The correlations of the execution time and the number of dropped pegs/cubes of the VPIT with the NHPT and the BBT, respectively, were low (ρ = −0.23–0.31) and non-significant (p = 0.09–0.51).

Concurrent validity

For the concurrent validity part of this study, both hypotheses were rejected, as the correlations of the VPIT with the conventional tests NHPT and BBT were low. The rejection of hypothesis 1 (correlations for the Tex[s]VPIT / Tex[s]NHPT and Tex[s]VPIT / NtcBBT) might be due to the following reasons. (1) The diverse and unstable upper limb skills of the study sample led to a large inter-subject variation, which can be seen in the high standard deviation (SD) of Tex[s]VPIT (72.1 s) and of the NHPT (SD = 28.9 s) (Table 2). Moreover, support of the affected arm by the non-affected arm might have caused further variability in the data. (2) Although the test performance of the NHPT was stable within the 3 test trials (mean SD (average of each subject’s SD) = 5.2 s), there was variation within the 3 test trials of the VPIT (mean SD = 27.2 s). The higher intra-subject variation in the Tex[s]VPIT parameter could be attributed to the nature of the VPIT, which is not a real physical object manipulation test and involves tools that patients are not familiar with (e.g., the robotic handle and, for some patients, the computer). This is supported by Bowler et al. (2011), who achieved a more accurate and consistent data set for an embedded NHPT than for a purely hapto-VR-NHPT version [47]. As the VPIT was the last assessment administered in session one, patients might already have been more tired and less able to concentrate than during the NHPT. This observation is supported by the smaller SD of the VPIT during the second assessment session (see Table 4). Furthermore, in contrast to the BBT, the Tex[s]VPIT has no time limit; participants with poorer upper limb function therefore had to perform the assessment for longer than the more skilled ones, which also resulted in increased fatigue of the affected arm from test trial to test trial (although they were allowed to rest between tests).
Conversely, the correlations of the VPIT with the NHPT - the two tests with no time limit - were better than those with the BBT (time limit: 60 s). A reason for this could be the similar test procedures of the VPIT / NHPT, whereas the BBT has different test procedures [5].

The rejection of hypothesis 2 (correlations for NdpVPIT with the NdpNHPT / NdcBBT) occurred due to very low correlations between those validity parameters. The scatter plots of those parameters showed no linear correlation, as many participants did not drop any pegs or cubes during test trials (no drops: n = 17 in VPIT (55 %); n = 10 in NHPT (32 %) and n = 14 in BBT (45 %)). This ceiling effect, considered to be present if more than 15 % of all participants received the highest possible score (here: dropping no peg/cube) [48], did not allow a distinction among the participants with the highest achievable score, indicating limited validity. This finding is supported by the poor strength of agreement for the Ndp and no dropped pegs in the VPIT and NHPT (Kappa = 0.04) or BBT (Kappa = 0.19), respectively. Furthermore, the high SDs of the Ndp/c (VPIT/BBT/NHPT) parameters indicate the high variance of the study sample (Table 2). The high variance in the VPIT could be due to the difficulty of coordinating the PHANTOM Omni arm and the virtual pegboard in the virtual 3D space, especially for subgroup 2 (which had a higher Ndp than subgroup 1; see Table 2). Most participants in this group had difficulty in aligning the handle of the haptic display to the dropped peg lying on the virtual board, which resulted in several grasp attempts and the associated higher drop rate of pegs.

Furthermore, the VPIT handle remains in the patient’s hand during the entire test, but inserting a peg into a hole requires control of the grasping force, which must be decreased below the 2 N force threshold. Patients also have to be below the 2 N threshold before being able to grasp a new peg; i.e., the patient cannot just tightly grasp the handle during the whole test and “only” align the cursor to pegs/holes to achieve the task. The force traces presented by Fluet et al. (2011) [17] illustrate how force control (grasping and releasing) is required for achieving the task. This requirement may have influenced the performance time of the VPIT and also the low values for concurrent validity. The degree of stereopositivity (Table 1), which we assumed to be important for performing the VPIT [28], did not seem to be indicative of whether participants were able to perform the VPIT, as all participants could perform it equally. However, further investigations into this observation would be necessary to allow us to draw a comprehensive conclusion.

Test-retest reliability

In the hypothesis concerning the relative test-retest reliability, we expected the ICCs to be ≥ 0.80. This assumption was met by 5 out of 9 VPIT parameters in the whole stroke sample (Table 4) and in both subgroups (Tables 5 and 6). Those 5 parameters were the same for the whole sample and for subgroup 2 (Tex[s], Fggo[N], Nzc[1/s]go/return and Fcmean[N]), with slightly higher correlations in subgroup 2 than for the whole sample. This is not surprising, as subgroup 1, which had the highest mean differences between test and retest (Table 5), is not included, indicating that supporting the affected arm allowed a more stable test performance in subgroup 2. The lower ICCs in subgroup 1 might also be due to the lower number of participants (n = 11) [49], as well as to a learning effect in some parameters, e.g., the considerable decrease in Tex[s] of 29.6 s from test to retest (Table 5). This finding is illustrated by the Bland-Altman plot (Fig. 2a), where 4 subjects in subgroup 1 improved by > 50 s.

The kinetic parameters Fggo[N] and Fcmean[N] and the kinematic parameters Nzc[1/s]go/return achieved high test-retest reliability in the whole sample and in both subgroups. This means that the stroke participants were able to maintain a constant grip strength and movement coordination while transporting the peg (go). However, there were only fair correlations for the grasping force when approaching a new peg (return) (Fgreturn) in the whole sample and both subgroups. This might be because participants still had to hold an object (the handle) on the way back to a new peg without actually carrying a peg. Furthermore, the overall test-retest reliability for Ndp in the whole study sample was poor (ICC = 0.58). This might be due to the artificial force threshold for grasping and releasing the pegs, which is quite unintuitive: no feedback is provided on the force applied by the subject or on the force threshold, although the force is measured by the handle (Fggo/return[N]). This might lead many subjects to drop pegs. However, further research is needed before a conclusive statement can be made regarding the clinical use of these unique kinetic and kinematic VPIT parameters. This could be done by comparing the reliability of stroke results with that of healthy controls, or by evaluating their validity against other outcome measurements quantifying force control and movement coordination.

Of the 5 VPIT parameters meeting the hypothesis for relative test-retest reliability, all except Tex[s] also fulfilled the hypothesis for absolute test-retest reliability, with SDD% of ≤ 54 % (Tables 4, 5 and 6). This is quite surprising, as more complex and demanding upper limb function tests, such as the VPIT, generally have higher SDDs than simpler tests, such as the BBT or NHPT. Our results are therefore in contrast with those of Chen et al. (2009) [25], whose SDD% values were high for the affected hand, especially in the NHPT, with an SDD% of 52 % for the nonspastic and 88 % for the spastic group. In our sample, however, the only VPIT parameter not susceptible to change was Tex[s], as its SDD was high and varied greatly within the whole sample and both subgroups (SDDs = 53.3–102.5 s). In other words, only a change of more than 53 s in Tex[s] between two consecutive measurements can be interpreted as a true clinical improvement when patients with chronic stroke perform the test with the affected hand. In addition, the SDD can be used as a threshold to identify statistically significant individual change [50, 51]. Thus, if the change between 2 consecutive measurements for an individual patient exceeds the SDD (e.g., 53.3 s in subgroup 2), that patient may be exhibiting significant improvement. In our sample, this was the case for 4 participants for the Tex[s] parameter in subgroup 2, while one participant exceeded 102.5 s in subgroup 1. The data show that allowing support of the affected arm increased performance stability between test and retest. This can be seen in the SDD of subgroup 1 (102.5 s), which was almost twice as high as that of subgroup 2 (53.3 s).
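To make the use of the SDD as an individual-change threshold concrete, the sketch below applies commonly used definitions: SEM = SD × √(1 − ICC), SDD = 1.96 × √2 × SEM, and SDD% = SDD / mean × 100. Whether these match the exact computation used for the tables in this study is an assumption, and all function names and the example SD and ICC values are hypothetical. Only the 53.3 s threshold is taken from the text above.

```python
import math


def sem(sd, icc):
    """Standard error of measurement from a between-subject SD and an ICC."""
    if not 0.0 <= icc <= 1.0:
        raise ValueError("ICC is expected to lie in [0, 1] for this formula")
    return sd * math.sqrt(1.0 - icc)


def sdd(sd, icc):
    """Smallest detectable difference at the 95 % level (assumed formula)."""
    return 1.96 * math.sqrt(2.0) * sem(sd, icc)


def sdd_percent(sdd_value, grand_mean):
    """SDD expressed as a percentage of the mean of all measurements."""
    return 100.0 * sdd_value / grand_mean


def exceeds_sdd(score_test, score_retest, sdd_value):
    """True if the absolute change between two sessions exceeds the SDD."""
    return abs(score_test - score_retest) > sdd_value


# Hypothetical SD and ICC, chosen only to show the arithmetic.
example_sdd = sdd(sd=10.0, icc=0.75)
print(f"SEM = {sem(10.0, 0.75):.2f}, SDD = {example_sdd:.2f}")

# Using the subgroup 2 threshold for Tex[s] reported in the text (53.3 s):
print(exceeds_sdd(200.0, 140.0, 53.3))  # change of 60 s
print(exceeds_sdd(200.0, 160.0, 53.3))  # change of 40 s
```

Under these definitions, a lower ICC or a larger between-subject SD both increase the SDD, which is consistent with the larger threshold reported for subgroup 1.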

Limitations and future research

As the VPIT extracts many more parameters (i.e., kinetic and kinematic) than conventional, non-VR upper limb function assessments (i.e., time, number of dropped/transported objects), it was not possible to validate all of them. Therefore, future studies should focus on the validity of those parameters measurable by VR devices by comparing them with other VR-based upper limb function assessments. Furthermore, it would be of interest to evaluate the discriminant validity of the VPIT by comparing stroke participants’ performances with those of healthy controls.

Future versions of the VPIT should provide visual feedback on the force applied by the subject and on the force threshold. Another limitation might be the fact that two raters collected data, although the test-retest measurements of one participant were performed by the same rater. However, the major limitation of our study can be seen in the given permission to support the weight of the affected arm with the non-affected arm (and not use the other hand to steer), visually monitored by the supervising rater (occupational therapist). We are aware that this can be judged as a bias, because the test performance was not identical between the two subgroups, nor can we be sure how much the non-affected arm really supported (steered) the affected arm. Nevertheless, allowing support of the affected arm opens the use of the VPIT for a motorically weaker stroke population. If available, adjustable armrests (e.g., the Armon Elemento [52]) could be used instead of the non-affected arm, which might improve body stability and therefore the test performance by providing repeatable conditions from trial to trial and patient to patient. Randomisation of the several tests (NHPT, BBT, VPIT) may also reduce tiredness of the patients and increase validity.

Finally, the sample size was relatively small and may have affected the values of the reproducibility and measurement error. A sample size of at least 50 is generally seen as adequate for the assessment of the agreement parameter, based on a general guideline by Altman [53]. The sample size we used of 33 patients with chronic stroke is, however, a realistic group size to find first estimates for the assumed relation between stroke and the hemiparetic arm/hand for the VPIT. Future studies may therefore strive for a larger study sample and evaluate the responsiveness of the VPIT to treatment.

Implications for practice

The VPIT provides more objective and comprehensive measurements of upper limb function than conventional, non-computerized hand assessments. Receiving feedback on, for example, the patient’s ability to control force or movement coordination is essential for rehabilitation. Furthermore, information about movement smoothness, as provided by the VPIT, is clinically relevant, as smoothness has been shown to be a good indicator of upper limb coordination and stroke recovery [23]. Clinicians can therefore use this information to work on those motor skills crucial for the independent performance of daily activities [54]. Thanks to its compact and transportable shape, the VPIT is easy to administer even in the patient’s home, thus allowing broad use amongst clinicians working in different settings. As all test results are stored on the computer and can be displayed graphically immediately after completion of the test, performance can be discussed with the patient and the therapy program adjusted according to the patient’s progress. To increase the ease of use of the VPIT, time limits on the test duration for motorically weaker patients should be considered, together with the option of using adjustable armrests if needed.

An important aim of developing this new assessment is to improve the assessment of arm function in a clinical setting where the results of the assessment can be generalized to a population reflective of the “real world”. Seen from this perspective, the rather heterogeneous sample is a strength of our study, because it can be considered more realistic for clinical settings.

Conclusions

The VPIT is a promising upper limb function assessment which has proved to be feasible for use with this diverse group of chronic patients with stroke. The low concurrent validity showed that the VPIT was inherently different from the conventional tasks, indicating that performing this hapto-virtual reality assessment requires other components of upper limb motor performance than the NHPT and BBT. The high test-retest reliability in 5 and the low SDD% in 8 out of 9 VPIT parameters showed that those parameters remain consistent when performed by patients with chronic stroke and are susceptible to change, allowing diagnostic and therapeutic use in clinical practice for this patient group. The other 4 parameters (Ndp, Fgreturn[N] and Etrajgo/return[cm]) showed poor to fair ICCs when performed with the affected hand and require further research for this population. The high intra-subject variation indicated that the VPIT is a demanding test for this stroke sample, which requires a thorough introduction to this assessment. Allowing testing trials before starting with the assessment is a prerequisite for a reliable test performance. When using the VPIT as outcome measurement, clinicians may want to use the SDDs reported in this article as reference points for clinically important changes, and the SDD% results to compare test-retest reliability among other tests.

Notes

104W13400 Donges Bay Road, PO Box 1005, Germantown, Wisconsin 53022-8205

Abbreviations

- BBT: Box and Block Test
- CI: Confidence Interval
- Etraj[cm]: Trajectory error in centimeters
- ICC: Intraclass Correlation Coefficient
- Fcmean: Mean collision force
- Fg[N]: Mean grasping force in Newton
- Ndc: Number of dropped cubes
- Ndp: Number of dropped pegs
- NHPT: Nine Hole Peg Test
- Ntc: Number of transported cubes
- Nzc[1/s]: Number of zero-crossings
- SD: Standard Deviation
- SDD: Smallest Detectable Difference
- SEM: Standard Error of Measurement
- Tex[s]: Execution time in seconds
- VPIT: Virtual Peg Insertion Test

References

Lundborg G. The Hand and the Brain. From Lucy’s thumb to the thought-controlled robotic hand. London: Springer; 2014.

Johansson BB. Current trends in stroke rehabilitation. A review with focus on brain plasticity. Acta Neurol Scand. 2011;123(3):147–59.

Fluet GG, Deutsch JE. Virtual reality for sensorimotor rehabilitation post-stroke: the promise and current state of the field. Curr Phys Med Rehabil Rep. 2013;1(1):9–20.

Lin KC, Chuang LL, Wu CY, Hsieh YW, Chang WY. Responsiveness and validity of three dexterous function measures in stroke rehabilitation. J Rehabil Res Dev. 2010;47(6):563–71.

Mathiowetz V, Volland G, Kashman N, Weber K. Adult norms for the Box and Block Test of manual dexterity. Am J Occup Ther. 1985;39(6):386–91.

Mathiowetz V, Weber K, Kashman N, Volland G. Adult norms for the Nine Hole Peg Test of finger dexterity. Occup Ther J Res. 1985;5:24–38.

Oxford Grice K, Vogel KA, Le V, Mitchell A, Muniz S, Vollmer MA. Adult norms for a commercially available Nine Hole Peg Test for finger dexterity. Am J Occup Ther. 2003;57(5):570–3.

Laver KE, George S, Thomas S, Deutsch JE, Crotty M. Virtual reality for stroke rehabilitation. Cochrane Database Syst Rev. 2011;9:CD008349.

Brewer L, Horgan F, Hickey A, Williams D. Stroke rehabilitation: recent advances and future therapies. QJM. 2013;106(1):11–25.

Yeh SC, Lee SH, Chan RC, Chen S, Rizzo A. A virtual reality system integrated with robot-assisted haptics to simulate pinch-grip task: Motor ingredients for the assessment in chronic stroke. NeuroRehabilitation. 2014;35:435–49.

Fordell H, Bodin K, Bucht G, Malm J. A virtual reality test battery for assessment and screening of spatial neglect. Acta Neurol Scand. 2011;123(3):167–74.

Lee JH, Ku J, Cho W, Hahn WY, Kim IY, Lee SM, et al. A virtual reality system for the assessment and rehabilitation of the activities of daily living. Cyberpsychol Behav. 2003;6(4):383–8.

Amirabdollahian F, Johnson G. Analysis of the results from use of haptic peg-in-hole task for assessment in neurorehabilitation. Appl Bionics Biomechanics. 2011;8(1):1–11.

Bardorfer A, Munih M, Zupan A, Primozic A. Upper limb motion analysis using haptic interface. IEEE ASME Trans Mechatron. 2001;6(3):253–60.

Feys P, Alders G, Gijbels D, De Boeck J, De Weyer T, Coninx K, et al. Arm training in multiple sclerosis using phantom: Clinical relevance of robotic outcome measures. In: IEEE International Conference on Rehabilitation Robotics. 2009. p. 576–81.

Xydas E, Louca L. Upper limb assessment of people with multiple sclerosis with the use of a haptic nine hole peg-board test. In: Proceedings of the 9th Biennial Conference on Engineering Systems Design and Analysis. 2009. p. 159–66.

Fluet M-C, Lambercy O, Gassert R. Upper limb assessment using a virtual peg insertion test. In: Proc IEEE International Conference on Rehabilitation Robotics (ICORR); Switzerland, Zurich. 2011. p. 1–6.

Lambercy O, Fluet MC, Lamers I, Kerkhofs L, Feys P, Gassert R. Assessment of upper limb motor function in patients with multiple sclerosis using the Virtual Peg Insertion Test: a pilot study. IEEE Int Conf Rehabil Robot. 2013;2013:6650494.

Gagnon C, Lavoie C, Lessard I, Mathieu J, Brais B, Bouchard JP, et al. The Virtual Peg Insertion Test as an assessment of upper limb coordination in ARSACS patients: a pilot study. J Neurol Sci. 2014;347(1–2):341–4.

Nordin N, Xie SQ, Wunsche B. Assessment of movement quality in robot-assisted upper limb rehabilitation after stroke: a review. J Neuroeng Rehabil. 2014;11:137.

Kim H, Miller LM, Fedulow I, Simkins M, Abrams GM, Byl N, et al. Kinematic data analysis for post-stroke patients following bilateral versus unilateral rehabilitation with an upper limb wearable robotic system. IEEE Trans Neural Syst Rehabil Eng. 2013;21(2):153–64.

Panarese A, Colombo R, Sterpi I, Pisano F, Micera S. Tracking motor improvement at the subtask level during robot-aided neurorehabilitation of stroke patients. Neurorehabil Neural Repair. 2012;26(7):822–33.

Rohrer B, Fasoli S, Krebs HI, Hughes R, Volpe B, Frontera WR, et al. Movement smoothness changes during stroke recovery. J Neurosci. 2002;22(18):8297–304.

Milner TE. A model for the generation of movements requiring endpoint precision. Neuroscience. 1992;49(2):487–96.

Chen HM, Chen CC, Hsueh IP, Huang SL, Hsieh CL. Test-retest reproducibility and smallest real difference of 5 hand function tests in patients with stroke. Neurorehabil Neural Repair. 2009;23(5):435–40.

Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12(3):189–98.

Lang JI. Ein neuer Stereotest. Klin Mbl Augenheilk. 1983;182:373–5.

Brown S, Weih L, Mukesh N, McCarty C, Taylor H. Assessment of adult stereopsis using the Lang 1 Stereotest: a pilot study. Binocul Vis Strabismus Q. 2001;16(2):91–8.

Schädler S, Kool J, Lüthi H, Marks D, Oesch P, Pfeffer A, et al., editors. Assessments in der Rehabilitation. 3rd ed. Bern: Verlag Hans Huber; 2011.

Mathiowetz V, Weber K, Volland G, Kashman N. Reliability and validity of grip and pinch strength evaluations. J Hand Surg Am. 1984;9(2):222–6.

Norman GR, Streiner DL, editors. Biostatistics: The bare essentials. 3rd ed. USA: People’s Medical Publishing House; 2008.

Munro BH. Statistical methods for health care research. 5th ed. Philadelphia: Lippincott Williams & Wilkins; 2005.

Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33(1):159–74.

Weir JP. Quantifying test-retest reliability using the intraclass correlation coefficient and the SEM. J Strength Cond Res. 2005;19(1):231–40.

Shrout PE, Fleiss JL. Intraclass correlations: Uses in assessing rater reliability. Psychological Bulletin. 1979;86:420–8.

de Vet HC, Terwee CB, Knol DL, Bouter LM. When to use agreement versus reliability measures. J Clin Epidemiol. 2006;59(10):1033–9.

Arnall FA, Koumantakis GA, Oldham JA, Cooper RG. Between-days reliability of electromyographic measures of paraspinal muscle fatigue at 40, 50 and 60 % levels of maximal voluntary contractile force. Clin Rehabil. 2002;16(7):761–71.

Denegar CR, Ball DW. Assessing reliability and precision of measurement: an introduction to intraclass correlation and standard error of measurement. J Sport Rehabil. 1993;2(1):35–42.

Rankin G, Stokes M. Reliability of assessment tools in rehabilitation: an illustration of appropriate statistical analyses. Clin Rehabil. 1998;12(3):187–99.

Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet. 1986;1(8476):307–10.

Bland JM, Altman DG. Applying the right statistics: analyses of measurement studies. Ultrasound Obstet Gynecol. 2003;22(1):85–93.

Liaw LJ, Hsieh CL, Lo SK, Chen HM, Lee S, Lin JH. The relative and absolute reliability of two balance performance measures in chronic stroke patients. Disabil Rehabil. 2008;30(9):656–61.

Brehm MA, Scholtes VA, Dallmeijer AJ, Twisk JW, Harlaar J. The importance of addressing heteroscedasticity in the reliability analysis of ratio-scaled variables: an example based on walking energy-cost measurements. Dev Med Child Neurol. 2012;54(3):267–73.

Bland JM, Altman DG. Transforming data. BMJ. 1996;312(7033):770.

Euser AM, Dekker FW, le Cessie S. A practical approach to Bland-Altman plots and variation coefficients for log transformed variables. J Clin Epidemiol. 2008;61(10):978–82.

Aaronson N, Alonso J, Burnam A, Lohr KN, Patrick DL, Perrin E, et al. Assessing health status and quality-of-life instruments: attributes and review criteria. Qual Life Res. 2002;11(3):193–205.

Bowler M, Amirabdollahian F, Dautenhahn K. Using an embedded reality approach to improve test reliability for NHPT tasks. IEEE Int Conf Rehabil Robot. 2011;2011:5975343.

Terwee CB, Bot SD, de Boer MR, van der Windt DA, Knol DL, Dekker J, et al. Quality criteria were proposed for measurement properties of health status questionnaires. J Clin Epidemiol. 2007;60(1):34–42.

Zou GY. Sample size formulas for estimating intraclass correlation coefficients with precision and assurance. Stat Med. 2012;31(29):3972–81.

Jette AM, Tao W, Norweg A, Haley S. Interpreting rehabilitation outcome measurements. J Rehabil Med. 2007;39:585–90.

Schmidheiny A, Swanenburg J, Straumann D, de Bruin ED, Knols RH. Discriminant validity and test re-test reproducibility of a gait assessment in patients with vestibular dysfunction. BMC Ear Nose Throat Disord. 2015;15:6.

Information Brochure Armon Elemento. [http://www.armonproducts.com/images/Information_Brochure_Armon_Elemento.pdf].

Altman DG. Practical statistics for medical research. First CRC Press reprint. Boca Raton: Chapman & Hall/CRC; 1999.

Pollock A, Farmer SE, Brady MC, Langhorne P, Mead GE, Mehrholz J, et al. Interventions for improving upper limb function after stroke. Cochrane Database Syst Rev. 2014;11:CD010820.

Acknowledgements

The authors warmly thank all OT outpatient clinics who were willing to help recruiting the participants for this study. Many thanks go to all participants who were willing to share their time for this project. Further thanks go to Judith Häberli (JH) who helped with data collection and to Dr Martin J Watson for proof reading the manuscript on English and structure. Final thanks go to the Rehabilitation Engineering Lab, Department of Health Sciences and Technology, ETH Zurich, Switzerland, who provided the VPIT for this research project.

Additional information

Competing interests

MCF is shareholder of ReHaptix GmbH, Zurich, Switzerland, a startup company commercializing assessment technologies. All other authors declare that they have no competing interests.

Authors’ contributions

BCT conceived the methodology and carried out data collection, quality assessment, data analysis and manuscript writing. RK supervised progress, participating in methodology conception and manuscript writing & revision. MCF & OL had developed the VPIT and maintained technical support during data collection. MCF, OL & RDB contributed in manuscript revision. EDB supervised progress, helped with methodology conception, manuscript writing & revision. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Tobler-Ammann, B.C., de Bruin, E.D., Fluet, MC. et al. Concurrent validity and test-retest reliability of the Virtual Peg Insertion Test to quantify upper limb function in patients with chronic stroke. J NeuroEngineering Rehabil 13, 8 (2016). https://doi.org/10.1186/s12984-016-0116-y
