Abstract

Background

Cardiovascular magnetic resonance (CMR) imaging is a standard imaging modality for assessing cardiovascular diseases (CVDs), the leading cause of death globally. CMR enables accurate quantification of the cardiac chamber volume, ejection fraction and myocardial mass, providing information for diagnosis and monitoring of CVDs. However, for years, clinicians have been relying on manual approaches for CMR image analysis, which are time-consuming and prone to subjective errors. It is a major clinical challenge to automatically derive quantitative and clinically relevant information from CMR images.

Methods

Deep neural networks have shown a great potential in image pattern recognition and segmentation for a variety of tasks. Here we demonstrate an automated analysis method for CMR images, which is based on a fully convolutional network (FCN). The network is trained and evaluated on a large-scale dataset from the UK Biobank, consisting of 4,875 subjects with 93,500 pixelwise annotated images. The performance of the method has been evaluated using a number of technical metrics, including the Dice metric, mean contour distance and Hausdorff distance, as well as clinically relevant measures, including left ventricle (LV) end-diastolic volume (LVEDV) and end-systolic volume (LVESV), LV mass (LVM); right ventricle (RV) end-diastolic volume (RVEDV) and end-systolic volume (RVESV).

Results

By combining FCN with a large-scale annotated dataset, the proposed automated method achieves a high performance in segmenting the LV and RV on short-axis CMR images and the left atrium (LA) and right atrium (RA) on long-axis CMR images. On a short-axis image test set of 600 subjects, it achieves an average Dice metric of 0.94 for the LV cavity, 0.88 for the LV myocardium and 0.90 for the RV cavity. The mean absolute difference between automated measurement and manual measurement is 6.1 mL for LVEDV, 5.3 mL for LVESV, 6.9 g for LVM, 8.5 mL for RVEDV and 7.2 mL for RVESV. On long-axis image test sets, the average Dice metric is 0.93 for the LA cavity (2-chamber view), 0.95 for the LA cavity (4-chamber view) and 0.96 for the RA cavity (4-chamber view). The performance is comparable to human inter-observer variability.

Conclusions

We show that an automated method achieves a performance on par with human experts in analysing CMR images and deriving clinically relevant measures.

Background

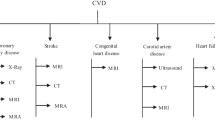

An estimated 17.7 million people died from cardiovascular disease (CVD) in 2015, representing 31% of all global deaths [1]. More people die annually from CVD than any other cause. Technological advances in medical imaging have led to a number of options for non-invasive investigation of CVD, including echocardiography, computed tomography (CT), cardiovascular magnetic resonance (CMR) etc., each having its own advantages and disadvantages. Due to its good image quality, excellent soft tissue contrast and absence of ionising radiation, CMR has established itself as the non-invasive gold standard for assessing cardiac chamber volume and mass for a wide range of CVD [2–4]. To derive quantitative measures such as volume and mass, clinicians have been relying on manual approaches to trace the cardiac chamber contours. It typically takes a trained expert 20 minutes to analyse images of a single subject at two time points of the cardiac cycle, end-diastole (ED) and end-systole (ES). This is time consuming, tedious and prone to subjective errors.

Here we propose a computational method which can automatically analyse images at all time points across the cardiac cycle and derive clinical measures within seconds. The accuracy for clinical measures is comparable to human expert performance. The method would assist clinicians in CMR image analysis and diagnosis by providing an automated and objective way of deriving clinical measures, thereby reducing cost and improving work efficiency. It would also facilitate large-population imaging studies, such as the UK Biobank study, which aims to conduct imaging scans of vital organs for 100,000 subjects [5]. An automated method is crucial for analysing such a large number of images and extracting clinically relevant information for subsequent clinical studies.

Machine learning algorithms, especially deep neural networks, have demonstrated great potential, achieving or surpassing human performance in a number of visual tasks including object recognition in natural images [6], Go game playing [7], skin cancer classification [8] and ocular image analysis [9]. Previously, neural networks have been explored for CMR image analysis [10–13]. Most of these studies either use relatively shallow network architectures or are limited by the size of the dataset. None of them has compared neural networks with human performance on this task. In 2016, Kaggle organised the second Data Science Bowl for left ventricular (LV) volume assessment [14]. Images from 700 subjects were provided with the LV volumes; however, none of the images were annotated. In 2017, MICCAI organised the ACDC challenge [15], where a training set of 100 subjects was provided with manual annotation. Lieman-Sifry et al. curated a data set of 1,143 short-axis image scans [13], where most of the images had LV endocardial and right ventricle (RV) endocardial contours annotated but only 22% had LV epicardial contours annotated.

In this paper, we utilise a large dataset of 4,875 subjects with 93,500 images, one or two orders of magnitude larger than previous datasets, and for which all the images have been pixelwise annotated by clinical experts. We trained fully convolutional networks for both short-axis and long-axis CMR image analysis. By combining the power of deep learning and a large annotated dataset for training and evaluation, this paper demonstrated that the proposed automated method can match human-level performance.

Methods

Dataset

The dataset consists of short-axis and long-axis cine CMR images of 5,008 subjects (61.2 ± 7.2 years, 52.5% female), acquired from the UK Biobank. The baseline characteristics of the UK Biobank cohort can be viewed in the data showcase at [16]. For short-axis images, the in-plane image resolution is 1.8 × 1.8 mm² with slice thickness of 8.0 mm and slice gap of 2 mm. A short-axis image stack typically consists of 10 image slices. For long-axis images, the in-plane image resolution is 1.8 × 1.8 mm² and only 1 image slice is acquired. Each cardiac cycle consists of 50 time frames. For both short-axis and long-axis views, the balanced steady-state free precession (bSSFP) magnitude images were used for analysis. Details of the image acquisition protocol can be found in [17].

Manual image annotation was undertaken by a team of eight observers under the guidance of three principal investigators and following a standard operating procedure [18]. For short-axis images, the LV endocardial and epicardial borders and the RV endocardial borders were manually traced at ED and ES time frames using the cvi42 software (version 5.1.1, Circle Cardiovascular Imaging Inc., Calgary, Alberta, Canada). For long-axis 2-chamber view (2Ch) images, the left atrium (LA) endocardial border was traced. For long-axis 4-chamber view (4Ch) images, the LA and the right atrium (RA) endocardial borders were traced.

In pre-processing, the CMR DICOM images were converted into NIfTI format. The manual annotations from the cvi42 software were exported as XML files and also converted into NIfTI format. The images and annotations were quality controlled to ensure that the annotations covered both ED and ES frames, with no missing slices or missing anatomical structures. For short-axis images, 4,875 subjects (with 93,500 annotated image slices) were available after quality control, which were randomly split into three sets of 3,975/300/600 for training/validation/test, i.e. 3,975 subjects for training the neural network, 300 validation subjects for tuning model parameters, and finally 600 test subjects for evaluating performance. For long-axis 2Ch images, 4,723 subjects were available after quality control, which were split into 3,823/300/600. For long-axis 4Ch images, 4,682 subjects were available, which were split into 3,782/300/600.

Automated image analysis

For automated CMR image analysis, we utilise a fully convolutional network (FCN) architecture, which is a type of neural network that can predict a pixelwise image segmentation by applying a number of convolutional filters onto an input image [19]. The network architecture is illustrated in Fig. 1. The FCN learns image features from fine to coarse scales using convolutions and combines multi-scale features for predicting the label class at each pixel.

Fig. 1 The network architecture. A fully convolutional network (FCN) is used, which takes the cardiovascular magnetic resonance (CMR) image as input, learns image features from fine to coarse scales through a series of convolutions, concatenates multi-scale features and finally predicts a pixelwise image segmentation.

The network is adapted from the VGG-16 network [20] and consists of a number of convolutional layers for extracting image features. Each convolution uses a 3 × 3 kernel and is followed by batch normalisation (Note 1) and ReLU (Note 2). After every two or three convolutions, the feature map is downsampled by a factor of 2 so as to learn features at a more global scale. Feature maps learnt at different scales are upsampled to the original resolution using transposed convolutions (Note 3) and the multi-scale feature maps are then concatenated. Finally, three convolutional layers of kernel size 1 × 1, followed by a softmax function (Note 4), are used to predict a probabilistic label map. The segmentation is determined at each pixel by the label class with the highest softmax probability. The mean cross entropy between the probabilistic label map and the manually annotated label map is used as the loss function. Excluding the transposed convolutional layers, this network has in total 16 convolutional layers. Details of the network architecture can be found in Table 1. This architecture is similar to the U-Net [21]. The main difference is that U-Net performs upsampling step by step: it iteratively upsamples the feature map at each scale by a factor of 2 and concatenates it with the feature map at the next scale. In contrast, the proposed network may be simpler on the upsampling path: it upsamples the feature map from each scale to the finest resolution in one go and then concatenates all of them.
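The final prediction step and the loss described above can be illustrated with a minimal NumPy sketch. It is not the authors' TensorFlow implementation: the function names, the toy 2 × 2 input and the choice of four classes are assumptions made only for illustration, and the convolutional layers that would produce the logits are omitted.

```python
import numpy as np

def softmax(logits):
    """Softmax over the last (class) axis, shifted for numerical stability."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def predict_labels(logits):
    """Assign each pixel the class with the highest softmax probability."""
    return softmax(logits).argmax(axis=-1)

def mean_cross_entropy(logits, labels):
    """Mean over all pixels of -log(probability of the annotated class)."""
    probs = softmax(logits)
    rows, cols = np.indices(labels.shape)
    true_class_prob = probs[rows, cols, labels]
    # Small constant guards against log(0); an illustrative choice.
    return float(-np.log(true_class_prob + 1e-12).mean())

# Toy 2 x 2 "image" with 4 classes (hypothetical: background plus three structures).
logits = np.zeros((2, 2, 4))
logits[0, 0, 1] = 5.0   # pixel (0, 0) strongly favours class 1
logits[1, 1, 3] = 5.0   # pixel (1, 1) strongly favours class 3
manual = np.array([[1, 0], [0, 3]])  # hypothetical manual label map

print(predict_labels(logits))
print(mean_cross_entropy(logits, manual))
```

In a real network the logits would have shape (height, width, number of classes) for each image in the mini-batch, and the loss would be averaged over the whole batch.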

Network training and testing

Three networks were trained, for segmenting short-axis images, long-axis 2Ch images and long-axis 4Ch images respectively. For training each network, all images were cropped to the same size of 192 × 192 and intensity normalised to the range of [0,1]. Data augmentation (Note 5) was performed on-the-fly, which applied random translation, rotation, scaling and intensity variation to each mini-batch of images before feeding them to the network. Each mini-batch consisted of 20 image slices. The Adam method [22] was used for optimising the loss function, with a learning rate of 0.001 and iteration number of 50,000. The method was implemented using Python and TensorFlow. It took about 10 hours to train the VGG-16 network on an Nvidia Tesla K80 GPU.
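As a rough sketch of the two pre-processing steps named above (cropping to 192 × 192 and rescaling intensities to [0, 1]), the following NumPy code shows one possible way to do it. The text does not specify how the crop is positioned or how the intensity range is chosen, so the centre crop with zero padding and the min-max rescaling used here are assumptions, as are the function names. Data augmentation is not shown.

```python
import numpy as np

def crop_or_pad(image, size=192):
    """Centre-crop or zero-pad a 2D image to size x size (assumed strategy)."""
    out = np.zeros((size, size), dtype=image.dtype)
    h, w = image.shape
    # Source offsets: non-zero only when the image is larger than the target.
    sy, sx = max((h - size) // 2, 0), max((w - size) // 2, 0)
    ch, cw = min(h, size), min(w, size)
    # Destination offsets: non-zero only when the image is smaller than the target.
    dy, dx = max((size - h) // 2, 0), max((size - w) // 2, 0)
    out[dy:dy + ch, dx:dx + cw] = image[sy:sy + ch, sx:sx + cw]
    return out

def normalise_intensity(image):
    """Min-max rescale intensities to [0, 1] (assumed normalisation)."""
    image = image.astype(np.float32)
    lo, hi = image.min(), image.max()
    if hi == lo:
        return np.zeros_like(image)
    return (image - lo) / (hi - lo)

# Synthetic slice that is taller and narrower than the target size.
rng = np.random.default_rng(0)
slice_2d = rng.integers(0, 4096, size=(208, 150))
prepared = normalise_intensity(crop_or_pad(slice_2d))
print(prepared.shape, prepared.min(), prepared.max())
```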

During the testing stage, it took ∼ 2.2 seconds to analyse the ED and ES time frames of short-axis images for one subject and 9.5 seconds to analyse a full sequence of 50 time frames. For long-axis images, it took ∼ 0.2 seconds to analyse the ED and ES time frames for one subject and 1.4 seconds to analyse a full sequence. It took longer to analyse the short-axis images, because each short-axis image stack typically has 10 slices, whereas a long-axis image stack has only 1 slice.

Evaluation of the method

For quantitative assessment, we evaluated the performance of the automated method in two ways: first, using commonly used metrics for segmentation accuracy, namely the Dice metric, mean contour distance and Hausdorff distance; and second, using clinical measures derived from the segmentations, including ventricular volume and mass.

Figure 2 illustrates the definitions of the Dice metric and contour distance metrics. The Dice metric evaluates the overlap between automated segmentation A and manual segmentation B and it is defined as,

$$\mathrm{Dice} = \frac{2\,|A \cap B|}{|A| + |B|}$$

Fig. 2 Illustration of the Dice metric and contour distance metrics. A and B are two sets representing automated segmentation and manual segmentation. The Dice metric calculates the ratio of the intersection |A∩B| over the average area of the two sets (|A|+|B|)/2. The mean contour distance first calculates, for each point p on one contour, its distance to the other contour d(p,∂), then calculates the mean across all the points p. The Hausdorff distance calculates the maximum distance between the two contours.

It is a value between 0 and 1, with 0 denoting no overlap and 1 denoting perfect agreement. The higher the Dice metric, the better the agreement.
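A minimal NumPy sketch of the Dice metric, computed as the intersection |A∩B| divided by the average size (|A|+|B|)/2 of the two binary masks. The function name and the toy masks are illustrative, and returning 1.0 when both masks are empty is a convention assumed here, not something stated in the text.

```python
import numpy as np

def dice_metric(a, b):
    """Dice overlap between two binary masks: 2|A∩B| / (|A| + |B|)."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return float(2.0 * np.logical_and(a, b).sum() / denom)

# Two 4-pixel squares that share 2 pixels.
automated = np.zeros((4, 4), dtype=int)
manual = np.zeros((4, 4), dtype=int)
automated[1:3, 1:3] = 1
manual[1:3, 2:4] = 1

print(dice_metric(automated, manual))  # 2 * 2 / (4 + 4) = 0.5
```

For a multi-class segmentation, the same function can be applied per structure by comparing `seg == label` masks.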

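The mean contour distance (MCD) and Hausdorff distance (HD) evaluate the mean and the maximum distance respectively between the segmentation contours ∂A and ∂B. They are defined as,

$$\mathrm{MCD} = \frac{1}{2|\partial A|}\sum_{p \in \partial A} d(p, \partial B) + \frac{1}{2|\partial B|}\sum_{q \in \partial B} d(q, \partial A)$$

$$\mathrm{HD} = \max\left(\max_{p \in \partial A} d(p, \partial B),\ \max_{q \in \partial B} d(q, \partial A)\right)$$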

where d(p,∂) denotes the minimal distance from point p to contour ∂. The lower the distance metric, the better the agreement.
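The two contour distance metrics can be sketched in NumPy as follows, assuming that each contour is already available as an array of point coordinates in pixel units (extracting contours from a segmentation mask is not shown) and that the two directions, ∂A to ∂B and ∂B to ∂A, are combined symmetrically. The function name, the isotropic `spacing` parameter and the toy contours are illustrative.

```python
import numpy as np

def contour_distances(contour_a, contour_b, spacing=1.0):
    """Return (mean contour distance, Hausdorff distance) for two point sets.

    contour_a, contour_b: arrays of shape (N, 2) and (M, 2) in pixel units.
    spacing: isotropic pixel spacing, e.g. 1.8 for distances in mm.
    """
    pa = np.asarray(contour_a, dtype=float) * spacing
    pb = np.asarray(contour_b, dtype=float) * spacing
    # Pairwise Euclidean distances, shape (N, M).
    d = np.linalg.norm(pa[:, None, :] - pb[None, :, :], axis=-1)
    d_a_to_b = d.min(axis=1)  # d(p, ∂B) for each point p on ∂A
    d_b_to_a = d.min(axis=0)  # d(q, ∂A) for each point q on ∂B
    mcd = 0.5 * (d_a_to_b.mean() + d_b_to_a.mean())
    hd = max(d_a_to_b.max(), d_b_to_a.max())
    return float(mcd), float(hd)

# Toy contours with two points each.
contour_a = [(0, 0), (0, 1)]
contour_b = [(0, 0), (0, 3)]
print(contour_distances(contour_a, contour_b))               # in pixels
print(contour_distances(contour_a, contour_b, spacing=1.8))  # in mm
```

The pairwise distance matrix is simple but uses memory proportional to N × M, which is acceptable for 2D contours of a few hundred points.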

We also evaluated the accuracy of clinical measures, which were derived from image segmentations. We calculated the LV end-diastolic volume (LVEDV) and end-systolic volume (LVESV), LV myocardial mass (LVM), RV end-diastolic volume (RVEDV) and end-systolic volume (RVESV) from automated segmentation and compared them to measurements from manual segmentation. The LV and RV volumes were calculated by summing up the number of voxels belonging to the corresponding label class in the segmentation, multiplied by the volume per voxel. The LV mass was calculated by multiplying the LV myocardial volume with the density of 1.05 g/mL [23].
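The volume and mass calculation can be sketched as below. The label values (1, 2, 3) and function names are hypothetical. The voxel size in the example, (10.0, 1.8, 1.8) mm, is an assumption that takes the through-plane spacing as slice thickness plus slice gap. Only the myocardial density of 1.05 g/mL comes from the text.

```python
import numpy as np

# Hypothetical label values for the three short-axis structures.
LV_CAVITY, LV_MYOCARDIUM, RV_CAVITY = 1, 2, 3
MYOCARDIAL_DENSITY_G_PER_ML = 1.05  # density stated in the text

def volume_ml(seg, label, voxel_size_mm):
    """Number of voxels with the given label times the volume per voxel (mL)."""
    voxel_ml = float(np.prod(voxel_size_mm)) / 1000.0  # mm^3 -> mL
    return float(np.sum(seg == label)) * voxel_ml

def lv_mass_g(seg, voxel_size_mm):
    """LV mass: myocardial volume multiplied by the myocardial density."""
    return volume_ml(seg, LV_MYOCARDIUM, voxel_size_mm) * MYOCARDIAL_DENSITY_G_PER_ML

# Toy segmentation with shape (slices, rows, columns).
seg = np.zeros((2, 4, 4), dtype=int)
seg[0, 1:3, 1:3] = LV_CAVITY   # 4 voxels
seg[1, 0, :] = LV_MYOCARDIUM   # 4 voxels
seg[1, 3, :2] = RV_CAVITY      # 2 voxels

voxel_size_mm = (10.0, 1.8, 1.8)  # assumed: (slice spacing, in-plane, in-plane)
print(volume_ml(seg, LV_CAVITY, voxel_size_mm))
print(lv_mass_g(seg, voxel_size_mm))
```

Applying `volume_ml` to the ED and ES frames separately would give the end-diastolic and end-systolic volumes.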

Evaluation of human performance

For quantitative evaluation of human performance, we assessed the inter-observer variability between manual segmentations by different clinical experts. A set of 50 subjects was randomly selected and each subject was analysed by three expert observers (O1, O2, O3) independently. The Dice metric, contour distance metrics and the difference of clinical measurements were evaluated between each pair of observers (O1 vs O2, O2 vs O3, O3 vs O1).

Qualitative assessment

As an additional qualitative assessment, two experienced image analysts (with over ten years' and four years' experience in cardiovascular image analysis respectively) visually assessed the segmentations for 250 test subjects [see Additional file 1]. Following an in-house standard operating procedure for image analysis and drawing on their experience, the analysts visually compared automated segmentation to manual segmentation and assessed whether the two segmentations achieved a good agreement (visually close to each other) or not. If the two segmentations disagreed, the analysts would score in three categories: automated segmentation performs better; manual segmentation performs better; not sure which one is better. The visual assessment was performed for basal, mid-ventricular and apical slices.

Exemplar clinical study

We demonstrated the application of the method on an exemplar clinical study. Using automatically derived clinical measures, we investigated the association between cardiac function and obesity, similar to a previous study [24]. We compared the ventricular volume and mass between two groups of subjects, the normal weight group (18.5 ≤ body mass index (BMI) < 25) and the obese group (BMI ≥ 30). Pathological cases with CVD were excluded. The normal weight group and the obese group were matched for sex, age, height, diastolic blood pressure and systolic blood pressure using nearest neighbour propensity score matching, implemented using the MatchIt package in R. After matching, each group consisted of 867 subjects. The clinical measures were then compared between the matched groups using two-sided t-tests.

Results

Short-axis image analysis

Figure 3a illustrates the predicted segmentation of the LV and RV on short-axis images. It shows that automated segmentation agrees well with manual segmentation by a clinical expert at both ED and ES time frames. Additional movie files demonstrate automated segmentation across a cardiac cycle [see Additional files 2, 3 and 4].

Fig. 3 Illustration of the segmentation results for short-axis and long-axis images. The top row shows the automated segmentation, whereas the bottom row shows the manual segmentation. The automated method segments all the time frames. However, only end-diastolic (ED) and end-systolic (ES) frames are shown, as manual analysis only annotates ED and ES frames. The cardiac chambers are represented by different colours. a Short-axis. b Long-axis (2-chamber view). c Long-axis (4-chamber view).

Table 2(a) reports the Dice metric, mean contour distance and Hausdorff distance between automated and manual segmentations, evaluated on a test set of 600 subjects, which the network has never seen before. The table shows a mean Dice value of 0.94 for the LV cavity, 0.88 for the LV myocardium and 0.90 for the RV cavity, demonstrating a good agreement between automated and manual segmentations. The mean contour distance is 1.04 mm for the LV cavity, 1.14 mm for the LV myocardium and 1.78 mm for the RV cavity, all of which are smaller than the in-plane pixel spacing of 1.8 mm. The Hausdorff distance ranges from 3.16 mm to 7.25 mm for each class.

Of the 600 test subjects, 39 have CVD. These pathological cases were selected using the following criteria: cases with the International Classification of Diseases code, 10th Revision (ICD-10) of I21 (acute myocardial infarction), I22 (subsequent myocardial infarction), I23 (certain current complications following acute myocardial infarction), I25 (chronic ischaemic heart disease), I42 (cardiomyopathy), I50 (heart failure); or cases where participants had self-reported heart attack. Table 2(b) reports the Dice and distance metrics on these pathological cases. It shows segmentation performance consistent with that on the full test set for the Dice metric, and only slightly larger errors for the contour distance metrics.

For evaluating human performance, Table 3 compares the Dice and distance metrics between automated segmentation and manual segmentation, as well as between segmentations by different human observers. It demonstrates that the computer-human difference is close to or even smaller than the human-human difference for all the metrics.

As an additional qualitative assessment, two image analysts visually compared automated segmentation to manual segmentation for 250 test subjects. Table 4 shows that for mid-ventricular slices, automated segmentation agrees well with manual segmentation for respectively 84.8% and 91.6% of the cases by visual inspection of the two analysts. For basal slices where the ventricular contours are more complex and thus more difficult to segment, the percentage of agreement is lower. For example, Analyst 1 scored that automated segmentation agrees well with manual segmentation for only 40.0% of the cases. When discrepancy occurs, however, automated segmentation performs similarly to manual segmentation. Analyst 1 scored that automated segmentation performs better for 26.2% of the cases, whereas manual segmentation performs better for 20.6% of the cases.

Next, we evaluate the accuracy of clinical measures for the LVEDV, LVESV, LVM, RVEDV and RVESV. Table 5 reports the mean absolute difference and relative difference between automated and manual measurements and between measurements by different expert observers. It shows that for the clinical measures, the computer-human difference is on par with the human-human difference.

Figure 4 shows the Bland-Altman plots of the clinical measures. The Bland-Altman plot is commonly used for analysing agreement and bias between two measurements. The first column of the figure compares automated measurements to manual measurements on 600 test subjects. These subjects were annotated by a group of eight observers and each subject was annotated only once by one observer. The first column shows that the mean difference is centred close to zero, which suggests that the automated measurement is almost unbiased relative to the group of observers. Also, there is no evidence of bias over hearts of different sizes or volumes. By contrast, the bias between different pairs of human observers (second to fourth columns) is often larger than that, especially for RVEDV and RVESV. This indicates that individual observers may be biased. As the automated method is trained with annotations from multiple observers, it learns a consensus estimate across the group of observers and thus it may be less susceptible to biases.

Fig. 4 Bland-Altman plots of clinical measures between automated measurement and manual measurement, as well as between measurements by different human observers. The first column shows the agreement between automated and manual measurements on a test set of 600 subjects. The second to fourth columns show the inter-observer variability evaluated on the randomly selected set of 50 subjects. In each Bland-Altman plot, the x-axis denotes the average of two measurements and the y-axis denotes the difference between them. The dark dashed line denotes the mean difference (bias) and the two light dashed lines denote ± 1.96 standard deviations from the mean.
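The quantities drawn in a Bland-Altman plot (pairwise averages, pairwise differences, the bias and the ± 1.96 standard deviation limits) can be computed with a short NumPy sketch. The function name is illustrative, the sample standard deviation (`ddof=1`) is an assumption, and the measurement values are made up for the example rather than taken from the study.

```python
import numpy as np

def bland_altman(measure_1, measure_2):
    """Return per-subject averages and differences, the bias and the limits of agreement."""
    m1 = np.asarray(measure_1, dtype=float)
    m2 = np.asarray(measure_2, dtype=float)
    average = (m1 + m2) / 2.0     # x-axis of the plot
    difference = m1 - m2          # y-axis of the plot
    bias = difference.mean()      # mean difference
    sd = difference.std(ddof=1)   # sample standard deviation (assumed)
    limits = (bias - 1.96 * sd, bias + 1.96 * sd)
    return average, difference, float(bias), limits

# Made-up volumes in mL for three subjects, for illustration only.
automated_ml = [150.0, 132.0, 171.0]
manual_ml = [149.0, 132.0, 172.0]

average, difference, bias, limits = bland_altman(automated_ml, manual_ml)
print(bias, limits)
```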

Long-axis image analysis

We further demonstrate the performance of the method on long-axis CMR images, which are commonly used for assessing the cardiac chambers from a different angle. Figure 3b and c illustrate the segmentations of the LA and RA for the long-axis 2Ch and 4Ch images respectively. Additional movie files demonstrate automated segmentation across a cardiac cycle [see Additional files 5–6].

We evaluate the Dice metric and the contour distances on a test set of 600 subjects, as reported in Table 6. The mean Dice metric is 0.93 for the LA (2Ch), 0.95 for the LA (4Ch), 0.96 for the RA (4Ch), whereas the mean contour distance is smaller than the in-plane pixel spacing of 1.8 mm, demonstrating a good segmentation accuracy on long-axis images. Table 7 demonstrates that for long-axis images, the computer-human difference is also on par with or smaller than the human-human difference.

Exemplar clinical study

The proposed automated method enables us to perform clinical studies on large-scale datasets. Table 8 compares the ventricular volume and mass, which are derived from automated segmentation, between two groups of subjects, the normal weight group and the obese group. The table shows that obesity is associated with increased ventricular volume and mass with statistical significance. This is consistent with a previous finding in [24], which was performed on a dataset of 54 subjects with manual segmentation. Now we can confirm the finding with automated analysis on a much larger dataset with 1,734 subjects.

Discussion

By training and evaluating on a large-scale annotated dataset, we demonstrate that the proposed method matches human expert performance on CMR image segmentation accuracy and clinical measurement accuracy. In terms of speed, it can analyse the short-axis and long-axis images for one subject in a few seconds. The method is fast and scalable, overcoming limitations associated with current clinical CMR image analysis routine, which is manual, time-consuming and prone to subjective errors. The method has a great potential for improving work efficiency and assisting clinicians in diagnosis and performing large-scale clinical research.

Residual networks

We also experimented with a deeper network by replacing the convolutional layers from scale 3 to 5 in Table 1 with residual blocks as described in [25], constructing a residual network with 33 convolutional layers. In experiments, we found that the residual network achieves a performance similar to that of the VGG-16 network. Thus, we only report the results from the VGG-16 network in the paper.

Other clinical measures

The LV and RV volumes are directly calculated from the image segmentations. There are also some other clinical measures for assessing cardiac function, which are derived from the LV and RV volumes, including the LV stroke volume (LVSV), LV ejection fraction (LVEF), LV cardiac output (LVCO), RV stroke volume (RVSV), RV ejection fraction (RVEF) and RV cardiac output (RVCO). Table 9 reports the difference between automated and manual measurements and between measurements by different expert observers on these measures. It shows that for these derived clinical measures, the computer-human difference is also comparable to the human-human difference.
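The derived measures follow from the volumes through the standard definitions: stroke volume is EDV minus ESV, ejection fraction is stroke volume divided by EDV, and cardiac output is stroke volume multiplied by heart rate. The sketch below applies these definitions. The function name, the example volumes and the heart rate are illustrative, and the text does not state how heart rate was obtained in the study.

```python
def derived_measures(edv_ml, esv_ml, heart_rate_bpm):
    """Stroke volume (mL), ejection fraction (%) and cardiac output (L/min)."""
    sv_ml = edv_ml - esv_ml
    ef_percent = 100.0 * sv_ml / edv_ml
    co_l_per_min = sv_ml * heart_rate_bpm / 1000.0  # mL/min -> L/min
    return sv_ml, ef_percent, co_l_per_min

# Illustrative values, not taken from the study.
sv, ef, co = derived_measures(edv_ml=150.0, esv_ml=60.0, heart_rate_bpm=60.0)
print(sv, ef, co)
```

The same function applies to either ventricle, for example LVEDV and LVESV for LVSV, LVEF and LVCO.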

Limitations

A major limitation of our work is that the neural network was trained on a single dataset, the UK Biobank dataset, which is a relatively homogeneous dataset. The majority of the data are healthy subjects in middle and later life and only a small proportion are with self-reported CVD [26]. Although we have demonstrated that the method works well on a subset of pathological cases in Table 2(b), in the clinical environment, there can be a variety of pathological patterns, which are not currently represented in the UK Biobank cohort.

In addition, the UK Biobank dataset was acquired using a standard imaging protocol and the same scanner model [17]. This guarantees that the derived image phenotypes are consistent across the UK Biobank study, without being biased by the imaging protocol or the scanner model. However, this also means that the neural network that we have learnt is adapted to the image patterns in the UK Biobank dataset and might not generalise well to other vendor or sequence datasets. We explored how the network works on two additional datasets, the MICCAI 2009 Left Ventricle Segmentation Challenge (LVSC 2009) dataset [27] and the MICCAI 2017 Automated Cardiac Diagnosis Challenge (ACDC 2017) dataset [28]. These two datasets were acquired using different scanners or different protocols [15, 29] from the UK Biobank dataset. In addition, most of the LVSC 2009 and ACDC 2017 data are pathological cases.

Figure 5 shows the segmentation results of four exemplar cases, two from the LVSC 2009 dataset and two from the ACDC 2017 dataset. The four cases are respectively of heart failure, LV hypertrophy, dilated cardiomyopathy and abnormal RV. The top row shows the segmentation results by directly applying the UK Biobank-trained network to the LVSC and ACDC data. It shows that without any tuning, the network performs well for Cases 1 and 3, but fails for Cases 2 and 4. This is probably because the image patterns or intensity distributions in Cases 2 and 4 are not covered by UK Biobank.

Fig. 5 Segmentation results on other datasets. The first two cases come from the LVSC 2009 dataset, whereas the last two cases come from the ACDC 2017 dataset. The four cases are respectively of heart failure, LV hypertrophy, dilated cardiomyopathy and abnormal right ventricle. The top row shows the segmentation results by directly applying the UK Biobank-trained network to the LVSC and ACDC data. The bottom row shows the segmentation results after fine-tuning the network to the new data.

Then, we fine-tuned the network by training it for another 10,000 iterations on the new datasets, which took about 2 hours. For LVSC 2009, we fine-tuned using the challenge training set (15 subjects) and evaluated the performance on the challenge validation set (15 subjects). The LVSC 2009 training set only annotates the LV cavity and myocardium. As a result, during fine-tuning, we only trained the network to segment the LV and ignored the RV. For ACDC 2017, we randomly split the challenge training set (100 subjects) into 80 subjects for fine-tuning and 20 subjects for evaluation. The bottom row of Fig. 5 shows the segmentation results on LVSC or ACDC data after fine-tuning. It shows that the segmentation performance is substantially improved for Cases 2 and 4 after the network has adjusted its parameters to adapt to the new data. Table 10 reports the Dice overlap metrics before and after fine-tuning. On both LVSC (Note 6) and ACDC datasets, the Dice metrics are substantially improved after fine-tuning.

Although the network works well after fine-tuning, this still means each time when we have some new data that are acquired using a different protocol or from a different scanner model, we might need to label some of the new data for fine-tuning the network parameters. It would be interesting to explore whether we could create a large-scale heterogeneous dataset for training and evaluation, which covers typical CMR imaging protocols and scanner types, or to develop novel machine learning techniques that are more generalisable, which is an important research topic on its own [30].

Future directions

Future research will explore developing more generalisable methods for analysing a wider range of CMR images, such as multi-site images acquired from different machines and using different imaging protocols, and integrating automated segmentation results into diagnostic reports. The current method trains networks for short-axis images and long-axis images separately. It would be interesting to combine the two views for image analysis, which can provide complementary information about the anatomy of the heart. Finally, we believe that a benchmark platform based on this annotated dataset is needed, which would benefit the whole community and greatly advance the development of CMR image analysis algorithms.

Conclusions

We have proposed an automated method using deep FCN for short-axis and long-axis CMR image analysis. It has demonstrated a human-level performance on the UK Biobank dataset. We anticipate this to be a starting point for automated CMR analysis, facilitated by machine learning.

Notes

Batch normalisation [31] is a technique which helps address optimisation issues in training deep neural networks, i.e. networks with many layers. It normalises the layer input for each training mini-batch.

ReLU stands for rectified linear unit. It is a type of activation function for a neuron in artificial neural networks.

A transposed convolution is a convolution whose weight matrix has been transposed [32]. It is often used for upsampling an image or a feature map.

Softmax regression is a generalisation of logistic regression to the case where we have multiple classes. It is used for mapping a feature vector to a probability vector.

Data augmentation is a technique to increase the size of the training set by applying random spatial transformation or intensity transformation to the original training samples.

We evaluated the Dice metric between automated and manual segmentations in 3D. Previous studies on LVSC may report the Dice metric for good contours only (with distance error less than 5 mm) [33].

Table 10 Dice overlap metrics for segmentations on LVSC 2009 and ACDC 2017 datasets

Abbreviations

- 2Ch: 2-chamber view
- 4Ch: 4-chamber view
- BMI: Body mass index
- bSSFP: Balanced steady-state free precession
- CMR: Cardiovascular magnetic resonance
- CT: Computed tomography
- CVD: Cardiovascular disease
- ED: End-diastole
- ES: End-systole
- FCN: Fully convolutional network
- GPU: Graphics processing unit
- HD: Hausdorff distance
- ICD-10: International Classification of Diseases code, 10th Revision
- LA: Left atrium
- LV: Left ventricle
- LVCO: Left ventricular cardiac output
- LVEDV: Left ventricular end-diastolic volume
- LVEF: Left ventricular ejection fraction
- LVESV: Left ventricular end-systolic volume
- LVM: Left ventricular mass
- LVSV: Left ventricular stroke volume
- MCD: Mean contour distance
- RA: Right atrium
- RV: Right ventricle
- RVCO: Right ventricular cardiac output
- RVEDV: Right ventricular end-diastolic volume
- RVEF: Right ventricular ejection fraction
- RVESV: Right ventricular end-systolic volume
- RVSV: Right ventricular stroke volume

References

World Health Organisation. Cardiovascular diseases (CVDs) fact sheet. http://www.who.int/mediacentre/factsheets/fs317/en/. Accessed 11 July 2017.

Ripley DP, et al. Cardiovascular magnetic resonance imaging: what the general cardiologist should know. Heart. 2016; 102(19):1589–603.

Fihn SD, et al. 2012 ACCF/AHA/ACP/AATS/PCNA/SCAI/STS guideline for the diagnosis and management of patients with stable ischemic heart disease. Circulation. 2012; 60(24):44–164.

McMurray JJV, et al. ESC Guidelines for the diagnosis and treatment of acute and chronic heart failure 2012. Eur J Heart Fail. 2012; 14(8):803–69.

UK Biobank Imaging Study. http://imaging.ukbiobank.ac.uk/. Accessed 11 July 2017.

He K, et al. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In: International Conference on Computer Vision. Santiago: IEEE: 2015. p. 1026–34.

Silver D, et al. Mastering the game of Go with deep neural networks and tree search. Nature. 2016; 529(7587):484–9.

Esteva A, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017; 542(7639):115–8.

Long E, et al. An artificial intelligence platform for the multihospital collaborative management of congenital cataracts. Nat Biomed Eng. 2017; 1:0024.

Avendi MR, et al. A combined deep-learning and deformable-model approach to fully automatic segmentation of the left ventricle in cardiac MRI. Med Image Anal. 2016; 30:108–19.

Ngo TA, et al. Combining deep learning and level set for the automated segmentation of the left ventricle of the heart from cardiac cine magnetic resonance. Med Image Anal. 2017; 35:159–71.

Tran PV. A fully convolutional neural network for cardiac segmentation in short-axis MRI. arXiv:1604.00494. 2017.

Lieman-Sifry J, et al. FastVentricle: Cardiac segmentation with ENet. In: Functional Imaging and Modelling of the Heart. Toronto: Springer: 2017. p. 127–38.

Kaggle Second Annual Data Science Bowl. https://www.kaggle.com/c/second-annual-data-science-bowl/. Accessed 11 July 2017.

MICCAI 2017 ACDC Challenge. https://www.creatis.insa-lyon.fr/Challenge/acdc/. Accessed 25 Oct 2017.

UK Biobank Data Showcase. http://biobank.ctsu.ox.ac.uk/crystal/label.cgi. Accessed 19 Nov 2017.

Petersen SE, et al. UK Biobank’s cardiovascular magnetic resonance protocol. J Cardiovasc Magn Reson. 2016; 18(1):8.

Petersen SE, et al. Reference ranges for cardiac structure and function using cardiovascular magnetic resonance (CMR) in Caucasians from the UK Biobank population cohort. J Cardiovasc Magn Reson. 2017; 19(1):18.

Long J, et al. Fully convolutional networks for semantic segmentation. In: Conference on Computer Vision and Pattern Recognition. Boston: IEEE: 2015. p. 3431–40.

Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. In: International Conference on Learning Representations. San Diego: 2015. p. 1–14.

Ronneberger O, et al. U-Net: Convolutional networks for biomedical image segmentation. In: Medical Image Computing and Computer-Assisted Intervention. Munich: Springer: 2015. p. 234–41.

Kingma D, Ba J. Adam: A method for stochastic optimization. In: International Conference on Learning Representations. San Diego: 2015.

Grothues F, et al. Comparison of interstudy reproducibility of cardiovascular magnetic resonance with two-dimensional echocardiography in normal subjects and in patients with heart failure or left ventricular hypertrophy. Am J Cardiol. 2002; 90(1):29–34.

Rider O, et al. Determinants of left ventricular mass in obesity; a cardiovascular magnetic resonance study. J Cardiovasc Magn Reson. 2009; 11(1):9.

He K, et al. Deep residual learning for image recognition. In: Conference on Computer Vision and Pattern Recognition. Las Vegas: IEEE: 2016. p. 770–8.

Fry A, et al. Comparison of sociodemographic and health-related characteristics of UK biobank participants with those of the general population. Am J Epidemiol. 2017; 186(9):1026–34.

Radau P, et al. Evaluation framework for algorithms segmenting short axis cardiac MRI. The MIDAS Journal - Cardiac MR Left Ventricle Segmentation Challenge. 2009. http://hdl.handle.net/10380/3070.

Bernard O, et al. Deep learning techniques for automatic MRI cardiac multi-structures segmentation and diagnosis: Is the problem solved? IEEE Trans Med Imaging, in early access. 2018.

MICCAI 2009 LV Segmentation Challenge. http://smial.sri.utoronto.ca/LV_Challenge/Data.html. Accessed 1 Feb 2018.

Marcus G. Deep learning: A critical appraisal. arXiv:1801.00631. 2018.

Ioffe S, Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In: International Conference on Machine Learning. Lille: 2015. p. 448–56.

Transposed convolution. http://deeplearning.net/software/theano/tutorial/conv_arithmetic.html. Accessed 30 Jan 2018.

Ngo TA, Carneiro G. Left ventricle segmentation from cardiac MRI combining level set methods with deep belief networks. In: International Conference on Image Processing. Melbourne: IEEE: 2013. p. 695–9.

UK Biobank Register and Apply. http://www.ukbiobank.ac.uk/register-apply/. Accessed 11 July 2017.

Acknowledgements

This research has been conducted mainly using the UK Biobank Resource under Application Number 2946. The initial stage of the research was conducted using the UK Biobank Resource under Application Number 18545. The authors wish to thank all UK Biobank participants and staff.

Funding

This work is supported by the SmartHeart EPSRC Programme Grant (EP/P001009/1). G.T. is supported by a Marie Skłodowska Curie European Fellowship. A.L. and S.E.P. acknowledge support from the NIHR Barts Biomedical Research Centre and from the MRC for the MRC eMedLab Medical Bioinformatics infrastructure (MR/L016311/1), which enables data access. N.A. is supported by a Wellcome Trust Research Training Fellowship (203553/Z/Z). S.N. and S.K.P. acknowledge support from the NIHR Oxford Biomedical Research Centre and the Oxford BHF Centre of Research Excellence. S.E.P., S.K.P. and S.N. acknowledge the British Heart Foundation (BHF) for funding the manual analysis to create a cardiovascular magnetic resonance imaging reference standard for the UK Biobank imaging resource in 5000 CMR scans (PG/14/89/31194). H.S. is supported by a Research Fellowship from the Uehara Memorial Foundation. P.M.M. gratefully acknowledges support from the Edmond J. Safra Foundation and Lily Safra, the Imperial College Healthcare Trust Biomedical Research Centre, the EPSRC Centre for Mathematics in Precision Healthcare and the MRC.

Availability of data and materials

The imaging data and manual annotations were provided by the UK Biobank Resource under Application Number 2946. Researchers can apply to use the UK Biobank data resource for health-related research in the public interest [34]. The image analysis source code is available at https://github.com/baiwenjia/ukbb_cardiac. The code is used for data format conversion, pre-processing, segmentation network training, testing and clinical measure calculation.

Author information

Contributions

WB, BG and DR conceived and designed the study; MS, GT, OO, MR, and GV provided advice and support on computing method aspects; SN, SEP, SKP provided the design of a large data resource to be used for training and testing of artificial intelligence approaches; AML, NA, SEP, SKP and SN provided advice and support on clinical aspects; NA, EL, MMS, FZ, KF, JMP, VC and YJK performed manual image annotation under the senior supervision of SEP, SKP and SN; EL and KF performed qualitative visual assessment of automated segmentation; AML and VC curated the annotation database; HS and PMM provided advice and support in the initial stage of model development; WB, BK and AML performed data pre-processing; WB designed the method, performed data analysis and wrote the manuscript. All authors read and approved the manuscript.

Ethics declarations

Ethics approval and consent to participate

UK Biobank has approval from the North West Research Ethics Committee (REC reference: 11/NW/0382).

Consent for publication

Not applicable.

Competing interests

S.E.P. receives consultancy fees from Circle Cardiovascular Imaging Inc., Calgary, Alberta, Canada.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1

Image demonstrating visual assessment and comparison between automated segmentation and manual segmentation. (PDF 210 kb)

Rights and permissions

Open Access. This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Bai, W., Sinclair, M., Tarroni, G. et al. Automated cardiovascular magnetic resonance image analysis with fully convolutional networks. J Cardiovasc Magn Reson 20, 65 (2018). https://doi.org/10.1186/s12968-018-0471-x
